
SAIEE Africa Research Journal

Online version ISSN 1991-1696

Print version ISSN 0038-2221

SAIEE ARJ Vol. 111, No. 3, Observatory, Johannesburg, Sep. 2020

REGULAR PAPER(S)

Automatic pupil detection and gaze estimation using the vestibulo-ocular reflex in a low-cost eye-tracking setup

Vered Aharonson (I); Verushen Y. Coopoo (I); Kyle L. Govender (I); Michiel Postema (II)

(I) School of Electrical and Information Engineering, University of the Witwatersrand, Johannesburg, 1 Jan Smuts Laan, Braamfontein 2050, South Africa (e-mail: vered.aharonson@wits.ac.za)

(II) Senior Member, IEEE; School of Electrical and Information Engineering, University of the Witwatersrand, Johannesburg, 1 Jan Smuts Laan, Braamfontein 2050, South Africa

ABSTRACT

Automatic eye tracking is of interest for interaction with people suffering from amyotrophic lateral sclerosis, for using the eyes to control a computer mouse, and for controlled radiotherapy of uveal melanoma. It has been speculated that gaze estimation accuracy might be improved by using the vestibulo-ocular reflex. This involuntary reflex results in slow, compensatory eye movements opposing the direction of head motion. We therefore hypothesised that allowing the head to move freely during eye tracking would produce more accurate results than keeping the head fixed and allowing only the eyes to move. The purpose of this study was to create a low-cost eye-tracking system that incorporates the vestibulo-ocular reflex in gaze estimation by allowing free head movement. The instrument comprised a low-cost head-mounted webcam that recorded a single eye. Pupil detection was fully automatic and ran in real time with a straightforward hybrid colour-based and model-based algorithm, despite the lower-end webcam used for recording and despite the absence of direct illumination. A model-based and an interpolation-based gaze estimation algorithm were tested in this study. Based on the mean absolute angle difference in the gaze estimation results, we conclude that the model-based algorithm performed better when the head was not moving and equally well when the head was moving. With most deviations of the points of gaze from the target points being less than 1° for either algorithm when the head was moving freely, our setup performs fully within the 2° benchmark from the literature, whereas deviations when the head was not moving exceeded 2°. The algorithms used had not previously been tested under passive illumination. This was the first study of a low-cost eye-tracking setup taking into account the vestibulo-ocular reflex.

Index Terms: Pupil tracking, video-oculography, eye position mapping, VO reflex.

I. INTRODUCTION

AUTOMATIC eye tracking is of interest for interaction with people suffering from amyotrophic lateral sclerosis [1], for using the eyes to control a computer mouse [2-4], and for controlled radiotherapy of uveal melanoma [5]. Eye-tracking systems are commercially available [6,7]. Eye-tracking devices are conceptually composed of a data acquisition part and a data processing part, where the data consist of eye position recordings. The mapping of eye positions from visual images is referred to as video-oculography [8]. Using an active light source for illumination shortens the processing time needed to determine the eye position [9-11], but this is costly and risks retinal and skin burns [12,13]. Video-oculography under passive illumination requires more processing [14,15]. The performance of eye-tracking algorithms in video-oculography depends strongly on the hardware used and the recording conditions. Video capturing systems attached to the head are notably faster than remote capturing systems [16].

Video-oculography data processing comprises pupil detection and subsequent gaze estimation. Gaze estimation seeks the point of regard (POR, gaze point). Pupil detection algorithms segment images based on colour and shape properties [17,18]. Colour-based algorithms differentiating between the iris and its surroundings may produce false boundary edges [19]. Shape-based algorithms using prior knowledge of the circularity of the iris and the pupil are invariant to translation, scale, and lighting [20]. The optimisation of a shape model requires multiple time-consuming iterations [21,22]. Hybrid algorithms reduce the computation time by starting from a rough colour-based segmentation [23,24]. In addition, a shape model can be applied prior to colour processing [25]. Gaze estimation algorithms can be either interpolation-based or model-based [26-28]. Interpolation-based algorithms map the eye position to the point of regard either parametrically, using polynomials [28-30], or non-parametrically, using neural networks or support vector machines [26]. Model-based algorithms construct a vector from the eye to the point of regard [31].

Video-based eye trackers using high-quality cameras and multiple light sources achieve an accuracy better than 2° [32].

It has been speculated that gaze estimation accuracy might be improved by using the vestibulo-ocular reflex. This involuntary reflex results in slow, compensatory movements of the eye in the direction opposite to head motion [33,34]. We therefore hypothesise that allowing the head to move freely during eye tracking produces more accurate results than keeping the head fixed and allowing only the eyes to move.

The purpose of this study was to create a low-cost eye tracking system that incorporates the vestibulo-ocular reflex in gaze estimation.

II. MATERIALS AND METHODS

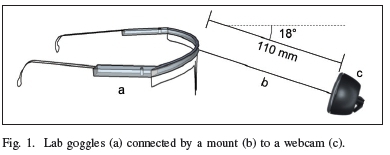

A schematic of the apparatus and its dimensions is shown in Figure 1. A Logitech C270 webcam (Logitech International S.A., Lausanne, Switzerland) with a resolution of 1280 × 720 pixels, operating at a frame rate of 30 Hz, was mounted on a pair of 570295 Evrigard Euro Anti Scratch Spectacle - Clear laboratory safety goggles (Evrigard, Johannesburg, South Africa). An aluminium mount was bolted to the upper frame of the goggles, positioning the webcam 110 mm from the eyes at an elevation of -18°. The eye-tracker goggles were worn by a user seated at a horizontal distance of 52 cm from the 15.6-inch liquid crystal display (1366 × 768 pixels) of a Lenovo IdeaPad 300-15ISK 80Q7009DSA laptop computer (Lenovo Group Limited, Beijing, P.R. China).

The webcam was purchased for 485 ZAR and the lab goggles for 20 ZAR.
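
To relate on-screen distances to the angular deviations used throughout this paper, the display geometry can be worked out from the stated specifications. The following Python sketch assumes square pixels and an eye positioned directly opposite the display centre at the stated 52-cm distance; the function name `display_geometry` is illustrative only.

```python
import math


def display_geometry(diagonal_in=15.6, resolution=(1366, 768), distance_mm=520.0):
    """Pixel pitch, physical size and angular size of a flat display viewed head-on."""
    width_px, height_px = resolution
    # Assumes square pixels, so the pitch follows from the diagonal measured in pixels.
    pitch_mm = diagonal_in * 25.4 / math.hypot(width_px, height_px)
    width_mm, height_mm = width_px * pitch_mm, height_px * pitch_mm
    # Full horizontal angle subtended by the display, eye opposite the display centre.
    width_deg = 2.0 * math.degrees(math.atan(width_mm / 2.0 / distance_mm))
    # Pixels spanned by 1 degree of visual angle, measured from the display centre.
    px_per_deg = distance_mm * math.tan(math.radians(1.0)) / pitch_mm
    return pitch_mm, width_mm, height_mm, width_deg, px_per_deg
```

Under these assumptions the pixel pitch is about 0.253 mm and one degree of visual angle spans roughly 36 pixels at the display centre, so the 2° benchmark corresponds to roughly 72 pixels, and a gaze angle of 12.5° corresponds to about 115 mm (roughly 456 pixels) from the centre.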

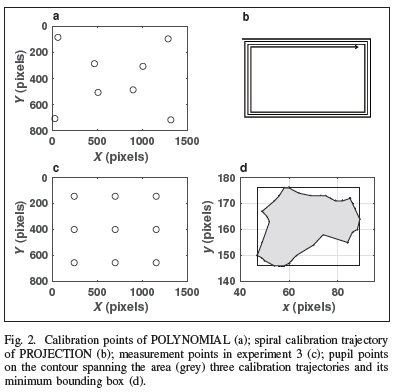

The experiments took place in a laboratory in the Chamber of Mines Building of the University of the Witwatersrand, Johannesburg, with a standard-height ceiling and fluorescent lighting, which was on during all experiments. The setup was positioned such that the windows were to the left of the user, with no direct sunlight. During the experiments, video footage was collected from an individual user wearing the goggles. The user was instructed to move the eyes clockwise around the edges of the computer display, three consecutive times (cf. Figure 2b). Thereafter, eight points were shown on the screen (cf. Figure 2a). The user was instructed to gaze at each point for three seconds, moving only the eyes and not the head. The data from these two experiments were used for calibration.

During the third experiment, a nine-point raster was displayed (cf. Figure 2c). The user was instructed to gaze at each point for three seconds. In some trials, the user was instructed to move only the eyes and not the head; in others, head movement was allowed. Even slight head movements triggered the vestibulo-ocular reflex, whose contribution could therefore be quantified without additional processing.

From these experiments, a total of 35 280 images from six users were recorded and processed. In real time, the pupil position was detected in each image, after which the point of regard was computed using two different algorithms. The deviation [degrees] of each computed point of regard from the target point was stored per user number. No other user data were stored, and images were discarded immediately after processing. The parameters of the algorithms were determined interactively from the recordings of two of the six users. All results from these two users were excluded, and only the results from the four remaining users, two male and two female, were included in the study. The deviation results were analysed and presented following [35]. Python and the OpenCV library were used for all data processing in this study.
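
The text does not spell out how the deviation in degrees was computed. One plausible approach, sketched below in Python, takes the visual angle at the eye between the line of sight to the computed point of regard and the line of sight to the target point. It assumes the eye lies 520 mm in front of the display and that screen positions are given in millimetres relative to the point of the display nearest the eye; the function name `deviation_deg` is hypothetical.

```python
import numpy as np


def deviation_deg(por_mm, target_mm, distance_mm=520.0):
    """Visual angle (degrees) between the lines of sight to the POR and to the target.

    Screen positions are (X, Y) in mm, measured from the point on the display
    closest to the eye; the eye sits distance_mm in front of that point (assumed).
    """
    eye_to_por = np.array([por_mm[0], por_mm[1], distance_mm], dtype=float)
    eye_to_target = np.array([target_mm[0], target_mm[1], distance_mm], dtype=float)
    # atan2(|u x v|, u . v) stays accurate for the very small angles of interest here.
    cross = np.linalg.norm(np.cross(eye_to_por, eye_to_target))
    dot = float(np.dot(eye_to_por, eye_to_target))
    return float(np.degrees(np.arctan2(cross, dot)))
```

For a target straight ahead, a point of regard 3 mm away gives `deviation_deg((3, 0), (0, 0))` of approximately 0.33°, which places the 3 mm mean deviation reported in Table I well below 1°. Off-axis targets subtend slightly less angle for the same millimetre offset.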

The pupil detection algorithm is illustrated in Figure 3. In each image, the area containing the eye of choice was automatically cropped using the known positions and dimensions of the hardware. The image was flipped so that its axes corresponded to those of the computer display (cf. Figure 3a). Gaussian blurring was performed using an 11 × 11-pixel convolution kernel, with the standard deviation set to 0 in both the x and y directions, which in OpenCV means that it is derived from the kernel size (cf. Figure 3b). The image was converted to 8-bit greyscale (cf. Figure 3c), followed by linear histogram equalisation (cf. Figure 3d). The image was then inversely thresholded: grey values of 12 and lower were set to 1 (white) and grey values higher than 12 were set to 0 (black) (cf. Figure 3e). This binary image was subjected to three iterations of dilation with a 3 × 3-pixel kernel (cf. Figure 3f). After contour detection (cf. Figure 3g, bold red lines), the largest contour was automatically selected and subjected to convex hull fitting (cf. Figure 3g, thin green line). The centre of mass was determined from the moments inside the convex-hull contour, whilst the minimum enclosing circle around that contour was computed to determine its radius. A white disk with this radius, centred on the centre of mass, was drawn over the dilated binary image (cf. Figure 3h). This was followed by contour detection (cf. Figure 3i, bold blue lines), automatic selection of the largest contour, convex hull fitting (cf. Figure 3j, thin green line), and computation of the centre of mass. The new centre of mass was taken as the pupil position (cf. Figure 3j, bold green dot) and stored as the point (x, y).

Two separate gaze estimation algorithms were used: the first a parametric interpolation-based algorithm, referred to in this article as POLYNOMIAL, and the second a model-based algorithm, referred to as PROJECTION.

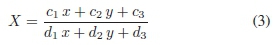

In POLYNOMIAL, pupil position points (x, y) were projected onto screen coordinates (X, Y) according to [10]:

and

The four unknown coefficients were computed using least-squares regression. The coordinates to be tracked on the computer display had been chosen such that the gaze angle relative to the eye-display axis satisfied α < 12.5°. For small angles, sin α ≈ tan α ≈ α [rad], so the mapping from pupil position to screen coordinates is approximately linear. Consequently, using higher-order polynomials would not change accuracy [10].
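
Assuming the four coefficients correspond to a first-order mapping per axis, X = a0 + a1·x and Y = b0 + b1·y (an assumption consistent with the four unknowns and with the small-angle argument above), the least-squares fit can be sketched in Python with NumPy as follows. The names `fit_linear_mapping` and `map_gaze` are illustrative.

```python
import numpy as np


def fit_linear_mapping(pupil_xy, screen_XY):
    """Least-squares fit of X = a0 + a1*x and Y = b0 + b1*y (four coefficients, assumed form)."""
    p = np.asarray(pupil_xy, dtype=float)
    s = np.asarray(screen_XY, dtype=float)
    ones = np.ones(len(p))
    # Each axis is fitted independently: design matrix [1, x] for X and [1, y] for Y.
    a, *_ = np.linalg.lstsq(np.column_stack([ones, p[:, 0]]), s[:, 0], rcond=None)
    b, *_ = np.linalg.lstsq(np.column_stack([ones, p[:, 1]]), s[:, 1], rcond=None)
    return a, b


def map_gaze(a, b, pupil_xy):
    """Apply the fitted mapping to an (N, 2) array of pupil positions."""
    p = np.asarray(pupil_xy, dtype=float)
    return np.column_stack([a[0] + a[1] * p[:, 0], b[0] + b[1] * p[:, 1]])
```

In use, the calibration pupil positions and their known screen targets are passed to `fit_linear_mapping`, and every subsequent pupil position is converted to a point of regard with `map_gaze`.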

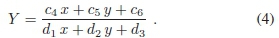

In PROJECTION, a planar input region containing pupil positions was mapped onto the planar screen region via a homographic relationship [9]:

and

Pupil points from experiment 1 were plotted in a coordinate system, where only pupil points on the contour spanning the three calibration trajectories were used (cf. Figure 2d, points on the surface). It should be noted that, although a user may experience the eye motion as smooth, straight motion, the actual recorded movement can trace an irregular, asymmetric contour. Points inside the contour were used for (x, y). The minimum bounding box around (x, y) was chosen for (X, Y). The coefficients c_n, ..., d_n were computed using the ProjectiveTransform class of the skimage (scikit-image) Python library.
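
The authors used scikit-image's ProjectiveTransform. As a dependency-light illustration of the same idea, the sketch below estimates a 3 × 3 homography with the direct linear transform (DLT), fixing the bottom-right entry to 1 and solving the resulting linear system by least squares in NumPy. This is a swapped-in technique for illustration only: scikit-image's own estimator may normalise the points and solve the problem differently, and the names `fit_homography` and `apply_homography` are hypothetical.

```python
import numpy as np


def fit_homography(src_xy, dst_XY):
    """Estimate H (3 x 3, H[2, 2] = 1) mapping pupil points (x, y) to screen points (X, Y).

    Needs at least four correspondences, no three of them collinear.
    """
    rows, rhs = [], []
    for (x, y), (X, Y) in zip(np.asarray(src_xy, float), np.asarray(dst_XY, float)):
        # From X = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), rearranged to be linear in h.
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * X, -y * X])
        rhs.append(X)
        # Likewise for Y = (h3 x + h4 y + h5) / (h6 x + h7 y + 1).
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -x * Y, -y * Y])
        rhs.append(Y)
    h, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, xy):
    """Map an (N, 2) array of points through H, dividing by the homogeneous coordinate."""
    pts = np.asarray(xy, dtype=float)
    homogeneous = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homogeneous[:, :2] / homogeneous[:, 2:3]
```

With exactly four correspondences, for instance four pupil-space corners (hypothetical values) mapped to the screen corners (0, 0), (1365, 0), (1365, 767) and (0, 767), the system is exactly determined; with more points it becomes an algebraic least-squares fit.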

III. RESULTS AND DISCUSSION

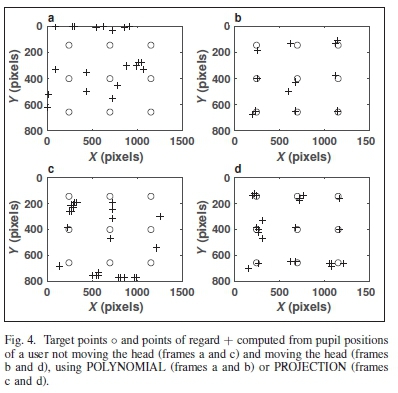

Figure 4 shows a small subset of experiment 3 for one user. The left-hand frames show the results with the user not moving the head; the right-hand frames show the results with the user moving the head. The data in the upper frames were processed with POLYNOMIAL, whilst the data in the lower frames were processed with PROJECTION. Thus, the points of regard in frame a were computed from the same pupil positions as those in frame c, and the points of regard in frame b were computed from the same pupil positions as those in frame d. Most of the points of regard in frame d are on the target or less than 1° from it. Frame b, computed from the same pupil positions, also shows most points of gaze on the targets, with one outlier at the centre. This outlier can be attributed to the positions of the calibration points of POLYNOMIAL. The situation is the opposite for PROJECTION, where points closer to the edge require more extrapolation. However, when the head is not moving, i.e., when the vestibulo-ocular reflex is not triggered, the points of regard deviate substantially from the target points, as shown in frames a and c. Since the same algorithms were used to compute the points in frames a and b, and in frames c and d, the pupil positions must have differed. Frame c shows points of regard grouped closer to each other than frame a, suggesting that PROJECTION resulted in gaze estimations closer to the true point of regard. This small subset of the data was representative of the full data set.

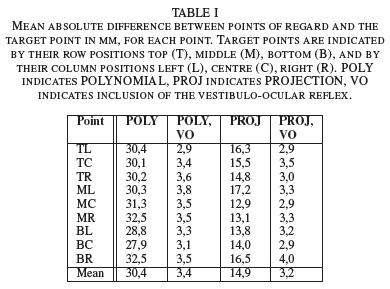

Table I shows, for each target point, the mean absolute difference (in mm) between the points of regard and the target point, averaged over all users. PROJECTION was more accurate than POLYNOMIAL for most points. Incorporating the vestibulo-ocular reflex drastically lowered the deviation for both algorithms, to the same value of 3 mm.

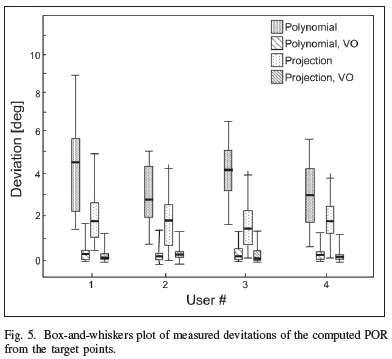

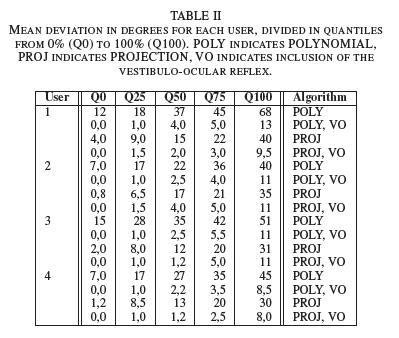

The full data set is represented in the box-and-whisker plot in Figure 5. For all users, the deviation of the points of regard was greater with POLYNOMIAL than with PROJECTION. The source data of the box-and-whisker plot are shown in Table II. When the head moved, the accuracy of gaze estimation, represented by the deviation angle, was better than 1° for all users, independent of the algorithm used. Moving the head narrowed the spread of the deviation angle, increasing the accuracy of gaze estimation. This confirms our hypothesis that allowing the head to move freely during eye tracking produces more accurate results than keeping the head fixed and allowing only the eyes to move.

We speculate that involuntary eye movement during fixation, i.e., the eye wandering off the target, is the reason why the gaze estimates without head movement deviate so much from the target points.
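
Figure 5 summarises the deviation angles as box-and-whisker plots. The whisker convention is not stated; the Python sketch below assumes the common Tukey convention (whiskers at the most extreme observations within 1.5 times the interquartile range beyond the quartiles) and uses NumPy's default linear percentile interpolation. The function name `box_stats` is hypothetical.

```python
import numpy as np


def box_stats(deviations_deg):
    """Quartiles, Tukey whiskers and outliers for a set of deviation angles (degrees)."""
    d = np.sort(np.asarray(deviations_deg, dtype=float))
    q1, median, q3 = np.percentile(d, [25, 50, 75])
    iqr = q3 - q1
    # Tukey fences: 1.5 x IQR below the first and above the third quartile.
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = d[(d >= low_fence) & (d <= high_fence)]
    return {
        "q1": float(q1),
        "median": float(median),
        "q3": float(q3),
        # Whiskers end at the most extreme observations inside the fences.
        "whisker_low": float(inside.min()),
        "whisker_high": float(inside.max()),
        "outliers": d[(d < low_fence) | (d > high_fence)].tolist(),
    }
```

Applying `box_stats` separately to each user, algorithm and head condition would yield one box per combination, comparable to a layout like that of Figure 5.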

IV. CONCLUSIONS

The eye-tracking system studied was straightforward to assemble and affordable, having cost 505 ZAR in components. The system did not require active illumination, eliminating a health risk.

Pupil detection was fully automatic and in real time with a straightforward hybrid colour-based and model-based algorithm, despite the lower-end webcam used for recording and despite the absence of direct illumination.

Based on mean absolute angle difference in the gaze estimation results, we conclude that the model-based algorithm performed better when the head was not moving and equally well when the head was moving.

With most deviations of the points of gaze from the target points being less than 1° using either algorithm when the head is moving, it can be concluded that our setup performs fully within the 2° benchmark from literature.

The algorithms used were not previously tested under passive illumination. This was the first study of a low-cost eye-tracking setup taking into account the vestibulo-ocular reflex.

Acknowledgement

Ethical approval was granted under clearance certificate No. M180420 by the Human Research Ethics Committee (Medical) of the University of the Witwatersrand, Johannesburg.

REFERENCES

[1] E.W. Sellers and E. Donchin: "A P300-based brain-computer interface: initial tests by ALS patients", Clinical Neurophysiology, Vol. 117, pp. 538-548, 2006.

[2] M. Betke, J. Gips and P. Fleming: "The camera mouse: visual tracking of body features to provide computer access for people with severe disabilities", IEEE Transactions on Neural Systems and Rehabilitation Engineering, Vol. 10, pp. 1-10, 2002.

[3] R.J.K. Jacob and K.S. Karn: "Eye tracking in human-computer interaction and usability research: ready to deliver the promises", in R. Radach, J. Hyona and H. Deubel (Eds.): The Mind's Eye: Cognitive and Applied Aspects of Eye Movement Research, Elsevier, Amsterdam, pp. 573-605, 2003.

[4] J. Gips, M. Betke and P. Fleming: "The camera mouse: preliminary investigation of automated visual tracking for computer access", Proceedings: Conference on Rehabilitation Engineering and Assistive Technology Society of North America, pp. 98-100, 2000.

[5] K. Muller, N. Naus, P.J.C.M. Nowak, P.I.M. Schmitz, C. de Pan, C.A. van Santen, J.P. Marijnissen, D.A. Paridaens, P.C. Levendag, G.P.M. Luyten: "Fractionated stereotactic radiotherapy for uveal melanoma, late clinical results", Radiotherapy & Oncology, Vol. 102, pp. 219-224, 2012.

[6] S.A. Johansen, J. San Agustin, H. Skovsgaard, J.P. Hansen and M. Tall: "Low cost vs. high-end eye tracking for usability testing", Proceedings: CHI'11 Extended Abstracts on Human Factors in Computing Systems, pp. 1177-1182, 2011.

[7] G. Funke, E. Greenlee, M. Carter, A. Dukes, R. Brown and L. Menke: "Which eye tracker is right for your research? Performance evaluation of several cost variant eye trackers", Proceedings: Human Factors and Ergonomics Society Annual Meeting, pp. 1240-1244, 2016.

[8] T. Haslwanter and A.H. Clarke: "Eye movement measurement: electro-oculography and video-oculography", in S.D. Eggers and D. Zee (Eds.): Handbook of Clinical Neurophysiology. Volume 9. Vertigo and Imbalance: Clinical Neurophysiology of the Vestibular System, pp. 61-79, 2010.

[9] Z. Zhu and Q. Ji: "Eye and gaze tracking for interactive graphic display", Machine Vision and Applications, Vol. 15, pp. 139-148, 2004.

[10] P. Blignaut: "Mapping the pupil-glint vector to gaze coordinates in a simple video-based eye tracker", Journal of Eye Movement Research, Vol. 7, pp. 1-11, 2013.

[11] D. Li, D. Winfield and D.J. Parkhurst: "Starburst: a hybrid algorithm for video-based eye tracking combining feature-based and model-based approaches", Proceedings: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, p. 79, 2005.

[12] J. San Agustin, H. Skovsgaard, E. Mollenbach, M. Barret, M. Tall, D.W. Hansen, et al.: "Evaluation of a low-cost open-source gaze tracker", Proceedings: Symposium on Eye-Tracking Research & Applications, pp. 77-80, 2010.

[13] F. Mulvey, A. Villanueva, D. Sliney, R. Lange, S. Cotmore and M. Donegan: Exploration of safety issues in eyetracking, COGAIN EU Network of Excellence, Frederiksberg, 2008.

[14] S. Kawato and N. Tetsutani: "Detection and tracking of eyes for gaze-camera control", Image and Vision Computing, Vol. 22, pp. 1031-1038, 2004.

[15] B. Noureddin, P.D. Lawrence and C. Man: "A non-contact device for tracking gaze in a human computer interface", Computer Vision and Image Understanding, Vol. 98, pp. 52-82, 2005.

[16] A. Bulling and H. Gellersen: "Toward mobile eye-based human-computer interaction", IEEE Pervasive Computing, Vol. 9, pp. 8-12, 2010.

[17] D.W. Hansen and A.E. Pece: "Eye tracking in the wild", Computer Vision and Image Understanding, Vol. 98, pp. 155-181, 2005.

[18] S. Patil, U. Bhangale and N. More: "Comparative study of color iris recognition: DCT vs. vector quantization approaches in RGB and HSV color spaces", Proceedings: International Conference on Advances in Computing, Communications and Informatics, pp. 1600-1603, 2017.

[19] F. Timm and E. Barth: "Accurate eye centre localisation by means of gradients", Proceedings: 6th International Conference on Computer Vision Theory and Applications, Vol. 11, pp. 125-130, 2011.

[20] D. Borza, A. Darabant and R. Danescu: "Real-time detection and measurement of eye features from color images", Sensors, Vol. 16, p. 1105, 2016.

[21] A. Soetedjo: "Eye detection based-on color and shape features", International Journal of Advanced Computer Science and Applications, Vol. 3, pp. 17-22, 2012.

[22] M. Vidal, A. Bulling and H. Gellersen: "Detection of smooth pursuits using eye movement shape features", Proceedings: Symposium on Eye Tracking Research and Applications, pp. 177-180, 2012.

[23] S. Cooray and N. O'Connor: "A hybrid technique for face detection in color images", Proceedings: IEEE Conference on Advanced Video and Signal Based Surveillance, pp. 253-258, 2005.

[24] T. Rajpathak, R. Kumar and E. Schwartz: "Eye detection using morphological and color image processing", Proceedings: Florida Conference on Recent Advances in Robotics, pp. 1-6, 2009.

[25] N. Markus, M. Frljak, I.S. Pandzic, J. Ahlberg and R. Forchheimer: "Eye pupil localization with an ensemble of randomized trees", Pattern Recognition, Vol. 47, pp. 578-587, 2014.

[26] L. Sesma-Sanchez, A. Villanueva and R. Cabeza: "Gaze estimation interpolation methods based on binocular data", IEEE Transactions on Biomedical Engineering, Vol. 59, pp. 2235-2243, 2012.

[27] H. Chennamma and X. Yuan: "A survey on eye-gaze tracking techniques", Indian Journal of Computer Science and Engineering, Vol. 4, pp. 388-393, 2013.

[28] A.T. Duchowski: Eye Tracking Methodology: Theory and Practice, Springer, Berlin, 2007.

[29] J.J. Cerrolaza, A. Villanueva and R. Cabeza: "Study of polynomial mapping functions in video-oculography eye trackers", ACM Transactions on Computer-Human Interaction, Vol. 19, p. 10, 2012.

[30] S. Bernet, C. Cudel, D. Lefloch and M. Basset: "Autocalibration-based partioning relationship and parallax relation for head-mounted eye trackers", Machine Vision and Applications, Vol. 24, pp. 393-406, 2013.

[31] Y. Sugano, Y. Matsushita and Y. Sato: "Learning-by-synthesis for appearance-based 3D gaze estimation", Proceedings: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1821-1828, 2014.

[32] D.W. Hansen and Q. Ji: "In the eye of the beholder: a survey of models for eyes and gaze", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 32, pp. 478-500, 2010.

[33] R.S. Allison, M. Eizenman and B.S. Cheung: "Combined head and eye tracking system for dynamic testing of the vestibular system", IEEE Transactions on Biomedical Engineering, Vol. 43, pp. 1073-1082, 1996.

[34] M. Ranjbaran and H.L. Galiana: "Identification of the vestibulo-ocular reflex dynamics", Proceedings: 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 1485-1488, 2014.

[35] K. Aldridge, S.A. Boyadjiev, G.T. Capone, V.B. DeLeon and J.T. Richtsmeier: "Precision and error of three-dimensional phenotypic measures acquired from 3dMD photogrammic images", American Journal of Medical Genetics Part A, Vol. 138, pp. 247-253.

Manuscript received July 29, 2019; revised May 9, 2020; accepted May 14, 2020.

Vered Aharonson received the B.Sc. (1990) and M.Sc. (1992) degrees in physics from the Technion, Israel, and the Ph.D. degree in electrical engineering from Tel Aviv University, Israel, in 1998. She was a research fellow at the Eaton Peabody Lab, Harvard University, Boston, a Professor at Afeka Tel Aviv Academic College of Engineering, Israel, and the founder and CEO of NexSig Ltd, which developed biometric signal-based neurological examination technologies. She is a professor of electrical engineering at the University of the Witwatersrand, Johannesburg, South Africa. Her primary research interest lies in speech and biomedical signal processing.

Verushen Y. Coopoo received the B.Eng.Sc. degree in biomedical engineering in 2016 and the B.Sc. (Hons) degree in electrical engineering in 2018, both from the University of the Witwatersrand, Johannesburg, South Africa. He is an application engineer at Opti-Num Solutions, promoting MathWorks tools in Southern Africa and engaging customers to support a solution-driven adoption of MATLAB into their workflow.

Kyle L. Govender received the B.Sc. (Hons) degree in Electrical Engineering from the University of the Witwatersrand, Johannesburg, South Africa in 2018. He is an electrical engineer in railway engineering at Transnet's Freight Rail operating division in South Africa. He is involved with the management of asset maintenance and protection associated with national freight transportation operations.

Michiel Postema (A'01-S'02-M'05-SM'08) received the M.Sc. degree in geophysics from Utrecht University, The Netherlands, in 1996, the Ph.D. degree in physics from the University of Twente, The Netherlands, in 2004, and the Habilitation à Diriger des Recherches (D.Sc.) degree in life and health sciences from the University of Tours, France, in 2018. He was appointed Professor of Experimental Acoustics at the University of Bergen, Norway, in 2010, Professor of Ultrasonics at the Polish Academy of Sciences in 2016, and Distinguished Professor of Biomedical Engineering at the University of the Witwatersrand, Johannesburg, South Africa, in 2018. He works with medical microparticles under sonication and in high-speed photography.