Journal of Education (University of KwaZulu-Natal)

Online version ISSN 2520-9868

Print version ISSN 0259-479X

Journal of Education no.93 Durban 2023

http://dx.doi.org/10.17159/2520-9868/i93a06

ARTICLES

Students' and lecturers' perceptions of computerised adaptive testing as the future of assessing students

Shamola Pramjeeth (I); Priya Ramgovind (II)

(I) School of Management, The IIE Varsity College, Durban, South Africa. spramjeeth@varsitycollege.co.za; https://orcid.org/0000-0002-8673-1634

(II) Faculty of Commerce, The Independent Institute of Education, Johannesburg, South Africa. pramgovind@iie.ac.za; https://orcid.org/0000-0002-3171-7050

ABSTRACT

The Covid-19 pandemic has been a catalyst for the increased adoption and acceptance of technology in teaching and learning, forcing higher education institutions (HEIs) to re-examine their assessment strategy as learning, development, and engagement move more fluidly into the online arena. A total of 623 lecturers and students in private HEIs in South Africa shared their views on adopting computerised adaptive testing (CAT) prior to its implementation at their HEI in their respective modules, and their perceptions of such a testing methodology. Our study found that lecturers and students were comfortable engaging in online learning, with a large percentage being most comfortable with assessing and completing exams, tests, and activities online. Positive perceptions of adopting CAT as an assessment tool for their qualifications were expressed, with the majority recommending that their HEI implement CAT.

Keywords: assessment methodology, computerised adaptive testing, lecturer perceptions, personalised learning, student perceptions

Introduction

Technology can be seen in almost all facets of human life. The higher education (HE) sector is no exception to this. For many years, there has been a gradual use and, ultimately, reliance on technology to assist higher education institutions (HEIs) in the facilitation of teaching and learning, and assessments (Ghanbari & Nowroozi, 2021). This is evidenced by the increased offering of distance qualifications in South Africa (Bolton et al., 2020).

Before Covid-19, HEIs had the luxury of choosing between offline learning and blended learning (Jin et al., 2021; Meccawy et al., 2021). However, the HE landscape will be changed forever because of the Covid-19 pandemic; it served as a catalyst for the integration of technology into teaching and learning, and assessment testing. South African institutions had varying responses to the immediate reliance on technology during the pandemic (Mtshweni, 2022; Thaba-Nkadimene, 2020). Although some HEIs could migrate students seamlessly to their learning management system (LMS), others did so at a slower pace (Du Plessis et al., 2021). The rest had to create an online interface for student engagement and assessment (Khoza et al., 2021). This saw many HEIs extend the academic year to compensate for lost time (Kgosana, 2021).

During Covid-19, HEIs implemented various tools and techniques to cope with the contingencies of students being able to complete their academic year. However, upon reflection, not all assistance provided to students was effective or impactful (Slack & Priestley, 2022). Among the lessons learnt by HEIs during Covid-19 were how to provide e-learning training to lecturers and students, create an online learning community, and revise traditional face-to-face facilitation to actively include elements of blended learning such as an LMS (Ghanbari & Nowroozi, 2021).

Given the increased acceptance and, at times, preference, for online learning (Mpungose, 2020; Zalat et al., 2021), HEIs are seeking innovative ways to assess students. Although there has been little difference in the past between the scores of students in studies comparing paper-based tests (PBTs) and computer-based tests (CBTs), studies have shown that in the presence of extenuating circumstances such as Covid-19, there is a significant difference in the performance of students engaging in CBTs. Therefore, with the increased emphasis on student well-being and personalised learning, computerised adaptive testing (CAT) can be leveraged to provide HEIs with a means of meeting the growing demand for increased online learning while individually addressing students' needs.

The purpose of this study was to explore students' and lecturers' perceptions and applications of CAT as a new form of assessment and how they perceive the adoption of such a mode of assessment in South African private higher education institutions (PHEIs). The key research objectives that guided this study were:

• to determine the level of knowledge and understanding of CAT among students and lecturers;

• to determine lecturers' academic and students' personal perceptions of CAT; and

• to determine the ease with which lecturers and students adopt CAT as an assessment tool in their respective modules.

CAT has been in use for many years ("Flexible assessment systems . . . ", 2022; "Computer adaptive testing", 2019). However, the use of this mode of assessment has not been fully realised in HEIs, especially in countries like South Africa where there has been, historically, a technological divide between citizens that reflects the socio-economic conditions in the HE landscape. HEI lecturers' and students' perceptions of CAT as a testing methodology in their respective qualifications have not been explored, especially in South Africa. A student or lecturer may agree with the approach and might want to use it, but they may not have the academic skills, competencies, or comfort with technology to ensure successful engagement. The pandemic has proved to HEIs that online teaching, learning, and testing are a reality and can be achieved quite easily as lecturers and students become more familiar with online learning. Consequently, conducting research into this area is of vital importance as HEIs begin to evolve further, embracing the Fourth Industrial Revolution along with the lessons learnt during the Covid-19 pandemic.

Literature review

The history of racial injustices in South Africa has created compounding socio-economic complexities and challenges for students entering HEIs, including problems with literacy and numeracy, and with the digital divide. These complexities are seen in the limited number of students who can access HEIs and for those who can, the subsequent challenges of obtaining financial assistance, accommodation, and transportation. These factors all determine whether students manage to attend HEIs (Scott, 2018). Consequently, the South African HE sector now has a diverse student body that requires a robust education system if society is to be enhanced (Tjønneland, 2017). It is the responsibility of HEIs to understand the changing student demographics, the quality concerns pertaining to qualification offerings, and the increased competition among institutional offerings to ensure that student and lecturer teaching and learning addresses the changing needs of students and of industry. The emphasis on this can be seen in the many contingency practices including emergency remote teaching, the reliance on LMSs for teaching and learning, and the shift to take-home assessments that HEIs had to implement during and after the pandemic to continue teaching, learning, and assessing (Alturki & Aldraiweesh, 2021).

The onus is now on HEIs to ensure that teaching, learning, and the assessment practices that are selected for student engagement are not only fit for purpose but are also tailored to the new needs of students. Post-pandemic, there has been an increased need and reliance on online LMSs for personalised learning, asynchronous learning, and meaningful engagement and feedback (De Silva, 2021; Hussein et al., 2020; Ismaili, 2021). Therefore, HEIs must ensure that the way in which learning and teaching are facilitated is done in accordance with understanding students' and lecturers' skills, requirements, and challenges.

Paper-based testing (PBT) versus computer-based testing (CBT)

The current reliance on computers to facilitate teaching and learning has led to an increased interest in conducting assessments online. However, there is a dispute regarding the validity of paper- and computer-based assessments. Traditionally, the comparison between them has been based on an indicator such as reading speed, but contemporary measures are being increasingly favoured, such as measures of memory and cognitive workload (Dillon, 1992).

There is a debate about which mode of assessment, computer-based or paper-based, is a better indicator of the achievement of learning outcomes (Noyes & Garland, 2009). However, a considerable number of studies have shown very little significant difference in student performance between computer- and paper-based assessments (Barros, 2019; Choi et al., 2003; Ita et al., 2014; Khoshsima et al., n.d.; Nergiz & Seniz, 2013).

To date, a few studies have reported a significant difference in performance and justified the disparity between the two mediums of assessment in the absence of extenuating circumstances (Stone & Davey, 2014) such as Covid-19. Traditional assessment methods favour offline and blended learning approaches. However, given the current assessment methodology trends that have emerged post-Covid-19, there is now an increased reliance on computer-based assessments. It is evident that HEIs must seek to leverage online assessment tools in a manner that creates and sustains student engagement, stakeholder buy-in, and overall academic achievement.

Computerised adaptive testing

CBT requires examinees to log into a computer terminal allocated to them. CBT can be either linear or adaptive. Linear CBTs administer full-length assessments in which the computer algorithms, using AI, select questions for the examinees without considering an examinee's performance (Oladele et al., 2020). In CAT, by contrast, the assessment algorithm selects questions for an examinee based on their performance level; the questions are categorised by content and difficulty and are drawn from a large pool (Mercer, 2021).

There are various online assessment tools and methodologies that can be used to measure students' performance and understanding. With the reliance on emergency remote teaching (ERT), HEIs used the most suitable assessment methods that would ensure that students were not disadvantaged in the absence of in-person assessments. Simultaneously, HEIs also strove to ensure that the assessment methodologies addressed the need for digitally competent graduates, given the rapid shift from the Fourth to the Fifth Industrial Revolution (Asrizal et al., 2018).

An adaptive learning system uses instructor interventions and automated procedures that consider students' abilities, needs, and skill attainment. These systems seek first to determine a student's proficiency before they sequentially engage with learning materials in a manner that facilitates meeting learning outcomes (Troussas et al., 2017). The purpose of CAT is to ensure that students are asked questions that are appropriate to their current level of knowledge and understanding. This, therefore, should ensure that questions that are neither too difficult nor too easy are posed. CAT measures the accuracy of a test score in relation to the duration of the test (Oladele & Ndlovu, 2021).

An institution can choose from several different CAT systems to address various learning requirements. The difference between the systems can be attributed to the question pool size and structure, the selection of questions either individually or in predetermined bundles, the selection algorithm, and the security to prevent the sharing of questions. AI-based algorithms and biometric authentication methods are used in CAT to minimise the risks of cheating and impersonation, thus ensuring the credibility and validity of these assessment practices.

However, each CAT system is underpinned by two fundamental steps. Step one requires that the most appropriate item is selected and administered to a student based on their existing level of knowledge and understanding. Step two is to analyse the student's response(s) to each item selected and then to pose questions until the student obtains a required score, or when the required performance has been obtained. Steps one and two are repeated until the student has successfully answered a predetermined number of questions, or when a precision score has been attained (Gibbons et al., 2016; Vie et al., 2017).
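The two-step cycle described above can be sketched in code. What follows is a minimal illustration only and not the procedure of any CAT system cited here. It assumes a one-parameter (Rasch) response model, picks the unused item whose difficulty is closest to the current ability estimate, applies a simplified one-step update after each response, and stops at a preset number of items or once an assumed precision target is met. The names `Item`, `run_cat`, `answer_fn`, `max_items`, and `target_se` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    """One question in the pool; difficulty shares a scale with ability."""
    name: str
    difficulty: float


def p_correct(ability: float, difficulty: float) -> float:
    """Probability of a correct answer under a Rasch model (an assumption)."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def run_cat(pool, answer_fn, start=0.0, max_items=5, target_se=0.5):
    """Administer items adaptively; return (ability estimate, item names)."""
    ability = start
    remaining = list(pool)
    administered = []
    information = 0.0
    while remaining and len(administered) < max_items:
        # Step one: select the unused item best matched to the estimate.
        item = min(remaining, key=lambda it: abs(it.difficulty - ability))
        remaining.remove(item)
        correct = answer_fn(item)
        administered.append(item.name)
        # Step two: analyse the response and update the estimate.
        p = p_correct(ability, item.difficulty)
        information += p * (1.0 - p)
        # Simplified update: move up after a correct answer, down otherwise,
        # with smaller moves as information accumulates.
        ability += ((1.0 if correct else 0.0) - p) / information
        # Termination: stop early once the estimate is precise enough.
        if 1.0 / math.sqrt(information) <= target_se:
            break
    return ability, administered


# Hypothetical pool and examinee: answers correctly when difficulty <= 1.
pool = [Item("A", -2.0), Item("B", -1.0), Item("C", 0.0),
        Item("D", 1.0), Item("E", 2.0)]
estimate, order = run_cat(pool, lambda item: item.difficulty <= 1.0)
print(order, round(estimate, 2))
```

In this toy run the test starts with the middle item, jumps to the hardest item after a correct answer, and then moves back down after an incorrect one, which is the behaviour that distinguishes adaptive from linear testing.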

Designing computerised adaptive tests

Computer systems and/or applications are used in the creation of adaptive tests. Before CAT can be developed, the institution must identify and evaluate the needs and abilities of students. This will adequately inform the development of algorithms that enable the effective selection of activities and items from a pool of tests. There are standard components that CAT must contain for it to be truly adaptive, such as an item pool, a decision rule for selecting the first item, methods for selecting additional items or sets of items, and item selection that ensures enhanced efficiency. The test must also include balanced content and a termination criterion (Ramadan & Aleksandrovna, 2018).

Given the various CAT systems, the varying needs of institutions, and the academic requirements, the concept of CAT and its unique characteristics must be comprehensively understood by all stakeholders (Gibbons et al., 2016).

Advantages and disadvantages of computerised adaptive tests

The advantages of CAT include tests of shorter duration and enhanced student motivation through equiprecision. These factors ensure that a more precise and appropriate measurement is applied to the assessment (Baik, 2020; Rezaie & Golshan, 2015). Given the increased algorithm flexibility, there is increased security in the administration of the assessment. Furthermore, students engaging in CAT have reported having decreased levels of stress, tiredness, boredom, and anxiety (Oladele & Ndlovu, 2021). In addition, the use of CAT enables students to identify areas of weakness that allow for corrective action to ensure that competency is developed. The interface design of CAT incorporates graphics, and this creates a rich user interface for the examinee. Furthermore, for the examiner, the use of CAT allows for the immediate calculation of student performance through their scores that present a snapshot of the examinee's performance (Han, 2018; Stone & Davey, 2014; Thompson, 2011).

However, despite the countless advantages, there are also disadvantages associated with CAT. Institutions must be cognisant of these before they implement this adaptive testing system. For students to engage successfully in CAT and to take ownership of the assessment method, they must be computer literate. A lack of computer skills will hinder students' ability to leverage the value derived from CAT (Wise, 2018). Once CAT has been administered, the examinee is unable to return to questions already answered and this can have an impact on the way the test is taken, thus affecting the final score obtained. Given that these tests are based on a student's ability and skills, anxiety can contribute to the attainment of a poor score rather than an examinee's inability to answer the questions (Thompson, 2011). Items in a CAT pool can be viewed many times by students, throughout their assessment, thus necessitating the use of an algorithm (Rezaie & Golshan, 2015). The implementation of CAT requires a significant capital contribution to ensure the procurement of an adequate computer lab to meet students' needs, a large sample size, and the expertise to ensure the reliability and validity of the assessment tool (Alderson, 2000; Oladele et al., 2020).

CAT in South African higher education

Studies by Lilley and Barker (2007) and Lilley et al. (2005) sought to understand students' perceptions of CAT during its implementation, but not prior to it. Kim and Huh (2005) investigated students' attitudes towards and acceptability of CAT in medical school, and their effect on examinees' abilities. The studies that relate to students' perceptions of CAT are not contemporary, nor do they reflect the perceptions of students post-pandemic. As evidenced, there are studies of CAT in HE but, despite the documented evidence of its implementation, there are only a few studies that take students' and lecturers' perceptions of CAT into consideration before implementation. Also, CAT is not used as a decision-making tool in HE.

Studies on the advantages, disadvantages, implementation, and evaluation of CAT outlined in this review, and on our experiences of teaching during Covid-19 suggest that HEIs in South Africa can no longer ignore the use of online assessment tools for personalised learning, given the need for student engagement and lecturer facilitation. Considering this, the adoption of CAT in South African HEIs must be done while considering the comfort levels of students and lecturers with online learning, and the academic contribution of this assessment tool. Therefore, the purpose of this study is to determine the personal and academic perceptions of students and lecturers towards CAT prior to its implementation, given the increased reliance on online assessment methods post-pandemic and against the unique backdrop of a multicultural and multilingual South Africa.

Research methodology

The study was exploratory in nature, guided by the positivist paradigm (see Creswell & Creswell, 2018) since there is little to no previous research at present to serve as a reference point on CAT as an assessment approach in the South African HE landscape (Rahi, 2017; Saunders et al., 2019) that takes students' and lecturers' perceptions of CAT into consideration before implementation. Accordingly, using a quantitative methodological approach, we sought to understand PHEI students' and lecturers' views on adopting CAT, and their perceptions of such a testing methodology prior to implementation.

We created a structured questionnaire, informed by the literature on CAT, to gauge students' and lecturers' level of comfort with online learning, their knowledge and understanding of CAT, their personal and academic perceptions of CAT, and whether they believed CAT should be implemented at their respective PHEI. The questions were based on a 3-point Likert scale in terms of the above criteria. The instrument was vetted by the Ethics Committee and three academics. The PHEI at which the researchers are based reviewed the instrument for clarity, with changes to the Likert scale from a 5-point to a 3-point scale being recommended. Ethical clearance for the study was obtained from the researchers' institution in accordance with its ethics review and approval procedures (R. 00036).

The targeted population was made up of students (undergraduate and postgraduate) and lecturers at PHEIs in South Africa. Using a non-probability sampling methodology (purposive sampling) data was collected through the online survey platform Microsoft Forms, from 10 to 22 July 2022.

The survey link was distributed to the targeted population of students and lecturers via email. Using the snowballing approach, we distributed the survey link via LinkedIn and WhatsApp to colleagues in other PHEIs. The names of institutions were not included in the survey to ensure the anonymity of respondents. An online questionnaire was chosen as the most suitable instrument in terms of the geographical reach of the PHEIs in the major provinces of South Africa. Using the sampling size table of Bougie et al. (2020) for a target population greater than 100 000 at a 95% confidence interval, the appropriate sample size was determined as 384. We were aiming for 400 responses, with a 50% completion rate. A total of 585 students and 38 lecturers completed the questionnaire.
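The figure of 384 was read from a published table, but it can be cross-checked against Cochran's formula for large populations, which is a common basis for such tables. The sketch below assumes maximum variability (p = 0.5) and a 5% margin of error; these two values are assumptions made here for illustration and are not stated in the text. The function name is hypothetical.

```python
def cochran_sample_size(z: float = 1.96, p: float = 0.5,
                        margin: float = 0.05) -> float:
    """Cochran's large-population formula: n0 = z^2 * p * (1 - p) / e^2."""
    return (z ** 2) * p * (1.0 - p) / (margin ** 2)


n0 = cochran_sample_size()  # z = 1.96 corresponds to 95% confidence
print(round(n0, 2))         # 384.16
```

Rounded to the nearest whole respondent this gives 384, matching the target used in the study; some texts round up to 385 instead.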

The collected data from Microsoft Forms was exported into an Excel spreadsheet, cleaned, and coded. Using the Statistical Package for the Social Sciences (SPSS) version 28, descriptive and inferential statistical analyses were performed on the data. Descriptive analyses were performed to summarise the study sample characteristics and to establish the means, quantiles, and measures of dispersion in the data. Mann-Whitney U tests were conducted to establish if there were significant differences in the factor scores for student vs. lecturer. An exploratory factor analysis (EFA) was performed using principal axis factoring with a minimum factor loading of greater than 0,5 to explore the structure of the data. To assess if a significant correlation exists between the factors, Spearman's correlation coefficients were obtained. Before performing the EFA, the suitability of the data had to be assessed. This was done through the Kaiser-Meyer-Olkin measure of sampling adequacy (KMO-MSA) and Bartlett's test of sphericity. The KMO-MSA value needed to be greater than 0,4, and Bartlett's test of sphericity needed to be statistically significant. With the KMO-MSA above this threshold and Bartlett's test of sphericity significant, both conditions were satisfied and therefore the data was deemed suitable for us to perform the EFA. To assess the internal consistency of each factor, a Cronbach's alpha reliability test was performed. A score of 0,705 was returned and was therefore deemed adequate to perform the EFA (see Saunders et al., 2019).
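The analyses in this study were run in SPSS. As an illustration of what the Cronbach's alpha reliability test computes, the following is a minimal pure-Python sketch using the standard formula, alpha = k/(k - 1) x (1 - sum of item variances / variance of total scores). The function name and the small data set are hypothetical and are not drawn from the study.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per question."""
    k = len(items)
    # Total score of each respondent across all questions.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1.0 - item_variance_sum / variance(totals))


# Hypothetical answers of five respondents to three 3-point Likert items.
toy_items = [
    [1, 2, 3, 2, 3],
    [1, 2, 3, 3, 3],
    [2, 2, 3, 2, 3],
]
print(round(cronbach_alpha(toy_items), 3))  # 0.9
```

Values closer to 1 indicate that the items of a factor vary together, which is what internal consistency refers to.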

Results and discussion

The following discussion presents the findings of the study, with further corroborations and/or refutations of the relevant literature findings.

Demographics

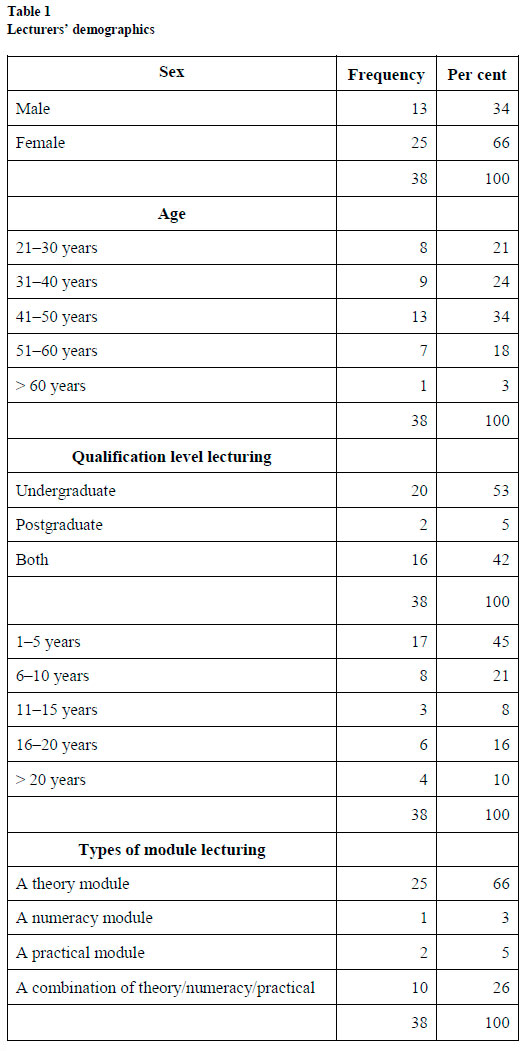

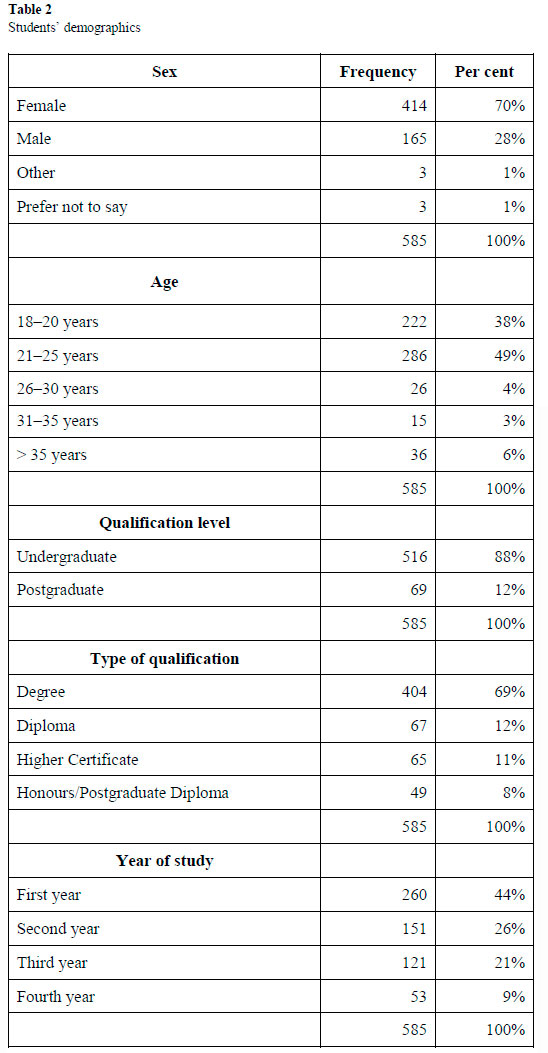

As mentioned above, a total of 38 lecturers and 585 students completed the online questionnaire.

In terms of the lecturer profile, most of the lecturers were within the 41-50 age range (34%), with more females (66%) responding to the survey. Regarding the number of years of lecturing, 45% were within the 1-5-year range. Of the lecturers, 53% lectured undergraduate students, with 5% working with postgraduates, and 42% with both postgraduates and undergraduates. In terms of the types of modules lectured, 66% lectured a theory module, 5% a practical, and 3% a numeracy module, and 26% lectured across both theory and numeracy types of modules.

In terms of the student profile, most of the students were within the 18-25 age range (87%), with more females (70%) responding to the survey. Of the students 88% were undergraduate students and 12% postgraduate students while 44% were first year, 26% were second year, 21% were third year, and 9% were in their fourth year of study. In terms of qualification, 69% were studying towards a degree, 12% for a diploma, 11% for a higher certificate, and 8% for a postgraduate degree.

Comfort level of lecturers and students with engaging in online learning and testing

Considering the results obtained from a combined student and lecturer view on engaging in online learning and testing, as presented in Figure 1, most of the students and lecturers felt most comfortable engaging with tasks and activities as well as exams and tests online. However, a fair percentage of lecturers and students were not completely comfortable conducting and attending lectures online (30%), engaging with content online (32%), completing tasks and activities on the online platform (22%), and completing tests and exams on the online platform (16%). Fewer than 10% of the respondents were uncomfortable. As the use of technology and online learning becomes more entrenched in teaching and learning strategies, the PHEIs and HEIs need to understand the reasons for the lecturers' and students' sense of discomfort in engaging in online learning and testing.

For a deeper view of the lecturers' and students' responses, refer to Table 3 and Table 4. It was established that lecturers felt most comfortable (95%) in assessing tests and exams on an online learning platform, followed by setting tasks and activities (87%). However, 76% of students felt comfortable with completing tests and exams on online platforms, followed by 70% attempting tasks and activities.

Regarding engaging with the content and attending lectures online, only 59% of the students felt comfortable, indicating that students may prefer face-to-face interaction with lecturers and learning materials. Lecturers, meanwhile, were very comfortable (87%) with engaging with the content on the online learning platform and conducting lectures on it (90%). Overall, lecturers showed a 95% comfort level with online testing, whereas students showed 76% comfort. Song et al. (2004) postulated that a student's online learning experience is influenced by their motivation level, time management, degree of comfort with online technology, and the course design. Alkamel et al. (2021) reported similar results, with students holding a favourable attitude towards online learning and testing, and Yildirim et al. (2017) noted that students were comfortable with the system and with lecturers' feedback. The challenges mentioned by the students were that the time to complete the online test was insufficient and that they had difficulty gaining access to the device and to the online test (Yildirim et al., 2017).

Knowledge and understanding of CAT

From the results obtained, as shown in Figure 2, most of the participants indicated agreement with all the statements about CAT in terms of the Understanding and Knowledge scale. It is comforting to know that lecturers and students are aware of what CAT is and of its various attributes and features. Accordingly, should HEIs and PHEIs introduce CAT, the acceptance and adoption of the testing methodology will be easier given their knowledge and understanding of it and its associated benefits.

Because the lecturers and students understood what the key features of CAT are, they were then asked if they were comfortable accepting CAT in their respective modules. Of the lecturers, 97% were very comfortable with adopting CAT and 3% were somewhat comfortable. Most of the students felt comfortable (63%), 33% were somewhat comfortable, and 4% were uncomfortable about adopting CAT in their current studies.

An EFA was performed on the Knowledge and Understanding of CAT data. All variables loaded onto one factor, ATF1, with a KMO-MSA of 0,851. The cumulative variance percentage extracted was 30,788. Two variables had loadings below 0,5 and were removed, namely, "AT utilises automated testing processes" (0,417) and "AT is computer-aided tests that are designed to adjust the level of difficulty based on students' responses" (0,401).

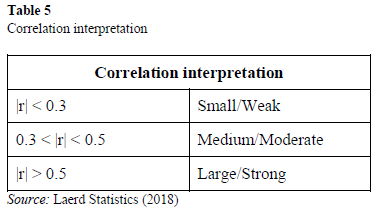

To determine if a relationship existed between knowledge and understanding of CAT and the comfort of adopting CAT, a Spearman's correlation analysis was performed on this factor. To assess the correlation coefficients, the relationship needs to be statistically significant, with a p-value of < 0,01. Once the statistical significance was determined, the direction and strength of the relationship was assessed. The guideline below can be used to interpret the correlation coefficients (Laerd Statistics, 2018).

The relationship between knowledge and understanding of CAT and the comfort of adopting CAT was found to be statistically significant, with a weak positive correlation (ρ = 0,267, p < 0,01). This indicates that the higher the level of understanding of CAT that students and lecturers displayed, the higher their comfort with adopting CAT.
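For readers unfamiliar with the statistic, Spearman's coefficient is the Pearson correlation computed on ranks rather than on raw scores, with tied values sharing the mean of their rank positions. The sketch below is a pure-Python illustration of that calculation; the function names and the example data are hypothetical, and the study itself obtained its coefficients in SPSS.

```python
def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's correlation: Pearson correlation of the two rank lists."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    spread_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    return cov / (spread_x * spread_y)


# Hypothetical scores: understanding of CAT vs comfort with adopting it.
understanding = [1, 2, 3, 4, 5]
comfort = [1, 3, 2, 5, 4]
print(round(spearman_rho(understanding, comfort), 3))  # 0.8
```

Because only ranks are used, the coefficient captures whether the two measures rise together, not whether they do so in a straight line.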

The acceptance and adoption of technology, using the technology acceptance model, is based on how useful the user perceives the technology to be, its ease of use, and the credibility and reliability of the technology to perform the required task, such as the administration of a test (Nathaniel et al., 2021). Given the emergence of remote teaching and learning during the pandemic, many lecturers and students were forced to embrace the use of technology and adopt it. However, with time, they have become much more familiar and comfortable with the resource as they realise its ease of use and associated benefits (Mpungose, 2020; Zalat et al., 2021). In this study, therefore, this indicated comfort in adopting CAT in their respective modules.

Personal perceptions of CAT

Lecturers and students were asked to respond to a Likert scale survey comprised of several statements (see Figure 3) to determine their personal perceptions of CAT and if any specific requirements and considerations were needed for them to engage with CAT. From the results obtained, it was clear that most of the participants indicated agreement with all statements pertaining to the Personal Perceptions of the CAT scale. The only statement that differed from this pattern was "CAT would hinder my lecturing/learning performance given that I am not digitally comfortable1 with navigating online platforms" that had a majority disagreement (51,8%). This highlights that students and lecturers perceive themselves to be fairly skilled in engaging on various online platforms, so for them the adoption of CAT would be easier and there would not be a very heightened need for continuous and vigorous training to upskill students and lecturers.

Regarding questions related to using the institution's data/Wi-Fi and campus technology infrastructure when engaging with CAT, there were differing responses, with 30,7% and 36,9% indicating disagreement, respectively. This showed that students are not dependent on the institution to provide access to Wi-Fi/data and campus infrastructure should CAT be implemented.

A study by Hogan (2014) on the efficiency and precision of CAT established that participants preferred the use of CAT over PBT when assessed, with Apostolou et al. (2009) highlighting the instructor's influence and management of students' perceptions as a key variable affecting students' perceptions of CAT. An EFA using principal axis factoring was performed on Personal Perceptions data. One factor was extracted with a KMO-MSA of 0,707; the cumulative variance percentage extracted was 17,932. The variables with loadings > 0,5 were included, with the factor comprising five variables, as can be seen in Table 6.

Academic perceptions of CAT

Lecturers and students were asked, in several statements, to assess their academic perceptions of CAT and to indicate specific requirements, if any, they needed to engage with it. From the results obtained (see Figure 4), most participants indicated agreement with all statements about the Academic Perceptions of Adaptive Testing scale. Regarding disagreement, the greatest disagreement percentage (28,2%) was seen on whether "CAT should be engaged only during allotted times (the same duration as a test/exam)." Consequently, enabling CAT to be completed at any time may help to reduce a student's examination anxiety and result in a more accurate representation of their understanding. In this way, lecturers can construct a richer profile of students' development and determine where additional assistance is needed (New Meridian, 2022). Mohd-Ali et al. (2019) found that among mathematics students, CAT assisted in reducing their anxiety regarding the examination. However, further research is required to understand if similar findings are to be found in other disciplines.

CAT may work well in an online institution that favours self-paced learning. However, one needs to ask if CAT would be appropriate for summative assessments as well. Questions about moderation, standardisation, benchmarking, etc., become an issue since every student will not be given the same question but, rather, questions that match their cognitive ability.

An exploratory factor analysis was performed on the Academic Perceptions data. The extraction method used was principal axis factoring and the eigenvalue > 1 rule was applied. However, on review, we noticed that one of the factors had only one item. This required an additional iteration of the EFA process to extract two factors that provided the resulting pattern matrix. The cumulative variance percentages extracted for factors 1 and 2 were 29,261 and 34,176 respectively, which is lower than ideal. However, given the exploratory nature of the study, the solution was retained. Two factors were extracted (see Table 7), with a KMO-MSA of 0,837.

To determine whether there are significant relationships between Knowledge and Understanding of CAT (ATF1) and Academic Perceptions (APATF1 & APATF2), Spearman's correlation coefficients were obtained for the factors (using the factor scores).

The relationship between Knowledge and Understanding of CAT (ATF1) and Academic Perceptions of CAT factor 1 (APATF1) was statistically significant, with a moderate positive correlation (ρ = 0,426, p < 0,01). A statistically significant and moderate positive correlation (ρ = 0,431, p < 0,01) was also found between ATF1 and APATF2. Although the correlations were not strong, the results suggest that the more one knows about and understands adaptive testing, the higher one's academic perceptions of CAT (factors 1 and 2) are likely to be.
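As a minimal sketch of how a Spearman coefficient of this kind is obtained, the following computes ρ as the Pearson correlation of tie-averaged ranks. The factor scores are invented for illustration and are not the study's data, and the function names are hypothetical; the authors' statistical software is not stated in this section.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1  # mean of 1-based positions in the run
        for k in range(start, end + 1):
            ranks[order[k]] = tied_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical factor scores for six respondents (not the study's data).
knowledge = [2.1, 2.4, 2.4, 2.9, 3.0, 2.6]
academic = [2.3, 2.2, 2.8, 2.7, 3.1, 2.5]
print(round(spearman_rho(knowledge, academic), 3))
```

Because the coefficient is computed on ranks, it measures how consistently one score rises with the other rather than whether the relationship is strictly linear.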

A similar analysis was performed for the Personal Perceptions factor, and no statistically significant associations were found. Overall, 53% of respondents (students and lecturers) had a negative personal perception of CAT, whereas 41% had a neutral personal perception of CAT.

Student vs Lecturer

Given the small number of respondents in the lecturer category, Mann-Whitney U tests were conducted to establish if there were significant differences in the factor scores for student vs lecturer.
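The quantities reported for these tests (U, Z, mean ranks, and p) can be illustrated with a short sketch. It assumes the common convention of reporting the smaller of the two U values, which yields a negative Z, and uses the normal approximation without a tie correction. The scores are invented and the function names are hypothetical; this is not the authors' procedure or data.

```python
from math import erfc, sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = tied_rank
        start = end + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return U, Z, two-sided p (normal approximation) and the mean ranks."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))  # rank the pooled sample
    rank_sum_a = sum(ranks[:n_a])
    rank_sum_b = sum(ranks[n_a:])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u = min(u_a, n_a * n_b - u_a)  # assumption: report the smaller U
    mean_u = n_a * n_b / 2
    sd_u = sqrt(n_a * n_b * (n_a + n_b + 1) / 12)  # no tie correction applied
    z = (u - mean_u) / sd_u
    p = erfc(abs(z) / sqrt(2))  # two-sided p-value under the normal approximation
    return {
        "u": u,
        "z": z,
        "p": p,
        "mean_rank_a": rank_sum_a / n_a,
        "mean_rank_b": rank_sum_b / n_b,
    }


# Hypothetical factor scores (not the study's data).
students = [2.1, 2.4, 2.5, 2.6, 2.3, 2.7]
lecturers = [2.8, 2.9, 3.0]
result = mann_whitney_u(students, lecturers)
print(result)
```

A higher mean rank for one group indicates that its scores tend to sit higher in the pooled ordering, which is how the mean ranks in the results that follow should be read.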

A significant difference was obtained in the Knowledge and Understanding of CAT according to Position (student vs lecturer) (U = 5194,500, Z = -5,550, p < 0,01). On review of the Mean Ranks, it was found that the lecturers had a higher mean rank of 467,88, compared to the students, who had a mean rank of 301,87. This was supported when reviewing the descriptive statistics; it was found that the lecturers' factor scores were higher (M = 2,857, sd = 0,244) than the students' (M = 2,564, sd = 0,368).

A significant difference was obtained for Academic Perceptions of CAT factor 1 according to Position (student vs lecturer) (U = 9113,00, Z = -2,060, p = 0,039). On review of the Mean Ranks, it was found that the lecturers had a higher mean rank of 365,68, compared to the students, who had a mean rank of 309,05. This result was confirmed when reviewing the mean factor scores: it was found that the lecturers scored higher (M = 2,78, sd = 0,447) than the students (M = 2,656, sd = 0,463).

A significant difference was obtained for Academic Perceptions of CAT factor 2 according to Position (student vs lecturer) at a 10% level (U = 9265,000, Z = -1,836, p = 0,066). On review of the Mean Ranks, it was found that the lecturers had a higher mean rank of 361,68, compared to the students, who had a mean rank of 309,31. This was supported in a review of the descriptive statistics, where it was found that the lecturers scored higher (M = 2,702, sd = 0,476) than the students (M = 2,576, sd = 0,499).

A significant difference was obtained for Comfort according to Position (student vs lecturer) (U = 6154,500, Z = -4,142, p < 0,01). On review of the Mean Ranks, it was found that the lecturers had a higher mean rank of 400,66, compared to the students, who had a mean rank of 285,73. It was also found that the lecturers scored higher (M = 3,845, sd = 0,302) than the students (M = 3,500, sd = 0,593).

However, no significant differences were established for Personal Perceptions of CAT according to Position (student vs lecturer). Accordingly, based on the results, it is evident that lecturers have better knowledge and understanding of CAT and a greater level of comfort with adopting it. From an academic perspective, lecturers believe more strongly than students that CAT will be beneficial to students' learning, since they agreed with more of the statements than the students did. The study found no significant difference between males' and females' perceptions of CAT.

Recommending the use of CAT

On a scale of 1 to 10, with 1 being "Extremely Not Confident" and 10 being "Extremely Confident", lecturers and students were asked, "Do you believe that CAT is the future of assessing students' knowledge and competence in a module?" An average score of 8,2 was received for lecturers and 7,4 for students, implying that lecturers and students are confident that CAT will be used for assessing students as HEIs and PHEIs evolve in the post-Covid and technology-driven world.

Lecturers and students were also asked, "Do you believe your higher education institution should implement CAT as an assessment tool?" In response, 87% of students and 78% of lecturers indicated that their HEI or PHEI should implement CAT.

Implications and the way forward

CAT supports personalised learning (asynchronism). Since prolonged engagement with online learning can result in students experiencing mental and physical fatigue (Miller, 2020), it is important that students manage their time efficiently. Personalised learning speaks to holistic student learning so the mental well-being of students must also be at the forefront of institutional decision-making to ensure that students are fully engaged in their learning experience.

Understanding the perceptions of students and lecturers towards CAT can assist in decision-making. The inclusion of technology in teaching and learning does not automatically enhance the learning experience but it does create additional layers of complexity if the teaching pedagogy is not aligned with the functionality of the chosen technology and curriculum (Kirkwood & Price, 2014). Therefore, by understanding the stance of students and lecturers, HEIs and PHEIs can tailor the user experience and interaction of CAT to ensure that it is fit for purpose and aligned with the teaching pedagogy of the institution.

For those students who do not possess the initial competencies in navigating online learning, the anxiety of being outside of their comfort zone, coupled with the lack of support and community, can lead to their being emotionally despondent and unresponsive to online learning (Davidson, 2015; Gillet-Swan, 2017). As a result, it is recommended that HEIs and PHEIs have dedicated support wellness centres to assist students. Furthermore, inexperienced students can be partnered with more experienced ones to help orient them to the HEI's and PHEI's learning culture and online testing methodology. Further, they can create a Short Learning Programme or a short, simple, and easy-to-follow infographic how-to guide on how to engage with the online testing system.

Although this study found no significant difference between males' and females' perceptions of CAT, Cai et al. (2017) contended that males tend to have a more favourable attitude towards technology than females, with a minimal reduction in the sex gap when compared to studies conducted 20 years ago. In "Gendered patterns in use of new technologies," (2023, July 5), based on its 2020 report, the European Institute for Gender Equality Index indicated that these historical differences still exist, but the gap is decreasing. Moreover, although similar findings could not be established in this study, it is advised that HEIs and PHEIs be cognisant of these differences and provide the required support as needed.

Further to students' digital competency, HEIs and PHEIs must also ensure that although lecturers are comfortable with understanding CAT, there is also comfort in its design and implementation. It is the responsibility of the HEI and the PHEI to create a content blueprint document that serves to guide examinees' interaction with the assessment method (Redd, 2018). Furthermore, lecturers must be oriented into the CAT system to fully leverage its functionality and design to meet the needs of students, the module and, ultimately, the curriculum.

Given the high comfort levels and acceptance of CAT by students and lecturers, HEIs and PHEIs wanting to implement this assessment methodology must ensure that there is sufficient infrastructure available for students and lecturers to access computer laboratories to engage with this tool (Baik, 2020). However, this will result in a higher cost implication for these institutions. Further to this, they must ensure that experienced individuals with the required competencies develop the CAT system algorithm that is tailored to the needs of the module.

Conclusion

Our study found that, overall, students and lecturers are very comfortable engaging in online teaching and learning, with lecturers showing a greater degree of comfort. Both students and lecturers have a good understanding and knowledge of CAT and are comfortable with adopting it in the respective modules that they teach and study. Overall, a positive perception was noted from the participants' personal and academic perspectives of CAT, with the Spearman's correlation analysis indicating that the better the understanding of CAT, the higher the academic perceptions of CAT. The Mann-Whitney U tests established that lecturers scored higher than the students on the Knowledge and Understanding, Academic Perceptions, and Comfort scales, with no significant difference on Personal Perceptions. An overwhelming majority of students and lecturers felt that CAT is the future of assessing students and would recommend that their HEI or PHEI implement CAT as its assessment methodology.

The lecturer sample was small, respondents in both groups were predominantly female, and the study focused only on PHEIs. As a result, it is recommended that the study be replicated with a larger sample of lecturers, equal representation of males and females, and both PHEIs and public HEIs. The study was also confined to South African PHEIs. It is recommended that the study be conducted in other African countries to establish whether similar findings emerge.

References

Alderson, J. C. (2000). Technology in testing: The present and the future. System, 28(4), 593-603. https://doi.org/10.1016/s0346-251x(00)00040-3

Alkamel, M. A. A., Chouthaiwale, S. S., Yassin, A. A., AlAjmi, Q., & Albaadany, H. Y. (2021). Online testing in higher education institutions during the outbreak of Covid-19: Challenges and opportunities. Studies in Systems, Decision and Control, 348, 349-363. https://doi.org/10.1007/978-3-030-67716-9_22

Alturki, U., & Aldraiweesh, A. (2021). Application of learning management system (LMS) during the Covid-19 pandemic: A sustainable acceptance model of the expansion technology approach. Sustainability, 13(19), 10991. https://doi.org/10.3390/su131910991

Apostolou, B., Blue, M. A., & Daigle, R. J. (2009). Student perceptions about computerized testing in introductory managerial accounting. Journal of Accounting Education, 27(2), 59-70. https://doi.org/10.1016/j.jaccedu.2010.02.003

Asrizal, A., Amran, A., Ananda, A., & Festiyed, F. (2018). Effectiveness of adaptive contextual learning model of integrated science by integrating digital age literacy on grade VIII students. IOP Conference Series: Materials Science and Engineering, 335, 072067. https://doi.org/10.1088/1757-899x/335/1/

Baik, S. (2020, February 3). Computer adaptive testing: Pros & cons. CodeSignal. https://codesignal.com/blog/data-driven-recruiting/pros-cons-computer-adaptive-testing

Barros, J. P. (2018). Students' perceptions of paper-based vs. computer-based testing in an introductory programming course. Proceedings of the 10th International Conference on Computer Supported Education, (Vol. 1), 303-308. https://doi.org/10.5220/0006794203030308

Bolton, H., Matsau, L., & Blom, R. (2020, February 2). Flexible learning pathways: The national qualifications framework backbone. SAQA. https://www.saqa.org.za/sites/default/files/2020-12/Flexible-Learning-Pathways-in-SA-2020-12.pdf

Bougie, R., Sekaran, U., & Wiley, J. (2020). Research methods for business: A skill building approach (8th ed.). John Wiley & Sons.

Cai, Z., Fan, X., & Du, J. (2017). Gender and attitudes toward technology use: A meta-analysis. Computers & Education, 105, 1-13. https://doi.org/10.1016/j.compedu.2016.11.003

Choi, I.-C., Kim, K. S., & Boo, J. (2003). Comparability of a paper-based language test and a computer-based language test. Language Testing, 20(3), 295-320. https://doi.org/10.1191/0265532203lt258oa

"Computer adaptive testing: Background, benefits and case study of a large-scale national testing programme." (2019, December 11). Surpass: Powering Assessment. https://surpass.com/news/2019/computer-adaptive-testing-background-benefits-and-case-study-of-a-large-scale-national-testing-programme

Creswell, J. W., & Creswell, J. D. (2018). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). SAGE.

Davidson, R. (2015). Wiki use that increases communication and collaboration motivation. Journal of Learning Design, 8(3), 94-105.

De Silva, R. D. (2021). Evaluation of students' attitude towards online learning during the Covid-19 pandemic: A case study of ATI students. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4117589

Dillon, A. (1992). Reading from paper versus screens: A critical review of the empirical literature. Ergonomics, 35(10), 1297-1326. https://doi.org/10.1080/00140139208967394

Du Plessis, M., Jansen van Vuuren, C. D., Simons, A., Frantz, J., Roman, N., & Andipatin, M. (2021). South African higher education institutions at the beginning of the Covid-19 pandemic: Sense-making and lessons learnt. Frontiers in Education, 6, 740016. https://doi.org/10.3389/feduc.2021.740016

"Flexible assessment systems enable educators to respond to students' needs and expand educational equity." (2023). New Meridian. https://newmeridiancorp.org/our-approach/

"Gendered patterns in use of new technologies." (2023, July 5). European Institute for Gender Equality Index. https://eige.europa.eu/publications-resources/toolkits-guides/gender-equality-index-2020-report/gendered-patterns-use-new-technologies?language_content_entity=en

Ghanbari, N., & Nowroozi, S. (2021). The practice of online assessment in an EFL context amidst Covid-19 pandemic: Views from teachers. Language Testing in Asia, 11(1). https://doi.org/10.1186/s40468-021-00143-4

Gibbons, C., Bower, P., Lovell, K., Valderas, J., & Skevington, S. (2016). Electronic quality of life assessment using computer-adaptive testing. Journal of Medical Internet Research, 18(9), 1-11.

Gillet-Swan, J. (2017). The challenges of online learning: Supporting and engaging the isolated learner. Journal of Learning Design, 10(1), 20-30.

Han, K. T. (2018). Components of the item selection algorithm in computerized adaptive testing. Journal of Educational Evaluation for Health Professions, 15, 7. https://doi.org/10.3352/jeehp.2018.15.7

Hogan, T. (2014). Using a computer-adaptive test simulation to investigate test coordinators' perceptions of a high-stakes computer-based testing program. [Doctoral dissertation, Georgia State University]. https://doi.org/10.57709/4825558

Hussein, E., Daoud, S., Alrabaiah, H., & Badawi, R. (2020). Exploring undergraduate students' attitudes towards emergency online learning during Covid-19: A case from the UAE. Children and Youth Services Review, 119, 105699. https://doi.org/10.1016/j.childyouth.2020.105699

Ismaili, Y. (2021, February 4). Evaluation of students' attitude toward distance learning during the pandemic (Covid-19): A case study of ELTE university. On the Horizon. World Health Organization. https://pesquisa.bvsalud.org/global-literature-on-novel-coronavirus-2019-ncov/resource/pt/covidwho-1062971?lang=en

Ita, M., Kecskemety, A. K., & Morin, B. (2014, June 15-18). Comparing student performance on computer-based vs. paper-based tests in a first-year engineering course. [Conference session]. ASEE Conference, Indianapolis, IN, United States. https://peer.asee.org/comparing-student-performance-on-computer-based-vs-paper-based-tests-in-a-first-year-engineering-course.pdf

Jin, Y. Q., Lin, C. L., Zhao, Q., Yu, S. W., & Su, Y. S. (2021). A study on traditional teaching method transferring to e-learning under the Covid-19 pandemic: From Chinese students' perspectives. Frontiers in Psychology, 12, 1-14.

Kgosana, R. (2021, January 22). Unisa semester change raises students' ire. The Citizen. https://www.citizen.co.za/news/south-africa/education/unisa-semester-change-raises-students-ire

Khoshsima, H., Morteza, S., Toroujeni, H., Thompson, N., & Ebrahimi, M. (n.d.). Computer-based (CBT) vs. paper-based (PBT) testing: Mode effect, relationship between computer familiarity, attitudes, aversion and mode preference with CBT test scores in an Asian private EFL context. Teaching English with Technology, 19(1), 86-101. https://files.eric.ed.gov/fulltext/EJ1204641.pdf

Khoza, H. H., Khoza, S. A., & Mukonza, R. M. (2021). The impact of Covid-19 in higher learning institutions in South Africa: Teaching and learning under lockdown restrictions. Journal of African Education, 2(3), 107-131.

Kim, M. Y., & Huh, S. (2005). Students' attitude toward and acceptability of computerized adaptive testing in medical school and their effect on the examinees' ability. Journal of Educational Evaluation for Health Professions, 2, 105. https://doi.org/10.3352/jeehp.2005.2.1.105

Kirkwood, A., & Price, L. (2014). Technology-enhanced learning and teaching in higher education: What is "enhanced" and how do we know? A critical literature review. Learning, Media and Technology, 39(1), 6-36.

Laerd Statistics. (2018, January 16). Pearson product-moment correlation. Lund Research Ltd. https://statistics.laerd.com/statistical-guides/pearson-correlation-coefficient-statistical-guide.php

Lilley, M., & Barker, T. (2007). Students' perceived usefulness of formative feedback for a computer-adaptive test. The Electronic Journal of E-Learning, 5, 31-38. https://files.eric.ed.gov/fulltext/EJ1098856.pdf

Lilley, M., Barker, T., & Britton, C. (2005). Learners' perceived level of difficulty of a computer-adaptive test: A case study. Human-Computer Interaction - INTERACT, 3555, 1026-1029. https://doi.org/10.1007/11555261_96

Meccawy, Z., Meccawy, M., & Alsobhi, A. (2021). Assessment in "survival mode": Student and faculty perceptions of online assessment practices in HE during Covid-19 pandemic. International Journal of Educational Integrity, 17(16), 1-24.

Mercer. (2021, February 1). What is a computer-based test? Here's everything you need to know. https://blog.mettl.com/computer-based-test

Miller, K. (2020, January 20). 22 advantages and disadvantages of technology in education. https://futureofworking.com/10-advantages-and-disadvantages-of-technology-in-education/.

Mohd-Ali, S., Norfarah, N., Ilya-Syazwani, J. I., & Mohd-Erfy, I. (2019). The effect of computerized-adaptive test on reducing anxiety towards math test for polytechnic students. Journal of Technical Education and Training, 11(4), 27-35. https://penerbit.uthm.edu.my/ojs/index.php/JTET/article/view/3782

Mpungose, C. B. (2020). Emergent transition from face-to-face to online learning in a South African University in the context of the Coronavirus pandemic. Humanities and Social Sciences Communications, 7(1), 1-9. https://doi.org/10.1057/s41599-020-00603-x

Mtshweni, B. V. (2022). Covid-19: Exposing unmatched historical disparities in the South African institutions of higher learning. South African Journal of Higher Education, 36(1), 234-250. http://dx.doi.org/10.20853/36-1-4507

Nathaniel, S., Makinde, S. O., & Ogunlade, O. O. (2021). Perception of Nigerian lecturers on usefulness, ease of use and adequacy of use of digital technologies for research based on university ownership. International Journal of Professional Development, Learners and Learning, 3(1), ep2106. https://doi.org/10.30935/ijpdll/10881

Nergiz, C., & Seniz, O. Y. (2013). How can we get benefits of computer-based testing in engineering education? Computer Applications in Engineering Education, 21(2), 287-293.

Noyes, J. M., & Garland, K. J. (2009). Computer- vs. paper-based tasks: Are they equivalent? Ergonomics, 51(9), 1352-1375. http://dx.doi.org/10.1080/00140130802170387

Oladele, J. I., & Ndlovu, M. (2021). A review of standardised assessment development procedure and algorithms for computer adaptive testing: Applications and relevance for fourth industrial revolution. International Journal of Learning, Teaching and Educational Research, 20(5), 1-17.

Oladele, J. I., Ayanwale, M. A., & Owolabi, H. O. (2020). Paradigm shifts in computer adaptive testing in Nigeria in terms of simulated evidence. Journal of Social Sciences, 63(1/3), 9-20.

Rahi, S. (2017). Research design and methods: A systematic review of research paradigms, sampling issues and instruments development. International Journal of Economics & Management Sciences, 6(2), 1-5. https://doi.org/10.4172/2162-6359.1000403

Ramadan, A. M. & Aleksandrovna, A. E. (2018, February 5). Computerized adaptive testing. ResearchGate. https://www.researchgate.net/publication/329935670_Computerized_Adaptive_Testing

Redd, B. (2018, September 14). Quality assessment part 5: Blueprints and computerized-adaptive testing. Of That. https://www.ofthat.com/2018/09/quality-assessment-part-5-blueprints.html

Rezaie, M., & Golshan, M. (2015). Computer adaptive test (CAT): Advantages and limitations. International Journal of Educational Investigations, 2(5), 128-137. http://www.ijeionline.com/attachments/article/42/IJEI_Vol.2_No.5_2015-5-11.pdf

Saunders, M., Lewis, P., & Thornhill, A. (2019). Research methods for business students (8th ed.). Pearson Education Limited.

Scott, I. (2018). Higher education system for student success. Journal of Student Affairs in Africa, 6(1), 1-17. https://doi.org/10.24085/jsaa.v6i1.3062

Slack, H. R., & Priestley, M. (2022). Online learning and assessment during the Covid-19 pandemic: Exploring the impact on undergraduate student well-being. Assessment & Evaluation in Higher Education, 48(3), 333-349. https://doi.org/10.1080/02602938.2022.2076804

Song, L., Singleton, E. S., Hill, J. R., & Koh, M. H. (2004). Improving online learning: Student perceptions of useful and challenging characteristics. Internet and Higher Education, 7(1), 59-70. https://www.learntechlib.org/p/102596

Stone, E., & Davey, T. (2014). Computer-adaptive testing for students with disabilities: A review of the literature. ETS Research Report Series, 2011(2), 1-24. https://doi.org/10.1002/j.2333-8504.2011.tb02268.x

Thaba-Nkadimene, K. L. (2020). Editorial: Covid-19 and e-learning in higher education. Journal of African Education, 1(2), 5-12.

Thompson, N. A. (2011, August). Advantages of computerized adaptive testing (CAT). White Paper. Assessment systems. https://assess.com/docs/Advantages-of-CAT-Testing.pdf

Tjønneland, E. N. (2017). Crisis at South Africa's universities: What are the implications for future cooperation with Norway? CMI Brief, 16(3), 1-4. https://www.cmi.no/publications/6180-crisis-at-south-africas-universities-what-are-the

Troussas, C., Krouska, A., & Virvou, M. (2017). Reinforcement theory combined with a badge system to foster student's performance in e-learning environments. [Conference session]. 8th International Conference on Information, Intelligence, Systems & Applications (IISA), Larnaca, Cyprus. https://doi.org/10.1109/iisa.2017.8316421

Vie, J.-J., Popineau, F., Tort, F., Marteau, B., & Denos, N. (2017). A heuristic method for large-scale cognitive-diagnostic computerized adaptive testing. Proceedings of the Fourth (2017) ACM Conference on Learning @ Scale. (323-326). ACM Digital Library. https://doi.org/10.1145/3051457.3054015

Wise, S. L. (2018). Controlling construct-irrelevant factors through computer-based testing: Disengagement, anxiety, & cheating. Education Inquiry, 10(1), 21-33. https://doi.org/10.1080/20004508.2018.1490127

Yildirim, O. G., Erdogan, T., & Çiğdem, H. (2017). The investigation of the usability of web-based assignment system. Journal of Theory and Practice in Education, 13, 1-9.

Zalat, M. M., Hamed, M. S., & Bolbol, S. A. (2021). The experiences, challenges, and acceptance of e-learning as a tool for teaching during the Covid-19 pandemic among university medical staff. PLOS ONE, 16(3), e0248758. https://doi.org/10.1371/journal.pone.0248758

Received: 16 January 2023

Accepted: 15 November 2023

1 Being digitally comfortable means being confident in one's abilities to navigate easily the various online platforms.