
The Independent Journal of Teaching and Learning

On-line version ISSN 2519-5670

IJTL vol.18 n.2 Sandton 2023

Students' perceptions of Computerised Adaptive Testing in higher education

Priya Ramgovind¹; Shamola Pramjeeth²

¹The Independent Institute of Education, South Africa

²The IIE's Varsity College, South Africa

ABSTRACT

The COVID-19 pandemic has forced higher education institutions (HEIs) to reconsider their assessment strategies as learning, development, and engagement move more fluidly into the online arena. The purpose of this research was to investigate students' academic and personal perceptions of computerised adaptive testing (CAT) in higher education, in order to understand students' confidence in adopting CAT. Using a quantitative descriptive research design, an online questionnaire was administered to students at private and public HEIs in South Africa, yielding 600 respondents. The study found that students were comfortable engaging in online learning and held positive perceptions of adopting CAT, with most respondents recommending its implementation. Students believed that CAT would allow more productive interaction with material that meets their needs and learning preferences, without their feeling overburdened. The findings provide HEIs with valuable information on key managerial implications for the successful adoption and implementation of CAT.

Keywords: adaptive testing, assessment methodology, computerised, student perceptions, post-COVID-19 pandemic

INTRODUCTION

Students' learning styles, the speed at which they can assimilate information, and their ability to comprehend what is being taught differ from one individual to the next (Peng, Ma & Spector, 2019). HEIs currently have fit-for-purpose, standardised assessment strategies suited to each module's needs and to the overall qualification. Most of these strategies were designed for face-to-face delivery, relying on paper-based standardised testing that caters to large numbers of students. With the COVID-19 pandemic, lecturers and students had to adapt their varying learning styles and abilities quickly, and under pressure, to the additional complexity of online learning, an area that was unfamiliar to many. Two years into the pandemic, with many institutions using online learning as a key delivery medium, learners and lecturers are beginning to embrace it more because of its adaptability and flexibility (Mpungose, 2020; Zalat, Hamed & Bolbol, 2021). Because the pandemic has challenged and changed conventional teaching, learning, and assessment practices, learners are beginning to reflect on their learning preferences and styles.

The forced use of online learning during the pandemic has shown HEIs and lecturers that personalised learning is possible. Online platforms have enabled the easy collection and real-time analysis of a wide range of information on numerous aspects of learners' behaviour and competency, and allow more detailed and meaningful feedback to be delivered to learners more quickly through their smartphones and tablets. The generic, one-size-fits-all assessment strategy that dominated testing before the pandemic has prompted calls for a renaissance in assessment practices (New Meridian, 2022). Therefore, as online learning grows and students' learning preferences change, the ways in which they are taught and assessed must also evolve. Learning should be an all-inclusive, highly individualised, relative, and continuous partnership between the learner and the educator (Elmore, 2019). In this partnership, the lecturer acts more as a facilitator and activator; assessments should therefore support this form of learning rather than merely measure, evaluate, and assign a score (Elmore, 2019). Assessments should provide helpful information to guide learners, give feedback, and promote the dynamic nature of learning, reporting on the development of learners' capabilities and understanding of content rather than on merit or a pass/fail outcome. Vander Ark (2014:1) writes that students' learning should be such that they are 'able to progress at different rates and with time and support varied to meet individual needs; increased access to care and education to better align with the realities of modern living and working; greater use of the home, the community, and other settings as contexts for 24/7 learning experience'. The forced move to online learning has led students and lecturers to capitalise on additional teaching and learning methods that were previously not considered because established assessment methods, which were effective in their own right, were already in place.

Given the many assessment possibilities now available and students' willingness to engage more online with their learning, one assessment method that aligns with this new digital student is computerised adaptive testing (CAT). Lee (2021) highlights that COVID-19 has been a catalyst for student-centric learning, in which learning is more closely aligned and tailored to the student's needs and interests so that 'full awareness of the learner' is attained (Kaminskiene & De Urraza, 2020:3).

The purpose of this research was to understand student perceptions and applications of CAT in South African HEIs. The key research objectives that guided this study were the following:

• To determine the level of students' knowledge and understanding of CAT.

• To determine students' personal and academic perceptions of CAT.

• To determine students' comfort with adopting CAT as an assessment tool in the modules they are studying.

A student-centric CAT approach to assessment is not standard practice in South African HEIs, despite their adoption of online assessment methods (Queiros & de Villiers, 2016; Minty, 2018; Fynn & Mashile, 2022). As students engage more in online learning and grow more comfortable with it, the findings of this study provide HEIs with valuable information on students' comfort with adopting such a testing methodology and on whether CAT should be implemented at their institutions. This understanding should make a change in assessment strategy easier to implement, given that students' willingness to adopt and accept such a strategy is high. There is currently a lack of research on CAT adoption before implementation and on student perceptions of CAT in higher education, especially in South Africa. CAT is not a new assessment tool and has been implemented in first-world countries (Surpass, 2019; New Meridian, 2022), but to a lesser extent in Africa. Furthermore, the contemporary literature lacks an understanding of student perceptions of CAT prior to its implementation, especially in the South African HE environment.

LITERATURE REVIEW

Numerous countries around the world are at the tail end of responding to the COVID-19 pandemic (Charumilind, Craven, Lamb, Sabow, Singhal & Wilson, 2022). However, the effects and implications of the pandemic are still being felt and will continue to be felt in the future. The teaching and learning practices that emerged as a contingency, necessity, or reflex have seen an increased reliance on e-Learning at institutions with the existing infrastructure, and on Emergency Remote Teaching (ERT) as a more temporary solution (van Wyk, Mooney, Duma & Faloye, 2020; Zalat, Hamed & Bolbol, 2021). The construction of a learning environment that aligns with the changing education landscape is therefore fundamental to the transformation of teaching and learning practices (Li & Lalani, 2020). Looking ahead, HEIs will draw on the lessons learned from the pandemic, and on students' and lecturers' continuing adoption of and growing comfort with online learning, to create adaptive e-Learning methods that leverage the electronic learning environment best suited to the needs of individual students (Ramadan & Aleksandrovna, 2018), and to embrace the smart learning environment (Peng et al., 2019). Smart learning combines smart device technologies with intelligent technologies to create a learning environment tailored to the needs and learning of different students, resulting in an improved and more effective learning experience (Peng et al., 2019).

What are Adaptive Learning Systems?

Adaptive learning systems are digitally designed to take into account students' level of ability, skill attainment, and needs, using a combination of automated procedures and instructor interventions (Troussas, Krouska, & Virvou, 2017). These systems use student proficiency to determine exactly what a student knows before moving them sequentially along the learning path, so that the learning outcomes are comprehensively achieved (Chrysafiadi, Troussas & Virvou, 2018).

CAT systems are testing-based systems in which automated procedures are used for student self-assessment and diagnosis. The premise of CAT is that the questions posed to a student should be neither too difficult nor too easy (Rezaie & Golshan, 2015). The aim of CAT is to improve testing efficiency, that is, the precision of a test score relative to the length of the test. An adaptive test can therefore either reach the same precision as a conventional test in less time, or yield a more precise score than a conventional test of equal length (Thompson, 2019).
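The premise that items should be neither too difficult nor too easy can be made concrete with item response theory. The sketch below is illustrative only and assumes the one-parameter logistic (Rasch) model, which the article does not specify: the probability of a correct answer is P = 1 / (1 + exp(-(θ - b))) for ability θ and item difficulty b, and an item's Fisher information is P(1 - P), which peaks at 0.25 when b equals θ. The item pool and function names are hypothetical.

```python
import math

def p_correct(theta: float, b: float) -> float:
    """Rasch (1PL) probability that a student of ability theta answers an item of difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def item_information(theta: float, b: float) -> float:
    """Fisher information of a Rasch item at ability theta: P * (1 - P)."""
    p = p_correct(theta, b)
    return p * (1.0 - p)

def most_informative(theta: float, difficulties: dict[str, float]) -> str:
    """Return the id of the item that gives the most information at ability theta."""
    return max(difficulties, key=lambda item: item_information(theta, difficulties[item]))

# Hypothetical item pool: item id -> difficulty on the same logit scale as ability.
pool = {"easy": -2.0, "medium": 0.0, "hard": 2.0}

for theta in (-1.8, 0.3, 2.5):
    print(theta, most_informative(theta, pool))
```

A student provisionally estimated at θ = -1.8 is given the easy item and one at θ = 2.5 the hard item; items far from the student's level carry little information about that student.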

Computerised Adaptive Testing

Adaptive tests that are generated automatically by computer systems or applications are known as computerised adaptive tests (Vie, Popineau, Tort, Marteau & Denos, 2017). There are several types of CAT, but they all share two steps. In the first step, the most appropriate item(s) are selected and administered based on the student's current estimated level of understanding. In the second step, the response(s) to the item(s) are used to refine the estimate of the student's performance. This two-step process continues until the student has answered a predetermined number of questions, or until a predetermined level of measurement precision has been reached (Gibbons, Bower, Lovell, Valderas, & Skevington, 2016). Constructing an algorithm that selects the most suitable item, activity, or exercise from an item pool to meet students' specific needs requires the identification and evaluation of criteria relating to each student's needs and abilities.
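The two-step cycle described above can be sketched as a loop. This is a minimal illustration under assumptions the article does not state: a Rasch item model, maximum-information item selection, expected a posteriori (EAP) ability estimation with a standard normal prior, and two stopping rules (a maximum number of items, or a target posterior standard deviation used as the standard error). The item pool, the simulated student, and all names are hypothetical.

```python
import math
import random

def p_correct(theta: float, b: float) -> float:
    """Rasch probability of a correct response (assumed item model)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

# Ability grid from -4.0 to 4.0 logits, used for EAP estimation.
GRID = [x / 10 for x in range(-40, 41)]

def eap_estimate(responses):
    """Posterior mean and SD of ability from (difficulty, correct) pairs, with a N(0, 1) prior."""
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalised standard normal prior
        for b, correct in responses:
            p = p_correct(theta, b)
            w *= p if correct else 1.0 - p
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(GRID, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(GRID, weights)) / total
    return mean, math.sqrt(var)

def run_cat(pool, answer, max_items=30, se_target=0.5):
    """Give items adaptively until max_items are used or the posterior SD falls to se_target."""
    remaining = dict(pool)
    responses = []
    theta, se = 0.0, 1.0  # prior mean and SD before the first item
    while remaining and len(responses) < max_items and se > se_target:
        # Step 1: choose the unused item with maximum information P(1 - P) at the current estimate.
        def information(item):
            p = p_correct(theta, remaining[item])
            return p * (1.0 - p)
        item = max(remaining, key=information)
        b = remaining.pop(item)
        # Step 2: record the response and refine the ability estimate.
        responses.append((b, answer(item, b)))
        theta, se = eap_estimate(responses)
    return theta, se, len(responses)

# Hypothetical pool of 41 items with difficulties from -3.0 to 3.0 logits.
pool = {f"q{i}": round(-3.0 + 0.15 * i, 2) for i in range(41)}

# Simulated student with an assumed true ability of 1.0, answering at random per the Rasch model.
rng = random.Random(42)

def simulated_student(item, b):
    return rng.random() < p_correct(1.0, b)

theta_hat, se_hat, n_used = run_cat(pool, simulated_student)
print(f"estimate={theta_hat:.2f}, SE={se_hat:.2f}, items used={n_used}")
```

Here `max_items` plays the role of the predetermined number of questions and `se_target` the precision rule; whichever is met first ends the test.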

Numerous procedures for implementing CAT have been proposed, because each institution and its requirements differ and no single standardised approach can be used (Moosbrugger & Kelava, 2012). Institutions seeking to implement CAT must therefore understand the unique characteristics of their own CAT programme. According to Ramadan and Aleksandrovna (2018), a truly adaptive CAT must include several standard components: an item pool; a decision rule for selecting the first item; a method for selecting subsequent items or sets of items so as to maximise efficiency; content balancing, so that the test items reflect the required content; and termination criteria.
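The content-balancing component can be illustrated with a simple quota rule: pick the content area that is furthest below its target share of the test, then give the most informative unused item from that area. This is one possible approach, not the procedure Ramadan and Aleksandrovna (2018) describe; the Rasch information function, the content areas, targets, and item difficulties are all hypothetical.

```python
import math
from collections import Counter

def info(theta, b):
    """Rasch item information P(1 - P) at ability theta (assumed item model)."""
    p = 1.0 / (1.0 + math.exp(-(theta - b)))
    return p * (1.0 - p)

def select_balanced(theta, pool, targets, administered):
    """Most informative unused item from the content area furthest below its target share.

    pool: item id -> (content area, difficulty)
    targets: content area -> target proportion of the test
    administered: list of item ids already given
    """
    counts = Counter(pool[i][0] for i in administered)
    n = len(administered)
    unused = [i for i in pool if i not in administered]

    def shortfall(area):
        # Target share minus current share; the most under-represented area wins.
        current = counts[area] / n if n else 0.0
        return targets[area] - current

    area = max(sorted({pool[i][0] for i in unused}), key=shortfall)
    candidates = [i for i in unused if pool[i][0] == area]
    return max(candidates, key=lambda i: info(theta, pool[i][1]))

# Hypothetical pool and content targets.
pool = {
    "alg1": ("algebra", -0.5), "alg2": ("algebra", 0.4),
    "geo1": ("geometry", 0.1), "geo2": ("geometry", 1.2),
    "stat1": ("statistics", -1.0),
}
targets = {"algebra": 0.5, "geometry": 0.3, "statistics": 0.2}

given = []
for _ in range(4):
    given.append(select_balanced(0.0, pool, targets, given))
print(given)  # ['alg2', 'geo1', 'stat1', 'alg1']
```

The ability estimate is held at 0.0 here to isolate the balancing rule; in a full CAT it would be updated after each response.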

Advantages of Computerised Adaptive Testing

Thompson (2011), Rezaie and Golshan (2015), and Surpass (2019) describe several advantages of CAT. First, tests are shorter: testing time can be reduced by 50% or more. Second, equiprecision means that all examinees are measured with the same precision, which makes the examination experience more appropriate for each examinee (Zandvliet & Farragher, 1997; Baik, 2022) and thus bolsters their motivation: low-achieving examinees feel better, and high-achieving examinees feel challenged, after a CAT. CAT also decreases the stress, anxiety, boredom, and fatigue that an examinee may experience (Ramadan & Aleksandrovna, 2018). Because the CAT algorithm is flexible and presents different examinees with different items, assessments are more secure when administered and can be adapted to various requirements. Examinees can also re-test themselves once they have received a score and have had a chance to work on areas in which they are not yet competent. Online assessments allow a richer user interface through the integrated use of graphics, and allow assessment content to be presented dynamically (Han, 2018). A key advantage viewed favourably by assessors is the immediate computation of scores, which gives both the examinee and the assessor a quick 'snapshot' of the examinee's performance (Stone & Davey, 2011).
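Equiprecision can be expressed with item response theory. The relations below assume a Rasch (one-parameter logistic) item model, which the article does not specify, with ability θ, difficulty b_i of item i, n items administered, and a hypothetical target standard error s* = 0.3. An adaptive test keeps giving items until the test information at the examinee's estimated ability is high enough for the standard error to reach the target, so every examinee ends with approximately the same precision.

```latex
\begin{aligned}
P_i(\theta) &= \frac{1}{1 + e^{-(\theta - b_i)}}, \qquad
I(\theta) = \sum_{i=1}^{n} P_i(\theta)\bigl(1 - P_i(\theta)\bigr),\\
\operatorname{SE}(\hat{\theta}) &\approx \frac{1}{\sqrt{I(\hat{\theta})}},\\
\operatorname{SE}(\hat{\theta}) \le s^{*} &\iff I(\hat{\theta}) \ge \frac{1}{(s^{*})^{2}},
\qquad s^{*} = 0.3 \;\Rightarrow\; I(\hat{\theta}) \ge \frac{1}{0.09} \approx 11.1.
\end{aligned}
```

Because a single Rasch item contributes at most 0.25 (when b_i = θ), reaching I(θ̂) ≥ 11.1 takes at least 45 items even when every item is perfectly targeted. Items that are too easy or too hard for a given examinee contribute less, which is why a fixed-form test needs more items to measure students at the extremes with the same precision.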

Disadvantages of Computerised Adaptive Testing

The benefits of CAT cannot be viewed in isolation. CAT also presents challenges, and institutions should understand them fully so that contingencies can be put in place to address or mitigate them. One of the most fundamental challenges is students' computer literacy (Alderson, 2000): students who have not had sufficient exposure to, and involvement with, technology in an educational setting will struggle to adopt CAT. Furthermore, Thompson (2011) states that in a CAT it is almost impossible for examinees to return to questions they have already answered. In addition, items run the risk of being overexposed, because the algorithm is designed to select the best item and so tends to select the same items repeatedly; an exposure-control algorithm is therefore required (Rezaie & Golshan, 2015). The test is designed to reflect an examinee's skill and ability; however, an examinee experiencing extreme anxiety may answer poorly, and because the system does not allow them to go back and reattempt a question, they can be left with a poor score that reflects testing anxiety rather than inability. For CAT to be implemented successfully, a large sample size and the necessary expertise are needed to ensure the validity and reliability of the assessment method. An institution wanting to adopt CAT must also ensure that its computer lab infrastructure can support the required number of students at a time (Oladele, Ayanwale & Owolabi, 2020).
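One simple way to control item exposure, offered here as an illustration rather than as the algorithm Rezaie and Golshan (2015) discuss, is the "randomesque" approach: instead of always giving the single most informative item, choose at random among the k most informative unused items. The Rasch information function, the pool, and the value of k below are assumptions.

```python
import math
import random
from collections import Counter

def info(theta, b):
    """Rasch item information P(1 - P) at ability theta (assumed item model)."""
    p = 1.0 / (1.0 + math.exp(-(theta - b)))
    return p * (1.0 - p)

def select_greedy(theta, pool, used):
    """Always return the single most informative unused item."""
    unused = [i for i in pool if i not in used]
    return max(unused, key=lambda i: info(theta, pool[i]))

def select_randomesque(theta, pool, used, k, rng):
    """Return a random choice among the k most informative unused items."""
    unused = [i for i in pool if i not in used]
    ranked = sorted(unused, key=lambda i: info(theta, pool[i]), reverse=True)
    return rng.choice(ranked[:k])

# Hypothetical pool of 10 items (difficulties in logits).
difficulties = [-0.9, -0.7, -0.5, -0.3, -0.1, 0.1, 0.3, 0.5, 0.7, 0.9]
pool = {f"q{i}": b for i, b in enumerate(difficulties)}
rng = random.Random(0)

# First item shown to 1,000 examinees who all start at the same provisional ability of 0.0.
greedy = Counter(select_greedy(0.0, pool, set()) for _ in range(1000))
spread = Counter(select_randomesque(0.0, pool, set(), k=4, rng=rng) for _ in range(1000))
print(greedy)  # one item is shown to all 1,000 examinees
print(spread)  # exposure is shared among the four most informative items
```

A larger k lowers the maximum exposure rate further but gives up some measurement efficiency, because less informative items are sometimes chosen.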

Perceptions and Implementation of CAT

The current reliance on computers to facilitate teaching and learning has led to increased interest in delivering assessment through online media. Although CAT originated in the discipline of psychology, contemporary studies by Harrison, Geerards, Ottenhof, Klassen, Riff, Swan, Pusic, and Sidey-Gibbons (2019), Xu, Jin, Huang, Zhou, Li, and Zhang (2020), and Stochl, Ford, Perez, and Jones (2021) highlight its implementation. As understanding of CAT grew, it was found to be equally applicable to other disciplines, including higher education. Studies by Oppl, Reisinger, Eckmaier and Helm (2017), Eggan (2018), Diahyleva, Gritsuk, Kononova, and Yurzhenko (2020), Oladele and Ndlovu (2021), and Oladele (2021) show the implementation of CAT in various higher education settings. Existing studies of student perceptions of CAT include those by Lilley, Barker, and Britton (2005) and Lilley and Barker (2007); however, these studies examined student perceptions during implementation, not before it. There is currently no literature that examines students' perceptions of CAT as a precursor to implementation, although countless studies test the efficacy of CAT in their respective disciplines. The literature gap therefore lies in the lack of understanding of student perceptions of CAT prior to its acceptance and implementation in the contemporary higher education environment, especially against the backdrop of post-pandemic higher education in South Africa. The purpose of this research is to address this gap, particularly in the South African context, given its multicultural and multilingual students with varying socioeconomic conditions.

RESEARCH METHODOLOGY

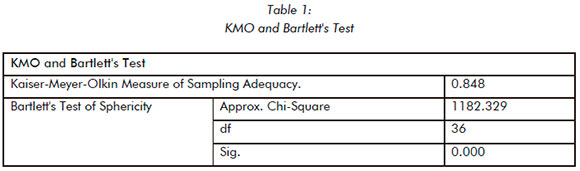

The research paradigm that guided this study was positivism (Creswell & Creswell, 2018). The study was descriptive in nature, as the researchers aimed to describe students' perceptions of, and comfort with, adopting CAT, given that there is currently little or no previous research on CAT as an assessment approach in the South African higher education (HE) landscape to serve as a reference point (Rahi, 2017; Saunders, Lewis, & Thornhill, 2019). Using a quantitative methodological approach, the study sought to understand HE students' views on adopting CAT as opposed to their current standardised assessments, and their perceptions of such a testing methodology. The questionnaire design was informed by literature on CAT. The closed-ended questionnaire assessed students' level of comfort with online learning, their knowledge and understanding of CAT, their personal and academic perceptions of CAT, and whether they believed CAT should be implemented at their HEI. Data were collected using the Microsoft Forms online survey platform between June and July 2022. The target population was undergraduate and postgraduate students at private and public HEIs in South Africa. Using purposive sampling, a non-probability sampling method, the researchers distributed the survey link to the target population via email. The survey was open for responses for two weeks. Institution names were not included in the survey, to ensure respondents' anonymity. An online questionnaire was chosen as the most suitable instrument given the geographical spread of public and private HEIs across the major provinces of South Africa. According to Sekaran and Bougie's (2016) sample size table, the appropriate sample size for a target population greater than 100,000 at a 95% confidence level is 384. The researchers targeted 400 responses with a 50% completion rate. A total of 600 students completed the questionnaire.
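Published sample-size tables of this kind are usually derived from a formula such as Cochran's, n0 = z²p(1 - p)/e². Assuming a 95% confidence level (z ≈ 1.96), a ±5% margin of error, and the most conservative proportion p = 0.5 (assumptions, since the article cites the table rather than a formula), the formula gives approximately 384 for a very large population, which matches the figure used in the study. The optional finite population correction shows why the required size changes little once the population exceeds about 100,000.

```python
def required_sample(z=1.96, p=0.5, margin=0.05, population=None):
    """Cochran's sample size, with an optional finite population correction."""
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    if population is None:
        return n0
    return n0 / (1 + (n0 - 1) / population)

print(round(required_sample(), 2))  # 384.16 for an effectively infinite population
print(round(required_sample(population=100_000), 1))  # slightly smaller for a population of 100,000
```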
Of the 600 responses, 589 were from private HEIs and 11 from public HEIs; the findings should therefore be generalised to public HEIs with caution. The data collected in Microsoft Forms were exported to an Excel spreadsheet, cleaned, and coded. Descriptive and inferential statistical analyses were performed using SPSS version 28. Descriptive statistics (mean, median, mode, and standard deviation) were used to summarise the sample characteristics and to establish measures of central tendency, quantiles, and dispersion. Cross-tabulations with chi-square and Cramer's V tests were also performed. Exploratory factor analysis (EFA) was performed to explore the underlying factor structure of the questionnaire items, and Pearson correlation coefficients were obtained to assess whether there were significant correlations between the factors. Before the EFA, the suitability of the data was assessed using the Kaiser-Meyer-Olkin Measure of Sampling Adequacy (KMO-MSA) and Bartlett's Test of Sphericity: the KMO-MSA value had to be greater than 0.5 and Bartlett's test statistically significant. As seen in Table 1 below, both conditions were satisfied, and the data were therefore considered suitable for EFA. To assess internal consistency, a Cronbach's alpha reliability test was performed, returning a value of 0.786, which was deemed adequate.
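Cronbach's alpha compares the sum of the individual item variances with the variance of respondents' total scores: α = k/(k - 1) × (1 - Σσ²ᵢ / σ²ₜ), where k is the number of items. The sketch below is a plain-Python illustration of that formula; the Likert-scale responses are hypothetical and are not the study's data.

```python
from statistics import pvariance

def cronbach_alpha(scores):
    """Cronbach's alpha for rows of respondents' item scores (each row holds k items)."""
    k = len(scores[0])
    item_variances = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_variance = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)

# Hypothetical 5-point Likert responses: 5 respondents x 3 items.
responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(round(cronbach_alpha(responses), 3))  # 0.913
```

Population and sample variances give the same alpha, because the denominators cancel in the ratio, provided the same one is used throughout.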

Ethical approval for the study was obtained from the Independent Institute of Education in accordance with its ethics review and approval procedures (R. 00036).

RESULTS AND DISCUSSION

The following discussion presents the findings of the study and considers where they are corroborated or contradicted by the relevant literature.

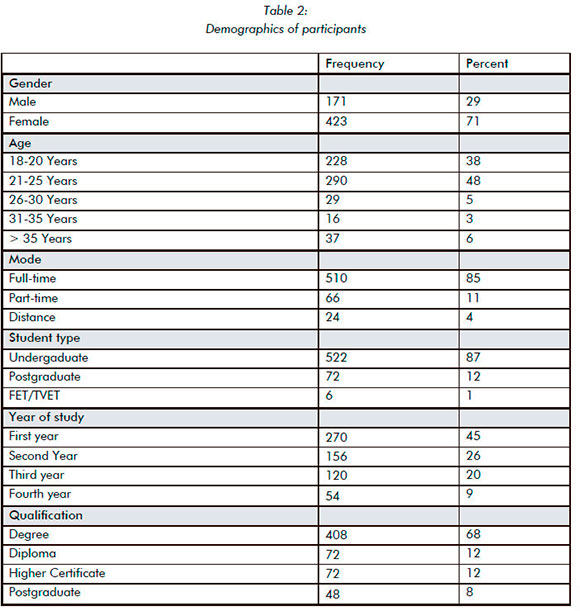

A total of 600 students completed the online questionnaire. Most were in the 18-25 age range (86%), and most respondents were female (71%). In terms of mode of study, 85% were full-time students, and 87% were undergraduates. In terms of year of study, 45% were first-year students, 26% second-year, 20% third-year, and 9% fourth-year. In terms of qualification, the majority (68%) were studying towards a degree.

Engagement of students in online learning and testing

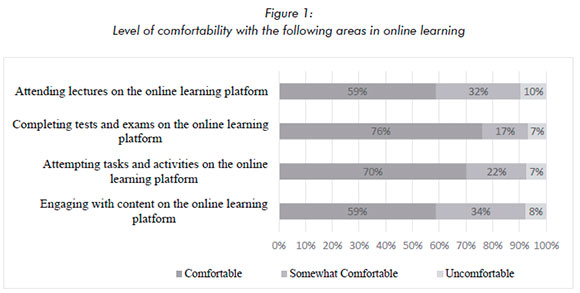

Students were asked whether they had engaged in online learning and testing. Ninety-three percent indicated yes and 7% indicated no. Most of those who had engaged in online learning and testing were comfortable with it: 76% felt most comfortable completing tests and exams on the online platform, followed by attempting tasks and activities (70%), as depicted in Figure 1. Only 59% felt comfortable engaging with the content and attending lectures online, which suggests that students may prefer face-to-face interaction with the lecturer and learning materials. Alkamel, Chouthaiwale, Yassin, AlAjmi, and Albaadany (2021) reported similarly positive attitudes toward online testing among postgraduate and undergraduate students, while Yildirim, Erdogan, and Çigdem (2017) noted that students were comfortable with the system and lecturer feedback but found the time allotted to complete the assessment insufficient, and experienced challenges in accessing a device and the online testing system.

A cross-tabulation and the associated chi-square and Cramer's V test were performed to determine whether there was a statistically significant association between participants' views on:

I. Adopting adaptive testing and their comfort with the online learning platform: lectures. The association was statistically significant, with a small effect size (χ²(4) = 65.953, p < 0.01, Cramer's V = 0.25).

II. Adopting adaptive testing and their comfort with the online learning platform: content engagement. The association was statistically significant, with a small effect size (χ²(4) = 27.834, p < 0.01, Cramer's V = 0.156).

III. Adopting adaptive testing and their comfort with the online learning platform: tasks and activities. The association was statistically significant, with a small effect size (χ²(4) = 26.933, p < 0.01, Cramer's V = 0.154).

IV. Adopting adaptive testing and their comfort with the online learning platform: tests and exams. The association was statistically significant, with a small effect size (χ²(4) = 26.933, p < 0.01, Cramer's V = 0.154).
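Cramer's V rescales the chi-square statistic to a 0-1 range: V = √(χ² / (n × min(r - 1, c - 1))) for an r × c table with n observations. A 3 × 3 table, for example three comfort levels crossed with three views on adopting CAT, has (3 - 1)(3 - 1) = 4 degrees of freedom, which is consistent with the χ²(4) values reported above; the table layout is an assumption, as the article does not show the cross-tabulations. The sketch below computes both statistics from raw counts; the counts are hypothetical.

```python
import math

def chi_square_cramers_v(table):
    """Return (chi-square, degrees of freedom, Cramer's V) for an r x c table of observed counts."""
    n_rows, n_cols = len(table), len(table[0])
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(n_rows)) for j in range(n_cols)]
    chi2 = 0.0
    for i in range(n_rows):
        for j in range(n_cols):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            chi2 += (table[i][j] - expected) ** 2 / expected
    dof = (n_rows - 1) * (n_cols - 1)
    v = math.sqrt(chi2 / (n * min(n_rows - 1, n_cols - 1)))
    return chi2, dof, v

# Hypothetical counts: rows = comfort with online lectures, columns = view on adopting CAT.
counts = [
    [150, 60, 10],
    [90, 120, 30],
    [20, 40, 38],
]
chi2, dof, v = chi_square_cramers_v(counts)
print(f"chi2({dof}) = {chi2:.3f}, Cramer's V = {v:.3f}")
```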

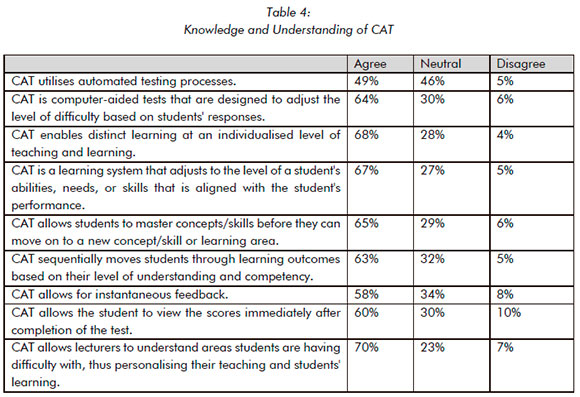

Knowledge and Understanding of CAT

The purpose of CAT and how it is implemented were explained to the students. They were then given statements describing CAT's key characteristics and features, to determine their level of knowledge and understanding of CAT before establishing their perceptions of its use as an assessment tool. Only 49% of the students were aware that CAT uses automated testing, and 58% knew that CAT allows instantaneous feedback. Most students (70%) were aware that CAT allows lecturers to understand the areas students are having difficulty with, thus personalising both teaching and learning. Overall, as Table 4 shows, the students demonstrated a good knowledge and understanding of CAT. EFA was performed on Knowledge and Understanding of CAT, and one factor was extracted using principal axis factoring (PAF) with a minimum factor loading of 0.4 (KMO = 0.848).
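The KMO statistic reported above compares the ordinary correlations between items with their partial (anti-image) correlations: values near 1 mean the items share enough common variance for factor analysis, and values below 0.5 are usually considered unacceptable. The NumPy sketch below computes the overall KMO from a correlation matrix; in practice that matrix would come from the item responses, for example via `np.corrcoef(responses, rowvar=False)`. The three-item matrix is hypothetical.

```python
import numpy as np

def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy for a correlation matrix."""
    corr = np.asarray(corr, dtype=float)
    inv = np.linalg.inv(corr)
    # Partial correlation of each pair of items, controlling for all other items.
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + p2)

# Hypothetical correlation matrix for three items that all correlate at 0.5.
corr = [[1.0, 0.5, 0.5],
        [0.5, 1.0, 0.5],
        [0.5, 0.5, 1.0]]
print(round(kmo(corr), 3))  # 0.692
```

Here each pair correlates at 0.5 but has a partial correlation of 1/3 once the third item is controlled for, giving 0.75 / (0.75 + 3 × (1/3)²) = 9/13 ≈ 0.692.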

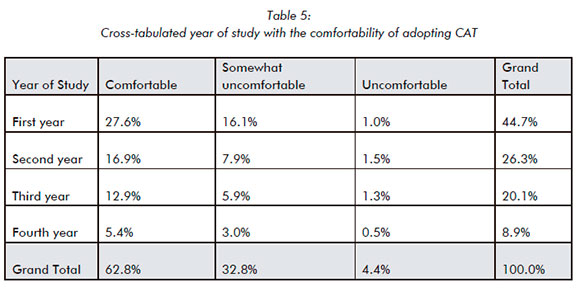

After learning about the key characteristics and features of CAT depicted in Table 4, students were asked how comfortable they would be adopting CAT as an assessment tool in their current studies. Most felt comfortable (63%), 33% felt somewhat comfortable, and 4.4% were uncomfortable. Based on Table 5, second- and third-year students seem more comfortable with adopting CAT (64% of the grand total in each case) than first- and fourth-year students (61%), although the difference is minimal. One possible explanation is that first-year students are new to the tertiary space and are still becoming familiar with online systems and testing methodology, while fourth-year students engage with more critical and application-based questioning and may therefore feel more comfortable with paper-based testing, being unfamiliar with this level of testing in an online format.

Personal Perceptions of CAT

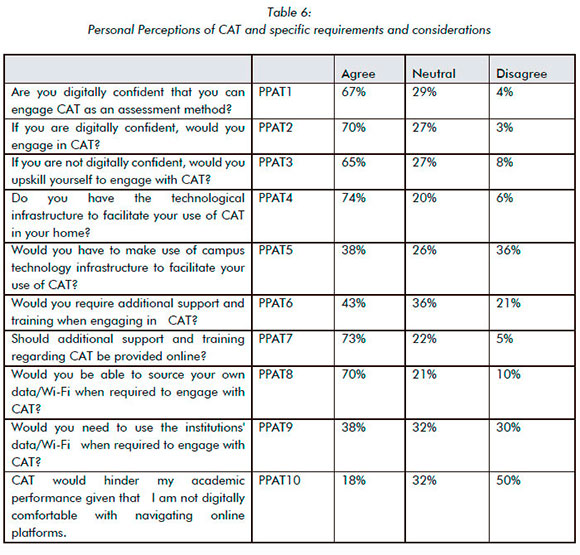

Students were asked to rate the following statements to assess their personal perceptions of CAT and to determine whether specific requirements and considerations are needed for them to engage with CAT. EFA was performed, and a single factor was extracted for Personal Perceptions of Adaptive Testing using PAF with a minimum factor loading of 0.4 (KMO = 0.708). Four items did not load on the factor: PPAT2 'If you are digitally confident, would you engage in AT?', PPAT3 'If you are not digitally confident, would you upskill yourself to engage with AT?', PPAT7 'Should additional support and training regarding AT be provided by a trained facilitator?', and PPAT8 'Should additional support and training regarding AT be provided online?'.

Students indicated a willingness to upskill themselves for CAT where they did not have the necessary skills (65%) and welcomed additional online support and training on CAT (73%). They also showed considerable digital confidence in engaging with CAT (67%). This is consistent with Song, Singleton, Hill, and Koh (2004), who indicate that learner motivation, time management, level of comfort with online technology, and course design affect a student's online learning experience. Although there is an initial degree of hesitancy because CAT is not currently integrated into students' academic assessment structure, this can be overcome through training and support. A study of an introductory managerial accounting class found that students had both negative and positive perceptions of computerised testing, owing to the influence and management of student perceptions by individual instructors (Apostolou, Blue & Daigle, 2009). This highlights the critical role of lecturers, facilitators, or teachers in implementing CAT. Martin (2018) found CAT to be effective for older students.

Most students have the infrastructure at home to support online learning and would therefore be able to engage successfully in CAT for the modules in which they are registered. Hogan (2014) investigated the efficiency and precision of CAT and computerised classification testing and found that test coordinators favoured CAT over pencil-and-paper assessments. Having the appropriate technological infrastructure and access to data/Wi-Fi plays a critical role in CAT's success: students indicated that they have the required technological infrastructure (74%) and access to data/Wi-Fi (70%) to use CAT at home. Fifty percent disagreed that CAT would hinder their academic performance because they are not digitally comfortable navigating online platforms.

Academic Perceptions of CAT

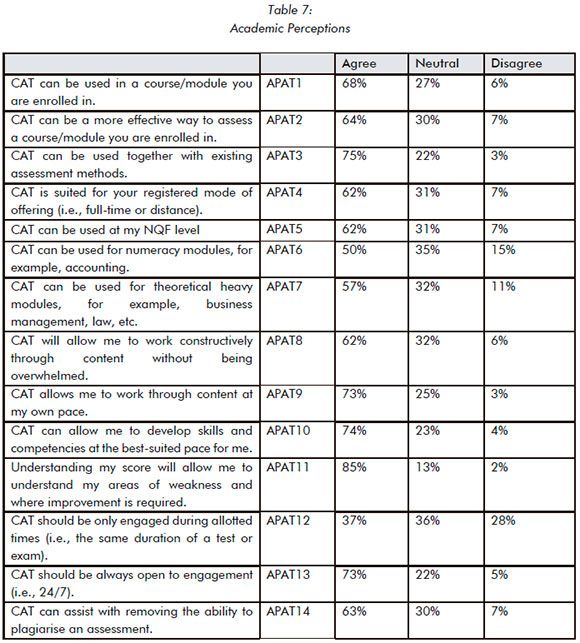

Students were asked to evaluate the following statements to assess their academic perceptions of CAT and to determine if specific requirements and considerations are needed for them to engage with CAT.

Students demonstrated considerable comfort with using CAT in their individual modules (68%), at their NQF level (62%), and in their mode of delivery (62%). Most (74%) felt that CAT offers individual learning paced according to their needs, creating a sense of individuality despite being in a collective classroom. In addition, 62% felt that CAT would allow them to work constructively through the content without being overwhelmed. Martin (2018) supports the view that CAT better matches items to examinees, which leads to fewer errors and greater precision.

Students were of the opinion that having more time to complete adaptive tests (73%) would allow them to work at their own pace, and that understanding their scores would allow them to identify their areas of weakness and address them going forward (85%). This is crucial for building and reinforcing students' accountability and responsibility for their own learning. Because a single summative end-of-year examination, with one or two formative assessment points, can give only a limited view of a student's progress, lecturers can construct a richer profile of students' development by combining multiple measures throughout the year; this guides instruction and provides comparable data that reveal where additional resources are needed (New Meridian, 2022).

Lilley and Barker (2007) found that students performed better in summative than in formative assessments, and that they had an overall positive attitude toward automated feedback individualised to their performance. More broadly, Almahasees, Mohsen and Amin (2021) found that online learning offers flexibility because students have access to learning material at all times of the day.

Sixty-three percent of students agreed that the use of CAT would help reduce opportunities to plagiarise assessments. However, Meuschke, Gondek, Seebacher, Breitinger, Keim and Gipp (2018), Denney, Dixon, Gupa and Hulphers (2021), and Sorea, Rosculet and Bolborici (2021) indicate that for plagiarism to be detected and managed effectively in online learning, students must be trained in referencing and paraphrasing. These authors further argue that educators must be more involved in teaching students about referencing and identifying plagiarism, and that institutions must have clear plagiarism policies, software, and ethical education practices.

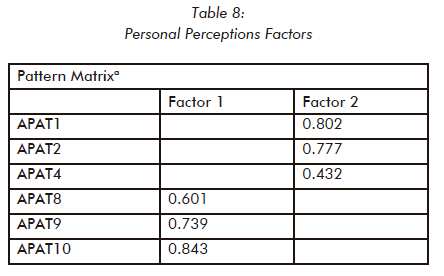

EFA was performed, and two factors were extracted for Academic Perceptions of Adaptive Testing using PAF with a minimum factor loading of 0.4 (KMO = 0.803), as per Table 6. The first factor consisted of three items: APAT8 'AT will allow me to work constructively through content without being overwhelmed', APAT9 'AT allows for me to work through content at our own pace', and APAT10 'AT can allow for me to develop skills and competencies at a pace best suited to them'.

The second factor consisted of three items: APAT1 'AT can be used in the course/module I am learning', APAT2 'AT can be a more effective way to assess a course/module I am enrolled in', and APAT4 'AT is suited for all modes of offering (i.e., full-time or distance)'.

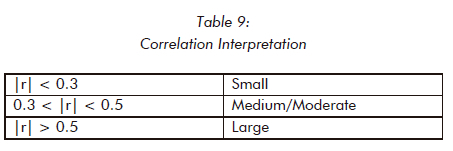

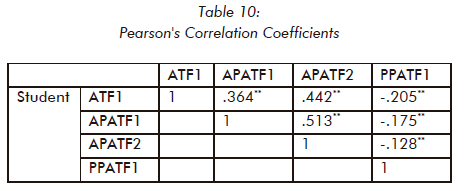

To determine whether there are significant relationships between the factors in the study, Pearson's correlation coefficients were obtained for the factors using the factor scores (Table 8). Many of the relationships between the factors were statistically significant, and all statistically significant relationships were positive. Once the statistical significance and direction of a relationship have been established, its strength can be assessed using the value of the correlation coefficient. The following guideline can be used to interpret the correlation coefficients.

Table 10 shows that the relationship between the Knowledge and Understanding of Adaptive Testing factor (ATF1) and Academic Perceptions of Adaptive Testing factor 1 (APATF1) was statistically significant and moderately positive (r = 0.364, p < 0.01). A statistically significant, moderately positive relationship (r = 0.442, p < 0.01) was also found between ATF1 and APATF2. This indicates that greater knowledge and understanding of adaptive testing is associated with more positive academic perceptions of adaptive testing on both factor 1 and factor 2.
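The interpretation guideline referred to above is not reproduced in this text. As a minimal sketch, assuming the widely used Cohen-style cut-offs of 0.1, 0.3 and 0.5 rather than the authors' own table, the Python below computes Pearson's r, the usual test statistic for H0: ρ = 0, namely t = r√((n − 2)/(1 − r²)) with n − 2 degrees of freedom, and a strength label. The function names are hypothetical, and n = 600 in the usage lines is an assumption based on the overall number of respondents rather than a reported figure for these correlations.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length (at least 3 values)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Co-variation and variations around the means (the 1/n factors cancel in r).
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for H0: rho = 0; compare against a t distribution with n - 2 df."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


def describe_strength(r):
    """Label |r| with Cohen-style cut-offs (assumed; not the article's own guideline)."""
    size = abs(r)
    if size < 0.1:
        return "negligible"
    if size < 0.3:
        return "weak"
    if size < 0.5:
        return "moderate"
    return "strong"


if __name__ == "__main__":
    # Coefficients reported in the text; n = 600 is an assumed sample size.
    for r in (0.364, 0.442):
        print(r, describe_strength(r), round(t_statistic(r, 600), 2))
```

Under these assumptions both reported coefficients fall in the "moderate" band, and their t statistics far exceed the two-tailed critical value of roughly 2.58 at α = 0.01 for large samples, which is consistent with p < 0.01.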

Recommend the use of CAT for their HEI

Students were asked, on a scale of 1 ('Extremely Not Confident') to 10 ('Extremely Confident'), 'Do you believe that CAT is the future of assessing students' knowledge and competence in a module?' Across 599 responses, the average score was 7.4, implying that students are confident in CAT as an assessment tool as HEIs evolve in a post-COVID, technology-driven world.

Students were asked, 'Do you believe your higher education institution should implement CAT as an assessment tool?' Eighty-seven percent believe their HEI should implement CAT, while 13% indicated no.

IMPLICATIONS AND WAY FORWARD

Based on the findings of this study, several managerial implications follow. HEIs must identify an information technology system that is suitable for their use. Computer lab infrastructure capable of supporting the system for many students at a time is required; however, this has a major cost implication for an HEI. Lecturers/academics designing the items for the CAT must be trained to develop items that serve the intended purpose and allow for the scaffolding of learning. Lecturers/academics must also display digital competence to ensure the successful use of CAT and to avoid perpetuating digital hesitancy among students who may be uncertain. Ongoing training and discussions on what is working and what is not must be part of the culture to ensure an excellent student-centric, personalised learning experience. Students must be trained in the use and functionality of CAT, and HEIs should allow students to work on a demo system that does not provide automatic grading before their performance is measured, to familiarise them with the technology and assessment tool. To address the challenge of overexposing items in CATs, HEIs need to incorporate an exposure-control algorithm (Rezaie & Golshan, 2015), which requires specialist skills. Because the system is new, it is advised that a full-time information technology support specialist be available to assist students and lecturers and to address system issues as they are raised. Furthermore, the issue of plagiarism has been compounded since the onset of COVID-19, as students have found increasingly innovative ways to plagiarise when learning online.
Therefore, although no comprehensive literature attributes a decrease in student plagiarism to the implementation of CAT, HEIs must provide students with plagiarism policies, procedures, and workshops so that the culture and ethics pertaining to plagiarism are ingrained in students, thus ensuring that the use of CAT is effective.
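Rezaie and Golshan (2015) are cited for the item-exposure problem, but no specific control algorithm is named here. One widely described option is the "randomesque" method: instead of always administering the single most informative unused item, the system draws the next item at random from the k most informative ones. The sketch below assumes a Rasch (one-parameter logistic) item bank; the function names, the value of k and the item difficulties are illustrative assumptions, not part of the study.

```python
import math
import random


def rasch_information(theta, b):
    """Fisher information of a Rasch (1PL) item with difficulty b at ability theta."""
    p = 1.0 / (1.0 + math.exp(-(theta - b)))
    return p * (1.0 - p)


def select_item_randomesque(theta, difficulties, administered, k=5, rng=random):
    """Draw the next item at random from the k most informative unused items.

    Always taking the single most informative item means the same few items
    appear on nearly every test; sampling from the top k spreads exposure
    across the bank at a small cost in measurement precision.
    """
    candidates = [i for i in range(len(difficulties)) if i not in administered]
    if not candidates:
        raise ValueError("item bank exhausted")
    # Rank unused items by information at the current ability estimate.
    candidates.sort(key=lambda i: rasch_information(theta, difficulties[i]), reverse=True)
    return rng.choice(candidates[:k])


if __name__ == "__main__":
    bank = [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0]  # hypothetical item difficulties
    rng = random.Random(42)
    print([select_item_randomesque(0.0, bank, set(), k=3, rng=rng) for _ in range(10)])
```

Larger values of k protect the item bank better but make each test slightly less efficient, so the choice of k is a policy trade-off an HEI would need to tune.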

For CAT to be successful in its implementation and facilitation, there must be understanding and buy-in from key stakeholders. Lecturers and/or academics who design CAT items must therefore be fully aware of the needs and requirements of their course before developing adaptive tests. Additionally, students as users must be trained, confident, and competent in digital literacy so that CAT does not hinder their learning experience. One of the challenges of CATs is that once a question has been answered, it is almost impossible for examinees to return to it; it is therefore crucial for HEIs to explore ways of addressing this to better assist and support students, which will also help reduce students' stress and anxiety. It is vital that CAT is implemented thoughtfully and skilfully so that the benefits associated with this teaching and learning practice are fully harnessed, irrespective of the socio-economic background of students in South Africa.
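Earlier answers are hard to revisit because each response updates the ability estimate, which in turn decides which question is shown next. The minimal simulation below illustrates this under assumptions that do not come from the study: a Rasch item model, an expected a posteriori (EAP) ability estimate computed over a grid with a standard normal prior, and maximum-information item selection. All function names and the item bank are hypothetical.

```python
import math

# Ability grid from -4 to 4 in steps of 0.1 (an illustrative choice).
GRID = [i / 10 for i in range(-40, 41)]


def p_correct(theta, b):
    """Rasch probability that a student with ability theta answers item b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_information(theta, b):
    p = p_correct(theta, b)
    return p * (1.0 - p)


def eap_estimate(responses, difficulties):
    """Posterior-mean (EAP) ability given (item_index, correct) pairs and a N(0, 1) prior."""
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalised standard normal prior
        for item, correct in responses:
            p = p_correct(theta, difficulties[item])
            w *= p if correct else 1.0 - p
        weights.append(w)
    return sum(t * w for t, w in zip(GRID, weights)) / sum(weights)


def next_item(theta, difficulties, administered):
    """Most informative unused item at the current estimate (no exposure control)."""
    unused = [i for i in range(len(difficulties)) if i not in administered]
    return max(unused, key=lambda i: item_information(theta, difficulties[i]))


def run_cat(answer, difficulties, n_items=5):
    """Administer n_items adaptively; answer(item) -> bool simulates the student."""
    responses, theta = [], 0.0
    for _ in range(n_items):
        item = next_item(theta, difficulties, {i for i, _ in responses})
        responses.append((item, answer(item)))
        theta = eap_estimate(responses, difficulties)  # each answer moves the estimate
    return [i for i, _ in responses], theta
```

With the nine-item bank [-3, -2, -1, -0.5, 0, 0.5, 1, 2, 3], a simulated student who answers everything correctly and one who answers everything incorrectly both start on the item of difficulty 0, but the second item is harder for the first student and easier for the second. Changing an earlier answer would therefore alter every item that followed it, which is why revisiting answered questions is difficult in a CAT.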

CONCLUSION

The study has established that most students have a positive attitude towards adopting CAT as a new testing methodology and believe that their HEI should implement it. They are comfortable completing tests, examinations, and activities online and have the digital competence to engage with CAT. Students who feel they have the required level of digital confidence are willing to improve their skills and are open to support and training if offered online. Should their HEI implement CAT, students would need adequate technology, infrastructure, and access to data/Wi-Fi to engage with CAT at home rather than relying on the institution's devices and data/Wi-Fi. They believe that CAT will allow them to engage with content more constructively, at the time and pace that best suit their needs and learning styles, without becoming overwhelmed, allowing them to develop the required competencies and skills. Students also believe that the score obtained after completing a CAT will help them understand their areas of weakness and where improvement is needed, thus improving their competence and fostering ownership of their learning.

This study addresses a dearth of contemporary literature on student perceptions of CAT prior to its adoption in higher education. This information is critical for post-pandemic South African HEIs, especially given the historic economic, social, and environmental challenges faced by higher education students. By understanding student perceptions of CAT, HEIs can mitigate challenges and hesitancies around online learning to ensure buy-in and success, rather than exacerbating the challenges associated with online learning that were evident during the COVID-19 pandemic. Understanding students' academic and personal perceptions of the adoption of CAT will therefore provide post-pandemic HEIs with pertinent contemporary knowledge to aid decision-making. The insights gleaned from this study have not previously been established against the backdrop of a post-pandemic South African higher education setting.

Key limitations of this study were the time available and restricted access to the student population at public HEIs in South Africa. Future research on implementing CAT could therefore expand the sample to include a larger representation of public university students.

REFERENCES

Alderson, J.C. (2000) Technology in testing: The present and the future. System 28(4) pp.593-603.

Alkamel, M.A.A., Chouthaiwale, S.S., Yassin, A.A.A., AlAjmi, Q. & Albaadany, H.Y. (2021) Online testing in higher education institutions during the outbreak of COVID-19: Challenges and opportunities. Studies in Systems, Decision and Control 348 pp.349-363.

Almahasees, Z., Mohsen, K. & Amin, M.O. (2021) Faculty's and students' perceptions of online learning during COVID-19. Frontiers in Education 6:638470, doi:10.3389/feduc.2021.638470

Apostolou, B., Blue, M.A. & Daigle, R.J. (2009) Student perceptions about computerised testing in introductory managerial accounting. Journal of Accounting Education 27(2) pp.59-70.

Baik, S. (2022) Computer adaptive testing - Pros & cons, https://codesignal.com/blog/tech-recruiting/pros-cons-computer-adaptive-testing/ (Accessed 26 September 2022).

Barros, J.P. (2019) Students' perceptions of paper-based vs. computer-based testing in an introductory programming course. Proceedings of the 10th International Conference on Computer Supported Education, (CSEDU 2018) pp.303-308.

Charumilind, S., Craven, M., Lamb, J., Sabow, A., Singhal, S. & Wilson, M. (2022) When will the COVID-19 pandemic end? https://www.mckinsey.com/industries/healthcare/our-insights/when-will-the-covid-19-pandemic-end (Accessed 13 March 2023).

Choi, I., Kim, K.S. & Boo, J. (2003) Comparability of a paper-based language test and a computer-based language test. Language Testing 20 pp.295-320.

Chrysafiadi, K., Troussas, C. & Virvou, M. (2018) A framework for creating automated online adaptive tests using multiple-criteria decision analysis. 2018 IEEE International Conference on Systems, Man and Cybernetics (SMC) pp.226-231.

Creswell, J.W. & Creswell, J.D. (2018) Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). USA: Sage Publications.

Denney, V., Dixon, Z., Gupta, A. & Hulphers, E. (2021) Exploring the perceived spectrum of plagiarism: A case study of online learning. Journal of Academic Ethics 19(2) pp.187-210.

Diahyleva, O.S., Gritsuk, I.V., Kononova, O.Y. & Yurzhenko, A.Y. (2020) Computerised adaptive testing in education electronic environment of maritime higher education institutions. CTE Workshop Proceedings 8 pp.411-422.

Dillon, A. (1992) Reading from paper versus screens: A critical review of the empirical literature. Ergonomics 35 pp.1297-1326.

Eggen, T.J.H.M. (2018) Multi-segment Computerized Adaptive Testing for educational testing purposes. Frontiers in Education 3 p.111.

Elmore, R.F. (2019) The Future of Learning and the Future of Assessment. ECNU Review of Education 2(3) pp.328-341.

Fynn, A. & Mashile, E.O. (2022) Continuous online assessment at a South African open distance and e-learning institution. Frontiers in Education 7:791271, doi:10.3389/feduc.2022.791271

Gibbons, C., Bower, P., Lovell, K., Valderas, J. & Skevington, S. (2016) Electronic quality of life assessment using computer-adaptive testing. Journal of Medical Internet Research 18(9) p.240.

Han, K.C.T. (2018) Conducting simulation studies for computerised adaptive testing using SimulCAT: An instructional piece. Journal of Educational Evaluation for Health Professions 15(20) p.20.

Harrison, C.J., Geerards, D., Ottenhof, M.J., Klassen, A.F., Riff, K.W.Y. Q., Swan, M.C., Pusic, A.L. & Sidey-Gibbons, C.J. (2019) Computerised adaptive testing accurately predicts CLEFT-Q scores by selecting fewer, more patient-focused questions. Journal of Plastic, Reconstructive and Aesthetic Surgery 72 pp.1819-1824.

Hogan, T. (2014) Using a computer-adaptive test simulation to investigate test coordinators' perceptions of a high-stakes computer-based testing program. PhD Thesis, Georgia State University, US.

Ita, M.E., Kecskemety, K.M., Ashley, K.E. & Morin, B. (2014) Comparing student performance on computer-based vs. paper-based tests in a first-year engineering course. Proceedings of the 121st ASEE Annual Conference & Exposition, Indianapolis, Indiana, US, 15-18 June, pp.1-14.

Kaminskiene, L. & DeUrraza, M.J. (2020) The flexibility of curriculum for personalised learning. Proceedings of the 14th International Scientific Conference on Society. Integration, Education. Rezekne, Latvia, 22-23 May, 3 pp.266-273.

Khoshsima, H., Toroujeni, S.M.H.T., Thompson, N. & Ebrahimi, M.R. (2019) Computer-based (CBT) vs. paper-based (PBT) testing: Mode effect, relationship between computer familiarity, attitudes, aversion and mode preference with CBT test scores in an Asian private EFL context. Teaching English with Technology 19(1) pp.86-101.

Lee, K.H. (2021) The educational 'metaverse' is coming. The Campus Learn, Share, Connect. https://www.timeshighereducation.com/campus/educational-metaverse-coming (Accessed 15 August 2022).

Li, C. & Lalani, F. (2020) The COVID-19 Pandemic has changed education forever. This is how. World Economic Forum. https://www.weforum.org/agenda/2020/04/coronavirus-education-global-covid19-online-digital-learning (Accessed 20 September 2022).

Lilley, M. & Barker, T. (2007) Students' perceived usefulness of formative feedback for a computer-adaptive test. Electronic Journal of e-Learning 5(1) pp.31-38.

Lilley, M., Barker, T. & Britton, C. (2005) Learners' perceived level of difficulty of a computer-adaptive test: A case study. In: Costabile, Human-Computer Interaction 3585 pp.1026-1029.

Meuschke, N., Gondek, C., Seebacher, D., Breitinger, C., Keim, D. & Gipp, B. (2018) An adaptive image-based plagiarism detection approach. Proceedings of the 18th ACM/IEEE on Joint Conference on Digital Libraries (JCDL '18). Association for Computing Machinery, New York, NY, US, pp.131-140.

Minty, R. (2018) To use electronic assessment or paper-based assessment? That is the question (apologies to Shakespeare). The Independent Journal of Teaching and Learning 13(1) pp.17-27.

Moosbrugger, H. & Kelava, A. (2012) Testtheorie und Fragebogenkonstruktion. In Statistik für Human-und Sozialwissenschaftler, pp.7-26.

Mpungose, C.B. (2020) Emergent transition from face-to-face to online learning in a South African university in the context of the Coronavirus pandemic. Humanities and Social Sciences Communication 7(13) p.113.

Nergiz, C. & Seniz, OY. (2013) How Can We Get Benefits of Computer-Based Testing in Engineering Education? Computer Applications in Engineering Education 21(2) pp.287-293.

New Meridian. (2022) Flexible Assessment Systems Enable Educators to Respond to Students' Needs and Expand Educational Equity. https://newmeridiancorp.org/ (Accessed 03 March 2022).

Noyes, J.M. & Garland, K.J. (2008) Computer- vs. paper-based tasks: Are they equivalent? Ergonomics 51(9) pp.1352-1375.

Oladela, J. & Ndlovu, M.C. (2021) A review of standardised assessment development procedure and algorithms for computer adaptive testing: applications and relevance for Fourth Industrial Revolution. International Journal of Learning, Teaching and Educational Research 20(5) pp.1-17.

Oladela, J. (2021) Computer-adaptive-testing performance for postgraduate certification in education as innovative Assessment. International Journal of Innovation, Creativity and Change 15(9) pp.87-101.

Oladele, J.I., Ayanwale, M.A. & Owolabi, H.O. (2020) Paradigm shifts in computer adaptive testing in Nigeria in terms of simulated evidence. Journal of Social Sciences 63(1-3) pp.9-20.

Oppl, S., Reisinger, F., Eckaier, A. & Helm, C. (2017) A flexible online platform for computerised adaptive testing. International Journal of Educational Technology in Higher Education 14(2).

Peng, H., Ma, S. & Spector, J.M. (2019) Personalised adaptive learning: An emerging pedagogical approach enabled by a smart learning environment. Smart Learning Environments 6(1) pp.1-14.

Queiros, D.R. & de Villiers, M.R. (2016) Online learning in a South African higher education institution: determining the right connections for the student, International Review of Research in Open and Distributed Learning 17(5) pp.165-185.

Rahi, S. (2017) Research design and methods: A systematic review of research paradigms, sampling issues and instruments development. International Journal of Economics and Management Sciences 6(2) pp.1-5.

Ramadan, A.M. &, A.E. (2018) Computerised Adaptive Testing. https://www.researchgate.net/publication/329935670_Computerized_Adaptive_Testing (Accessed 03 March 2022).

Rezaie, M. & Golshan, M. (2015) Computer Adaptive Test (CAT): advantages and limitations. International Journal of Educational Investigations 2(5) pp.128-137.

Saunders, M., Lewis, P. & Thornhill, A. (2019) Research methods for business students (8th ed.). Harlow: Pearson Education Limited.

Sekaran, U. & Bougie, R. (2016) Research methods for business: A skill building approach (7th ed.). Singapore: John Wiley and Sons.

Song, L., Singleton, E.S., Hill, J.R. & Koh, M.H. (2004) Improving online learning: Student perceptions of useful and challenging characteristics. The Internet and Higher Education 7(1) pp.59-70.

Sorea, D., Rosculet, G. & Bolborici, A.M. (2021) Readymade solutions and students' appetite for plagiarism as challenges for online learning. Sustainability 13(7) p.3861.

Stochl, J., Ford, T., Perez, J. & Jones, P.B. (2021) Modernising measurement in psychiatry: item banks and computerised adaptive testing. Lancet Psychiatry 8(5) pp.354-356.

Stone, E. & Davey, T. (2011) Computer-Adaptive Testing for students with disabilities: A review of the literature. ETS, Princeton, New Jersey.

Surpass. (2019) Computer Adaptive Testing: Background, benefits and case study of a large-scale national testing programme. https://surpass.com/news/2019/computer-adaptive-testing-background-benefits-and-case-study-of-a-large-scale-national-testing-programme/ (Accessed 27 September 2022).

Troussas, C., Krouska, A. & Virvou, M. (2017) Reinforcement theory combined with a badge system to foster student's performance in e-learning environments. 8th IEEE International Conference on Information, Intelligence, Systems & Applications (IISA), Larnaca, Cyprus, 27-30 August, pp.1-6.

Thompson, N.A. (2011) Advantages of Computerized Adaptive Testing (CAT). https://assess.com/docs/Advantages-of-CAT-Testing.pdf (Accessed 27 September 2022).

Thompson, N. (2019) Computerised Adaptive Testing: Definition, algorithm, benefits. https://assess.com/what-is-computerized-adaptive-testing/ (Accessed 25 September 2022).

Van Wyk, B., Mooney, G., Duma, M. & Faloye, S. (2020) Emergency remote learning in the times of COVID: A higher education innovation strategy. Proceedings of the European Conference on e-Learning, Berlin, Germany pp.28-30.

Vander Ark, T. (2014) The future of Assessment. Getting Smart. https://www.gettingsmart.com/2014/12/17/future-assessment/ (Accessed 12 May 2022).

Vie, J.J., Popineau, F., Tort, F., Marteau, B. & Denos, N. (2017) A heuristic method for large-scale cognitive-diagnostic computerised adaptive testing. Proceedings of the 4th ACM Conference on Learning at Scale, Cambridge, Massachusetts, USA, pp.323-326.

Xu, L., Jin, R., Huang, F., Zhou, Y., Li, Z. & Zhang, M. (2020) Development of computerised adaptive testing for emotion regulation. Frontiers in Psychology 11 pp.1-11.

Yildirim, O.G., Erdogan, T. & Çiğdem, H. (2017) The investigation of the usability of web-based assignment system. Egitimde Kuram ve Uygulama, 1 pp.1-9.

Zalat, M.H., Hamed, M.S. & Bolbol, S.A. (2021) The experiences, challenges, and acceptance of e-learning as a tool for teaching during the COVID-19 pandemic among university medical staff. https://doi.org/10.1371/journal.pone.0248758 (Accessed 13 June 2022).

Zandvliet, D. & Farragher, P. (1997) A comparison of computer-administered and written tests. Journal of Research on Computing in Education 29 pp.423-438.

Date of Submission: 24 October 2023

Date of Review Outcome: 2 March 2023

Date of Acceptance: 15 September 2023