The Independent Journal of Teaching and Learning

Online version ISSN 2519-5670

IJTL vol.15 no.1 Sandton 2020

ARTICLES

Computer-assisted assessment: An old remedy for challenges in open distance learning

Martin Combrinck (I); Willem J. van Vollenhoven (II)

(I) Cape Peninsula University of Technology, South Africa

(II) North-West University, South Africa

ABSTRACT

The article reports on the experiences of lecturers with the implementation of computer-assisted assessment in an open distance learning context. Open distance learning is growing rapidly worldwide, and North-West University, through its Unit for Open Distance Learning, is no exception: the institution has a large component of open distance learning students. The aim of the research was to reflect on the experiences of lecturers with regard to the use of computer-assisted assessment. The Technology Acceptance Model and the ADKAR model were used as conceptual frameworks. This study adopted a qualitative approach: interviews were conducted with 26 lecturers during 2015 and 2016. The data showed that computer-assisted assessment (multiple-choice questions) has certain challenges, but can also contribute to a more effective open distance learning assessment strategy. Recommendations were formulated based on the findings. The article concludes that computer-assisted assessment has a place in an open distance learning context.

Keywords: open distance learning, computer-assisted assessment, formative assessment, summative assessment, multiple choice questions, qualitative research

INTRODUCTION

The number of students at universities is growing rapidly worldwide (Crisp & Ward, 2008; Wilson et al., 2011), and providing access to higher education institutions remains a great challenge (Stephens, Bull & Wade, 2006). One way of accommodating more students in higher education institutions is by means of open distance learning (ODL). Open distance learning is not new in South Africa (SA): the University of South Africa (UNISA) is one of the most well-known ODL universities in the world. However, other universities in SA also offer ODL programmes. The North-West University (NWU) is one such university that has a large component of ODL students. At the time of this research, 31 000 students were enrolled at the university for ODL programmes. Traditionally, NWU was a campus-based university, but in 2004 it started to offer ODL programmes to under- and unqualified teachers (Combrinck, Spamer & Van Zyl, 2015). In 2013, the Unit for Open Distance Learning (UODL) was established with the main function of delivering ODL to NWU students in Southern Africa. The growth in ODL programmes at NWU over the last decade is also in line with the new policy for post-school education (Department of Higher Education and Training [DHET], 2014) in SA, which proposes that more South African universities should introduce ODL to create greater access for students in the country.

Providing greater access will increase student numbers and make it more difficult to use traditional pen-and-paper assessment for ODL students. Computer-assisted assessment (CAA) can therefore play a bigger role in open distance learning. Combrinck, Spamer and Van Zyl (2015) defined ODL as an educational system that opens access to students and frees them from the constraints of time and space.

Although CAA is not a new approach to assessment, the UODL at NWU initiated its implementation to make assessment more manageable for lecturers (Ghilay, 2019). Wilson et al. (2011) and Ghilay and Ghilay (2012) confirm this rationale, stating that CAA has become more important at higher education institutions due to the increase in student numbers. During 2015, NWU and the UODL began to implement CAA in a limited number of ODL programmes. CAA is a broad concept that can involve a number of things, but the UODL decided to use multiple-choice questions (MCQs) as a starting point in developing and implementing a CAA strategy. Therefore, in this article CAA refers specifically to MCQ-based assessment.

Although there is substantial research on CAA in other countries, research on CAA in an ODL context in South Africa is limited, and this research attempts to fill that gap. Its purpose is therefore to reflect on lecturers' experiences of using CAA as an assessment strategy for ODL programmes at NWU in South Africa.

To achieve this purpose, the research was guided by the following research question:

• What are lecturers' experiences of the implementation of a CAA strategy in an open distance learning context?

REVIEW OF THE LITERATURE

A literature review was conducted to provide a critical synthesis of literature available on the topic. A summary of this literature is given in this section. Firstly, the concept of CAA is defined, and, secondly, the use of CAA at a few universities in selected countries is discussed. Thirdly, the advantages and disadvantages of CAA are considered, and, finally, the practice of MCQ as an assessment method is discussed.

Information and Communication Technologies (ICT) offer new possibilities for assessment at open distance institutions today. The emergence of ICT over the last three decades has contributed to the shift in assessment from traditional pen-and-paper assessment to CAA, also called e-assessment (Jamil, 2012). The concept of CAA is therefore central to this article, and its definition needs to be considered. Different scholars define CAA differently. Brown, Bull and Race (1997) state that it generally means the delivery, marking and analysis of examinations by means of computers and optical mark readers. Stephens et al. (2006) concur, describing CAA as an approach to deliver, mark and analyse examinations; record, analyse and report on achievement; collate and analyse data gathered from optical mark readers; and collate, analyse and transfer assessment information through networks. Gretes and Green (2000), Thelwall (2000) and Marin, Nieto and Rodriques (2009) state that CAA includes the process whereby the computer selects MCQs from a larger database of questions. Jamil (2012) and Schoen-Phelan and Keegan (2016) further state that CAA is any assessment that involves the use of computers to conduct or mark assessments. Marin et al. (2009) expand on this definition, stating that in CAA computers are used to mark students' work, to provide feedback to students and to evaluate assessment effectiveness.
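The databank selection described by Gretes and Green (2000), Thelwall (2000) and Marin et al. (2009) can be illustrated with a short sketch. The Python below is an illustration under stated assumptions, not the system used at any of the institutions cited: it assumes that each question in a hypothetical bank is tagged with a category (such as the difficulty, topic or skill groupings discussed later in this review) and draws a fixed number of questions at random from each category. The function name `draw_paper` and the data layout are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical question-bank entry: (question_id, category).
Question = Tuple[str, str]


def draw_paper(bank: List[Question], per_category: Dict[str, int], seed: int) -> List[str]:
    """Randomly select the requested number of question IDs from each category.

    Using a fixed seed makes the draw reproducible, for example so that a
    moderator can regenerate the same paper.
    """
    # Group question IDs by their category tag.
    by_category: Dict[str, List[str]] = defaultdict(list)
    for question_id, category in bank:
        by_category[category].append(question_id)

    rng = random.Random(seed)
    paper: List[str] = []
    for category, count in per_category.items():
        pool = by_category[category]
        if count > len(pool):
            raise ValueError(f"only {len(pool)} questions available in {category!r}")
        # sample() draws without replacement, so no question appears twice.
        paper.extend(rng.sample(pool, count))
    return paper


bank = [("Q1", "recall"), ("Q2", "recall"), ("Q3", "recall"),
        ("Q4", "application"), ("Q5", "application")]
paper = draw_paper(bank, {"recall": 2, "application": 1}, seed=2015)
print(len(paper))  # 3
```

Drawing per category, rather than from the whole bank at once, is one way of keeping every generated paper balanced across the categories a lecturer has specified.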

For the purpose of this article, CAA has a narrower definition: the use of MCQs that are marked by an optical scanner as part of a computer-assisted assessment strategy of the UODL at NWU. Because CAA in this context consists of MCQs, these are discussed in more detail later in this section.
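Under this narrower definition, marking reduces to comparing each scanned response with the memorandum. The following Python sketch illustrates only that step; it assumes, hypothetically, that the optical reader delivers one letter per question and records a blank or spoiled answer as `None`. The names `mark_script` and `memo` are illustrative and do not describe the NWU scanning software.

```python
from typing import Optional, Sequence


def mark_script(responses: Sequence[Optional[str]], memo: Sequence[str]) -> int:
    """Count the responses that match the memorandum (one mark per correct answer).

    Assumption: a blank or spoiled answer is stored as None and earns no mark.
    """
    if len(responses) != len(memo):
        raise ValueError("script and memorandum must have the same number of questions")
    return sum(1 for given, correct in zip(responses, memo) if given == correct)


memo = ["B", "D", "A", "C", "A"]
script = ["B", "D", "C", None, "A"]
print(mark_script(script, memo))  # 3
```

Because the same rule is applied to every script, this is the step that removes the human marking and adding-up errors listed among the advantages of CAA.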

Computer-assisted assessment has a long tradition, and many universities worldwide used this type of assessment during the 1980s. The Open University of the Netherlands, for example, began using it in 1984 (Wiegers, 2010), after realising that the pen-and-paper system had become very expensive and increasingly inflexible. Its system selected MCQs from a databank and marked the answers recorded on special answer sheets. By 1995, almost 50% of all examinations at the Open University of the Netherlands were based on this approach, and by 2000, the majority of examinations were conducted this way (Joosten ten Brinke, 2009). CAA is used widely elsewhere as well. In the USA, more than a million examinations were delivered and marked by computers during a twelve-month period (McKenna & Bull, 2000). This type of assessment is also widely used in Australia, specifically at the University of Sydney and Curtin University, where 30 000 students are assessed annually by means of CAA. Nicol (2007) stated that MCQs as part of a CAA strategy are increasingly being used by higher education institutions to supplement or replace current pen-and-paper assessment practices.

Computer-assisted assessment can be used for formative or summative assessment. Formative assessment, according to Dreyer (2014), determines students' progress towards achieving the outcomes and enhances the learning environment (Wilson et al., 2011); it therefore takes place during the teaching and learning process. Summative assessment, on the other hand, takes place at the end of the teaching and learning process and determines the student's overall academic achievement. It normally takes place at the end of a term, semester or year (Dreyer, 2014). Research has found that many institutions used CAA mainly as part of their formative assessment strategy (Sim, Holifield & Brown, 2004; Nicol, 2007; Wilson et al., 2011), which allowed them to be more student-centred and to provide feedback to students. However, other institutions decided to use CAA mainly as part of their summative assessment strategy, which makes the assessment more formal, structured and organised but also requires more rigorous planning. McKenna and Bull (2000) stated that more than 70 universities in the United Kingdom use CAA mostly for summative purposes. Gikandi et al. (2011) support this claim by stating that online assessment (including CAA) is used more for summative assessment. At the UODL, CAA will be used for summative purposes.

Bull (1999), Chalmers and McAusland (2002), Ricketts and Wilks (2002), Ghilay (2019) and Jamil (2012) describe a number of advantages of CAA. One such advantage is that students can be given feedback more quickly because the marking is done by computer. CAA also eliminates human error in marking and adding up marks. Feedback is important because it can motivate students when they receive it constructively and quickly. It can also help to identify students who need additional support fairly soon after an assessment has been completed (Bull, 1999; Ghilay & Ghilay, 2012). Crisp and Ward (2008) reported on the work of Black and Wiliam, who found that regular feedback yields substantial academic gains. Feedback is even more important for ODL students because of their limited contact time with lecturers, and Ghilay and Ghilay (2012) confirm that feedback is an important part of CAA. Bull (1999) further noted that CAA saves lecturers time in marking their examination papers. This finding is supported by Crisp and Ward (2008), Conole and Warburton (2005) and Ricketts and Wilks (2002), who describe CAA as an attractive option for lecturers who have a large number of students. Bull (1999) and Jamil (2012) further elaborate on this advantage, stating that such assessment can reduce the administrative load of staff during assessment times. Crisp and Ward (2007) also found that CAA increases students' motivation to learn. McKenna and Bull (2000), Chalmers and McAusland (2002) and Jamil (2012) mention another advantage: CAA provides accurate data on how students perform on each question, which can assist in developing good-quality MCQs. Multiple-choice questions can be grouped according to categories such as difficulty, topic, type of skill and learning level, and assessments that draw questions from each category can then be designed.
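The per-question performance data mentioned above is commonly summarised with classical item statistics. The Python sketch below illustrates two of them under stated assumptions and is not the analysis performed at NWU: it assumes a hypothetical matrix of 0/1 item scores (one row per student, one column per MCQ) and computes the difficulty index (the proportion of students answering an item correctly) and the upper-lower discrimination index (difficulty in the top-scoring group minus difficulty in the bottom-scoring group). The function names and the default group size of 27% are assumptions.

```python
from typing import List


def item_difficulty(scores: List[List[int]]) -> List[float]:
    """Proportion of students answering each item correctly (difficulty index p)."""
    n_students = len(scores)
    n_items = len(scores[0])
    return [sum(row[i] for row in scores) / n_students for i in range(n_items)]


def item_discrimination(scores: List[List[int]], fraction: float = 0.27) -> List[float]:
    """Upper-lower discrimination index D = p_upper - p_lower for each item.

    Students are ranked by total score; the top and bottom `fraction` of them
    form the two groups (fraction should not exceed 0.5, or the groups overlap).
    """
    ranked = sorted(scores, key=sum, reverse=True)
    k = max(1, round(len(ranked) * fraction))
    p_upper = item_difficulty(ranked[:k])
    p_lower = item_difficulty(ranked[-k:])
    return [u - l for u, l in zip(p_upper, p_lower)]


scores = [
    [1, 1, 1],  # student A, total 3
    [1, 1, 0],  # student B, total 2
    [1, 0, 0],  # student C, total 1
    [0, 0, 0],  # student D, total 0
]
print(item_difficulty(scores))                    # [0.75, 0.5, 0.25]
print(item_discrimination(scores, fraction=0.5))  # [0.5, 1.0, 0.5]
```

Items that almost everyone or almost no one answers correctly, or whose discrimination index is near zero or negative, are the ones a lecturer would typically review when revising a question bank.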

It is, however, also important to consider the disadvantages of CAA. Bull (1999), Crisp and Ward (2007) and Schoen-Phelan and Keegan (2016) found that CAA often consists only of MCQs, which can lead to assessment questions that focus only on lower-order thinking. This notion is supported by Sim et al. (2004), who said that CAA cannot assess higher-order thinking, and by Heinrich and Wang (2003), who said that MCQs are not sophisticated enough to examine complex content and thinking patterns. Jamil (2012) stated that the frequent use of MCQs can lead to testing rather than assessment. McKenna and Bull (2000) also raised the concern that MCQs cannot assess students' communication skills, and test items must therefore be carefully formulated to avoid decontextualisation, which can lead to a lack of contextual validity. Chalmers and McAusland (2002) found that it takes quite a long time to set up an examination paper and were also concerned about the quality of the MCQs in examination papers. Jamil (2012) identified a further concern: staff need additional training to use the system, which can be time-consuming.

As mentioned earlier, CAA at the UODL will consist mainly of MCQs that will be marked by an optical scanner (computer), so it is important to look more closely at MCQs. According to Ghilay (2019), MCQs are an objective type of assessment that requires students to choose from a number of predetermined answers. As mentioned earlier, a major concern about the use of MCQs is that they tend to assess lower-order thinking. Ghilay (2019) and Nicol (2007), however, dispute this claim and argue that MCQs can also be used to assess higher-order thinking. This can be done by asking questions that do not rely only on memory but require more complex thinking skills to select the correct answer. Nicol (2007) also identified a number of principles that can be used to strengthen the implementation of MCQs in CAA. Firstly, students can be involved in clarifying goals and assessment criteria. Secondly, MCQs can be administered as an open-book examination, which can lead to greater reflection on the part of students. Thirdly, students can receive more in-depth feedback after an MCQ examination, which helps them to link their current knowledge to more complex issues. Fourthly, lecturers can develop activities that students could engage in after an MCQ examination to deepen their understanding of the work. Lastly, lecturers can create opportunities for students to take the MCQ test repeatedly so that they can improve their performance.

CONCEPTUAL FRAMEWORK

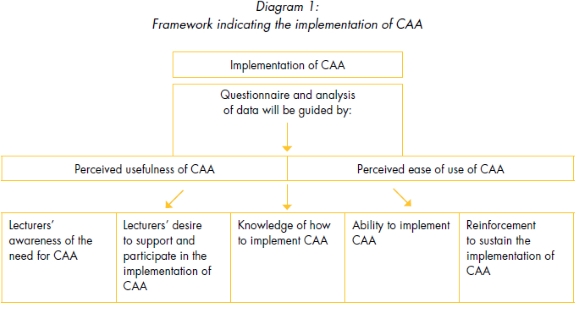

This article reflects on the experiences of lecturers with the implementation of CAA in an ODL context. The review of the literature indicated that the Technology Acceptance Model (Davis, 1989) and the ADKAR model (Kamzi & Naarananoja, 2013) are relevant to this study, and they are used as its framework.

The Technology Acceptance Model was developed to explain an individual's or institution's intention to use a technological innovation and consists of two major predictors: (1) perceived usefulness and (2) perceived ease of use (Davis, 1989). These two predictors are important starting points when considering data collection and data analysis for this study.

The second useful model is the ADKAR model of change (Kamzi & Naarananoja, 2013), which was developed to guide organisations or individuals in successfully implementing a new initiative. It was developed by J. Hiatt of Prosci Change Management in 2003, based on research conducted in more than 900 organisations. The model focuses on five steps that an individual or organisation could follow to change or to implement an initiative successfully. The five steps are the following:

• awareness of the need for change

• desire to participate in and support the change

• knowledge on how to change

• ability to implement required skills and behaviour

• reinforcement to sustain the change.

The study relates well to the two models in the sense that it was important to establish how lecturers experience the usefulness and ease of use of CAA. It was also important to establish whether they were aware of the need for CAA and whether they had the knowledge and ability to implement such a new assessment approach.

All of the above are important concepts in this research, and the authors synthesised them into a framework that guided the data collection and data analysis.

RESEARCH PROCESS

The research team explored the experiences of lecturers regarding the implementation of a CAA approach in an ODL institution. The researchers identified a qualitative approach as the best means of conducting this study, because the data-gathering technique employed, an open-ended questionnaire, elicited responses reflecting the experiences of key participants in the implementation process (in this case, the lecturers at the UODL who implemented CAA in their modules during 2015 and 2016). Qualitative research, according to Cohen, Manion and Morrison (2011), provides an in-depth and detailed understanding of meanings, actions, attitudes and perceptions. Qualitative researchers emphasise the socially constructed nature of reality and attempt to study human action from the insiders' perspective (Cohen, Manion & Morrison, 2011). For the purpose of this article, the researchers were interested in the process of implementing a CAA approach in the context of ODL, and the insiders referred to above are the lecturers who implement such an approach in their programmes.

Sample

Twenty-three lecturers from the Faculty of Education Sciences and three from the Faculty of Health Sciences (specifically nursing) were purposively sampled to complete this open-ended questionnaire in 2015 and 2016.

These 26 lecturers were selected because they were the only ones who used the CAA approach for the examinations mentioned. The UODL, the Faculty of Health Sciences and the Faculty of Education Sciences voluntarily made use of CAA, and these lecturers were the ones who decided to use it. The Faculty of Education Sciences has by far the larger component of ODL students (28 000), while the School of Nursing has approximately 2 000 students. The number of ODL students in the other faculties was very low, and the lecturers in these faculties did not use CAA.

Data collection and analysis

Participants were asked to answer a set of open-ended questions. After the researchers received the 26 completed open-ended questionnaires, they analysed and categorised the responses into meaningful units and assigned a code to each unit. After the process of coding was completed, the researchers organised and linked related codes to form meaningful themes. Six themes were identified; they are discussed in detail in the next section. Nieuwenhuis described this as a process whereby a researcher 'tries to establish how participants make meaning of a specific phenomenon by analysing their perceptions, attitudes, understanding, knowledge feelings and experiences' (Nieuwenhuis, 2007: 99), in this case the experiences of the lecturers who used CAA for the first time.

Ethical guidelines

All general ethical guidelines in qualitative research as well as university guidelines were adhered to. The lecturers voluntarily participated in the research and could withdraw from the process at any stage if they so wished. The questions were sent to them beforehand so that they could familiarise themselves with the questions. The questionnaire was accompanied by a cover letter which explained the aim of the research. Anonymity of the participants was assured.

FINDINGS

The qualitative data referred to in this research have been thoroughly analysed, interpreted and categorised based on their relevance. The following six categories relating to lecturers' experiences and perceptions of CAA emerged from the qualitative analysis:

• reasons why lecturers participated in this CAA project

• lecturers' knowledge on CAA and MCQs

• the support lecturers received during the implementation of CAA

• the benefits of CAA identified by lecturers

• the challenges of CAA identified by lecturers

• the expectations of the lecturers, and whether these expectations were met by using CAA.

Reasons why lecturers participated in this CAA project

A number of participants indicated that they became part of the initiative because they were requested by the UODL and Faculties of NWU to do so. However, the majority of the participants indicated that they wanted to be part of the initiative because they wanted to develop further in their own practices and they believed in the value of adapting to new challenges.

The majority of participants also said that they believed CAA (which consisted of MCQs) would minimise their marking time and reduce the volume of compulsory marking as agreed upon in their task agreements. This reduction in the volume of marking would give them time to concentrate more on the development of their academic careers, such as conducting more research and publishing. One participant expressed his perception as follows:

It saves a lot of time in marking formal assessments, without jeopardising the quality.

Another participant said the following:

To address the amount of assignments, limited time available to mark the assignments

This sentiment is shared by Bull (1999) and Jamil (2012), who stated that CAA can help to reduce lecturers' workloads. One participant also mentioned that using CAA would save the Faculty money, as the answer sheets would be marked by computer and not by paid external markers. This perception is consistent with Bull (1999) and Ghilay (2019), who stated that CAA reduces the administrative load of staff during assessment times. Another participant mentioned that she participated in the project because she wanted to find out whether students registered for the National Professional Diploma in Education (NPDE) would benefit from this type of assessment. In her experience, NPDE students often struggle with paragraph-type questions, and such students could probably benefit from more objective-type questions. This expectation was difficult to achieve, however, because not all NPDE modules were part of this CAA initiative.

Lecturers' knowledge on CAA and MCQs

The majority of participants said that they had limited knowledge of CAA and MCQs, but after they attended the workshop organised by the UODL and Academic Support Services of NWU, they felt more comfortable about the process. The purpose of the workshop was to prepare lecturers to use CAA for examination purposes. The following response from a participant is a good summary of how the participants felt about this question on their knowledge about CAA and MCQs.

Initially not much, but after reading ample articles on Google and attending a workshop, I have more knowledge, but still not enough to set a well-balanced question paper.

The qualitative data indicated that lecturers were still not completely confident in setting MCQs after attending a workshop on this topic.

The support lecturers received during the implementation of CAA

The third theme is about the support lecturers received during the implementation of CAA. All the participants indicated that they attended a workshop on the use of CAA, as already alluded to in the previous theme. This workshop was organised and conducted by the Academic Support Services of NWU and UODL. The majority of participants felt that this workshop was sufficient to help them to use this type of assessment. The workshop helped them to better understand assessment through MCQs and how to go about formulating such questions. However, a few participants also said that the support they received was not enough. Although the Academic Support Services conducted the workshop, the participants felt that the Academic Support Services needed to support them through the whole process of setting up papers and formulating MCQs. In this regard, one participant stated:

I expected Academic Support Services to continue with support through to the exit point.

When asked how they perceived the support they had received, specifically from the UODL and the Faculty, participant responses varied. Some indicated that they received very little support from the UODL. One participant said that when she asked for support, she was advised to contact Academic Support Services. Some mentioned that they received some initial support, with an introductory session and e-mail support when needed.

To a certain extent, I recall one workshop on designing questions and one generalised feedback based on statistics of students' results.

However, some participants also indicated that the support they received was sufficient and helpful. This implies that participants were on different levels of development and therefore needed different levels of support.

The responses regarding the role of the Faculty were also very interesting. The majority of the participants said that the support was not very good and that it mainly consisted of a workshop and training session. One participant said:

There was support, a workshop was arranged.

However, some participants stated that they did not expect a lot of support because they are professionals: they can find out what to do and how to do it. One participant was more concerned about the lack of support from the Faculty regarding the translation of papers and their technical layout.

The benefits of CAA that have been identified by lecturers

All participants agreed that CAA (MCQs) does indeed reduce both marking time and payments to markers. This view is supported in the literature: Bull (1999) as well as Crisp and Ward (2008) stated that CAA saves lecturers' time when marking papers, and Ricketts and Wilks (2002) and Ghilay (2019) strengthened this argument by claiming that CAA could reduce the administrative burden of lecturers during examination times. In this regard, one participant said the following:

Although the compiling of a MCQs paper is much more time intensive, electronic marking is a huge benefit, especially if there are many students in the module.

This would mean that lecturers have more time to focus on their own research, the development of study guides and new programmes. This view is well summarised by one participant, who said:

It could free the lecturers from the burden of marking.

However, some participants indicated that they personally did not feel the effect of this 'saved time', as their task-agreement marking had not been reduced and they still needed to mark the same number of answer scripts from their other modules. These statements should be understood in the context of the marking policy of the Faculty of Education Sciences, where lecturers receive a quota of assignments and examination papers to mark before examination scripts can be outsourced to external markers. As a result, even if lecturers use MCQ examination papers, they must still mark their quota, which is made up of scripts from their other modules, before they can outsource marking. Although MCQ papers saved marking time, these lecturers did not benefit from marking less, because they still needed to mark their quota; only the Faculty benefits, as it pays less marking remuneration. Another benefit, according to participants, is that the quality of marking is enhanced because it is less biased.

One participant found it helpful that

deep cognitive knowledge and application of theory in practice could be tested by means of MCQs.

Another participant supported this by saying that the use of this type of assessment would benefit the cognitive development of students:

More assessing opportunities [formative assessment] would be possible, which will enhance the acquisition of skills, knowledge and values...

Another participant mentioned that CAA (MCQs) would also develop students' reading speed, as they are required to read quite a large number of questions. It is interesting to note that none of the participants mentioned that CAA can contribute to better and quicker feedback to students, even though the advantage of quicker feedback is widely reported in the literature (Bull, 1999; Ricketts & Wilks, 2002; Ghilay, 2019).

Another lecturer mentioned the following benefits:

The students benefit in that

• there is no bias

• they are not challenged concerning written language, grammar and vocabulary (no paraphrasing or long sentences)

• they don't have to parrot-learn long questions, etc.

The benefit of language, as mentioned above, can, however, also be a shortcoming as will be discussed under the next theme.

The following responses also indicated lecturers' positive feelings about this assessment:

A much, much faster turn-around time for assignments will give the students opportunity to resubmit assignments should they wish to do so.

Quicker feedback after exams will open the possibility of a second opportunity, instead of waiting another six months and teacher-students could obtain their qualifications quicker, etc.

The challenges of CAA that have been identified by lecturers

Interestingly, two participants indicated that there were no challenges in using CAA, whereas all the other participants mentioned a number of challenges. The majority of participants were worried that this type of assessment would increase reading time because of the number of questions normally associated with an MCQ paper. Many of the students speak English as a second language and could find it more difficult to read and understand all the questions, which could disadvantage them in this type of assessment. The majority of participants were also concerned about the time it would take to set a paper. One participant expressed it as follows:

... time-consuming to build a question bank and I am currently not convinced that students become scholars in their learning by means of MCQs.

They felt it would be much more time-consuming than setting an exam paper with paragraph and essay questions. There was also a sense that this type of assessment promotes rote learning and that the students who prefer reasoning-like questions would be influenced negatively:

That the levels of Bloom's taxonomy cannot really be assessed. Also, that students do not acquire the skills of the planning and then answering of long questions.

Participants raised further concerns, noting that the focus on MCQs would prevent lecturers from seeing how students develop holistically in the way they understand and answer questions because, according to participants, MCQs tend to focus on objective questions. One participant emphasised the problem that students do not develop their writing style:

One of the shortcomings in using multi-choice questions is that it does not benefit students' writing style. That is the reason why I emphasised that the question paper cannot only consist of multiple-choice questions, but should also have longer questions to teach students how to write.

On a similar note, participants were concerned that this type of assessment would not be able to assess application skills and higher-order thinking. This concern is also found in the literature: Heinrich and Wang (2003) and Sim et al. (2004) stated that CAA (using MCQs) cannot assess higher-order thinking. However, this is a contentious issue, because some scholars argue that, with the correct type of MCQs, it is possible to assess higher-order skills (Thelwall, 2000; Jamil, 2012). Participants further mentioned that this type of assessment makes it difficult to assess students' language skills. Concerns were also expressed that this type of assessment is not suitable for self-assessment, peer assessment or reflection on assessment.

The expectations of the lecturers and whether they were met by using computer-assisted assessment

The majority of participants indicated that several of their expectations were met, such as an increased pass rate, and that computer-assisted marking increased the quality and reliability of the marking process. The majority of participants also stated that their expectation of CAA saving time during the examination process was met. However, the majority of participants also indicated a number of expectations that were not met. Lecturers had expected that, although CAA would require more time for setting the paper, it would save them time when marking it, and they hoped that the time saved would allow them to do more research. The majority of participants said that this expectation did not materialise. They also expected to receive more in-depth analysis of the results, including item analysis, which also did not happen. This is an important finding, because the literature (Stephens et al., 2006) clearly indicates that CAA makes it possible to obtain accurate data on students' performance and to conduct item analysis. This issue should therefore be investigated further, as such data could help lecturers to set better MCQs. The expectation that this new assessment approach would lead to a change in their teaching style did not fully materialise either. A number of participants said that they did not really change their teaching style, but more than half of the participants stated that this new assessment approach forced them to think differently about their teaching style:

To a large extent, it was necessary. It is still a challenge to align teaching methodology to the new style of assessment.

DISCUSSION

The findings show that the majority of lecturers who participated in the study see the usefulness of CAA. They felt that CAA is useful because it can reduce their marking load and give them more time for research or community work. Participants also felt that, when CAA is used for formative assessment, it can contribute to deeper learning, because students are assessed more frequently, which forces them to study more regularly. It can also help to identify students at risk more quickly. When CAA is used for summative assessment, however, its main benefit is the reduced marking load. Additionally, the results can be analysed statistically, and such data can be used to analyse assessment trends.

It seems that the ease of use of CAA was somewhat problematic. The majority of participants said that they did not have much knowledge of CAA, and specifically of setting MCQs, and, although they received some training, they felt it was not enough to make them comfortable using CAA. They also indicated that they were not supported well enough by the UODL and the Faculties involved in this initiative.

Another concern for the lecturers pertains to the students involved with CAA. Many of the lecturers felt that the use of CAA, specifically MCQs, would be detrimental to second-language speakers, because they would have to read more questions, which could cause them to struggle or to misunderstand the questions. The findings also suggest that MCQs can lead to surface learning because of questions pitched at low cognitive levels.

The challenges of CAA discussed above could undermine its sustainability, and it is therefore important that the institution consider the following recommendations.

Recommendations for the use of CAA

• On an institutional level, a coordinated CAA policy for all faculties involved with ODL and CAA should be developed and established. Furthermore, the UODL should establish a CAA unit to serve as the focal point for CAA implementation at the institution.

• The research further showed that, although the implementation of CAA at the UODL was relatively small in scale, it has the potential to expand. Based on the data, it is therefore recommended that this initiative be rolled out to more subjects at the UODL; doing so would create more time for lecturers to focus on other activities, such as research. In line with this, it is recommended that the Faculties revisit their assessment policies so that lecturers gain the time-saving benefit of CAA, which may, in turn, allow them to focus on other academic matters.

• The NWU and the UODL must provide good training in the use of CAA, including the development and implementation of MCQs.

• The NWU and the UODL must ensure that the technology and equipment used are of high quality so that technical problems can be avoided.

• The data further suggested that lecturers need more support from the different departments within the institution, not only in the initial phase of the initiative but throughout its lifecycle. Ongoing support for lecturers in formulating MCQs and setting examination papers will contribute to the success of such endeavours. Lecturers must also see and experience the benefits of such assessment, because that will motivate them to take part in such an initiative. The Open University of the Netherlands is a good example of a university that started with CAA on a smaller scale and then expanded it to all its examinations (Joosten ten Brinke, 2009).

If the above recommendations are implemented, there is a good chance that CAA at NWU and in the UODL will be sustainable and could be expanded in future.

CONCLUSION

The literature shows that MCQs, as part of CAA, are widely used at universities and that there is a place for such assessment in an institution's assessment strategy. The UODL at NWU recognised the enormous marking load that lecturers carry when dealing with ODL students, and this challenge can be successfully addressed with the use of CAA. However, it is imperative to have strategies and support available to lecturers to ensure not only that time is saved, but also that assessment is of high quality and fosters students' higher-order thinking. Lecturers are willing to take on new assessment strategies, but they need to be supported by management in this regard.

REFERENCES

Brown, S., Bull, J. & Race, P. (1997) Computer Assisted Assessment in Higher Education. New York: Routledge.

Bull, J. (1999) Computer-assisted assessment: Impact on Higher Education institutions. Educational Technology and Society 2(3) pp.123-126.

Chalmers, D. & McAusland, W.D.M. (2002) Computer-assisted Assessment, The Handbook for Economics Lecturers. In H. John & W. David (Eds.) Glasgow Caledonian University, Scotland.

Cohen, L., Manion, L. & Morrison, K. (2011) Research Methods in Education. New York: Routledge.

Combrinck, M., Spamer, E.J. & van Zyl, M. (2015) Students' perceptions of the use of interactive white boards in the delivery of distance learning programmes. Progressio 37(1) pp.99-113.

Conole, G. & Warburton, B. (2005) A review of computer-assisted assessment. Research in Learning Technology 13(1) pp.17-31.

Crisp, V. & Ward, C. (2008) The development of a formative scenario-based computer assisted assessment tool in psychology for teachers: The PePCAA project. Computers & Education 50(4) pp.1509-1526.

Davis, F.D. (1989) Perceived usefulness, perceived ease of use and user acceptance of information technology. MIS Quarterly 13(3) pp.319-340.

DHET (2014) White Paper for Post-School Education and Training. Pretoria: Department of Higher Education and Training.

Dreyer, J.M. (2014) The Educator as Assessor. Pretoria: Van Schaik.

Ghilay, Y. (2019) Computer assisted assessment in Higher Education: Multi-text and quantitative courses. Journal of Online Higher Education 3(1) pp.1-21.

Ghilay, Y. & Ghilay, R. (2012) Student evaluation in higher education: A comparison between computer assisted assessment and traditional evaluation. Journal of Educational Technology 9(2) pp.8-16.

Gikandi, J.W., Morrow, D. & Davis, N.E. (2011) Online formative assessment in higher education: A review of the literature. Computers & Education 57(4) pp.2333-2351.

Gretes, J. & Green, M. (2000) Improving undergraduate learning with computer-assisted assessment. Journal of Research on Computing in Education 33(1) pp.46-54.

Heinrich, E. & Wang, Y. (2003) Online marking of essay-type assignments. Proceedings of the World Conference on Educational Multimedia, Hypermedia and Telecommunications, pp.768-772. Hawaii: AACE.

Jamil, M. (2012) Perceptions of university students regarding computer assisted assessment. The Turkish Online Journal of Educational Technology 11(3) pp.267-277.

Joosten ten Brinke (2009) Flexible Education for All: Open - Global - Innovative. Paper presented at the 23rd ICDE World Conference on Open Learning and Distance Education, 7-10 June 2009. Maastricht, the Netherlands.

Kamzi, S.A.Z. & Naarananoja, M. (2013) Collection of change management models - An opportunity to make the best choice from various organisational transformational techniques. Journal of Business Review 2(4) pp.44-57.

Marin, D., Nieto, I. & Rodriguez, P. (2009) Computer-assisted assessment of free-text answers. The Knowledge Engineering Review 24(4) pp.353-374.

McKenna, C. & Bull, J. (2000) Quality assurance of computer-assisted assessment: practical and strategic issues. Quality Assurance in Education 8(1) pp.24-32.

Nicol, D. (2007) E-assessment by design: using multiple-choice tests to good effect. Journal of Further and Higher Education 31(1) pp.53-64.

Nieuwenhuis, J. (2007) Qualitative research designs and data gathering techniques. In K. Maree (Ed.) First Steps in Research. Pretoria: Van Schaik.

Ricketts, C. & Wilks, J. (2002) Improving student performance through computer-based assessment: Insights from recent research. Assessment & Evaluation in Higher Education 27(5) pp.475-479.

Schoen-Phelan, B. & Keegan, B. (2016) Case Study on Performance and Acceptance of Computer-aided assessment. International Journal for E-learning 6(1) pp.1-6.

Sim, G., Holifield, P. & Brown, M. (2004) Implementation of computer assisted assessment: lessons from the literature. Research in Learning Technology 12(3) pp.215-229.

Stephens, D., Bull, J. & Wade, W. (2006) Computer-assisted assessment: suggested guidelines for an institutional strategy. Assessment & Evaluation in Higher Education 23(3) pp.283-294.

Thelwall, M. (2000) Computer-based assessment: a versatile educational tool. Computers & Education 34(1) pp.37-49.

Wiegers, J. (2010) Institute for Educational Measurement (Cito), The Netherlands. The 36th International Association for Educational Assessment (IAEA) Annual Conference, 22-27 August, Bangkok, Thailand.

Wilson, K., Boyd, C., Chen, L. & Jamal, S. (2011) Improving student performance in a first year geography course: Examining the importance of computer-assisted formative assessment. Computers & Education 57 pp.1493-1500.

Yorke, M. (2003) Formative assessment in higher education: moves towards theory and the enhancement of pedagogic practice. Higher Education 45(4) pp.477-501.

Date of submission 29 April 2019

Date of review outcome 17 September 2019

Date of acceptance 12 February 2020