South African Computer Journal

Online version ISSN 2313-7835

Print version ISSN 1015-7999

SACJ vol.35 no.2 Grahamstown Dec. 2023

http://dx.doi.org/10.18489/sacj.v35i2.17442

RESEARCH ARTICLE

Virtual learner experience (VLX): A new dimension of virtual reality environments for the assessment of knowledge transfer

Johanna Steynberg; Judy van Biljon; Colin Pilkington

University of South Africa, South Africa. Email: Johanna Steynberg - hsteynberg@bluebridgeone.com (corresponding); Judy van Biljon - vbiljja@unisa.ac.za; Colin Pilkington - colinpilkington@gmail.com

ABSTRACT

Science educators need tools to assess to what extent learners' knowledge can be transferred to novel real-world situations. Virtual reality learning environments (VRLEs) offer the possibility of creating authentic tools where situated learning and assessment can take place, but there is a lack of evidence-based guidelines to inform the design and development of the VRLEs focussing on the user, that is the learner experience, especially for secondary schools. Drawing on theoretical premises and guidelines from user experience, usability, and technologically enabled assessment literature, we designed, developed, evaluated, and refined a VRLE prototype for the authentic assessment of knowledge transfer in the secondary school science classroom as guided by the design science research approach. Lessons learnt from the implementation and iterative evaluation of the prototype are presented as a set of literature-based, empirically validated guidelines to support and guide educational designers and developers to create VRLEs focused on supporting the learner experience. The contribution of this study is a VRLE design model with the learner at its core, the definition of VLX to include learner-specific aspects of immersive environments, and guidelines for the development of an effective and efficient virtual reality environment for the assessment of knowledge transfer in science education.

Categories · Human-centred computing ~ Human computer interaction (HCI), Interaction paradigms, Virtual reality

Keywords: Augmented and virtual reality; Human-computer interface; 21st-century abilities; Learner experience; Virtual reality learning environments

1 INTRODUCTION

In January 2020, the World Economic Forum (WEF) stated that the fourth industrial revolution would create the demand for millions of new jobs in completely new occupations over the next few years, due to advances in technologies such as data science and artificial intelligence (World Economic Forum, 2020). The COVID-19 pandemic has accelerated all predictions relating to the digitisation of society (Alakrash & Razak, 2022; Amankwah-Amoah et al., 2021). Situations that learners will encounter in their professional lives will vary from having minimal similarity to previous experience to being completely novel. To complicate matters, each person's experience of a situation or problem is unique, and the work environment is constantly changing at an ever-increasing pace (Erebak & Turgut, 2021; Heng & McColl, 2021; Steiber, 2014; Zawacki-Richter & Latchem, 2018). This means that education has to empower learners to use relevant information and recognise situational conditions under which previously mastered skills and knowledge could be applied at a faster pace than ever before and to do this flexibly in these new situations that they have not previously experienced (Çakiroglu & Gökoglu, 2019a; Falloon, 2019; Spector et al., 2016).

In secondary school science education, learners need to acquire and develop science skills that are not specific to a domain or situational context but which should transfer through deeper learning to complex, real-life situations that may be predominantly unknown in a learner's daily life (Andrews, 2002; Bogusevschi et al., 2020; Jiang et al., 2015; Spector et al., 2016). For science educators, assessment is crucial for determining the level of transfer of science skills and knowledge across diverse domains and instructional contexts to ensure the successful application of skill and knowledge in novel situations (Çakiroglu & Gökoglu, 2019a; Jiang et al., 2015; McHaney et al., 2018). Therefore, science educators need assessment tools that allow for the measurement of knowledge acquired and the level of cognitive or physical skills gained through learners' performance in authentic tasks (Bogusevschi et al., 2020; Novak et al., 2005; Shavelson et al., 1991). Authentic tasks are those that closely represent tasks a learner could encounter in the real world that are believable, challenging, and meaningful with open-ended solutions (Sokhanvar et al., 2021).

Various studies have provided evidence that technology-enabled assessments can support problem-solving and skill development as computers and multimedia technology can provide the complex contexts that constitute realistic situations with tasks similar to those learners would be performing in real-world contexts (Herrington et al., 2013; Kennedy-Clark & Wheeler, 2014; Mikropoulos & Natsis, 2011; Özgen et al., 2019; Pellegrino, 2010; Rosen, 2014; Schott & Marshall, 2018; Spector et al., 2016). Such computer-generated simulation platforms can be used to create authentic virtual spaces that provide safe and effective environments where situated learning, as well as assessment, can take place (Bosman et al., 2021; Çakiroglu & Gökoglu, 2019a; Crosier et al., 2002; Idrissi et al., 2017; Kavanagh et al., 2017; Vos, 2015; Zuiker, 2016).

Virtual reality (VR) is the simulation of an environment set up in a synthetic space that embeds a human as a part of the computer system (Craig et al., 2009; Donath & Regenbrecht, 1995; Özgen et al., 2019; Penichet et al., 2013; Portman et al., 2015; Zhou et al., 2018). Abstract concepts and unobservable phenomena, such as magnetic fields, can be visualised in virtual reality in a novel way, supporting learning and assessment through interactivity and personal experience (Bogusevschi et al., 2020; Craig et al., 2009; Crosier et al., 2002; Schott & Marshall, 2018). This enables learners to construct their own knowledge and synthesise it with their existing conceptual frameworks (Madathil et al., 2017; Makhkamova et al., 2020).

Virtual reality learning environments (VRLEs) have the potential to provide such authentic assessment environments, but there are numerous challenges to creating these VR assessment tools. The tension between the opportunities provided by VRLEs and the lack of guidance on designing VRLEs formed the rationale for this study. There are considerable design and implementation challenges when developing an effective VRLE efficiently (Górski et al., 2016; Liu et al., 2016; Zhou et al., 2018). Despite a number of studies providing evidence of the value of VRLEs in education (Concannon et al., 2020; Makransky et al., 2019; Sun et al., 2019), there is a dearth of evidence-based guidelines to support designers and developers, especially in the secondary school environment. This study addressed this research gap by investigating the question: 'Which user experience design aspects of a virtual reality environment are the most critical for the assessment of knowledge transfer in science education?' Notably, the application context is the secondary school environment.

In 2020, the COVID-19 pandemic affected education in an unprecedented manner. Attempts to continue offering education to 1.7 billion affected learners, in the face of the strict lockdown conditions prevalent in many countries, resulted in a shift to online classrooms (UNESCO, 2020) with the net effect on education being to accelerate the digital transformation of instruction delivery (Adedoyin & Soykan, 2020; Alakrash & Razak, 2022). Successful online instruction delivery requires effective e-assessments to include not only digital versions of closed questions but also more sophisticated tasks and skill-based or competency-based assessments (Joint Information Systems Committee, 2010; Spector et al., 2016; Tinoca et al., 2014). As a result of the pandemic, the shift in education and assessment towards improved technology-enabled environments became crucial to ensure continued quality education and assessment for learners all over the world (Adedoyin & Soykan, 2020; Alakrash & Razak, 2022; Amankwah-Amoah et al., 2021; UNESCO, 2020). Considering these developments, virtual reality classrooms, including virtual assessments, once considered an unaffordable luxury, may become a necessity as priorities adjust to the new reality. Several researchers have proposed design models for VRLEs (see section 2), but the majority of these models do not explicitly consider the unique characteristics of immersive environments, specifically in terms of the context of user experience (UX) design (Akcayir & Epp, 2020). In the light of the global digitisation of education, which was hastened by the influence of the COVID-19 pandemic, the urgency of research addressing the lack of guidance on implementing VR in an educational context is compelling. The research approach and method of this study are explained in section 2, and section 3 describes the evaluation of the prototype and the results of the findings. Section 4 explains the findings and contributions of this study. Section 5 contextualises the results and the paper concludes in section 6.

2 METHODOLOGY

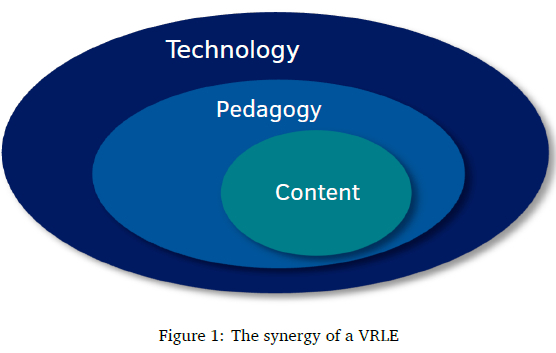

This study focused on constructing guidelines based on a conceptual model for the design and development of an effective and efficient VRLE for the authentic assessment of the transfer of skills and knowledge in the secondary school science classroom. Drawing on theoretical premises from the user experience, usability, and technologically enabled assessment literature, the research was guided by the design science research (DSR) approach. A practical artefact in the form of a VRLE was developed, directed by the guidelines identified from literature summarised in Table 1 and the initial conceptual model shown in Figure 1.

The lessons learned during the first iteration of the study were published in 2020 (Steynberg et al., 2020). This paper builds on the findings of the previous publication (as in Table 1) and additionally reports on the findings from the evaluation of the artefact obtained in Phase 2. The previous contribution is extended by this paper's focus on the virtual learner experience (VLX) as an extended view of UX.

Following DSR's iterative process, an artefact was developed and evaluated, focusing on the user experience when using the artefact. Notably, we consider usability as a subset of UX as advocated by Väätäjä et al. (2009). We employed the usability metrics of effectiveness, efficiency, and user satisfaction as core constructs in designing the evaluation, while acknowledging that UX evaluation extends beyond those constructs (Albert & Tullis, 2013). The design, development, and evaluation of the artefact took place over two iterations - iteration one started with a design and specification step, whereafter the development and implementation of Artefact One took place, and the evaluation of Artefact One followed. The results of the first evaluation guided the changes in the second iteration, leading to the modification of Artefact One and resulting in Artefact Two. All the responses obtained in the evaluation interviews, the observations of users interacting with the VRLE and cost journals, and data from the stealth assessments, were analysed and synthesised as described in Section 3. From these findings, the conceptual model was refined, and guidelines were subsequently constructed, incorporating appropriate suggestions and proposals gleaned from previously published literature.

2.1 Design and development

Mahdi et al. (2018) and Zhou et al. (2018) concur that VRLE design is a complex activity, integrating technical difficulties from the nature of VRLEs with cognitive aspects, such as content definition and task appropriation. Therefore, they propose that VRLE design and development should combine three different areas - content, pedagogy, and technology.

In a VRLE, these three areas form new interrelationships that cause the three parts to fuse to form a new synergy rather than existing in isolation. This synergy is not simply a sum of the parts - the interaction between and within the parts gives rise to a new integrated environment. Initially, we visualised this as an onion diagram, with content in the centre, pedagogy around it, and technology encapsulating content and pedagogy (Figure 1). The guidelines from different studies were analysed and classified according to this new, integrated environment. When the artefact was designed and developed in Phase 1 of our study, this three-part environment evolved into a conceptual model that was closely followed.

As guided by the conceptual framework, the design of the first layer started with the core of the onion diagram - the definition of the content of the VRLE, namely, the problem, objectives, content, and audience. The problem that this artefact addressed was the assessment of a learner's scientific knowledge and skill transfer to a new situation, focusing on magnetism and electromagnetism. The audience for the VRLE included secondary-school learners who are fluent in English. In 2020, this meant that it included learners who were born between 2002 and 2006. These learners are part of Generation Z, that is, people born after 1995 and before 2013 (Bilonozhko & Syzenko, 2020). The content of the artefact was designed with the characteristics of this audience in mind. By using a dystopian or post-apocalyptic setting in this study, the specific learner group connected and engaged with the theme as was seen by comments such as:

"It felt post-apocalyptic like in a movie. It was very cool, I really enjoyed it. It was very easy and an interesting way to learn." [L3]

Guided by the application context, the levels of immersion and realism were considered, the level of interaction was specified, and the entry point and navigation methods were decided.

Having defined the content, we moved to the next layer of our conceptual model, namely, the design of the pedagogy. The learning scenarios were identified, the levels of differentiation were determined, and the instructional support and scaffolding were described. In the outer layer, that is, technology, the hardware and software to be used were determined, while negative effects such as motion sickness were considered, and the learner's personal space was respected throughout the development. Integrating the designs and suggestions from the three layers of the conceptual model, namely, content, pedagogy, and technology, a detailed design of the VRLE was drafted as a storyboard.

For each scene in the environment, the storyboard records the objectives, the details of the scene, the actions and challenges in the scene, the cues and interactions, the learner response that can be expected, and the change of scene or redirection away from the scene that follows a learner's action. The detail of one of the tutorial scenes is shown in Figure 2.
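
The storyboard fields listed above map naturally onto a small record type. The following is a minimal sketch of how one entry could be represented. The class, the field names, and the example scene are all hypothetical and are not the authors' schema or actual storyboard content.

```python
from dataclasses import dataclass, field


@dataclass
class StoryboardScene:
    """One storyboard entry; field names are illustrative, not the authors' schema."""
    name: str
    objectives: list[str]
    details: str
    actions_and_challenges: list[str]
    cues_and_interactions: list[str]
    expected_response: str
    # Maps a learner action to the scene that follows it (change of scene or redirection).
    transitions: dict[str, str] = field(default_factory=dict)

    def next_scene(self, learner_action: str) -> str:
        """Return the following scene, or stay in this scene for an unplanned action."""
        return self.transitions.get(learner_action, self.name)


# Hypothetical tutorial scene, invented purely to show the structure.
tutorial = StoryboardScene(
    name="tutorial_grab",
    objectives=["Learner can pick up and release an object"],
    details="Small room with a table and one magnet",
    actions_and_challenges=["Pick up the magnet", "Place it on the marked spot"],
    cues_and_interactions=["Audio prompt", "Highlighted magnet"],
    expected_response="Learner grips the magnet with the hand controller",
    transitions={"magnet_placed": "tutorial_move"},
)

print(tutorial.next_scene("magnet_placed"))
print(tutorial.next_scene("looked_around"))
```

Keeping the transitions as explicit data makes it easy to check that every planned learner action leads somewhere before any scene is built in the engine.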

The hardware used in the design and evaluation of the prototype included a desktop computer running Windows 10 with an Intel i7 chip, an NVIDIA GeForce RTX 2070 graphics card, and 64 GB RAM. The head-mounted display used was an Oculus Rift with integrated earphones, two motion sensors and two hand controllers. The prototype was exclusively developed in Unreal Engine 4.22.

2.2 Limitations

VR is not recommended for use by children under the age of 13 years (Nemec et al., 2017). Therefore, this study did not include educational material for elementary learners. Furthermore, this study did not investigate the emotional impact of using VR for assessment purposes, and the use of VR platforms for special needs education is beyond the scope of this study.

Artefact Two was tested by a group of 10 secondary-school learners as a convenience sample. Our sample of only 10 learners might seem limiting in terms of generalisation; however, when investigating usability, a sample of five participants has been found to uncover 80% of the usability issues (Lewis, 1994; Virzi, 1992). It has also been found that severe usability problems are easier to detect with the first few participants (Lewis, 1994). Albert and Tullis (2013) contend that given a small number of tasks or scenarios and one distinct user group, where it is not paramount that all usability issues should be uncovered, a sample size of between five and 10 participants is sufficient. This study investigated a prototype with only two tasks to be performed with the objective of constructing guidelines for the design and the development of a VRLE. Additionally, the target audience of the artefact is secondary-school learners in the science classroom, a fairly homogeneous group of teenagers. As noted, 10 participants are accepted as adequate in usability testing, but we acknowledge that UX is broader than usability and includes several qualities that are more subjectively perceived. Therefore, future work to validate this study's contributions with additional user groups or a more heterogeneous sample is advisable.
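
The sample-size argument above is often summarised with the cumulative problem-discovery model, in which the expected share of issues found by n participants is 1 - (1 - p)^n, where p is the probability that a single participant encounters a given issue. The sketch below is illustrative only: the value of p is back-calculated from the "five participants, 80%" figure and is an assumption, not a quantity measured in this study.

```python
def proportion_discovered(p: float, n: int) -> float:
    """Expected share of usability issues seen at least once by n participants."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return 1.0 - (1.0 - p) ** n


def required_p(target: float, n: int) -> float:
    """Per-participant detection probability needed to reach `target` with n participants."""
    return 1.0 - (1.0 - target) ** (1.0 / n)


# Assumed value: the per-participant probability implied by "five participants find 80%".
p_implied = required_p(0.80, 5)
for n in (5, 10):
    print(n, round(proportion_discovered(p_implied, n), 3))
```

Under this assumption, doubling the sample from five to 10 participants raises the expected coverage from 80% to about 96%, which illustrates why returns diminish quickly for a single homogeneous user group.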

3 EVALUATION

Two evaluation phases were conducted during the development of the VRLE. Phase 1 consisted of an evaluation by three general users and two domain experts. The results and suggested changes fed through to the modification of the VRLE in Phase 2, where the second phase trials were conducted with 10 secondary-school learners. The feedback and results from the second phase evaluation are explored in this paper. Ethics clearance to conduct the trials was granted by Unisa College of Science, Engineering and Technology's Ethics Review Committee with ERC reference number 020/JCS/2019/CSET_SOC.

3.1 Quantitative analysis

Feedback on the learners' experiences was obtained in one-to-one interviews that included Likert-item questionnaires and open-ended questions. For the Likert-item questions, five classes of answers were considered - strongly disagree, disagree, neutral, agree, and strongly agree. The questions captured user perspectives related to the effectiveness, efficiency, and satisfaction experienced when using the VRLE.

The frequency of each scale point grouped per subsection is presented in Figure 3.

Considering effectiveness, four questions addressed this construct and 10 participants answered each question, giving 40 responses. Five of these responses disagreed with the statement, which equates to 12.5%. The other sections were scored in a similar fashion. Physical comfort scored the highest in the evaluations, followed closely by attitude and motivation. Therefore, it can be deduced that satisfaction (3.1, 3.2, and 3.3) was the section where the learners rated their experiences the most positively. Interaction with virtual objects (1.3) and ease of navigation (2.1) scored the lowest in the feedback; thus, it can be concluded that efficiency was the section in which they rated their experiences least positively.
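
The 12.5% figure follows from dividing the number of matching responses by the total number of responses in the section (questions multiplied by participants). A minimal sketch of that calculation, using only the figures stated in the text; the function name is hypothetical:

```python
def response_share(matching: int, n_questions: int, n_participants: int) -> float:
    """Percentage of all responses in a section that fall on a given scale point."""
    total = n_questions * n_participants
    if total <= 0 or not 0 <= matching <= total:
        raise ValueError("counts are inconsistent")
    return 100.0 * matching / total


# Figures from the text: four effectiveness questions, 10 participants,
# five 'disagree' responses.
print(response_share(5, 4, 10))
```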

The mean and standard deviation were then calculated for each of these subsections and aggregated to find these measures for each section. The count indicates the number of responses per subsection, namely, number of questions × number of participants. The results are displayed in Table 2, supporting the findings from analysing Figure 3, where satisfaction scored the highest with a mean of 4.7083 and a low standard deviation of 0.7788. Similarly, efficiency scored the lowest with a mean of 3.8667 and a standard deviation of 1.2579. This seems to indicate that the learners were satisfied with the experience despite the navigation challenges experienced.
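
Summary statistics of this kind can be derived directly from the frequency of each scale point. The sketch below assumes the usual 1 to 5 coding (strongly disagree to strongly agree) and the sample standard deviation; the paper does not state which variant was used. The frequencies are illustrative and are not the study's data.

```python
from statistics import mean, stdev


def likert_summary(frequencies: dict[int, int]) -> tuple[int, float, float]:
    """Return (count, mean, sample standard deviation) from scale-point frequencies."""
    # Expand e.g. {4: 2, 5: 3} into [4, 4, 5, 5, 5] so the statistics module can be used.
    responses = [score for score, n in sorted(frequencies.items()) for _ in range(n)]
    return len(responses), mean(responses), stdev(responses)


# Illustrative frequencies only (1 = strongly disagree ... 5 = strongly agree);
# these are NOT the study's data.
example = {1: 0, 2: 5, 3: 5, 4: 10, 5: 20}
count, avg, sd = likert_summary(example)
print(count, round(avg, 4), round(sd, 4))
```

Replacing `stdev` with `pstdev` from the same module would give the population standard deviation instead.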

3.2 Thematic analysis

The responses to the open-ended questions from the one-to-one interviews with the learners in the second phase evaluation were analysed and synthesised into themes and sub-themes. This was done through thematic analysis - a method for analysing qualitative data that identifies, analyses, and reports repeated patterns in order to describe, understand, and interpret a data set (Braun & Clarke, 2006; Clarke & Braun, 2016; Kiger & Varpio, 2020).

Figure 5 displays the main themes arising from the semi-structured interviews as a tree map, where the area of each section represents the number of times the theme occurred in the responses in the interviews.

As can be seen from Figure 5, learners surprisingly commented the most on the overall emotional experience of the VRLE. Aspects highlighted regarding emotional experience can be summarised under the following five categories:

Enjoyment

All the learners commented on the enjoyment of the game.

"It was an enjoyable and fun way to learn." [L2]

"It was very cool." [L3]

"I really enjoyed it." [L3]

"I enjoyed it very much." [L4]

Interest generated

"It had a good start with a cliff hanger. It was very nice." [L10]

"It was more enjoyable, interesting, and not boring." [L5]

Immersion

Many learners commented that they felt immersed in the VRLE and felt themselves to be part of the environment.

"I felt like I was part of the environment. Like I really was there." [L1]

"It was very cool, I felt as if I was really there." [L8]

"I felt as if I was part of the game." [L10]

Adaptation

A few learners indicated that they had to adapt to the environment. L3 said that she had to get used to how it worked and L10 mentioned that his eyes had to adapt a little. L7 said that

"Something felt strange, I am not sure why."

Expectations

In addition to their emotional experiences, learners had certain expectations of the VRLE before using it.

"I did not expect such good quality," [L1]

and L9 mentioned that he did not know what to expect. He also said that he expected it to be much harder. L5 supported these preconceived expectations of the learners, saying that:

"I expected more problems with navigation, but it was quite easy to use."

Interestingly, one learner commented on other participants' anticipated emotional experience:

"Some people won't like it and not everyone will enjoy using it." [L7]
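
The tree map sizes each theme by how often it occurs in the responses. The same tallying step can be sketched for the categories above, counting only the excerpts quoted under each category heading in this section. The full coded interview data is not reproduced here, so these counts illustrate the method and are not the study's actual frequencies.

```python
from collections import Counter

# (learner, category) pairs for the excerpts quoted in this section only;
# the full coded interview data is not reproduced in the paper.
quoted_excerpts = [
    ("L2", "Enjoyment"), ("L3", "Enjoyment"), ("L3", "Enjoyment"), ("L4", "Enjoyment"),
    ("L10", "Interest generated"), ("L5", "Interest generated"),
    ("L1", "Immersion"), ("L8", "Immersion"), ("L10", "Immersion"),
    ("L7", "Adaptation"),
    ("L1", "Expectations"), ("L5", "Expectations"),
]

theme_counts = Counter(theme for _, theme in quoted_excerpts)
total = sum(theme_counts.values())

# In a tree map, each category's area is proportional to its share of occurrences.
for theme, n in theme_counts.most_common():
    print(f"{theme}: {n} ({n / total:.0%})")
```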

3.3 Discussion of results

Participants found the VRLE interesting, engaging, and instructive, and they enjoyed the storyline and the challenges. Some of the participants mentioned that they knew exactly what to do; this implies the explanations were at the correct level for learners and that they enjoyed the challenge. They would, nevertheless, have liked a longer game with more interaction and harder challenges. They suggested more experimentation with the magnets and more interaction with other objects as well.

Several shortcomings were also mentioned during both phases of the trials. Many participants noted that the changes and moving around in the VRLE were either too fast or too slow. Furthermore, object manipulation was occasionally erroneous and inaccurate. Interestingly, a few commented on the weightlessness of the objects and noted that not being able to use their hands when picking up an object in the VRLE felt unnatural. The use of gloves with sensors as controllers might be a necessary enhancement when VRLEs are widely used.

Surprisingly, most of the participants did not experience significant negative side effects such as motion sickness or claustrophobia. A few mentioned that the elevator that lifted them to the new world was interesting, "cool" or "weird". Another participant commented that she felt very alone in the VRLE with no other people around. She suggested that there should be other people welcoming the learner into the New World.

The need for the facilitator to communicate with the learner while he or she is using the VRLE also became apparent. The learner cannot hear anything apart from what is coming through the headphones connected to the head-mounted display, and the facilitator or teacher cannot assist or direct the learner if needed. A system where the voice of the facilitator could be projected to the learner's headphones would be beneficial. Notably, this will depend on the audio functionality of the headset used.

4 CONTRIBUTIONS

4.1 A refined design model

Before the commencement of the design in Phase 1, a design model was derived from literature for the parts that must be considered when developing a VRLE, namely, the content, the pedagogy, and the technology (seen in Figure 1).

However, throughout the evaluation of the artefact that took place in Phase 2, it was evident that there was another part that was essential to the development of the VRLE. We noted that the user is embedded in the VR environment and therefore becomes part of it rather than existing as a separate entity (Radianti et al., 2020). In view of this realisation and the feedback regarding the learner's emotional experience of the VRLE, we proposed that the learner should form the central part of the design model, modifying the diagram of the model depicted in Figure 1 to the refined model seen in Figure 6.

This new model shows that the design of a VRLE should start with identifying the audience, including the demographics of the learners who will use the VRLE, determining the profiles or personas of these learners, and the target audience's expected pre-knowledge or existing conceptual frameworks. After these aspects have been identified, the rest of the VRLE design and development can follow, that is, the decisions related to the Content followed by the Pedagogy, and finally the Technology to support the implementation.
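
The ordering described above, from the learner at the core outwards to the technology, can be captured as a simple ordered structure. This is a minimal sketch: the `Layer` type and the wording of the design questions are paraphrased assumptions for illustration, not an artefact of the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    design_questions: tuple[str, ...]


# Ordered from the core of the refined model outwards; questions are paraphrases.
REFINED_MODEL = (
    Layer("Learner", ("Who is the audience (demographics)?",
                      "Which profiles or personas describe them?",
                      "What pre-knowledge can be expected?")),
    Layer("Content", ("What problem, objectives, and content are addressed?",)),
    Layer("Pedagogy", ("Which scenarios, differentiation, and scaffolding are needed?",)),
    Layer("Technology", ("Which hardware and software support the implementation?",)),
)


def design_order(model: tuple[Layer, ...] = REFINED_MODEL) -> list[str]:
    """Return layer names in the order in which design decisions are taken."""
    return [layer.name for layer in model]


print(" -> ".join(design_order()))
```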

This modification of the design model, which places the learner at its core, will ensure that suitable content is identified for presentation in the VRLE. Accordingly, the learners' developmental stage, experience, and skills will lead the design of the realism of the VRLE by using real-world metaphors and wayfinding methods that the learner is comfortable with. For example, a real-world metaphor for 'phone a friend' could be a payphone for an older audience, while for millennials it should be a cellular phone. Similarly, for wayfinding, an older audience would gravitate to a map, whereas millennials would more likely try to use a GPS or a map application. Based on the learners' profiles, scenarios can be developed to complement their interests and experiences. Authentic tasks can be created involving topical current affairs that the specific group of learners can relate to; this can broaden the learners' experiences by applying the knowledge presented in the VRLE to real-world scenarios. By considering the learners' demographics, differentiation according to language and abilities can be created. Pedagogical support should be designed with the learner in mind - while one age group may prefer a cartoon character as a pedagogical agent, others may prefer a visual task list to guide them through the VRLE. Lastly, the learners' demographics should guide the selection of hardware and software, as different age groups have different gross and fine motor skills that influence the use of hand controllers or buttons.

Developing the VRLE with the learner at the core could lead to an improved learner experience and more successful learning and assessment processes.

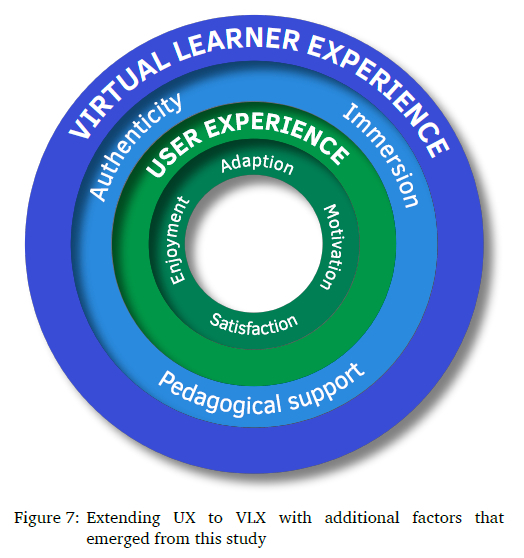

4.2 Extending UX to form VLX

The UX of a human-computer system is defined as the quality of a user's experience when interacting with the system. This includes the user's physical, cognitive, and emotional experience. The factors that are considered in measuring this experience vary in the literature and identifying these factors is an ongoing process in various studies (International Organization for Standardization, 2018; Moczarny et al., 2012; Nikou & Economides, 2019; Rhiu et al., 2020; Rogers et al., 2002). However, certain design aspects, like authenticity, immersion, and instructional support, that influenced the virtual learner experience (VLX) while using the VRLE have not been considered as part of UX in the current literature, and do not form part of the UX of general computer systems. Therefore, we propose that the definition of the UX of a virtual learning system should be extended to include learner-specific aspects. This extended definition of UX will be referred to in the rest of this study as VLX, where the quality of this experience is underpinned by the quality of the learning process, as contained in the learning environment.

During the DSR iterative design process that was followed in this study, the learners commented extensively on their emotional experience of the platform. The factors that they commented on that form part of the existing definition of UX included their emotional enjoyment, their expectations, and their adaptation to the environment. However, they also commented on factors not included in current UX definitions, namely, their immersion in the scenarios,

"I felt like I was part of the environment. Like I really was there." [L1]

the authenticity of the tasks,

"There was a story and a realistic goal - that was very nice," [P4]

"It had a good start with a cliff-hanger," [L10]

and the guidance they received,

"It was nice that they explain exactly what was going on." [P4]

From the literature studies and the lessons learnt from the DSR process, the following three factors are proposed as components of VLX, as they influence the quality of the learning experience:

Authenticity

Authentic scenarios based on real-world problems and tasks that concern topical current affairs may improve the transfer of learning from the learning environment to the real world (Çakiroglu & Gökoglu, 2019a, 2019b; Craig et al., 2009; Crosier et al., 2002; Ritz & Buss, 2016; Schott & Marshall, 2018). Authentic tasks allow the learner to experience real-world situations where they can think innovatively and experiment with solutions to problems while being in a safe environment.

Immersion and engagement

When a learner is immersed in the learning scenario, his or her engagement with the learning material improves and may lead to better conceptual understanding and transfer of learning (Çakiroglu & Gökoglu, 2019a, 2019b; Craig et al., 2009; Crosier et al., 2002; Huang et al., 2018; Ritz & Buss, 2016; Schott & Marshall, 2018). Engagement is closely linked to interaction, and a high level of interaction between the learner and the learning material may lead to a better conceptual understanding (Kavanagh et al., 2017; Parong & Mayer, 2018; Pigatt & Braman, 2016).

Pedagogical support

Instructional support in the form of pedagogical agents is essential in learning environments, where a physical teacher takes on the role of a facilitator and is not directly involved in the learning process. These pedagogical agents can guide the learner in the learning process, can model desired behaviour and can provide scaffolding for developing new skills (Chen et al., 2004; Clarebout & Elen, 2007; Mamun et al., 2020; Minocha & Hardy, 2016; Schroeder, 2016). Through their presence and interaction with the learners, these agents can provide emotional support and eliminate feelings of loneliness in the VRLE.

These three additional components of VLX can be visualised with an extended definition of UX, as can be seen in Figure 7.

The perception that the applicable target audience, that is, the typical learner profile, should influence the design and the development of the VRLE became increasingly clear as this study progressed. The learners should, thus, be seen as the starting point for the design and development of the VRLE, and the VRLE should be built with the learners' demographics, characteristics, and existing conceptual frameworks in mind. The factors influencing VLX that arose from this study were identified as authenticity, immersion, and pedagogical support.

4.3 VRLE guidelines for VLX

The lessons learnt from Phases 1 and 2 were synthesised with guidelines and frameworks from existing literature, and a new set of guidelines was constructed to answer the main question: 'What are the UX design aspects of a virtual reality environment for the assessment of learner knowledge transfer in science education focusing on magnetism?'

These guidelines were practically evaluated through the DSR cycles, and they can now be used by educational material designers when developing an effective, efficient, and satisfactory VRLE focusing on VLX for assessment in the secondary-school science classroom.

To structure the guidelines, the modified design model from Figure 6 was used as a starting point and the guidelines were divided into the four parts identified, namely, the learner, the content, the pedagogy, and the technology.

The learner forms the core of the entire process of design and development. The starting point of the VRLE design should be the identification and definition of the prospective learner audience. The learner's experience, engagement, motivation, and well-being must be treated as being of the utmost importance and should run as a thread through the whole design and development process.

The guidelines then focus on the content of the VRLE, which, together with the context of the VRLE, has an integral influence on the enjoyment and engagement of the learners. The objectives and goals of the VRLE should be clearly identified and presented in an engaging storyline depicted in real-world scenarios with appropriately complex and authentic tasks.

The next part of the design model is the pedagogy. When considering the use of a VRLE for assessing the transfer of learning to the real world, the pedagogical aspects of the VRLE should be carefully designed to ensure that the assessment is reliable and valid. Clear objectives and goals should be determined, and help should always be accessible.

Lastly, the technology used to implement the VRLE envelops all the previous parts. Without appropriate hardware and software for the implementation of the VRLE, the effectiveness and efficiency of putting the learner into the centre of VRLEs would be impaired. Wearable hardware should be comfortable, adjustable, and not cause fatigue. The VRLE should be easily mastered with intuitive controls and natural navigation. Physical side-effects should always be considered and mitigated as far as possible to accommodate learners with different physical characteristics.

The final guidelines, structured with the learner as the focus/centre of the design model in mind, are shown in Table 3.

5 CONTEXTUALISATION

To contextualise the value of our findings, we considered studies that have investigated aspects of VRLEs during the past 12 months. A selection of their findings is discussed below.

Makransky et al. (2019) investigated the effectiveness of immersive VR as a training environment and obtained their seminal results by comparing the performance of learners studying in three environments, namely, immersive VR, desktop VR, and paper-based training. They concluded that the performance of the different groups of learners did not differ on the immediate retention test, but there was a significant improvement in the transfer of learning to experience in a physical lab in the group that received training in immersive VR.

Concannon et al. (2020) studied the possibility that experience with simulated practical exams lowered occupational therapy students' anxiety. They created a virtual clinic with VR simulation that featured a clinic and a standardised patient whom students could interview in natural language. In this study, they concluded that the students who participated in the VR simulation, as part of their examination preparation, had a significantly lower anxiety level when conducting their actual practical exams.

Sun et al. (2019) investigated the use of immersive VR for assessment in higher education. They concluded that students preferred to use the VRLE, found it more engaging, and had an increased sense of involvement. This is supported by the study by Akman and Cakir (2020), focusing on the engagement and retention of learning in the primary school mathematics classroom.

Yu's (2021) systematic review found that overall learning outcomes and achievements could be significantly improved by VR. Furthermore, they concluded that the design of the VRLE may directly affect the influence of the VRLE on learning. This also supports the importance of this study, namely, to ensure the optimum design of a VRLE with the learner at the core of the design.

These studies provide evidence to support the value of using VRLEs to support knowledge transfer to practical situations, as well as reducing anxiety in practical examinations. However, none of the recent studies addressed the gap in terms of VRLE design guidelines for assessing knowledge transfer in the science classroom, and they support the need for expansive and clear design guidelines to ensure that the positive effect of the VRLE on learning is maximised.

6 DISCUSSION AND CONCLUSION

During the initial investigation of the literature in 2018 when this study commenced, it was found that the focus of education was shifting, with learning in the 21st century becoming increasingly characterised by a large number of non-recurrent skills that have to be applied flexibly to novel situations (Bossard et al., 2008; Guàrdia et al., 2017; National Research Council, 2012; Spector et al., 2016; van Gog et al., 2008). Therefore, the proposal was that assessment should move away from the current linear model, which focuses on content and is isolated from real-life situations, towards authentic, real-world assessment that enables learners to experience problem-solving in a safe virtual environment (Andrews, 2002; Guàrdia et al., 2017; Heady, 2000; Idrissi et al., 2017; Roussou et al., 2006; Spector et al., 2016). The COVID-19 pandemic changed education in an unprecedented manner, resulting in a shift to online classrooms (UNESCO, 2020). In this shift, the need for effective e-assessments was brought to many pedagogists' attention. Educational material designers now have the motivation to develop e-assessments that not only include digital versions of closed questions, but also more sophisticated tasks and skill- or competency-based assessments (Adedoyin & Soykan, 2020). These fundamental changes to the core of education now call for a renewed focus on e-assessment to ensure that the highest levels of requirements are met.

This research concentrated on the UX design aspects of a VRLE for the assessment of learner knowledge transfer in science education. The findings from the evaluation of the artefact obtained in Phase 2 suggest the need for a model where the learner is at the core/centre of the design of the learning environment. This insight is concretised by describing VLX as an extended view of UX, where it includes the quality of a learner's experience when interacting with a learning environment. VLX is defined in this study as the virtual learner experience, a focused view of UX including learning-specific aspects such as authenticity, immersion, and instructional support, in addition to the traditional aspects of UX. This implies that the quality of the learning process, as contained in the learning environment, forms the basis of the learners' experience in terms of usability. The theoretical contributions of this research include the evidence-based VLX design model and the VRLE design guidelines for the assessment of knowledge transfer in science education. More research is required to expand these learning-specific aspects to solidify the description of VLX and simplify the design and development process of VRLEs.

ACKNOWLEDGEMENT

This paper is based on the research supported by the South African Research Chairs Initiative of the Department of Science and Technology and National Research Foundation of South Africa (Grant No. 98564).

References

Adedoyin, O. B., & Soykan, E. (2020). Covid-19 pandemic and online learning: The challenges and opportunities. Interactive Learning Environments, 31(2), 863-875. https://doi.org/10.1080/10494820.2020.1813180

Akcayir, G., & Epp, C. D. (2020). Designing, deploying, and evaluating virtual and augmented reality in education. IGI Global. https://doi.org/10.4018/978-1-7998-5043-4

Akman, E., & Cakir, R. (2020). The effect of educational virtual reality game on primary school students' achievement and engagement in mathematics. Interactive Learning Environments, 31(3), 1467-1484. https://doi.org/10.1080/10494820.2020.1841800

Alakrash, H. M., & Razak, N. A. (2022). Education and the fourth industrial revolution: Lessons from COVID-19. Computers, Materials & Continua, 70(1), 951-962. https://doi.org/10.32604/cmc.2022.014288

Albert, B., & Tullis, T. (2013). Measuring the user experience: Collecting, analysing, and presenting usability metrics (2nd ed.). Morgan Kaufmann. https://shop.elsevier.com/books/measuring-the-user-experience/albert/978-0-12-415781-1

Amankwah-Amoah, J., Khan, Z., Wood, G., & Knight, G. (2021). COVID-19 and digitalization: The great acceleration. Journal of Business Research, 136, 602-611. https://doi.org/10.1016/j.jbusres.2021.08.011

Andrews, L. (2002). Transfer of learning: A century later. Journal of Thought, 37(2), 63-72. http://www.jstor.org/stable/42590275

Bevilacqua, F. (2013). Finite-state machines: Theory and implementation. https://gamedevelopment.tutsplus.com/tutorials/finite-state-machines-theory-and-implementation--gamedev-11867

Bilonozhko, N., & Syzenko, A. (2020). Effective reading strategies for Generation Z using authentic texts. Arab World English Journal, (2), 121-130. https://doi.org/10.24093/awej/elt2.8

Bogusevschi, D., Muntean, C., & Muntean, G. (2020). Teaching and learning physics using 3D virtual learning environment: A case study of combined virtual reality and virtual laboratory in secondary school. Journal of Computers in Mathematics and Science Teaching, 39(1), 5-18. https://www.learntechlib.org/primary/p/210965/

Bosman, I. d., de Beer, K., & Bothma, T. J. (2021). Creating pseudo-tactile feedback in virtual reality using shared crossmodal properties of audio and tactile feedback. South African Computer Journal, 33(1). https://doi.org/10.18489/sacj.v33i1.883

Bossard, C., Kermarrec, G., Buche, C., & Tisseau, J. (2008). Transfer of learning in virtual environments: A new challenge? Virtual Reality, 12(3), 151-161. https://doi.org/10.1007/s10055-008-0093-y

Bourg, D. M., & Seemann, G. (2004). AI for game developers. O'Reilly Media, Inc. https://www.oreilly.com/library/view/ai-for-game/0596005555/

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77-101. https://doi.org/10.1191/1478088706qp063oa

Cakiroglu, Ü., & Gökoglu, S. (2019a). A design model for using virtual reality in behavioral skills training. Journal of Educational Computing Research, 57(7), 1723-1744. https://doi.org/10.1177/0735633119854030

Cakiroglu, Ü., & Gökoglu, S. (2019b). Development of fire safety behavioral skills via virtual reality. Computers & Education, 133, 56-68. https://doi.org/10.1016/j.compedu.2019.01.014

Chen, C. J., Toh, S. C., & Fauzy, W. M. (2004). The theoretical framework for designing desktop virtual reality-based learning environments. Journal of Interactive Learning Research, 15(2), 147-167. https://www.learntechlib.org/primary/p/12841/

Chen, C. J. (2006). The design, development and evaluation of a virtual reality based learning environment. Australasian Journal of Educational Technology, 22(1). https://doi.org/10.14742/ajet.1306

Clarebout, G., & Elen, J. (2007). In search of pedagogical agents' modality and dialogue effects in open learning environments. E-Journal of Instructional Science and Technology, 10(1). https://eric.ed.gov/?id=EJ846724

Clarke, V., & Braun, V. (2016). Thematic analysis. The Journal of Positive Psychology, 12(3), 297-298. https://doi.org/10.1080/17439760.2016.1262613

Concannon, B. J., Esmail, S., & Roberts, M. R. (2020). Immersive virtual reality for the reduction of state anxiety in clinical interview exams: Prospective cohort study. JMIR Serious Games, 8(3), e18313. https://doi.org/10.2196/18313

Craig, A. B., Sherman, W. R., & Will, J. D. (2009). Developing virtual reality applications. Elsevier. https://doi.org/10.1016/c2009-0-20103-6

Crosier, J. K., Cobb, S., & Wilson, J. R. (2002). Key lessons for the design and integration of virtual environments in secondary science. Computers & Education, 38(1-3), 77-94. https://doi.org/10.1016/s0360-1315(01)00075-6

Desurvire, H., & Kreminski, M. (2018). Are game design and user research guidelines specific to virtual reality effective in creating a more optimal player experience? Yes, VR PLAY. In Design, user experience, and usability: Theory and practice (pp. 40-59). Springer International Publishing. https://doi.org/10.1007/978-3-319-91797-9_4

Donath, D., & Regenbrecht, H. (1995). VRAD (Virtual Reality Aided Design) in the early phases of the architectural design process. Sixth International Conference on Computer-Aided Architectural Design Futures, 313-322. https://papers.cumincad.org/cgi-bin/works/paper/8955

Erebak, S., & Turgut, T. (2021). Anxiety about the speed of technological development: Effects on job insecurity, time estimation, and automation level preference. The Journal of High Technology Management Research, 32(2), 100419. https://doi.org/10.1016/j.hitech.2021.100419

Falloon, G. (2019). From simulations to real: Investigating young students' learning and transfer from simulations to real tasks. British Journal of Educational Technology, 51(3), 778-797. https://doi.org/10.1111/bjet.12885

Farra, S., Miller, E. T., Hodgson, E., Cosgrove, E., Brady, W., Gneuhs, M., & Baute, B. (2016). Storyboard development for virtual reality simulation. Clinical Simulation in Nursing, 12(9), 392-399. https://doi.org/10.1016/j.ecns.2016.04.002

Górski, F., Bun, P., Wichniarek, R., Zawadzki, P., & Hamrol, A. (2016). Effective design of educational virtual reality applications for medicine using knowledge-engineering techniques. EURASIA Journal of Mathematics, Science and Technology Education, 13(2). https://doi.org/10.12973/eurasia.2017.00623a

Guàrdia, L., Crisp, G., & Alsina, I. (2017). Trends and challenges of e-assessment to enhance student learning in higher education. In Innovative practices for higher education assessment and measurement (pp. 36-56). IGI Global. https://doi.org/10.4018/978-1-5225-0531-0.ch003

Heady, J. E. (2000). Assessment - a way of thinking about learning - now and in the future. Journal of College Science Teaching, 29(6), 415. https://www.proquest.com/scholarly-journals/assessment-way-thinking-about-learning-now-future/docview/200316998/se-2

Heng, L., & McColl, B. (2021). Mathematics for future computing and communications. Cambridge University Press. https://doi.org/10.1017/9781009070218

Herrington, J., Reeves, T. C., & Oliver, R. (2013, May). Authentic learning environments. In Handbook of research on educational communications and technology (pp. 401-412). Springer New York. https://doi.org/10.1007/978-1-4614-3185-5_32

Huang, Y.-C., Backman, S. J., Backman, K. F., McGuire, F. A., & Moore, D. (2018). An investigation of motivation and experience in virtual learning environments: A self-determination theory. Education and Information Technologies, 24(1), 591-611. https://doi.org/10.1007/s10639-018-9784-5

Idrissi, M. K., Hnida, M., & Bennani, S. (2017). Competency-based assessment. In Innovative practices for higher education assessment and measurement (pp. 57-78). IGI Global. https://doi.org/10.4018/978-1-5225-0531-0.ch004

International Organization for Standardization. (2018). ISO 9241-11:2018, Ergonomics of human-system interaction - Part 11: Usability: Definitions and concepts. https://www.iso.org/standard/63500.html

Jeffries, P. R. (2005). A framework for designing, implementing, and evaluating simulations used as teaching strategies in nursing. Nursing Education Perspectives, 26(2), 96-103. https://journals.lww.com/neponline/abstract/2005/03000/a_framework_for_designing,_implementing,_and.9.aspx

Jiang, Y., Paquette, L., Baker, R. S., & Clarke-Midura, J. (2015). Comparing novice and experienced students within virtual performance assessments. Proceedings of the 8th International Conference on Educational Data Mining, 136-143. https://eric.ed.gov/?id=ED560561

Joint Information Systems Committee. (2010). Effective assessment in a digital age. https://issuu.com/jiscinfonet/docs/jisc_effective_assessment_in_a_digital_age_2010

Kavanagh, S., Luxton-Reilly, A., Wuensche, B., & Plimmer, B. (2017). A systematic review of virtual reality in education. Themes in Science and Technology Education, 10(2), 85-119. https://www.learntechlib.org/p/182115/

Kennedy-Clark, S., & Wheeler, P. (2014). Using discourse analysis to assess student problem-solving in a virtual world. In Cases on the assessment of scenario and game-based virtual worlds in higher education (pp. 211-253). IGI Global. https://doi.org/10.4018/978-1-4666-4470-0.ch007

Kiger, M. E., & Varpio, L. (2020). Thematic analysis of qualitative data: AMEE Guide No. 131. Medical Teacher, 42(8), 846-854. https://doi.org/10.1080/0142159x.2020.1755030

Lewis, J. R. (1994). Sample sizes for usability studies: Additional considerations. Human Factors: The Journal of the Human Factors and Ergonomics Society, 36(2), 368-378. https://doi.org/10.1177/001872089403600215

Liu, M., Su, S., Liu, S., Harron, J., Fickert, C., & Sherman, B. (2016). Exploring 3D immersive and interactive technology for designing educational learning experiences. In Virtual and augmented reality (pp. 1088-1106). IGI Global. https://doi.org/10.4018/978-1-5225-5469-1.ch051

Madathil, K. C., Frady, K., Hartley, R., Bertrand, J., Alfred, M., & Gramopadhye, A. (2017). An empirical study investigating the effectiveness of integrating virtual reality-based case studies into an online asynchronous learning environment. Computers in Education Journal, 8(3), 1-10. https://coed.asee.org/2017/07/07/an-empirical-study-investigating-the-effectiveness-of-integrating-virtual-reality-based-case-studies-into-an-online-asynchronous-learning-environment/

Mahdi, O., Oubahssi, L., Piau-Toffolon, C., & Iksal, S. (2018). Towards design and operationalization of pedagogical situations in the VRLEs. 2018 IEEE 18th International Conference on Advanced Learning Technologies (ICALT), 400-402. https://doi.org/10.1109/icalt2018.00095

Makhkamova, A., Exner, J.-P., Greff, T., & Werth, D. (2020). Towards a taxonomy of virtual reality usage in education: A systematic review. In Augmented reality and virtual reality (pp. 283-296). Springer International Publishing. https://doi.org/10.1007/978-3-030-37869-1_23

Makransky, G., Borre-Gude, S., & Mayer, R. E. (2019). Motivational and cognitive benefits of training in immersive virtual reality based on multiple assessments. Journal of Computer Assisted Learning, 35(6), 691-707. https://doi.org/10.1111/jcal.12375

Mamun, M. A. A., Lawrie, G., & Wright, T. (2020). Instructional design of scaffolded online learning modules for self-directed and inquiry-based learning environments. Computers & Education, 144, 103695. https://doi.org/10.1016/j.compedu.2019.103695

McHaney, R., Reiter, L., & Reychav, I. (2018). Immersive simulation in constructivist-based classroom e-learning. International Journal on E-Learning, 17(1), 39-64. https://www.learntechlib.org/primary/p/149930/

Mikropoulos, T. A., & Natsis, A. (2011). Educational virtual environments: A ten-year review of empirical research (1999-2009). Computers & Education, 56(3), 769-780. https://doi.org/10.1016/j.compedu.2010.10.020

Minocha, S., & Hardy, C. (2016). Navigation and wayfinding in learning spaces in 3D virtual worlds. In M. J. W. Lee, B. Tynan, B. Dalgarno & S. Gregory (Eds.), Learning in virtual worlds: Research and applications (pp. 5-41). AU Press, Edmonton, Canada. https://doi.org/10.1145/2071536.2071570

Minocha, S., & Reeves, A. J. (2010). Interaction design and usability of learning spaces in 3D multi-user virtual worlds. In Human work interaction design: Usability in social, cultural and organizational contexts (pp. 157-167). Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-642-11762-6_13

Moczarny, I. M., de Villiers, M. R., & van Biljon, J. A. (2012). How can usability contribute to user experience? Proceedings of the South African Institute for Computer Scientists and Information Technologists Conference, 216-225. https://doi.org/10.1145/2389836.2389862

National Research Council. (2012, December). Education for life and work: Developing transferable knowledge and skills in the 21st century. National Academies Press. https://doi.org/10.17226/13398

Nemec, M., Fasuga, R., Trubac, J., & Kratochvil, J. (2017). Using virtual reality in education. 2017 15th International Conference on Emerging eLearning Technologies and Applications (ICETA), 1-6. https://doi.org/10.1109/iceta.2017.8102514

Nikou, S. A., & Economides, A. A. (2019). A comparative study between a computer-based and a mobile-based assessment. Interactive Technology and Smart Education, 16(4), 381-391. https://doi.org/10.1108/itse-01-2019-0003

Novak, J. D., Mintzes, J. I., & Wandersee, J. H. (2005). Learning, teaching, and assessment: A human constructivist perspective. In Assessing science understanding (pp. 1-13). Elsevier. https://doi.org/10.1016/b978-012498365-6/50003-2

Özgen, D. S., Afacan, Y., & Sürer, E. (2019). Usability of virtual reality for basic design education: A comparative study with paper-based design. International Journal of Technology and Design Education, 31(2), 357-377. https://doi.org/10.1007/s10798-019-09554-0

Parong, J., & Mayer, R. E. (2018). Learning science in immersive virtual reality. Journal of Educational Psychology, 110(6), 785-797. https://doi.org/10.1037/edu0000241

Pellegrino, J. (2010). Technology and formative assessment. In International Encyclopedia of Education (pp. 42-47). Elsevier. https://doi.org/10.1016/b978-0-08-044894-7.00700-4

Penichet, V. M., Peñalver, A., & Gallud, J. A. (Eds.). (2013). New trends in interaction, virtual reality and modeling. Springer London. https://doi.org/10.1007/978-1-4471-5445-7

Pigatt, Y., & Braman, J. (2016). Increasing student engagement through virtual worlds. In Emerging tools and applications of virtual reality in education (pp. 75-94). IGI Global. https://doi.org/10.4018/978-1-4666-9837-6.ch004

Portman, M., Natapov, A., & Fisher-Gewirtzman, D. (2015). To go where no man has gone before: Virtual reality in architecture, landscape architecture and environmental planning. Computers, Environment and Urban Systems, 54, 376-384. https://doi.org/10.1016/j.compenvurbsys.2015.05.001

Radianti, J., Majchrzak, T. A., Fromm, J., & Wohlgenannt, I. (2020). A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Computers & Education, 147, 103778. https://doi.org/10.1016/j.compedu.2019.103778

Rhiu, I., Kim, Y. M., Kim, W., & Yun, M. H. (2020). The evaluation of user experience of a human walking and a driving simulation in the virtual reality. International Journal of Industrial Ergonomics, 79, 103002. https://doi.org/10.1016/j.ergon.2020.103002

Ritz, L. T., & Buss, A. R. (2016). A framework for aligning instructional design strategies with affordances of CAVE immersive virtual reality systems. TechTrends, 60(6), 549-556. https://doi.org/10.1007/s11528-016-0085-9

Rogers, Y., Sharp, H., & Preece, J. (2002). Interaction design: Beyond human-computer interaction. John Wiley & Sons. https://www.wiley.com/en-us/Interaction+Design%3A+Beyond+Human+Computer+Interaction%2C+6th+Edition-p-9781119901099

Rosen, Y. (2014). Thinking tools in computer-based assessment: Technology enhancements in assessments for learning. Educational Technology, 54(1), 30-34. http://www.jstor.org/stable/44430233

Roussou, M., Oliver, M., & Slater, M. (2006). The virtual playground: An educational virtual reality environment for evaluating interactivity and conceptual learning. Virtual Reality, 10(3-4), 227-240. https://doi.org/10.1007/s10055-006-0035-5

Schott, C., & Marshall, S. (2018). Virtual reality and situated experiential education: A conceptualization and exploratory trial. Journal of Computer Assisted Learning, 34(6), 843-852. https://doi.org/10.1111/jcal.12293

Schroeder, N. L. (2016). Pedagogical agents for learning. In Emerging tools and applications of virtual reality in education (pp. 216-238). IGI Global. https://doi.org/10.4018/978-1-4666-9837-6.ch010

Serafin, S., Erkut, C., Kojs, J., Nordahl, R., & Nilsson, N. C. (2016). Virtual reality musical instruments. Proceedings of the Audio Mostly 2016, 266-271. https://doi.org/10.1145/2986416.2986431

Shavelson, R. J., Baxter, G. P., & Pine, J. (1991). Performance assessment in science. Applied Measurement in Education, 4(4), 347-362. https://doi.org/10.1207/s15324818ame0404_7

Snowdon, C. M., & Oikonomou, A. (2018). Analysing the educational benefits of 3D virtual learning environments. European Conference on E-Learning, 513-522. https://www.proquest.com/conference-papers-proceedings/analysing-educational-benefits-3d-virtual/docview/2154983229/se-2

Sokhanvar, Z., Salehi, K., & Sokhanvar, F. (2021). Advantages of authentic assessment for improving the learning experience and employability skills of higher education students: A systematic literature review. Studies in Educational Evaluation, 70, 101030. https://doi.org/10.1016/j.stueduc.2021.101030

Spector, J. M., Ifenthaler, D., Sampson, D., Yang, L., Mukama, E., Warusavitarana, A., Dona, K. L., Eichhorn, K., Fluck, A., Huang, R., Bridges, S., Lu, J., Ren, Y., Gui, X., Deneen, C. C., Diego, J. S., & Gibson, D. C. (2016). Technology enhanced formative assessment for 21st century learning. Journal of Educational Technology & Society, 19(3), 58-71. http://www.jstor.org/stable/jeductechsoci.19.3.58

Steiber, A. (2014). The Google model: Managing continuous innovation in a rapidly changing world. Springer, London. https://doi.org/10.1007/978-3-319-04208-4

Steynberg, J., van Biljon, J., & Pilkington, C. (2020). Design aspects of a virtual reality learning environment to assess knowledge transfer in science. In Lecture notes in Computer Science (pp. 306-316). Springer International Publishing. https://doi.org/10.1007/978-3-030-63885-6_35

Sun, B., Chikwem, U., & Nyingifa, D. (2019). VRLearner: A virtual reality based assessment tool in higher education. 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). https://doi.org/10.1109/vr.2019.8798129

Tinoca, L., Pereira, A., & Oliveira, I. (2014). A conceptual framework for e-assessment in higher education. In Handbook of research on transnational higher education (pp. 652-673). IGI Global. https://doi.org/10.4018/978-1-4666-4458-8.ch033

UNESCO. (2020). Education: From disruption to recovery. https://www.unesco.org/en/covid-19/education-disruption-recovery

Väätäjä, H., Koponen, T., & Roto, V. (2009). Developing practical tools for user experience evaluation: A case from mobile news journalism. European Conference on Cognitive Ergonomics: Designing beyond the Product - Understanding Activity and User Experience in Ubiquitous Environments, 1-8. https://dl.acm.org/doi/10.5555/1690508.1690539

van Gog, T., Sluijsmans, D. M. A., Joosten-ten Brinke, D., & Prins, F. J. (2008). Formative assessment in an online learning environment to support flexible on-the-job learning in complex professional domains. Educational Technology Research and Development, 58(3), 311-324. https://doi.org/10.1007/s11423-008-9099-0

Vergara, D., Rubio, M., & Lorenzo, M. (2017). On the design of virtual reality learning environments in engineering. Multimodal Technologies and Interaction, 1(2), 11. https://doi.org/10.3390/mti1020011

Virzi, R. A. (1992). Refining the test phase of usability evaluation: How many subjects is enough? Human Factors: The Journal of the Human Factors and Ergonomics Society, 34(4), 457-468. https://doi.org/10.1177/001872089203400407

Vos, L. (2015). Simulation games in business and marketing education: How educators assess student learning from simulations. The International Journal of Management Education, 13(1), 57-74. https://doi.org/10.1016/j.ijme.2015.01.001

World Economic Forum. (2020). Jobs of tomorrow. https://www.weforum.org/reports/jobs-of-tomorrow-mapping-opportunity-in-the-new-economy/

Yu, Z. (2021). A meta-analysis of the effect of virtual reality technology use in education. Interactive Learning Environments, 1-21. https://doi.org/10.1080/10494820.2021.1989466

Zawacki-Richter, O., & Latchem, C. (2018). Exploring four decades of research in Computers & Education. Computers & Education, 122, 136-152. https://doi.org/10.1016/j.compedu.2018.04.001

Zhou, Y., Ji, S., Xu, T., & Wang, Z. (2018). Promoting knowledge construction: A model for using virtual reality interaction to enhance learning. Procedia Computer Science, 130, 239-246. https://doi.org/10.1016/j.procs.2018.04.035

Zuiker, S. J. (2016). Game over: Assessment as formative transitions through and beyond playful media. Educational Technology, 56(3), 48-53. http://www.jstor.org/stable/44430493

Received: 30 April 2022

Accepted: 7 August 2023

Online: 14 December 2023