South African Computer Journal

On-line version ISSN 2313-7835

Print version ISSN 1015-7999

SACJ vol.30 n.2 Grahamstown Dec. 2018

http://dx.doi.org/10.18489/sacj.v30i2.440

RESEARCH ARTICLE

Designing a natural user interface to support information sharing among co-located mobile devices

Timothy Lee SonI; Janet WessonII; Dieter VogtsIII

IDepartment of Computing Sciences, Nelson Mandela University, South Africa. timothy.leeson@mandela.ac.za

IIDepartment of Computing Sciences, Nelson Mandela University, South Africa. janet.wesson@mandela.ac.za

IIIDepartment of Computing Sciences, Nelson Mandela University, South Africa. dieter.vogts@mandela.ac.za

ABSTRACT

Users of mobile devices share their information through various methods, which are supported by mobile devices. However, the information sharing process of these methods is typically redundant and sometimes tedious, because the user may have to repeatedly perform a series of steps to share one or more selected files with another individual. The proliferation of mobile devices supports new, more intuitive, and less complicated solutions to information sharing in the field of mobile computing. The aim of this paper is to present MotionShare, which is a NUI application that supports information sharing among co-located mobile devices. Unlike other existing systems, MotionShare's distinguishing attribute is that it does not rely on additional, assisting technologies to determine the positions of devices. A primary example of such a technology is an external camera used to determine device positioning in a spatial environment. An analytical evaluation investigated the accuracy of device positioning and gesture recognition, where the results were positive. The empirical evaluation investigated any usability issues. The results of the empirical evaluation showed high levels of user satisfaction and that participants preferred touch gestures to point gestures.

Categories: • Human-centered computing ~ Interaction techniques • Human-centered computing ~ Ubiquitous and mobile computing systems and tools

Keywords: information sharing, natural user interfaces, mobile computing, gesture-based interaction

1 INTRODUCTION

Communication between users and their mobile devices continuously increases. As a result, the need for information sharing emerges. The advancements in mobile computing, specifically the computational and data storage capabilities, have contributed to the increased information sharing rate between users and their mobile devices. Existing information sharing methods on mobile devices are typically manual in nature. The process can be cumbersome and dependent on the quantity of information to be shared as well as the number of recipients. Therefore, these methods can become time-consuming and ineffective.

Due to the increasing prevalence of Natural User Interfaces (NUIs), standard interaction methods with mobile devices are also increasingly replaced with more intuitive interaction techniques. Intuitiveness is the easy understanding of a concept without any conscious reasoning (Britton, Setchi & Marsh, 2013). Human-computer interaction has evolved to include various gestures (tap, point, swipe, and drag) that are prevalent in NUIs (Oh, Robinson & Lee, 2013). NUI interaction techniques are characterised as natural and intuitive to users. Therefore, task completion is less time-consuming and users are able to easily perform task actions (Oh et al., 2013). Increasingly, new application areas of NUIs show promise and further advance the current generation of interactive computing (Seow, Wixon, Morrison & Jacucci, 2010).

Proxemics involves the study of sociological, behavioural, and cultural features between individuals and their devices (Dingler, Funk & Alt, 2015). Proxemics also refers to the movement, orientation, and distances between devices. In computer vision and robotics, an object's pose refers to both the object's position and orientation (Ilic, 2010). In this paper, pose means the device's location and orientation in relation to other devices in the environment.

A co-located environment refers to a forum where users and their mobile devices are collectively gathered (formal or informal environment) (Heikkinen & Porras, 2013). As long as the users are in close proximity to each other, the environment can occur indoors or outdoors. User proximity is important as it allows NUI interaction techniques to support information sharing among co-located mobile devices. Therefore, co-located is the close proximity of users and their mobile devices to each other (indoors or outdoors), with limited movement of users (standing or seated near a table).

The aim of this paper is to present an NUI application, called MotionShare, to support information sharing among co-located mobile devices. MotionShare calculates the poses of co-located mobile devices and applies this to NUI gestures to facilitate information sharing (documents, images, and media) among selected recipients. The research contribution to mobile computing is the evaluation of MotionShare's accuracy and usability.

This article extends our SAICSIT 2016 paper (Lee Son, Wesson & Vogts, 2016).

2 RELATED WORK

This section discusses information sharing and NUIs. The related work presents and identifies the challenges of information sharing as well as the benefits and shortcomings of existing NUI systems.

2.1 Information sharing

Information sharing is any activity where information (natural, electronic, or other form) is transferred between individuals, organisations, or devices by any means of transference (Mesmer-Magnus & DeChurch, 2009).

Widespread communication and coordination of people has caused a variety of information sharing methods to emerge. These methods are either in a digital or natural form. The proliferation of computing technology has made digital information sharing methods possible. Although natural information sharing methods will always be prevalent, their digital counterparts have made life easier. For example, files (images, documents, music, or videos) can be transferred between devices via flash drives, Dropbox, Bluetooth, or email attachments.

Co-located information sharing only occurs when individuals are located within the same environment, such as a room (Singleton, 2014). Consequently, continuous information sharing is expected because individuals are face-to-face. Furthermore, individuals in a co-located environment may know each other or have a shared context, such as living in the same city, studying at the same university, or working for the same organisation (Kahai, 2008).

Mobile devices support several technologies to share information, which include Near Field Communication (NFC), Dropbox, Bluetooth, Wi-Fi, and email. Existing information sharing systems were identified that use one or more of these methods. These systems are Xender (Anmobi, Inc., 2015), Share Link (ASUSTeK Computer Inc., 2015), Feem (FeePerfect, 2015), and SuperBeam (LiveQoS, 2015). Xender, Share Link, and Feem use Wi-Fi to share information, whereas SuperBeam uses NFC, Wi-Fi Direct, QR Codes, or a Manual Sharing Key. In all these systems, the selection of files and a recipient is performed by single touch. Typically, when selecting a recipient, an icon or text list of device names is displayed. However, SuperBeam also supports QR Codes or NFC to select a recipient. None of the systems identified sufficiently determine the device pose for NUI interaction techniques to be used. In these systems, file sharing among multiple devices also requires the above process to be repeatedly performed.

2.2 Natural User Interfaces

NUIs typically allow individuals to perform natural movements to manipulate on-screen content or control an application (Yao, Fernando & Wang, 2012). Various interaction techniques (multi-touch, gesture recognition, speech, eye tracking, and proxemics) allow user interaction in NUIs. NUIs provide a new perspective on user interaction with content displayed on devices. There are several NUI definitions, all of which originated from Blake's (2013) definition:

A natural user interface is a user interface designed to reuse existing skills for interacting appropriately with content.

This definition illustrates three crucial aspects about NUIs, namely: NUIs are designed, NUIs reuse existing skills, and NUIs provide appropriate interaction with content.

Natural Interaction is a user experience objective that is extensively researched in most interaction fields and not limited to NUIs (Tavares, Medeiros, de Castro & dos Anjos, 2013).

Consequently, it can be presented that natural interaction is the effect of transparent interfaces, which are based on previous knowledge, and where the users feel like they are interacting directly with the content. (Wendt, 2013)

The first NUI objective is derived from the natural interaction definition, whereby content and context familiarity ensures users can understand the interaction. Typically, NUIs should prioritise the content, and their supporting technologies should be ubiquitous (Blake, 2013). The second NUI objective is content-centric, whereby the NUI should facilitate content accessibility. NUI literature (Blake, 2013; Valli, 2008; Wigdor & Wixon, 2011) maintains that the appropriate selection of a technique is dependent on the proper understanding of the context. Thus, the third NUI objective is the importance of context, where understanding the context ensures the appropriate interaction techniques are selected and used. The cognitive load is reduced by reusing skills that users already possess, thus freeing up the mental capacity to understand and reuse different interactions with an NUI. The fourth NUI objective is reducing the user cognitive load of NUI interaction.

Gestures are any motion that involves physical movements of an individual's body, for example, hands, fingers, head, or feet (Billinghurst, Piumsomboon & Bai, 2014). Gestures provide a natural, direct, and intuitive way of interacting with a computing device, allowing easier interaction for all types of users, including the elderly (Hollinworth & Hwang, 2011). There are two types of gestures, namely touch and in-air gestures (X. A. Chen, Schwarz, Harrison, Mankoff & Hudson, 2014). Touch gestures are predominant in touch screen interfaces where the user performs a predefined gesture to achieve a specific system response. In-air gestures are any movements of the user's body that are recognised by the system without touching the screen (Agrawal et al., 2011). A benefit of in-air gestures is their natural feel as they naturally accompany speech interaction. However, in-air gestures are susceptible to several limitations, such as context dependence, user fatigue, social acceptability, and gesture recognition (Agrawal et al., 2011; Bratitsis & Kandroudi, 2014).

Several NUI systems are available, which use different interaction techniques depending on the context. Different NUI interaction techniques are used to support the specific context of each system. These systems were selected for review on the basis that they required no additional hardware. The systems reviewed were Zapya (DewMobile, 2016), AirLink (K.-Y. Chen, Ashbrook, Goel, Lee & Patel, 2014), MobiSurf (Seifert et al., 2012), Gesture On (Lu & Li, 2015), and Flick (Ydangle Apps, 2013). MobiSurf, Flick, and Zapya only support single file sharing. All of these systems use touch gestures, except AirLink, which uses in-air gestures and code words to share information. None of these systems use pose information to determine the location and orientation of the mobile devices in the physical environment. Table 1 summarises the applications with their respective communication technologies used and the types of information sharing supported.

3 RESEARCH DESIGN

This research selected and followed the Design Science Research (DSR) methodology. It is an appropriate methodology because this research requires the development of a proxemic prototype NUI to support information sharing among co-located mobile devices. The development of this prototype directly correlates with the development of an artefact to solve the identified problem, which is one of the core activities in the DSR methodology, namely Design and Develop Artefact (Peffers, Tuunanen, Rothenberger & Chatterjee, 2007).

3.1 Application of DSR

The DSR methodology can be used as a framework for conducting research based on Design Science, which involves the performance of the following activities:

Identify problem and motivate: defining specific research problem and justification of a solution;

Define objectives of a solution: inferring the solution objectives derived from the problem definition and knowledge;

Design and development: involves creating the artefact solution;

Demonstration: demonstrating the artefact's efficacy to solve the defined problem;

Evaluation: observing and measuring if and/or how well the artefact supports a solution to the defined problem, by comparing the solution objectives to actual observed results from the artefact in the demonstration phase; and

Communication: communicating the importance of the problem, the artefact, its utility and novelty, rigor of its design, and its effectiveness to relevant audiences.

During this research, several research strategies were employed, namely: literature study, focus groups, prototyping, and experiments. The various DSR methodology activities and cycles (Relevance, Design, and Rigor) incorporated these strategies.

3.2 Research questions

This research was guided by the following primary research question:

How can a proxemic Natural User Interface be designed to provide an accurate and usable solution to support information sharing among co-located mobile devices?

The following secondary questions were formulated to answer the primary research question:

RQ1. What are the shortcomings of existing information sharing methods currently used by mobile devices?

RQ2. What are the benefits and shortcomings of existing NUI interaction techniques for information sharing?

RQ3. How should the relative pose for co-located mobile devices be calculated?

RQ4. How should NUI interaction techniques be designed to support information sharing among co-located mobile devices?

RQ5. How can a proxemic prototype NUI be developed to support information sharing among co-located mobile devices?

RQ6. How accurate and usable is the proxemic prototype NUI in supporting information sharing among co-located mobile devices?

Each of these research questions was addressed by applying one or more research strategies (Section 3.1) in one of the DSR methodology activities.

4 MOTIONSHARE DESIGN

This section discusses how MotionShare determines the positions of the co-located mobile devices in an environment. The pose information was used to design the information sharing process. Focus groups were conducted to determine the most suitable NUI gestures to be implemented in MotionShare, which were used to share information among co-located mobile devices.

4.1 Positioning techniques

For NUI interaction techniques to be used in information sharing, the pose of each device in relation to one another is required. Existing information sharing technologies (Section 2.1) only provide coarse-grained granularity. For this research, a more fine-grained approach is required for information sharing in a co-located environment. The distance between devices and the orientation of the devices are required to compute the poses of every device in the environment. Existing indoor positioning solutions, such as Estimote and iBeacon, are based on small computer beacons. These solutions use Bluetooth 4.0 Smart, also known as Bluetooth Low Energy (BLE), an accelerometer, and a temperature sensor (Estimote, Inc., 2018; Apple, Inc., 2018). However, these solutions use hardware additional to the user's mobile device. Thus, the challenge was to solve this particular problem without the use of additional hardware.

4.1.1 Determining distance

Smartphones can use different techniques to determine position and distance. Table 2 presents several criteria to measure the different distance techniques (Hightower & Borriello, 2001).

These techniques are the global positioning system (GPS), cell tower triangulation, and Wi-Fi positioning. GPS is highly accurate (95.00%), with distance resolved to within metres, but it requires a high level of energy consumption and does not work in indoor environments. Although cell tower triangulation is energy efficient and available almost everywhere in the world, it still lacks the accuracy and precision provided by GPS (accuracy within metres). Wi-Fi positioning is best suited for an indoor environment; however, it requires multiple access points (AP) to be located nearby to function properly, and is only able to provide a distance to within an accuracy of 60 metres. Table 3 compares the distance techniques using the criteria identified in Table 2.

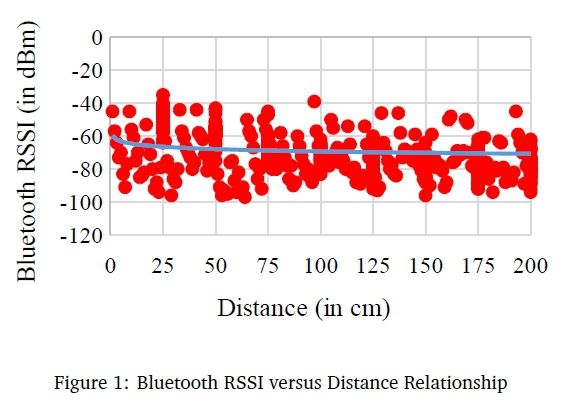

All the discussed techniques provide coarse-grained granularity, whereas this research requires a more fine-grained technique. Therefore, experiments were conducted to determine if a more fine-grained solution was available. Several experiments were conducted using Bluetooth. The objective of these experiments was to determine if this technology could be used to determine the distance between two mobile devices. Each experiment involved two smartphones that were placed at different distance increments. The distance increments commenced at 25cm and went up to 200cm. These increments were considered to be fair because they represent the expected distance at which users would be seated or standing apart in a co-located environment.

A prototype using the Bluetooth Received Signal Strength Indicator (RSSI) was developed, installed, and used on each device to determine the RSSI values. The RSSI values displayed on each device were recorded by observation and data logging. Every experiment conducted was repeated several times to ensure an adequate data sample was obtained to develop a more accurate model for the selected machine learning (ML) algorithms.

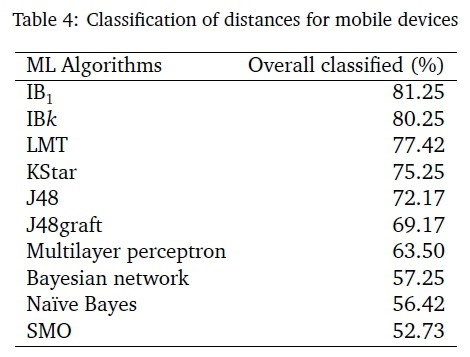

The collected data from the Bluetooth experiments was subjected to various ML algorithms. Table 4 shows the performance of these algorithms in classifying the distance based on the Bluetooth RSSI values. The IB1 classifier had the highest accuracy in correctly classifying the instances. Therefore, the IB1 classifier was selected and used within MotionShare.

The IB1 algorithm is an instance-based nearest neighbour classifier (Devasena, 2013). It uses normalised Euclidean distance to determine the training instance closest to the given test instance, and predicts the same class as this training instance. 10-fold cross-validation was used with IB1. This meant that the dataset was split into 10 equal parts (folds). Using the 10-fold cross-validation also meant that 90% of the dataset was used for the training (and 10% for testing) in each fold test.
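The classification step described above can be sketched in a few lines of Python. This is a minimal illustration and not the implementation used in MotionShare: the function names (`feature_ranges`, `normalise`, `ib1_predict`, `k_fold_accuracy`) and the RSSI readings in `SAMPLES` are hypothetical, and the folds are formed by simple striding rather than by the stratified sampling a toolkit would normally apply.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, distance label)


def feature_ranges(train: List[Sample]) -> List[Tuple[float, float]]:
    """Return the (min, max) of every feature over the training set."""
    columns = zip(*(features for features, _ in train))
    return [(min(col), max(col)) for col in columns]


def normalise(features: Sequence[float],
              ranges: List[Tuple[float, float]]) -> List[float]:
    """Scale each feature by its training range; constant features map to 0."""
    return [
        (x - lo) / (hi - lo) if hi > lo else 0.0
        for x, (lo, hi) in zip(features, ranges)
    ]


def ib1_predict(train: List[Sample], query: Sequence[float]) -> str:
    """Predict the label of the single closest training instance."""
    ranges = feature_ranges(train)
    q = normalise(query, ranges)

    def squared_distance(sample: Sample) -> float:
        # The squared distance preserves the ordering of Euclidean distances.
        f = normalise(sample[0], ranges)
        return sum((a - b) ** 2 for a, b in zip(f, q))

    return min(train, key=squared_distance)[1]


def k_fold_accuracy(samples: List[Sample], k: int = 10) -> float:
    """Fraction classified correctly when each of k folds is held out once."""
    correct = 0
    for i in range(k):
        test = samples[i::k]  # every k-th sample forms one fold
        train = [s for j, s in enumerate(samples) if j % k != i]
        correct += sum(ib1_predict(train, f) == label for f, label in test)
    return correct / len(samples)


# Hypothetical RSSI readings in dBm, not measurements from the experiments.
SAMPLES: List[Sample] = [
    ([-45.0], "25cm"), ([-47.0], "25cm"),
    ([-55.0], "50cm"), ([-57.0], "50cm"),
    ([-68.0], "100cm"), ([-70.0], "100cm"),
]

if __name__ == "__main__":
    print(ib1_predict(SAMPLES, [-54.0]))
    print(k_fold_accuracy(SAMPLES, k=2))
```

With `k = 10`, each held-out fold contains roughly 10% of the samples and the remaining 90% serve as training instances, which matches the split described above.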

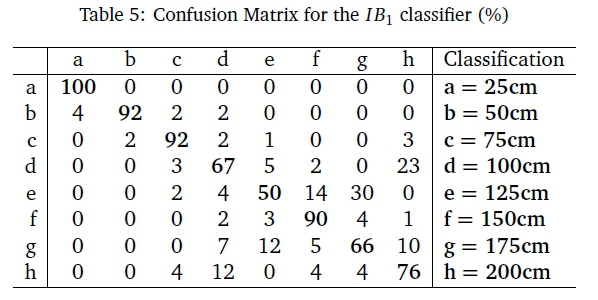

Confusion matrices are used in ML to visualise the performance of a specific algorithm (Markham, 2014). Each column of the matrix represents the instances in a predicted class, while each row represents the instances in an actual class. The value at each intersection between a column and row represents the number of predictions classified. The ideal scenario is to have the value only appear in the "diagonal".
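As a small illustration of this layout, the following Python sketch builds a confusion matrix with actual classes as rows and predicted classes as columns, and derives the overall accuracy from its diagonal. The labels and predictions are hypothetical and are not taken from Table 5.

```python
from collections import Counter
from typing import List, Sequence


def confusion_matrix(actual: Sequence[str], predicted: Sequence[str],
                     labels: Sequence[str]) -> List[List[int]]:
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def overall_accuracy(matrix: List[List[int]]) -> float:
    """Correct predictions lie on the diagonal of the matrix."""
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(len(matrix)))
    return diagonal / total


# Hypothetical example data.
LABELS = ["25cm", "50cm", "75cm"]
ACTUAL = ["25cm", "25cm", "50cm", "50cm", "50cm", "75cm"]
PREDICTED = ["25cm", "50cm", "50cm", "50cm", "75cm", "75cm"]

if __name__ == "__main__":
    matrix = confusion_matrix(ACTUAL, PREDICTED, LABELS)
    for label, row in zip(LABELS, matrix):
        print(label, row)
    print(overall_accuracy(matrix))
```

Any non-zero value outside the diagonal marks a misclassification: the row gives the true distance and the column gives the distance that was predicted instead.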

Table 5 represents the confusion matrix for the IB1 algorithm. For example, the IB1 algorithm correctly classified 92% of the 50cm instances and misclassified the remainder as 25cm (4%), 75cm (2%), and 100cm (2%). Overall, the IB1 algorithm correctly classified instances with an accuracy of 81.25%, which was deemed to be an acceptable rate.

All the results from the experiments were aggregated into a single visualisation to illustrate the relationship between Bluetooth RSSI and distance. The experiments showed that an inverse relationship exists between these two variables (Figure 1).

Bluetooth RSSI values are, however, sensitive to some variables in the environment, so a test environment was chosen that had low environmental interference.

4.1.2 Determining Orientation

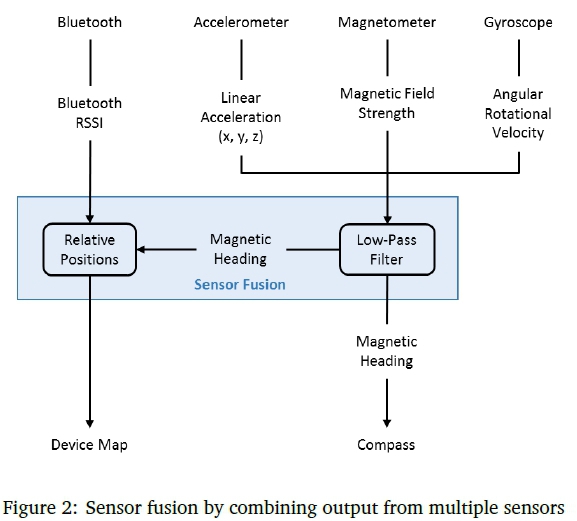

The design of a digital compass was required to determine the orientation of the mobile devices. Consequently, the position and motion sensors embedded in mobile devices were utilised. These sensors were the accelerometer, magnetometer, and gyroscope.

An accelerometer is an embedded sensor in a mobile device used to measure the acceleration forces on all three physical axes (x, y, and z) and can determine the device's physical position (Aviv, Sapp, Blaze & Smith, 2012). A magnetometer is a magnetic sensor embedded in a mobile device to determine the heading of the device, provided the user is holding it parallel to the ground (Zhang & Sawchuk, 2012).

Similarly, a gyroscope is an embedded sensor that provides an additional dimension to the information supplied by the accelerometer by measuring the rotation or twist of the device (Thomason & Wang, 2012). The gyroscope measures the angular rotational velocity of a device. Unlike the accelerometer, the gyroscope is not affected by gravity. Thus, the accelerometer and gyroscope measure the rate of change differently. In practice, this means that an accelerometer will measure the directional movement of a device, but cannot accurately resolve its lateral orientation or tilt during this movement without the gyroscope, which provides the additional information (Kratz, Rohs & Essl, 2013).
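To make the magnetometer's role concrete, the sketch below derives a compass heading from the horizontal magnetometer components of a device held parallel to the ground. It is an illustration under stated assumptions rather than the compass design used in MotionShare: the function name `heading_degrees` is hypothetical, the axes are assumed to follow the common Android device convention (x towards the right edge of the screen, y towards the top edge, z out of the screen), and the result is relative to magnetic north without tilt compensation.

```python
import math


def heading_degrees(mx: float, my: float) -> float:
    """Clockwise angle from magnetic north to the device's top edge.

    mx and my are the magnetometer readings along the assumed device
    x axis (right) and y axis (top) while the device lies flat.
    """
    return math.degrees(math.atan2(-mx, my)) % 360.0


if __name__ == "__main__":
    # Hypothetical readings: the field lies entirely along the device's
    # negative x axis, which corresponds to the top edge pointing east.
    print(heading_degrees(-30.0, 0.0))
```

When the device is tilted, the accelerometer's gravity reading is needed to project the magnetic field back onto the horizontal plane, which is one reason why several sensors are combined.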

These sensors output sensor data that varies at a high rate, similarly to the Bluetooth RSSI values. The solution was to poll the sensor data at an interval of two seconds, extract only those values that were useful in this context, and eliminate unnecessary noise. Applying a low-pass filter resolved the issue of the sensor data varying considerably.

A low-pass filter is a smoothing algorithm that smooths the sensor values by filtering out high-frequency noise and "passes" low-frequency or slowly varying changes (Lee, 2014). Consequently, a more stable compass is displayed and the orientation changes are smoother. The accuracy of the compass was compared to existing compass applications on the Google Play Store and it was found to be similar (informal testing).
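A common way to realise such a filter is exponential smoothing, in which each new reading moves the filtered value only a fraction of the way towards it. The following Python sketch shows the idea. The function names and the smoothing factor `alpha` are assumptions made for illustration, as the article does not state which filter form or coefficient was used.

```python
from typing import List, Optional, Sequence


def low_pass(previous: Sequence[float], current: Sequence[float],
             alpha: float = 0.15) -> List[float]:
    """Move each filtered component a fraction alpha towards the new reading.

    A small alpha (an assumed value here) suppresses rapid fluctuations,
    while slow changes still pass through after a few samples.
    """
    return [p + alpha * (c - p) for p, c in zip(previous, current)]


def smooth(readings: Sequence[Sequence[float]],
           alpha: float = 0.15) -> List[List[float]]:
    """Filter a sequence of sensor vectors, seeding with the first reading."""
    state: Optional[List[float]] = None
    smoothed: List[List[float]] = []
    for reading in readings:
        state = list(reading) if state is None else low_pass(state, reading, alpha)
        smoothed.append(state)
    return smoothed


if __name__ == "__main__":
    # Hypothetical single-axis readings with one noisy spike.
    noisy = [[10.0], [10.2], [14.0], [10.1], [9.9]]
    for raw, filtered in zip(noisy, smooth(noisy)):
        print(raw, filtered)
```

Filtering the raw sensor vectors rather than the final compass angle avoids the discontinuity that occurs when a heading wraps from 359 degrees back to 0 degrees.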

Figure 2 illustrates how multiple sensors were combined to create sensor fusion. The sensor data from the accelerometer, magnetometer, and gyroscope were combined through the low-pass filter to provide an improved compass. The distance and orientation information was combined to determine the pose of the different devices in relation to each other.

4.1.3 MotionShare architecture

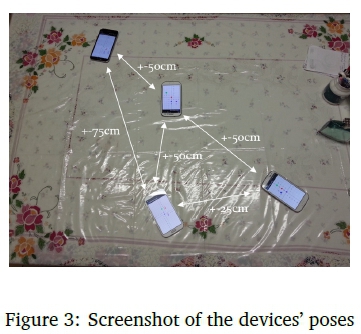

MotionShare was designed as an Android application to support information sharing among co-located devices and, therefore, a client-server architecture was required (Baotic, 2014). There are two roles in MotionShare, namely server and client. Any device can act as a server and the other devices serve as clients. The server facilitates the communication of the relative positions (poses) of the devices between the clients and itself using Wi-Fi. The server's purpose is to create a private and secure Wi-Fi hotspot, whereby clients can join the password-protected network. The server can determine the network service set identifier (SSID) and the password. The client requires the input of the network SSID and the encrypted password. All of the devices are able to send and receive information. Figure 3 illustrates the poses of several devices that are randomly placed on a table.

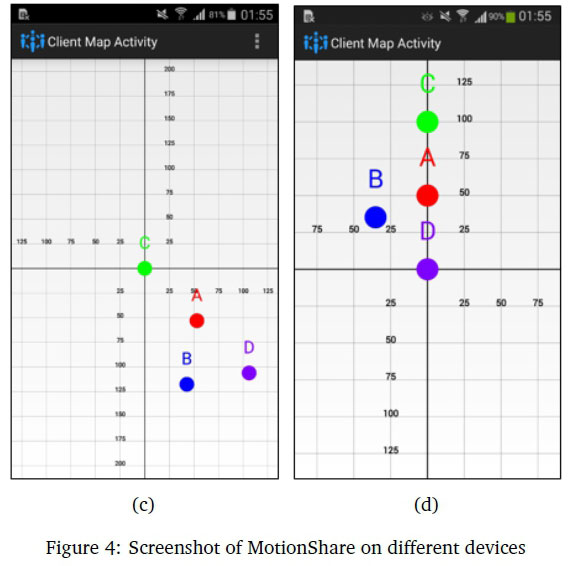

Figure 4 shows the screenshots of each device screen relative to its pose. Device A (server) was pointed in the direction of Device B (Figure 4(a)). Device B (client) was pointed towards Device C (Figure 4(b)). Figure 4(c) shows what map is displayed on Device C as it is facing away from the other devices in the environment. Device D was oriented in the direction of Devices A and C, with Device B positioned to the left of Device D (Figure 4(d)). Each device is represented by a uniquely coloured dot and labelled with the device name. The dot displayed in the centre of the map represents the pose of the subject device, with the other devices displayed around it.

4.2 Gesture design

Focus groups were conducted to determine the most appropriate NUI gestures to utilise the pose information that was determined (Section 4.1). These gestures were used for information sharing among co-located mobile devices.

4.2.1 Procedure

Two focus groups of four participants each were seated around a table. Participants were selected based on purposive sampling to identify a representative sample, which represented the same population to be used in user evaluation (Maxwell, 2012). Each group was limited to four participants due to the availability of participants, mobile devices, and venue size. All eight participants were experienced mobile device users (more than seven years), and six participants (75.00%) used mobile devices for 5-6 hours on a daily basis while the remaining two (25.00%) used them for 3-4 hours. The age distribution among the participants showed 25.00% (n = 2) and 75.00% (n = 6) belonged to the 18-21 and 22-30 years categories respectively. Seven participants were Android users (87.50%) and only one was an iOS user (12.50%).

The placement of the participants was to simulate an actual co-located environment, whereby individuals would gather for a formal or social meeting. The moderator presented several questions to the focus groups:

1. Single Select: What action would you perform to select a file?

2. Multiple Select: What action would you perform to select multiple files?

3. Single Share: What action would you perform to share a file with another mobile device?

4. Multiple Share: What action would you perform to share a file with multiple devices?

5. Initiate: What action would you perform to initiate a file transfer?

6. Accept: What action would you perform to accept a file transfer?

7. Reject: What action would you perform to reject a file transfer?

8. Cancel: What action would you perform to cancel a file transfer?

4.2.2 Results

The moderator analysed and categorised the results from the two focus groups according to the questions presented (Section 4.2.1). Five participants indicated a long press action to initiate the file selection process, whereas the remaining three participants suggested a long press with a hold and drag action of the selected file(s) into a basket icon representation of the recipient (Single Select).

The second question posed to the focus groups was related to multiple file selection. The same five participants indicated a long press to initiate the selection process, after which a single touch was required to select other files. The remaining three participants suggested a custom touch gesture be drawn on the device screen to invoke a system response of selecting all the files.

The Single Share question received mixed participant responses. Four participants suggested a swipe or flick gesture, three participants recommended pointing the device towards the recipient, and one participant suggested a throw and catch concept. The throw and catch concept was based on the Bump application, which was recently discontinued by Google (Hockenson, 2014).

Regarding Multiple Share (information sharing among multiple devices), six participants preferred a single action for all tasks and recommended moving the device in an arc-like manner or shape to select intended recipients. These six participants also suggested an alternative option, which was the drawing of a custom gesture, for example, an arc to highlight and select the intended recipients. The remaining two participants suggested multiple swiping or flicking action for recipients.

Regarding initiating a file transfer, five participants were accustomed to simply selecting a "Share" button. The remaining three participants suggested pointing the device and tilting it towards the intended recipient. These three participants felt this gesture was similar to handing a document over to another individual.

Six participants preferred a simple dialog box containing a "Yes" and "No" button to either accept or reject a file transfer. Two participants suggested tilting the device towards themselves to accept a transfer. These two participants also suggested an action of swinging the device like a pendulum to indicate a "No" action, similar to an individual shaking their head. When the question was asked on cancelling a file transfer ("Cancel"), all the participants mentioned that a cross icon would suffice.

4.2.3 Design Implications

An analysis and comparison was conducted between the existing systems identified from the literature study and the results obtained from the two focus groups. Table 6 shows the comparison between the literature study and results of the focus groups. This table also presents the decision made on the interaction techniques to be implemented in MotionShare.

Single select and multiple select were implemented as a long press to initiate the file selection process, followed by a single touch for multiple files. The decision on sharing with a single device (single share), was to allow the user to point the device towards the recipient.

Multiple device sharing (multiple share) was implemented using a point gesture, which allowed users to draw a custom gesture on the device screen to select recipients. The second option for multiple device sharing allowed for selective multiple sharing, as the user can decide which recipients to select. Figure 5 shows a screenshot of a custom gesture being used to select multiple recipients.

A "Share" button was implemented to initiate the file transfer using the device pointed at the recipient. Initiating file transfer occurs when the user lifts their finger up from the device screen. Accepting or rejecting the file transfer was removed, as existing systems showed that devices that had joined a private network had already made the decision to share files. A constant request notification to receive files can become annoying and tedious. The "Cancel" button was also removed as the files were automatically accepted. Two NUI gestures, namely point and touch, were incorporated into MotionShare to support information sharing among co-located mobile devices.

5 EVALUATION

This section discusses the research design and the results of the evaluation of MotionShare. The analytical evaluation investigated the accuracy of the device positioning and the gesture recognition. The results of the usability evaluation are then presented.

5.1 Analytical evaluation

An analytical evaluation technique was selected as the most suitable technique to determine the accuracy and precision of the device positioning and gestures of MotionShare.

5.1.1 Design

Device positioning and MotionShare gestures were subjected to an analytical evaluation. Using the same evaluation environment allowed the recall, trueness, and precision metrics to be measured and compared across various scenarios for device positioning and MotionShare gestures.

Each of these metrics is defined in terms of the following variables (Sokolova & Lapalme, 2009):

true positives (tp) are the number of positive class examples correctly classified as positive;

true negatives (tn) are the number of negative class examples correctly classified as negative;

false positives (fp) are the number of negative class examples incorrectly classified as positive; and

false negatives (fn) are the number of positive class examples incorrectly classified as negative.

Recall, also referred to as sensitivity or the true positive rate (TPR), measures how effectively a classifier identifies positive examples: it is the proportion of actual positives that are classified as positive, tp / (tp + fn) (Sokolova & Lapalme, 2009). Recall is determined with Equation 1.

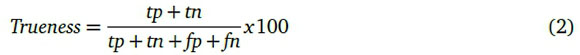

The ISO 5725-1 standard defines trueness (International Standards Organisation, 1994), and the definition is stated more clearly by ASTM (NDT Resource Center, 2018) as the "closeness of agreement between the average value obtained from a large series of test results and the true value". Trueness is determined using Equation 2.

The ISO 5725-1 standard defines precision as the "closeness of agreement among a set of results" (International Standards Organisation, 1994). Precision is determined with Equation 3.
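The three metrics can be illustrated with a minimal Python sketch. Recall and precision follow the standard confusion-matrix definitions given by Sokolova and Lapalme (2009). The form used for trueness here, (tp + tn) / (tp + tn + fp + fn), is an assumption made for illustration only, since Equation 2 is not reproduced in the text. The `ConfusionCounts` container and the example counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for the four confusion-matrix counts."""
    tp: int  # positive examples classified as positive
    tn: int  # negative examples classified as negative
    fp: int  # negative examples classified as positive
    fn: int  # positive examples classified as negative


def recall(c: ConfusionCounts) -> float:
    """Proportion of actual positives that were classified as positive."""
    return c.tp / (c.tp + c.fn)


def precision(c: ConfusionCounts) -> float:
    """Proportion of positive classifications that were correct."""
    return c.tp / (c.tp + c.fp)


def trueness(c: ConfusionCounts) -> float:
    """ASSUMPTION: trueness taken as the share of all classifications that were correct."""
    return (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn)


# Made-up counts, not taken from the evaluation.
counts = ConfusionCounts(tp=90, tn=80, fp=10, fn=20)
print(f"recall={recall(counts):.2%} precision={precision(counts):.2%} "
      f"trueness={trueness(counts):.2%}")
```

Keeping the four counts in one structure makes it explicit that all three metrics are derived from the same test run.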

The mobile devices were placed in various combinational layouts and at different distance increments to simulate a co-located environment. These scenarios allowed for the analytical evaluation to assess the accuracy and precision of the device positioning. This procedure was also repeated for MotionShare gestures.

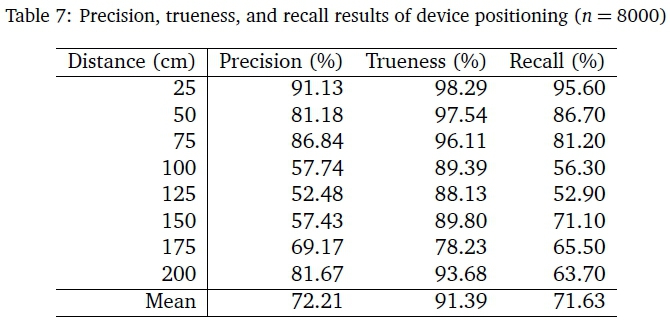

5.1.2 Results

Table 7 presents the individual precision, trueness and recall results at the different distance increments as percentages. A total of 8000 tests were performed across all distance increments (1000 for each increment shown in the table). The 25cm distance had the highest precision (91.13%) among the tested distances, which suggests that MotionShare was most precise at this distance. The lowest precision was 52.48% at the 125cm distance, which suggests that external variables affected the Bluetooth RSSI. MotionShare had an average precision of 72.21%. The highest trueness was at the 25cm distance (98.29%), closely followed by 50cm with 97.54%. The lowest trueness was at 175cm with 78.23%. The average trueness of MotionShare was 91.39%.

The third metric measured for device positioning was recall. The highest recall was at 25cm with 95.60%. The lowest recall was at 125cm with 52.90%. These results showed that MotionShare struggled at this distance, which was unexpected, as the greater distances of 150cm (71.10%), 175cm (65.50%), and 200cm (63.70%) all had considerably higher recall. The lower recall values could be attributed to external variables affecting the Bluetooth RSSI, which caused MotionShare to misclassify the distances between devices. The average recall for device positioning was 71.63%.

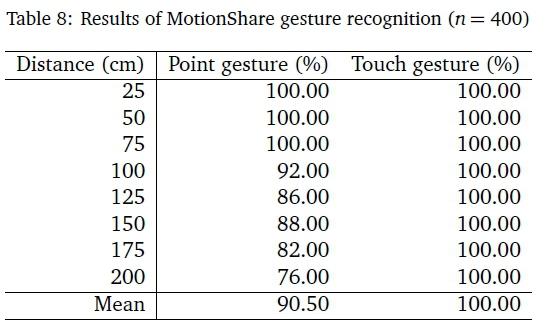

Mobile devices were placed at known distance increments to evaluate the accuracy of the MotionShare gestures. The touch gestures had the highest mean recall value (100.00%), and the point gestures had a mean value of 90.50% (n = 362). The point and touch gestures both experienced no recall issues at 25cm, 50cm, and 75cm distance increments, as the recall was 100.00% (n = 50). Point gestures had a recall of 92.00% (n = 46) at 100cm, and 86.00% (n = 43) at 125cm. Performing a point gesture when the devices were located 150cm apart resulted in an 88.00% recall (n = 44), as compared with a recall of 82.00% (n = 41) at 175cm. MotionShare struggled to recognise the orientation of the devices at 200cm distance with a recall of 76.00% (n = 38).

The touch gesture had a 100.00% recall (n = 400) because the gesture involved highlighting the device dots on the screen. This gesture was not affected by external variables other than when computing the positions of the different devices. Point gestures had an average recall value of 90.50% (n = 362), because the devices were placed in areas of the environment where external variables could affect the sensors. Table 8 presents the results of the accuracy of the MotionShare gestures.
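The point gesture figures reported above can be cross-checked with a short Python sketch. The success counts per distance are the n values quoted in the text. The figure of 50 trials per distance is inferred from the 100.00% recall at n = 50 and the touch total of n = 400, and should be read as an assumption.

```python
# Trials per distance increment (inferred from the reported figures, see lead-in).
TRIALS_PER_DISTANCE = 50

# Successful point gestures per distance in cm, as reported in the text.
point_successes = {25: 50, 50: 50, 75: 50, 100: 46, 125: 43, 150: 44, 175: 41, 200: 38}


def recall_percent(successes: int, trials: int) -> float:
    """Recall expressed as a percentage of the trials performed."""
    return 100.0 * successes / trials


per_distance = {
    distance: recall_percent(successes, TRIALS_PER_DISTANCE)
    for distance, successes in point_successes.items()
}
total_successes = sum(point_successes.values())
total_trials = TRIALS_PER_DISTANCE * len(point_successes)
overall_recall = recall_percent(total_successes, total_trials)

for distance, value in per_distance.items():
    print(f"{distance}cm: {value:.2f}%")
print(f"overall: {overall_recall:.2f}% (n = {total_successes})")
```

The overall value is the pooled recall over all 400 trials, which matches the reported mean because every distance has the same number of trials.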

5.2 Empirical evaluation

A usability evaluation of MotionShare was conducted to identify any usability issues and to determine which gestures the users preferred.

5.2.1 Design

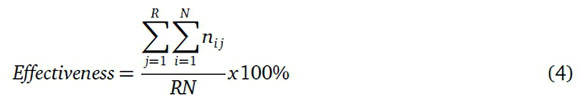

The usability evaluation comprised a representative sample of 32 participants, who had prior mobile phone expertise. Previous studies have shown that 10-12 participants is the ideal number for a usability study. This range can detect 80.00% of the possible usability issues of the evaluated system (Lewis, 1995). The evaluation metrics captured were effectiveness, efficiency and satisfaction.

Effectiveness is universally considered to be the fundamental usability metric (Sergeev, 2010). This metric was measured according to the participants' ability to complete the task, which is used to determine the task completion rate. Participants were assigned a binary value of 1 for task success or 0 for task failure. Effectiveness was determined with Equation 4, where N was the number of tasks, R was the number of participants, and nij was the result of whether task i by participant j was successfully completed (nij = 1) or failed to complete (nij = 0).

Efficiency was measured by the participants' time taken to complete each task (Albert & Tullis, 2013). Task time was calculated by subtracting the start time from the end time, as shown in Equation 5.

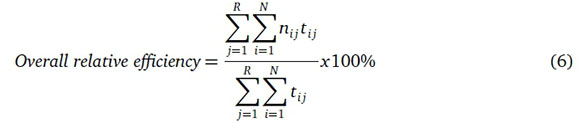
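The effectiveness and task time definitions can be sketched in Python as follows. The sketch assumes that Equation 4 is the usual completion rate, namely the sum of all nij divided by R × N and expressed as a percentage, which is consistent with the variable definitions above but is not confirmed by the text. The function names and the example data are hypothetical.

```python
from datetime import datetime


def task_time_seconds(start: datetime, end: datetime) -> float:
    """Task time as the end time minus the start time, in seconds."""
    return (end - start).total_seconds()


def effectiveness_percent(results: list[list[int]]) -> float:
    """Completion rate over all participants and tasks.

    results[j][i] is 1 if participant j completed task i, otherwise 0.
    ASSUMPTION: Equation 4 is the sum of n_ij over R * N, times 100.
    """
    successes = sum(sum(row) for row in results)
    attempts = sum(len(row) for row in results)
    return 100.0 * successes / attempts


# Made-up data: 3 participants (rows) by 2 tasks (columns).
example_results = [[1, 1], [1, 0], [1, 1]]
example_effectiveness = effectiveness_percent(example_results)

example_duration = task_time_seconds(
    datetime(2018, 1, 1, 10, 0, 0), datetime(2018, 1, 1, 10, 0, 43)
)
print(f"effectiveness: {example_effectiveness:.2f}%, task time: {example_duration:.0f}s")
```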

The overall relative efficiency was the ratio of the time taken by participants who completed the task to the total time taken by all participants. This was determined with Equation 6, where the variables N, R, and nij were the same as in Equation 4, and tij was the time spent by participant j on task i, even if the task was not successfully completed, in which case time was recorded until the participant decided to quit the task.

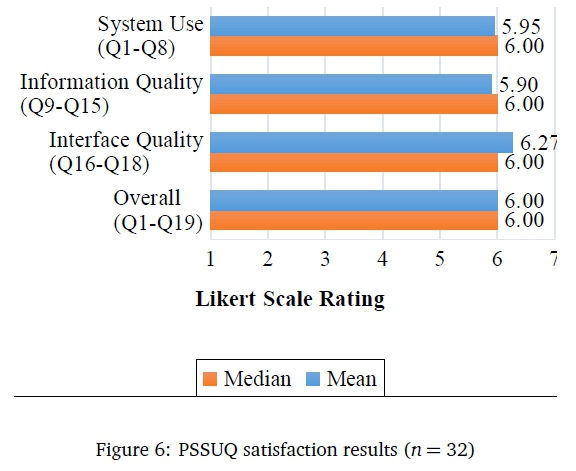
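A minimal Python sketch of the overall relative efficiency follows. It implements the ratio described above: time spent on successfully completed attempts divided by the time spent on all attempts, expressed as a percentage. The function name and the example values are hypothetical.

```python
def overall_relative_efficiency(
    success: list[list[int]], times: list[list[float]]
) -> float:
    """Share of the total task time that was spent on successful attempts.

    success[j][i] is 1 if participant j completed task i, otherwise 0.
    times[j][i] is the time participant j spent on task i, including
    the time spent before quitting an unsuccessful attempt.
    """
    productive_time = sum(
        n * t
        for success_row, time_row in zip(success, times)
        for n, t in zip(success_row, time_row)
    )
    total_time = sum(t for time_row in times for t in time_row)
    return 100.0 * productive_time / total_time


# Made-up data: 2 participants by 2 tasks, times in seconds.
example_success = [[1, 0], [1, 1]]
example_times = [[30.0, 60.0], [40.0, 20.0]]
example_efficiency = overall_relative_efficiency(example_success, example_times)
print(f"overall relative efficiency: {example_efficiency:.2f}%")
```

In this example one failed attempt of 60 seconds out of 150 seconds in total lowers the efficiency to 60%, although three of the four attempts succeeded.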

Satisfaction was the user acceptance and comfort levels experienced by participants. This metric was measured using the Post Study System Usability Questionnaire (PSSUQ), which was designed to perform an overall assessment of the usability of a system at the end of a usability evaluation (Lewis, 1995). The PSSUQ comprised 19 statements and was analysed by calculating a mean value for each statement on the 7-point Likert-type scale of "Strongly Disagree" to "Strongly Agree". The mean scores of the 7-point Likert-type scale were classified according to the following ranges:

disagree: 1.00 ≤ x < 3.57

neutral: 3.57 ≤ x ≤ 4.42

agree: 4.42 < x ≤ 7.00

Equation 7 determined the mean satisfaction, where R is the number of participant responses, Q is the number of statements in the PSSUQ, and pij is the weight of the answer (1-7) for scenario i and participant j.
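The mean satisfaction and its classification can be sketched in Python as follows. The sketch assumes that Equation 7 is the arithmetic mean of all answer weights over the R × Q answers, which is consistent with the variables described but is not shown in the text. The thresholds are the three ranges listed above, and the function names are hypothetical.

```python
def mean_satisfaction(responses: list[list[int]]) -> float:
    """Arithmetic mean of all answer weights (1-7).

    responses[j][i] is the answer of participant j to statement i.
    ASSUMPTION: Equation 7 averages over all R * Q answers.
    """
    total = sum(sum(row) for row in responses)
    count = sum(len(row) for row in responses)
    return total / count


def classify(mean_score: float) -> str:
    """Map a mean score on the 7-point scale to disagree, neutral or agree."""
    if not 1.0 <= mean_score <= 7.0:
        raise ValueError("mean score must lie between 1.00 and 7.00")
    if mean_score < 3.57:
        return "disagree"
    if mean_score <= 4.42:
        return "neutral"
    return "agree"


# Made-up answers: 2 participants by 2 statements.
example_mean = mean_satisfaction([[6, 7], [5, 6]])
print(example_mean, classify(example_mean))
```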

Each group, which comprised four participants, was given a list of tasks to perform. Each group was instructed to work together to complete the task list, which involved two roles, namely sender and receiver. Seven user tasks were presented to the participants, ensuring comprehensive coverage of the functionality of MotionShare.

These tasks comprised the following: Single Point (S1), Multiple Point (S2), Single Touch (S3), Multiple Touch (S4), and three Receive (R1-R3) tasks. Counterbalancing was used to eliminate the learnability effect by changing the order in which the gestures were used. The mobile devices were randomly placed around a table to simulate a co-located environment.

The gender composition of all participants was 25 males and 7 females. The age distribution comprised ten participants in the 18-21 age category and 22 in the 22-30 age category. The number of hours spent on mobile devices was fairly evenly distributed, as 4 participants indicated 1-2 hours per week, 9 indicated 4 hours, 11 indicated 5-6 hours, and 8 indicated 7+ hours. Most of the participants were Android users (n = 28), and only four were iOS users.

5.2.2 Results

Nearly all the user tasks were successful, with the exception of three tasks (S1, R1, and R3) that had 95.00% (n = 30) success. These three tasks had a rare occurrence of files not displaying in the receiver's file list. The duration for each task was determined, which included reading the instructions and answering the task question. The touch gestures were less time consuming to complete than point gestures. The mean times for S1 (Single Point) and S2 (Multiple Point) were 43.30 and 44.45 seconds respectively, while the mean times for S3 (Single Touch) and S4 (Multiple Touch) were 35.00 and 34.04 seconds respectively. The mean Receive times were fairly consistent, but showed a downward trend (R1=24.00, R2=18.35, R3=15.95). The user satisfaction results obtained from using the PSSUQ were classified into the following categories (Devasena, 2013; Markham, 2014; Aviv et al., 2012):

System use: mean of the participants' scores in statements 1-8

Information quality: mean of the participants' scores in statements 9-15

Interface quality: mean of the participants' scores in statements 16-18

Overall: mean of the participants' scores in statements 1-19
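The grouping of PSSUQ statements into these categories can be sketched in Python as follows. The statement ranges are those listed above. The layout of the response data (one list of 19 scores per participant) and the example scores are assumptions made for illustration.

```python
# Statement numbers (1-based) belonging to each PSSUQ category.
PSSUQ_CATEGORIES = {
    "System use": range(1, 9),            # statements 1-8
    "Information quality": range(9, 16),  # statements 9-15
    "Interface quality": range(16, 19),   # statements 16-18
    "Overall": range(1, 20),              # statements 1-19
}


def category_means(responses: list[list[int]]) -> dict[str, float]:
    """Mean score per category over all participants.

    responses[j][k - 1] is the score (1-7) of participant j for statement k.
    """
    means = {}
    for name, statements in PSSUQ_CATEGORIES.items():
        scores = [row[k - 1] for row in responses for k in statements]
        means[name] = sum(scores) / len(scores)
    return means


# Made-up scores for two identical participants.
one_participant = [6] * 8 + [5] * 7 + [7] * 3 + [6]
example_means = category_means([one_participant, one_participant])
for name, value in example_means.items():
    print(f"{name}: {value:.2f}")
```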

The mean of System Use was 5.95 (using a 7-point Likert-type scale). The other two categories (Interface Quality and Overall) also had high mean values of 6.27 and 6.00 respectively. The Information Quality had the lowest mean (μ = 5.90), which suggested that the information provided by the application could be improved. The median values for all PSSUQ categories were the same (median = 6.00). The standard deviations of these categories were all low (0.79 ≤ s ≤ 1.11). Figure 6 presents the feedback from the PSSUQ classified according to these categories. Ninety-five percent confidence intervals are indicated for the mean values.

Thematic analysis was used to analyse the data obtained from the Overview Section in the PSSUQ. A frequency count (f) and a percentage were computed for each theme. The strongest positive theme was gesture recognition (f = 29), with 90.63% of participants stating "Intuitive ability to share data wirelessly by pointing the device to the intended target". This theme was followed by ease of use (f = 23), with 71.88% commenting "The system was easy to use". Twenty participants (62.50%) stated that "Clear indication of devices on the map allowed for tasks to be completed". Eighteen (56.25%) stated that "Map of devices is simple to understand". Fifty percent of the participants (f = 16) stated the "Compass is very responsive and accurate". Thirteen participants (40.63%) stated that "The system is intuitive".
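The percentages reported for each theme follow directly from the frequency counts and the 32 participants, as the following Python sketch shows. The theme labels are taken from the discussion of these results in Section 5.3. Rounding half up with the `decimal` module is a choice made here so that 29/32 = 90.625% is shown as 90.63%; the rounding rule used in the study is not stated.

```python
from decimal import Decimal, ROUND_HALF_UP

PARTICIPANTS = 32

# Frequency count (f) per positive theme, as reported in the text.
theme_counts = {
    "Gesture recognition": 29,
    "Ease of use": 23,
    "Effectiveness": 20,
    "Map of devices": 18,
    "Compass orientation functionality": 16,
    "Learnability": 13,
}


def percent(frequency: int, total: int) -> Decimal:
    """Percentage of participants, rounded half up to two decimal places."""
    value = Decimal(frequency) * 100 / Decimal(total)
    return value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


theme_percentages = {
    theme: percent(count, PARTICIPANTS) for theme, count in theme_counts.items()
}
for theme, value in theme_percentages.items():
    print(f"{theme}: f = {theme_counts[theme]}, {value}%")
```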

Several additional comments were made by the participants. Twenty-seven participants (84.38%) commented on user experience, stating "Overall functionality of the application and accuracy of the calibration used in sharing the files is impressive". This was closely followed by gesture recognition (78.13%), where 25 participants stated "Touch gesture for selective recipient sharing is cool". The potential of MotionShare was highlighted (f = 17) by 53.13% of participants, who stated "A promising application with potential applications of commercial use".

A Post-Test questionnaire was presented to determine which NUI technique the participants preferred and why. Figure 7 displays the satisfaction results of the post-test questionnaires. Ninety-five percent confidence intervals are shown for the mean values. The participants preferred the touch gesture as an intuitive method of information sharing with co-located mobile devices (μ = 5.75), as opposed to pointing the device in the direction of the intended recipients. The point gesture was only preferred by five participants (15.63%). The participants felt that the touch gesture was slightly more complex than the point gesture (μ = 4.13). Twenty-six participants (81.25%) found the touch gesture to be easier to use (μ = 4.38), with the remaining six (18.75%) preferring the point gesture. Participants indicated a slight preference towards pointing (μ = 3.22) because they believed that pointing the device in the direction of the intended recipients was more intuitive than the touch gesture.

Participants thought individuals would learn to use the touch gesture more quickly than the point gesture, by a slight margin (μ = 4.13). Participants were equally divided (μ = 4.03) on which technique was more cumbersome, with 20 of them (62.50%) remaining neutral. Participant confidence in using the touch gesture (μ = 5.59 and f = 26) was a clear indication that they felt more in control with this technique. The low standard deviations for each question indicated that the participants had similar views on both NUI techniques.

5.3 Discussion

Participants felt the usability of MotionShare was good (Zhang & Sawchuk, 2012), as evident from the usability results (86.00% overall rating). User satisfaction results were high, as the participants rated MotionShare with a mean value of 5.87 in the PSSUQ. The key themes in the positive qualitative feedback included:

Gesture recognition (90.63%)

Ease of use (71.88%)

Effectiveness (62.50%)

Map of devices (56.25%)

Compass orientation functionality (50.00%)

Learnability (40.63%)

These themes were identified from the participants' comments, with the strongest theme being gesture recognition (90.63%). This theme showed that the participants were highly satisfied with the support of the NUI interaction techniques for the information sharing process. This paper identified that existing mobile applications do not determine the relative pose of co-located devices. This pose information is required as it ensures that the most appropriate NUI interaction techniques can be effectively utilised. Indoor positioning for mobile devices is a complex field and remains inaccurate and highly volatile.

The instability of indoor positioning information is a result of external variables, which influence the data collected by the various sensors embedded in mobile devices. Indoor positioning typically requires the use of external hardware, such as dongles attached to the device or cameras placed throughout an environment, to determine the relative positions of the devices. Without additional hardware to assist in device positioning, the issue becomes even more challenging.

Certain environments were shown to adversely affect the operation of MotionShare, which resulted in inaccurate initial calibrations of the device positions. The sensors embedded in mobile devices are highly inaccurate and can be easily affected by external variables. It is possible that, with the rapid development of mobile devices, embedded sensors will become more accurate and stable in the future. The results of the evaluations showed MotionShare's ability to accurately determine the relative pose of the co-located mobile devices without the requirement of additional hardware. This pose information is utilised in MotionShare to facilitate information sharing, not only in an indoor but also in an outdoor environment. Evaluating MotionShare in an outdoor environment is a direction for future research, because the pose information is less likely to be affected by environmental variables that exist only within an indoor environment, such as the structure of the building.

6 CONCLUSION

Existing file sharing technologies and systems are not able to determine the relative position of mobile devices in a co-located environment without the assistance of external hardware, such as cameras and beacons. This paper has discussed the design of MotionShare, an NUI mobile application, which is able to determine the relative position of mobile devices in a co-located environment, and use NUI gestures to support file sharing. The evaluation of MotionShare showed that the device positioning and gesture recognition were highly accurate and that the usability of the gestures was rated very highly.

Some issues still remain to be solved. Firstly, external factors were shown to have a negative influence on the Bluetooth RSSI values and the data captured by the motion and position sensors. Secondly, the calibration process is time consuming and needs to be streamlined. Finally, additional NUI gestures should be investigated to determine if their usability is better than the point and touch gestures implemented.

References

Agrawal, S., Constandache, I., Gaonkar, S., Roy Choudhury, R., Caves, K. & DeRuyter, F. (2011). Using mobile phones to write in air. In Proceedings of the 9th International Conference on Mobile Systems, Applications, and Services (pp. 15-28). ACM. https://doi.org/10.1145/1999995.1999998

Albert, W. & Tullis, T. (2013). Measuring the user experience: Collecting, analyzing, and presenting usability metrics. Newnes.

Anmobi, Inc. (2015). Xender-The mobile file transferring application. Last accessed 19 Oct 2018. Retrieved from https://www.xender.com/index.html

Apple, Inc. (2018). iBeacon-Apple Developer. Last accessed 19 Oct 2018. Retrieved from https://developer.apple.com/ibeacon/

ASUSTeK Computer Inc. (2015). Share Link-File transfer. Last accessed 23 November 2018. Retrieved from https://apkpure.com/share-link-%C3%A2%C2%80%C2%93-file-transfer/com.asus.sharerim

Aviv, A. J., Sapp, B., Blaze, M. & Smith, J. M. (2012). Practicality of accelerometer side channels on smartphones. In Proceedings of the 28th Annual Computer Security Applications Conference (pp. 41-50). ACM. https://doi.org/10.1145/2420950.2420957

Baotic, A. (2014). Simple server-client syncing for mobile apps using Couchbase Mobile-Infinum. Last accessed 19 Oct 2018. Retrieved from https://infinum.co/the-capsized-eight/server-client-syncing-for-mobile-apps-using-couchbase-mobile

Billinghurst, M., Piumsomboon, T. & Bai, H. (2014). Hands in space: Gesture interaction with augmented-reality interfaces. IEEE Computer Graphics and Applications, 34(1), 77-80. https://doi.org/10.1109/MCG.2014.8

Blake, J. (2013). Natural user interfaces in .NET. Manning Publications.

Bratitsis, T. & Kandroudi, M. (2014). Motion sensor technologies in education. EAI Endorsed Transactions on Serious Games, 1(2), e6. https://doi.org/10.4108/sg.1.2.e6

Britton, A., Setchi, R. & Marsh, A. (2013). Intuitive interaction with multifunctional mobile interfaces. Journal of King Saud University - Computer and Information Sciences, 25(2), 187-196. https://doi.org/10.1016/j.jksuci.2012.11.002

Chen, X. A., Schwarz, J., Harrison, C., Mankoff, J. & Hudson, S. E. (2014). Air+ touch: Interweaving touch & in-air gestures. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology (pp. 519-525). ACM. https://doi.org/10.1145/2642918.2647392

Chen, K.-Y., Ashbrook, D., Goel, M., Lee, S.-H. & Patel, S. (2014). AirLink: Sharing files between multiple devices using in-air gestures. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing (pp. 565-569). ACM. https://doi.org/10.1145/2642918.2647392

Devasena, C. L. (2013). Classification of incomplete multivariate datasets using memory based classifiers-A proficiency evaluation. International Journal of Advanced Trends in Computer Science and Engineering, 2(1), 148-153.

DewMobile. (2016). Zapya-The cross platform file sharing app used all over the world. Last accessed 19 Oct 2018. Retrieved from https://www.izapya.com

Dingler, T., Funk, M. & Alt, F. (2015). Interaction proxemics: Combining physical spaces for seamless gesture interaction. In Proceedings of the 4th International Symposium on Pervasive Displays (pp. 107-114). ACM. https://doi.org/10.1145/2757710.2757722

Estimote, Inc. (2018). Estimote, Inc.-indoor location with Bluetooth beacons and mesh. Last accessed 19 Oct 2018. Retrieved from https://estimote.com

FeePerfect. (2015). Feem-Wi-Fi file transfers between phones, tablets and PCs. Last accessed 19 Oct 2018. Retrieved from https://tryfeem.com/

Heikkinen, K. & Porras, J. (2013). UIs - Past, present and future. Last accessed 19 Oct 2018. Retrieved from https://www.wwrf.ch/files/wwrf/content/files/publications/outlook/WWRF_outlook_10.pdf

Hightower, J. & Borriello, G. (2001). Location systems for ubiquitous computing. Computer, 34(8), 57-66. https://doi.org/10.1109/2.940014

Hockenson, L. (2014). Gigaom-Discontinuing soon, Bump is the latest in Google's acquisition graveyard. Last accessed 19 Oct 2018. Retrieved from https://gigaom.com/2014/01/01/discontinuing-soon-bump-is-the-latest-in-googles-acquisition-graveyard/

Hollinworth, N. & Hwang, F. (2011). Investigating familiar interactions to help older adults learn computer applications more easily. In Proceedings of the 25th BCS Conference on Human Computer Interaction (pp. 473-478). British Computer Society.

Ilic, S. (2010). Tracking and detection in computer vision: Camera models and pose estimation. Last accessed 23 November 2018. Retrieved from http://campar.in.tum.de/Chair/TeachingWs17TDCV

International Standards Organisation. (1994). ISO 5725-1:1994(en), Accuracy (trueness and precision) of measurement methods and results-Part 1: General principles and definitions. Last accessed 19 Oct 2018. Retrieved from https://www.iso.org/obp/ui/#iso:std:iso:5725:-1:ed-1:v1:en

Kahai, S. (2008). Leading in face-to-face versus virtual teams. Last accessed 19 Oct 2018. Retrieved from https://www.leadingvirtually.com/leading-in-face-to-face-versus-virtual-teams/

Kratz, S., Rohs, M. & Essl, G. (2013). Combining acceleration and gyroscope data for motion gesture recognition using classifiers with dimensionality constraints. In Proceedings of the 2013 International Conference on Intelligent User Interfaces (pp. 173-178). ACM. https://doi.org/10.1145/2449396.2449419

Lee Son, T., Wesson, J. & Vogts, D. (2016). A natural user interface to facilitate information sharing among mobile devices in a co-located environment. In Proceedings of the Annual Conference of the South African Institute of Computer Scientists and Information Technologists (pp. 20:1-20:9). SAICSIT '16. Johannesburg, South Africa: ACM. https://doi.org/10.1145/2987491.2987533

Lee, Y. J. (2014). Android sensor signal processing using acceleration sensor for smart phone. In ASTL 2014: Proceedings of the International Conference on Advanced Computer and Information Technology (Vol. 51, pp. 188-191).

Lewis, J. R. (1995). IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. International Journal of Human-Computer Interaction, 7(1), 57-78.

LiveQoS. (2015). SuperBeam: Quick and easy file sharing. Last accessed. Retrieved from https://superbe.am

Lu, H. & Li, Y. (2015). Gesture on: Enabling always-on touch gestures for fast mobile access from the device standby mode. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 3355-3364). ACM. https://doi.org/10.1145/2449396.2449419

Markham, K. (2014). Simple guide to confusion matrix terminology. Last accessed 19 Oct 2018. Retrieved from https://www.dataschool.io/simple-guide-to-confusion-matrix-terminology/

Maxwell, J. (2012). Qualitative research design: An interactive approach. SAGE Publications.

Mesmer-Magnus, J. R. & DeChurch, L. A. (2009). Information sharing and team performance: A meta-analysis. Journal of Applied Psychology, 94(2), 535.

NDT Resource Center. (2018). Accuracy, Error, Precision, and Uncertainty. Last accessed 23 November 2018. Retrieved from https://www.nde-ed.org/GeneralResources/Uncertainty/UncertaintyTerms.htm

Oh, J., Robinson, H. R. & Lee, J. Y. (2013). Page flipping vs. clicking: The impact of naturally mapped interaction technique on user learning and attitudes. Computers in Human Behavior, 29(4), 1334-1341. https://doi.org/10.1016/j.chb.2013.01.011

Peffers, K., Tuunanen, T., Rothenberger, M. A. & Chatterjee, S. (2007). A design science research methodology for information systems research. Journal of Management Information Systems, 24(3), 45-77. https://doi.org/10.2753/MIS0742-1222240302

Seifert, J., Simeone, A., Schmidt, D., Holleis, P., Reinartz, C., Wagner, M., ... Rukzio, E. (2012). MobiSurf: Improving co-located collaboration through integrating mobile devices and interactive surfaces. In Proceedings of the 2012 ACM international conference on Interactive tabletops and surfaces (pp. 51-60). ACM.

Seow, S. C., Wixon, D., Morrison, A. & Jacucci, G. (2010). Natural user interfaces: The prospect and challenge of touch and gestural computing. In CHI'10 Extended Abstracts on Human Factors in Computing Systems (pp. 4453-4456). ACM.

Sergeev, A. (2010). UI Designer-ISO-9241 Effectiveness metrics-Theory of usability. Last accessed 19 Oct 2018. Retrieved from http://ui-designer.net/usability/effectiveness.htm

Singleton, A. (2014). Distributed teams: Co-location, outsourced, distributed. Last accessed 19 Oct 2018. Retrieved from https://www.continuousagile.com/unblock/team_options.html

Sokolova, M. & Lapalme, G. (2009). A systematic analysis of performance measures for classification tasks. Information Processing & Management, 45(4), 427-437.

Tavares, T. A., Medeiros, A., de Castro, R. & dos Anjos, E. (2013). The use of natural interaction to enrich the user experience in telemedicine systems. In International Conference on Human-Computer Interaction (pp. 220-224). Springer. https://doi.org/10.1007/978-3-642-39476-8_46

Thomason, J. & Wang, J. (2012). Exploring multi-dimensional data on mobile devices with single hand motion and orientation gestures. In Proceedings of the 14th International Conference on Human-Computer Interaction with Mobile Devices and Services Companion (pp. 173-176). ACM. https://doi.org/10.1145/2371664.2371702

Valli, A. (2008). The design of natural interaction. Multimedia Tools and Applications, 38(3), 295-305.

Wendt, T. (2013). Designing for transparency and the myth of the modern interface. Last accessed 19 Oct 2018. Retrieved from https://uxmag.com/articles/designing-for-transparency-and-the-myth-of-the-modern-interface

Wigdor, D. & Wixon, D. (2011). Brave NUI world: Designing natural user interfaces for touch and gesture. Elsevier.

Yao, J., Fernando, T. & Wang, H. (2012). A multi-touch natural user interface framework. In Systems and Informatics (ICSAI), 2012 International Conference on (pp. 499-504). IEEE. https://doi.org/10.1109/ICSAI.2012.6223046

Ydangle Apps. (2013). Welcome to Flick. Last accessed 19 Oct 2018. Retrieved from https://getflick.io

Zhang, M. & Sawchuk, A. A. (2012). A preliminary study of sensing appliance usage for human activity recognition using mobile magnetometer. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing (pp. 745-748). ACM. https://doi.org/10.1145/2370216.2370380

Received: 29 Nov 2016

Accepted: 17 Sep 2018

Available online: 15 Dec 2018