South African Journal of Occupational Therapy

On-line version ISSN 2310-3833

Print version ISSN 0038-2337

S. Afr. j. occup. ther. vol.50 n.2 Pretoria Aug. 2020

http://dx.doi.org/10.17159/2310-3833/2020/vol50no2

ARTICLES

The Content and Convergent Validity of Hirebright Cognitive Ability Test (H-CAT) as pre-employment measure in South Africa

Lizette Swanepoel (I); Yolanda Grobler (II); Susan De Klerk (III)

I. BRad (UP); B.OT (UP); M.OT (SU). http://orcid.org/0000-0002-6662-7071; Occupational Therapist, Private Practice

II. B.OT (UP); M.OT (SU). http://orcid.org/0000-0001-8912-5923; Occupational Therapist, Private Practice (Hirebright Pty. Ltd)

III. B.OT (SU); Dip. Hand Therapy (UP); M.OT (SU). http://orcid.org/0000-0001-7639-9319; Senior Lecturer, Division of Occupational Therapy, Department of Rehabilitation Sciences, Stellenbosch University

ABSTRACT

INTRODUCTION: Occupational therapists assess cognitive abilities, including executive functions, in adults in a variety of practice fields. In vocational rehabilitation, these assessments are performed to determine how impairment in cognitive ability affects occupational performance. Executive function is understood to comprise the cognitive processes associated with inhibitory control, working memory, cognitive flexibility, planning, reasoning and problem solving. The Hirebright Cognitive Ability Test (H-CAT), a measure of general cognitive ability developed for pre-employment screening, has not been validated for clinical use in South Africa.

METHODOLOGY: The aim of this study was two-fold: first, to evaluate the content validity of the H-CAT through content expert rating and item-objective congruence (IOC); and second, to evaluate the convergent validity of the H-CAT by correlating its scores with those of an existing measure, the Raven Standard Progressive Matrices (SPM), using retrospective data from the Hirebright database.

RESULTS: Item-objective congruence calculation indicated acceptable content validity (IOC values from 0.60 to 0.80) for the majority of the items (43/45) in the H-CAT. In a sample of N = 20, the correlation between Raven SPM raw scores and H-CAT raw scores (r = 0.89; p = 0.00) provided evidence of convergent validity.

Key words: general cognitive ability, executive function, content validity, convergent validity

INTRODUCTION

General Cognitive Ability (GCA), as originally defined by Spearman1 in 1904, is referred to interchangeably as logical reasoning, the ability to learn or process information, forge new insights, understand instructions, solve problems and discern meaning in confusion. In more recent literature, General Cognitive Ability is similarly defined as logical, verbal, numerical and spatial reasoning2, and as the ability to learn3,4. Schmidt3 states that any two or more specific cognitive aptitudes together, e.g., memory, problem solving, various reasoning skills and perceptual skills, can be classified as GCA. Psychologists often refer to GCA as intelligence, which may create confusion, as 'intelligence' refers to genetic potential, whereas GCA is believed by most to go beyond that3,5. General Cognitive Ability lies in using genetic potential to reason, to solve problems and to learn, abilities which fall under executive functioning as described by various authors6-9. Diamond6:136 describes executive function as a "family" of cognitive processes consisting of inhibitory control, working memory and cognitive flexibility, with planning, reasoning and problem solving as higher-level executive functions. The link between GCA and executive function is evident, as reasoning and problem solving, which form the foundation of GCA, are classified as higher-level executive functions6-10.

Occupational therapists are trained in the evaluation of cognitive abilities, and they perform these assessments in adults in a variety of practice fields (including vocational rehabilitation) to determine how impairment or aptitude in cognitive ability impacts occupational performance11. One cognitive ability that has been closely linked to occupational performance is executive function.

In relation to the field of work and employment, Bade stated that "executive functioning in the workplace refers to the interplay of intellect, perceptions and reasoning to perform day to day functions in the areas of problem solving, initiation, self-monitoring, multi-tasking, shifting between tasks, inhibition, abstract reasoning, planning and organization"7:389. She concluded that executive functioning, and more specifically, reasoning and problem solving, are "critical in all types of work as they are the basis of being able to perform complex tasks whether one is a CEO, a trades worker..."7:389. Regardless of whether reasoning and problem solving are labelled executive function or GCA, both aspects are critical components in job performance. In fact, executive function is considered a predictor of job performance and successful job training at all levels of employment2,4,12-14.

Studies by Ree and Earles15 and Schmidt3 indicate that specific cognitive abilities, on their own, are of little benefit in predicting job performance. These authors compared the predictive value of specific aptitudes or cognitive abilities with that of overall GCA, and found that the predictive validity of GCA is higher than that of any specific aptitude on its own. Schmidt3 further argues that the scientific evidence about GCA cannot be ignored, and emphasises that the value of GCA in predicting work performance is often overlooked in the field of industrial psychology. Sackett et al.16 reported that no predictive bias was found across the large number of studies showing that GCA predicts work performance, and concluded that the question of whether GCA predicts work performance can be regarded as scientifically settled.

General Cognitive Ability, or executive functioning, is not only a predictor of work performance and job training, but has also been linked to the likelihood of work injuries occurring3,16-18. Knowledge of executive functioning can therefore be used both to assess and predict work performance and to contribute to injury prevention in industries with high work injury rates.

Occupational therapists working in the field of vocational rehabilitation in South Africa receive referrals both for evaluations to determine a person's ability to perform their own or alternative occupations after illness or injury, and for pre-employment work evaluation. Pre-employment work evaluation forms part of injury prevention and absenteeism management in the workplace, and is understood to imply the evaluation of an individual's ability to meet essential job demands as part of a hiring process19.

From 2014 onwards, the second author experienced an increase in referrals in clinical practice of healthy individuals for occupational therapy pre-employment work evaluation. These healthy individuals were applicants for job vacancies, and pre-employment evaluations were therefore required to determine whether each individual would be able to cope with the demands of the job applied for. With the increased level of referrals, the need for basic pre-employment measuring instruments, especially in the cognitive domain, became evident. This element of work-related cognitive evaluation for healthy individuals was therefore incorporated into a separate entity within the author's practice, named Hirebright (Pty) Ltd20.

Despite the value of knowing applicants' level of GCA and executive functioning to anticipate job performance and job training outcomes and to reduce work injury rates, no formal, locally developed measuring instruments of GCA and executive functioning were available for the South African working population. The tests that are available measure only intelligence and Intelligence Quotient (IQ).

Initially, the Hirebright team used the Raven's Standard Progressive Matrices (SPM)21 to assess applicants' executive function at pre-employment level. The Raven SPM is a 60-item, non-verbal measuring instrument with known psychometric properties21-23 that provides information about an individual's capacity for analysing and solving problems, abstract reasoning, and the ability to learn. The non-verbal approach of the Raven SPM is believed to reduce cultural bias, making it an appropriate option for the South African population, where language barriers could negatively affect test results if verbal skills were part of the instrument24. In addition, the Raven SPM may be administered to both individuals and groups, and supports valid and reliable evidence-based inferences about work performance and training success21. No other local low-cost instruments were available, but purchasing Raven SPM scoring sheets from international suppliers made offering this test to clients expensive. The Hirebright team therefore decided to develop their own executive function test, the H-CAT (Hirebright Cognitive Ability Test), to reduce the operational costs of the evaluations and thereby make their pre-employment services more affordable to the South African market. The H-CAT team consisted of the second author, an occupational therapist with a Master's Degree in Occupational Therapy in Vocational Rehabilitation; an actuary; and an IT developer who managed the development of the on-line test application. The objective of the H-CAT measuring instrument development was to measure logical, verbal and abstract reasoning in a working population to establish levels of general cognitive ability.

THE HIREBRIGHT COGNITIVE ABILITY TEST

Item generation:

As the main elements of executive function are reasoning ability and the ability to learn, the Hirebright team considered the definitions of 'reasoning' and 'ability to learn' as applied to a work situation. Reasoning is used to draw conclusions in order to solve problems and make decisions25. The ability to learn work habits and rules in complex tasks, on the other hand, is the process of acquiring novel ways of thinking and modifying established forms of thinking. Ropovik10 stated that learning is exhibited in the ability to successfully transfer an acquired problem-solving skill to a new situation. A holistic assessment of reasoning skills may therefore indicate an individual's ability to solve problems and to learn, which are also the main characteristics of GCA and of the executive functions measured by occupational therapists4,9,10,25.

The main component of test construction during item development was to analyse and compare the conceptual base of various tests purporting to measure GCA (see Table I below).

These included the Wonderlic Personnel Test (formerly known as the Wonderlic Cognitive Ability Test), developed in the USA26,27; the Raven Progressive Matrices, developed in the UK21,23; the Revelian Onetest, developed in Australia28; Cubiks Logiks, developed in the UK29; and Mettl's Cognitive Abilities Assessment, developed in India30. A comparison matrix (see Figure 1 on page 8) was drawn up to analyse the different components of cognitive aptitude measured in each test. In addition, the developers considered whether the tests were verbally or non-verbally oriented, the number of test items, time allowances, whether they required proctoring, and whether they could be administered in a group setting. All five of these tests are available to clinicians for on-line administration23,27,29-31.

Based on this review, the objectives of the H-CAT were met by including the following: items measuring logical reasoning, i.e., the ability to form conclusions based on rational, systematic mathematical procedures; items measuring verbal reasoning, i.e., the ability to solve word problems by using the content of statements to draw conclusions; and items measuring abstract reasoning, i.e., the ability to solve problems using visual information and diagrams. During the development stage, occupational therapy peers and expert occupational therapists in the field of vocational rehabilitation were consulted for feedback on the usability of the test (e.g., ease of administration).

Instrument Construction:

The instrument was constructed to measure GCA within the known domains of logical reasoning, verbal reasoning, and abstract reasoning. Once the occupational therapist administering the test logs into the internet-based H-CAT system with the client, an example of the test administration is displayed on the screen and the client gives consent to complete the test, as well as for the results to be shared with the referring party.

The H-CAT consists of three subsections with 15 multiple choice items each, measuring:

1. logical reasoning (forming conclusions from rational, systematic series based on mathematical procedures);

2. verbal reasoning (solving word problems by using the content of statements to draw conclusions); and

3. abstract reasoning (solving problems using visual information and diagrams).

Each item requires the client to solve a set problem and select an answer from three or four options presented visually on the computer screen. According to the completion times captured by the online system for all clients who have completed the on-line H-CAT, the average time to complete the 45 items is 30 minutes; the instrument was therefore programmed to allow clients 60 minutes to complete the assessment. The H-CAT is scored automatically by Hirebright's online system, and a score and description of the results are available to the assessor once the client completes the instrument. One mark is given for each correct answer, giving a raw score out of 45. The raw score is then converted into a processed score out of 100. The value of the processed score is that it can be compared across various industries to develop normative data.
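The scoring rule described above (one mark per correct answer, summed to a raw score out of 45 across three subsections of 15 items) can be sketched in Python as follows. This is a minimal illustration, not Hirebright's implementation: the item order (logical, then verbal, then abstract) and the answer labels are assumptions, and because the text does not specify how the raw score is converted to the processed score out of 100, `processed_score_hypothetical` uses a plain linear rescaling purely as a placeholder.

```python
from typing import Optional, Sequence

# Layout taken from the text: three subsections of 15 multiple-choice items.
# Assumption: items are stored in the order logical, verbal, abstract.
SUBSECTIONS = ("logical", "verbal", "abstract")
ITEMS_PER_SUBSECTION = 15
MAX_RAW = ITEMS_PER_SUBSECTION * len(SUBSECTIONS)  # 45


def count_correct(responses: Sequence[Optional[str]], key: Sequence[str]) -> int:
    """One mark per response that matches the answer key; blanks (None) score 0."""
    return sum(1 for given, correct in zip(responses, key) if given == correct)


def score_test(responses: Sequence[Optional[str]], key: Sequence[str]) -> dict:
    """Return per-subsection scores and the overall raw score out of 45."""
    if len(responses) != MAX_RAW or len(key) != MAX_RAW:
        raise ValueError(f"expected {MAX_RAW} responses and {MAX_RAW} key entries")
    scores = {}
    for i, name in enumerate(SUBSECTIONS):
        block = slice(i * ITEMS_PER_SUBSECTION, (i + 1) * ITEMS_PER_SUBSECTION)
        scores[name] = count_correct(responses[block], key[block])
    scores["raw"] = sum(scores[name] for name in SUBSECTIONS)
    return scores


def processed_score_hypothetical(raw: int) -> float:
    """HYPOTHETICAL placeholder: linear rescaling of raw/45 to a 0-100 scale.

    The actual H-CAT raw-to-processed conversion is not described in the text.
    """
    if not 0 <= raw <= MAX_RAW:
        raise ValueError(f"raw score must lie between 0 and {MAX_RAW}")
    return round(raw / MAX_RAW * 100, 1)


if __name__ == "__main__":
    key = ["A"] * MAX_RAW  # invented answer key
    # Invented client: every logical and verbal item correct, every abstract item wrong.
    responses = ["A"] * 30 + ["B"] * 15
    scores = score_test(responses, key)
    print(scores)  # {'logical': 15, 'verbal': 15, 'abstract': 0, 'raw': 30}
    print(processed_score_hypothetical(scores["raw"]))  # 66.7
```

Keeping subsection totals alongside the overall raw score costs nothing and would let an assessor see which reasoning domain drove a low result.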

Development of the H-CAT has been completed, and the instrument is currently a commercially available, internet-based, subject-completed, occupational therapist-supervised measuring instrument. The instructions and response options of the H-CAT are currently available in English, Afrikaans and isiXhosa, with translation into other official languages of South Africa in progress. At present, South African H-CAT industry norms have been developed for professional occupations (i.e., occupations requiring a professional qualification, such as engineers and accountants) and elementary occupations (i.e., occupations requiring no qualification, such as general labourers and machine operators), as classified by the International Standard Classification of Occupations (ISCO-08)32. Normative values are continuously updated within the online system, and the client's processed score is automatically compared with the norm for the industry in which they have been tested.
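Comparing a client's processed score with an industry norm group could, for example, be expressed as a percentile rank and a z-score. The sketch below assumes that a norm group is simply a list of processed scores from previously tested clients in one ISCO-08 category; the norm values are invented for illustration, and the comparison method actually used by the H-CAT system is not described in the text.

```python
from bisect import bisect_left, bisect_right
from statistics import mean, stdev
from typing import Dict, List, Sequence


def percentile_rank(score: float, norm_scores: Sequence[float]) -> float:
    """Percentage of the norm group scoring below `score`, counting ties as half."""
    if not norm_scores:
        raise ValueError("norm group is empty")
    ordered = sorted(norm_scores)
    below = bisect_left(ordered, score)            # strictly lower scores
    ties = bisect_right(ordered, score) - below    # scores equal to `score`
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def z_score(score: float, norm_scores: Sequence[float]) -> float:
    """Distance from the norm-group mean in sample standard deviations."""
    if len(norm_scores) < 2:
        raise ValueError("need at least two norm scores for a standard deviation")
    return (score - mean(norm_scores)) / stdev(norm_scores)


# HYPOTHETICAL norm groups of processed scores, keyed by ISCO-08 category label.
NORMS: Dict[str, List[float]] = {
    "professional": [55, 60, 62, 68, 70, 74, 78, 81, 85, 90],
    "elementary": [30, 35, 38, 41, 44, 47, 50, 52, 58, 61],
}

if __name__ == "__main__":
    client_score, industry = 70.0, "professional"
    norm = NORMS[industry]
    print(f"{industry}: percentile rank {percentile_rank(client_score, norm):.1f}, "
          f"z = {z_score(client_score, norm):.2f}")
```

Counting ties as half is only one common percentile-rank convention; a production norming system might use interpolated or smoothed percentiles instead.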

Rationale and Significance:

The impetus for this study derives from the fact that, to date, the H-CAT has been used commercially by Hirebright in Cape Town for pre-employment measurement, alongside a valid and reliable instrument, the Raven SPM. On clinical examination, the raw scores of the two tests showed clinical agreement, i.e., candidates obtaining high scores on the Raven SPM also obtained high scores on the H-CAT. In addition, employers reported agreement between H-CAT processed scores and job performance as rated in supervisor-scored commercial performance appraisal structures.

Given the clinical agreement, the researchers performed formal analysis on retrospective data available from the Hirebright database to statistically determine the validity of the measuring instrument. Validity in a measuring instrument is the extent to which an instrument measures the construct it purports to measure33,34. Validation of an instrument involves collecting and analysing instrument item data to evaluate the accuracy with which the instrument measures what it intends to measure. Content validity of an instrument "examines how well an assessment represents all aspects of the phenomenon being evaluated or studied"34:155, i.e., is the content of the instrument an accurate reflection of the construct? Convergent validity is the extent to which the score of an instrument measuring a specific construct, positively relates to scores from an existing instrument measuring the same construct34.

The researchers therefore evaluated content and convergent validity as an initial step towards determining the validity of the H-CAT in the South African context as a measure of GCA for pre-employment screening.

METHOD

This study was approved by the Stellenbosch University Health Research Ethics Committee (ref nr N17/10/109), and all participants provided informed consent prior to participation. The two objectives of the study outlined below were addressed in two phases, and the methodology is discussed for each phase.

Objective 1: To evaluate the content validity of the H-CAT through calculating the item-objective congruence (IOC) for each test item.

Objective 2: To evaluate the convergent validity of the H-CAT by correlating raw scores from the H-CAT with raw scores obtained from an existing, validated instrument, the Raven SPM, in a working population.

Phase 1: The content validity of the H-CAT

Item-objective congruence (IOC) scoring was used to evaluate content validity35,36. A group of content experts rated individual test items to evaluate congruency with the instrument objectives as outlined by Hirebright in the development of the H-CAT35,36.

Population, sampling and recruitment:

A convenience sample of five content experts36 was recruited via telephone and e-mail. Occupational therapists with knowledge and skill in cognitive evaluation and work evaluation, and with more than five years' clinical work experience, were included. There were no exclusion criteria.

Data collection and management:

The experts were required to rate each of the 45 H-CAT test items so that the item-objective congruence (IOC) value could be calculated35,36. The content experts were asked to score each item on a three-point scale as follows:

• -1: the test item is clearly not measuring the constructs of GCA (incongruent)

• +1: the test item is clearly measuring the constructs of GCA (congruent)

• 0: it is not clear whether the constructs of GCA are measured by the test item (questionable).

Data analysis:

During data analysis, the IOC value for each item was calculated by dividing the sum of the experts' scores by the number of content experts35,36. An IOC value above 0.5 demonstrates satisfactory content validity35.
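The IOC calculation is simple enough to show directly: for an item rated by N experts, IOC = (sum of the ratings) / N, with each rating in {-1, 0, +1}. The Python sketch below applies that formula and the > 0.5 criterion to invented ratings (they are not the study's data). One consequence worth noting: with five experts, IOC can only take values in steps of 0.2, so the > 0.5 criterion is met only when the IOC is 0.6 or higher.

```python
from typing import Dict, Mapping, Sequence, Tuple

VALID_RATINGS = {-1, 0, 1}  # incongruent, questionable, congruent
THRESHOLD = 0.5             # IOC must exceed 0.5 for satisfactory content validity


def ioc(ratings: Sequence[int]) -> float:
    """Item-objective congruence: sum of expert ratings divided by number of experts."""
    if not ratings:
        raise ValueError("at least one expert rating is required")
    if any(r not in VALID_RATINGS for r in ratings):
        raise ValueError("each rating must be -1, 0 or +1")
    return sum(ratings) / len(ratings)


def flag_items(item_ratings: Mapping[int, Sequence[int]]) -> Dict[int, Tuple[float, bool]]:
    """Map each item number to (IOC value, whether it exceeds the threshold)."""
    results = {}
    for item, ratings in item_ratings.items():
        value = ioc(ratings)
        results[item] = (value, value > THRESHOLD)
    return results


if __name__ == "__main__":
    # INVENTED ratings from five experts for three items (not the study data).
    example = {
        1: [1, 1, 1, 0, 1],    # 4/5 = 0.8 -> satisfactory
        2: [1, 1, 0, 0, -1],   # 1/5 = 0.2 -> flagged for review
        3: [1, 1, 1, 0, 0],    # 3/5 = 0.6 -> satisfactory
    }
    for item, (value, ok) in flag_items(example).items():
        print(f"item {item}: IOC = {value:.2f} {'OK' if ok else 'below threshold'}")
```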

Phase 2: The convergent validity of the H-CAT

To address the second objective, the researchers used a quantitative, cross-sectional, retrospective design. An important strength of most retrospective databases is that they allow researchers to examine a phenomenon as it occurs in routine clinical practice37. Retrospective databases may provide large study populations, allowing for examination of specific subpopulations such as employees working in a variety of jobs. In addition, retrospective databases provide a relatively inexpensive and expedient approach37 for answering questions posed by decision-makers such as prospective employers who are interested in using the instrument for their pre-employment screening processes.

To evaluate the convergent validity of the H-CAT, the researchers used data from the Hirebright database where both the H-CAT and the Raven SPM were performed on the same employees to determine the correlation coefficient (r) between these scores. Correlation (r) was calculated between overall scores for the H-CAT and Raven SPM out of 45 and 60 points respectively.

Population, sampling and recruitment:

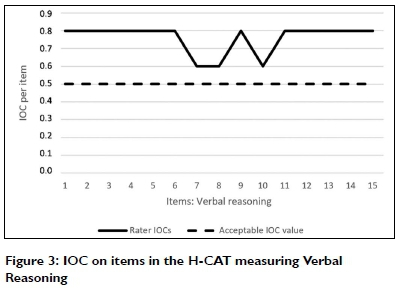

From the retrospective data in the Hirebright database, the researchers included a convenience sample of H-CAT and Raven SPM evaluation scores obtained between January and October 2017. This convenience sample of 20 evaluation scores resulted from assessing individuals for pre-employment and job performance purposes using both the H-CAT and the Raven SPM in clinical practice. The group consisted of males and females aged 20 to 50 years who were employed or applying for employment, across a variety of job settings, including information technology, finance, health care and architecture (see Table III on page 8). All individuals in the group resided in the Western Cape.

Data collection and management:

Relevant demographic data, employment data and H-CAT and Raven SPM evaluation scores were extracted from the existing Hirebright files and recorded on an Excel spreadsheet for analysis. Anonymity was ensured by replacing names and surnames with a code and excluding identifying demographic data. All the H-CAT data used were collected in clinical practice by one Hirebright therapist. No changes in scoring or coding were implemented before or during data analysis. The first author performed quality assurance checks by cross-checking all data and coding for missing information, out-of-range values and consistency. No steps were necessary to normalise or eliminate data, and the scores of all 20 individuals for whom both measures were available were captured, to avoid biasing the results by selectively including or excluding individuals (e.g., outliers or individuals whose H-CAT and Raven SPM scores differed widely).
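The quality assurance checks described above (missing information, out-of-range values and consistency) could be automated along the lines of the following sketch. It assumes each anonymised record holds a participant code, an H-CAT raw score (0 to 45) and a Raven SPM raw score (0 to 60), and treats a repeated participant code as a consistency problem; the record layout and codes are hypothetical, and the study itself describes manual cross-checking in Excel.

```python
from typing import Iterable, List, Optional, Tuple

H_CAT_MAX = 45       # H-CAT raw score is out of 45
RAVEN_SPM_MAX = 60   # Raven SPM raw score is out of 60

# HYPOTHETICAL record layout: (participant code, H-CAT raw, Raven SPM raw)
Record = Tuple[str, Optional[int], Optional[int]]


def check_record(code: str, hcat: Optional[int], raven: Optional[int]) -> List[str]:
    """Report missing or out-of-range raw scores for one anonymised record."""
    problems = []
    for name, value, maximum in (("H-CAT", hcat, H_CAT_MAX),
                                 ("Raven SPM", raven, RAVEN_SPM_MAX)):
        if value is None:
            problems.append(f"{code}: missing {name} raw score")
        elif not 0 <= value <= maximum:
            problems.append(f"{code}: {name} raw score {value} outside 0-{maximum}")
    return problems


def check_dataset(records: Iterable[Record]) -> List[str]:
    """Run the per-record checks and flag repeated participant codes."""
    seen = set()
    problems: List[str] = []
    for code, hcat, raven in records:
        if code in seen:
            problems.append(f"{code}: duplicate participant code")
        seen.add(code)
        problems.extend(check_record(code, hcat, raven))
    return problems


if __name__ == "__main__":
    # HYPOTHETICAL records containing deliberate errors.
    sample = [("P01", 38, 52), ("P02", None, 47), ("P03", 46, 50), ("P01", 30, 41)]
    for problem in check_dataset(sample):
        print(problem)
```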

Data analysis:

A biostatistician from Stellenbosch University performed the statistical analysis for this phase using IBM SPSS Statistics, Version 24.0. Raw scores were used for data analysis, as raw scores offer a pure score without interpretation or transformation, such as a standard score38. The H-CAT and Raven SPM raw scores are based on the number of correct answers in each assessment. Correlation analyses were performed on the raw scores to identify the statistical relationship between the overall evaluation scores from the H-CAT (raw score/45) and the overall evaluation scores from the Raven SPM (raw score/60). Because the data were normally distributed, a parametric test, Pearson's correlation coefficient (r), was used to calculate the strength of the relationship between the scores of the two instruments34. A correlation coefficient of < 0.30 is considered low convergent validity; correlation coefficients between 0.31 and 0.59 demonstrate adequate convergent validity; and correlations of > 0.59 demonstrate strong convergent validity33,34. Statistical significance was set at p < 0.05, using a two-tailed test for normally distributed data.
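For readers who want to reproduce this kind of analysis outside SPSS, the sketch below computes Pearson's r, the corresponding t statistic, t = r * sqrt(n - 2) / sqrt(1 - r^2), and a two-tailed p-value using only the Python standard library, and then labels r using the thresholds quoted above. The p-value uses the closed-form Student's t distribution for integer degrees of freedom (n - 2). The paired scores in the example are invented, and because the quoted bands leave a small gap between 0.30 and 0.31, this sketch assigns that gap to the 'adequate' band as an assumption.

```python
import math
from statistics import fmean
from typing import Sequence, Tuple


def t_two_tailed_p(t: float, df: int) -> float:
    """Two-tailed p-value for Student's t with a positive integer df.

    Uses the classical finite-series expressions for A = P(|T| < |t|),
    with theta = atan(|t| / sqrt(df)).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    if df % 2 == 0:
        # Even df: A = sin(theta) * [1 + (1/2)cos^2 + (1*3)/(2*4)cos^4 + ...]
        term = total = 1.0
        for k in range(1, df // 2):
            term *= (2 * k - 1) / (2 * k) * c * c
            total += term
        a = s * total
    else:
        # Odd df: A = (2/pi) * [theta + sin(theta) * (cos + (2/3)cos^3 + ...)]
        total = 0.0
        if df > 1:
            term = total = c
            for k in range(1, (df - 1) // 2):
                term *= (2 * k) / (2 * k + 1) * c * c
                total += term
        a = 2.0 / math.pi * (theta + s * total)
    return min(1.0, max(0.0, 1.0 - a))


def pearson_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Pearson's r and its two-tailed p-value (t-test with n - 2 df)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a variable with zero variance has no defined correlation")
    r = sxy / math.sqrt(sxx * syy)
    if abs(r) >= 1.0:
        return r, 0.0  # perfect linear relationship
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return r, t_two_tailed_p(t, n - 2)


def interpret_r(r: float) -> str:
    """Bands quoted in the text; 0.30 <= r < 0.31 is treated as 'adequate' (assumption)."""
    if r < 0.30:
        return "low"
    if r <= 0.59:
        return "adequate"
    return "strong"


if __name__ == "__main__":
    # INVENTED paired raw scores (H-CAT /45, Raven SPM /60); not the study data.
    hcat = [20, 25, 28, 30, 33, 35, 38, 40, 42, 44]
    raven = [30, 33, 38, 41, 44, 45, 50, 52, 55, 58]
    r, p = pearson_test(hcat, raven)
    print(f"r = {r:.2f}, two-tailed p = {p:.2g}, convergent validity: {interpret_r(r)}")
```

A statistics library (for example SciPy) would normally be used for the p-value; the hand-written series is included only to keep the sketch dependency-free.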

RESULTS

Phase 1: The content validity of the H-CAT

Five content experts reviewed the items. Table II below displays the demographic variables of the expert reviewers (N = 5).

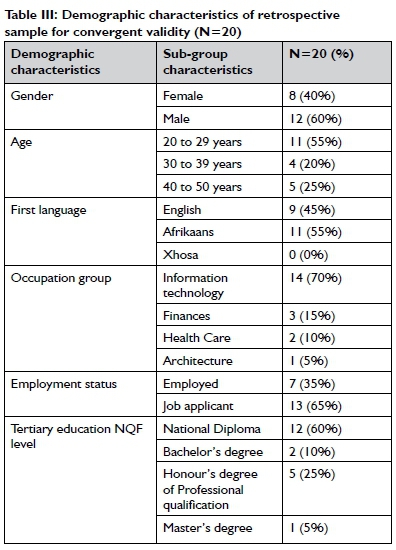

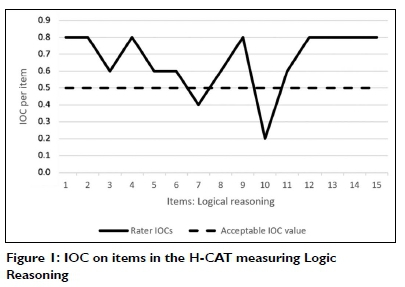

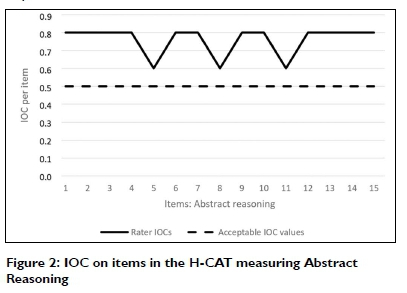

Figures 1 to 3 display the IOC values of the individual items in logical reasoning, verbal reasoning and abstract reasoning. Figure 1 indicates satisfactory IOC values (> 0.50) for 13 of the 15 individual items in logical reasoning; items 7 and 10 had IOC values below the 0.50 threshold. All individual items in verbal reasoning (Figure 2) and abstract reasoning (Figure 3) exceeded the required value of 0.50.

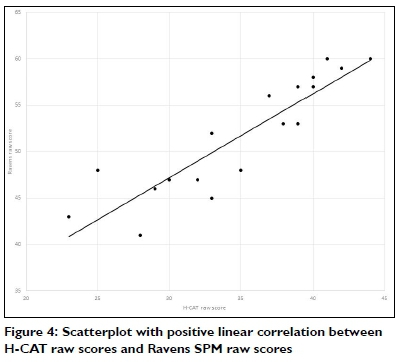

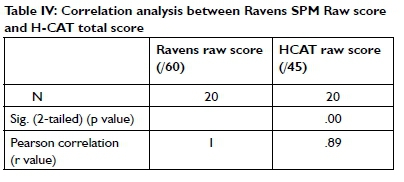

Phase 2: The convergent validity of the H-CAT

The demographic characteristics of the sample group (N = 20) are presented in Table III (below). The sample group of 20 individuals consisted of more males (n = 12) than females (n = 8). The majority of individuals were in the age group 20 to 29 years, were job applicants and worked in the field of information technology. All individuals had obtained formal tertiary education (Levels 6 to 9) as classified by the National Qualifications Framework39.

In the correlation analysis of the H-CAT raw scores and Raven SPM raw scores, Pearson's correlation showed a positive linear relationship between the two measures, as displayed in Figure 4 (page 9). Table IV (page 9) presents the Pearson correlation value of r = 0.89, indicating a strong relationship, with a statistical significance of p = 0.00 in the two-tailed test.

DISCUSSION

Executive function is an important factor in predicting job performance and job training6,7,16. The H-CAT measuring instrument aims to evaluate aspects of executive function closely related to GCA, namely logical reasoning, verbal reasoning and abstract reasoning.

Phase 1: The content validity of the H-CAT

The researchers used the expert review and IOC approach of Rovinelli and Hambleton35, which is broadly applied in healthcare36, to evaluate the content validity of the H-CAT. This approach is quantitative in nature and requires experts to indicate trichotomously the extent to which individual test items reflect the construct (GCA). When the IOC values of the five expert reviewers were calculated for logical reasoning, two items, items 7 and 10, did not meet the acceptable IOC value of > 0.50. Three of the five reviewers were either uncertain or of the opinion that items 7 and 10 do not measure the construct of logical reasoning. These items potentially exceeded logical reasoning demands, and the test developers concluded that they will be eliminated from the H-CAT measuring instrument. Reviewers did not comment on their responses. The expert reviewers' ratings of the verbal reasoning and abstract reasoning test items exceeded the satisfactory value of 0.50 (IOC values from 0.60 to 0.80), indicating good content validity for these items of the H-CAT. These results confirm that the items reflect the theoretical construct of GCA, conceptualised by Schmidt3, Diamond6 and Bade7 as reasoning and problem solving in the workplace. The Hirebright team's inclusion of logical, verbal and abstract reasoning skills is consistent with known assessments, including the Wonderlic Personnel Test26, Raven Progressive Matrices21, Revelian Onetest28, Cubiks Logiks29 and Mettl's Cognitive Abilities Assessment30, and demonstrated good content validity, as confirmed by the majority of the IOC scores.

Phase 2: The convergent validity of the H-CAT

In the absence of a gold standard for measuring GCA, the validity of the H-CAT was evaluated using the Raven SPM, an instrument that measures GCA and has known psychometric properties, including, but not limited to, validity. The Raven SPM has been validated as a measure of GCA across various working populations internationally22,24. In addition, the Raven SPM was used during the item construction phase of the H-CAT to inform the development of relevant test items. This test was routinely used in the Hirebright practice and was therefore an obvious choice for evaluating the convergent validity of the new instrument. Evidence of convergent validity does not imply that the instruments can be used interchangeably; it does, however, indicate that a similar construct is being measured by the two instruments. In a sample of N = 20, statistical analysis of the Raven SPM raw scores (/60) and the H-CAT raw scores (/45) yielded evidence (r = 0.89; p = 0.00) of convergent validity for clinical use. Based on both the correlation coefficient and its statistical significance, preliminary convergent validity can therefore be inferred for the H-CAT.

The first limitation of this study is the relatively small sample size (N = 20) for the convergent validity correlation analysis. Secondly, the fairly homogeneous educational levels of the sample group mean that the findings support clinical use of the H-CAT only for clients in professional occupations with a tertiary level of education (Level 6: National diploma, to Level 9: Master's degree)39. Further evaluation of the correlation between H-CAT and Raven SPM results in a group that represents the population with low levels of schooling working in unskilled to semi-skilled occupations will strengthen the evidence for clinical use of the H-CAT in this population group. Finally, the occupations represented in the sample group were limited to four fields of work; a sample with greater variety in occupational groups would improve the generalisability of the findings.
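One way to make the sample-size limitation concrete is a confidence interval for r. The sketch below uses Fisher's z-transformation, z = atanh(r) with standard error 1/sqrt(n - 3), which is a standard large-sample approximation for Pearson correlations; it was not part of the reported analysis. Applying it to the reported r = 0.89 at several sample sizes (the larger sizes are purely hypothetical) shows how the interval narrows as N grows.

```python
import math
from statistics import NormalDist
from typing import Tuple


def pearson_r_ci(r: float, n: int, level: float = 0.95) -> Tuple[float, float]:
    """Approximate confidence interval for Pearson's r via Fisher's z-transformation."""
    if n <= 3:
        raise ValueError("Fisher's z interval needs more than 3 pairs")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if not 0.0 < level < 1.0:
        raise ValueError("level must lie between 0 and 1")
    z = math.atanh(r)                              # variance-stabilising transform
    se = 1.0 / math.sqrt(n - 3)                    # approximate standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% interval
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


if __name__ == "__main__":
    # Reported r = 0.89 with N = 20; N = 50 and N = 100 are hypothetical comparisons.
    for n in (20, 50, 100):
        lo, hi = pearson_r_ci(0.89, n)
        print(f"N = {n:3d}: 95% CI for r = 0.89 is ({lo:.2f}, {hi:.2f})")
```

The interval is asymmetric around r because the tanh back-transformation compresses values near 1, which is why a strong correlation from a small sample can still have a fairly wide lower bound.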

The strengths of this study include the finding of good content validity for the majority of the items (43/45) through expert review, and strong preliminary convergent validity of the H-CAT. As such, this study provided the first steps towards empirically validating the H-CAT in South Africa. The study also laid the foundation for clinical occupational therapy practitioners to understand the conceptual base of executive function and GCA measuring instruments.

CONCLUSION

To conclude, the findings of the expert review support the content validity of the H-CAT instrument, and the findings of the correlation analysis show promising preliminary convergent validity. Continued work is needed to establish a collective body of validity evidence for the H-CAT. This study contributes to the literature by adding to the psychometric refinement of a commercially available evaluation instrument, and thus to the evidence supporting the use of the H-CAT in evaluating GCA.

AUTHOR CONTRIBUTIONS

Lizette Swanepoel (first author)

• Research (lit review, data capturing, cross-checking of data, data analyses) and writing of manuscript

• Editing and preparing manuscript for submission

Yolanda Grobler (second author)

• Providing data from clinical files (data capturing)

• Writing of manuscript

Susan de Klerk (third author)

• Research (lit review and data analyses)

• Writing of manuscript

REFERENCES

1. Spearman C. "General intelligence," objectively determined and measured. Am J Psychol. 1904; 15(2): 201 - 92. https://www.jstor.org/stable/pdf/1412107 [ Links ]

2. Hunter JE. Cognitive ability, cognitive aptitudes, job knowledge, and job performance. J Vocat Behav. 1986; 29(3): 340 - 62. https://doi.org/10.1016/0001-8791(86)90013-8 [ Links ]

3. Schmidt FL. The role of general cognitive ability and job performance: Why there cannot be a debate. Hum Perform. 2002; 15(1 - 2): 187 - 210. https://doi.org/10.1080/08959285.2002.9668091 [ Links ]

4. Bertua C, Anderson N, Salgado JF The predictive validity of cognitive ability tests: A UK meta-analysis. J Occup Organ Psychol. 2005 Sep 1; 78(3): 387 - 409. https://doi.org/10.1348/096317905X26994 [ Links ]

5. Carson AD. The Integration of interests, aptitudes, and personality traits: A test of Lowman's Matrix. J Career Assess. 1998; 25; 6(1): 83 - 105. https://doi.org/10.1177/106907279800600106 [ Links ]

6. Diamond A. Executive functions. Annu Rev Psychol. 2013; 64(1): 135 - 68. https://doi.org/10.1146/annurev-psych-113011-143750 [ Links ]

7. Bade S. Cognitive executive functions and work: Advancing from job jeopardy to success following a brain aneurysm. Work. 2010; 36(4): 389 - 98. https://doi.org/10.3233/WOR-2010-1042 [ Links ]

8. Van der Sluis S, de Jong PF, van der Leij A. Executive functioning in children, and its relations with reasoning, reading, and arithmetic. Intelligence. 2007; 35(5): 427 - 49. https://doi.org/10.1016/j.intell.2006.09.001 [ Links ]

9. Cramm HA, Krupa TM, Missiuna CA, Lysaght RM, Parker KH. Executive functioning: A scoping review of the occupational therapy literature. Can J Occup Ther. 2013; 80(3): 131 - 40. https://doi.org/10.1177/0008417413496060

10. Ropovik I. Do executive functions predict the ability to learn problem-solving principles? Intelligence. 2014; 44: 64 - 74. https://doi.org/10.1016/j.intell.2014.03.002

11. AOTA. Role of occupational therapy in assessing functional cognition. Accessed 3 March 2019. https://www.aota.org/Advocacy-Policy/Federal-Reg-Affairs/Medicare/Guidance/role-OT-assessing-functional-cognition.aspx

12. Schmidt FL, Hunter J. General mental ability in the world of work: Occupational attainment and job performance. J Pers Soc Psychol. 2004; 86(1): 162 - 73. https://doi.org/10.1037/0022-3514.86.1.162

13. Salgado JF, Anderson N, Moscoso S, Bertua C, De Fruyt F. International validity generalization of GMA and cognitive abilities: A European community meta-analysis. Pers Psychol. 2003; 56(3): 573 - 605. https://doi.org/10.1111/j.1744-6570.2003.tb00751.x

14. Kolz AR, McFarland LA, Silverman SB. Cognitive ability and job experience as predictors of work performance. J Psychol. 1998; 132(5): 539 - 48. https://doi.org/10.1080/00223989809599286

15. Ree MJ, Earles JA. Intelligence is the best predictor of job performance. Curr Dir Psychol Sci. 1992; 1(3): 86 - 9. https://doi.org/10.1111/1467-8721.ep10768746

16. Sackett PR, Schmitt N, Ellingson JE, Kabin MB. High-stakes testing in employment, credentialing, and higher education: Prospects in a post-affirmative-action world. Am Psychol. 2001; 56(4): 302 - 18. https://doi.org/10.1037/0003-066X.56.4.302

17. Zhang J, Li Y, Wu C. The influence of individual and team cognitive ability on operators' task and safety performance: A multilevel field study in nuclear power plants. Sueur C, editor. PLoS One. 2013; 8(12): e84528. https://doi.org/10.1371/journal.pone.0084528

18. Allahyari T, Rangi NH, Khalkhali H, Khosravi Y. Occupational cognitive failures and safety performance in the workplace. Int J Occup Saf Ergon. 2014; 20(1): 175 - 80. https://doi.org/10.1080/10803548.2014.11077037

19. Kuijer PPFM, Gouttebarge V, Brouwer S, Reneman MF, Frings-Dresen MHW. Are performance-based measures predictive of work participation in patients with musculoskeletal disorders? A systematic review. Int Arch Occup Environ Health. 2012; 85(2): 109 - 23. https://doi.org/10.1007/s00420-011-0659-y

20. HireBright. HireBright Powerful Pre-employment Testing. Accessed 10 April 2019. Available from: www.hirebright.co.za

21. Raven JC. Advanced Progressive Matrices, Sets I and II: Plan and Use of the Scale with a Report of Experimental Work Carried out by G. A. Foulds, & A. R. Forbes. London, England; 1965.

22. Raven J, Raven J. Raven Progressive Matrices. In: McCallum SR, editor. The Handbook of Nonverbal Assessment. Boston: Springer US; 2003: 223 - 37.

23. Academia. Raven's Standard Progressive Matrices Online. Accessed 10 April 2019. Available from: https://www.academia.edu/24308941/IQ_Test_Raven_s_Advanced_Progressive_Matrices_1_

24. Owen K. The suitability of Raven's Standard Progressive Matrices for various groups in South Africa. Pers Individ Dif. 1992; 13(2): 149 - 59. https://doi.org/10.1016/0191-8869(92)90037-P

25. Greiff S, Wüstenberg S, Funke J. Dynamic problem solving. Appl Psychol Meas. 2012 May 25; 36(3): 189 - 213. https://doi.org/10.1177/0146621612439620

26. Wonderlic EF, Hovland CI. The Personnel Test: a restandardized abridgment of the Otis S-A test for business and industrial use. J Appl Psychol. 1939; 23(6): 685 - 702. https://doi.org/10.1037/h0056432

27. Wonderlic Inc. Official Wonderlic website. Accessed 10 April 2019. Available from: https://www.wonderlic.com/

28. Revelian. Revelian OneTest Online. Accessed 10 April 2019. Available from: https://www.revelian.com/

29. Cubiks.com. Cubiks Online. Accessed 10 April 2019. Available from: https://www.cubiks.com/

30. Mettl Online Assessment. Mercer/Mettl. Accessed 10 April 2019. Available from: https://mettl.com/en/cognitive-ability-test/

31. OneTest. OneTest.com. Accessed 10 April 2019. Available from: https://www.practiceaptitudetests.com/testing-publishers/onetest/

32. International Labour Organisation. International Standard Classification of Occupations, Volume I. Geneva, Switzerland; 2012. Available from: https://www.ilo.org/wcmsp5/groups/public/@dgreports/@dcomm/@publ/documents/publication/wcms_172572.pdf

33. Stein F, Cutler S. Research design and methodology. In: Clinical Research in Occupational Therapy. 4th ed. Albany, NY: Delmar Singular Publishing Group: 223 - 67.

34. Crist P. Reliability and validity: The psychometrics of standardised assessments. In: Hinojosa J, Kramer P, editors. Evaluation in Occupational Therapy: Obtaining and interpreting data. 4th ed. Bethesda, Maryland: AOTA Press; 2014: 143 - 60.

35. Rovinelli RJ, Hambleton RK. On the use of content specialists in the assessment of criterion-referenced test item validity. Dutch J Educ Res. 1977; 2: 49 - 60. ERIC Number: ED121845

36. Turner RC, Carlson LT. Indexes of item-objective congruence for multidimensional items. Int J Test. 2003; 3(2): 163 - 71. https://doi.org/10.1207/S15327574IJT0302_5

37. LaMorte WW. Prospective versus retrospective cohort studies. Boston University School of Public Health. 2016. Accessed 14 April 2019. Available from: http://sphweb.bumc.bu.edu/otlt/MPH.Modules/EP/EP713_CohortStudies/EP713_CohortStudies2.html

38. Crist P. Scoring and interpretation of results. In: Hinojosa J, Kramer P, editors. Evaluation in Occupational Therapy: Obtaining and interpreting data. 4th ed. Bethesda, Maryland: AOTA Press; 2014: 161 - 84.

39. South African Qualifications Authority. The South African National Qualifications Framework. 2014. Accessed 16 April 2019. Available from: http://www.saqa.org.za/list.php?e=NQF

Correspondence:

Lizette Swanepoel

Email: lizetteswan@swan-ot.com