Koers

Online version ISSN 2304-8557

Print version ISSN 0023-270X

Koers (Online) vol.78 no.2 Pretoria Jan. 2013

http://dx.doi.org/10.4102/koers.v78i2.1240

ORIGINAL RESEARCH

Organism versus mechanism: Losing our grip on the distinction

Organism and mechanism: A distinction that is being lost

Henk Reitsema

L'Abri Fellowship Foundation, Utrecht, The Netherlands

ABSTRACT

The distinction between organism and mechanism is often subtle or unclear, yet it can prove fundamental to our understanding of the world. Many thinkers have been tempted to 'understand' all of reality through the lens of one or the other of these concepts rather than giving both a place. This article argues that there is a substantial loss of understanding when either of these metaphors is absolutised to explain all causal processes and patterns in reality. Clarifying the distinction between the two may provide one more tool for grasping what is reductionist in many of the perspectives that have come to dominate public life and science today. This contention is tested on the quest to design self-replicating systems (i.e. synthetic organisms) in the nanotech industry. The concepts of organic functioning and mechanism are commonly used imprecisely and in an overlapping way. This is also true of much scientific debate, especially in the fields of biology, microbiology and nanoscience. This imprecise use signals a reductionist tendency both in the way the organic is perceived and in the way the distinctive nature of mechanisms is understood.

SUMMARY

The distinction between organism and mechanism is often subtle or unclear, yet this difference can have a great influence on our understanding of the world. In the history of philosophy it has been tempting for many thinkers to try to understand the whole of reality through the lens of only one or the other of these metaphors, instead of giving both their rightful place. This article argues that there is a substantial loss of understanding when one absolutises only one of these metaphors in an attempt to explain all patterns of cause and effect in reality. A clearer distinction between these two concepts can probably contribute to identifying the reductionist elements in the contemporary ways of thinking that dominate science and public life. This approach is tested against an analysis of developments in nanotechnology, where attempts are being made to manufacture synthetic life and self-replicating systems. The concepts of organism and mechanism are commonly used imprecisely and, to an extent, sometimes even in an overlapping way. This also applies to a large part of current scientific debate, especially in the disciplines of biology, microbiology and nanoscience. This imprecise use of words makes it clear that a reduction is taking place in the distinction between what are regarded as organic properties and what are regarded as specifically mechanical properties.

Introduction

The distinction between organism and mechanism is often subtle or unclear, yet it can prove fundamental to our understanding of the world. Many thinkers have been tempted to 'understand' all of reality through the lens of one or the other of these concepts rather than giving both a place. This article argues that there is a substantial loss of understanding when either one of these metaphors is absolutised to explain all causal processes and patterns in reality. Clarifying the distinction between the two may provide one more tool for grasping what is reductionist in many of the perspectives that have come to dominate public life and science today. This contention is tested on the quest to design self-replicating systems (i.e. synthetic organisms), a central endeavour in nanotechnology today.

The concepts of organic functioning and mechanism are commonly used imprecisely and in an overlapping way. This is also true of much scientific debate, especially in the fields of biology, microbiology and nanoscience. This imprecise use signals a reductionist tendency both in the way the organic is perceived and in the way the distinctive nature of mechanisms is understood. This reduction results in a number of paradoxes in both science and society. In biology, for example, increased scientific understanding of processes at the nanoscale has led people to locate causes in mechanistic causal chains rather than in the distinctive realm of the organic, that is, in the cell as a centre of operation and in top-down, function-based causal processes. At the same time, the vaguer vocabulary of emergentism has entered biology as a mechanistic explanatory tool, even though it provides little or no predictive capacity (Anderson 1972:394),¹ paradoxically introducing an almost magical dimension into our understanding of how a strictly mechanistic process works.

Though this article takes developments in nanotechnology and microbiology as its primary test case, the organism-mechanism distinction is equally relevant to social contexts. In social organisations, the term 'mechanism' is often applied to things like double-entry bookkeeping (to keep people honest) and multilayered management.² These are often used to supplant processes such as relationships of trust and a sense of honour. Propensities such as trust and honour can reasonably be called organismic in the sense that they are natural, often pre-intentional processes of the human organism which function simultaneously, especially where interconnectedness spans multiple scales. Even evolutionary biologists and neuroscientists would argue that altruism and trust have some form of evolutionary origin and are therefore part of the natural organic realm (see Axelrod 2006:130; Dawkins [1976] 1989:11; Trivers 1971:35). The paradox of using such mechanisms to supplant relational processes is that overhead costs can rise, whereas the goal was to safeguard the appropriate use of funds. It would seem better to find a legitimate place for both dynamics.

Mechanismic versus organismic worldview

The terminology used to indicate an overarching worldview defined by either the organism metaphor or the mechanism metaphor is not uniform. Organistic, organismic and organicism (and the corresponding forms of the term 'mechanism') have all been employed to this end (Venter 1997:41-60). Part of the difficulty is that the '-ism' ending, traditionally used to connote an extreme or universalised perspective, is already part of the core terminology here, where it simply refers to functioning wholes. This renders the terms 'organistic' and 'mechanistic' ambiguous: they can mean either organ-like and machine-like, or something closer to a worldview. From here on, I will use the terms 'organismic' and 'mechanismic' to refer to the overarching worldview, and the terms 'organistic' and 'mechanistic' to connote an absolutised or one-sided use of either metaphor in the more restricted context of a specific aspect or case of reality.

The tendency to take the biology-based term 'organism' and apply it to inorganic systems, to perpetually moving wholes, or to groups rather than individuals has a long history. Many ancient worldviews included some form of organismic thinking, as do more recent variants of pantheistic and New Age philosophies. In these philosophies, the movement of people and animals (i.e. organisms) is taken as the model and explanation for the movement of all other moving things, for example the celestial bodies. We are then seen to be living inside a huge living entity that requires harmony in order to function. As Venter (1992:189-224) has emphasised, these pictures of how things work provide more than explanations of the movement of celestial bodies; they also imply evaluations of things in the universe. If the system of heavenly bodies is an eternal living entity with a direct influence on my life, then those bodies must somehow be omnipotent or divine, and so we have to live in a way that pleases these powerful influences. When applied to group wholes, this view quickly breeds absolutism about the dynamics of the group, whatever scale one is focusing on. An example can be found in the organismic social perspective of the likes of Mussolini (1938:18). Venter (1992:189-200) argues that the 20th-century resurgence of the organismic perspective (as in the New Age movement) has its roots in the new physics, which has paradoxically opened the way to ancient pantheistic mysticism.

The category confusion inherent in organismic thinking, which declares that all is organism, ends up reducing reality in a number of ways. For one, it does not sufficiently allow for causal relationships at other levels of explanation. Dooyeweerd's (2002:94, 241) suite of aspects of reality and his analysis of act structures are a relevant reminder in this regard: they situate laws of cause and effect in many layers, from the numeric, spatial and kinematic up through the higher levels of behaviour caused by social processes. It also leads to a loss both of truly external influences (external to the cosmos) and of situated or intention-based causes inside the whole.

When machines driven by wind, water and fire-fuelled steam started to become more complex and seemingly self-perpetuating, a new model was found with which to represent the universe, and so the mechanismic worldview was born. If reality is a big machine, then the planets are not all-defining or supreme, and once we understand their mechanics we can employ their forces and perhaps even control them. More importantly, the machine-based perception of ourselves implies a similar potential for control once the mechanics are understood, but it precisely lacks a space for an acting self. This ends up stripping our existence bare, so that the real solutions are taken to be chemical rather than intentional.

It is with the body-mind dualism of the Enlightenment philosopher René Descartes that the machine metaphor began to be applied as a key to understanding things that had formerly been defined as organisms or as having an organism-like dynamic. This line of understanding was strengthened by Thomas Hobbes's description of humans and society as a machine (Hobbes 1962:19, 55) and was subsequently applied in the Industrial Revolution, when people were organised in a machine-like fashion to increase the tempo of production. This spawned a variety of mechanismic models for understanding economic processes and all other social interactions. Darwin was strongly influenced by this mechanismic tradition in economics. In particular, he linked the motive of conflict or competition with discourse drawn from advances in technology and from the cultural practices of selection (by farmers and pigeon breeders). All these elements contributed to the central concept of his theory: the metaphor of 'natural selection' (Venter 1996:209).

How the machine metaphor came to be dominant even in biology

Descartes took the position that we need a method of extreme scepticism to achieve certainty. Mathematics was the only science seen as successful in conquering scepticism (Strauss 1953:171). It was the kind of fundamental knowledge that would give humans power. What was happening here was a fundamental shift in the idea of natural law (natural right) as it had developed from Plato and Aristotle through the Middle Ages. This idea of natural law was also influenced by Galileo's formulation of the law of inertia, in which motion was viewed as the natural state of things (Strauss 1953:10). Hobbes, who adopted Descartes's conviction that fundamental scepticism was necessary, extended this idea to humans and society: 'for seeing life is but a motion of limbs … for what is the heart but a spring; and the nerves, but so many strings, and the joints, but so many wheels, giving motion to the whole' (Hobbes 1962:19, 55). This is a clear attempt to understand the organic in mechanistic terms. Because of the basic attitude of scepticism, knowledge was no longer seen as concerned with ends (no teleology). This loss of appreciation for goal- or purpose-based functioning diminished interest in the goals that drive biological functions, and so a preference for the mechanistic view arose (Strauss 1953:171). For modernity, natural law had become the sealed-off, unchanging totality of nature. This is in sharp contrast with natural law as Aquinas (1948) had still perceived it:

To the natural law pertains everything to which a man is inclined according to his nature. Now different men are inclined to different things; ... Therefore there is not one natural law. (q. 94 a. 4)

Aquinas certainly considered many things universal amongst humanity and therefore saw natural law as a sound basis for many conclusions about what is true, though he did not see it as an impersonal structure but rather as that which joined particular instances of justice to the Divine. The shift away from this conviction, which began with Descartes, led to a scientific approach that presupposed that all laws describing the natural order could be distilled into mathematical formulas, building upwards from the laws of interaction for the smallest parts.

The result is a reduction of all interactions of matter to the model of mechanism or machine, which does not allow new constituents to enter at the organic level, or at any other level for that matter. Nor does it allow for causality situated in these higher layers. This mechanistic restriction has led to false expectations and a sterile approach within the disciplines that define the landscape for much of the research and engineering currently taking place, including at the nanoscale. The nanoscale is where biology and physics meet, and so this development has led to a mechanisation of the understanding of cause-and-effect relationships even in biology, the discipline that should have been the science of organic functioning par excellence.

The difference between biological 'structures' and human creations

One of the people who have spent time pondering the differences between things made by humans and what biology observes in the organic realm is Robert Rosen. Rosen concludes the first chapter of his book Life Itself with the remark: 'So it happens that the wonderful edifice of physical science, so articulate elsewhere, stands today utterly mute on the fundamental question: What is life?' (Rosen 1991:12). What he subsequently argues in the book is that 'the muteness of physics [with regard to the question: what is life?] arises from its fundamental inapplicability to biology' (Rosen 1991:13). In his analysis, this inapplicability to biology has everything to do with the tendency of physics to restrict itself to mechanism, computability and what is simulable. He puts it as follows:

As we have seen, a contemporary physicist will feel very much at home in the world of mechanisms. We have quite deliberately created this world without making any physical hypotheses, beyond our requiring the simulability of every model. We have thus put ourselves in a position to do a great deal of physics, without having had to know any physics. That fact alone should indicate just how special the concept of mechanism really is. It is my contention that contemporary physics has actually locked itself into this world; this has of course enabled it to say much about the (very special) systems in that world, and nothing at all about what is outside. Indeed, the claim that there is nothing outside (i.e., that every natural system is a mechanism) is the sole support of contemporary physics' claim to universality. (Rosen 1991:212-213)

What Rosen articulates here is that the trend to simulate organic function with computational models indicates an underlying assumption that organism is nothing more than complex mechanism.

We are organisms but we make mechanisms

The products of human design would seem to be more mechanical than organic. This is because abstraction and reduction are the tools we use to grasp or conceptualise things in the concrete world³ (Glas 2002:149). Though we are organisms for whom it is true that in some way everything is connected to everything else (organism, species, population, environment, biosphere, etc.), this interconnectedness is too complex for us to map exhaustively in our minds. So, although our functioning at the level of naive experience is wonderfully integrated, our attempts to design and make things are more detached from this layered integration, because we employ abstraction and reduction. By definition, therefore, what we make tends towards the more limited, machine-like dynamics of restricted cause-and-effect patterns. Machines are typically not fully integrated with their environment; they therefore disturb, rather than partake in, the balance⁴ of things in the organic realm.

This feature of human design holds true except in those contexts where the regulating interaction of organisms steers the process of design, as in the structure of human organisations. Even here, though, it could be argued that we tend to err in the direction of mechanistic solutions to organic problems.⁵ This is still true of the management-driven models of organisation of our day, including those that aim to employ what are considered to be the emergent properties of such systems. Given the mechanistic restrictions of physics and human engineering, if there are material systems that are not mechanisms, all that contemporary physics can do is tell us what properties they cannot have. As Rosen (1991) puts it:

what must be absent seems devastating. For instance, such a system cannot have a state set, built up synthetically from the states of minimal models and fixed once and for all. If there is no state set, there is certainly no recursion, and hence, no dynamics in the ordinary sense of the term. There is accordingly no largest model of such a system. And the categories of causation in it cannot be segregated into discrete, fixed parts, because fractionability itself fails. (p. 242)

This last remark about the absence of fractionability (the fact that the design is not an accumulation of discrete packages) is a core issue in the difference between human engineering and the design embodied in organic life.

What this means is that biological design is typically far more complex than a product of human engineering with the same number of parts, because biological design is more integrated: there are more established relationships and connections across more scales of size, without partition into separable packages (everything is more authentically connected with everything else⁶). Complexity is not the only characteristic of life, though; it is not even its defining characteristic. Rosen would say that 'complexity⁷ is not life itself though it is the habitat of life' (Rosen 1991:280). More is needed to distinguish what is alive from what is complex; the complexity of a dead organism makes this clear. Biology, amongst other things, is relational in the sense that organisation takes priority over physics:⁸

Organization in its turn inherently involves functions and their interrelations; the abandonment of fractionability, however, means there is no 1 to 1 relationship between such relational, functional organizations and the structures which realize them. These are the basic differences between organisms and mechanisms or machines. (Rosen 1991:280)

Rosen sums up the reductionist approach to life with the maxim 'throw away the organization and keep the underlying matter' (Rosen 1991:126, 127). The relational alternative, he would say, is the exact opposite: 'when studying an organized material system, throw away the matter and keep the underlying organization.'
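The earlier claim that biological design is more complex than an engineered product with the same number of parts, because it is not partitioned into separable packages, can be given a rough arithmetic illustration. The sketch below is a toy under two assumptions that are not Rosen's: complexity is proxied by the number of possible pairwise relationships between parts, and a fractionable design is modelled as equal-sized modules with no links between them. The function names are hypothetical.

```python
def pairwise_links(n_parts: int) -> int:
    """Possible pairwise relationships among n parts that may all interact."""
    return n_parts * (n_parts - 1) // 2


def partitioned_links(n_parts: int, n_modules: int) -> int:
    """Pairwise relationships when the parts are split into equal, isolated modules.

    Assumes n_parts is divisible by n_modules and that no links cross module
    boundaries: a crude stand-in for a fully fractionable design.
    """
    if n_parts % n_modules != 0:
        raise ValueError("n_parts must be divisible by n_modules")
    return n_modules * pairwise_links(n_parts // n_modules)


if __name__ == "__main__":
    n = 12
    print("integrated:", pairwise_links(n))             # 66
    print("three modules:", partitioned_links(n, 3))    # 18
```

With twelve parts, the integrated arrangement allows 66 possible pairwise relationships against 18 for three isolated modules of four. As the text stresses, such a count captures at most a kind of complexity, not organisation or life.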

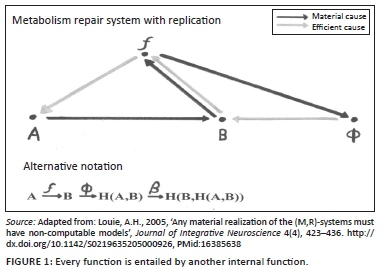

The concepts 'organism' and 'organisation' are not linked merely by a chance similarity of letters. Their etymological link bears witness to an age-old insight that the two belong together. 'Organisation' in our context means purpose-directed structure (having teleology), or functionally specific structuring. Organisms are characterised, amongst other things, by the fact that their parts are organised in a functionally specific way. Not only is this organisation visible when one looks for it, but the functional or relational aspect seems to be primary: biological systems frequently achieve the same goal by different means, whether it be the realisation of pain, being in a certain state of mind or overcoming a bacterial or viral intrusion. The embodied organisation would seem to be an organisation of functions which are then realised in material organisation, the direction of causation being both top-down and bottom-up rather than simply bottom-up. What is happening is that the top-down (function-oriented) causal processes employ the bottom-up physico-chemical processes to achieve their goal. The immune response is a case in point: it displays a great deal of redundancy, characterised not by a similarity of mechanism but by a similarity of goal or function. The essence of biological design seems to be found in the relationships among a vast variety of functionalities, which are frequently realisable by various physical means. Whilst chaos may be a form of structure, even having its own kind of regularity and computability, it is not organisation, because it lacks this goal-oriented dimension (see Figure 1).

Rosen contends that biological organisms, in contrast to mechanisms⁹ and machines, contain almost everything about their functional organisation within themselves (Rosen 1991:244). This is in line with Heusinkveld and Jochemsen's (2006:5) assertion that cells have an inwardness. As a result, biological organisms can answer 'why?' questions about components of their functional organisation in terms of efficient causes from within this organisation (Gwinn 2007a:1). Rosen coined the phrase 'closed to efficient causation' (Rosen 1991:244) to express this feature of organisms, which have an internal loop of causation that closes out external domino effects in the mechanistic sense. This means that there is top-down causation within an organism which provides the reason why some of the bottom-up chemical processes fulfil the function that they do. This concept has tremendous implications for biology, physics and science in general. It means that describing the processes inside organisms requires models, such as the metabolism-repair system model (see Figure 1; Louie 2005:423-436), that include impredicative loops of causation. Such models are therefore non-computable, that is, they cannot be turned into algorithms for computational processing.
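The closure described above is often summarised in the mapping notation of Rosen's (M,R)-systems. The block below is a compact sketch of that standard presentation rather than a reproduction of Figure 1; it assumes the usual notation in which $H(X,Y)$ denotes the set of mappings from $X$ to $Y$, $f$ stands for metabolism, $\Phi$ for repair and $\beta$ for replication, and the evaluation map $\hat{b}$ is assumed to be invertible.

$$
\begin{aligned}
&A \xrightarrow{\;f\;} B \xrightarrow{\;\Phi\;} H(A,B) \xrightarrow{\;\beta\;} H\big(B, H(A,B)\big),\\[4pt]
&f(a) = b, \qquad \Phi(b) = f, \qquad \beta(f) = \Phi,\\[4pt]
&\hat{b}\colon H\big(B, H(A,B)\big) \to H(A,B), \quad \hat{b}(\Phi) = \Phi(b), \qquad \beta = \hat{b}^{-1}.
\end{aligned}
$$

Read as a chain of efficient causes, $f$ produces $b$, $b$ fixes $\beta$ through the evaluation map, $\beta$ produces $\Phi$, and $\Phi$ produces $f$ again. Every efficient cause is thus entailed from within the system, which is the sense of 'closed to efficient causation'; the self-referential character of this loop is what Rosen argues places such models outside recursive, state-based simulation.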

This flies in the face of the widely held conviction that Turing machines (the abstract machines that define the limits of mechanical computation) are the key to developing computation-based models of life, and that all complexity in the natural order is fractionable. The challenge for physics is therefore to create a formalism that lies outside the realm of simulable recursive functions and is therefore neither state-based nor fully computable in the Turing sense. As Gwinn (2007b:2) points out, there is sometimes confusion about what a Turing machine is or is not capable of computing. This uncertainty stems from phrases such as 'more powerful' applied to certain kinds of computing device, which seem to imply a larger scope of computability but in reality refer only to speed or efficiency of computation. Devices such as Cray computers, cellular automata, neural nets and quantum computers cannot compute anything outside the (theoretical) scope of a Turing machine. Even adding 'stochastic' devices, multiple read or write heads and so forth to a Turing machine does not allow the enhanced machine to compute anything beyond what was already considered computable (Gwinn 2007b:2). Turing computability covers only the realm of purely rote or algorithmic processes. Such processes are completely syntactic, since they are restricted to symbol manipulation. Mathematical systems that contain no semantic elements and are entirely describable by rote processes are labelled formalisable. It is this feature of formalisability that makes state-based modelling possible. State-based descriptions are what all of physics rests on (including quantum mechanics), yet they are unable to describe systems that possess such impredicative loops of causation (Louie 2006:2). Gwinn (2007b) summarises Rosen's conclusion as follows:

The result is that the limits of description imposed by the approaches of computational modelling and by state-based physics are entirely artefactual (non-living), and do not represent fundamental limits of the material world. With regard to biology, those approaches are incapable of answering fundamental questions about organisms, such as 'what is life?', because they are unable to represent the relevant features of the material systems we call "organisms" within their formalisms. (p. 2)
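What 'purely rote' or 'completely syntactic' computation means can be made concrete with a minimal Turing machine simulator. This is an illustrative sketch, not drawn from Rosen or Gwinn: the transition table (a hypothetical machine that adds one to a binary number) and all names are assumptions. Each step consults only the current state and the symbol under the head, and nothing in the table refers to what the symbols mean.

```python
from typing import Dict, Tuple

BLANK = "_"

# (state, symbol read) -> (symbol to write, head move, next state)
Transitions = Dict[Tuple[str, str], Tuple[str, int, str]]

# Hypothetical example machine: add one to a binary number on the tape.
# The head starts on the rightmost digit and carries leftwards.
INCREMENT: Transitions = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 plus carry: write 0, keep carrying
    ("carry", "0"): ("1", 0, "halt"),    # 0 plus carry: write 1, stop
    ("carry", BLANK): ("1", 0, "halt"),  # past the left end: new leading 1
}


def run(transitions: Transitions, tape_str: str, state: str, max_steps: int = 10_000) -> str:
    """Apply the transition table until the machine enters 'halt'.

    Each step looks only at the current state and the symbol under the head;
    the table never refers to what the symbols mean.
    """
    tape: Dict[int, str] = dict(enumerate(tape_str))  # sparse tape: position -> symbol
    head = len(tape_str) - 1                          # start on the rightmost symbol
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)
        write, move, state = transitions[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)


if __name__ == "__main__":
    print(run(INCREMENT, "1011", "carry"))  # prints 1100 (11 + 1 = 12)
```

Adding randomness, extra tapes or extra heads to such a simulator would change how quickly it reaches an answer, not which answers are reachable, which is the point Gwinn makes about 'more powerful' devices.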

It is fair to note that Rosen's conclusion is not that artificial life is impossible, but rather that life is not computable: his position would be that however one models life, whether natural or artificial, one cannot succeed by computation alone. Ultimately he is saying that life is not definable by an algorithm, no matter how complex the algorithm (Louie 2005:3). This conclusion in fact has some practical support from computer science: attempts to implement a hierarchical closed loop have led to deadlock, a condition that systems programming is expressly designed to prevent (Silberschatz & Galvin 1998).
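The deadlock mentioned above can be illustrated with the wait-for graph used in operating-systems texts such as Silberschatz & Galvin: each process points to the process holding a resource it is waiting for, and, when every resource has a single instance, a cycle in this graph means the processes in it are deadlocked. The sketch below is a toy, not a reconstruction of the attempts referred to; the process names and the single-instance resource model are hypothetical.

```python
from typing import Dict, List, Optional


def find_cycle(wait_for: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle in a wait-for graph as a list of nodes, or None.

    wait_for[p] lists the processes that p is waiting on. With single-instance
    resources, a cycle in this graph means the processes in it are deadlocked.
    """
    WHITE, GREY, BLACK = 0, 1, 2          # unvisited, on current path, finished
    colour = {node: WHITE for node in wait_for}
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        colour[node] = GREY
        path.append(node)
        for nxt in wait_for.get(node, []):
            state = colour.get(nxt, WHITE)
            if state == GREY:             # back edge: the waiting has come full circle
                return path[path.index(nxt):] + [nxt]
            if state == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        colour[node] = BLACK
        return None

    for node in list(wait_for):
        if colour[node] == WHITE:
            found = visit(node)
            if found:
                return found
    return None


if __name__ == "__main__":
    # Hypothetical hierarchical loop: each level waits on the next, and the
    # lowest level waits on the top.
    loop = {"controller": ["worker"], "worker": ["monitor"], "monitor": ["controller"]}
    print(find_cycle(loop))  # ['controller', 'worker', 'monitor', 'controller']
```

Removing any single edge breaks the cycle, which is how standard prevention schemes proceed, for example by imposing a fixed ordering on resource requests so that circular waiting cannot arise.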

Synthesised life?

The most full-blown attempts to synthesise life and reduce organic processes to the computable can be found in the recent work of the J. Craig Venter Institute and the Biophysics and Bioengineering departments of Stanford University. An article entitled 'A whole-cell computational model predicts phenotype from genotype' (Karr et al. 2012:389-401) clearly expresses the conviction that life can be fully simulated with computer models.

The article starts with the observation that '[u]nderstanding how complex phenotypes arise from individual molecules and their interactions is a primary challenge in biology that computational approaches are poised to tackle' (Karr et al. 2012:389). Two widely held assumptions are evident in this starting point. The first is that the computational reduction of organic life is ultimately possible, even if a lot of refining remains to be done. The second is that the phenotype (the working whole) is somehow produced by its molecular parts, rather than the integrated whole steering its own reproduction as a kind of control centre. Not only is control situated in the parts rather than the whole, but there is a further reduction specifically to the genome (the DNA molecule) as the steering or controlling entity, even though much of the effort in the computational model at hand has gone into modelling the perceived products of genome function. This reduction of life to the genome is visible in the following quotation: 'Moreover, these findings, in combination with the recent de novo synthesis of the M. genitalium chromosome and successful genome transplantation of Mycoplasma genomes to produce a synthetic cell (Gibson et al. 2008, 2010; Lartigue et al. 2007, 2009), raise the exciting possibility of using whole-cell models to enable computer-aided rational design of novel microorganisms' (Karr et al. 2012:399). The quick jump from a synthesised DNA molecule (which is not designed but copied from biological design) to the claim of having created a synthetic cell is clearly a blind spot rooted in the reduction of life to the genotype. It must be stressed that the genome does not replicate itself; in fact, it does almost nothing by itself. Rather, it is replicated by the cell as a working whole.

The tendency to treat the genetic code as the ultimate driver, detached from the rest of the cell, is even more painfully clear in the way the discrepancy between the measured DNA content per cell and the calculated mass of the Mycoplasma chromosome is handled:

Most of these parameters were implemented as originally reported. However, several other parameters were carefully reconciled; for example, the experimentally measured DNA content per cell (Morowitz et al., 1962; Morowitz, 1992) represents less than one-third of the calculated mass of the Mycoplasma chromosome. Data S1 details how we resolved this and several similar discrepancies among the experimentally observed parameters. (Karr et al. 2012:391)

At the one point in the article where the authors show evidence of encountering a source of steering other than the genome, the vocabulary of emergence is invoked: 'The whole-cell model therefore presents a hypothesis of an emergent control of cell-cycle duration that is independent of genetic regulation' (Karr et al. 2012:393). The implication is that the regulation of timing is produced by complex bottom-up interactions rather than by any steering from the embodied pattern or organisation situated in the whole. Given the way the vocabulary of emergence is employed here, one can conclude that the reduction to mechanistic cause and effect is accompanied by a physicalist or even simply materialist ontology.

A final observation about the mechanismic underpinnings of this research into cell function is the assumption that the cell and the genome are fundamentally fractionable. In their own words:

Our approach to developing an integrative whole-cell model was to divide the total functionality of the cell into modules, model each independently of the others, and integrate these submodels together. (Karr et al. 2012:389)

A very real reduction becomes evident, in terms of both the independence of the various functions in the cell and the perceived genetic units that govern them, once it is clear that this computational model is not just a tool for learning (for which it has proven useful) but is also seen as a preliminary blueprint for the design of novel organisms. The genome is not truly divided into discrete packages, nor do the parts of the cell function independently.
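The divide, model and integrate strategy quoted above can be sketched in a few lines. This toy is hypothetical throughout: the module names, state variables and update rules are invented for illustration and do not reproduce Karr et al.'s model or code. What it shows is the structural assumption the paragraph criticises: each module reads a shared state and proposes changes independently of the other modules, and integration amounts to summing those proposals at each time step.

```python
from typing import Callable, Dict, List

State = Dict[str, float]
Module = Callable[[State], State]  # reads the shared state, returns proposed changes


# Hypothetical modules: each looks only at the shared state, never at other modules.
def metabolism(state: State) -> State:
    return {"atp": 0.1 * state["nutrients"], "nutrients": -0.1 * state["nutrients"]}


def transcription(state: State) -> State:
    cost = min(1.0, state["atp"])  # spend up to one unit of ATP per step
    return {"atp": -cost, "rna": cost}


def degradation(state: State) -> State:
    return {"rna": -0.05 * state["rna"]}


def step(state: State, modules: List[Module]) -> State:
    """Integrate one time step by adding up each module's independent proposal."""
    changes = [module(state) for module in modules]  # all modules see the same snapshot
    new_state = dict(state)                          # the input state is left untouched
    for change in changes:
        for key, delta in change.items():
            new_state[key] = new_state.get(key, 0.0) + delta
    return new_state


if __name__ == "__main__":
    state: State = {"nutrients": 100.0, "atp": 0.0, "rna": 0.0}
    for _ in range(3):
        state = step(state, [metabolism, transcription, degradation])
    print(state)
```

Because every module sees the same snapshot, the order in which the modules are listed makes no difference to the result. That order-independence is exactly what fractionability buys in a model, and exactly what the author argues the living cell does not grant.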

Computability is not the only difference between things made by humans and natural organisms, though. There are more reasons to tread carefully in the realm of asserting the human capacity to design organismic or life-like things. The fundamental differences between human design and the designs embodied in biology are numerous:

1. The unicity (which is not superficial) of each thing that is replicated.

2. The law of the loss of information (if we create a machine to make pans, the machine will always require more information to describe it than the pans it makes). Biological design does not suffer from this loss.

3. We remain the operators and directors of the things we make; biology produces systems with inwardness, self-governed operation and adaptation.

4. Integration and wholeness (cohesion) - we make systems that are a clear product of the specialisation of attention: one thing made up of many parts. Biology, on the other hand, makes everything, starting from a single cell. (We interact with DNA as though one piece of DNA deals with one function. Biology, though, proves to employ endless feedback loops to use and re-use parts of its genetic code, which in the end results in everything being connected to everything in such a way that real specialisation in the analysis of the genome leads to a loss of understanding and not a gain.)

5. Biology uses redundancy, is flexible in the means by which it achieves a function (one function can fill in for another) and is capable of self-repair (healing) - the mechanical systems we make do not repair themselves; they need to be repaired. Note that the relevant comparison is not with what a computer does with software or data, but with what those systems do with the matter we have programmed, that is, a car or the computer itself, not just the code or the programming. Comparing only the code would be a reduction of reality similar to the reduction of traits to DNA.

6. Biology gathers and processes its own raw materials, and re-uses or jettisons its own waste products.

7. Diversity seems to be a crucial feature of the functioning of biological (organic) systems, which together form a greater system (e.g. the atmosphere) that allows for stability and life. This increases the complexity in a way that is not scale-thin, that is, not confined to a single level of scale.10 Human creations, by contrast, tend to be made in isolation and are therefore more mono-dimensional, which restricts them to the realm of mechanism.

These differences have led some people in microbiology and the nanotech sector to take a more cautious position than that of the Craig Venter Institute or of Drexler (1986), as set out in his 'Engines of Creation'. As Harper puts it with reference to the ethics of the human capacity to design organismic things: 'For any threat from the nano world to become a danger, it would have to include far more intelligence and flexibility than we can possibly design into it' (Harper 2003:4). As he sees it:

Nature has the ability to design highly energy efficient systems that operate precisely and without waste, fix only that which needs fixing, do only that which needs doing, and no more. We do not, although one day our understanding of nanoscale phenomena may allow us to replicate at least part of what nature accomplishes. (Harper 2003:4)

The lack of a clear understanding of the distinction between the mechanistic nature of our creations and the organic integration of the things we have not designed leads to unwarranted optimism about our ability to design in the realm of the biological. Though we are organisms, what we make is more machine-like and less organism-like than we would like to admit - especially when self-replicating systems look like such an enticing possibility.

Reduction and synthetic life

Few human endeavours have met with as much 'opposition' from the natural order as the attempts to design phenotypic self-replicating systems. Yet much of the recent rhetoric of the nanotech community has taken it for granted that this goal is achievable by humans because the phenomenon occurs in the natural world. The one does not follow from the other, though. The potential error in reasoning is to conclude that because organisms are expressed in matter, they are nothing but matter. Even the attempt to employ emergent behaviour as a way of circumventing our design shortcomings is an extension of the reductionistic11 view that organisms are made of nothing but matter. In this view, the primary barrier to making organism-like things is the limit on our capacity to manipulate all the parts of matter, a limit that nanotech does indeed bring closer to being overcome. Features such as the capacity of organisms to maintain their identity whilst moving through various substrates of matter, even replacing every part of the whole, should warn against such reductions. The reductionist view has led to a consistent trend: nanotechnology has invested its efforts in achieving self-replication (which is akin to life), and the ethics community has made self-replication its primary focus in evaluating the activities of nanotech. This still holds true, as evidenced by the following quotation from Turney and Ewaschuk's (2006) article on 'Self-replication and self-assembly for manufacturing':

It has been argued that a central objective of nanotechnology is to make products inexpensively, and that self-replication is an effective approach to very low-cost manufacturing. The research presented here is intended to be a step towards this vision. (p. 411)

The qualitative and quantitative differences between the kind of design that humans produce and the designs expressed in biological organisms should caution us to take seriously the possibility that we have design limitations which may fundamentally stand in the way of achieving these goals. There is evidence of more raw material than just matter and energy. The immaterial constituents of life and intelligence may form a more formidable barrier than simply achieving the capacity to manipulate all that there is to matter (though even this may be a lot more difficult than many suggest, given the uncertain nature of the material constituents). One of the reasons that the immaterial constituents of biological systems (organisms) have largely been ignored is the predominantly Cartesian model of reality, with its machine analogy, which has shaped the landscape for most of what has been called science in the modern era.

Even in the journal Artificial Life, which is devoted to everything involved in 'our rapidly increasing technological ability to synthesize life-like behaviours from scratch in computers, machines, molecules, and other alternative media', the authors of one article have had to acknowledge that, at present, '[e]lectronic systems, no matter how clever and intelligent they are, cannot yet demonstrate the reliability that biological systems can' (Zhang, Dragffy & Pipe 2006:313).

With regard to all the computer- and electronics-based attempts at simulating life, it should be observed that, given the lack of computability of real life, fabricating something (e.g. an organism) is a vastly different matter from simulating its behaviours. Louie argues that 'the pursuit of the latter represents the ancient tradition that used to be called bio mimesis, the imitation of life' (Louie 2006:6). The idea underlying the attempts to achieve life through simulation models is:

that by serially endowing a machine with more and more of the simulacra of life, we would cross a threshold beyond which the machine would become an organism. The same reasoning is embodied in the artificial intelligence of today, and it is articulated in Turing's Test. This activity is a sophisticated kind of curve-fitting, akin to the assertion that since a given curve can be approximated by a polynomial, it must be a polynomial. (Louie 2006:6)
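Louie's curve-fitting analogy can be made concrete with a small numerical illustration (ours, not Louie's). The sine function is not a polynomial, yet its degree-7 Taylor polynomial about 0 matches it to within a few millionths on the interval from -1 to 1. Close agreement within the fitted range says nothing about what the function is, and the resemblance collapses outside that range. The function name `taylor_sin` is our own.

```python
import math


def taylor_sin(x: float, degree: int = 7) -> float:
    """Taylor polynomial of sin about 0, keeping odd powers up to `degree`."""
    total = 0.0
    for k in range((degree - 1) // 2 + 1):
        n = 2 * k + 1  # only odd powers appear in the series for sin
        total += (-1) ** k * x ** n / math.factorial(n)
    return total


if __name__ == "__main__":
    for x in (0.5, 1.0, 10.0):
        print(f"x={x:5.1f}  sin={math.sin(x): .6f}  poly={taylor_sin(x): .6f}")
```

At x = 0.5 the two values agree to about eight decimal places; at x = 10 the polynomial gives about -1307.46, while sin(10) is about -0.544. The approximation is excellent where it was built to be, and that success is no evidence that the curve is a polynomial.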

Conclusion

Though the distinctive nature of a mechanism is hard to capture, it would seem that the core meaning load of the concept 'mechanism' is to be found in the kind of causality that functions at the physical level, which Dooyeweerd (2002:94) situates in the four pre-biotic aspects of reality: quantitative, spatial, kinematic and physical. Here bottom-up causality is definitive. The relatively limited and linear complexity of these processes seems to mirror our own limited ability to model and design things. The more layered kind of steering that organic causal processes employ, which limits all possible physico-chemical outcomes to a specific functional set, would seem more interconnected than anything we ourselves make.

One of the paradoxes of our time is that both organismic and mechanismic worldviews are prevalent, each appealing to science in its own way. The mechanismic account of causal processes in reality seems more prevalent, though, and defines the way in which we currently invest in research. The risk of absolutising either of these accounts of causal processes is that one gets caught in a reductionism that leads to a loss of understanding. The limitations of the various attempts to synthesise life on the basis of mechanistic computational models, computing from the bottom up and starting with the laws of interaction at the smallest level, should signal the very real possibility that there is more raw material to reality than the computational, mechanistic approach captures. An alternative perspective that allows for top-down causal processes such as the organic, which make use of the bottom-up physical-chemical processes, would seem more likely to avoid the pitfalls of reductionism. Causal processes may be situated in multiple layers of reality.

Acknowledgements

Competing interests

The author declares that he has no financial or personal relationship(s) which may inappropriately influence him in writing this article.

References

Anderson, P.W., 1972, 'More is different', Science 177(4047), 393-396. http://dx.doi.org/10.1126/science.177.4047.393, PMid:17796623

Aquinas, T., 1948, Summa Theologica II, Christian Classics, Grand Rapids.

Axelrod, R., 2006, The evolution of cooperation, rev. edn., Perseus Books Group, New York.

Chu, D. & Ho, W.K., 2006, 'A category theoretical argument against the possibility of artificial life: Robert Rosen's central proof revisited', Artificial Life 12(1), 117-134. http://dx.doi.org/10.1162/106454606775186392, PMid:16393453

Dawkins, R., [1976] 1989, The selfish gene, 2nd edn., Oxford University Press, Oxford.

Dooyeweerd, H., 2002, Encyclopedia of the science of law: Introduction, vol. 1, The Edwin Mellen Press, Lewiston/New York.

Drexler, K.E., 1986, Engines of creation: The coming era of nanotechnology, Anchor Books Doubleday, New York.

Gibson, D.G., Benders, G.A., Andrews-Pfannkoch, C., Denisova, E.A., Baden-Tillson, H., Zaveri, J. et al., 2008, 'Complete chemical synthesis, assembly, and cloning of a Mycoplasma genitalium genome', Science 319, 1215-1220. http://dx.doi.org/10.1126/science.1151721, PMid:18218864

Gibson, D.G., Glass, J.I., Lartigue, C., Noskov, V.N., Chuang, R.Y., Algire, M.A. et al., 2010, 'Creation of a bacterial cell controlled by a chemically synthesized genome', Science 329, 52-56. http://dx.doi.org/10.1126/science.1190719, PMid:20488990

Glas, G., 2002, 'Churchland, Kandel and Dooyeweerd on the reducibility of mind states', Philosophia Reformata 67, 148-172.

Gwinn, T., 2007a, 'Closed to efficient causation', in Panmere, viewed 31 January 2013, from http://www.panmere.com/?p=22

Gwinn, T., 2007b, 'Effective processes, computation, and complexity', in Panmere, viewed 31 January 2013, from http://www.panmere.com/?p=19

Harper, T., 2003, 'What is nanotechnology?', Nanotechnology 14(1), 4.

Heusinkveld, B.J. & Jochemsen, H., 2006, 'Homo excelsior!: Normatieve grenzen bij de maakbaarheid van het menselijk lichaam' [Normative limits to the malleability of the human body], Tijdschrift voor Gezondheidszorg en Ethiek 16(1), 2-6.

Hobbes, T., 1962, Leviathan: Or the matter, forme and power of a commonwealth ecclesiastical and civil, Macmillan Publishing Co., New York.

Karr, J.R., Sanghvi, J.C., Macklin, D.N., Gutschow, M.V., Jacobs, J.M., Bolival, B. et al., 2012, 'A whole-cell computational model predicts phenotype from genotype', Cell 150(2), 389-401. http://dx.doi.org/10.1016/j.cell.2012.05.044, PMid:22817898

Lartigue, C., Glass, J.I., Alperovich, N., Pieper, R., Parmar, P.P., Hutchison, C.A. III et al., 2007, 'Genome transplantation in bacteria: Changing one species to another', Science 317, 632-638. http://dx.doi.org/10.1126/science.1144622, PMid:17600181

Lartigue, C., Vashee, S., Algire, M.A., Chuang, R.Y., Benders, G.A., Ma, L. et al., 2009, 'Creating bacterial strains from genomes that have been cloned and engineered in yeast', Science 325, 1693-1696. http://dx.doi.org/10.1126/science.1173759, PMid:19696314

Louie, A.H., 2005, 'Any material realization of the (M,R)-systems must have non-computable models', Journal of Integrative Neuroscience 4(4), 423-436. http://dx.doi.org/10.1142/S0219635205000926, PMid:16385638

Louie, A.H., 2006, 'A living system must have noncomputable models', in Panmere, viewed 31 January 2013, from http://www.panmere.com/rosen/Louie_noncomp_pre_rev.pdf

Mussolini, B., 1938, The doctrine of fascism, Ardita, Rome.

Rashevsky, N., 1960, Mathematical biophysics, vol. 2, 3rd edn., Dover Publications, New York.

Rosen, R., 1991, Life itself: A comprehensive inquiry into the nature, origin, and fabrication of life, Columbia University Press, New York.

Rosen, R., 1998, Essays on life itself, Columbia University Press, New York.

Salk, J., 1983, Anatomy of reality: Merging of intuition and reason, Convergence series, Columbia University, New York.

Shostak, S., 1998, Death of life: The legacy of molecular biology, Macmillan Press Ltd, London.

Silberschatz, A. & Galvin, P.B., 1998, Operating system concepts, 5th edn., Addison-Wesley, Reading.

Strauss, L., 1953, Natural right and history, University of Chicago Press, Chicago.

Trivers, R.L., 1971, 'The evolution of reciprocal altruism', Quarterly Review of Biology 46, 35-57. http://dx.doi.org/10.1086/406755

Turney, P.D. & Ewaschuk, R., 2006, 'Self-replication and self-assembly for manufacturing', Artificial Life 12(3), 411-433. http://dx.doi.org/10.1162/artl.2006.12.3.411, PMid:16859447

Venter, J.J., 1992, 'From "machine-world" to "God-world" - world pictures and world views', Koers - Bulletin for Christian Scholarship 57(2), 189-214. http://dx.doi.org/10.4102/koers.v57i2.783

Venter, J.J., 1996, 'Mechanistic individualism versus organismic totalitarianism', Koers - Bulletin for Christian Scholarship 23(1), 175-200.

Venter, J.J., 1997, 'Ultimate reality and meaning', Koers - Bulletin for Christian Scholarship 20(1), 41-60.

Weinberg, S., 2001, Facing up: Science and its cultural adversaries, Harvard University Press, Cambridge.

Zhang, X., Dragffy, G. & Pipe, A.G., 2006, 'Embryonics: A path to artificial life?', Artificial Life 12(3), 313-332. http://dx.doi.org/10.1162/artl.2006.12.3.313, PMid:16859443

Correspondence:


Henk Reitsema

Burg Verbrughweg 40, 4024HR, Eck en Wiel, The Netherlands

Received: 06 Feb. 2013

Accepted: 29 Aug. 2013

Published: 28 Nov. 2013

1. 'The ability to reduce everything to simple fundamental laws does not imply the ability to start from those laws and reconstruct the universe. In fact, the more the elementary particle physicists tell us about the nature of the fundamental laws, the less relevance they seem to have to the very real problems of the rest of science, much less society' (Anderson 1972:394).

2. It is a fact that these mechanisms are a product of intention and are therefore limited to the number of causal layers and interactions that we can neurally realise at any given moment. This distinguishes them from the cause-and-effect patterns that belong to organismic interactions, which are not limited in the same way.

3. The term 'abstraction' refers to the cognitive process of isolating and scrutinising a particular aspect (or aspects) of an object under investigation.

4. 'If all insects on earth disappeared, within 50 years all life on Earth would end. If all human beings disappeared from the Earth, within 50 years all forms of life would flourish' (Salk 1983). The implication here is that humans are less in balance with their environment than other organisms because they can employ instrumental reason to design and shape things (mechanisms) that are less well integrated with their environment.

5. Hobbes is of the opinion that the state is an artificial construction (not an organism). It depends on agreement, contract, institution and other manifestations of human decision: 'Man, he held, was a natural machine as distinct from Leviathan, which was an artificial one' (Peters, cited in Hobbes 1962:13).

6. The diversity expressed at the micro level reflects and is interrelated with the diversity at the macro level; that is, the one is intrinsically dependent on the other. Examples include the elliptical course of the Earth around the sun, linked to the solar system's position in the rest of the cosmos; the off-centre angle of the Earth's rotation around its own axis, which together with the elliptical course provides for the seasons; and the varying gravitational pull exerted by the moon and the other planets. This kind of interrelation is also found between the large parts of an individual organism (the organs) and the structures at the micro/nano-level.

7. Rosen (1998:292) attempts to redefine complexity so that the term is reserved for systems that are not susceptible to fractionability or other reductionistic mathematical tools (systems having at least one non-simulable model). He writes: 'This is essentially what I have called complexity; a system (mathematical or physical) is complex to the extent that it does not let itself be exhausted within a given set of (subjective) limitations.'

8. Rashevsky (1960) elaborates on this understanding of biology.

9. Chu and Ho (2006:117-134) attempted a rebuttal of Rosen's argument but misrepresented his definition of 'mechanism', which makes their attempt unsuccessful.

10. Shostak (1998:190): '[T]he biosphere of the earth is … a cybernetic system possessing the properties of self regulation. A cybernetic system "possesses stability for blocking external and internal disturbances when it has sufficient internal diversity". Diversity on earth is provided in part by such things as its rotation around the sun, and around its own axis (creating latitudinal and seasonal change), and by the range of elevation and depth of the surface. But the main diversity of the earth's biosphere is created by living organisms. This internal diversity of the biosphere created by life: "provides a definite guarantee for the preservation of life on our planet".'

11. Weinberg (2001:115) is a well-known advocate of reductionist explanation. Whilst he believes that phenomena such as life and mind come about through emergence, he asserts that '[t]he rules they obey are not independent truths, but follow from scientific principles at a deeper level'. What he means by 'deeper' in this context is at the lower level of the constituent physical parts.