Journal of the Southern African Institute of Mining and Metallurgy

On-line version ISSN 2411-9717

Print version ISSN 2225-6253

J. S. Afr. Inst. Min. Metall. vol.122 n.9 Johannesburg Sep. 2022

http://dx.doi.org/10.17159/2411-9717/1741/2022

PROFESSIONAL TECHNICAL AND SCIENTIFIC PAPERS

Journal impact factors - The good, the bad, and the ugly

D.F. Malan

Department of Mining Engineering, University of Pretoria, South Africa. https://orcid.org/0000-0002-9861-8735

SYNOPSIS

This paper provides an overview of the concepts of citations and journal impact factors, and the implications of these metrics for the Journal of the Southern African Institute of Mining and Metallurgy (JSAIMM). Two key research literature databases publish journal impact factors; namely, Web of Science and Scopus. Different equations are used to calculate journal impact factors and care should be exercised when comparing different journals. The JSAIMM has a low impact factor compared with some of the more prestigious journals. It nevertheless compares well with journals serving other mining sectors, such as the Canadian CIM Journal. The problems associated with journal impact factors are discussed. These include questionable editorial practices, the negative impact of this concept on good research, and the problem of a few highly cited papers distorting the journal impact factor. As a consequence, there is growing resistance to the use of journal impact factors to measure research excellence. The San Francisco Declaration on Research Assessment is a global movement striving for an alternative assessment of research quality. As a recommendation, the Editorial Board of the JSAIMM should adopt a pragmatic approach and not alter good journal policies simply to increase the journal impact factor. The focus should remain on publishing excellent quality papers. Marketing of the Journal, the quality of the published papers, and its open access policy should be used to counter the perception that journals with high impact factors are better options in which to publish good research material.

Keywords: research publishing, citation, journal impact factor.

Introduction

Citations and impact factors, such as the Web of Science Journal Impact Factor (JIF) and Scopus' CiteScore, are prominent metrics in the modern academic environment. Postgraduate students are encouraged to publish in journals with a high impact factor, and academic staff need numerous citations and good h-index scores for promotion. The h-index (or Hirsch index) is an author-level metric that combines productivity (number of publications) with the number of citations of these publications: an author has an index of h if h of his or her papers have each been cited at least h times (Hirsch, 2005). This paper explores citations and journal impact factors and their impact on the Journal of the Southern African Institute of Mining and Metallurgy (JSAIMM¹). Of particular interest is the effect of the JIF on the manuscripts that the Journal attracts for potential publication, now and in future. It appears that universities may, in some cases, encourage staff and students to publish preferentially in journals with 'high' impact factors, and the author has experienced this first-hand. The JSAIMM has a proud history: outstanding papers were published in the past and such papers continue to be published. The effect of a greater emphasis on journal impact factors in academic circles therefore needs to be better understood and countered using an appropriate strategy.
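To make the h-index definition concrete, the following is a minimal Python sketch of the calculation. The function name `h_index` and the citation counts are hypothetical and serve only as an illustration; they are not taken from any real author's record.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h of the papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break  # counts only decrease from here, so h cannot grow further
    return h


# Hypothetical author with seven papers (illustrative numbers only).
print(h_index([25, 8, 5, 3, 3, 1, 0]))  # → 3
```

The highly cited first paper (25 citations) raises the index no more than a paper with 3 citations would. This shows why the h-index rewards a sustained body of cited work rather than a single prominent paper.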

Fundamental to the discussion is the concept of a 'citation'. This is simply a reference in a publication to another author's paper or book. A 'citation index' is a bibliographic index of citations between publications, allowing researchers to establish which later documents cite which earlier documents. Eugene Garfield, founder of the Science Citation Index (SCI) in 1964, described it more eloquently (Garfield, 1979):

'Citations are the formal, explicit linkages between papers that have particular points in common. A citation index is built around these linkages. It lists publications that have been cited and identifies the sources of the citations. Anyone conducting a literature search can find from one to dozens of additional papers on a subject just by knowing one that has been cited.'

Garfield's SCI is now incorporated into the well-known Web of Science as one of its databases. Garfield argued that the connections he captured between indexed papers could be trusted because they were based on the decisions of the researchers themselves (Web of Science, 2020). The value of a citation index is that the literature with the greatest impact in a particular field can be easily identified. It makes searching the literature more efficient and effective. These are clearly noble objectives and make the citation databases valuable research tools.

As an unintended consequence, citations became a widely used measure of the performance of researchers. This was inevitable, because research performance is very difficult to measure, particularly across the many different fields of research found at universities. In the absence of reliable alternative techniques, the number of citations has been adopted as one of the measurement tools. Citation counts and the databases that record them have also evolved into a powerful marketing tool for universities. For example, Clarivate, the current owner of Web of Science, publishes an annual document listing the "Highly Cited Researchers" (see Figure 1). As stated in the 2020 document:

'These highly cited papers rank in the top 1% by citations for a field or fields and publication year in the Web of Science. Of the world's population of scientists and social scientists, Highly Cited Researchers are 1 in 1000.'

Clearly, ambitious researchers will strive to become part of this elite club, and there is subtle pressure to focus on the number of citations and on publishing in high-JIF journals. Figure 2 illustrates the number of highly cited researchers in the top institutions for 2020. Invariably, universities will use this information in marketing material to attract top students and research grants.

This focus on citations and publications in journals with a high JIF in academic circles raises the important question: How should the JSAIMM position itself to remain relevant to the Southern African mining industry and its wide audience, but still attract top academic research papers? This paper gives some of the long and interesting history of the JSAIMM, explores the growing body of criticism against the use of impact factors, and presents some possible solutions.

Calculation of journal impact factors

Citations are used to calculate journal impact factors. Two commonly used metrics are discussed in this section: the JIF from Clarivate and CiteScore from Elsevier. The JIF is occasionally referred to as the 'JCR impact factor'.

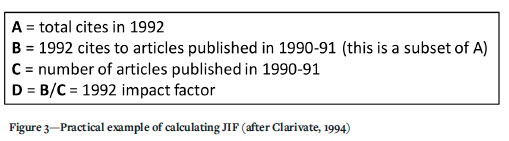

Clarivate was formerly the Intellectual Property and Science division of publisher Thomson Reuters. In 2016, it was spun off into an independent company and is the current administrator of the Web of Science database. The Web of Science method used to calculate JIF is described in Clarivate (1994). A specific example for the year 1992 is reproduced in Figure 3. The JIF for a specific year is based on the number of citations of papers published in the preceding two years in a particular journal.

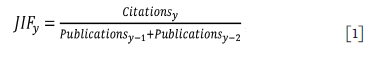

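More generally, the JIF can be calculated using the following equation:

$$\mathrm{JIF}_y = \frac{\mathrm{Citations}_y}{\mathrm{Publications}_{y-1} + \mathrm{Publications}_{y-2}} \qquad [1]$$

where $y$ is a particular year, $\mathrm{Citations}_y$ is the number of citations received in year $y$ by the items published in that journal in the two preceding years, and $\mathrm{Publications}_{y-1}$ and $\mathrm{Publications}_{y-2}$ are the numbers of items published in that journal in each of those two years.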
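The following minimal Python sketch evaluates Equation [1] directly. The function name `jif`, the dictionary inputs, and the publication and citation counts are hypothetical and do not describe any real journal.

```python
def jif(year: int, citations: dict[int, int], publications: dict[int, int]) -> float:
    """Two-year journal impact factor, following Equation [1].

    citations[year]: citations received in `year` by items published in the
                     two preceding years (hypothetical input)
    publications[p]: number of items the journal published in year p
    """
    denominator = publications[year - 1] + publications[year - 2]
    return citations[year] / denominator


# Hypothetical journal: 120 items in 2018, 130 in 2019, cited 200 times in 2020.
print(jif(2020, citations={2020: 200}, publications={2018: 120, 2019: 130}))  # → 0.8
```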

Of significance is that a two-year period is adopted for the papers published, and the year following this period is used to count the citations of those papers. It is therefore essentially a three-year cycle. The important and difficult implication is that, for journals with a low JIF, it will take at least three years to increase the JIF substantially, even if very good papers can be sourced. Top researchers, attempting to meet the university requirements described in the introduction, may not be willing to wait such a long time. The nature of Equation [1] probably induces a feedback loop in which the prestigious journals continue to attract the best papers and, consequently, the most citations; the opposite is true for journals with low impact factors.
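The delay can be illustrated with a toy model, sketched below in Python. Assume, hypothetically, that a journal publishes a constant 100 papers a year, and that every paper published in year p is cited `rate(p)` times in each later year. Assume also that papers published from 2021 onwards are cited four times as often as earlier ones. The functions `modelled_jif` and `rate` and all numbers are illustrative assumptions, not JSAIMM data.

```python
from collections.abc import Callable


def modelled_jif(year: int, rate: Callable[[int], float], papers_per_year: int = 100) -> float:
    """Equation [1] under a toy model: each paper published in year p is cited
    rate(p) times in every later year, and output is constant at papers_per_year."""
    cited_years = (year - 1, year - 2)
    citations = sum(rate(p) * papers_per_year for p in cited_years)
    publications = papers_per_year * len(cited_years)
    return citations / publications


def rate(pub_year: int) -> float:
    # Hypothetical: papers published from 2021 onwards are cited four times as often.
    return 2.0 if pub_year >= 2021 else 0.5


for y in range(2020, 2025):
    print(y, modelled_jif(y, rate))
# 2020 0.5, 2021 0.5, 2022 1.25, 2023 2.0, 2024 2.0
```

In this model, the better papers published in 2021 do not affect the impact factor for 2021 at all. They only partly lift it in 2022, and the full improvement appears only in the 2023 value, which is consistent with the three-year cycle described above.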

The motivation for the adoption of a two-year period is not clear in the literature, but some information is given by Garfield (1999). He stated that the impact factor could just as easily be based on the previous year's articles alone. This would give an even greater weight to rapidly changing fields. A less current impact factor can consider longer periods. One can therefore go beyond two years for the items in the denominator in Equation [1], but then the measure would be less current. It should be noted that a five-year impact factor is also calculated and included in the Journal Citation Reports (JCR). The five-year impact factor is typically a larger number than the two-year impact factor, as illustrated in Table I.
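The effect of the length of the publication window can be sketched by generalising Equation [1] to an n-year window. With `window=2`, the function below reproduces Equation [1], and with `window=5` it gives a five-year impact factor. The function name `impact_factor` and the citation pattern are hypothetical. The pattern assumes that older papers are still being cited, which is what typically makes the five-year value larger.

```python
def impact_factor(year: int, cites_in_year: dict[int, int],
                  publications: dict[int, int], window: int = 2) -> float:
    """Impact factor for `year` over a `window`-year publication window.

    cites_in_year[p]: citations received in `year` by items published in year p
    publications[p]:  number of items published in year p
    window=2 mirrors Equation [1]; window=5 mirrors a five-year impact factor.
    """
    pub_years = range(year - window, year)  # year-window ... year-1
    cites = sum(cites_in_year.get(p, 0) for p in pub_years)
    items = sum(publications[p] for p in pub_years)
    return cites / items


# Hypothetical journal publishing 100 items a year, whose older papers are
# still being cited in 2020 (illustrative numbers only).
publications = {p: 100 for p in range(2015, 2020)}
cites_2020 = {2019: 40, 2018: 80, 2017: 90, 2016: 90, 2015: 80}

print(impact_factor(2020, cites_2020, publications, window=2))  # → 0.6
print(impact_factor(2020, cites_2020, publications, window=5))  # → 0.76
```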

Elsevier also maintains a curated abstract and citation database called Scopus. The impact factor calculated from the Scopus database is called CiteScore. CiteScore is calculated differently from the JIF of Web of Science. The equation for CiteScore before 2020 is given below:

$$\mathrm{CiteScore}_y = \frac{\mathrm{Citations}_y}{\mathrm{Publications}_{y-1} + \mathrm{Publications}_{y-2} + \mathrm{Publications}_{y-3}} \qquad [2]$$

where $y$ is a particular year, $\mathrm{Citations}_y$ is the number of citations received in year $y$ by the items published in that journal in the three preceding years, and $\mathrm{Publications}_{y-1}$, $\mathrm{Publications}_{y-2}$, and $\mathrm{Publications}_{y-3}$ are the numbers of items published in each of those years. As a longer period is used, CiteScore is expected to be a larger number than JIF.

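From 2020 onwards, CiteScore has been calculated differently (Wikipedia, 2021), using a four-year window for both the citations and the publications. The revised formula is given by:

$$\mathrm{CiteScore}_y = \frac{\mathrm{Citations}_{y-3 \ldots y}}{\mathrm{Publications}_{y-3} + \mathrm{Publications}_{y-2} + \mathrm{Publications}_{y-1} + \mathrm{Publications}_{y}} \qquad [3]$$

where $\mathrm{Citations}_{y-3 \ldots y}$ is the number of citations received in years $y-3$ to $y$ by the items published in that journal in the same four years.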
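To show why the two CiteScore definitions can give quite different values for the same journal, the Python sketch below applies Equations [2] and [3] to one hypothetical citation pattern. The per-paper citation rates by age (`CITES_BY_AGE`), the constant output of 100 papers a year, and the function names are all assumptions for illustration. The sketch also ignores Scopus' rules on which document types are counted.

```python
# Hypothetical citations received per paper, by paper age in years
# (age 0 = the year of publication). Illustrative numbers only.
CITES_BY_AGE = {0: 0.5, 1: 2.0, 2: 3.0, 3: 3.0}


def cites_per_paper(age: int) -> float:
    return CITES_BY_AGE.get(age, 0.0)


def citescore_pre2020(year: int, papers: dict[int, int]) -> float:
    """Equation [2]: citations in `year` to items from the three preceding years."""
    pub_years = range(year - 3, year)  # y-3, y-2, y-1
    citations = sum(papers[p] * cites_per_paper(year - p) for p in pub_years)
    return citations / sum(papers[p] for p in pub_years)


def citescore_2020(year: int, papers: dict[int, int]) -> float:
    """Equation [3]: citations in years y-3..y to items published in y-3..y."""
    pub_years = range(year - 3, year + 1)  # y-3 ... y
    citations = sum(
        papers[p] * cites_per_paper(citing_year - p)
        for p in pub_years
        for citing_year in range(p, year + 1)  # only citations up to year y count
    )
    return citations / sum(papers[p] for p in pub_years)


papers = {p: 100 for p in range(2015, 2021)}  # constant hypothetical output
print(round(citescore_pre2020(2020, papers), 2))  # → 2.67
print(round(citescore_2020(2020, papers), 2))     # → 4.25
```

Under these assumptions, the 2020 method yields a noticeably higher value from identical underlying citation behaviour. This is why the values obtained with different equations should not be compared directly.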

The various time periods, databases, and calculations can be confusing, and care should be exercised when comparing JIFs to ensure that similar metrics are being used. Table I gives a small arbitrary sample of journals that publish papers similar to those of the JSAIMM, and illustrates their recent impact factors as sourced by the author during September 2021 from the various journal websites. Note that the CiteScore values are larger than the JIF values. The journals do not specify whether the new CiteScore method is being used, but it is presumed that Equation [3] was used to calculate the values shown. Note that the objective of this table is not to give an extensive list of mining journals and their ranking, but rather to put the current JIF of the JSAIMM into perspective.

From Table I, it can be seen that the CiteScore and JIF of the JSAIMM are low compared with those of the other, more prestigious journals. Note that the data are for 2020 only, and the comparison may look different for earlier years. Also note the exceptionally large JIF of Nature. When considering Equations [1] to [3], an impact factor of less than unity for the JSAIMM implies that some of the published papers received no citations at all within the counting window: if every paper had been cited at least once, the impact factor could not be lower than one. It is encouraging, however, that there seems to be a gradual improvement in the JIF, as shown in Figure 4. A large step change occurred in 2019. It is not clear whether this was a consequence of many more citations or simply caused by the change in the CiteScore calculation method (see Equations [2] and [3]). Note that the impact factor of the JSAIMM is substantially larger than that of comparable Canadian journals, which cater for the local mining industry in that country. A comparison with an Australian mining journal would also be useful, but the author could not find the impact factor of the journal(s) published by the AusIMM on the internet.
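The reasoning about an impact factor below unity can be made explicit with a short counting argument, sketched in Python below. Each cited paper accounts for at least one citation, so at most as many papers as there are citations can have been cited. The function name `min_uncited_papers` and the journal figures are hypothetical.

```python
def min_uncited_papers(citations: int, papers: int) -> int:
    """Smallest possible number of papers with zero citations in the counting window.

    A cited paper accounts for at least one citation, so at most `citations`
    papers can have been cited; any remaining papers must be uncited."""
    return max(0, papers - citations)


# Hypothetical journal: 250 citable items cited 200 times -> impact factor 0.8.
papers, citations = 250, 200
print(citations / papers, min_uncited_papers(citations, papers))  # → 0.8 50
```

In this hypothetical case, at least 50 of the 250 papers (20%) cannot have been cited in the window, however the 200 citations are distributed.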

History of the Journal of the Southern African Institute of Mining and Metallurgy

The JSAIMM has a proud history and the first edition was published more than a century ago. The name of the Journal changed several times as listed below. Listing these previous titles is of value to researchers searching for older papers in libraries because the current SAIMM website only includes papers from January 1969 onwards.

> The title of the first edition was Chemical and Metallurgical Society of South Africa Proceedings, vol. I, 1894 - 1897.

> In July 1904 it changed to Journal of the Chemical, Metallurgical and Mining Society of South Africa, vol. V, July 1904 - June 1905.

> In 1956, it changed to Journal of the South African Institute of Mining and Metallurgy, vol. 57, August 1956 to July 1957.

> In 2008, it changed to Journal of the Southern African Institute of Mining and Metallurgy, in line with the new mission of the Institute to include neighbouring countries.

Some of the historical covers of the printed Journal are illustrated in Figure 5.

One of the attractive features of the Journal is that it provides immediate open access to its content, on the principle that making research freely available to the public supports a greater global exchange of knowledge. This has not always been the case: until recently, the Journal was distributed as a hard copy to members only. From the information available on the Journal website, it seems that the initiative to index the Journal in the Directory of Open Access Journals gained momentum in 2017.

Some outstanding technical papers have been published in the Journal. Only a few key papers in the author's area of expertise, rock engineering, are mentioned below to illustrate that important papers do not necessarily attract a large number of citations. There seems to be a poor correlation between the number of citations of some important papers and their significant impact on new developments in the mining industry; care should therefore be exercised in judging publications solely on the number of citations. The reader is advised to explore the Journal website (https://www.saimm.co.za/publications/journal-papers) and Google Scholar to sample a larger selection of papers and the number of citations these papers have attracted over the years. One of the most highly cited papers was written by Krige (1951). According to Google Scholar, it has already attracted 3414 citations. This paper was written a long time ago, but it highlights the problem associated with Equations [1] to [3] when only a short time-frame is used to count the number of citations. As a second example, Krige (1966) has 491 citations.

In terms of rock engineering, the following papers are noteworthy. Following the Coalbrook mining disaster in January 1960, Salamon and Munro (1967) published their famous power-law formula for coal pillar strength in a paper in the JSAIMM. The South African coal mining industry still uses this formula and the original source was the publication in the JSAIMM. According to Google Scholar, this paper has already been cited 459 times. Some other papers that have a large number of citations are Bieniawski (1974) with 437 citations and Laubscher (1994) with 292 citations.
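For readers unfamiliar with the coal pillar formula, the Python sketch below evaluates the Salamon and Munro (1967) power law in its commonly quoted form: strength = K·w^α/h^β, with K = 7.176 MPa, α = 0.46 and β = 0.66, and the pillar width w and height h in metres. The function name `salamon_munro_strength` and the pillar dimensions are hypothetical. The sketch only illustrates the shape of the formula and is not intended as design guidance.

```python
def salamon_munro_strength(width_m: float, height_m: float) -> float:
    """Coal pillar strength in MPa from the Salamon and Munro (1967) power law,
    strength = K * w**alpha / h**beta, using the commonly quoted constants
    K = 7.176 MPa, alpha = 0.46, beta = 0.66 (w and h in metres)."""
    K, alpha, beta = 7.176, 0.46, 0.66
    return K * width_m**alpha / height_m**beta


# Hypothetical square pillar: 10 m wide, 3 m high.
print(round(salamon_munro_strength(10.0, 3.0), 1))  # → 10.0
```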

In contrast, other important rock engineering papers only received a limited number of citations. The adoption of the elastic concept was a very significant development for the quantitative analysis of stress distribution around excavations in the gold mining industry. Deformation measurements were conducted by Ryder and Officer (1964) at East Rand Proprietary Mines from 1961 to 1963. This paved the way for general acceptance of elastic theory to approximate the behaviour of a rock mass. In spite of the importance of this paper, it has only been cited 37 times.

The displacement discontinuity numerical modelling approach, which Salamon termed the 'Face Element Principle', is described in his early foundational papers (Salamon, 1963, 1964a, 1964b, 1965). He published these papers in the JSAIMM, and they led to the development of numerical programs such as MINSIM and TEXAN, which are still used in the gold and platinum mining industries to design layouts. Despite the significance of these papers and their major influence on mine design, they have attracted only a few citations: for example, the 1964a paper has only 60 citations and the 1965 paper has 49 citations.

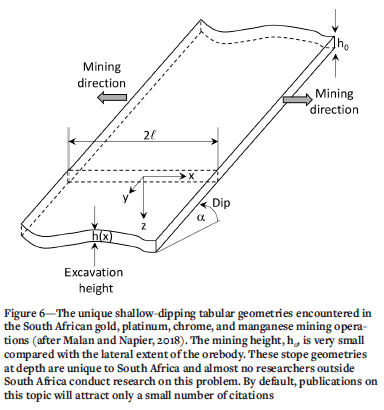

The important principle illustrated is that groundbreaking publications may attract only a few citations, but may have a major impact on the mining industry in Southern Africa. In these cases, the JIF is a flawed measure of research excellence. One possible reason for this is that some mining problems are unique to a particular country or region (Figure 6). Owing to the unique tabular geometry of the gold reef deposits on the Witwatersrand, South Africa (Malan and Napier, 2018), research needs to be conducted to understand the behaviour of the rock mass around deep tabular excavations. As correctly pointed out by the Leon Commission (Leon, 1995): 'Furthermore, as no other region of economic significance has similar geometry, no mining industry outside South Africa pursues the solution to this problem. The solution must therefore be found in South Africa.' As the researchers working on this problem in South Africa form a small group, and no other mining countries encounter these problems, such research publications can attract only a limited number of citations. Thus, for the reasons explained above, the JIF is not necessarily a good measure of the impact of many papers published in the JSAIMM, although it may be applicable to papers in other journals elsewhere in the world.

Problems associated with the use of journal impact factors

The use of JIFs is criticised by some as having a negative impact on science in general. Müller and de Rijcke (2017) suggested that 'For many researchers the only research questions and projects that appear viable are those that can meet the demand of scoring well in terms of metric performance indicators - and chiefly the journal impact factor.' This relates to the discussion above of the unique mining geometries encountered in South Africa. Research is desperately needed in this area, but the papers will attract only a few citations owing to the small number of researchers interested in this problem. This may result in important areas of research not receiving the required attention. Larivière and Sugimoto (2019) noted that the process of scientific publication is slowed down because authors attempt to publish in the journal with the highest impact factor. In many cases, this may not be the most appropriate journal for the topic. For this reason, South African research on the problems experienced in deep tabular gold mine excavations needs to be published in the JSAIMM.

A further problem is that impact factors may not be suitable for comparing journals across disciplines. One reason is that the percentage of a paper's total citations that occur in the first two years after publication varies greatly between disciplines. For example, this percentage is 1-3% in the mathematical and physical sciences and 5-8% in the biological sciences (van Nierop, 2009).
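The Python sketch below shows how much of a paper's citation history a two-year window can miss. It computes the share of lifetime citations that fall in the first two years after publication for two hypothetical ten-year citation histories. In the first, citations keep accumulating. In the second, citations peak early. The function name `early_citation_share` and both histories are illustrative assumptions, not measured data.

```python
def early_citation_share(cites_per_year: list[int], years: int = 2) -> float:
    """Percentage of a paper's lifetime citations received in its first `years`
    years after publication (cites_per_year[0] = first year after publication)."""
    total = sum(cites_per_year)
    return 100.0 * sum(cites_per_year[:years]) / total if total else 0.0


# Hypothetical ten-year citation histories (illustrative only).
slow_field = [0, 1, 2, 3, 4, 5, 5, 5, 5, 5]   # citations keep accumulating
fast_field = [5, 10, 8, 4, 2, 1, 0, 0, 0, 0]  # citations peak early

print(round(early_citation_share(slow_field), 1))  # → 2.9
print(round(early_citation_share(fast_field), 1))  # → 50.0
```

A journal in a field resembling the first history would have most of its eventual citations excluded from a two-year impact factor. The resulting low value reflects the field's citation timing rather than the quality of the papers.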

More insidious are questionable editorial policies that may affect the impact factor. Some journals adopt dubious practices to increase their impact factor. Coercive citation is a practice in which an editor forces an author to add unrelated citations to a paper to inflate the journal's impact factor (McLeod, 2020). The author recently experienced this first-hand with a so-called 'prestigious journal'. Journal editors may also attempt to limit the number of 'citable items' by declining articles that are unlikely to be cited, or by altering articles so that they are not classified as citable items by the rating agencies.
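The arithmetic behind both practices can be sketched in a few lines of Python. The sketch assumes the asymmetry commonly described for the Clarivate JIF. Under that assumption, the numerator counts citations to every item in the journal, while the denominator counts only items classified as 'citable'. The function name `aggregate_jif` and all counts are hypothetical.

```python
def aggregate_jif(citations_to_all_items: int, citable_items: int) -> float:
    """Equation [1] in aggregate form. Assumes, as is commonly described for the
    Clarivate JIF, that the numerator counts citations to every item while the
    denominator counts only the items classified as 'citable'."""
    return citations_to_all_items / citable_items


baseline = aggregate_jif(200, 250)      # hypothetical journal: 0.8
reclassified = aggregate_jif(200, 200)  # 50 items made non-citable; their citations still count
coerced = aggregate_jif(200 + 30, 250)  # 30 coerced citations added to the numerator

print(baseline, reclassified, coerced)  # → 0.8 1.0 0.92
```

Neither change reflects any improvement in the research the journal publishes, yet both raise the reported figure noticeably in this example.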

The concept of the JIF was originally developed by Garfield as a metric to assist libraries to make decisions about which journals were worth indexing. JIF has now become a measure of quality, however, and is widely used for the evaluation of research. It therefore has a major effect on research practices and behaviours. Curry (2018) stated: 'Most agree that yoking career rewards to JIFs is distorting science. Yet the practice seems impossible to root out. In China, for example, many universities pay impact-factor-related bonuses, inspired by unwritten norms of the West.'

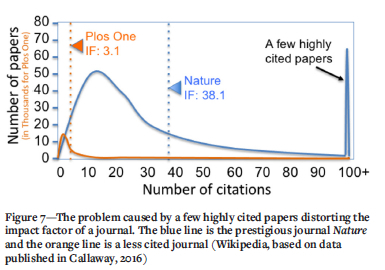

Statistically, there is also a problem with JIFs because a high impact factor may be derived from a few highly cited papers. Most papers in such a journal do not receive many citations, but they are still regarded as influential because a few individual papers raise the journal's overall impact factor. This is illustrated in Figure 7, which compares the impact factors of Nature and PLoS ONE for papers published in 2013 to 2014. Note that the impact factor of Nature is 38.1, but most papers received only between 10 and 20 citations. The few highly cited papers distort the 'average' impact factor.
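The distortion can be reproduced with Python's standard `statistics` module. In the sketch below, a hypothetical journal has 20 papers. Most of them receive 10 to 20 citations, and two are cited heavily. The mean plays the role of the impact factor, and the median describes the typical paper. The citation counts are illustrative only and are not taken from Figure 7.

```python
import statistics

# Hypothetical citation counts for 20 papers in one journal: most receive
# 10-20 citations, two are cited heavily (illustrative numbers only).
citations = [12, 15, 10, 18, 14, 11, 16, 13, 17, 19,
             12, 14, 15, 10, 20, 13, 16, 11, 300, 150]

mean = statistics.mean(citations)      # analogous to an impact factor
median = statistics.median(citations)  # the 'typical' paper
above_mean = sum(c > mean for c in citations)

print(mean, median, above_mean)  # → 35.3 14.5 2
```

Only 2 of the 20 papers reach the 'average' of 35.3, while the median paper has 14.5 citations. This is the skewed pattern that makes a journal-level average a poor guide to any individual paper.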

As a final word on the negative aspects of impact factors, Garfield (1999) recognized that his system was being abused and stated:

'I first mentioned the idea of an impact factor in 1955. At that time it did not occur to me that it would one day become the subject of widespread controversy. Like nuclear energy, the impact factor has become a mixed blessing. I expected that it would be used constructively while recognizing that in the wrong hands it might be abused.'

The future of journal impact factors

From the literature studied for this paper, it appears that there is growing resistance to the use of JIFs. Curry (2018) described the role of the San Francisco Declaration on Research Assessment (DORA), which was established by journal editors and publishers at a meeting of the American Society for Cell Biology (ASCB) in December 2012. DORA strives for a system in which the content of a research paper is more important than the JIF. Curry (2018) mentioned that the number of university signatories from the United Kingdom had tripled within a period of two years. He stated: 'Impact factors were never meant to be a metric for individual papers, let alone individual people. They're an average of the skewed distribution of citations accumulated by papers in a given journal over two years. Not only do these averages hide huge variations between papers in the same journal, but citations are imperfect measures of quality and influence.' This is illustrated in this paper with regard to the rock engineering papers discussed above: some of the most influential papers attracted only a few citations.

Based on the above discussion, it is recommended that the Editorial Board of the JSAIMM adopt a pragmatic approach and not modify good journal policies simply to increase the impact factor. Additional marketing and wider circulation of the Journal to industry, as well as academia, should be undertaken. The following points should be emphasized.

> The JSAIMM is open access. Many of the prestigious high-impact-factor journals charge exorbitant fees to publish papers open access. If the open access option is not selected, the papers are typically available only to subscribers for a period of, say, two years or if a fee is paid.

> Excellent quality papers are published in the JSAIMM, and the review process is thorough and fair. Notably, a double-blind system of peer review has recently been introduced to further improve impartiality and reduce bias. Such a system is seldom used by more prestigious journals, and its absence can lead to a preference for publishing the work of a reviewer's colleagues and compatriots.

> The JSAIMM has wide distribution in the Southern African mining industry and researchers will reach the appropriate target audience.

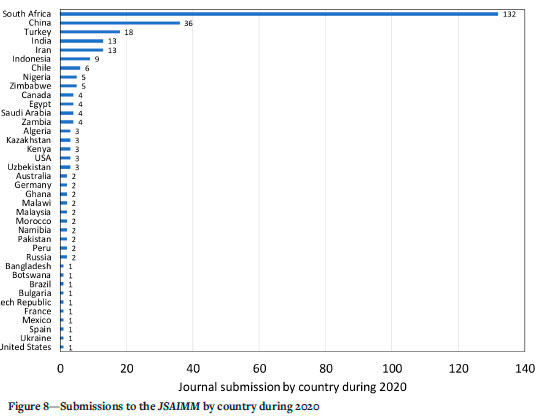

> There is significant interest in the JSAIMM by international researchers who want to publish their work in the journal (see Figure 8).

It is expected that marketing of these aspects will have a positive effect on the JIF.

The Editorial Board should also strive towards a good turnaround time for paper submissions. Targets should be set in this regard (Figure 9 can, for example, be used as a guideline) and ongoing monitoring of actual performance should be conducted.

Long wait times are caused by several factors, such as the poor language quality of submitted papers, the difficulty of finding suitable referees, and a small administrative office that must manage a large volume of submissions. The problem of long wait times is, however, not unique to the JSAIMM. Vosshall (2012) wrote: 'In the past three years, if anything, it's gotten substantially worse. It takes forever to get the work out, regardless of the journal. It just takes far too long.' Powell (2016) stated that, for Nature, the median review time had increased from 85 days to more than 150 days over the past decade, and at PLoS ONE it increased from 37 to 125 days during the same period. Globally, researchers are becoming increasingly frustrated by how long it takes to publish their papers. In defence of the journals, it should nevertheless be added that the work submitted nowadays is frequently of an unacceptable standard. The large number of poor-quality papers clogs the system, and reviewing and rejecting these papers consumes valuable resources.

An interesting aspect is that the above studies found that journals with the lowest and highest impact factors have the longest wait times (Figure 9). Powell (2016) nevertheless added the important comment that researchers are also to blame for the long publication times as they 'indulged in the all-too-familiar practice of journal shopping'. This is the practice of submitting first to the most prestigious journals with the highest impact factor and then working their way down the hierarchy if the papers are rejected. A further factor contributing to the wait time is that the volume of papers has substantially increased. For PLoS ONE, the volume of papers increased from 200 in 2006 to 30 000 per year in 2016, and it takes time to find and assign appropriate editors and reviewers. Contributing to this problem is that a large number of poor-quality papers are being submitted. This has discouraged and demoralized competent reviewers, who then become less willing to offer their services in this regard.

Conclusions

This paper provides an overview of the concepts of citations and journal impact factors, and the implications of these metrics for the Journal of the Southern African Institute of Mining and Metallurgy (JSAIMM). Two key research literature databases publish journal impact factors; namely, Web of Science and Scopus. Different equations are used to calculate journal impact factors and care should be exercised when comparing journals evaluated using different equations.

The JSAIMM has a low impact factor compared with some of the more prestigious journals. It nevertheless compares well with journals serving other mining industries, such as the Canadian CIM Journal. The problems associated with journal impact factors were discussed. These include questionable editorial practices, their negative impact on good research, and the problem of a few highly cited papers distorting the impact factor. As a result, there is growing resistance to the use of journal impact factors to measure research excellence. The San Francisco Declaration on Research Assessment is a global movement striving for an alternative assessment of research quality. As a recommendation, the Editorial Board of the JSAIMM should adopt a pragmatic approach and not alter good journal policies simply to increase the journal impact factor. The focus should remain on publishing excellent quality papers.

Marketing of the JSAIMM, the quality of the published papers, and its open access policy should be used to counter the perception that journals with high impact factors are better options in which to publish good research material. The Editorial Board should also strive for a good turnaround time on manuscript submissions.

Acknowledgements

Kelly Matthee from the SAIMM is thanked for sourcing historical publications from the JSAIMM. The reviewers are thanked for their good suggestions to improve the quality of the paper.

References

Bieniawski, Z.T. 1974. Estimating the strength of rock materials. Journal of the South African Institute of Mining and Metallurgy, vol. 74, no. 8. pp. 312-329.

Callaway, E. 2016. Beat it, impact factor! Publishing elite turns against controversial metric. Nature, vol. 535, no. 7611. pp. 210-211.

Clarivate. 1994. The Clarivate impact factor. https://clarivate.com/essays/impact-factor. First published in the Current Contents print editions, June 20, 1994, when Clarivate Analytics was known as The Institute for Scientific Information (ISI).

Curry, S. 2018. Let's move beyond the rhetoric: It's time to change how we judge research. Nature, vol. 554, no. 7691. p. 147.

Garfield, E. 1979. Citation Indexing: Its Theory and Application in Science, Technology, and Humanities. Wiley, New York.

Garfield, E. 1999. Journal impact factor: A brief review. Canadian Medical Association Journal, vol. 161. pp. 979-980.

Hirsch, J.E. 2005. An index to quantify an individual's scientific research output. Proceedings of the National Academy of Sciences of the United States of America, vol. 102, no. 46. pp. 16569-16572.

Krige, D.G. 1951. A statistical approach to some basic mine valuation problems on the Witwatersrand. Journal of the Chemical, Metallurgical and Mining Society of South Africa, vol. 52, no. 6. pp. 119-139.

Krige, D.G. 1966. Two-dimensional weighted moving average trend surfaces for ore-evaluation. Journal of the South African Institute of Mining and Metallurgy, vol. 94. pp. 279-293.

Larivière, V. and Sugimoto, C.R. 2019. The journal impact factor: A brief history, critique, and discussion of adverse effects. Springer Handbook of Science and Technology Indicators. Glänzel, W., Moed, H.F., Schmoch, U., and Thelwall, M. (eds). Springer, Cham. pp. 3-24.

Laubscher, D.H. 1994. Cave mining: The state of the art. Journal of the South African Institute of Mining and Metallurgy, vol. 94. pp. 279-293.

Leon, R.N. 1995. Report of the Commission of Inquiry into Health and Safety in the Mining Industry, Volume 1. Department of Mineral and Energy Affairs, Braamfontein, Johannesburg.

Malan, D.F. and Napier, J.A.L. 2018. Rockburst support in shallow-dipping tabular stopes at great depth. International Journal of Rock Mechanics and Mining Sciences, vol. 112. pp. 302-312.

McLeod, S. 2020. Should authors cite sources suggested by peer reviewers? Six antidotes for handling potentially coercive reviewer citation suggestions. Learned Publishing, vol. 34, no. 2. pp. 282-286.

Müller, R. and de Rijcke, S. 2017. Thinking with indicators. Exploring the epistemic impacts of academic performance indicators in the life sciences. Research Evaluation, vol. 26, no. 3. pp. 157-168.

Powell, K. 2016. Does it take too long to publish research? Nature, vol. 530. pp. 148-151. https://doi.org/10.1038/530148a

Salamon, M.D.G. 1963. Elastic analysis of displacements and stresses induced by the mining of seam or reef deposits - Part I: Fundamental principles and basic solutions as derived from idealised models. Journal of the South African Institute of Mining and Metallurgy, vol. 63. pp. 128-149.

Salamon, M.D.G. 1964a. Elastic analysis of displacements and stresses induced by the mining of seam or reef deposits - Part II: Practical methods of determining displacement, strain and stress components from a given mining geometry. Journal of the South African Institute of Mining and Metallurgy, vol. 64. pp. 197-218.

Salamon, M.D.G. 1964b. Elastic analysis of displacements and stresses induced by the mining of seam or reef deposits - Part III: An application of the elastic theory: Protection of surface installations by underground pillars. Journal of the South African Institute of Mining and Metallurgy, vol. 64. pp. 468-500.

Salamon, M.D.G. 1965. Elastic analysis of displacements and stresses induced by the mining of seam or reef deposits - Part IV: Inclined reef. Journal of the South African Institute of Mining and Metallurgy, vol. 65. pp. 319-338.

Salamon, M.D.G. and Munro, A.H. 1967. A study of the strength of coal pillars. Journal of the South African Institute of Mining and Metallurgy, vol. 68. pp. 56-67.

Van Nierop, E. 2009. Why do statistics journals have low impact factors? Statistica Neerlandica, vol. 63, no. 1. pp. 52-62.

Vosshall, L.B. 2012. The glacial pace of scientific publishing: Why it hurts everyone and what we can do to fix it. FASEB Journal, vol. 26. pp. 3589-3593.

Web of Science. 2020. Highly cited researchers 2020. Clarivate. https://recognition.webofscience.com/awards/highly-cited/2020/

Wikipedia. 2020. CiteScore. https://en.wikipedia.org/w/index.php?title=CiteScore&oldid=1025821433

Correspondence:

D.F. Malan

Email: francois.malan@up.ac.za

Received: 18 Sep. 2021

Revised: 13 May 2022

Accepted: 2 Jun. 2022

Published: September 2022

¹ The abbreviation JSAIMM is used throughout this paper, although according to SciELO (The Scientific Electronic Library Online, South Africa), the correct abbreviation of the journal title is J. South. Afr. Inst. Min. Metall.