
South African Journal of Communication Disorders

Online version ISSN 2225-4765

Print version ISSN 0379-8046

S. Afr. J. Commun. Disord. vol.69 no.2 Johannesburg 2022

http://dx.doi.org/10.4102/sajcd.v69i2.912

ORIGINAL RESEARCH

Application of machine learning approaches to analyse student success for contact learning and emergency remote teaching and learning during the COVID-19 era in speech-language pathology and audiology

Milka C. Madahana (I); Katijah Khoza-Shangase (II); Nomfundo Moroe (II); Otis Nyandoro (I); John Ekoru (I)

(I) School of Electrical and Information Engineering, Faculty of Engineering and the Built Environment, University of the Witwatersrand, Johannesburg, South Africa

(II) Department of Audiology, School of Human and Community Development, University of the Witwatersrand, Johannesburg, South Africa

ABSTRACT

BACKGROUND: The onset of the COVID-19 pandemic across the globe resulted in countries taking several measures to curb the spread of the disease. One of these measures was national lockdown, which entailed restricting movement both locally and internationally. To ensure continuation of the academic year, several institutions of higher learning in South Africa, where face-to-face or contact teaching and learning had previously been the norm, launched emergency remote teaching and learning (ERTL). The impact of this change on speech-language pathology and audiology (SLPA) students is not known, and this gap motivated the present study.

OBJECTIVES: This study aimed to evaluate the impact of the COVID-19 pandemic on SLPA undergraduate students during face-to-face teaching and learning, ERTL and the transition towards hybrid teaching and learning.

METHOD: Using course marks for SLPA undergraduate students, K-means clustering and random forest classification were used to analyse students' performance and to detect patterns linking performance to the attributes that influence it.

RESULTS: Analysis of the data set indicated that funding is one of the main attributes contributing significantly to students' performance; it became one of the priority features in 2020 and 2021 during COVID-19.

CONCLUSION: The clusters of students obtained during the analysis, together with their attributes, can be used to identify students at risk of not completing their studies in the minimum required time, so that early interventions can be provided to them.

Keywords: artificial intelligence; audiology; hybrid learning; contact; COVID-19; education; machine learning; emergency remote teaching; speech-language pathology; teaching; blended learning.

Introduction

The coronavirus disease 2019 (COVID-19) pandemic has produced a public health crisis worldwide that has severely affected the economic, health, academic and social fabric of the world community (Khoza-Shangase, Moroe, & Neille, 2021; Maital & Barzani, 2020; McKibbin & Fernando, 2020; Pawar, 2020; Soni, 2020). The spread of COVID-19 has been a catastrophe, and most governments in the world instituted measures to minimise the risks and spread of COVID-19 (Blankenberger & Williams, 2020). Measures taken to curb the spread of the disease included, but were not limited to, wearing face masks, practising social distancing, isolating individuals infected with or exposed to COVID-19 (WHO, 2021) and imposing varying levels of lockdown under which movement was restricted. Most countries, including South Africa, restricted gatherings and movement and implemented curfews (South African Government, 2021). One of the sectors significantly affected by the restrictions on movement was education, and COVID-19 significantly reshaped the way education is conducted globally (Dhawan, 2020). In South Africa, from the time of colonisation to the decolonisation period, most institutions of higher learning have depended on face-to-face teaching and learning (Mgqwashu, 2017; Mpungose, 2020). Face-to-face teaching and learning usually occurs in the presence of an instructor or lecturer who transfers knowledge to students in a designated, demarcated classroom, using chalkboards, textbooks or other traditional resources (Mpungose, 2020). Face-to-face learning is also marked by real-time contact with resources at a specific contact time (Mpungose, 2020; Tularam, 2018). During political unrest, student protests, the outbreak of a pandemic or any other emergency, however, these demarcated physical classrooms are not available.
At the early stages of the COVID-19 pandemic, most institutions of higher learning in South Africa were completely closed as part of the national regulations forming the National State of Emergency. To ensure that students completed the academic year successfully, most institutions transitioned to emergency remote teaching and learning (ERTL), which may be defined as a temporary shift of instructional delivery to an alternative delivery mode because of crisis circumstances. Emergency remote teaching and learning involves the use of various remote teaching strategies for education that would, under normal circumstances, be delivered face-to-face or in blended or hybrid mode (Mpungose, 2020). Emergency remote teaching and learning combines synchronous teaching, using video-conferencing tools such as Zoom, Teams and Google Meet (Bond, Bedenlier, Marín, & Händel, 2021), with, in some instances, asynchronous teaching, where students are provided with course material and lecture recordings and are given the flexibility to study at their own pace.

Some of the approaches used in ERTL were virtual and digital teaching and learning strategies (Mhlanga & Moloi, 2020). Emergency remote teaching and learning has played a major role in ensuring that academic institutions in South Africa continue to deliver on their mandates. However, given South Africa's history of colonialism and apartheid, which was characterised by racial segregation and injustice, the transition to ERTL came with its own challenges (Besser, Flett, & Zeigler-Hill, 2020; Khoza-Shangase et al., 2021; McQuirter, 2020; Neuwirth, Jović, & Mukherji, 2021; Rajab, Gazal, & Alkattan, 2020). Thaba-Nkadimene (2020) highlighted that changing from face-to-face learning to ERTL using digital platforms, and now transitioning towards blended or hybrid teaching, is a change that cannot easily be carried out within a short period. This change, which has occurred over the last 24 months, has required institutions of higher learning to invest intensively in digital technologies and in digital training for academic staff (García-Morales, Garrido-Moreno, & Martín-Rojas, 2021). COVID-19 highlighted and exacerbated existing social and economic inequalities in South Africa: at the beginning of ERTL, some students did not have the resources required for learning; for example, they lacked devices to access the digital platforms used as part of the ERTL strategy, and they lacked connectivity. The Department of Higher Education and Training therefore collaborated with universities to provide laptops for students (Francis, Valodia, & Webster, 2020; Mpungose, 2020). Some students faced exorbitant Internet costs and interrupted connectivity, especially those residing in remote areas (Azionya & Nhedzi, 2021).

To overcome some of the challenges caused by lack of access and Internet interruptions, universities partnered with Internet providers as a mitigating strategy, and some universities made a fixed amount of data available to students each month (ITWeb, 2020). South African universities also negotiated for zero rating of educational sites, a solution that had previously been used during the student protests of 2015. Under zero rating, mobile network providers and some Internet service providers do not bill their clients for accessing specific sites, in this context educational sites, so that academic sites can be accessed without depleting the student's data bundle. However, in current educational practice, pedagogically relevant content is no longer confined to an institutional website or even to a limited number of websites: in ERTL, students were sometimes required to use audio and video communication tools such as Microsoft Teams, Zoom and Google Classroom. Zero rating can therefore be perceived as having been interpreted too narrowly, and there is a large disconnect between the sites that are zero rated and the many sites that are pedagogically relevant to ERTL (Mhlanga, 2020; Tenet, 2021).

Another challenge faced by students in navigating ERTL was frequent power interruptions, which also affect Internet connectivity (Azionya & Nhedzi, 2021; Laher, Bain, Bemath, De Andrade, & Hassem, 2021; Oyedotun, 2020). Power interruption, termed load-shedding, is a well-documented and ongoing challenge in South Africa that has yet to be resolved. To accommodate this unstable power supply, students required flexibility in accessing academic material online and in participating in online assessments (Gedala & Connie, 2021; Motsepe Foundation, 2021).

The assessment of students during ERTL was the most challenging aspect, both locally and internationally. The assessment strategies that emerged either reinforced or disrupted existing inequalities, in a context where access to higher education had been widened to previously marginalised individuals (Padayachee & Matimolane, 2021). Padayachee and Matimolane (2021) argue that the challenges experienced during ERTL originate from an ingrained view of assessment as a measure of student competence and of the standard of teaching. The transition to ERTL challenged how assessments had previously been viewed at institutions of higher learning, and re-evaluating teaching and redesigning assessment approaches seems to have triggered a shift in assessment ideology (Padayachee & Matimolane, 2021). Although these strategies assisted students in coping with ERTL, both course instructors and students continued to face other challenges in completing the academic year (Padayachee & Matimolane, 2021).

In most institutions of higher learning in South Africa, the Bachelor of Speech-Language Pathology and Bachelor of Audiology programmes are designed to be completed within 4 years. The practical or clinical courses may be held at the university's speech and hearing clinics and at speech and hearing clinics in hospitals, schools, industries and care facilities, within the broader urban and rural context. South African training institutions for Speech-Language and Hearing (SLH) have predominantly applied the traditional, orthodox approach of in-person contact teaching and learning, and teletraining and telepractice have not been employed extensively (Khoza-Shangase et al., 2021). The COVID-19 outbreak therefore highlighted the need for a paradigm shift in how clinical training for SLH in South Africa is viewed and conducted (Chin et al., 2021; Khoza-Shangase et al., 2021; Schmutz et al., 2021).
In pursuit of solutions during ERTL, application of simulations was presented as one of the ways students can conduct their clinical training (Tabatabai, 2020). In a scoping review by Nagdee, Sebothoma, Madahana, Khoza-Shangase and Moroe (2022) on what has been documented about simulation as a mode of clinical training in health care professions, findings indicate the value and usefulness of simulation but only within a hybrid model of training where it is used in conjunction with traditional approaches to training.

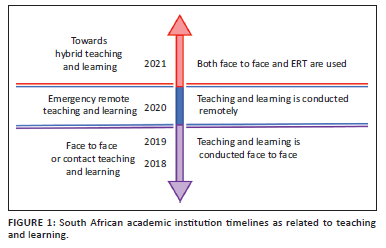

Other challenges observed during ERTL included a lack of effective peer-to-peer interaction, which is instrumental in allowing students to learn from each other (Chandra & Palvia, 2021). Some students lacked home environments conducive to effective ERTL (Gumede & Badriparsad, 2022). These challenges were not restricted to students, as staff members at institutions of higher learning also faced various challenges. Lack of e-tech facilities was one of the setbacks (Mpungose, 2020). Lecturers who were used only to traditional ways of teaching were frustrated with online interactive learning technologies, either because they lacked digital skills or because they were now expected to learn new platforms in a very short period whilst continuing to deliver quality lectures (Engler, 2020). Regardless of all these challenges faced by students and academic staff, there was still an expectation that students should excel in their academic work and that the academic year be successfully completed. With the easing of lockdown restrictions, most institutions of higher learning in South Africa are transitioning to hybrid teaching and learning. Hybrid teaching and learning in the current context of most South African universities may be defined as the thoughtful integration of face-to-face classroom learning experiences, ERTL and online learning experiences (Hrastinski, 2019). Figure 1 is a visual representation of the modes of teaching and learning, from face-to-face learning through ERTL to the current transition to hybrid learning; the cohort of students in Figure 1 changes each year.

Amidst the COVID-19 pandemic, academic success has remained one of the primary strategic objectives for most institutions of higher learning (Zeineddine, Braendle, & Farah, 2021). Operational costs are increasing, government subsidies have been cut, companies that sponsor students and general donors have terminated their sponsorship contracts, and guardians have lost income because of COVID-19 (Van Schalkwyk, 2021). Academic institutions therefore need to pay more attention to understanding the factors that influence students' performance and to addressing these in their planning and interventions. Such interventions would improve throughput, helping institutions meet their mandate. Student retention has also been a concern during the COVID-19 pandemic; enrolment and retention of enrolled students have thus become a top priority for administrators of institutions of higher learning (Zeineddine et al., 2021). High student dropout rates generally result in lower graduation rates and financial loss for both students and institutions. Institutions with high dropout rates may also be ranked lower, and their reputation amongst their peers may suffer (Cardona, Cudney, Hoerl, & Snyder, 2020). One of the main indicators and predictors of students' success in academic institutions is the course mark that a student obtains at the end of a course.

In South Africa, most institutions of higher learning use both summative and formative assessments to arrive at course marks for students. The assessments are usually prepared by the lecturer and are moderated by both internal and external stakeholders. The examinations are usually administered by the university, and invigilators are selected by the university. The final course mark is made up of an accumulation of scores over the course period through various tests, practicals and the examination. The final mark is normally presented as a percentage mark, and in many institutions, it is released to the student by the faculty on behalf of the university. A mark of 50% and above in a course would generally be viewed as having passed the course and a mark below that would indicate failure. Course marks are therefore one of the indicators of whether a student has completed a course successfully or not. Institutions of higher learning currently have huge data sets collected over years that can be used to detect and understand unknown patterns and trends and other hidden variable relationships, using data mining techniques and tools.
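The mark arithmetic described above can be sketched in Python. This is a minimal illustration only: the component names and weights (`tests`, `practicals` and `exam` at 30%, 20% and 50%) are hypothetical, because the weighting of SP&A assessments is not reported here; only the 50% pass threshold comes from the text.

```python
# Hypothetical weights: the weighting of tests, practicals and the
# examination in SP&A courses is not reported in the article.
WEIGHTS = {"tests": 0.3, "practicals": 0.2, "exam": 0.5}
PASS_MARK = 50.0  # a final mark of 50% or above is generally a pass


def final_mark(scores, weights=WEIGHTS):
    """Combine component percentages into a weighted final percentage."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return round(sum(weights[name] * scores[name] for name in weights), 1)


def passed(mark, pass_mark=PASS_MARK):
    """True when the final mark meets the pass threshold."""
    return mark >= pass_mark


mark = final_mark({"tests": 62.0, "practicals": 70.0, "exam": 45.0})
print(mark, passed(mark))  # 55.1 True
```

Here a student fails the examination (45%) but still passes the course, because the accumulated test and practical scores lift the weighted final mark above 50%.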

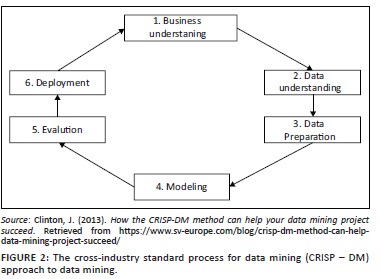

Data mining has previously been applied in various fields, for instance, health care, business and education. Considering its success in predicting critical academic outcomes such as performance, retention, success, satisfaction and achievement, data mining may also be applied to assess the impact of COVID-19 on students' performance (Alyahyan & Düştegör, 2020). Data mining and the application of machine learning (ML) models to classify and analyse data typically follow a data science approach, namely the Cross Industry Standard Process for Data Mining (CRISP-DM), as shown in Figure 2.

The first step in CRISP-DM is business understanding, which refers to clearly understanding the problem and converting it into a well-defined analytics problem. The problem in this research is to assess the effect of the COVID-19 pandemic on speech-language pathology and audiology (SLPA) undergraduate students under three predefined modes of learning, namely face-to-face teaching and learning, ERTL and the transition to hybrid teaching and learning. The second stage entails understanding the data, extracted or collected from the data warehouse, that are essential to solving the identified problem. Literature shows that prior academic achievement, student demographics, e-learning activities, and psychological attributes and environment are the most commonly reported features or attributes that determine students' success (Alyahyan & Düştegör, 2020). It is reported that 69% of the reviewed articles used prior academic achievement and student demographics as attributes. To benchmark this study, demographic, financial and academic achievement data were extracted. The third stage in CRISP-DM entails data preparation, where the decision was made about which data to use in evaluating the impact of COVID-19 on students' performance. Existing literature (Alyahyan & Düştegör, 2020) was used to determine the criteria for the attributes (columns) and records (rows) suitable for answering the research question. The financial, academic and demographic data were merged for easy analysis and interpretation. The fourth stage is modelling. The two common types of student success model are predictive and descriptive. To generate patterns that define the basic structure and interconnectedness of students' data, a descriptive model was used first. The most common techniques used are linear regression and logistic regression analysis.
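As an illustration of the data preparation stage, the merge of academic, financial and demographic records can be sketched as an inner join on a student identifier. The field names (`student_id`, `funding`, `quintile` and so on) and the records are hypothetical, not the university's warehouse schema, and dropping students with a missing lookup is an assumed cleaning rule.

```python
# Hypothetical extracts keyed by an anonymised student identifier.
academic = [
    {"student_id": "S001", "course": "SPPA1003A", "final_mark": 68.0},
    {"student_id": "S001", "course": "SPPA1004A", "final_mark": 59.0},
    {"student_id": "S002", "course": "SPPA1003A", "final_mark": 47.0},
]
financial = {"S001": {"funding": "self-funded"}, "S002": {"funding": "external"}}
demographic = {"S001": {"age": 19, "quintile": 5}, "S002": {"age": 21, "quintile": 2}}


def merge_records(academic, financial, demographic):
    """Inner-join course marks with per-student funding and demographics.

    Each output row is one student-course observation with the attributes
    as columns; students missing from either lookup are dropped (assumed rule).
    """
    merged = []
    for row in academic:
        sid = row["student_id"]
        if sid not in financial or sid not in demographic:
            continue
        merged.append({**row, **financial[sid], **demographic[sid]})
    return merged


for record in merge_records(academic, financial, demographic):
    print(record)
```

Keeping one row per student-course observation, rather than one row per student, matches the layout described later, where rows are observations and columns are features.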
Logistic regression is a simple and efficient technique for linear binary classification. It is easy to implement and performs well. Logistic regression can be used for binary classification and for class probability estimation (Millar, 2011; Tu, 1996). It is commonly used to explain the relationship between a set of predictor variables and a binary outcome, for example, whether a student passed or failed. Logistic regression estimates the model that best fits the relationship between the dependent variable and the set of independent variables (Sinha et al., 2010; Hashim, Awadh, & Hamoud, 2020). It is applied to a response variable (y) that follows a binomial distribution (Bielza, Robles, & Larrañaga, 2011). The model outputs a probability between 0 and 1, which is thresholded to assign one of two class values, 1 or 0 (Madahana, Ekoru, Mashinini & Nyandoro, 2019).
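To make this concrete, the sketch below fits a one-feature logistic regression, P(pass | x) = 1 / (1 + exp(-(b0 + b1 x))), by batch gradient descent on the log-loss, and thresholds the probability at 0.5 to give the class 1 or 0. The marks and pass/fail labels are hypothetical, the single predictor is a simplification, and the feature is standardised inside the function (an implementation choice) so that plain gradient descent converges quickly.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit P(y = 1 | x) = sigmoid(b0 + b1 * z), z the standardised x, by gradient descent."""
    n = len(xs)
    mean = sum(xs) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in xs) / n)
    zs = [(x - mean) / sd for x in xs]
    b0 = b1 = 0.0
    for _ in range(epochs):
        g0 = g1 = 0.0
        for z, y in zip(zs, ys):
            err = sigmoid(b0 + b1 * z) - y  # derivative of the log-loss w.r.t. the logit
            g0 += err
            g1 += err * z
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n

    def predict_proba(x):
        """Estimated probability that a student with mark x passes."""
        return sigmoid(b0 + b1 * (x - mean) / sd)

    return predict_proba


# Hypothetical data: mid-year test mark (%) and whether the course was passed.
marks = [30, 40, 45, 50, 50, 55, 60, 70]
passed_course = [0, 0, 0, 1, 0, 1, 1, 1]
p_pass = fit_logistic(marks, passed_course)
for m in (35, 50, 65):
    prob = p_pass(m)
    print(m, round(prob, 2), 1 if prob >= 0.5 else 0)
```

The labels deliberately overlap at a mark of 50, so the classes are not perfectly separable and the maximum-likelihood coefficients stay finite.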

Students' data can be clustered using techniques such as K-means clustering, an unsupervised statistical technique that can be applied to large data sets. This method groups data into homogeneous classes so that hidden relationships and patterns in the data set can be observed and analysed. A cluster may be defined as a group of data objects with similar characteristics (Shovon & Haque, 2012). K-means is an unsupervised learning algorithm that groups data samples according to the attributes, also known as features, that they share. The result is a model that takes a data sample as input and returns the group, or cluster, to which that new data point belongs. A simplified version of the algorithm is presented by Shovon and Haque (2012).

The most commonly used classification algorithms are decision trees and random forest. Decision trees are ML algorithms that apply a branching approach to show the likely outcomes of a decision, subject to given parameters. The tree structure is made up of a hierarchically arranged set of rules that starts with the root attributes and ends with the leaf nodes, and each tree represents one or more outcomes derived from the original data set (Guleria, Thakur, & Sood, 2014; Hamoud, 2017). Decision trees have root nodes, internal nodes and terminal nodes (Basu, Basu, Buckmire, & Lal, 2019). Random forest, on the other hand, is a supervised ML algorithm constructed from decision trees: the random forest classifier is a combination of tree classifiers, each generated using a random vector sampled independently (Breiman, 2001). Another commonly used classification technique is the support vector machine (SVM), an ML algorithm commonly used for regression and pattern classification (Graf & Wichmann, 2002).
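The K-means procedure can be sketched with Lloyd's algorithm, which alternates between assigning each student to the nearest centroid and moving each centroid to the mean of its members; `nearest` then returns the cluster of a new data point. This is a minimal sketch, not the authors' implementation: the two features (average final mark and change in mark from the previous year) and their values are hypothetical, and the deterministic farthest-point initialisation is an assumption chosen for reproducibility (random or k-means++ initialisation are common alternatives).

```python
import math


def init_maximin(points, k):
    """Deterministic farthest-point initialisation (an assumption for reproducibility)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def nearest(point, centroids):
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda j: math.dist(point, centroids[j]))


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm: alternate the assignment and update steps until stable."""
    centroids = init_maximin(points, k)
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for p in points:  # assignment step: join the nearest centroid
            clusters[nearest(p, centroids)].append(p)
        updated = [  # update step: move each centroid to its cluster mean
            tuple(sum(dim) / len(members) for dim in zip(*members)) if members else centroids[j]
            for j, members in enumerate(clusters)
        ]
        if updated == centroids:  # converged: no centroid moved
            break
        centroids = updated
    return centroids


# Hypothetical features per student: (average final mark, change in mark vs previous year).
points = [(72, 3), (75, 5), (70, 4), (58, 0), (60, -1), (57, 1), (42, -8), (45, -6), (40, -9)]
centroids = kmeans(points, k=3)
print([tuple(round(v, 1) for v in c) for c in centroids])
print("new student -> cluster", nearest((73, 2), centroids))
```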
The basic operation of an SVM is to find the linear hyperplane that separates the classes with the largest margin, so that the error on unseen samples is reduced (Chiu & Huang, 2013; Madahana et al., 2019).

Figure 3 shows the overall system diagram used for clustering the data sets and for building classification models. The raw data refer to the extracted students' data, which then undergo data cleaning before classification is conducted. Evaluation is then conducted to interpret the data mining results; in this study, the patterns and trends observed in students' performance are analysed. In the evaluation stage, the attributes that were excluded are also discussed, together with whether they should be used in future. The final stage is deployment, where the model can be put to use.
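The hyperplane idea can be illustrated with a linear SVM trained by a Pegasos-style subgradient method on the regularised hinge loss. This is a sketch under stated assumptions, not part of this study's pipeline: the labels are +1/-1, the two-dimensional points are hypothetical, samples are visited in a fixed order rather than sampled at random so that the result is reproducible, and the bias is handled by appending a constant feature (which also regularises it, a simplification).

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Pegasos-style subgradient descent on the L2-regularised hinge loss (labels +1/-1)."""
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]  # constant feature -> bias
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        for x, label in data:  # fixed visiting order for reproducibility
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink: regularisation step
            if margin < 1.0:  # hinge loss active: step towards the sample's side
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Side of the hyperplane w . [x, 1] = 0 on which x falls."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical, linearly separable 2-D samples.
X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([round(v, 3) for v in w], [predict(w, x) for x in X])
```

The regularisation weight `lam` trades margin width against training errors; smaller values penalise margin violations more heavily.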

Research method and design

Study design

The research approach selected and applied to this study is a quantitative methodology utilising a correlational study design (Creswell & Creswell, 2017). The design was suitable because correlational studies attempt to establish the extent of a relationship between two or more variables using statistical methods. The chosen approach produces results that can be used in a predictive model, hence its appropriateness for this study.

Study population and sampling strategy

Data for 605 undergraduate students from the SLPA departments were extracted from the university data warehouse. The data set consists of 95.2% (n = 576) female and 4.8% (n = 29) male students, aged 18 to 36 years, with a mean age of 24.8 years.

Data collection

Raw data were obtained from an institution of higher learning in Johannesburg, South Africa. Four years of institutional data (2018 to 2021) for undergraduate students enrolled in SP&A degree programmes were extracted from the university data warehouse. The variables extracted are those typically cited in the literature as influencing students' performance, for example, financial, academic and demographic characteristics (Alyahyan & Düştegör, 2020). Table 1 provides the definitions of the attributes, which include the quintile ranking and the different funding types. The quintile ranking system was implemented by the South African government to help redress past financial inequalities in educational funding. All South African public schools are placed into five categories, called quintiles, which are used to allocate financial resources. Quintile 1 is the 'poorest' quintile, whilst quintile 5 is the 'least poor'. The quintile rankings are determined nationally according to the poverty of the community around the school and certain infrastructure factors (Van Dyk & White, 2019). A student may be self-funded or sponsored. Self-funded students may pay their own fees, or their guardians may pay the fees. Students may be sponsored externally, for example, by a company or organisation, or internally, for example, through a staff bursary, faculty bursary or merit award sourced via the university's financial aid and scholarship office.

Data analysis

The data extracted from the university data warehouse had already undergone a thorough extraction, transformation and loading (ETL) process. The data were loaded into a Jupyter Notebook in Python, and data cleaning was conducted by deleting unusable columns, for example, incomplete columns from the biographical questionnaire. The extracted data were combined into a single file, with columns representing features and rows representing students' records. The attributes extracted and their definitions are given in Table 1. The data consist of 5608 rows, also known as observations, of students' performance over 4 years. The final course mark is the target feature: the feature the researchers wanted to understand more deeply, because it determines whether a student has passed or failed. Cross-validation, a statistical approach that assesses and compares learning algorithms by dividing the data into segments, was used: a 10-fold cross-validation was run, with the data randomly shuffled and split into training (80%) and testing (20%) sets. The extracted data were analysed using K-means clustering and random forest classification, and the summarised data were presented in tables and graphs.
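One plausible reading of this evaluation set-up is sketched below: shuffle the 5608 observations, hold out 20% for testing, and run 10-fold cross-validation on the remaining 80%. This interpretation, the function names and the fixed seed are assumptions; the sketch only produces the index splits and does not train a model.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and hold out `test_fraction` of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def k_fold_indices(n, k=10, seed=42):
    """Yield (train_idx, val_idx) pairs; every index is validated exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        yield idx[:start] + idx[start + size:], idx[start:start + size]
        start += size


observations = list(range(5608))  # stand-ins for the 5608 rows
train, test = train_test_split(observations)
folds = list(k_fold_indices(len(train), k=10))
print(len(train), len(test), [len(val) for _, val in folds])
```

Keeping the test set out of every fold means the reported test score is computed on data that no cross-validation round has seen.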

Ethical considerations

This study involved the use of anonymised data to evaluate student performance. Depersonalised attributes or features (for instance, course marks) were extracted from the database. The study followed all ethical standards of studies without direct contact with human or animal subjects. Ethical clearance to conduct this study was obtained from the University of the Witwatersrand, Human Research Ethics Committee (Non-Medical) (reference number: HRECNMW22/01/11).

Results and discussion

To understand the relationships between variables and to gain general insights into the patterns and trends in the data set, a correlation matrix showing the relationships between features was drawn, as shown in Figure 4. Each cell in Figure 4 shows the correlation between two specific variables. Features with a correlation coefficient above 0.50 are considered strongly positively correlated, features with negative coefficients are considered weakly negatively correlated, and coefficients below 0.006 in magnitude are considered to indicate no correlation at all. The correlation matrix indicated a correlation between students' funding type and their final mark. There is also a positive correlation between self-funded students and the quintile. Age was also observed to have a positive correlation with the final mark. Therefore, from the correlation matrix, students' funding type, the courses registered for by a student and age are viewed as important correlations. However, features that introduce biases into the data set are not included when building a student success or intervention model.
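A correlation matrix like the one in Figure 4 is typically built from pairwise Pearson coefficients. The sketch assumes Pearson correlation (the coefficient type is not stated here), encodes funding as a hypothetical 0/1 `self_funded` column, and uses made-up values for six students.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant column has no defined correlation")
    return cov / (sx * sy)


def correlation_matrix(columns):
    """Map every (feature, feature) pair to its Pearson coefficient."""
    names = list(columns)
    return {(a, b): pearson(columns[a], columns[b]) for a in names for b in names}


# Hypothetical, already-encoded features for six students.
columns = {
    "self_funded": [1, 1, 0, 0, 1, 0],
    "quintile": [2, 5, 3, 4, 1, 5],
    "age": [19, 22, 20, 25, 18, 23],
    "final_mark": [48, 71, 55, 66, 44, 69],
}
matrix = correlation_matrix(columns)
for a in columns:
    print(f"{a:>12}", [round(matrix[(a, b)], 2) for b in columns])
```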

Bar charts of overall students' performance and of performance per course were also drawn, as shown in Figures 5a to 5d. Variations in average marks of up to 5% are expected each year, because each cohort of students is different; average performance can vary even when teaching practices remain the same. Thus, although the SP&A courses are taught by qualified and experienced staff each year, variations in course marks are still expected. The p-value and the confidence interval may be used to determine whether variations in average marks are statistically significant. A p-value below 0.05 was taken as the threshold for statistical significance. Statistical significance does not imply practical significance; hence, further tests and interpretation are conducted for differences deemed statistically significant. From 2018 to 2019, average student performance was very similar for Speech and Hearing Science (SPPA1003A) and Speech Pathology and Audiology (SPPA1004A). There was then a slight increase in average marks under ERTL and hybrid learning (2020 and 2021) for SPPA1003A and SPPA1004A, as seen in Figure 5a.
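The text does not name the significance test used. As one assumption-light option, a two-sided permutation test for a difference in mean marks between two cohorts can be sketched as follows; the cohort marks are hypothetical, and the result is compared with the 0.05 threshold.

```python
import random


def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in mean marks."""
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # relabel marks at random under the null hypothesis
        pa, pb = pooled[: len(a)], pooled[len(a):]
        if abs(sum(pa) / len(pa) - sum(pb) / len(pb)) >= observed:
            extreme += 1
    # The +1 terms count the observed labelling, so the p-value is never 0.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical course marks for two cohorts.
cohort_2019 = [55, 61, 58, 63, 49, 66, 57, 60]
cohort_2020 = [62, 65, 59, 70, 58, 68, 64, 61]
p = permutation_test(cohort_2019, cohort_2020)
print(f"p = {p:.4f}", "significant" if p < 0.05 else "not significant")
```

Because cohorts differ from year to year, a test like this only indicates whether a difference is larger than random relabelling would produce; it does not attribute the difference to ERTL.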

From Figure 5b, Audiology II (SPPA2001A) students' average performance in 2020 and 2021 was lower than in 2018 and 2019. There was also a slight drop in average performance for clinical practicals in Audiology (SPPA2005A) in 2020 and 2021. SPPA2004 was introduced in 2019, and SPPA2002 was discontinued after 2018; hence, data are missing for those course codes.

In third year, students performed better in clinical practicals in Audiology (SPPA3004A) in 2021 than in 2018 to 2020. In Speech-Language Pathology III (SPPA3005A), the highest performance was observed in 2020, and performance dropped back to its usual average in 2021 (Figure 5c). SPPA3003 was discontinued after 2019; hence, there are no data for the course after 2019.

Finally, in fourth year, observations show an improvement in average marks for SPPA4005 in 2020, as seen in Figure 5d. The SPPA4005 course was discontinued after 2020, hence the missing data in the year that follows. The SPPA4007 course shows similar performance in 2018 and 2021, whereas in 2019 and 2020 average performance is similar. The variations could be because of factors other than the shift to ERTL.

The trends in the average marks plotted in the bar graphs (Figures 5a to 5d) indicate an increase in average marks in 2020 and 2021 for courses with practical components, except for one second-year course (SPPA2001), where average marks for a course with a practical component decreased. Whilst this information is instrumental in providing a general view of class performance over the years, it does not provide enough detail to account for the increases or drops in marks. The data were therefore analysed further, by clustering the students using K-means, to obtain insights into students' performance during face-to-face, ERTL and hybrid learning. Clustering was conducted to determine the properties of the data set, segmenting the students into groups. The elbow plot in Figure 6 was used to determine the optimal number of clusters. Its vertical axis shows the within-cluster sum of squares (WCSS), defined as the sum of squared distances between each point and the centroid of its cluster. WCSS is largest when K = 1 and decreases as the number of clusters increases, so it cannot simply be minimised; instead, the optimal K is taken at the 'elbow' of the curve, beyond which adding clusters yields only marginal reductions in WCSS. From the elbow plot, the optimal number of clusters is three.
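The elbow computation can be illustrated on one-dimensional marks: run K-means for K = 1 to 5 and record the WCSS for each K. The marks are hypothetical (three deliberately separated groups), and the deterministic rank-based initialisation is an assumption chosen for reproducibility.

```python
def kmeans_1d(values, k, max_iter=100):
    """Lloyd's algorithm on scalar marks; returns the final centroids."""
    vals = sorted(values)
    n = len(vals)
    # Assumed initialisation: k centroids at evenly spaced ranks of the sorted marks.
    centroids = [vals[(2 * i + 1) * n // (2 * k)] for i in range(k)]
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for v in vals:
            clusters[min(range(k), key=lambda j: abs(v - centroids[j]))].append(v)
        updated = [sum(c) / len(c) if c else centroids[j] for j, c in enumerate(clusters)]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def wcss(values, centroids):
    """Within-cluster sum of squares: squared distance of each mark to its nearest centroid."""
    return sum(min((v - c) ** 2 for c in centroids) for v in values)


marks = [38, 41, 43, 45, 58, 60, 61, 63, 74, 76, 78, 80]
curve = {k: wcss(marks, kmeans_1d(marks, k)) for k in range(1, 6)}
for k, value in curve.items():
    print(f"K={k}: WCSS={value:.2f}")
```

For these values the WCSS falls steeply up to K = 3 and only slightly afterwards, so the elbow, and hence the chosen number of clusters, is at K = 3.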

Each student in the entire data set was assigned a cluster label. Figure 7 shows the clusters obtained, using third-year students as an example.

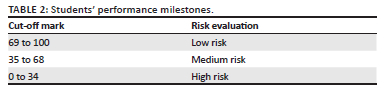

Figure 7 shows three distinct priority classes in the students' marks from 2018 to 2021. These priority classes are further broken down, as shown in Table 2.

The change in students' final course marks was the determining factor in classifying students into priority levels. The classes in Table 2 can be used to predict whether a given student will be able to complete the academic year, depending on their classification. Low-risk students are generally performing well in their studies and need minimal intervention; medium-risk students need high-level intervention, which may be administered in a group setting. High-risk students, however, are perceived to face various challenges with their courses, and urgent one-on-one intervention may be required. At this point, the clusters still do not provide much information about the students' attributes. To customise interventions, it is important to understand the attributes that characterise each cluster; this information is obtained using the random forest classifier.
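The mapping from clusters to priority levels can be sketched as a ranking of cluster centroids. The sketch assumes that each cluster is summarised by a single centroid value of the determining feature (here a hypothetical mean final mark), that there are exactly three clusters, and that the intervention labels paraphrase the descriptions above.

```python
# Ordered from the weakest to the strongest cluster.
RISK_LEVELS = ("high", "medium", "low")
INTERVENTION = {
    "low": "minimal intervention",
    "medium": "intervention in a group setting",
    "high": "urgent one-on-one intervention",
}


def risk_by_cluster(centroid_marks):
    """Map cluster id -> risk level by ranking clusters on their centroid mark."""
    if len(centroid_marks) != len(RISK_LEVELS):
        raise ValueError("expects exactly three clusters")
    ranked = sorted(centroid_marks, key=centroid_marks.get)  # lowest mark first
    return dict(zip(ranked, RISK_LEVELS))


levels = risk_by_cluster({0: 72.3, 1: 42.3, 2: 58.3})
for cluster, level in sorted(levels.items()):
    print(cluster, level, INTERVENTION[level])
```

Ranking centroids rather than hard-coding cluster ids matters because K-means assigns arbitrary labels, which can change between runs.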

Figure 8 shows an example of attribute extraction conducted using random forest. The main attributes found to affect fourth-year students' performance in 2021 were, in order of priority, age, quintile, SPPA4006 and funding, and these four attributes are considered significant. Such attributes are important because they indicate a student's 'academic health'. For example, if a student is self-funded and from a lower-quintile high school (quintile 1, 2 or 3), the department of SP&A will need to monitor this student's performance closely, because such a student is more likely to perform poorly. In fourth year, the observed performance in SPPA4006A is one indicator that can be used for this monitoring: students' performance in SPPA4006 strongly influences their performance in the other courses and in the fourth year as a whole.
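The attribute ranking in Figure 8 comes from a random forest. The paper does not give the forest's settings. What follows is therefore a from-scratch sketch of mean decrease in impurity (MDI) importance, the impurity-based measure that scikit-learn exposes as `feature_importances_` on `RandomForestClassifier`. Each tree is grown on a bootstrap sample and considers a random subset of attributes at each split, and every split credits its attribute with the size-weighted drop in Gini impurity. The data are synthetic, and the at-risk rule (self-funded and quintile 1 to 3) is an assumption chosen to echo the finding reported below, not the study's data. The tree depth, tree count and function names are also illustrative.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, features):
    """Best (gain, feature, threshold) over the candidate features, or None."""
    parent, n, best = gini(y), len(y), None
    for f in features:
        for t in sorted({row[f] for row in X})[:-1]:
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            gain = parent - (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or gain > best[0]:
                best = (gain, f, t)
    return best


def grow(X, y, n_feat, depth, max_depth, rng, importance):
    """Grow one tree; add each split's size-weighted impurity decrease to importance."""
    if depth == max_depth or len(set(y)) == 1:
        return Counter(y).most_common(1)[0][0]  # leaf: majority class
    split = best_split(X, y, rng.sample(range(len(X[0])), n_feat))
    if split is None or split[0] <= 0:
        return Counter(y).most_common(1)[0][0]
    gain, f, t = split
    importance[f] += gain * len(y)
    go_left = [row[f] <= t for row in X]
    kids = []
    for side in (True, False):
        Xs = [row for row, g in zip(X, go_left) if g == side]
        ys = [lab for lab, g in zip(y, go_left) if g == side]
        kids.append(grow(Xs, ys, n_feat, depth + 1, max_depth, rng, importance))
    return (f, t, kids[0], kids[1])


def forest_importances(X, y, n_trees=50, max_depth=4, seed=0):
    """Normalised MDI importances; the trees themselves are discarded."""
    rng = random.Random(seed)
    n_feat = max(1, int(len(X[0]) ** 0.5))  # sqrt(#attributes) candidates per split
    importance = [0.0] * len(X[0])
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        grow([X[i] for i in idx], [y[i] for i in idx], n_feat, 0, max_depth, rng, importance)
    total = sum(importance) or 1.0
    return [v / total for v in importance]


FEATURES = ["age", "quintile", "SPPA4006_mark", "self_funded"]
data_rng = random.Random(1)
X, y = [], []
for _ in range(60):
    row = [data_rng.randint(18, 26), data_rng.randint(1, 5),
           data_rng.randint(40, 90), data_rng.randint(0, 1)]
    X.append(row)
    # Hypothetical rule: at risk only if self-funded AND from a quintile 1-3 school.
    y.append(int(row[3] == 1 and row[1] <= 3))

importances = forest_importances(X, y)
for name, score in sorted(zip(FEATURES, importances), key=lambda t: -t[1]):
    print(f"{name:15s} {score:.3f}")
```

Impurity-based importances can favour attributes with many distinct values, such as continuous marks. Permutation importance on held-out data is a common cross-check.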

Classifying the data with random forest makes it possible to recognise hidden patterns and trends in the data set, as shown in Table 3. The important attributes vary with the year of study.

The clusters were created using the attributes extracted and listed in Table 1. A random forest classifier was then applied to uncover the hidden information in each cluster for each year of study from 2018 to 2021. The observed patterns can be summarised as follows:

-

Self-funded students from lower-quintile high schools (quintiles 1, 2 and 3) generally performed poorly from 2018 to 2021, whereas self-funded students from higher-quintile schools (5, 6 and 7) performed very well. This study did not distinguish between self-funded students who paid their own tuition fees and those whose fees were paid by their guardians.

Figure 9 illustrates this finding further by plotting the relationship between quintile, funding and students' performance.

-

From 2018 to 2021, age and high school quintile remained the priority factors for all undergraduate students, from first year to fourth year.

-

Funding had a very low priority in determining students' performance in 2018 and 2019; however, in 2020 and 2021, its priority increased and it influenced students' performance.

-

In 2018 and 2019, SPPA1003 had a lower priority; however, in 2020 and 2021, its priority increased and it became one of the key first-year courses determining a student's performance in first year.

-

The SPPA2001 course influenced students' performance in 2018, 2020 and 2021.

-

In fourth year, SPPA4006A was a priority factor in 2018, 2019 and 2021.
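The quintile and funding pattern in the first point above, which Figure 9 plots, amounts to a cross-tabulation of average marks by funding type and school-quintile band. The sketch below shows that grouping with hypothetical records; it is not the authors' analysis. The bands follow the text: quintiles 1 to 3 are "lower" and 5 and above are "higher". Placing quintile 4 in a separate "middle" band is an assumption.

```python
from collections import defaultdict
from statistics import mean


def quintile_band(q):
    """Band school quintiles as in the text: 1-3 lower, 5 and above higher."""
    if q <= 3:
        return "lower"
    return "higher" if q >= 5 else "middle"  # quintile 4: assumed "middle"


def mean_mark_by_group(records):
    """Average final mark for each (funding type, quintile band) pair."""
    groups = defaultdict(list)
    for quintile, funding, mark in records:
        groups[(funding, quintile_band(quintile))].append(mark)
    return {key: mean(marks) for key, marks in sorted(groups.items())}


# Hypothetical (quintile, funding, final mark) records; not the study's data.
records = [
    (2, "self", 48), (1, "self", 52), (3, "self", 50),
    (5, "self", 74), (6, "self", 78), (4, "self", 63),
    (2, "funded", 61), (5, "funded", 66),
]
for (funding, band), avg in mean_mark_by_group(records).items():
    print(f"{funding:7s} {band:7s} {avg:.1f}")
```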

These results indicate that one impact of COVID-19 on SP&A students was that a student's financial health became one of the priority attributes determining their success. Self-funded students from lower quintiles were affected more than self-funded students from higher quintiles. This is unsurprising, because the effects of COVID-19 in South Africa included budget cuts and loss of income across different sectors, and institutions of higher learning were not an exception. The data do not explain why SPPA1003 (Speech and Hearing Science), SPPA2001 (Audiology) and SPPA4006 (Research Report) became priority courses during contact and blended learning. The content of these courses therefore requires careful review to ensure that the teaching and learning approaches adopted are responsive, relevant and responsible. The general insights and the important attributes from the clusters may be used to develop a performance tracking system for SP&A undergraduate students. A comparative analysis using various classification techniques was also carried out to establish which model suits the students' data set. The results are shown in Table 4.
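The comparison of classification techniques reported in Table 4 depends on scoring every candidate model on the same held-out data. The classifiers and metrics used for Table 4 are not reproduced here. This sketch only shows the evaluation harness, k-fold cross-validated accuracy, with two deliberately simple stand-ins: a majority-class baseline and a 1-nearest-neighbour classifier. The single-feature data set and the at-risk cut-off of 50% are hypothetical. Folds are formed by taking every k-th record, which assumes the records are in no meaningful order.

```python
from collections import Counter


def majority_baseline(X_train, y_train):
    """Always predict the most common training label."""
    label = Counter(y_train).most_common(1)[0][0]
    return lambda x: label


def one_nearest_neighbour(X_train, y_train):
    """Predict the label of the closest training point (squared Euclidean distance)."""
    def predict(x):
        nearest = min(range(len(X_train)),
                      key=lambda j: sum((a - b) ** 2 for a, b in zip(x, X_train[j])))
        return y_train[nearest]
    return predict


def cross_val_accuracy(make_model, X, y, k=5):
    """k-fold cross-validated accuracy; fold j holds the indices i with i % k == j."""
    correct = 0
    for j in range(k):
        test = [i for i in range(len(X)) if i % k == j]
        train = [i for i in range(len(X)) if i % k != j]
        model = make_model([X[i] for i in train], [y[i] for i in train])
        correct += sum(model(X[i]) == y[i] for i in test)
    return correct / len(X)


# Hypothetical single-feature data: a course mark, and 1 = at risk (mark < 50).
marks = [31, 35, 38, 42, 44, 46, 56, 58, 61, 65, 68, 72, 75, 79, 83, 88]
X = [[m] for m in marks]
y = [int(m < 50) for m in marks]

results = {name: cross_val_accuracy(fn, X, y)
           for name, fn in [("majority baseline", majority_baseline),
                            ("1-nearest neighbour", one_nearest_neighbour)]}
for name, acc in results.items():
    print(f"{name}: {acc:.3f}")
```

Reporting a majority-class baseline alongside the real candidates makes it clear how much of any model's accuracy comes from class imbalance alone. With real, imbalanced student data, stratified and shuffled folds would be the more careful choice.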

The significance of the analytics in this study is that various stakeholders can use them to predict student performance and to implement intervention strategies in a timely manner. The analytics indicate that each approach to teaching and learning has its own merits and demerits, and that different groups of students are affected differently by the choice of one model of learning over another. A hybrid model of learning may therefore be more suitable, because it is more flexible.

Although this study was prompted by the global negative effects of COVID-19 on teaching and learning (Maital & Barzani, 2020), the current findings have important implications beyond COVID-19 if the hybrid model of teaching and learning remains part of standard practice. Within the SLPA programmes, where face-to-face teaching was traditionally the only model adopted, these findings point to areas requiring supportive measures for both access and success. Although the findings were obtained using data from the SLPA programmes, the results may also apply to other South African higher learning institutions shaped by colonialism, as described by Mgqwashu (2017) and Mpungose (2020).

If financial status in the form of self-funding is a critical factor in students' performance during remote teaching and learning, as found in this study, it is important for institutions of higher learning to ensure that (1) students have adequate funding; (2) institutions intensify their investment in digital technologies and digital literacy for both staff and students (García-Morales et al., 2021); (3) the Department of Higher Education and Training engages in and strengthens intersectoral collaboration with other relevant departments, such as the Department of Communications, to facilitate students' access to Information and Communication Technology (ICT) resources and support for learning (Francis et al., 2020); (4) zero rating of all course material is negotiated as a national standard, removing the currently reported disconnect between zero-rated sites and relevant ERTL course material (Mhlanga & Moloi, 2020; Tenet, 2021); (5) power supply challenges are always considered, so that students are not disadvantaged by load-shedding, which also disrupts connectivity in ways beyond their control during remote learning (Azionya & Nhedzi, 2021; Laher et al., 2021; Oyedotun, 2020); (6) training models used in remote teaching and learning as part of a hybrid methodology, such as telepractice, teletraining and simulations (Khoza-Shangase et al., 2021; Nagdee et al., 2022), are carefully monitored for efficacy; and (7) psychosocial aspects, such as a gradual rather than abrupt change to remote teaching and learning (Thaba-Nkadimene, 2020) and the provision of effective peer support and engagement platforms (Chandra & Palvia, 2021), are considered.

Limitations of the study

-

It is assumed that average performance can vary even if teaching practices remain the same. Although the SPPA courses are taught by qualified and experienced staff each year, variations in course marks are still expected because the cohort of students differs each year. Definitive conclusions therefore cannot be drawn from the current data, and future confirmatory research in this area is needed.

-

Some attributes (for instance, gender, disability, nationality and marital status) were analysed in this study as attributes that affect student performance; however, these attributes will not be included in the future development of the ML model, to avoid bias in the model.

-

The performance of self-funded students who have to work to pay their fees was not analysed separately in this study; this is left for future studies.

-

The impact of race on student performance with respect to COVID-19 has deliberately been left out of this publication, even though it is well documented that attributes such as financial health remain racially linked in South Africa. This will be the focus in a future study.

Recommendations and conclusion

The comprehensive analysis of the data sets, a novel approach in this field, indicates that both traditional and online methods of learning have their merits and demerits. Combining the merits of both would result in a system that is easy for students, lecturers and course instructors to navigate. The transition towards blended or hybrid learning will require stakeholders to identify and merge the positive aspects of online and face-to-face learning. The COVID-19 pandemic has pushed South Africa's institutions of higher learning to think about how to develop robust technological infrastructure that can be used alongside traditional methods of teaching. The large data sets that institutions of higher learning have collected over the years can be used to analyse trends and patterns in students' performance, and this study has demonstrated how this can be done through ML, using SLPA as a case study. With this in mind, some of the questions that the SLPA programmes, and institutions of higher learning generally, will need to consider are:

-

How can learning and teaching be improved to support student success?

-

Which approach would be suitable to ensure students' academic success?

-

Which social, psychological and economic factors affect students, and what are the ways in which the students can work with the university to resolve the challenges?

-

Which learning approaches are better suited for students, and do these differ by year of study?

The ML model can be used to implement an early warning and recommendation system that enables administrators to provide early intervention to students who are not performing well. However, features that would introduce bias into the data sets (for example, race, age, nationality and marital status) would not be included in the ML models. In future, the attributes observed to influence students' performance will be used to build an early intervention model that helps course instructors and administrators identify students who are not coping with their workload and provide interventions.
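The early warning idea in the paragraph above can be sketched as a filter that removes bias-prone attributes before any scoring takes place. The excluded set combines the attributes named here with those listed under the limitations; which attributes to exclude is ultimately a policy decision. The scoring rule is a hypothetical stand-in for a trained model, not the authors' model, and its weights, threshold and field names are all assumptions.

```python
# Attributes to keep out of the model, taken from the text and the limitations;
# treat this set as an assumption to be agreed with stakeholders.
EXCLUDED = {"race", "age", "nationality", "marital_status", "gender", "disability"}


def strip_excluded(record):
    """Drop bias-prone attributes before a record reaches the model."""
    return {key: value for key, value in record.items() if key not in EXCLUDED}


def toy_risk_score(features):
    """Hypothetical scoring rule standing in for a trained model."""
    score = 0.0
    if features["course_mark"] < 50:
        score += 0.6
    if features["self_funded"] and features["quintile"] <= 3:
        score += 0.3
    return score


def early_warning(records, score_fn, threshold=0.5):
    """Return the ids of students whose risk score meets the threshold."""
    return [r["student_id"] for r in records if score_fn(strip_excluded(r)) >= threshold]


# Hypothetical student records.
students = [
    {"student_id": "S1", "course_mark": 45, "self_funded": True, "quintile": 2, "age": 19},
    {"student_id": "S2", "course_mark": 72, "self_funded": False, "quintile": 5, "age": 21},
    {"student_id": "S3", "course_mark": 55, "self_funded": True, "quintile": 1, "age": 24},
    {"student_id": "S4", "course_mark": 48, "self_funded": False, "quintile": 4, "age": 20},
]
print(early_warning(students, toy_risk_score))  # ['S1', 'S4']
```

Filtering inside `early_warning`, rather than trusting each model to ignore certain fields, means the excluded attributes never reach the scoring function at all.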

Acknowledgements

Competing interests

The authors declare that they have no financial or personal relationships that may have inappropriately influenced them in writing this article.

Authors' contributions

M.C.M., K.K-S., N.M., O.N. and J.E. contributed equally to this work.

Funding information

The authors thank the National Institute for the Humanities and Social Sciences for providing financial assistance for the publication of this manuscript.

Data availability

Data sharing is not applicable to this article as no new data were created or analysed in this study.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of any affiliated agency of the authors.

References

Alyahyan, E.A., & Düştegör, D. (2020). Predicting academic success in higher education: Literature review and best practices. International Journal of Educational Technology in Higher Education, 17, 1-21. https://doi.org/10.1186/s41239-020-0177-7

Azionya, C.M., & Nhedzi, A. (2021). The digital divide and higher education challenge with emergency online learning: Analysis of tweets in the wake of the COVID-19 lockdown. Turkish Online Journal of Distance Education, 22(4), 164-182. https://doi.org/10.17718/tojde.1002822

Basu, K., Basu, T., Buckmire, R., & Lal, N. (2019). Predictive models of student college commitment decisions using machine learning. Data, 4(2), 65. https://doi.org/10.3390/data4020065

Besser, A., Flett, G.L., & Zeigler-Hill, V. (2022). Adaptability to a sudden transition to online learning during the COVID-19 pandemic: Understanding the challenges for students. Scholarship of Teaching and Learning in Psychology, 8(2), 85-105. https://doi.org/10.1037/stl0000198

Bielza, C., Robles, V., & Larrañaga, P. (2011). Regularized logistic regression without a penalty term: An application to cancer classification with microarray data. Expert Systems with Applications, 38(5), 5110-5118. https://doi.org/10.1016/j.eswa.2010.09.140

Blankenberger, B., & Williams, A.M. (2020). COVID and the impact on higher education: The essential role of integrity and accountability. Administrative Theory & Praxis, 42(3), 404-423. https://doi.org/10.1080/10841806.2020.1771907

Bond, M., Bedenlier, S., Marín, V.I., & Händel, M. (2021). Emergency remote teaching in higher education: Mapping the first global online semester. International Journal of Educational Technology in Higher Education, 18(1), 50. https://doi.org/10.1186/s41239-021-00282-x

Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5-32. https://doi.org/10.1023/A:1010933404324

Cardona, T., Cudney, E.A., Hoerl, R., & Snyder, J. (2020). Data mining and machine learning retention models in higher education. Journal of College Student Retention: Research, Theory & Practice, 0(0), 1-25. https://doi.org/10.1177/1521025120964920

Chandra, S., & Palvia, S. (2021). Online education next wave: Peer to peer learning. Journal of Information Technology Case and Application Research, 23(3), 157-172. https://doi.org/10.1080/15228053.2021.1980848

Chin, K.E., Kwon, D., Gan, Q., Ramalingam, P.X., Wistuba, I.I., Prieto, V.G., & Aung, P.P. (2021). Transition from a standard to a hybrid on-site and remote anatomic pathology training model during the coronavirus disease 2019 (COVID-19) pandemic. Archives of Pathology & Laboratory Medicine, 145(1), 22-31. https://doi.org/10.5858/arpa.2020-0467-SA

Chiu, C.Y., & Huang, P.T. (2013). Application of the honeybee mating optimization algorithm to patent document classification in combination with the support vector machine. International Journal of Automation and Smart Technology, 3(3), 179-191. https://doi.org/10.5875/ausmt.v3i3.183

Clinton, J. (2013). How the CRISP-DM method can help your data mining project succeed. Retrieved from https://www.sv-europe.com/blog/crisp-dm-method-can-help-data-mining-project-succeed/

Creswell, J.W., & Creswell, J.D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches (4th ed.). Sage, Newbury Park.

Delen, D. (2010). A comparative analysis of machine learning techniques for student retention management. Decision Support Systems, 49(4), 498-506. https://doi.org/10.1016/j.dss.2010.06.003

Dhawan, S. (2020). Online learning: A panacea in the time of COVID-19 crisis. Journal of Educational Technology Systems, 49(1), 5-22. https://doi.org/10.1177/0047239520934018

Engler, S. (2020). As the COVID-19 response accelerates the speed and scale of digital transformation, a lack of digital skills could jeopardize companies with misaligned talent plans. Retrieved from https://www.gartner.com/smarterwithgartner/lack-of-skillsthreatens-digital-transformation/

Francis, D., Valodia, I., & Webster, E. (2020). Politics, policy, and inequality in South Africa under COVID-19. Agrarian South: Journal of Political Economy, 9(3), 342-355. https://doi.org/10.1177/2277976020970036

García-Morales, V.J., Garrido-Moreno, A., & Martín-Rojas, R. (2021). The transformation of higher education after the COVID disruption: Emerging challenges in an online learning scenario. Frontiers in Psychology, 12, 196. https://doi.org/10.3389/fpsyg.2021.616059

Gedala, N., & Connie, I. (2021). A critique of online learning in higher education during the coronavirus lockdown level 5 in South Africa. African Journal of Development Studies, 11, 127-147. https://doi.org/10.31920/2634-3649/2021/v11n1a6

Graf, A.B., & Wichmann, F.A. (2002). Gender classification of human faces. In H.H. Bülthoff, C. Wallraven, S.W. Lee & T.A. Poggio (Eds.), Biologically motivated computer vision. BMCV 2002. Lecture Notes in Computer Science (Vol. 2525). Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-36181-2_49

Guleria, P.G., Thakur, N., & Sood, M. (2014). Predicting student performance using decision tree classifiers and information gain. In Y. Singh, V. Sehgal, N. Nitin & S.P. Ghrera (Eds.), 2014 International Conference on Parallel, Distributed and Grid Computing (pp. 126-129). https://doi.org/10.1109/PDGC.2014.7030728

Gumede, L., & Badriparsad, N. (2022). Online teaching and learning through the students' eyes - Uncertainty through the COVID-19 lockdown: A qualitative case study in Gauteng province, South Africa. Radiography (London, England: 1995), 28(1), 193-198. https://doi.org/10.1016/j.radi.2021.10.018

Hamoud, A. (2017). Applying association rules and decision tree algorithms with tumor diagnosis data. International Research Journal of Engineering and Technology, 3(8), 27-31. https://doi.org/10.35741/issn.0258-2724.54.3.25

Hashim, A.S., Awadh, W.A., & Hamoud, A.K. (2020, November). Student performance prediction model based on supervised machine learning algorithms. In IOP Conference Series: Materials Science and Engineering, 928(3), Thi-Qar, Iraq, 032019. IOP Publishing.

Hrastinski, S. (2019). What do we mean by blended learning? TechTrends, 63(5), 564-569. https://doi.org/10.1007/s11528-019-00375-5

ITWeb. (2020). The government makes provision for virtual learning during lockdown. Retrieved from https://www.itweb.co.za/content/Gb3BwMW8LQKq2k6V

Khoza-Shangase, K., Moroe, N., & Neille, J. (2021). Speech-language pathology and audiology in South Africa: Clinical training and service in the era of COVID-19. International Journal of Telerehabilitation, 13(1), e6376. https://doi.org/10.5195/ijt.2021.6376

Laher, S., Bain, K., Bemath, N., De Andrade, V., & Hassem, T. (2021). Undergraduate psychology student experiences during COVID-19: Challenges encountered and lessons learnt. South African Journal of Psychology, 51(2), 0081246321995095. https://doi.org/10.1177/0081246321995095

Madahana, M.C., Ekoru, J.E., Mashinini, T.L., & Nyandoro, O.T. (2019). Noise level policy advising system for mine workers. IFAC-PapersOnLine, 52(14), 249-254. https://doi.org/10.1016/j.ifacol.2019.09.195

Maital, S., & Barzani, E. (2020). The global economic impact of COVID-19: A summary of research. Samuel Neaman Institute for National Policy Research, 2020, 1-12.

McKibbin, W., & Fernando, R. (2020). The economic impact of COVID-19. Economics in the Time of COVID-19, 45(10), 1162.

McQuirter, R. (2020). Lessons on change: Shifting to online learning during COVID-19. Brock Education: A Journal of Educational Research and Practice, 29(2), 47-51. https://doi.org/10.26522/brocked.v29i2.840

Mgqwashu, E. (2017). Universities can't decolonise the curriculum without defining it first. Retrieved from www.conversation.com/universities-cant-decolonise-the-curriculumwithout-defining-it-first-63948

Mhlanga, D., & Moloi, T. (2020). COVID-19 and the digital transformation of education: What are we learning on 4IR in South Africa? Education Sciences, 10(7), 180. https://doi.org/10.3390/educsci10070180

Millar, R.B. (2011). Maximum likelihood estimation and inference: With examples in R, SAS and ADMB. John Wiley and Sons, New York. http://dx.doi.org/10.1002/9780470094846

Motsepe Foundation. (2021). What South Africa's universities have learnt about the future from Covid 19. Mail & Guardian. Retrieved from https://mg.co.za/education/2021-03-01-what-south-africas-universities-have-learnt-about-the-future-from-covid-19/

Mpungose, C.B. (2020). Emergent transition from face-to-face to online learning in a South African University in the context of the Coronavirus pandemic. Humanities and Social Sciences Communications, 7(1), 1-9. https://doi.org/10.1057/s41599-020-00603-x

Nagdee, N., Sebothoma, B., Madahana, M., Khoza-Shangase, K., & Moroe, N. (2022). Simulations as a mode of clinical training in healthcare professions: A scoping review to guide planning in speech-language pathology and audiology during the COVID-19 pandemic and beyond. South African Journal of Communication Disorders, 69(2), a905. https://doi.org/10.4102/sajcd.v69i2.905

Neuwirth, L.S., Jović, S., & Mukherji, B.R. (2021). Reimagining higher education during and post-COVID-19: Challenges and opportunities. Journal of Adult and Continuing Education, 27(2), 141-156. https://doi.org/10.1177/1477971420947738

Oyedotun, T.D. (2020). Sudden change of pedagogy in education driven by COVID-19: Perspectives and evaluation from a developing country. Research in Globalization, 2, 100029. https://doi.org/10.1016/j.resglo.2020.100029

Padayachee, K., & Matimolane, M. (2021, July 20). Assessment practices during Covid-19: The confluence of assessment purposes, quality and social justice in higher education. EdArXiv. Preprint. https://doi.org/10.35542/osf.io/9fqyb

Pawar, M. (2020). The global impact of and responses to the COVID-19 pandemic. The International Journal of Community and Social Development, 2(2), 111-120. https://doi.org/10.1177/2516602620938542

Rajab, M.H., Gazal, A.M., & Alkattan, K. (2020). Challenges to online medical education during the COVID-19 pandemic. Cureus, 12(7). https://doi.org/10.7759/cureus.8966

Schmutz, A., Jenkins, L.S., Coetzee, F., Conradie, H., Irlam, J., Joubert, E.M., Matthews, D., & Van Schalkwyk, S.C. (2021). Re-imagining health professions education in the coronavirus disease 2019 era: Perspectives from South Africa. African Journal of Primary Health Care & Family Medicine, 13(1), e1-e5. https://doi.org/10.4102/phcfm.v13i1.2948

Shovon, M.H., & Haque, M. (2012). An approach of improving students academic performance by using k means clustering algorithm and decision tree. International Journal of Advanced Computer Science and Applications (IJACSA), 3(8), 146-149.

Sinha, S.K., Laird, N.M., & Fitzmaurice, G.M. (2010). Multivariate logistic regression with incomplete covariate and auxiliary information. Journal of Multivariate Analysis, 101(10), 2389-2397. https://doi.org/10.1016/j.jmva.2010.06.010

Soni, V.D. (2020). Global impact of e-learning during COVID 19. Available at SSRN 3630073.

South African Government. (2021). Regulations and guidelines - Coronavirus Covid-19. Retrieved from https://www.gov.za/covid-19/resources/regulations-and-guidelines-coronavirus-covid-19

Tabatabai, S. (2020). Simulations and virtual learning supporting clinical education during the COVID 19 pandemic. Advances in Medical Education and Practice, 11, 513. https://doi.org/10.2147/AMEP.S257750

Tenet. (2021). There is no turning back. The COVID-19 pandemic and global lockdown has accelerated the trend towards blended and online-only education. Retrieved from https://www.tenet.ac.za/news/online-learning-for-thewin-lets-keep-policy-in-line-with-technology

Thaba-Nkadimene, K.L. (2020). COVID-19 and e-learning in higher education. Journal of African Education, 1(2), 5-11. https://doi.org/10.31920/2633-2930/2020/1n2a0

Tu, J.V. (1996). Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. Journal of Clinical Epidemiology, 49(11), 1225-1231. https://doi.org/10.1016/s0895-4356(96)00002-9

Tularam, G.A. (2018). Traditional vs non-traditional teaching and learning strategies-the case of e-learning! International Journal for Mathematics Teaching and Learning, 19(1), 129-158.

Van Dyk, H., & White, C.J. (2019). Theory and practice of the quintile ranking of schools in South Africa: A financial management perspective. South African Journal of Education, 39(Suppl. 1), s1-s9. https://dx.doi.org/10.15700/saje.v39ns1a1820

Van Schalkwyk, F. (2021). Reflections on the public university sector and the COVID-19 pandemic in South Africa. Studies in Higher Education, 46(1), 44-58. https://doi.org/10.1080/03075079.2020.1859682

World Health Organization (WHO). (2021). Information for the public. Retrieved from https://www.who.int/westernpacific/emergencies/covid-19/information

Zeineddine, H., Braendle, U.C., & Farah, A. (2021). Enhancing prediction of student success: Automated machine learning approach. Computers and Electrical Engineering, 89, 106903. https://doi.org/10.1016/j.compeleceng.2020.106903

Correspondence:


Milka Madahana

milka.madahana@wits.ac.za

Received: 31 Jan. 2022

Accepted: 30 May 2022

Published: 30 Aug. 2022