South African Journal of Industrial Engineering

On-line version ISSN 2224-7890

Print version ISSN 1012-277X

S. Afr. J. Ind. Eng. vol.32 n.4 Pretoria Dec. 2021

http://dx.doi.org/10.7166/32-4-2551

GENERAL ARTICLES

Future application of multisensory mixed reality in the human cyber-physical system

H.B. Santoso (I, II); D.K. Baroroh (III, IV, *); A. Darmawan (III, V, *)

(I) Department of Information Systems, Universitas Kristen Duta Wacana, Indonesia. https://orcid.org/0000-0001-8272-3066

(II) Institute of Service Science, National Tsing Hua University, Taiwan

(III) Department of Industrial Engineering and Engineering Management, National Tsing Hua University, Taiwan

(IV) Department of Mechanical and Industrial Engineering, Universitas Gadjah Mada, Indonesia. https://orcid.org/0000-0001-9578-0078

(V) Department of Industrial Engineering, Universitas Hasanuddin, Indonesia. https://orcid.org/0000-0001-6763-6992

ABSTRACT

Mixed reality as an emerging technology can improve users' experience. Using this technology, people can interact between virtual objects and the real world. Mixed reality has enormous potential for enhancing the human cyber-physical system for different manufacturing functions: planning, designing, production, monitoring, quality control, training, and maintenance. This study aims to understand the existing development of mixed reality technology in manufacturing by analysing patent publications from the InnovationQ-Plus database. Evaluations of trends in this developing technology have focused on qualitative literature reviews and insufficiently on patent technology analytics. Patents connected to mixed reality will be mapped to give technology experts a better grasp of present progress and insights for future technological development in the industry. Thus 709 patent publications are systematically identified and analysed to discover the technological trends. In addition, we map existing patent publications with manufacturing functions, and illustrate this with a technology function matrix. Finally, we identify future research in human cyber-physical system development by enhancing different human senses to give users more sensations while interacting with virtual objects. We provide insight into this human cyber-physical system development in three industries: automotive, food and beverage, and textiles.

OPSOMMING

Gemengde-werklikheid is 'n opkomende tegnologie wat gebruikers se ervaring kan verbeter. Hierdie tegnologie laat mense toe om interaksie te hê met virtuele voorwerpe in die regte wêreld. Gemengde-werklikheid het baie potensiaal om die mens-kuber-fisiese stelsel vir verskeie vervaardigingsprosesse te verbeter, soos beplanning, ontwerp, produksie, monitering, gehaltebeheer, opleiding en instandhouding. Hierdie studie ondersoek die ontwikkeling van gemengde-werklikheid tegnologie in vervaardiging deur 'n hersiening van patente in die InnovationQ-Plus databasis. Tendense in hierdie ontwikkelende tegnologie fokus op kwalitatiewe literatuurstudies eerder as op kwantitatiewe patent tegnologie ontledings. Patente wat met gemengde-werklikheid assosieer word, word gekarteer om tegnologiekenners 'n beter begrip van bestaande vordering en insigte vir toekomstige ontwikkeling in die bedryf te gee. So word 709 patente sistemies identifiseer en ontleed om die tendense te ontdek. Verder word bestaande patente gekarteer volgens vervaardigingsfunksies - dit word met 'n tegnologiefunksiematriks illustreer. Laastens word toekomstige navorsing oor mens-kuber-fisiese stelselontwikkeling identifiseer deur verskillende sintuie te gebruik om gebruikers meer sensasies te gee wanneer hulle met virtuele voorwerpe werk. Insig word spesifiek in die motor, voedsel- en drank-, en tekstielbedryf verskaf.

1 INTRODUCTION

Global competition has made businesses more competitive, and their technology has developed to ensure their long-term viability. Because of recent advances in operational technology (OT) and information technology (IT), the industrial sector has entered the age of Industry 4.0, which is characterised by the cyber-physical system (CPS). This revolution has increased efficiency, allowing increasingly diverse and productive production processes to meet clients' growing expectations. Artificial intelligence (AI) and the industrial Internet of Things (IIoT) enhance traditional processing infrastructure to handle more complex shop floor activities. However, migrating to CPS in real industrial settings is a major challenge. The Reference Architectural Model of Industry 4.0 (RAMI 4.0) is a solution that presents a step-by-step migration technique to enable connectivity and interoperability in order to identify clearly the point where OT meets IT [1]. Although automation technology offers a high degree of manufacturing system stability, autonomy, and intelligence, it cannot be guaranteed that technology will replace every operation in manufacturing processes.

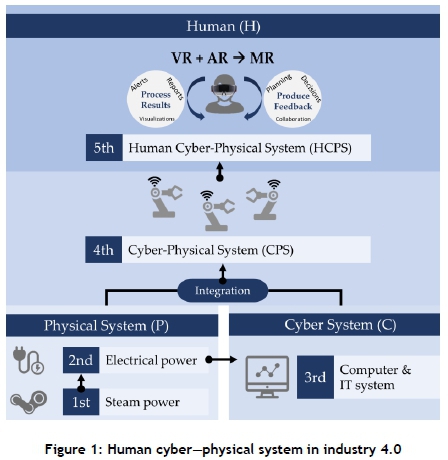

According to a McKinsey report [2], machines could fully replace fewer than 5% of manual tasks and operations, whereas 60% of all employees could have at least 30% of their activities automated. One possible approach is therefore to allow people to cooperate with the operational cyber-physical system by assuming the position of 'master'. This concept is presented as the fifth industrial revolution, known as the human cyber-physical system (HCPS) [3]. Figure 1 is a conceptual representation of HCPS in the context of adopting Industry 4.0.

Figure 1 represents the stages of the industrial revolution, from the first to the fourth. Combining physical and cyber systems resulted in the fourth stage, the cyber-physical system, which is capable of completing, controlling, and optimising operations. Even if the AI system is robust, the human part is still vital. Therefore, the system advances to a fifth level, producing a human cyber-physical system that will fully exploit and combine human and machine intellect synergistically [3].

Installing advanced technology in the manufacturing support system generates data that is evaluated and shown as visualised information, warnings, or reports. This feedback can then be used to improve human operations in manufacturing [4]. Immersive technology, which includes virtual reality (VR), augmented reality (AR), and mixed reality (MR), is one of the most effective approaches to enhancing human participation and HCPS in the industrial sector. MR is a user interface application that employs visualisation methods. It improves the view of the real world and allows for near-simultaneous communication between virtual and real settings. By amplifying knowledge, it enables remote participants to annotate user views and improves collaborative interactions. As a result, in the intelligent manufacturing environment, MR has considerable potential for HCPS.

This study focuses on MR technology, which is generated by mixing virtual reality (VR) and augmented reality (AR). It aims to answer two research questions: (1) How can existing multisensory MR, based on the patent literature, be applied to manufacturing operations? (2) What are the predominant human senses, and how can the possible future development of multisensory MR for different manufacturing operations be applied in three industries (automotive, food and beverage, and textiles)? MR is a cutting-edge technology that allows for maximum user involvement in both the real world and the virtual world. Various solutions to support MR environments are being developed and used across a wide range of industries. To understand the evolution of MR technology and its applications better, the authors reviewed literature [3, 5-13] from the Scopus database to build an MR ontology. We followed earlier research [3] in identifying the manufacturing operations that MR technology or interaction devices could enhance or facilitate. The review showed that MR has already been implemented in numerous production procedures, from planning to maintenance [5-7]. From the user's perspective, MR technology can be split into input and output [3, 8-13]. Both are crucial in the creation of immersive and intelligent systems to support human behaviour/nature. For the technology, we took seven papers as our reference to identify the critical technology of MR. Each author of this article identified the crucial technology in recent papers on MR and classified it. We then did an author triangulation to check and validate the findings, and drew the literature-based MR ontology shown in Figure 2.

2 LITERATURE REVIEW

2.1 Technology mining and patent analytics

The advancement of science and technology leads to innovation and the development of existing technologies. As a result there is a rapid evolution of technology and increasing publication of intellectual property such as patents. To understand the current technology, people conduct patent analyses. Patent analysis needs specific techniques to analyse the patent documents, such as patent classification, technology clustering, patent management mapping, patent bibliometrics, and patent quality analysis [14, 15].

The collection of patent documents is relevant to a specific industrial domain. Technology clustering provides a mechanism to group similar patent documents. This clustering outcome can help one to understand the research and development focus among firms [15, 16]. Meanwhile, a patent quality analysis helps the company and inventor to decide whether to extend new research and customise its development [17]. A patent management map makes visual the existing intellectual property roadmap. Using this roadmap, the company and inventor can plan new research and development policies, and recommend new areas of innovation that have more significant potential [16]. The patent map also helps to visualise the distribution of patents, monitor the trend of technological change, and infer patent portfolios' strategies [18]. Furthermore, to understand a patent's quality, the expert uses patent bibliometrics and patent quality analysis as a tool. Patent quality analysis usually includes citation analysis and backward and forward citation [17, 18].
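The citation side of patent quality analysis can be illustrated with a small sketch. It assumes that each patent record lists the patents it cites (its backward citations) and derives the forward citations by inverting those lists. The patent identifiers and the function name `forward_citations` are hypothetical and are not taken from the study's data.

```python
def forward_citations(backward):
    """Invert backward-citation lists into forward-citation lists.

    `backward` maps a patent id to the ids it cites.
    The result maps each patent id to the sorted ids that cite it.
    """
    forward = {}
    for citing, cited_list in backward.items():
        forward.setdefault(citing, [])  # keep patents that nobody cites
        for cited in cited_list:
            forward.setdefault(cited, []).append(citing)
    return {pid: sorted(citers) for pid, citers in forward.items()}


# Hypothetical toy data: P3 cites P1 and P2, P2 cites P1.
backward = {"P1": [], "P2": ["P1"], "P3": ["P1", "P2"]}
forward = forward_citations(backward)
forward_counts = {pid: len(citers) for pid, citers in forward.items()}
```

A high forward-citation count is commonly read as a sign of influence, which is why the two directions are usually reported together.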

People use different applications and platforms to obtain patent documents, such as Google Patents, InnovationQ Plus, and Derwent Innovation. These applications also offer functions and features that help users to extract patent information, such as assignees, patent claims, inventors, the international patent classification (IPC), the cooperative patent classification (CPC), country of origin, and much other information.

Patent analytics can be seen as a tool for comprehensive technology mining, for both supervised and unsupervised learning. The aims of patent analytics are to find and understand state-of-the-art technology trends based on the patent documents. By analysing and studying patent documents, people can get more insight into the existing technology and how far it has been developed. It can also decrease the R&D cost by up to 40%, since the patent analysis can avoid a false assessment of product, technology, and service development [19].

2.2 Technology function matrix

A technology function matrix (TFM) is a patent map that visualises two different parts of patent analytics: technology and management [20]. A TFM is also an indicator that is constructed to match the technology (represented by patent documents) and functions (represented by non-patent literature) of specific key terms [21]. In the technology part, people usually trace the technology's development from particular patent documents. Thus the technology part aims to gain more innovative ideas and to understand the specific technology's existing development track [18, 20]. On the other hand, the expert uses the management part to draw information related to the assignee, country, application date, publication date, IPC, CPC, citation, and function of a specific technology [20, 21].

There are some benefits that people can gain by drawing a TFM. First, understanding the TFM can help R&D personnel, innovators, and engineers to know about state-of-the-art technology developments [20]. Second, it can also help to analyse the development phase and implement the specific technology in specific functions [21]. Finally, the TFM can also help to decide future technology development and the prospect of the future implementation of the existing technology [18-20].

To draw a TFM, people need expert judgement, and to construct a TFM, people need to identify, read, analyse, and structure the patent documents into the specific intersection between technology and function [20]. That construction job is labour-intensive and time-consuming, and requires a lot of knowledge of the specific domain [21]. TFM construction also requires enormous volumes of patent documents and non-patent literature. The development of text mining methods and applications helps experts to analyse and construct the TFM efficiently [19, 21].
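To make the matrix construction concrete, the following is a minimal sketch that counts patents at each technology/function intersection by simple key-term matching. It is not the ontology-based method of [21]. The key terms, the sample abstracts, and the function name `build_tfm` are all hypothetical, and naive substring matching is assumed to be good enough for illustration only.

```python
def build_tfm(patents, tech_terms, func_terms):
    """Count patents at each technology/function intersection.

    A patent is counted in cell (t, f) when its text mentions at least one
    key term of technology t and at least one key term of function f.
    """
    matrix = {t: {f: 0 for f in func_terms} for t in tech_terms}
    for text in patents:
        lowered = text.lower()
        techs = [t for t, terms in tech_terms.items()
                 if any(term in lowered for term in terms)]
        funcs = [f for f, terms in func_terms.items()
                 if any(term in lowered for term in terms)]
        for t in techs:
            for f in funcs:
                matrix[t][f] += 1
    return matrix


# Hypothetical key terms and abstracts, for illustration only.
tech_terms = {
    "haptic": ["haptic", "vibration"],
    "eye-tracking": ["eye tracking", "gaze"],
}
func_terms = {
    "training": ["training", "trainee"],
    "quality control": ["inspection", "defect"],
}
patents = [
    "A haptic glove giving vibration feedback during operator training.",
    "Gaze-based inspection of assembled parts for defect detection.",
    "A head-mounted display with eye tracking for trainee guidance.",
]
tfm = build_tfm(patents, tech_terms, func_terms)
```

Each cell of `tfm` then holds the number of patents that pair a technology with a function, which is the quantity a TFM table reports.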

2.3 Cyber-physical system, human cyber-physical system, and digital-human senses

In reality, physical and human spaces have always interacted. Eventually, the cyber or digital space was integrated, giving rise to the concept of a cyber-physical system (CPS). The integration's evolution has been divided into three stages [22]. Stage one was the traditional human-physical system (HPS), in which operations were entirely manual, and information sensing, analysis, decision-making, and operation control were primarily the responsibility of humans. There was also a limitation in terms of quality, efficiency, and performance. CPS represents the introduction of digital integration as the second stage. During this phase, the cyber system's activities include information sensing, analysis, operation control, and decision-making. The task quality, efficiency, and process stability have all been enhanced. Humans, like technology, are expected to perform at higher levels of competence. Thus the advancement of technology is synonymous with people upgrading their skills, type of work, and status of responsibility.

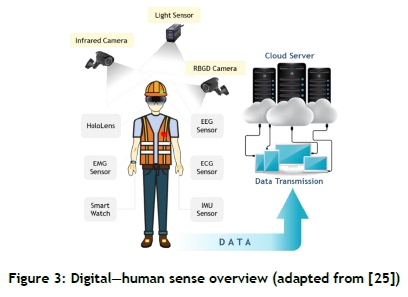

Furthermore, people will be surrounded by an increasing number of automated systems. Such systems can be used either to replace or to collaborate with human input. In general, full automation occurs when a procedure no longer requires human participation. Semi-automation is defined as a system that considers human insights in the control loop. HCPS is an example of an application that holds human input accountable throughout the process. According to RAMI 4.0, the manufacturing execution system (MES), which acts as a component manager for digitalised assets on the shop floor, plays a key architectural and functional role in CPS [1]. The majority of these attempts have centered on the technological aspect, ignoring the human-centered perspective. The third stage will acquire the capability of generating knowledge, and people working with these technologies will have the opportunity to engage in more creative aspects of the work. Humans' roles have shifted from passive information receivers to information or knowledge generators [23]. Taking human interaction into account, CPS evolved to HCPS to introduce next-generation intelligent manufacturing. To enhance human skill and interaction in HCPS, multisensory technology is needed [24]. It is possible to develop digital human senses by implementing certain technologies in the surrounding human environment (as seen in Figure 3).

The system is composed of both ambient and wearable sensors with different modalities [25]. Each sensor has a unique ability to collect specific information about the human worker. Various ambient sensors are used to capture workers' activities in the workplace. Ambient sensing can capture a large amount of data without interfering with the worker's movements. Nevertheless, the complex setup and occlusion issues are the main challenges in implementing ambient sensing. Therefore, wearable sensing is also applied, including HoloLens, EMG (surface electromyography), ECG (electrocardiogram), EEG (electroencephalogram), IMU (inertial measurement unit) sensors, and a smartwatch. All of the data produced in this scenario are synchronised and sent to the local workstation or cloud server via different transmission protocols. Once the data are stored in the cloud server, they can be easily accessed in real time.
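The source does not say how the sensor streams are synchronised. One common approach, sketched here purely as an assumption, is to pair each sample of a reference stream with the nearest-in-time sample of another stream and to discard pairs that are too far apart. The stream contents, the tolerance, and the function name `align_nearest` are hypothetical.

```python
from bisect import bisect_left


def align_nearest(reference, other, tolerance):
    """Pair each reference sample with the nearest-in-time sample of `other`.

    Both streams are lists of (timestamp_seconds, value) sorted by timestamp.
    A reference sample with no partner within `tolerance` seconds gets None.
    """
    times = [t for t, _ in other]
    aligned = []
    for t_ref, v_ref in reference:
        i = bisect_left(times, t_ref)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        best = min(candidates, key=lambda j: abs(times[j] - t_ref), default=None)
        if best is not None and abs(times[best] - t_ref) <= tolerance:
            aligned.append((t_ref, v_ref, other[best][1]))
        else:
            aligned.append((t_ref, v_ref, None))
    return aligned


# Hypothetical IMU and ECG samples, not measurements from the study.
imu = [(0.00, "a0"), (0.10, "a1"), (0.20, "a2")]
ecg = [(0.01, 72), (0.12, 73), (0.50, 75)]
synced = align_nearest(imu, ecg, tolerance=0.05)
```

The third IMU sample has no ECG reading within 50 ms, so it is kept with a `None` partner rather than being matched to a stale value.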

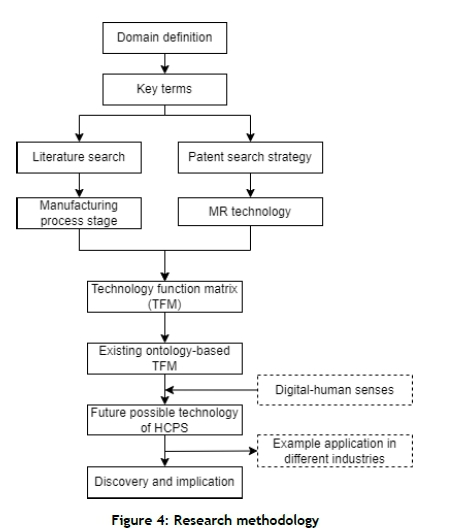

3 RESEARCH METHODOLOGY

As shown in Figure 4, the methodology proposed in this research consisted of three main parts: (1) technological specification, in which we briefly discussed the patent portfolio used mainly in MR technology for the manufacturing process; (2) the TFM, which was used to match the technology application and the manufacturing process, and for which we identified seven manufacturing processes: planning, designing, production, monitoring, quality control, training, and maintenance [26, 27]; and (3) the future application of a human cyber-physical system, in which we mapped the future human cyber-physical system and enhanced the user experience by using digital-human senses (visual, auditory, smell, taste, and haptic). To complete our analysis, we mapped these human senses to different industries, using three industries as examples: textiles, food and beverage, and car assembly. We also provided future application development and direction that might enhance and advance the human cyber-physical system's manufacturing processes in those three industries.

3.1 Patent search strategy

This research used the patent literature that InnovationQ-Plus collects from different patent offices. These were (1) the United States (USPTO); (2) China (CNIPA); (3) Taiwan (TIPO); (4) the World Intellectual Property Organization (WIPO); (5) Europe (EPO); and a number of other patent offices. We analysed the patent literature in the ten years from 2010 to 2019 for active and granted patent publications. We limited the CPC to narrow down our search. We used the search strategy (see Table 1) in the InnovationQ-Plus database, which yielded 709 patent documents for further analysis.
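The three search constraints described above (publication window, legal status, and CPC restriction) can be sketched as a simple record filter. This is not the InnovationQ-Plus query syntax. The record fields, the CPC prefix, and the function name `matches_search` are hypothetical, and the sketch assumes that 'active and granted' means both conditions must hold.

```python
def matches_search(record, year_from=2010, year_to=2019, cpc_prefixes=("G06T19",)):
    """Return True when a patent record satisfies all three constraints."""
    in_window = year_from <= record["year"] <= year_to
    # Assumption: a record must be both active and granted to qualify.
    live = record["active"] and record["granted"]
    # The CPC prefix is a placeholder, not the restriction used in Table 1.
    in_scope = any(code.startswith(cpc_prefixes) for code in record["cpc"])
    return in_window and live and in_scope


# Hypothetical records, for illustration only.
records = [
    {"id": "A", "year": 2015, "active": True, "granted": True, "cpc": ["G06T19/006"]},
    {"id": "B", "year": 2009, "active": True, "granted": True, "cpc": ["G06T19/006"]},
    {"id": "C", "year": 2018, "active": True, "granted": False, "cpc": ["G06T19/006"]},
    {"id": "D", "year": 2019, "active": True, "granted": True, "cpc": ["A61B5/00"]},
]
selected = [r["id"] for r in records if matches_search(r)]
```

Only record A passes: B falls outside the window, C is not granted, and D lies outside the assumed CPC scope.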

3.2 Technology function matrix

We continued our analysis by using the ontology-based TFM, an approach that reduces the labour needed for technology mining and enhances the accuracy and consistency of patent analysis [21]. A TFM consists of two indicators: technology and functions. It is developed to match the technologies and functions of specific patents and to place them in a single matrix. Thus a TFM can help people to understand technological developments and identify potential research and products [21]. In this TFM analysis, we used our patent portfolio in the technology part and matched it with the seven manufacturing functions listed at the start of Section 3. This TFM analysis is the foundation for our future application development of the HCPS, which we discuss briefly in the next subsection.

3.3 Future application of human cyber-physical system

In this part we give a broad idea of how to improve the accessibility of HCPS in different manufacturing processes. MR technology provides human-machine collaboration. Using this feature, humans can collaborate with machines to advance the manufacturing process. We extended our future research direction by adding digital-human sense stimuli (visual, haptic, smell, taste, and auditory). Human sense stimuli are essential in a human cyber-physical system [25]. Adding them to a human cyber-physical system can enhance and advance the user experience. We also described HCPS and digital multisensory implementations for different manufacturing processes in three industries: textiles, food and beverage, and automotive.

4 RESULTS AND DISCUSSION

4.1 Patent analysis

Figure 5 is a map of the patent analysis based on the country of origin and the IPC code. It reveals that the ten patent jurisdictions with the largest numbers of MR patent publications were Australia (AU), Canada (CA), China (CN), the European Patent Office (EP), Spain (ES), France (FR), the United Kingdom (GB), Japan (JP), South Korea (KR), and the United States (US). The United States (US: 455) dominated the top ten, followed by China (CN: 134), South Korea (KR: 79), and Japan (JP: 22). According to the IPC code, most of the patents were in section G, which relates to physics. There were three dominant IPC codes: G06T (image data processing or generation), G06F (electric digital data processing), and G02B (optical elements, systems, or apparatus).
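The tallies behind a map of this kind can be sketched with two counters, one per country code and one per four-character IPC subclass. The records below are hypothetical and do not reproduce the study's 709 documents. The sketch assumes that a patent carrying several codes from the same subclass should be counted once for that subclass.

```python
from collections import Counter


def tally(records):
    """Count patents per country code and per IPC subclass (first four characters)."""
    by_country = Counter(r["country"] for r in records)
    by_ipc = Counter(
        subclass
        for r in records
        for subclass in {code[:4] for code in r["ipc"]}  # de-duplicate per patent
    )
    return by_country, by_ipc


# Hypothetical records, for illustration only.
records = [
    {"country": "US", "ipc": ["G06T19/00", "G06F3/01"]},
    {"country": "US", "ipc": ["G02B27/01"]},
    {"country": "CN", "ipc": ["G06T19/00", "G06T7/00"]},
]
by_country, by_ipc = tally(records)
top_country = by_country.most_common(1)
```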

4.2 Technology function matrix result

A technology function matrix was developed to match the existing patent technology with the different manufacturing processes. From the domain ontology of MR (see Figure 2), we took the technologies that were discussed most: (1) auditory; (2) organic light-emitting diode (OLED); (3) holographic; (4) haptic; (5) eye-tracking; (6) head-mounted devices (HMD); and (7) smell and taste sensory technology. On the other hand, we identified seven essential functions in the manufacturing processes: (1) planning; (2) designing; (3) production; (4) monitoring; (5) quality control (QC); (6) training; and (7) maintenance. To create this TFM, we used prior non-patent literature as our training dataset and patent literature as our testing dataset to match technology and function. Table 2 shows the TFM results, with an extra column, 'Human senses', that maps each technology to specific human senses.
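The split between a non-patent training set and a patent testing set can be illustrated with a deliberately simple stand-in for the ontology-based text mining of [21]. The sketch learns a few distinctive terms per function from labelled non-patent text and then assigns each patent to the function whose terms it shares most. All texts, labels, and function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lower-case a text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def learn_terms(labelled_docs, top_k=3):
    """Keep the top_k most frequent terms per function that no other function uses."""
    counts = {}
    for label, text in labelled_docs:
        counts.setdefault(label, Counter()).update(tokenize(text))
    terms = {}
    for label, counter in counts.items():
        others = set()
        for other_label, other_counter in counts.items():
            if other_label != label:
                others.update(other_counter)
        unique = [(w, c) for w, c in counter.items() if w not in others]
        unique.sort(key=lambda wc: (-wc[1], wc[0]))  # frequency, then alphabetical
        terms[label] = {w for w, _ in unique[:top_k]}
    return terms


def assign_function(text, terms):
    """Return the function sharing the most learned terms with the text, or None."""
    tokens = set(tokenize(text))
    scores = {label: len(tokens & vocab) for label, vocab in terms.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


# Hypothetical non-patent training texts and patent test texts.
training_docs = [
    ("training", "operators learn assembly skills; novice operators practise skills"),
    ("maintenance", "technicians repair machines; remote repair guidance, faulty machines"),
]
terms = learn_terms(training_docs)
label_a = assign_function("Holographic overlay guiding technicians to repair faulty pumps", terms)
label_b = assign_function("Haptic glove for novice operators to practise assembly skills", terms)
label_c = assign_function("An OLED panel with low power draw", terms)
```

A patent that shares no learned term with any function is left unassigned, which mirrors the need for expert review of unmatched documents.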

From Table 2, we can see that the sensory technologies used most often in MR applications were haptic and eye-tracking. On the other hand, the sensory technologies used least often were OLED and smell and taste sensory technology. We therefore discuss four example technologies from the TFM: eye-tracking, holographic, smell and taste, and haptic. We also provide some examples of technology development aligned with the manufacturing processes.

Eye-tracking is a low-cost and highly accurate technology, but it has received relatively little attention in the manufacturing process [28, 29]. Eye-tracking technology can be used to understand and track the operation process and to trigger devices [28, 29]. It can also be used in different manufacturing functions. For instance, in quality control, eye-tracking technology can help to detect potential defects in a product, especially a complex product. In training, eye-tracking technology can help to understand and quantify experienced workers' implicit knowledge and transfer it to new staff members [28].

Holographic display technology allows the creation of a virtual 3D image, which distinguishes it from other forms of 3D display. This ability to project 3D images makes holographic display technology suitable for planning, monitoring, quality control, and maintenance. There are already some uses of holographic technology in manufacturing. For example, Hennigar et al. [30] proposed holographic technology to design highly efficient and reliable volume phase holographic optical elements. Holographic technology is suitable for this process, since it needs photosensitive materials.

Smell and taste technology, too, can be used in the manufacturing process, especially in the food and beverage-related industry. Currently this technology needs to capture the flavour and fragrance of specific ingredients. Smell and taste technology is also required in particular industries, such as cigarette and tea companies. In manufacturing, it can be used in the monitoring and quality-control processes, for example as a non-destructive technique for detecting insects in fruit, or as a hybrid electronic nose to evaluate pasteurised milk [31].

Haptic technology, also known as kinesthetic communication or 3D touch, can create an experience of contact by applying forces, vibrations, and motions to the user. Haptic technology generates an electric current that drives a response to create a vibration. It is helpful in the manufacturing process, for example in planning production with remote human-robot collaboration in a hazardous manufacturing environment [32]. Haptic technology can also be used to evaluate workforce gestures with visual detection and haptic feedback [33].

4.3 The development of HCPS in three different industries

Industrial evolution brings an integration of technologies and human cognitive abilities in the manufacturing process. With this integration, people now want not only to focus on functionality but also to enhance human sensations. By drawing on the five human senses (visual, auditory, smell, taste, and touch), existing technologies can meet people's demand for sensation technologies. This also applies to HCPS technology. However, manufacturing industries have some operations that cannot be replaced by technology, and for which humans should not have to bear a high mental workload.

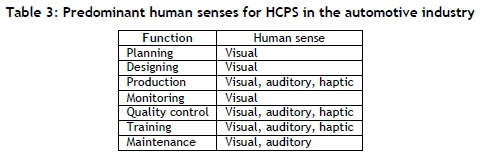

Therefore, there is a need for technology to support human activities as if they could do them naturally. Multisensory HCPS technology should provide human sensations for the collaboration between humans and technology in the manufacturing process. Different manufacturing processes rely on different human senses. To understand better the existing multi-modality HCPS technology, we use three sectors to discuss the future development of multisensory HCPS: automotive, food and beverage, and textiles. These three sectors are chosen as examples because they vary in the human sensations they require: the automotive sector mostly needs haptic (touch) technology in the manufacturing process; the food and beverage industry requires smell and taste technology; and the textile sector requires visual technology to understand patterns and colours.

4.3.1 Automotive industries

MR, including virtual reality (VR) and/or augmented reality (AR), has been implemented on a wide scale in the automotive industry. This technology has proven benefits for improving safety, performance, and product design, for better training, for the faster execution of activities, for error reduction, and for user satisfaction [34, 35]. The literature review found that MR technology has been implemented to support human involvement in systems, also known as human cyber-physical systems (HCPS). Some MR implementations in the automotive industries have been in the following areas: (1) vehicle design and conception (for example, virtual mockups for manipulating design and ergonomic considerations); (2) factory planning (for example, virtual simulation of the entire production system and detecting discrepancies/collisions, and providing in situ visualisation); (3) vehicle production and assembly (to evaluate tasks such as picking, assembly, and quality control; video-mapping the process of picking shipments in storage logistics can shorten the time needed for preparation); (4) marketing and sales support (for instance, a virtual showroom to improve the customer experience by offering an excellent way for customers to view and test drive a car from a distance; it also improves the after-sales experience for buyers who can do self-assisted maintenance work with AR guidance tools and so understand their cars better); (5) maintenance support and inspection tasks, such as remote assistance, collaboration, and automobile servicing to support vehicle diagnostics and repair, thus reducing the learning time and the mental effort; (6) training programmes related to the production process in which participants are helped to work without making unnecessary errors; and improving teams' satisfaction rates while reducing risks and resource loss.

The visual sense is still dominant in automotive applications. However, the industry has already considered multisensory systems to create a comprehensive experience [36]. Multisensory stimuli closely mimic human perceptions of the environment using the five senses, thus improving the overall awareness of the human operator at work. Furthermore, MR provides flexibility in overcoming the limitations of the manufacturing environment and increases users' comfort level by increasing the amount of information delivered and by reducing the recipient's cognitive load [3, 37]. To elaborate on multisensory HCPS in the automotive industry, we illustrate it using the predominant human senses.

Most MR technology developments and applications in the automotive industry combine the visual, auditory, and haptic senses; the senses of smell and taste are not yet used in MR technology. Integrating the visual and smell senses could support the maintenance process. For example, a combination of the visual and smell senses (odour) in an electronic nose would provide more information so that users could quickly react to a machine's condition, diagnose it, and make a decision [38]. The development of autonomous vehicles is still a hot issue in the automotive industry. Multisensory HCPS could support vehicle testing programmes to provide users with greater safety. Multisensory stimuli (combining the visual, auditory, haptic, and smell senses) could support the comprehensive development and testing of autonomous vehicles: they could superimpose the trajectory on the driver's field of view to support a manoeuvre, suggest directions when navigation decisions are being made, and reduce the cognitive effort of the driver. However, some MR technology implementations have encountered obstacles [35] such as these: (1) registration technology suffers from inaccurate tracking on a large scale; (2) display devices have problems with reliability and their readiness for hands-free transport; (3) the driver's information display should not confuse drivers or block their view; and (4) the ethical considerations for the technology should focus on privacy, security, and risks to accessibility.

4.3.2 Food and beverage industries

The food and beverage industries produce and process different foods, beverages, and dietary supplements [39]. They are therefore among the industries that are multidimensional, complex, and challenging. In addition, these industries have unique characteristics because they manufacture raw materials, semi-finished products, and finished products. The future development of human cyber-physical systems might therefore help with the manufacturing functions. One possible future application of HCPS in the food and beverage industries might be to design and create artificial flavours by drawing on the human senses, particularly taste, smell, and vision. In this case, a human cyber-physical system could be improved to better predict and deliver the flavours preferred by customers.

Furthermore, since the flavour perception is affected by changing the colour of a food or drink [40, 41], it might be possible to provide visual perception through colour in designing an artificial taste in the production process. Another potential application of multisensory HCPS is using auditory and haptic technology to measure and improve a food's texture and sound. The sound perception of a food and beverage product carries some meaning for customers. For example, the crispness of food can give the sense of freshness in many fruits and vegetables, and crunchiness in some potato chips [42]. Multisensory HCPS also helps the food and beverage industry to design packaging as the critical element in performing multiple functions to optimise the customer experience [43]. Different sensory stimuli, such as haptic, auditory technology, or vision through an OLED screen or HMD, can help to design better food packaging by focusing on creating a better customer perception and experience of the food and beverage industry. To elaborate on multisensory HCPS in the food and beverage industries, we draw on the predominant human senses.

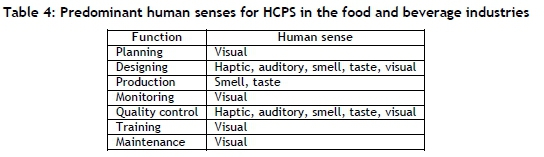

Table 4 shows the predominant human senses for multisensory HCPS that could enhance the manufacturing process. We suggest that smell and taste technology could be used in food and beverage designing, production, and quality control. Currently, some smell and taste technologies might be integrated with HCPS - for instance, the electronic nose and the electronic tongue [44], which could be used to design and measure the quality of food and beverage products. They might be complemented by using haptic technology to understand the food's texture, vision to enhance colour perceptions, and auditory technology to develop the sound perceptions of food and beverages. Multisensory HCPS could therefore play a significant role in design and quality control. In addition, visual technology could be used in food and beverage production: through visuals, it might be significantly easier to convey complex information about the manufacturing process.

4.3.3 Textile industries

The introduction of new fibres and textiles and of cutting-edge processing technologies has fuelled the expansion of the textile industry for several decades. These trends have stimulated the industry to be more innovative and creative by adopting advanced technologies. The market has also benefitted from changes in customer lifestyles. Thus the textile and fashion industries have recently integrated human cyber-physical systems to be more user-friendly and to respond to future challenges in lifestyle needs and requirements, such as being environmentally friendly. This could provide many other advantages, especially in the production process and the customisation of textile products.

One of the MR technology applications in the textile industry is virtual prototyping (VP) in 3D. This application is developed to plan and design the manufacturing process. It can also help to visualise the prospective customer for greater clarity [45]. Production scheduling and monitoring are also implemented in textile industries [46]. In marketing communications, magic mirror technology, introduced in 2012, enabled customers to choose colour options by using a touchscreen while 'trying on' clothes in front of the mirror, to customise different garments for their body type, to fit shoes by just pointing the camera at their feet, and to look around a virtual showroom. These technologies generally provided visual perception that was more personalised for the customer.

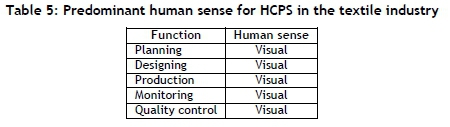

Table 5 shows the predominant human sense - the visual sense - in the textile industry that is used to improve production and marketing communications. MR technology can strengthen textile industries' robustness and capabilities, and transcend conventional manufacturing technologies in the future. Furthermore, the extended development of the textile industry has made significant progress, especially in relation to smart materials being integrated with micro-electronic systems for wearable applications, better known as STIMES. The study of Shi et al. [47] covered several aspects of STIMES, such as the functional aspects of materials, the manufacturing process for innovative textile components, available devices, system architectures, heterogeneous integration, wearable applications in human-related and nonhuman categories (haptic technology), and the safety and security of STIMES. Moody et al. [48] explored the potential use of the sense of touch in physical designs and virtual experiences in fashion and in the textiles of the future. Finally, Ramaiah [49] presented a theoretical study of applying innovative materials and IoT applications in textile technology.

5 CONCLUSION

The development of immersive technology such as MR can help to enhance human collaboration and involvement in cyber-physical systems, especially human cyber-physical systems. As MR has gained much attention in the current research literature, we used patent publication data from the InnovationQ-Plus database to understand recent technological developments. We found that the United States, China, South Korea, and Japan have dominated the development of MR technology, focusing on image data processing or generation, electric data processing, and optical elements, systems, and apparatus. Furthermore, to understand the mapping between technology and the manufacturing function, we employed an ontology-based TFM to enhance our analysis. Our TFM shows that haptic and eye-tracking are the sensory technologies that are most often adopted in MR for HCPS. However, smell and taste sensory technology, as the most recent technological development, is the least used in MR in manufacturing. Therefore, future growth could be maximised by implementing this technology.
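The idea behind a technology function matrix can be illustrated with a minimal sketch: each cell counts the patents that pair a sensory technology with a manufacturing function. The patent records, tags, and function names below are hypothetical and are not taken from the dataset analysed in this study; the sketch also uses a simple co-occurrence count rather than the ontology-based procedure employed here.

```python
from collections import Counter

# Hypothetical patent records (illustrative only, not from the study's dataset).
# Each record lists the sensory technologies and manufacturing functions
# that the patent has been tagged with.
patents = [
    {"id": "P1", "technologies": ["haptic"], "functions": ["training"]},
    {"id": "P2", "technologies": ["haptic", "eye-tracking"], "functions": ["maintenance"]},
    {"id": "P3", "technologies": ["eye-tracking"], "functions": ["quality control", "training"]},
]


def build_tfm(records):
    """Count the patents for every (technology, function) pair."""
    counts = Counter()
    for record in records:
        # set() guards against a tag being listed twice on one patent.
        for tech in set(record["technologies"]):
            for func in set(record["functions"]):
                counts[(tech, func)] += 1
    return counts


def as_table(counts, technologies, functions):
    """Lay the counts out as rows (technologies) by columns (functions)."""
    return [[counts.get((t, f), 0) for f in functions] for t in technologies]


technologies = ["haptic", "eye-tracking", "smell", "taste"]
functions = ["training", "maintenance", "quality control"]
table = as_table(build_tfm(patents), technologies, functions)

for tech, row in zip(technologies, table):
    print(f"{tech:<14}", row)
```

In such a matrix, rows that remain empty (here the smell and taste rows) point to technology and function combinations with little or no patent activity.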

We extended this patent text-mining analysis by examining the implementation of multisensory human cyber-physical systems. Multisensory HCPS technology can bring human-technology collaboration closer to reality by providing human sensations. We analysed the possible future performance of multisensory HCPS technology in three industries: automotive, food and beverage, and textile. As a result, we identified possible future multisensory MR applications in those three industries, and suggested that they could enhance HCPS in the various stages of the manufacturing functions.

Our study has some limitations. First, the dataset used for this analysis came only from the patent literature. We specifically analysed patent publications because they capture recent developments in the technology. However, we consider that the non-patent literature is also important for understanding current research, mainly from universities and research institutes. Therefore, we suggest that future research add the non-patent literature to the dataset. Second, our TFM methodology could be enhanced to give a deeper understanding of MR development in manufacturing. Therefore, we propose that future research combine the TFM with other text-mining methodologies. Third, the predominant human senses for HCPS that we identified in three different industries could be regarded as our conceptual contribution to the further development of HCPS. Therefore, future research could develop HCPS applications in various sectors and in other functions by referring to our work.

REFERENCES

[1] D. Schulte and A. W. Colombo, "Rami 4.0 based digitalization of an industrial plate extruder system: Technical and infrastructure challenges," in IECON 2017 - 43rd Annual Conference of the IEEE Industrial Electronics Society, 2017, pp. 3506-3511.

[2] J. Manyika, "Technology, jobs and the future of work," available at https://www.mckinsey.com/featured-insights/employment-and-growth/technology-jobs-and-the-future-of-work, McKinsey Global Institute Report, 2017, accessed 19 March 2021.

[3] D. K. Baroroh, C.-H. Chu, and L. Wang, "Systematic literature review on augmented reality in smart manufacturing: Collaboration between human and computational intelligence," Journal of Manufacturing Systems, vol. 61, pp. 696-711, 2020.

[4] C. Emmanouilidis, P. Pistofidis, L. Bertoncelj, V. Katsouros, A. Fournaris, C. Koulamas, and C. Ruiz-Carcel, "Enabling the human in the loop: Linked data and knowledge in industrial cyber-physical systems," Annual Reviews in Control, vol. 47, pp. 249-265, 2019.

[5] R. A. J. de Belen, H. Nguyen, D. Filonik, D. Del Favero, and T. Bednarz, "A systematic review of the current state of collaborative mixed reality technologies: 2013-2018," AIMS Electronics and Electrical Engineering, vol. 3, no. 2, pp. 181-223, 2019.

[6] A. S. Nittala, N. Li, S. Cartwright, K. Takashima, E. Sharlin, and M. C. Sousa, "PLANWELL: Spatial user interface for collaborative petroleum well-planning," in SIGGRAPH Asia 2015 Mobile Graphics and Interactive Applications, pp. 1-8, 2015.

[7] A. R. Singh, V. Suthar, and V. S. K. Delhi, "Augmented reality (AR) based approach to achieve an optimal site layout in construction projects," in ISARC, Proceedings of the International Symposium on Automation and Robotics in Construction, 2017, vol. 34, IAARC Publications.

[8] S. Fleck and G. Simon, "An augmented reality environment for astronomy learning in elementary grades: An exploratory study," in Proceedings of the 25th Conference on l'Interaction Homme-Machine, pp. 14-22, 2013.

[9] I. M. Gironacci, R. McCall, and T. Tamisier, "Collaborative storytelling using gamification and augmented reality," in International Conference on Cooperative Design, Visualization and Engineering, Springer, pp. 90-93, 2017.

[10] E. Ch'ng, D. Harrison, and S. Moore, "Shift-life interactive art: Mixed-reality artificial ecosystem simulation," Presence, vol. 26, no. 2, pp. 157-181, 2017.

[11] M. McGill, J. H. Williamson, and S. Brewster, "Examining the role of smart TVs and VR HMDs in synchronous at-a-distance media consumption," ACM Transactions on Computer-Human Interaction (TOCHI), vol. 23, no. 5, pp. 157, 2016.

[12] G. Kurillo, A. Y. Yang, V. Shia, A. Bair, and R. Bajcsy, "New emergency medicine paradigm via augmented telemedicine," in International Conference on Virtual, Augmented and Mixed Reality, Springer, pp. 502-511, 2016.

[13] L. A. Shluzas, G. Aldaz, and L. Leifer, "Design thinking health: Telepresence for remote teams with mobile augmented reality," in Design Thinking Research, Switzerland: Springer, 2016, pp. 53-66.

[14] X. Tao, N. Xu, M. Xie, and L. Tang, "Progress of the technique of coal microwave desulfurization," International Journal of Coal Science & Technology, vol. 1, no. 1, pp. 113-128, 2014.

[15] L. Atzori, A. Iera, and G. Morabito, "The Internet of Things: A survey," Computer Networks, vol. 54, no. 15, pp. 2787-2805, 2010.

[16] D. Guinard, V. Trifa, S. Karnouskos, P. Spiess, and D. Savio, "Interacting with the SOA-based internet of things: Discovery, query, selection, and on-demand provisioning of web services," IEEE Transactions on Services Computing, vol. 3, no. 3, pp. 223-235, 2010.

[17] A. C. Jhuang, J. J. Sun, A. J. Trappey, C. V. Trappey, and U. H. Govindarajan, "Computer supported technology function matrix construction for patent data analytics," in 2017 IEEE 21st International Conference on Computer Supported Cooperative Work in Design (CSCWD), IEEE, pp. 457-462, 2017.

[18] K. Liu, Y. Yen, and Y.-H. Kuo, "A quick approach to get a technology-function matrix for an interested technical topic of patents," International Journal of Arts and Commerce, vol. 2, no. 6, pp. 85-96, 2013.

[19] A. J. Trappey, C. V. Trappey, C.-Y. Fan, A. P. Hsu, X.-K. Li, and I. J. Lee, "IoT patent roadmap for smart logistic service provision in the context of Industry 4.0," Journal of the Chinese Institute of Engineers, vol. 40, no. 7, pp. 593-602, 2017.

[20] T.-Y. Cheng and M.-T. Wang, "The patent-classification technology/function matrix - A systematic method for design around," Journal of Intellectual Property Rights, vol. 18, no. 2, pp. 158-167, 2013.

[21] A. J. Trappey, C. V. Trappey, C.-Y. Fan, and I. J. Lee, "Consumer driven product technology function deployment using social media and patent mining," Advanced Engineering Informatics, vol. 36, pp. 120-129, 2018.

[22] J. Zhou, Y. Zhou, B. Wang, and J. Zang, "Human-cyber-physical systems (HCPSs) in the context of new-generation intelligent manufacturing," Engineering, vol. 5, no. 4, pp. 624-636, 2019.

[23] M. Krugh and L. Mears, "A complementary cyber-human systems framework for Industry 4.0 cyber-physical systems," Manufacturing Letters, vol. 15, pp. 89-92, 2018.

[24] S. Covaci, M. Repetto, and F. Risso, "A new paradigm to address threats for virtualized services," in 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC), vol. 2, IEEE, pp. 689-694, 2018.

[25] F. Tao, Q. Qi, L. Wang, and A. Nee, "Digital twins and cyber-physical systems toward smart manufacturing and industry 4.0: Correlation and comparison," Engineering, vol. 5, no. 4, pp. 653-661, 2019.

[26] Y. Hao and P. Helo, "The role of wearable devices in meeting the needs of cloud manufacturing: A case study," Robotics and Computer-Integrated Manufacturing, vol. 45, pp. 168-179, 2017.

[27] H. Culbertson, S. B. Schorr, and A. M. Okamura, "Haptics: The present and future of artificial touch sensation," Annual Review of Control, Robotics, and Autonomous Systems, vol. 1, pp. 385-409, 2018.

[28] J. Niemann, C. Fussenecker, and M. Schlösser, "Eye tracking for quality control in automotive manufacturing," in European Conference on Software Process Improvement, Springer, pp. 289-298, 2019.

[29] J. Niemann, C. Fussenecker, M. Schlösser, and E. Ocakci, "The workers perspective: Eye tracking in production environments," Acta Technica Napocensis-Series: Applied Mathematics, Mechanics, And Engineering, vol. 64, no. 1-S1, 2021.

[30] R. A. Hennigar, F. McCarthy, A. Ballon, and R. B. Mann, "Holographic heat engines: General considerations and rotating black holes," Classical and Quantum Gravity, vol. 34, no. 17, p. 175005, 2017.

[31] P. Wang, L. Zhuang, Y. Zou, and K. J. Hsia, "Future trends of bioinspired smell and taste sensors," in Bioinspired Smell and Taste Sensors, Beijing: Science Press, 2015, pp. 309-324.

[32] H. Liu and L. Wang, "Remote human-robot collaboration: A cyber-physical system application for hazard manufacturing environment," Journal of Manufacturing Systems, vol. 54, pp. 24-34, 2020.

[33] C. Barbosa, L. F. Souza, E. de Lima, B. Silva, O. Santana Jr, and G. Alberto, "Human gesture evaluation with visual detection and haptic feedback," in Proceedings of the 24th Brazilian Symposium on Multimedia and the Web, pp. 277-282, 2018.

[34] Z. Cujan, G. Fedorko, and N. Mikusová, "Application of virtual and augmented reality in automotive," Open Engineering, vol. 10, no. 1, pp. 113-119, 2020.

[35] R. G. Boboc, R.-L. Chiriac, and C. Antonya, "How augmented reality could improve the student's attraction to learn mechanisms," Electronics, vol. 10, no. 2, p. 175, 2021.

[36] Q. Wu and Z. Zhang, "Vehicle multi-sensory user interaction design research based on MINDS methods," in International Conference on Applied Human Factors and Ergonomics, Springer, pp. 442-447, 2019.

[37] C. D. Wickens, "Multiple resources and performance prediction," Theoretical Issues in Ergonomics Science, vol. 3, no. 2, pp. 159-177, 2002.

[38] W. Jia, G. Liang, Z. Jiang, and J. Wang, "Advances in electronic nose development for application to agricultural products," Food Analytical Methods, vol. 12, no. 10, pp. 2226-2240, 2019.

[39] A. Luque, M. E. Peralta, A. De Las Heras, and A. Córdoba, "State of the Industry 4.0 in the Andalusian food sector," Procedia Manufacturing, vol. 13, pp. 1199-1205, 2017.

[40] C. Spence, C. A. Levitan, M. U. Shankar, and M. Zampini, "Does food color influence taste and flavor perception in humans?" Chemosensory Perception, vol. 3, no. 1, pp. 68-84, 2010.

[41] J. Hoegg and J. W. Alba, "Taste perception: More than meets the tongue," Journal of Consumer Research, vol. 33, no. 4, pp. 490-498, 2007.

[42] C. Spence, "Multisensory flavor perception," Cell, vol. 161, no. 1, pp. 24-35, 2015.

[43] C. Velasco, C. Michel, J. Youssef, X. Gamez, A. D. Cheok, and C. Spence, "Colour-taste correspondences: Designing food experiences to meet expectations or to surprise," International Journal of Food Design, vol. 1, no. 2, pp. 83-102, 2016.

[44] J. Tan and J. Xu, "Applications of electronic nose (e-nose) and electronic tongue (e-tongue) in food quality-related properties determination: A review," Artificial Intelligence in Agriculture, vol. 4, pp. 104-115, 2020.

[45] A. Rudolf, A. Cupar, and Z. Stjepanovic, "Designing the functional garments for people with physical disabilities or kyphosis by using computer simulation techniques," Industria Textila, vol. 70, no. 2, pp. 182-191, 2019.

[46] D. Mourtzis, V. Siatras, J. Angelopoulos, and N. Panopoulos, "An augmented reality collaborative product design cloud-based platform in the context of learning factory," Procedia Manufacturing, vol. 45, pp. 546-551, 2020.

[47] Q. Shi, J. Sun, C. Hou, Y. Li, Q. Zhang, and H. Wang, "Advanced functional fiber and smart textile," Advanced Fiber Materials, vol. 1, no. 1, pp. 3-31, 2019.

[48] W. Moody, P. M. Langdon, and M. Karam, "Enhancing the fashion and textile design process and wearer experiences," in Cambridge Workshop on Universal Access and Assistive Technology, London: Springer-Verlag, pp. 51-61, 2018.

[49] G. B. Ramaiah, "Theoretical analysis on applications aspects of smart materials and Internet of Things (IoT) in textile technology," Materials Today: Proceedings, vol. 45, pp. 4633-4638, 2021.

Submitted by authors 11 Jul 2021

Accepted for publication 5 Oct 2021

Available online 14 Dec 2021

* Corresponding author: dawi.karomati.b@mail.ugm.ac.id; armin.d@unhas.ac.id