South African Journal of Industrial Engineering

On-line version ISSN 2224-7890

Print version ISSN 1012-277X

S. Afr. J. Ind. Eng. vol.32 n.2 Pretoria Aug. 2021

http://dx.doi.org/10.7166/32-2-2484

GENERAL ARTICLES

Investigation of user interface of gyro-sensor-based hand gestural drone controller

Junghun L. (I); Kyungdoh K. (II),*

(I) Department of Industrial Design, Hongik University, Seoul, Republic of Korea. https://orcid.org/0000-0001-7854-6853

(II) Department of Industrial-Data Engineering, Hongik University, Seoul, Republic of Korea. https://orcid.org/0000-0003-1062-6261

ABSTRACT

Joystick controllers are used mainly for modern civil drones. However, joystick controllers are non-intuitive and require two hands to be used simultaneously. Although single-handed drone controllers using a joystick and hand gestures have been introduced, they have only replaced the right stick of the joystick controller with gyro sensors. While this approach retains interface continuity with conventional joystick controllers, it is not user-centric. Therefore we propose a gestural drone controller based on hand gestures, and compare it experimentally with conventional controllers, including an investigation of how the differences depend on the user's joystick experience. We separate participants into expert and novice joystick groups to investigate the effects of prior joystick controller experience (e.g., with radio-controlled cars or game consoles) for each controller type. The conventional joystick controller is found to be superior to the conventional gestural controller for five out of nine criteria, and superior to the proposed gestural controller for three out of nine criteria. The proposed gestural controller is more natural than the conventional gestural controller. There tends to be an interaction effect between joystick experience and controller type with respect to the naturalness of the controller.

SUMMARY

Joystick controllers are often used as controllers for modern drones. Joystick controllers are not intuitive and require the simultaneous use of two hands. Although new single-handed drone controllers with a single joystick have already been introduced, they have only replaced the right-hand joystick with gyroscopes. This design retains interface continuity with conventional joystick controllers, but it is not user-centred. This article presents the design of a controller that makes use of gestures. The design is compared with conventional controllers. The comparison includes an investigation of the differences depending on the user's experience. Participants are divided into novice and experienced groups to determine the influence of joystick experience for each controller type. The conventional joystick controller performs better than the conventional gesture-based controller for five of the nine criteria. It also performs better than the proposed controller for three of the nine criteria. The proposed gesture-based controller is more intuitive than the conventional gesture-based controller. The user's experience also played a role when considering how intuitive the controller is.

1 INTRODUCTION

Drones were originally developed for military purposes, but have become widely used in the civil sector for various purposes, including deliveries and aerial photography [1]. They also offer endless possibilities for scientific investigation, emergency responses, traffic control, etc. [1]. The drone market has been growing steadily, and drones are expected to become as indispensable as smartphones in the future [2]. However, drones are not yet widely used in daily life because of many concerns about them, including their safety aspects [3]. Previous studies have shown that accidents caused by users comprise a high proportion of all drone accidents [4]. Therefore drone popularisation is expected to advance significantly if controlling them can be made easier and safer.

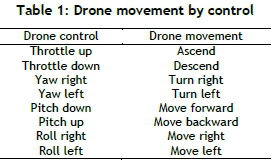

Most drone controllers on the civil market use two joysticks [5] - e.g., the Potensic D50, the SJRC Z5, and the Holy Stone HS200. These drone controller types use the right stick x-axis to control roll (moving right and left) and y-axis to control pitch (moving forwards and backwards), and the left stick x-axis to control yaw (turning around) and y-axis to control the throttle (changing altitude). Application interfaces that mimic conventional joystick controllers are also used with smart devices. Figure 1 shows a typical conventional joystick drone controller user interface, and Table 1 details how a drone is moved by operating the controls.

Although the conventional joystick controller is the most common controller type, prior studies have revealed various user experience problems associated with it. Operating a drone imposes a high mental workload, which can often cause accidents [6]. In contrast with industrial and military drones, civil drones are used in everyday situations in which several things happen simultaneously, rather than in environments where the operator can focus fully on drone control alone. Therefore the operator's mental workload can increase greatly and eventually become a serious problem. Also, the joystick interface is not intuitive or well understood [5,7]. This is expected to become a large problem for the civil drone market, in which most users are novices.

Recent natural user interface (NUI) research has been studying computer and machine communication through methods that humans use naturally [8]. In contrast with artificially developed communication media, an NUI allows people to communicate more intuitively with robots using natural methods that they employ in the real world [7]. NUIs offer a lower mental workload, more intuitiveness, and higher learnability than unnatural user interfaces [9,10]. Consequently, many studies have proposed approaches to reduce robot control mental workloads using NUIs [11-13]. Various NUI approaches have suggested robot control interfaces that are easier to understand and learn [14-17], and have focused on improving the robot control experience and usability [18-21]. Therefore drone control interfaces that are closer to natural human behaviour will improve mental workload, learnability, and usability. Consequently, many studies are approaching drone piloting experience through the NUI [7,18,22-33].

There are three NUI drone control types: sound, visual, and gestural communication [7]. Sound communication is unsuitable in the civil sector, given the high risk of signals overlapping with loud noises or when used in conjunction with others [34]. Visual communication requires a camera to observe the operator continuously [24], it is limited to line of sight, and there always is the risk of some obstacle such as a bird or an insect disturbing communication between the user and the drone. In contrast, gestural communication is relatively simple to implement, and avoids signals overlapping or being disturbed. It can also directly reflect the operator's mental concept [26]. Currently the dominant gestural drone control research focuses on leap motion sensors [26], head-mounted displays [25,26], and gyro sensors. However, leap motion sensors are unsuitable for dynamic or mobile situations, and head-mounted displays are relatively uncomfortable if worn for long periods, and are quite expensive. Thus most gestural controllers in the market use gyro sensors.

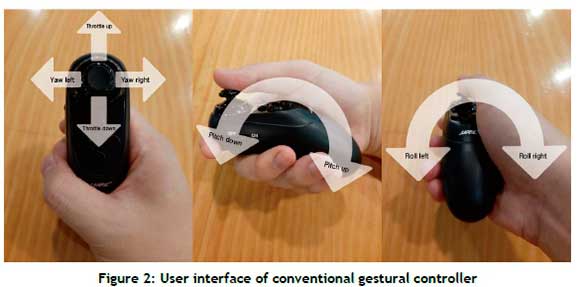

As a result, many modern controllers recognise gestures with a gyro sensor, providing an intuitive, easy, and dynamic drone control experience with one hand. These generally use joystick and hand gestures (detected by a gyro sensor) together - e.g., the Bump F-7 Orbis Hand Sensor Control Series, the GoolRC Drone, and the Jeestam Mini Drone for Kids. These controllers are usually used complementarily with mobile applications, using interfaces that mimic conventional joystick controllers so that operators can choose their control interface according to their intended use and/or personal preference. These controllers use the sagittal axis rotational angle to control roll, the coronal axis rotational angle to control pitch, the joystick x-axis to control yaw, and the y-axis to control the drone throttle. They are also commonly available in glove form, with a joystick attached to the fingertips and gyro sensors between the thumb and forefinger on the gloves. Figure 2 shows a typical user interface for conventional gestural controllers.

However, conventional gestural controllers simply replace the right stick of the joystick controller with gestural control using one or more gyro sensors. Although this approach provides some continuity with conventional joystick controllers, it is not user-centric. Therefore, while they overcome some of the conventional joystick controller's limitations by using gestures, providing a more natural interface, they are used less frequently than joystick controllers.

Previous studies of drone control hand gestures have revealed a number of common features, including:

• raising the hand to raise the drone and lowering it to lower the drone [22-24,29,31-33]

• turning the hand to turn the drone [23,24,28,29,32,33]

These gestures have many common features because they are intuitive and natural. Therefore, based on previous studies of natural drone control hand gestures, we proposed and constructed a gestural drone control interface that is intended to impose a lower mental workload and to be more intuitive and easier to learn. We then compared the proposed interface experimentally with conventional joystick and conventional gestural drone controllers.

2 NEW GESTURAL DRONE CONTROLLER

Many previous studies have considered using drone control hand gestures without a controller, employing existing signaling systems, user responses, and the researcher's insight [22-24,28-33]. Following these earlier studies, we expect the proposed new gestural drone control interface to reduce the mental workload and replace unnatural communication processes.

Prior studies suggested that raising the hand (or opening the wrist) and lowering the hand (or bending the wrist), the gestures that conventional gestural controllers use for pitch, are more suitable for controlling throttle than pitch [22-24,29,31-33]. We also considered moving the joystick along the y-axis (currently used to control the throttle) to be more suitable for controlling pitch, because pushing forwards and pulling back is similar to the motion commonly used for pitch control hand gestures. Therefore usability could be improved by changing the pitch control from the coronal axis rotational angle to the joystick y-axis. Table 2 shows the various drone control hand gestures employed in previous studies.

The proposed control interface will use the coronal axis rotational angle to control the throttle, the sagittal axis rotational angle to control roll, the joystick x-axis to control yaw, and the y-axis to control pitch. Figure 3 shows the proposed gestural controller user interface.
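The three input-to-command mappings described in the text can be summarised as a small lookup table. The sketch below is only an illustration: the dictionary, the key names, and the helper function are hypothetical labels chosen here, not part of the controller firmware used in the study.

```python
# Hypothetical labels for the inputs and drone commands described in the text.
MAPPINGS = {
    "conventional_joystick": {
        "right_stick_x": "roll",
        "right_stick_y": "pitch",
        "left_stick_x": "yaw",
        "left_stick_y": "throttle",
    },
    "conventional_gestural": {
        "sagittal_rotation": "roll",
        "coronal_rotation": "pitch",
        "joystick_x": "yaw",
        "joystick_y": "throttle",
    },
    "proposed_gestural": {
        "sagittal_rotation": "roll",
        "coronal_rotation": "throttle",
        "joystick_x": "yaw",
        "joystick_y": "pitch",
    },
}


def changed_inputs(a, b):
    """Return the shared inputs whose command differs between mappings a and b."""
    shared = set(MAPPINGS[a]) & set(MAPPINGS[b])
    return sorted(k for k in shared if MAPPINGS[a][k] != MAPPINGS[b][k])


# The proposed interface differs from the conventional gestural one only in
# which input drives pitch and which drives throttle.
print(changed_inputs("conventional_gestural", "proposed_gestural"))
```

Written this way, the proposal amounts to swapping two entries of the conventional gestural mapping while leaving roll and yaw unchanged.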

3 METHOD

3.1 Independent variables

3.1.1 Controller types

We considered three drone controller types: the conventional joystick, the conventional gestural controller, and the proposed gestural controller. Conventional joysticks and gestural controllers are commonly available in the market. Conventional gestural controllers combine the joystick and hand gestures to control the drone. The proposed gestural controller employs the same hardware as conventional gestural controllers, but implements the new drone control interface proposed here. We compared the controller types using both objective and subjective measures.

3.1.2 Joystick experience

We considered the joystick operator's experience. Joystick controllers that use two joysticks were widely available before being used to control drones. Conventional controllers have a similar interface to that of other joystick controllers that combine moving vertically and horizontally. Although we proposed a more natural drone control interface using hand gestures, participants who are already familiar with common joystick interfaces might find conventional controllers more comfortable than joystick novices. Therefore we separated the participants into joystick experts who are already familiar with joystick controllers, such as radio-controlled car controllers and game consoles, and joystick novices who are unfamiliar with joystick controllers, to investigate the joystick control experience's effects on user experience with the three types of drone controller.

3.2 Task

In the civil drone market, which is the scope of this research, personal drones are mostly used to learn drone control and/or to take photographs [35]. To take pictures with the drone, it must be possible to perform two functions easily at a close distance so that the drone can be seen. The drone must be easily moved into the correct position, and then pointed in the right direction. Therefore we evaluated usability and user experience for each controller, using a task comprising these two functions.

Participants controlled a virtual drone (i.e., on a screen) using each controller. The drone image included a protruding part to represent the camera focus abstractly. Participants were asked to hit targets that appeared in random positions with the protruding part. When they hit a target, the drone returned to the starting position and a new target appeared. We placed a circle around the target sphere to prevent the drone from hitting the target without turning around, so participants had to hit the target perpendicular to the circle, with a maximum error of 20°. The goal was to hit as many targets as possible within three minutes, with the score being the number of targets hit.
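One possible reading of the 20° tolerance is that the drone's heading must point at the target to within 20° at the moment of contact. The sketch below illustrates that reading only; the function names, the two-dimensional top-down coordinates, and the convention that headings are measured counter-clockwise from the x-axis are all assumptions made here, not details of the experiment software.

```python
import math

MAX_ERROR_DEG = 20.0  # tolerance stated in the task description


def heading_error_deg(drone_xy, heading_deg, target_xy):
    """Smallest absolute angle between the drone heading and the bearing to the target.

    Assumes a top-down 2D view with angles measured counter-clockwise from the x-axis.
    """
    dx = target_xy[0] - drone_xy[0]
    dy = target_xy[1] - drone_xy[1]
    bearing = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into [-180, 180) before taking the magnitude.
    diff = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


def is_valid_hit(drone_xy, heading_deg, target_xy):
    """Hypothetical hit rule: the heading error must not exceed the tolerance."""
    return heading_error_deg(drone_xy, heading_deg, target_xy) <= MAX_ERROR_DEG
```

Wrapping the angular difference matters near the 0°/360° boundary: a heading of 350° and a bearing of 0° differ by 10°, not 350°.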

The 47-inch screen showed the virtual drone, the protruding part, the target, the virtual drone's shadow, the target's shadow, the ground and the current score - i.e., the number of targets already hit, displayed on the upper right. Figure 4 shows a snapshot from a typical task.

3.3 Dependent variables

3.3.1 Objective measure

The objective dependent variable was the task score - i.e., the number of targets hit within three minutes - for each drone controller. The assumption was that the controller with better usability would tend to produce higher hit counts.

3.3.2 Subjective measure

We considered eight questions related to user experience as subjective dependent variables to evaluate the different drone controllers. The questionnaire was designed on the basis of previous research into drone control interfaces [5], with questions related to drone experience that were chosen to be easily answered by the participants. The questions covered simplicity, stress, convenience, learnability, naturalness, intelligibility, overall satisfaction, and mental workload. The questionnaire comprised item pairs for each judgment category, and these were rated on a seven-point Likert scale, with 1 being 'mostly disagree' and 7 being 'mostly agree'. Positive and negative questions were mixed to avoid evaluation inertia. Negative question scores were interpreted in the opposite sense when analysing the results - i.e., changing a 7 to a 1, and vice versa. The participants were asked to provide their opinions about each controller experience and the reasons for their evaluation as narrative answers after completing the subjective evaluation.
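The reversal of negatively worded items on the seven-point scale can be written compactly. In the sketch below, `reverse_score` follows the rule stated in the text (a 7 becomes a 1 and vice versa); `category_score`, which averages the two items of a pair, is an assumption about how pairs might be combined and is not stated in the source.

```python
SCALE_MIN, SCALE_MAX = 1, 7  # seven-point Likert scale


def reverse_score(score):
    """Mirror a response around the scale midpoint: 7 -> 1, 6 -> 2, ..., 1 -> 7."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"score must be between {SCALE_MIN} and {SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - score


def category_score(positive_item, negative_item):
    """Hypothetical aggregation: mean of a pair after reversing the negative item."""
    return (positive_item + reverse_score(negative_item)) / 2
```

After reversal, a higher value always indicates a more favourable rating, so the categories can be compared on the same footing.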

3.4 Participants

The participants were 36 people who had never or had hardly ever used a drone. Because the civil drone market largely comprises non-professionals with little or no drone experience, we wanted to exclude people who were skilled in using existing drone controllers. Of the 36 participants, 18 were joystick experts with considerable joystick experience, and the other 18 were joystick novices with little or no joystick experience. Of the participants, 22 were male and 14 were female; the average age was 23.5 ± 2.02 years. All of the participants were Hongik University students, relatively evenly distributed across 19 majors, including industrial design and mechanical system design. Each participant received 10,000 Won as an experiment participation fee.

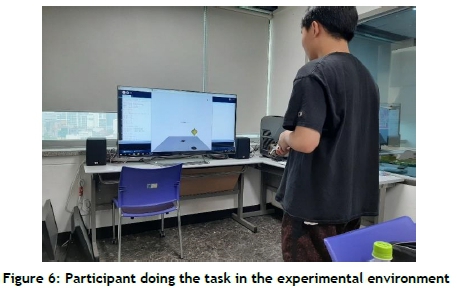

3.5 Experiment devices and environment

The participants performed tasks using a virtual drone in a virtual environment that was shown on a 47-inch TV screen, using a wireless remote controller that embodied the drone control interfaces to be compared. All of the controllers were prototyped on the Arduino open-source hardware platform, with Arduino Nano boards used for Bluetooth signal reception (connection to the computer) and for the wireless drone controller. HC-06 Bluetooth modules were used for the communication between the Arduino Nanos. We used a PS2 five-pin joystick module as the joystick and an MPU 6050 as the gyro sensor. The wireless drone remote controller included a 9V battery. The virtual drone and the experiment's environment were prototyped with the Processing open-source software. Figure 5 shows the controllers used in the experiment, and Figure 6 shows a participant doing the task in the experimental environment.

3.6 Procedure

Participants were asked to complete a demographic questionnaire before performing the experiment. Instructions for the procedure were then provided, and participants subsequently performed the tasks and answered questionnaires for all three controller types. Participants were able to practise hitting the target at least five times before beginning the counted task with each controller type. We randomly divided the participants into three groups with different drone controller test orders in order to exclude the learning effect. All of the participants were provided with as much rest time as they needed when moving from one controller to the next, to minimise the fatigue effect as the experiment progressed. The experiments took place one participant at a time in the laboratory environment.
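The source does not state which three test orders were used. A common way to build three orders for three conditions is a Latin square, in which each controller appears once in each position. The sketch below shows that approach as an assumption; the function names and the seed are illustrative only.

```python
import random

CONTROLLERS = ["conventional joystick", "conventional gestural", "proposed gestural"]


def latin_square_orders(items):
    """Rotate the list so that each item appears exactly once in each position."""
    return [items[i:] + items[:i] for i in range(len(items))]


def assign_orders(participant_ids, seed=0):
    """Shuffle participants, then deal them round-robin into the test orders."""
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    orders = latin_square_orders(CONTROLLERS)
    return {pid: orders[i % len(orders)] for i, pid in enumerate(ids)}


assignment = assign_orders(range(1, 37))  # 36 participants, as in the study
```

With 36 participants this yields three groups of 12, so each controller is tested first, second, and third equally often.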

4 RESULTS

The experiment had two independent variables. One was 'experience of joystick', which is a between-subjects factor; and the other was 'type of drone controller', which is a within-subjects factor. To compare the means of the cross-classified groups, we used a mixed-design ANOVA and pairwise paired t-tests for post-hoc comparison.
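The degrees of freedom that appear in the F statistics of this section follow from the design: 36 participants, two experience groups, and three controller types. The sketch below computes them for a two-factor mixed design, assuming no sphericity correction is applied; the function name and the dictionary keys are hypothetical.

```python
def mixed_anova_df(n_subjects, n_between_levels, n_within_levels):
    """Degrees of freedom for a mixed design with one between- and one within-subjects factor."""
    between = n_between_levels - 1
    between_error = n_subjects - n_between_levels
    within = n_within_levels - 1
    interaction = between * within
    within_error = between_error * within
    return {
        "between": (between, between_error),
        "within": (within, within_error),
        "interaction": (interaction, within_error),
    }


# 36 participants, 2 joystick experience groups, 3 controller types
print(mixed_anova_df(36, 2, 3))
```

For this study the result is (1, 34) for joystick experience and (2, 68) for both controller type and the interaction, which matches the F(1,34) and F(2,68) notation used in the results.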

4.1 Objective measure

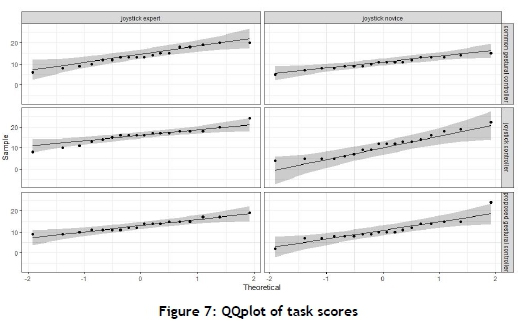

Figure 7 shows the Q-Q plot comparing the observed task scores with the assumed normal distribution. According to the runs test, the participants' task scores could be regarded as independent (P = 0.0603).

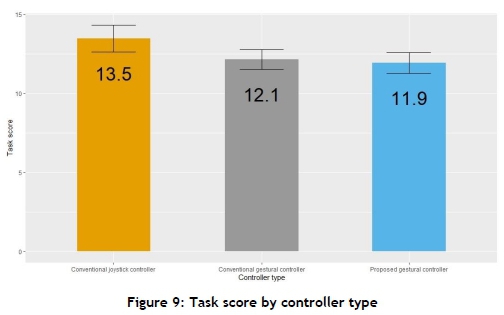

Figure 8 shows the participants' mean and standard error task scores for joystick experience, and Figure 9 shows the participants' mean and standard error task scores for controller types. The performance scores were significantly different, depending on joystick experience (F(1,34) = 9.694, P = 0.0037), with joystick experts achieving higher scores than joystick novices. The difference by drone controller type was also statistically significant (F(2,68) = 3.35, P = 0.0410). The pairwise paired t-test showed that the conventional joystick controller scored higher than the proposed gestural controller at the 10 per cent level (P = 0.071), but other comparisons showed no statistically significant difference. There was no interaction between joystick controller experience and controller type (F(2,68) = 1.48, P = 0.2350).
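When an F statistic has two numerator degrees of freedom, as the controller-type tests here do, its upper-tail probability has a simple closed form, P = (1 + 2F/d)^(-d/2), where d is the error degrees of freedom. The sketch below applies it to the controller-type effect on task score; the function name is hypothetical, and the calculation assumes an uncorrected F test.

```python
def f_pvalue_two_numerator_df(f_stat, df_error):
    """Upper-tail probability of an F(2, df_error) statistic (closed form)."""
    if f_stat < 0 or df_error <= 0:
        raise ValueError("f_stat must be non-negative and df_error positive")
    return (1.0 + 2.0 * f_stat / df_error) ** (-df_error / 2.0)


# Controller-type effect on task score: F(2,68) = 3.35
p_controller = f_pvalue_two_numerator_df(3.35, 68)
print(round(p_controller, 4))
```

The value agrees with the reported P = 0.0410 to the precision given, and the same function can be used to check the other F(2,68) results.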

4.2 Subjective measure

4.2.1 Simplicity, stress

Figure 10 shows the Q-Q plot comparing the observed simplicity scores with the assumed normal distribution. According to the runs test, the participants' simplicity scores were independent (P = 0.9021).

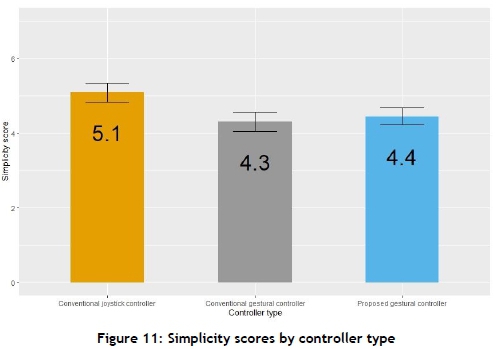

Figure 11 shows the seven-point Likert scale outcomes for controller simplicity. Simplicity scores differed significantly by drone controller type (F(2,68) = 3.614, P = 0.0322). The pairwise paired t-test showed that the conventional joystick controller was considered simpler than the conventional gestural controller at the 10 per cent level (P = 0.055), but the other comparisons showed no statistically significant difference. The difference with respect to joystick controller experience was not statistically significant (F(1,34) = 0.933, P = 0.341), and there was no interaction between joystick controller experience and controller type (F(2,68) = 0.697, P = 0.5017).

Figure 12 shows the Q-Q plot comparing the observed anti-stress scores with the assumed normal distribution. According to the runs test, the participants' anti-stress scores were independent (P = 0.2771).

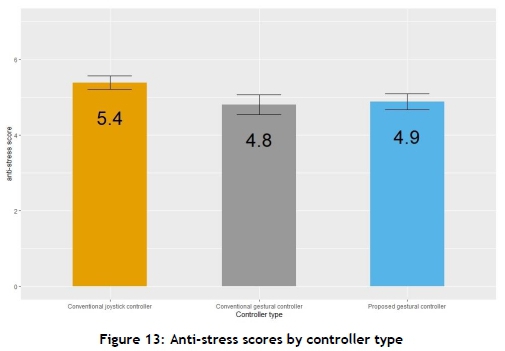

Figure 13 shows the seven-point Likert scale outcomes for the user's stress. Stress scores differed significantly by drone controller type (F(2,68) = 3.395, P = 0.0393). The pairwise paired t-test showed that the conventional joystick controller had a lower stress level than the conventional gestural controller at the 10 per cent level (P = 0.071), with other comparisons showing no statistically significant difference. The difference with respect to joystick controller experience was not statistically significant (F(1,34) = 0.231, P = 0.6340), and there was no interaction between joystick controller experience and controller type (F(2,68) = 0.500, P = 0.6087). Since this was a negative question that asked users about their stress level, we reversed the scores, so a higher score means that the controller caused the user less stress.

Thus the conventional gestural controller was rated worse than the conventional joystick controller for simplicity and stress at the 10 per cent level, despite employing gestural controls that were expected to be more natural than joysticks [12-15]. On the other hand, the proposed gestural controller was not significantly different from the conventional joystick controller for simplicity and stress. Joystick controller experience did not affect the user's drone control experience for simplicity and stress.

4.2.2 Convenience, learnability

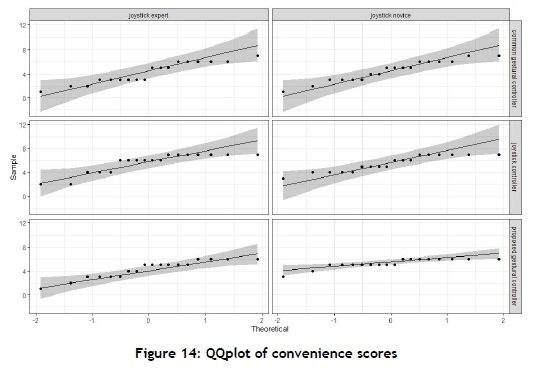

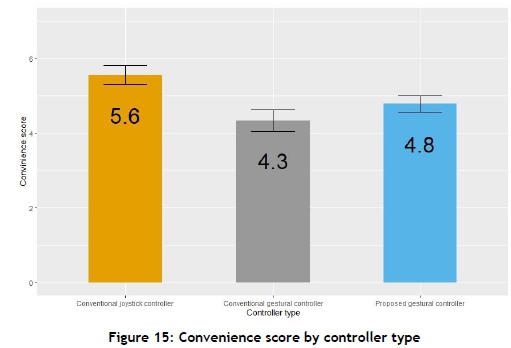

Figure 14 shows the Q-Q plot comparing the observed convenience scores with the assumed normal distribution. According to the runs test, the participants' convenience scores were independent (P = 0.2648).

Figure 15 shows the seven-point Likert scale outcomes for controller convenience. Convenience scores differed significantly by drone controller type (F(2,68) = 7.126, P = 0.0016). The pairwise paired t-test showed the conventional joystick controller to be more convenient than the conventional gestural controller (P = 0.0120) and, at the 10 per cent level, than the proposed gestural controller (P = 0.0770), with no statistically significant difference for the other comparison. The difference with respect to joystick controller experience was not statistically significant (F(1,34) = 1.501, P = 0.2290), and there was no interaction between joystick controller experience and controller type (F(2,68) = 2.815, P = 0.2404).

Figure 16 shows the Q-Q plot comparing the observed learnability scores with the assumed normal distribution. According to the runs test, the participants' learnability scores were independent (P = 0.1556).

Figure 17 shows the seven-point Likert scale outcomes for learnability. Learnability scores differed significantly by drone controller type (F(2,68) = 5.546, P = 0.0059). The pairwise paired t-test showed that the conventional joystick controller was easier to learn than the conventional gestural controller (P = 0.016) and the proposed gestural controller (P = 0.036), with the other comparison showing no statistically significant difference. The difference with respect to joystick controller experience was not statistically significant (F(1,34) = 0.724, P = 0.4010), and there was no interaction between joystick controller experience and controller type (F(2,68) = 1.565, P = 0.9470).

Thus the conventional joystick controller was better than both gestural controllers for convenience and learnability. Joystick controller experience did not affect the user's drone control experience of convenience and learnability.

4.2.3 Naturalness

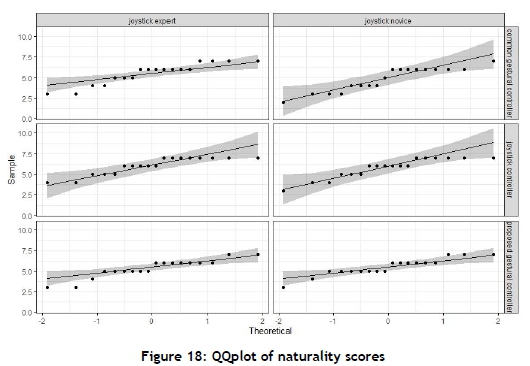

Figure 18 shows the Q-Q plot comparing the observed naturalness scores with the assumed normal distribution. According to the runs test, the participants' naturalness scores were independent (P = 0.6736).

Figure 19 shows the seven-point Likert scale outcomes for naturalness. There were significant differences between the remote controller types (F(2,68) = 14.083, P = 0.0005). The pairwise paired t-test showed that the conventional joystick controller was considered more natural than the conventional gestural controller (P = 0.009) and that the proposed gestural controller was also considered more natural than the conventional gestural controller (P = 0.034), with no statistically significant difference for other comparisons. The difference with respect to joystick controller experience was not statistically significant (F(1,34) = 0.502, P = 0.484). There was an interaction between joystick controller experience and controller type at the 10 per cent level (F(2,68) = 4.750, P = 0.0653).

Figure 20 shows the seven-point Likert scale outcomes of the naturalness scores for interaction between joystick experience and controller type. The pairwise paired t-test showed that the proposed gestural controller was considered more natural than the conventional gestural controller by joystick novices (P = 0.011), while the joystick experts did not consider the proposed gestural controller more natural than the conventional one.

The conventional joystick controller and the proposed gestural controller were considered more natural than the conventional gestural controller, with other comparisons having no statistically significant difference. The joystick novices found the proposed gestural controller more natural than the conventional gestural controller, whereas the joystick experts felt that the conventional gestural controller was as natural as the proposed gestural controller. The reason for this difference could be inferred from the narrative answers. The joystick experts were familiar with joystick controllers designed for movement on a flat surface, combining right, left, forwards, and backwards movement in a single joystick. Thus they felt familiar with conventional joystick and gestural controllers that had similar interfaces.

4.2.4 Intelligibility

Figure 21 shows the Q-Q plot comparing the observed intelligibility scores with the assumed normal distribution. According to the runs test, the participants' intelligibility scores were independent (P = 0.1340).

Figure 22 shows the seven-point Likert scale outcomes for controller intelligibility. The intelligibility score was significantly different with respect to joystick experience at the 10 per cent level (F(1,34) = 3.371, P = 0.0751), with the joystick experts providing higher scores than the novices. The differences with respect to drone controller type were statistically significant at the 10 per cent level (F(2,68) = 2.519, P = 0.0880) but, according to the pairwise paired t-test, there was no statistically significant difference between the controller types. There was no interaction between joystick controller experience and controller type (F(2,68) = 0.892, P = 0.4140).

4.2.5 Satisfaction, mental workload

Figure 23 shows the Q-Q plot comparing the observed overall satisfaction scores with the assumed normal distribution. According to the runs test, the participants' satisfaction scores were independent (P = 0.8377).

There was no statistically significant difference for overall satisfaction with respect to joystick controller experience (F(1,34) = 0.43, P = 0.5170). There was a statistically significant difference for overall satisfaction with respect to controller type at the 10 per cent level (F(2,68) = 2.761, P = 0.0703) but, according to the pairwise paired t-test, there was no statistically significant difference between the controller types. There was interaction between joystick controller experience and controller type at the 10 per cent level (F(2,68) = 2.861, P = 0.834) but, according to the pairwise paired t-test, no individual interaction effect was statistically significant. The mean response for overall satisfaction was 5.4 and the standard error was 0.195.

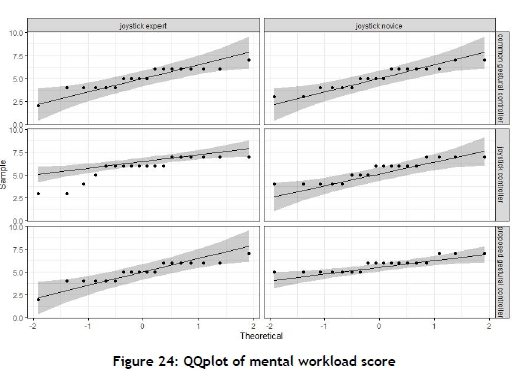

Figure 24 shows the Q-Q plot comparing the observed mental workload scores with the assumed normal distribution. According to the runs test, the participants' mental workload scores could be considered independent (P = 0.0696).

There were no statistically significant differences for mental workload (using the controller while doing other things) with respect to joystick controller experience (F(1,34) = 0.197, P = 0.6600) or controller type (F(2,68) = 0.016, P = 0.9850), nor was there any interaction between joystick controller experience and controller type (F(2,68) = 1.414, P = 0.250). The mean response for mental workload was 3.0, and the standard error was 0.258.

5 CONCLUSION AND DISCUSSION

Civil drones, which were the scope of this research, are mainly used for photography or hobbies [26]. Therefore moving and rotating the drone at a close distance is an important usability aspect. We compared three controller types: the conventional joystick, the conventional gestural controller, and the proposed gestural controller in this respect. Conventional joystick and gestural controllers are commonly available in the civil market. The proposed gestural controller employed the same hardware as conventional gestural controllers, but implemented a drone control interface that was based on drone control hand gestures without a controller from prior studies. Most civil drone consumers have little or no drone control experience; thus the experiment participants only included people with little or no drone experience. The participants were also divided into joystick experts who are familiar with joystick controllers, and joystick novices who are unfamiliar with joystick controllers.

The conventional gestural controller was worse than the conventional joystick controller for five of the nine criteria (convenience, simplicity, naturalness, learnability, and stress). Although the conventional gestural controller implemented gestures for drone control, which tend to be more natural than joystick controls, it was evaluated poorly compared with the conventional joystick controller. Therefore the joystick worked better than gestural control with a conventional interface. However, there were no statistically significant differences between the two controller types for the other criteria (task score, intelligibility, overall satisfaction, and mental workload). The proposed gestural controller, which implemented a gestural drone control interface that was based on prior studies, was worse than the conventional joystick controller for three of the nine criteria (task score, convenience, learnability), and there was no difference for the other criteria (simplicity, stress, naturalness, intelligibility, satisfaction, and mental workload). Consequently, it is difficult to regard the proposed gestural controller as an alternative to the conventional joystick controller. However, since conventional joystick controllers always require both hands, and are difficult to use in dynamic or mobile situations, the proposed gestural controller is expected to overcome these limitations of the conventional joystick controller. Since the conventional joystick controller interface can be provided by mobile applications, it is often used in parallel with other controller types. The proposed gestural controller was considered more natural than the conventional gestural controller, but this effect interacted with joystick experience. The mean mental workload was 3.0 on the seven-point Likert scale; thus all the drone controller types need more research into their usability in relation to multitasking.

Joystick experts - i.e., those familiar with joystick controllers in other areas - had a superior ability to perform tasks using the drone, and understood drone controllers better than joystick novices did. This was not limited to the joystick drone controller, but also applied to the gestural controllers. Thus, although the joystick experts were unfamiliar with drone control, they performed better and showed better understanding because of their experience with other joystick controllers. There was some evidence for an interaction between perceived naturalness and joystick experience. While the joystick novices found the proposed gestural controller to be more natural than the conventional one, the joystick experts assessed the conventional gestural controller as being as natural as the proposed one. The narrative answers from the joystick experts clarified that they felt familiar with the conventional drone controller, since its interface is similar to that of joystick controllers that are used in other fields.
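An interaction of this kind can be read as a difference of differences: how much more natural the novices rated the proposed gestural controller than the conventional one, minus the same gain for the experts. The Python sketch below illustrates only that arithmetic. The function name and the ratings are hypothetical placeholders, not data from this study, and the sketch does not replace the statistical test of the interaction.

```python
# Hypothetical mean naturalness ratings on a seven-point scale.
# These numbers are placeholders for illustration, NOT results of the study.
HYPOTHETICAL_MEANS = {
    ("novice", "conventional"): 3.5,
    ("novice", "proposed"): 5.0,
    ("expert", "conventional"): 4.5,
    ("expert", "proposed"): 4.5,
}


def interaction_contrast(cell_means):
    """Return the 2x2 interaction contrast (difference of differences).

    A value near zero means both groups gain equally from the proposed
    gestural interface; a positive value means the novices gain more.
    """
    novice_gain = (cell_means[("novice", "proposed")]
                   - cell_means[("novice", "conventional")])
    expert_gain = (cell_means[("expert", "proposed")]
                   - cell_means[("expert", "conventional")])
    return novice_gain - expert_gain


if __name__ == "__main__":
    print(interaction_contrast(HYPOTHETICAL_MEANS))
```

With these placeholder values the novices gain 1.5 points and the experts gain nothing, so the contrast is positive, which is the pattern described above.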

Thus experience with other joystick controllers affected the drone control experience, and so we expect that experience with other input devices, such as a mouse, a keyboard, Kinect, or Leap Motion, could also affect the drone control experience. Different results would be likely if the proposed gestural control interface were as widely available to the public as conventional joystick and gestural control interfaces are. We assume that the drone control experience could be affected by the electronic device environment of the culture.

Therefore it is essential to consider the electronic device interfaces that are already widely available in the target market when developing a new drone controller interface, and to consider providing customised interfaces for different cultures. Many electronic appliances have succeeded by adopting localisation, particularly in emerging markets where the lifestyle and electronic appliance experiences differ from those in developed countries [36,37]. Unfortunately, few drone control studies consider these issues.

Many of the narrative answers highlighted a discordance between the controller interface and the operator's mental model in conventional interfaces. Conventional joystick and gestural controllers combine yaw and throttle in a single joystick, causing the drone to spin while ascending or descending if the joystick is positioned diagonally, even though users rarely intend to spin the drone while changing altitude. Therefore changing the joystick that controls throttle and yaw to a discontinuous interface such as a button or a cross key could overcome this problem in conventional controllers. This problem was not noted with the proposed gestural controller: when its joystick was pushed diagonally, the drone moved forward and spun at the same time, tracing a curved path that corresponded with the user's mental model.
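The cross-key idea can be sketched in Python as follows. This is a minimal illustration and not the implementation of any controller used in the experiment: the function name, the normalised input range of -1 to 1, and the dead-zone value of 0.2 are all assumptions. Only the dominant axis of the stick is kept, so a diagonal position can never command yaw and throttle at the same time.

```python
from typing import Tuple


def to_cross_key(yaw: float, throttle: float,
                 dead_zone: float = 0.2) -> Tuple[float, float]:
    """Map a continuous (yaw, throttle) stick position to a cross-key command.

    Inputs are assumed to be normalised to the range [-1, 1]. The result is
    (yaw_command, throttle_command), where at most one value is non-zero.
    The dead-zone value is an assumed, illustrative threshold.
    """
    # Ignore small deflections around the stick centre.
    if max(abs(yaw), abs(throttle)) < dead_zone:
        return (0.0, 0.0)
    # Keep only the dominant axis; ties go to throttle (an arbitrary choice).
    if abs(throttle) >= abs(yaw):
        return (0.0, 1.0 if throttle > 0 else -1.0)
    return (1.0 if yaw > 0 else -1.0, 0.0)


if __name__ == "__main__":
    # A mostly-upward diagonal push becomes a pure ascent command.
    print(to_cross_key(0.3, 0.9))
```

Snapping to the dominant axis trades fine-grained control for predictability, which is the trade-off that a button or cross key would also make.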

One of the common features of drone control hand gestures that we found when reviewing previous studies was that users turned the hand to turn the drone [23,24,28,29,32,33]. However, we did not propose a drone control interface related to this gesture, because repeatedly turning the hand while holding a drone controller can easily strain the user's wrist.

The experiments for this paper were conducted by controlling a drone virtually on a screen. Thus the drone was always in front of the participant, and the sense of distance between the drone and the target was unclear because a flat-screen TV was used. Therefore we expect that the results would reflect reality better if the experiments were conducted with real drones and obstacles, or in a 3D virtual reality environment.

REFERENCES

[1] Valavanis, K.P. & Vachtsevanos, G.J. (Eds). 2015. Handbook of unmanned aerial vehicles (Vol. 1). Dordrecht: Springer Netherlands.

[2] Giones, F. & Brem, A. 2017. From toys to tools: The co-evolution of technological and entrepreneurial developments in the drone industry. Business Horizons, 60(6), 875-884.

[3] Chamata, J. 2017. Factors delaying the adoption of civil drones: A primitive framework. The International Technology Management Review, 6(4), 125-132.

[4] Williams, K.W. 2004. A summary of unmanned aircraft accident/incident data: Human factors implications. Washington, DC: Office of Aerospace Medicine.

[5] Cha, M., Kim, B., Lee, J. & Ji, Y. 2016. Usability evaluation for user interface of a drone remote controller. Proceedings of the ESK Conference (pp. 417-424).

[6] Gabriel, G., Ramallo, M.A. & Cervantes, E. 2016. Workload perception in drone flight training simulators. Computers in Human Behavior, 64, 449-454.

[7] Fernandez, R.A.S., Sanchez-Lopez, J.L., Sampedro, C., Bavle, H., Molina, M. & Campoy, P. 2016. Natural user interfaces for human-drone multi-modal interaction. In 2016 International Conference on Unmanned Aircraft Systems (ICUAS) (pp. 1013-1022). IEEE.

[8] Glonek, G. & Pietruszka, M. 2012. Natural user interfaces (NUI). Journal of Applied Computer Science, 20(2), 27-45.

[9] Oviatt, S. 2006. Human-centered design meets cognitive load theory: Designing interfaces that help people think. In Proceedings of the 14th ACM International Conference on Multimedia (pp. 871-880).

[10] O'Hara, K., Harper, R., Mentis, H., Sellen, A. & Taylor, A. 2013. On the naturalness of touchless: Putting the "interaction" back into NUI. ACM Transactions on Computer-Human Interaction (TOCHI), 20(1), 1-25.

[11] García, J.C., Patrão, B., Almeida, L., Pérez, J., Menezes, P., Dias, J. & Sanz, P.J. 2015. A natural interface for remote operation of underwater robots. IEEE Computer Graphics and Applications, 37(1), 34-43.

[12] Almeida, L., Menezes, P. & Dias, J. 2017. Improving robot teleoperation experience via immersive interfaces. In 2017 4th Experiment@ International Conference (exp.at'17) (pp. 87-92). IEEE.

[13] Villani, V., Sabattini, L., Secchi, C. & Fantuzzi, C. 2018. A framework for affect-based natural human-robot interaction. In 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (pp. 1038-1044). IEEE.

[14] Guo, C. & Sharlin, E. 2008. Exploring the use of tangible user interfaces for human-robot interaction: A comparative study. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 121-130).

[15] Yu, J. & Paik, W. 2019. Efficiency and learnability comparison of the gesture-based and the mouse-based telerobotic systems. Studies in Informatics and Control, 28(2), 213-220.

[16] Hu, C., Meng, M.Q., Liu, P.X. & Wang, X. 2003. Visual gesture recognition for human-machine interface of robot teleoperation. In Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453) (Vol. 2, pp. 1560-1565). IEEE.

[17] Pons, P. & Jaen, J. 2019. Interactive spaces for children: Gesture elicitation for controlling ground mini-robots. Journal of Ambient Intelligence and Humanized Computing, 1-22.

[18] Bae, J., Voyles, R. & Godzdanker, R. 2007. Preliminary usability tests of the wearable joystick for gloves-on hazardous environments. Proceedings of the International Conference on Advanced Robotics, 514-519.

[19] Song, T.H., Park, J.H., Chung, S.M., Hong, S.H., Kwon, K.H., Lee, S. & Jeon, J.W. 2007. A study on usability of human-robot interaction using a mobile computer and a human interface device. In Proceedings of the 9th International Conference on Human Computer Interaction with Mobile Devices and Services (pp. 462-466).

[20] Waldherr, S., Romero, R. & Thrun, S. 2000. A gesture based interface for human-robot interaction. Autonomous Robots, 9(2), 151-173.

[21] Qian, K., Niu, J. & Yang, H. 2013. Developing a gesture based remote human-robot interaction system using Kinect. International Journal of Smart Home, 7(4), 203-208.

[22] Ng, W.S. & Sharlin, E. 2011. Collocated interaction with flying robots. In 2011 RO-MAN (pp. 143-149). IEEE.

[23] Obaid, M., Kistler, F., Kasparavičiūtė, G., Yantaç, A.E. & Fjeld, M. 2016. How would you gesture navigate a drone? A user-centered approach to control a drone. In Proceedings of the 20th International Academic Mindtrek Conference (pp. 113-121).

[24] Pfeil, K., Koh, S.L. & LaViola, J. 2013. Exploring 3D gesture metaphors for interaction with unmanned aerial vehicles. In Proceedings of the 2013 International Conference on Intelligent User Interfaces (pp. 257-266).

[25] Higuchi, K. & Rekimoto, J. 2013. Flying head: A head motion synchronization mechanism for unmanned aerial vehicle control. In CHI '13 Extended Abstracts on Human Factors in Computing Systems (pp. 2029-2038).

[26] Duan, T., Punpongsanon, P., Iwai, D. & Sato, K. 2018. Flyinghand: Extending the range of haptic feedback on virtual hand using drone-based object recognition. In SIGGRAPH Asia 2018 Technical Briefs (pp. 1-4).

[27] Menshchikov, A., Ermilov, D., Dranitsky, I., Kupchenko, L., Panov, M., Fedorov, M. & Somov, A. 2019. Data-driven body-machine interface for drone intuitive control through voice and gestures. In IECON 2019-45th Annual Conference of the IEEE Industrial Electronics Society (Vol. 1, pp. 5602-5609). IEEE.

[28] Zhao, Z., Luo, H., Song, G.H., Chen, Z., Lu, Z.M. & Wu, X. 2018. Web-based interactive drone control using hand gesture. Review of Scientific Instruments, 89(1), 014707.

[29] Yu, Y., Wang, X., Zhong, Z. & Zhang, Y. 2017. ROS-based UAV control using hand gesture recognition. In 2017 29th Chinese Control and Decision Conference (CCDC) (pp. 6795-6799). IEEE.

[30] Menshchikov, A., Ermilov, D., Dranitsky, I., Kupchenko, L., Panov, M., Fedorov, M. & Somov, A. 2019. Data-driven body-machine interface for drone intuitive control through voice and gestures. In IECON 2019-45th Annual Conference of the IEEE Industrial Electronics Society (Vol. 1, pp. 5602-5609). IEEE.

[31] Ma, L. & Cheng, L.L. 2016. Studies of AR drone on gesture control. In 2016 3rd International Conference on Materials Engineering, Manufacturing Technology and Control (pp. 1864-1868). Atlantis Press.

[32] Hu, B. & Wang, J. 2020. Deep learning based hand gesture recognition and UAV flight controls. International Journal of Automation and Computing, 17(1), 17-29.

[33] Nahapetyan, V.E. & Khachumov, V.M. 2015. Gesture recognition in the problem of contactless control of an unmanned aerial vehicle. Optoelectronics, Instrumentation and Data Processing, 51(2), 192-197.

[34] Szafir, D., Mutlu, B. & Fong, T. 2015. Communicating directionality in flying robots. In 2015 10th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 19-26). IEEE.

[35] Rao, B., Gopi, A.G. & Maione, R. 2016. The societal impact of commercial drones. Technology in Society, 45, 83-90.

[36] Park, Y., Shintaku, J. & Amano, T. 2010. Korean firm's competitive advantage: Localization strategy of LG Electronics. University of Tokyo: MMRC Discussion Paper Series 292.

[37] Phys.org. 2020. LG Electronics unveils the F7100 digital Qiblah phone with embedded compass, direction indication and azan feature. Available at: https://phys.org/news/2004-07-lg-electronics-unveils-f7100-digital.html

Submitted by authors 26 Jan 2021

Accepted for publication 4 Aug 2021

Available online 31 Aug 2021

* Corresponding author: kyungdoh.kim@hongik.ac.kr