South African Journal of Industrial Engineering

On-line version ISSN 2224-7890

Print version ISSN 1012-277X

S. Afr. J. Ind. Eng. vol.32 n.1 Pretoria May. 2021

http://dx.doi.org/10.7166/32-1-2389

GENERAL ARTICLES

Reliable and valid measurement scales for determinants of the willingness to accept knowledge

C.C. van Waveren (I); L.A.G. Oerlemans (I, II); M.W. Pretorius (I)

(I) Department of Engineering and Technology Management, University of Pretoria, Pretoria, South Africa. C.C. van Waveren: https://orcid.org/0000-0002-2624-5247; L.A.G. Oerlemans: https://orcid.org/0000-0002-7495-4515; M.W. Pretorius: https://orcid.org/0000-0002-8214-4838

(II) Department of Organization Studies, Tilburg University, Tilburg, The Netherlands

ABSTRACT

Before any acquired knowledge is used or adds value to the receiving project (members), it must be accepted by its recipients, leading to an increase in their positive attitudes towards, and intended use of, the acquired knowledge. To be willing to accept knowledge, the receiving project's team members must perceive it to have value and be easy to use. The focus of this exploratory paper is to develop and empirically test relevant sub-dimensions of perceived value and ease-of-use. The sub-dimensions were identified through a literature review, and measurement scales were developed empirically by applying a well-established scale development methodology.

OPSOMMING

Before any acquired knowledge is used for a project or adds value to the project and its members, the knowledge must be accepted by its recipients. This will increase the recipients' positive attitude towards the knowledge. As a prerequisite for the willingness to receive knowledge, the acquired knowledge must therefore be of value, and it must appear easy for the project members to use. The focus of this article is therefore to identify dimensions for measuring the willingness to accept acquired knowledge and to develop scales for these dimensions. The dimensions were identified from a literature review, and the scales were determined empirically using a proven scale development methodology.

1 INTRODUCTION

Project-based organising in the economy and in society at large is an important managerial practice, and is increasingly studied by project management, business, and management scholars [1]-[3]. Because the generation of economic and social value is increasingly knowledge- and information-based, processes such as creativity, knowledge development, and innovation become highly relevant in projects. Because of their relatively flexible nature, projects are regarded as very suitable breeding grounds for knowledge creation in the context of its application; however, their temporary nature hinders the sedimentation of knowledge, because when the project dissolves and its members move on, the created knowledge is likely to disperse [4]. This phenomenon is often called 'the project learning paradox' [5]. It follows from this paradox that one of the major challenges for project managers is transferring the knowledge created in a project to other organisational contexts - for example, to subsequent projects or the permanent organisation [6].

At an abstract level, the knowledge transfer process can be modelled as a communication process comprising three basic building blocks [7]: a source (and its context), a transfer, and a recipient (and its context). If we add certain project characteristics to the equation, an interesting and relevant issue surfaces. It is commonly accepted that a project is an action-oriented and temporary endeavour, often carrying out a unique task [8]. The more unique the task performed by the project, the higher the likelihood that the project generates knowledge that is more difficult to apply in other contexts. Focusing on other contexts implies that one has to look at the recipients of the knowledge generated in previous projects inside or outside the organisation [9]. In particular, the recipient has to be willing to accept the acquired knowledge, where this willingness can be defined as the likelihood that any knowledge received will be used in subsequent activities. Here, we assume that at least a part of the knowledge developed in a previous project can potentially be used in subsequent ones. This knowledge can be project-related or, more broadly, related to capabilities for managing projects [10]. Inspired by the technology acceptance (TA) model, in which the perceived usefulness and perceived ease-of-use of a technology are the main determinants of the willingness to accept it, this study explores and tests measurable sub-dimensions of these two determinants so that they can be applied to a project context. The TA model is widely used in studying the willingness to accept certain technologies or technological artefacts, but its determinants are not designed to measure the sub-dimensions of perceived value and ease-of-use.

This article therefore aims to develop a reliable and valid measurement scale for these sub-dimensions of the two determinants of the willingness of project team members of receiving projects or other organisational units to accept acquired knowledge. The focus of this paper is to explore and identify the theoretical dimensions of the perceived value and the perceived ease-of-use of acquired project knowledge, and to develop scales to measure these sub-dimensions.

The research questions for this study are, therefore: (1) What are the sub-dimensions of perceived value and perceived ease-of-use of acquired project knowledge? and (2) How can these dimensions be measured in a reliable and valid way? We argue that the development of reliable and valid measurement scales is crucial for the field of knowledge and project management. In doing so, we contribute in two ways to these fields. The first contribution is the identification of measurable sub-dimensions of the perceived value and perceived ease-of-use of acquired project knowledge. Such a dedicated identification is new to these fields, as current dimensions and measurements predominantly refer to technology and technological artefacts - for example, digital technologies [11], mobile payment systems [12], or ride-sharing services [13] - and not to project knowledge. Unlike in other scientific fields (e.g., in psychology and economics), scholars in the former fields tend to measure similar concepts with different (self-designed) measurements, which are often not tested for their psychometric characteristics. Consequently, scholars run the risk that effects observed in studies are not the product of factors investigated, but are a result of the different ways that constructs are measured.

The second contribution is that this study reduces measurement problems in knowledge and project management by systematically investigating the reliability and validity of the measurement scales that are developed [14].

The next section discusses the knowledge acceptance concept.

2 DETERMINANTS OF THE WILLINGNESS TO ACCEPT ACQUIRED PROJECT KNOWLEDGE: PERCEIVED VALUE AND EASE-OF-USE

In the theory of reasoned action (TRA), a theoretical model on the antecedents of human behaviour from the field of psychology that was developed by Ajzen and Fishbein [15], actors first need to indicate that they intend to behave in a certain way before they actually show the behaviour. Several meta-analyses found that there is strong overall evidence for the predictive utility of the TRA model - as indicated by Sheppard, Hartwick and Warshaw [16], for example. Davis [17] extended their work, introducing a technology acceptance (TA) model and adding two concepts that impact on an actor's attitude: perceived usefulness and perceived ease-of-use [17], [18]. A later version of the TA model, TAM2, dropped the 'attitude' concept and replaced it with the concept of 'subjective norm'. As a consequence, the behavioural intention to use becomes a function of perceived usefulness (a.k.a. performance expectancy) and perceived ease-of-use (a.k.a. effort expectancy). Perceived usefulness is "the degree to which an individual believes that using a particular system or technology would enhance his or her job performance", whereas perceived ease-of-use is "the degree to which an individual believes that using a particular system or technology would be free of physical and mental effort" [17]. The TA model has been used, modified, improved, and confirmed empirically in many different settings [11], [17], [19]. Furthermore, the results of several meta-analyses of the TA model show it to be valid and robust (see, for example, [20]-[22]). Its core independent concepts, perceived ease-of-use and perceived usefulness, proved to be solid predictors of behavioural intention. Thus, this model can inform our research, and additional explorations of the literature would add little value.

For projects, one needs to determine whether receiving projects and their members are willing to accept transferred knowledge from other projects and project-related sources. Informed by the TA model, it is proposed that the perceived value and perceived ease-of-use of acquired knowledge from projects may influence the receiver's intention to use the acquired knowledge. This implies that the recipient(s) of the knowledge must be able to understand the knowledge received, and have experience with the surrounding conditions and influences in which the knowledge is generated and used, if the knowledge is to be meaningful to the receiving project [23]. Put differently, we argue that the perceived value and perceived ease-of-use of knowledge acquired by projects are important determinants of the willingness to accept acquired knowledge from projects.

The need to develop new, reliable, and valid measurement scales for the sub-dimensions of both determinants of the willingness to accept knowledge is informed by the observation that commonly used existing scales are not focused on the value and ease-of-use of (project) knowledge, but have technological artefacts or software as their object of measurement. A few examples illustrate this statement. One of the items measuring perceived ease-of-use in a study by Cheung and Noble [24] reads: "It is easy for me to become skilful at using Google Applications", while Pai and Huang [25] use the item "The healthcare information system can reduce the paper work time" to indicate perceived value/usefulness. In both cases the items do not refer to acquired knowledge in a project context, making the direct implementation of these and related measurements in a different context dubious. To address this issue, the subsequent sections of this paper will deal with the specification of the sub-dimensions of knowledge acceptance willingness (Section 3) and with the empirical development of measurement scales for these determinants by applying a well-established scale development methodology (Section 4).

3 THEORETICAL DIMENSIONS OF PERCEIVED VALUE AND PERCEIVED EASE-OF-USE OF ACQUIRED PROJECT KNOWLEDGE

3.1 Introduction

In the previous section we concluded that there are many applications of the TA model, but that they are not directed at explaining the willingness to accept acquired project knowledge. The same point applies to the measurements of the main determinants (perceived value and perceived ease-of-use) [26]. This implies that, for our purposes, we shall use the main determinants. However, because there are no measurement scales for the perceived value or the perceived ease-of-use of the knowledge acquired, a systematic exploration of the literature is needed to identify possible sub-dimensions that, taken together, provide a sound measurement of the overall constructs. Possible sub-dimensions were identified by searching the literature using combinations of keywords such as 'measurement', 'perceived value', 'perceived ease-of-use', 'knowledge', and 'projects'. Analogies were used where sub-dimensions were identified that could be adapted, clarified, or explained to indicate their applicability to knowledge acceptance. The results of this exploratory search of the literature are presented in the next two sections. After identifying the possible sub-dimensions of both main constructs, they will be empirically investigated in order to develop valid measurement scales (Sections 4 and 5).

3.2 Identifying the sub-dimensions of the perceived value of the acquired project knowledge

The perceived value of the acquired project knowledge is defined as the degree to which a recipient of knowledge believes that the acquired knowledge is relevant, adds value, and enhances project work or project performance. Informed by an exploratory search of the literature, the following possible sub-dimensions were identified:

• Uniqueness: The rareness of the transferred project knowledge, including the difficulty of obtaining or copying it (level of inimitability) or of finding a substitute for it (non-substitutability) [27]. The more unique the knowledge (i.e., the higher the inimitability and non-substitutability of the knowledge), the higher the perceived value of the knowledge and of the subsequent competitive advantage it can create or sustain for the project and the organisation [28], [29]. Unique knowledge is often tacit, complex, and highly product-specific [30], and is embedded within a firm's knowledge reservoirs - its people, tasks, tools, and networks [31]. The ambiguity caused by the tacitness of the knowledge objects often makes knowledge transfer difficult, especially when no overlapping process is compatible with both actors of learning [32].

• Relevance can be defined as the extent to which the acquired knowledge is applicable and salient to projects and subsequent organisational success [33], and whether the subsequent recipient projects and teams will learn a great deal about the technological or process know-how held by the source project [34], [35]. It can be argued that the more relevant the knowledge is to a particular problem or application, the more valuable it is (Ford and Staples, 2006).

• Comprehensiveness pertains to the correctness and level of detail of the knowledge that is transferred from a project and that should lead to a deeper understanding of the knowledge content as defined by Zahra, Ireland and Hitt [36]. It should therefore include the know-what, know-why, and know-how of the knowledge objects. In general, the more detail that is provided, the higher its comprehensiveness - but the more time and resources it will take in providing such details. Too much information may also lead to wasted effort and information overload. The correctness of the knowledge artefacts and the inclusion of contextual meaning add to the perceived value [37].

• Ability to improve quality of decision-making: The value of acquired project knowledge also lies in the question whether the received knowledge will improve the ability of the decision-maker to make better decisions [38].

• Source attractiveness: Knowledge value is higher when the recipient deems the knowledge source to be attractive and/or authoritative. This increases the legitimacy of the source and adds value to the acquired knowledge [29], [33]. Source attractiveness can also be seen as the value an organisation or project attaches to specific employees or team members in respect of their influence and their ability to perform their work and achieve organisational or project goals [28].

3.3 Identifying sub-dimensions of perceived ease-of-use of the acquired project knowledge

The perceived ease-of-use of the acquired project knowledge is defined as the degree to which an individual working in a project believes that using the acquired knowledge would be free of physical and mental effort. Informed by the literature, several sub-dimensions could be relevant:

• Understandability of acquired knowledge is indicated by the ease of obtaining a deeper understanding of the knowledge content [36]. It can therefore be defined as the extent to which new knowledge that is transferred from a project can be fully understood by its recipient [33]. For project-based organisations, this means that knowledge generated and transferred by a sender project, team, or individual is fully and easily understood by individuals and teams elsewhere in the project or across project boundaries [37], [38].

• Speed of application signifies how quickly the recipient acquires new insights and skills [36] or how quickly the project knowledge is retrieved [38]. Should the recipient master useful knowledge, but do so slowly, early mover benefits are likely to be limited, and the costs might even outweigh the anticipated benefits [33].

• Economics of transfer relates to the efforts needed to acquire knowledge from a project and to transfer the knowledge through the transfer process [39]-[41]. Excessive use of resources could also lead to the loss of early mover benefits similar to the speed of transfer [33]. The speed and ease with which a recipient can obtain better understanding of the knowledge will motivate the recipient to use the knowledge to its full advantage, and it will also enhance the perceived value of the knowledge [41].

The next section deals with the research methodology that was applied to identify appropriate items to measure each of the established dimensions of the two concepts, and thus to develop scales for their measurement.

4 METHODOLOGY

Measurement scale development as a methodology has been discussed by many scholars [42]-[46]. For this study, a scale development methodology was applied that was developed by Schriesheim et al. [43], adapted by Hinkin and Tracey [46], and referred to by a number of scholars in subsequent scale development studies [45], [47], [48]. The scale development process used includes: (1) item generation, (2) item reduction, (3) content adequacy assessment and validation, and (4) item retention selection. Each of the above-mentioned process steps and its application is discussed below.

4.1 Item generation

Item generation is the process of creating items or statements to measure a construct and its dimensions. Research indicated that most scale development efforts combine an inductive (involving experts) and a deductive approach to develop or identify items [49]. When creating items, each item should address only one issue. Furthermore, all items should be consistent in terms of perspective, and should be simple and as short as possible. Items should be written in a language that is familiar to the target group, and negatively worded items should be avoided. The number of items to be compiled must ensure that the measure is internally consistent and parsimonious, and should comprise the minimum number of items that adequately assess the dimension of the construct [50]. As a general rule, and as used by different authors, three to four items per dimension should provide adequate internal consistency reliability [45], [50].

A literature review identified possible sub-dimensions to measure the two determinants of knowledge acceptance: perceived value (five sub-dimensions) and perceived ease-of-use (three sub-dimensions). From here, groups of items were derived that could measure each of the sub-dimensions. Items were formulated so that each statement related to one dimension only. This was to ensure that the subsequent content adequacy assessment could be simplified (see next section). A total of 81 items were compiled for the different sub-dimensions.

4.2 Item reduction

To make the measurement instrument more practical and feasible for respondents, the number of statements needed to be reduced, as the 81 item statements that were generated would have made completing a questionnaire a cumbersome task, with a high possibility of (non)response biases. Reduction was accomplished by asking respondents, acting as judges, to evaluate each item for its relevance to the definition of the sub-dimension. For this purpose, a questionnaire was developed that measured a respondent's judgement of the relevance, using a six-point Likert scale. In total, 321 postgraduate students in the field of engineering, technology and project management at a South African university responded (August 2017). Because these students were active project managers, they qualified as appropriate subjects. Respondents had an average project work experience of just under five years, ranging from a few months to 40 years' involvement in projects.

Means and mean ranking of each of the items were determined for different respondent project experience groups - namely, (1) those with less than five years' project experience, and (2) those with five or more years' project experience. By comparing the experience groups, it would become clear whether there was bias between the two groups and thus whether the more experienced group might view certain items differently from the less experienced group. This comparison was conducted because previous studies [51], [52] had proposed that more experienced managers process received information differently.

As mentioned, there is no specific rule about the number of items to be selected, although there are helpful heuristics, as the measurement items need to be internally consistent and parsimonious, and should have the minimum number of items that adequately assess the domain of interest [50]. This is achieved by selecting three to four items per sub-dimension. It was decided to select the four top-ranked items for each sub-dimension, but also to verify that the means were above 3.50, meaning that respondents judged the item statement to be at least moderately relevant (a score of 4). An independent-samples t-test was also performed on the two experience groups to determine whether there was any statistically significant difference between them. For the higher-ranked and selected items, the results showed no statistically significant differences between the two experience groups. The final lists of the selected items that were used as part of the measurement instrument are referred to in the next results section, and the item generation and reduction method and detailed results are published as part of the International Association for Management of Technology (IAMOT) conference proceedings [53].
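The selection rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the item labels and ratings are invented, the function names are hypothetical, and the Welch form of the t statistic is an assumption, since the text does not say which variant of the independent-samples t-test was used.

```python
from math import sqrt
from statistics import mean, variance


def select_items(ratings, top_n=4, min_mean=3.50):
    """Return the top_n item labels by mean rating, keeping only means above min_mean."""
    means = {item: mean(scores) for item, scores in ratings.items()}
    ranked = sorted(means, key=means.get, reverse=True)
    return [item for item in ranked[:top_n] if means[item] > min_mean]


def welch_t(group_a, group_b):
    """Welch's t statistic and approximate degrees of freedom (assumed variant)."""
    va = variance(group_a) / len(group_a)
    vb = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(group_a) - 1) + vb ** 2 / (len(group_b) - 1))
    return t, df


# Hypothetical six-point Likert ratings for five candidate items of one sub-dimension.
ratings = {
    "item_a": [5, 6, 5, 4],
    "item_b": [3, 3, 4, 3],
    "item_c": [6, 5, 6, 5],
    "item_d": [4, 4, 5, 4],
    "item_e": [4, 5, 4, 5],
}
selected = select_items(ratings)

# Hypothetical ratings of one item by the less and the more experienced group.
t_stat, df = welch_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
```

Turning the t statistic into a p-value requires the t distribution, which a statistics package would normally supply; that step is left out of the sketch.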

5 RESULTS

5.1 Content adequacy assessment and validation

Content adequacy assesses how satisfactorily the items of a scale measure a theoretical construct. This means that a satisfactory measurement item should only measure the intended theoretical construct, and not others [45]. Authoritative work in the field of content adequacy was done by Schriesheim [43], [54] who developed a variety of approaches to assess content adequacy judgements. The approach followed here involved compiling a questionnaire, followed by a statistical analysis of the results obtained. The questionnaire was set to measure a respondent's view on how well an item statement, from the reduced list of items, belonged to any of the defined sub-dimension definitions of perceived value and perceived ease-of-use.

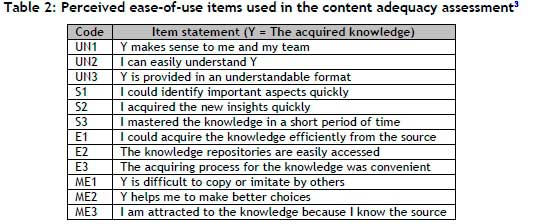

Respondents were requested to read each of the item statements carefully, and to indicate to which of the sub-dimension definitions the statement(s) applied. The list of statements used for the content adequacy assessment analysis contained the items with the highest mean values from the item reduction section (see Table 1 and Table 2) for the two main constructs. To check for any interpretative biases, three additional items that reflected the other construct were included as marker items.

Following Schriesheim's procedure [42], [54], if an item statement only applied to a single definition, the respondent had to indicate this with an 'X'. If the respondent felt that an item statement belonged to multiple dimension definitions, they had to indicate the most relevant with the number 1, the second most relevant with the number 2, and so on. We compiled three sets of questionnaire forms with item statements randomly ordered. To control for any potential order effects, forms were distributed equally among respondents. In total, 114 respondents took part in this second study. All were postgraduate (thus employed) students in the field of engineering, technology and project management at a South African university (March 2018). The panel had an average project work experience of just under six years, ranging between a few months' and 20 years' involvement in projects. The respondents' judgements on each item were scored following Schriesheim's procedures [42, p. 72]. The responses to each item were scored by assigning points for each entry, as shown in Table 3. Entries above '2' were not scored, as these made up less than 2.2% of the total response data. It is also doubtful whether a respondent can make such accurate dimension discriminations. All other non-indicated responses were set to zero.
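The scoring step can be sketched as follows. The actual point values are those given in Table 3, which is not reproduced here, so the `POINTS` mapping below is a placeholder assumption; the function name and category labels are likewise hypothetical.

```python
# Placeholder point values only: the real values are defined in Table 3 of the paper.
POINTS = {"X": 2, "1": 2, "2": 1}


def score_response(entries, categories):
    """Convert one respondent's marks for one item into a score per definition.

    entries maps a definition label to the mark the respondent wrote
    ('X', '1', '2', '3', ...). Marks above '2' and unmarked definitions score zero.
    """
    return {cat: POINTS.get(entries.get(cat, ""), 0) for cat in categories}


categories = ["uniqueness", "relevance", "comprehensiveness"]

# A respondent who assigned the item to a single definition.
single = score_response({"relevance": "X"}, categories)

# A respondent who ranked three definitions; the third rank is not scored.
ranked = score_response(
    {"uniqueness": "1", "relevance": "2", "comprehensiveness": "3"}, categories
)
```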

An extended matrix and Q-method approach to content assessment was applied to evaluate the data [43], [45]. First, the scored responses were consolidated into a data matrix in which the rows represented the questionnaire item statements, the columns represented the content categories or different definition statements, and the matrix entries represented the mean ratings of the total number of respondents. Table 4 and Table 5 show these matrices for our variables. For perceived value, the mean ratings clearly identified the items having the highest mean values in their respective content categories (except for U1, C1, C2, and C3). The same procedure was followed for perceived ease-of-use (except for S1). For the five exceptions, the mean values indicated potential problems, as these items could be confounded with other, non-intended dimensions.
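A minimal sketch of the first step, assuming scored responses are stored per item as one dictionary per respondent: it builds the matrix of mean ratings and flags items whose highest mean falls outside the intended content category. The item labels, scores, and function names are invented for illustration and are not the study's data.

```python
from statistics import mean


def mean_rating_matrix(scored):
    """scored[item] is a list of per-respondent dicts {category: score}.

    Returns {item: {category: mean score}}, i.e. rows are items and
    columns are content categories.
    """
    return {
        item: {cat: mean(r[cat] for r in responses) for cat in responses[0]}
        for item, responses in scored.items()
    }


def flag_confounded(matrix, intended):
    """Return items whose highest mean rating is not in the intended category."""
    return [
        item for item, row in matrix.items()
        if max(row, key=row.get) != intended[item]
    ]


# Hypothetical scores from three respondents for two items.
scored = {
    "item_1": [
        {"uniqueness": 2, "relevance": 0},
        {"uniqueness": 2, "relevance": 1},
        {"uniqueness": 0, "relevance": 2},
    ],
    "item_2": [
        {"uniqueness": 2, "relevance": 0},
        {"uniqueness": 2, "relevance": 1},
        {"uniqueness": 1, "relevance": 2},
    ],
}
intended = {"item_1": "uniqueness", "item_2": "relevance"}

matrix = mean_rating_matrix(scored)
problem_items = flag_confounded(matrix, intended)
```

In this invented example `item_2` is flagged, because its mean rating is higher under 'uniqueness' than under its intended category 'relevance'.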

The marker items also showed the highest mean values in the intended marker categories, except for MV1 and ME2, implying that care must be taken to distinguish clearly between the two constructs of perceived value and perceived ease-of-use when administering the final items through a questionnaire.

Second, an extended data matrix was constructed in which the rows represented the respondents' judgements, with each judgement in a separate row (for perceived value, each respondent had six rows, one for each dimension definition; and for perceived ease-of-use, each respondent had four rows, again one for each dimension definition). The columns represented the item statements for the two constructs. A principal component factor analysis was conducted on the extended data matrix using Varimax rotation and a criterion level of >0.40 for the inclusion and interpretation of the factor loadings (similar to Saha et al. [45]). Six factors for 'perceived value' and four factors for 'perceived ease-of-use' were extracted, each with an eigenvalue greater than one. The marker items loaded mainly on a separate factor. The rotated component matrices are shown in Table 6 (perceived value) and Table 7 (perceived ease-of-use). Clear factor structures emerged for perceived value, except for U1 and C2, which each loaded on two factors, and MV1, which loaded on an incorrect factor. For the perceived ease-of-use items, only the ME2 item loaded on two factors.
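To make the layout of the extended data matrix and the reading of the >0.40 criterion concrete, the sketch below builds such a matrix from scored responses and then classifies items from a table of rotated loadings. The factor extraction and Varimax rotation themselves are not shown; the loadings, scores, labels, and function names are all hypothetical.

```python
def extended_matrix(scored, items, categories):
    """One row per (respondent, definition) pair, one column per item."""
    n_respondents = len(scored[items[0]])
    return [
        [scored[item][r][cat] for item in items]
        for r in range(n_respondents)
        for cat in categories
    ]


def classify_loadings(loadings, intended, threshold=0.40):
    """Label each item 'clean', 'cross-loading', 'wrong factor', or 'no loading'.

    loadings[item] is a list of rotated loadings, one per factor;
    intended[item] is the index of the factor the item should load on.
    """
    result = {}
    for item, row in loadings.items():
        salient = [i for i, value in enumerate(row) if abs(value) > threshold]
        if not salient:
            result[item] = "no loading"
        elif len(salient) > 1:
            result[item] = "cross-loading"
        elif salient[0] != intended[item]:
            result[item] = "wrong factor"
        else:
            result[item] = "clean"
    return result


# Hypothetical scores: two respondents, two definitions, two items.
scored = {
    "item_1": [{"a": 2, "b": 0}, {"a": 2, "b": 1}],
    "item_2": [{"a": 0, "b": 2}, {"a": 1, "b": 2}],
}
rows = extended_matrix(scored, ["item_1", "item_2"], ["a", "b"])

# Hypothetical rotated loadings on two factors.
loadings = {
    "item_1": [0.82, 0.10],
    "item_2": [0.55, 0.48],
    "item_3": [0.12, 0.71],
}
labels = classify_loadings(loadings, {"item_1": 0, "item_2": 0, "item_3": 0})
```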

Using Hinkin and Tracey's [46] approach to content validation as an alternative to making item retention and deletion decisions, an ANOVA procedure was employed using the same data as for the factor analysis. According to these authors, this analysis provides a direct method for assessing an item's content validity by comparing the item's mean rating on one conceptual dimension with the item's ratings on another comparative dimension [46]. Thus, it can determine whether an item's mean score is statistically higher on the proposed theoretical construct. The ANOVA indicated that there was a statistically significant difference between the mean values of the different groups under a component/dimension (p<0.05) for both the perceived value and the perceived ease-of-use constructs.
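The comparison of an item's mean rating across dimensions can be illustrated with a one-way ANOVA F statistic. This is a generic textbook computation under assumed data, not a reproduction of the authors' analysis: the scores are invented and each group holds one hypothetical item's ratings under one dimension definition.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    all_values = [v for g in groups for v in g]
    grand = mean(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical ratings of one item under its intended definition (first group)
# and under two other definitions.
f_value, df_b, df_w = one_way_anova_f([[3, 4, 5], [1, 2, 3], [1, 2, 3]])
```

A large F relative to the critical value for the given degrees of freedom suggests that the item is rated differently across definitions; obtaining the p-value requires the F distribution and is omitted here.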

To check whether the groups under a component differed from each other individually, we conducted Duncan's multiple range test (DMRT), which provides simultaneous comparisons while holding the probability of making a type I error for the entire set of comparisons at the a priori significance level [45], [46]. The results of both tests indicated that, for perceived value, the items U1 and C1 did not have a statistically significant mean difference, thus loading on two different factors each, while MV1 loaded on an incorrect factor. For perceived ease-of-use, the item S1 loaded on two factors.

Table 8 and Table 9 present summaries of the findings for all the statistical tests conducted to establish content validity. Ignoring all marker items, Table 8 indicates that the U1 item was definitely problematic in the 'perceived value' theoretical content domain, while the C1, C2, and C3 items were found to be problematic in some tests. Similarly, Table 9 shows that the S1 item was problematic in the 'perceived ease-of-use' dimension.

5.2 Discussion of item retention and deletion

Different follow-up options are proposed regarding the problematic items identified in the previous section. First, one could scrutinise the problematic items in respect of their wording and relevance to their respective definitions, and rephrase them to eliminate their possible confounding effect. If this effect is obvious, there might be a limited need to re-evaluate the items through an additional content adequacy and validation study. If a completely new item statement needs to be compiled, a re-evaluation study might be compulsory, or the problematic statement should be omitted from the items pool. To understand the reason for the confounding effect, we discuss the problematic statements below.

Item U1, "The acquired knowledge is highly content specific", loaded mainly on the factors 'uniqueness' and 'comprehensiveness'. As the word 'specific' relates to the qualities and properties of the content, it might be that the use of the word 'highly' is problematic, because respondents could have read 'highly' as 'thoroughly' or 'exceedingly'. Read in this way, the statement can also suggest thorough or exceeding content, which points to comprehensiveness.

Another reason for the loading on the 'comprehensiveness' factor might have been the different language backgrounds of the respondents. Although English is the language of business in South Africa, the country has 11 official languages. In most cases, English was not the mother tongue of the respondents, which could also have led to different interpretations of key words. Thus, the word 'highly' should have been omitted to indicate that "The acquired knowledge is content-specific" or, rephrased, that "The acquired knowledge is specific in its content".

Similarly, the C1 statement, "The acquired knowledge gives me a deeper understanding of the problem or situation at hand", loaded on two factors ('knowledge relevance' and 'knowledge comprehensiveness'). We argue that the underlined words might have suggested relevance to a very specific problem or situation; and therefore could have been omitted. For the C2 item, "The acquired knowledge provides good context", the word 'context' could also have meant 'connection' or related or relevant to something; hence the confusion with 'relevance'. It is suggested that this statement should have been changed to "The acquired knowledge provides good contextual insight".

For the item S1, "I could identify important aspects quickly", which loaded on both "Comprehensibility of the knowledge" and "Speed of knowledge transfer", it might have been that the phrase was ambiguous. Although emphasis should have been on the word 'quickly' - thus indicating speed - it could also have meant 'easily comprehensible'. It therefore made sense to omit this item.

6 CONCLUSION

In this paper, theoretical sub-dimensions were identified for the perceived value and perceived ease-of-use of acquired project knowledge. Scales to measure these dimensions were also proposed and empirically tested. For academics, the sub-dimensions and measurement scales could provide an opportunity for future research by further developing or replicating the scales, as well as testing these scales for suitability and application in project environments. For practitioners, the sub-dimensions and measurement scales could be used, even in a reduced or simplified way, to check that the knowledge developed in one project is structured and presented in such a way that it could improve the accepting behaviour of individuals in a receiving project.

Validated and reliable measurement scales are still underdeveloped and little used in the knowledge and project management field. To measure two important determinants of the willingness to accept acquired project knowledge (the perceived value and the perceived ease-of-use of acquired project knowledge), sound measurement instruments are needed. The first contribution of this paper is a content-adequate measurement framework that is based on a well-established scale development procedure. The scales thus developed can be used to measure the two constructs among members of projects and of other organisational units. This validated measurement could help to predict intended and actual knowledge use behaviour in projects.

A second contribution lies in the literature-based identification of the possible dimensions of the two determinants. The dimension 'perceived value of acquired project knowledge' has the sub-dimensions uniqueness, relevance, comprehensiveness, decision-making, and source credibility. The sub-dimensions for 'perceived ease-of-use of acquired project knowledge' are speed, economics, and understanding of the received knowledge.

The third contribution concerns the formulation and validation of item statements that could be used to measure the relevant (sub)dimensions. These item statements were reduced to a manageable set, and a content-adequacy assessment was performed to verify the independence of the item statements and to identify items for retention or deletion. Our analyses led to a final set of item statements that could be used to measure adequately the willingness to accept acquired knowledge in projects.

Although our research efforts generated an instrument measuring project members' willingness to accept knowledge, it should not be seen as the only or best way to measure it. Schriesheim et al. [54] indicated that scale development and content-adequacy assessment can best be viewed as a never-ending process. Thus, we do not claim to have produced the best measurement instrument; rather, it was an attempt to identify and test suitable item statements that enable the measurement of relevant dimensions.

REFERENCES

[1] Fiol, C. M. & Romanelli, E. 2012. Before identity: The emergence of new organizational forms. Organ. Sci., 23(3), pp. 597-611.

[2] Geraldi, J. & Söderlund, J. 2018. Project studies: What it is, where it is going. Int. J. Proj. Manag., 36(1), pp. 55-70.

[3] Maylor, H. & Turkulainen, V. 2019. The concept of organisational projectification: Past, present and beyond? Int. J. Manag. Proj. Bus., 12(3), pp. 565-577.

[4] Hobday, M. 2000. The project-based organisation: An ideal form for managing complex products and systems? Res. Policy, 29(7-8), pp. 871-893.

[5] Bakker, R. M., Cambré, B., Korlaar, L. & Raab, J. 2011. Managing the project learning paradox: A set-theoretic approach toward project knowledge transfer. Int. J. Proj. Manag., 29(5), pp. 494-503.

[6] Lindner, F. & Wald, A. 2011. Success factors of knowledge management in temporary organizations. Int. J. Proj. Manag., 29(7), pp. 877-888.

[7] Cummings, J. L. & Teng, B.-S. 2003. Transferring R&D knowledge: The key factors affecting knowledge transfer success. J. Eng. Technol. Manag., 20(1/2), pp. 39-68.

[8] Lundin, R. A. & Söderholm, A. 1995. A theory of temporary organisation. Scand. J. Manag., 11(4), pp. 437-455.

[9] Van Wijk, R., Jansen, J. J. P. & Lyles, M. A. 2008. Inter- and intra-organizational knowledge transfer: A meta-analytic review and assessment of its antecedents and consequences. J. Manag. Stud., 45(4), pp. 830-853.

[10] Davies, A. & Brady, T. 2000. Organisational capabilities and learning in complex product systems: Towards repeatable solutions. Res. Policy, 29(7-8), pp. 931-953.

[11] Scherer, R., Siddiq, F. & Tondeur, J. 2019. The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers' adoption of digital technology in education. Comput. Educ., 128(0317), pp. 13-35.

[12] Schmidthuber, L., Maresch, D. & Ginner, M. 2020. Disruptive technologies and abundance in the service sector: Toward a refined technology acceptance model. Technol. Forecast. Soc. Change, 155, pp. 1-11.

[13] Wang, Y., Wang, S., Wang, J., Wei, J. & Wang, C. 2020. An empirical study of consumers' intention to use ride-sharing services: Using an extended technology acceptance model. Transportation (Amst)., 47(1), pp. 397-415.

[14] Boyd, B. K., Gove, S. & Hitt, M. A. 2005. Consequences of measurement problems in strategic management research: The case of Amihud and Lev. Strateg. Manag. J., 26(4), pp. 367-375.

[15] Ajzen, I. & Fishbein, M. 1970. The prediction of behavior from attitudinal and normative variables. J. Exp. Soc. Psychol., 6(4), pp. 466-487.

[16] Sheppard, B. H., Hartwick, J. & Warshaw, P. R. 1988. The theory of reasoned action: A meta-analysis of past research with recommendations for modifications and future research. J. Consum. Res., 15(3), pp. 325-343.

[17] Davis, F. 1985. A technology acceptance model for empirically testing new end-user information systems: Theory and results. PhD Thesis, Massachusetts Institute of Technology.

[18] Venkatesh, V. & Davis, F. D. 2000. A theoretical extension of the technology acceptance model: Four longitudinal studies. Manage. Sci., 46(2), pp. 186-205.

[19] Zyngier, S. & Venkitachalam, K. 2011. Knowledge management governance: A strategic driver. Knowl. Manag. Res. Pract., 9(2), pp. 136-150.

[20] King, W. R. & He, J. 2006. A meta-analysis of the technology acceptance model. Inf. Manag., 43(6), pp. 740-755.

[21] Yousafzai, S. Y., Foxall, G. R. & Pallister, J. G. 2007. Technology acceptance: A meta-analysis of the TAM: Part 2. J. Model. Manag., 2(3), pp. 281-304.

[22] Tao, D., Wang, T., Wang, T., Zhang, T., Zhang, X. & Qu, X. 2020. A systematic review and meta-analysis of user acceptance of consumer-oriented health information technologies. Comput. Human Behav., 104, pp. 1-15.

[23] Nonaka, I. 1994. A dynamic theory of organizational knowledge creation. Organ. Sci., 5(1), pp. 14-37.

[24] Cheung, R. & Vogel, D. 2013. Predicting user acceptance of collaborative technologies: An extension of the technology acceptance model for e-learning. Comput. Educ., 63, pp. 160-175.

[25] Pai, F. Y. & Huang, K. I. 2011. Applying the technology acceptance model to the introduction of healthcare information systems. Technol. Forecast. Soc. Change, 78(4), pp. 650-660.

[26] Lai, P. 2017. The literature review of technology adoption models and theories for the novelty technology. J. Inf. Syst. Technol. Manag., 14(1), pp. 21-38.

[27] Barney, J. 1991. Firm resources and sustained competitive advantage. J. Manage., 17(1), pp. 99-120.

[28] Ford, D. P. & Staples, D. S. 2006. Perceived value of knowledge: The potential informer's perception. Knowl. Manag. Res. Pract., 4(April 2005), pp. 3-16.

[29] Gupta, A. & Govindarajan, V. 2000. Knowledge flows within multinational corporations. Strateg. Manag. J., 21(4), pp. 473-496.

[30] Han, J., Jo, G. S. & Kang, J. 2016. Is high-quality knowledge always beneficial? Knowledge overlap and innovation performance in technological mergers and acquisitions. J. Manag. Organ., 24(2), pp. 1-21.

[31] Argote, L. & Ingram, P. 2000. Knowledge transfer: A basis for competitive advantage in firms. Organ. Behav. Hum. Decis. Process., 82(1), pp. 150-169.

[32] Uygur, U. 2013. Determinants of causal ambiguity and difficulty of knowledge transfer within the firm. J. Manag. Organ., 19(6), pp. 742-755.

[33] Pérez-Nordtvedt, L., Kedia, B. L., Datta, D. K. & Rasheed, A. A. 2008. Effectiveness and efficiency of cross-border knowledge transfer: An empirical examination. J. Manag. Stud., 45(4), pp. 714-744.

[34] Simonin, B. L. 1999. Ambiguity and the process of knowledge transfer in strategic alliances. Strateg. Manag. J., 20(20), pp. 595-623.

[35] Simonin, B. L. 2004. An empirical investigation of the process of knowledge transfer in international strategic alliances. J. Int. Bus. Stud., 35(5), pp. 407-427.

[36] Zahra, S. A., Ireland, R. D. & Hitt, M. A. 2000. International expansion by new venture firms: International diversity, mode of market entry, technological learning, and performance. Acad. Manag. J., 43(5), pp. 925-950.

[37] Jennex, M. E., Smolnik, S. & Croasdell, D. 2016. The search for knowledge management success. In 2016 49th Hawaii International Conference on System Sciences (HICSS), IEEE Computer Society, pp. 4202-4211.

[38] Jennex, M. E., Smolnik, S. & Croasdell, D. 2007. Towards defining knowledge management success. In 2007 40th Annual Hawaii International Conference on System Sciences (HICSS'07), IEEE Computer Society, p. 193c.

[39] Hansen, M. T., Mors, M. L. & Lovas, B. 2005. Knowledge sharing in organizations: Multiple networks, multiple phases. Acad. Manag. J., 48(5), pp. 776-793.

[40] Szulanski, G. 2000. The process of knowledge transfer: A diachronic analysis of stickiness. Organ. Behav. Hum. Decis. Process., 82(1), pp. 9-27.

[41] Szulanski, G. 1996. Exploring internal stickiness: Impediments to the transfer of best practice within the firm. Strateg. Manag. J., 17(Winter Special), pp. 27-43.

[42] Schriesheim, C. A. 1978. Development, validation, and application of new leadership behavior and expectancy research instruments. PhD Thesis, The Ohio State University.

[43] Schriesheim, C. A., Powers, K. J., Scandura, T. A., Gardiner, C. C. & Lankau, M. J. 1993. Improving construct measurement in management research: Comments and a qualitative approach for assessing the theoretical content adequacy of paper-and-pencil survey-type instruments. J. Manage., 19(2), pp. 385-417.

[44] Hinkin, T. R. & Schriesheim, C. A. 1989. Development and application of new scales to measure the French and Raven (1959) bases of social power. J. Appl. Psychol., 74(4), pp. 561-567.

[45] Saha, K., Kumar, R., Dutta, S. K. & Dutta, T. 2017. A content adequate five-dimensional entrepreneurial orientation scale. J. Bus. Ventur. Insights, 8(March), pp. 41-49.

[46] Hinkin, T. & Tracey, J. B. 1999. An analysis of variance approach to content validation. Organ. Res. Methods, 2(2), pp. 175-186.

[47] Wong, M. A. 2014. Entrepreneurial culture: Developing a theoretical construct and its measurement. PhD Thesis, The University of Western Ontario.

[48] Holt, D. T., Armenakis, A. A., Feild, H. S. & Harris, S. G. 2007. Readiness for organizational change: The systematic development of a scale. J. Appl. Behav. Sci., 43(2), pp. 232-255.

[49] Morgado, F. F. R., Meireles, J. F. F., Neves, C. M., Amaral, A. C. S. & Ferreira, M. E. C. 2017. Scale development: Ten main limitations and recommendations to improve future research practices. Psicol. Reflex. e Crit., 30(3), pp. 1-20.

[50] Hinkin, T. R., Tracey, J. B. B. & Enz, C. A. 1997. Scale construction: Developing reliable and valid measurement instruments. J. Hosp. Tour. Res., 21(1), pp. 100-120.

[51] Taylor, R. N. 1975. Age and experience as determinants of managerial information processing and decision making performance. IEEE Eng. Manag. Rev., 18(1), pp. 74-81.

[52] Jiang, F., Ananthram, S. & Li, J. 2018. Global mindset and entry mode decisions: Moderating roles of managers' decision-making style and managerial experience. Manag. Int. Rev., 58(3), pp. 413-447.

[53] Van Waveren, C. C., Oerlemans, L. A. G. & Pretorius, M. W. 2018. Developing scales for measuring knowledge acceptance and use in projects. In 2018 27th Annual International Association for Management of Technology (IAMOT) Conference, IAMOT, pp. 1-14.

[54] Schriesheim, C. A., Cogliser, C. C., Scandura, T. A., Lankau, M. J. & Powers, K. J. 1999. An empirical comparison of approaches for quantitatively assessing the content adequacy of paper-and-pencil measurement instruments. Organ. Res. Methods, 2(2), pp. 140-156.

Submitted by authors 29 Jul 2020

Accepted for publication 29 Mar 2021

Available online 28 May 2021

* Corresponding author: corro@up.ac.za

1 Possible answers were: (1) not, (2) slightly, (3) somewhat, (4) moderately, (5) very, and (6) extremely relevant.

2 U=Uniqueness; R=Relevance; C=Comprehensiveness; DM=Decision-making; SC=Source credibility; MV=Marker value.

3 UN=Understandability; S=Speed; E=Economics; ME=Marker EoU.