South African Journal of Industrial Engineering

On-line version ISSN 2224-7890

Print version ISSN 1012-277X

S. Afr. J. Ind. Eng. vol.32 n.1 Pretoria May. 2021

http://dx.doi.org/10.7166/32-1-2300

GENERAL ARTICLES

A review of the stylus system to enhance usability through sensory feedback

Department of Industrial Engineering, Hongik University, Seoul, Republic of Korea. J. Sim: https://orcid.org/0000-0002-2475-9092; Y. Yim: https://orcid.org/0000-0002-7795-174X; K. Kim: https://orcid.org/0000-0003-1062-6261

ABSTRACT

With the advent of touchscreen-based smart devices, several studies on the usability of the stylus, a typical touchscreen input method, have been conducted. However, usability studies that simply focus on completing tasks are not suitable for investigating the stylus in various use contexts and with qualitative values. In other words, the context from the user's point of view should be investigated. Therefore, this study aims to provide usability directions for improving the stylus system by structurally investigating recent stylus-related studies. Stylus-related studies published from 2004 to 2017 were examined and classified mainly into two groups: those considering the use of the stylus in various environments, and those focused on developing the stylus by applying new sensory feedback to increase usability. By systematically analysing and classifying each study, unique features of the stylus were identified and examples of stylus usage in certain domains were summarised. Furthermore, sensory feedback methods that could be applied to the stylus to improve usability were established. In conclusion, this study derived sensory feedback that would be suitable for the use context of styluses, and proposed guidelines to improve the usability of stylus systems through sensory feedback.

SUMMARY

Several studies on the usability of the stylus, a typical input technique for touchscreen devices, have been conducted. Usability studies that focus only on task performance are not suitable when the stylus is used for different tasks. The context from the user's perspective must be investigated. This study therefore aims to provide usability directions for improving the stylus system. This is done by examining recent stylus-related studies. Stylus-related studies from the period 2004 to 2017 were examined and classified mainly into two categories, namely studies on the use of the stylus in different environments and studies that focus on developing the stylus by providing new sensory feedback to the user. By systematically analysing and classifying the various studies, unique features of the stylus were identified and examples of its application were provided. Furthermore, methods that can improve the sensory feedback of the stylus were established. This study thus derived sensory stylus feedback that is suited to the use context of the stylus. Finally, guidelines are proposed to improve the usability of stylus systems by means of sensory feedback.

1 INTRODUCTION

In the past, the stylus was recognised as a writing instrument or a small tool used for marking or shaping. Currently the stylus is defined as a pen-type digital device used as an input device or auxiliary tool. The stylus, originally developed for manipulating a personal digital assistant (PDA) with a resistive touchscreen, has been regarded as a unique input method. With the advent of capacitive touchscreens, the finger emerged as a competitive input method. As the finger is a more intuitive input method than the stylus, it has quickly become a mainstream input method [1]. At the same time, a number of usability studies comparing the stylus and fingers on capacitive touchscreens were conducted [2-9]. The term 'usability' refers to a user's ability to perform a task successfully using a thing [10], and is based on effectiveness, efficiency, and satisfaction [11]. These studies have consistently focused on effectiveness, that is, the ability to accomplish the task accurately [11], and on the efficiency of stylus and finger task performance (e.g., tapping, dragging). The stylus was consistently more effective than the finger, which can be understood as a natural consequence of the difference in tip area. In contrast, no consistent result was found for efficiency. This shows that the clear advantage of the stylus over the finger is its effectiveness.

However, a diary study by Riche, Riche, Hinckley, Panabaker, Fueling and Williams [12] concluded that the main reason for using the stylus is the immersion of cognitive processes. The user is satisfied with the muscle memory generated by pen-based note-taking with the stylus, yet at the same time requires tactile feedback to feel the texture. Because of this, there is a need to evaluate the value of the stylus system in consideration of the context before and after the task occurs, and to provide appropriate sensory feedback to facilitate the cognitive processes. This value can be interpreted as 'satisfaction', a qualitative value neglected by existing usability studies. An examination of existing literature reviews related to styluses identified reviews of computer input devices [13, 14], of the computer interface environment (e.g., the touchscreen) [15-19], of object-sensing [20, 21], and of the design direction of styluses [22]. These studies considered the stylus as simply an input device without investigating its usage context in depth, even though the stylus can be used as a tool to improve cognitive ability. To bridge this gap, this study examines the stylus system from the perspective of usability, analyses the context in which the user uses a stylus, and suggests sensory feedback that is suitable for the context.

First, by examining the domains in which the stylus is actually used from the perspective of usability, the behaviour and thinking of the person who uses the stylus are identified. Next, in order to implement the stylus properly, considering its various usage contexts, studies on improving the usability of the stylus system in respect of sensory feedback are reviewed. Finally, this study suggests design guidelines for sensory feedback that consider the usage contexts of the stylus system for each domain.

2 METHOD

Google Scholar was used to search for studies in diverse fields because the stylus is used in various applications. 'Stylus pen', 'stylus UX', and 'stylus development' were the search keywords used to examine studies published from 2004 to 2017. These broad keywords were chosen because general terms are appropriate for understanding the various usage contexts of the stylus. In addition, 'user experience (UX)' was used as the appropriate term to capture the user-oriented usage context. The collected studies include those in which the stylus users are members of the general public rather than experts. For example, the stylus profilometer is not suitable for this study because its function is so specialised that it is completely different from the commonly used stylus. About 350 studies were investigated for each keyword. The studies were screened by considering our research direction.

As a result, 85 studies on the task and environment in which the stylus was used were collected initially, and the collected studies were broadly divided into studies in a common usage environment and those in a specific usage environment. The former consisted of studies dealing with general tasks, regardless of the context of use, and was further divided into studies about intended interaction (e.g., tapping, dragging) [2-5, 7, 8, 23-28] and studies about unintended interaction (e.g., the palm rejection problem, in which the palm is not properly recognised when a stylus is used to interact) [29, 30]. However, because the purpose was to understand the various usage contexts of the stylus, studies about the common usage environment were excluded. The studies that simply used the stylus in specialised domains were allocated as literature for application studies in Section 3. These studies were grouped by domain, and domains with fewer than four studies were excluded.

Thirty-six studies on improving the usability of the stylus by developing a new stylus system were collected initially. The studies that improved usability with new sensory feedback through the stylus system were categorised as usability improvement studies in Section 4, and studies that simply added functions to the stylus (e.g., tilt and colour change) were excluded. When searching the usability improvement studies, the reference lists of the collected studies were all checked, and other stylus studies that improved sensory feedback were also searched; this process was repeated until no further studies were found.

Finally, 28 studies related to the stylus application were investigated for Section 3, and 30 studies related to usability improvement were analysed for Section 4. The analysis was performed based on these studies.

3 ACTIVITIES AND AFFORDANCE IN VARIOUS APPLICATION DOMAINS

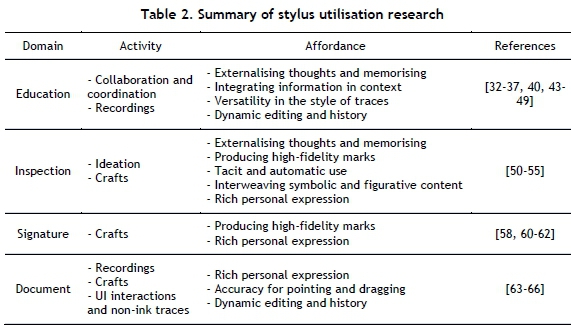

For the collected studies on stylus utilisation, the application fields were classified into a total of four domains: education, inspection, signature, and document. To examine the domains structurally, the diary study by Riche et al. [12] of the analogue pen and stylus was referenced. Riche et al. [12] derived the use characteristics of the analogue pen and stylus by classifying them as 'activities' and 'affordances'. 'Activity' refers to an action occurring when using a stylus, and "the term affordance refers to the perceived and actual properties of the thing, primarily those fundamental properties that determine just how the thing could possibly be used" [31]. In this study, the activities and affordances of Riche et al. [12] were revised to suit the characteristics of the stylus. First, from the perspective that activities are involved in handwriting, similar activities of information scraps, annotations, and recordings were combined into recordings; 'doodles and games' and 'personal communication' that did not appear in the collected studies were eliminated. For affordances, 'reliability and dependability' and 'immediacy of capture' were eliminated owing to the current technical limitation that a stylus has to be used with another electronic device (Table 1).

Based on the revised activities and affordances, the collected studies were analysed, and the activities and affordances that could represent each domain were selected. An activity or affordance was selected for a domain when it appeared in a majority of the studies in that domain.
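The majority criterion described above can be illustrated with a short sketch. The study codings below are hypothetical placeholders rather than data from this review, the function name `representative_labels` is introduced only for illustration, and 'majority' is interpreted here as more than half of the studies in a domain.

```python
from collections import Counter


def representative_labels(studies):
    """Return the labels that appear in more than half of the studies.

    studies: list of sets, one set of activity/affordance labels per study.
    """
    # Count each label at most once per study.
    counts = Counter(label for labels in studies for label in set(labels))
    threshold = len(studies) / 2
    return sorted(label for label, n in counts.items() if n > threshold)


# Hypothetical coding of four studies in one domain (not data from the review).
education_studies = [
    {"recordings", "collaboration and coordination"},
    {"recordings"},
    {"recordings", "collaboration and coordination", "ideation"},
    {"collaboration and coordination", "recordings"},
]

print(representative_labels(education_studies))
```

In this made-up coding, 'ideation' appears in only one of four studies and would therefore not be selected as representative of the domain.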

3.1 Education

3.1.1 Activity

Two activities exist in the education domain: 'collaboration and coordination' and 'recordings'. 'Collaboration and coordination' refers to activities of exchanging opinions with other people about school classes. Opinion exchange occurs between educator and students, and between student and student. Most studies focused on the opinion exchange activities between educator and students; however, one study focused on evaluation between students, namely peer-to-peer assessment (P2PASS) [32], and there were studies in which an educator and all students participating in the class shared and used a screen [33, 34]. 'Recordings' are activities of composing traces for easy understanding and memorising. These activities commonly exist in all education domain studies, and they can be divided according to the respective standpoints of the educator and the students. An educator teaches students by showing the learning materials that were prepared in advance and speaking at the same time. In this process, the educator uses a stylus to emphasise points on the learning materials or to write text. Afterwards, these traces are saved on the Internet and distributed to the students. During class, each student uses a stylus to mark the main points or to write text on the learning materials that were prepared in advance, so that they can understand them more easily [35]. Later, each student studies by using the traces they composed [36] and the traces composed by the educator. However, if a student does not own a tablet PC and stylus, they can only use the traces composed by the educator [34, 37].

3.1.2 Affordance

'Affordances' in the education domain are 'externalising thoughts and memorising', 'integrating information in context', 'versatility in the style of traces', and 'dynamic editing and history'. 'Externalising thoughts and memorising' appears in the process of delivering knowledge or solving a problem by using a stylus. During a class an educator uses a stylus to deliver his/her knowledge to teach students. For example, by writing sentences or using circles and underlines on the learning materials, the educator can help students to understand better [37]. Each student uses a stylus to solve problems in the class. In particular, symbols and graphs are often used when solving a mathematical problem [38], and because free-form notes can be used on a tablet PC [39], if a stylus is used, notes can be written freely without the limitation of form. In fact, there was a comment that the tablet PC and stylus are suitable for mathematical education [40]. 'Integrating information in context' appears when traces are left on a document when a stylus is used. During a class, when an educator composes a trace on a learning material, it is interpreted that the trace is related to the corresponding page. For example, through P2PASS, if students leave comments to each other on a problem-solving page [32], or an educator leaves comments on reports when evaluating student reports [34], they are interpreted as contents related to the corresponding pages. In addition, because traces written with the stylus can lead to the loss of original data and to confusion, a lossless data embedding technique has to be taken into consideration [41, 42]. 'Versatility in the style of traces' appears when the characteristics of digital display are combined with the stylus. In many education-related software applications, various colours represent the movements of the stylus [33, 34, 37, 43-46], and can be used to distinguish or emphasise entities. 
For example, in a basketball diagram for physical education, various colours were used to distinguish the offence and defence positions or to emphasise important positions [47]. 'Dynamic editing and history' appears when an educator saves the traces generated with the stylus as digital information. In the past, many students were occupied with copying down what educators wrote. However, because those notes are stored on the Internet and can be accessed later, students can focus on understanding the class lecture [40]. This also benefits educators. Because traces of previous studies remain, the educator can proceed with the lecture without breaks, and the traces of previous studies can be used too [48]. Moreover, the educator can track the problem-solving processes of students by using the 'Solution Viewer' feature of SetPad, a set theory problem-related software [49].

3.2 Inspection

3.2.1 Activity

In the inspection domain, 'ideation' and 'crafts' activities exist, and there are a total of five inspection methods: the Torrance tests of creative thinking (TTCT), a creativity testing method based on figure drawing [50, 51]; the clock drawing test (CDT), in which numbers are drawn on a given circle, and the trail making test (TMT), in which circles are connected sequentially, both of which can confirm cognitive dysfunction [52]; pain drawings (PDs), which are used to check the physical location of pain and to identify the level of pain with a colour [53]; and spiral drawing, which is used to diagnose Parkinson's disease [54, 55]. 'Ideation' refers to the activity of expressing thoughts by using a stylus, and includes TTCT, CDT, and PDs. In TTCT, a picture is finished according to a given condition [56]; in CDT, a clock is drawn in a given circle, or the subject draws the circle and then the clock [52]; and in PDs, shading is applied to a picture of the body based on the pain experienced by the subject [53]. All three place value on expressing a thought and do not require correct answers. 'Crafts' refers to the activity of making artifacts using the stylus, and applies to TMT and spiral drawing. An inspectee performs a given task as directed. In TMT, a task of connecting 25 continuous targets is performed [52], and in spiral drawing, a task of simply drawing a spiral picture is performed [54, 55]. TMT and spiral drawing focus on accurate and fast task completion.

3.2.2 Affordance

Affordances in the inspection domain are 'externalising thoughts and memorising', 'producing high-fidelity marks', 'tacit and automatic use', 'interweaving symbolic and figurative content', and 'rich personal expression'. 'Externalising thoughts and memorising' appears when one's own thoughts are expressed with a stylus when performing TTCT, CDT, and PDs. According to Zabramski and Neelakannan [50], no differences exist between the TTCT results of two input devices, an analogue pen and a stylus. This means that a stylus can be used instead of an analogue pen when testing creativity. 'Producing high-fidelity marks' appears because accurate drawing is required when performing TMT or spiral drawing. According to Surangsrirat and Thanawattano [54], 'radial error of the tracing' is used as a characteristic parameter of spiral drawing. This means that accurate drawing is an important factor of inspection. 'Tacit and automatic use' appears in all the inspections because the stylus is perceived as a familiar tool, similar to the analogue pen. When a person uses an analogue pen, few cognitive resources are used [12], and this may be why people do not find using a stylus difficult. 'Interweaving symbolic and figurative content' appears because figures are used instead of text when performing TTCT or CDT. According to Kim et al. [52], drawing is an activity that can show a degradation in a patient's cognition. In CDT, for example, missing numbers and number repetition are treated as errors [57]. This suggests that 'interweaving symbolic and figurative content' is essential when performing an inspection activity using a stylus. 'Rich personal expression' may be interpreted as a reason for using a stylus when performing an inspection. Each person has his/her own characteristics, and inspection is the task of deriving a result that reflects the characteristics of each individual. 
The stylus is used to express an individual's characteristics, and at the same time to convert the expressed characteristics of the individual into digital information. Thus the stylus provides an opportunity to digitalise the inspections.
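As a rough illustration of how a parameter such as the 'radial error of the tracing' might be computed once stylus samples are available as digital information, the sketch below compares a traced spiral with an ideal Archimedean template r = a + b·θ. The template form, the use of the mean absolute deviation, and all names and values are assumptions made for illustration; they are not taken from Surangsrirat and Thanawattano [54].

```python
import math


def mean_radial_error(samples, a=0.0, b=1.0):
    """Mean absolute difference between traced and template radius.

    samples: list of (theta, r) pairs in polar coordinates, where theta is
    the unwrapped angle in radians and r is the traced radius.
    The template is assumed to be the Archimedean spiral r = a + b * theta.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    errors = [abs(r - (a + b * theta)) for theta, r in samples]
    return sum(errors) / len(errors)


# Hypothetical tracing: the pen stays 0.5 units outside the template.
thetas = [i * math.pi / 8 for i in range(33)]  # two full turns
tracing = [(t, 2.0 * t + 0.5) for t in thetas]

print(round(mean_radial_error(tracing, a=0.0, b=2.0), 3))
```

A larger value would indicate a tracing that deviates further from the template, which is the sense in which accurate drawing matters for this kind of inspection.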

3.3 Signature

3.3.1 Activity

In the signature domain, only the 'crafts' activity exists, and it refers to signature generation. In biometric technology, a signature is classed as a behavioural biometric [58], and has high reliability [59]. In the signature verification process, a user who wants to prove his/her identity with a signature first enters the signature into the system, which saves the corresponding template in its database. Later, when the user wants to prove his/her identity, the system compares the newly written signature with the template in the database [60].
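The enrol-then-compare flow described above can be sketched as follows. This is a minimal illustration under strong assumptions, not the method of the cited studies: signatures are treated as equal-length lists of (x, y) stylus samples, the comparison is a mean point-to-point distance after normalising position and size, and the class name, threshold, and sample values are all hypothetical.

```python
import math


def _normalise(points):
    """Centre the trace on its centroid and scale it to a unit extent."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    centred = [(x - cx, y - cy) for x, y in points]
    scale = max(max(abs(x), abs(y)) for x, y in centred) or 1.0
    return [(x / scale, y / scale) for x, y in centred]


class SignatureVerifier:
    """Toy verifier: stores one template per user and compares new traces."""

    def __init__(self, threshold=0.1):
        self.threshold = threshold  # assumed acceptance threshold
        self.templates = {}

    def enroll(self, user_id, points):
        # Enrolment: save the normalised signature as the user's template.
        self.templates[user_id] = _normalise(points)

    def verify(self, user_id, points):
        # Verification: compare the new signature with the stored template.
        template = self.templates.get(user_id)
        if template is None or len(points) != len(template):
            return False
        candidate = _normalise(points)
        distance = sum(
            math.dist(p, q) for p, q in zip(template, candidate)
        ) / len(template)
        return distance <= self.threshold


verifier = SignatureVerifier()
verifier.enroll("user-1", [(0, 0), (1, 2), (2, 1), (3, 3)])
# Same shape written larger and elsewhere on the screen.
print(verifier.verify("user-1", [(10, 10), (12, 14), (14, 12), (16, 16)]))
# A different shape.
print(verifier.verify("user-1", [(0, 0), (1, 0), (2, 0), (3, 0)]))
```

Practical systems would also need to handle traces of different lengths and dynamic features such as timing or pressure; those are deliberately left out of this sketch.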

3.3.2 Affordance

'Producing high-fidelity marks' and 'rich personal expression' exist in the affordances of the signature domain. 'Producing high-fidelity marks' is an essential affordance for writing a precise signature. According to Robertson and Guest [58], the stylus has a tendency to move further than the finger, meaning that the stylus leaves more detailed traces than the finger does. It can be construed that the stylus can express more information about action characteristics than the finger, and its identity verification capability is higher than that of the finger. In support of this, Vera-Rodriguez, Tolosana, Ortega-Garcia and Fierrez [61] said that, in the case of skilled forgery, the reliability of the stylus was better than that of the finger. 'Rich personal expression' is an affordance that emerged as it became possible to create digital signatures with a stylus. In the case of signatures written using an analogue pen, a complicated procedure is required for verification; however, a digital signature written using a stylus can be verified anywhere and in real time. Going one step further, a study was conducted to prove a user's identity by using a digital fingerprint and a digital signature together [62].

3.4 Document

3.4.1 Activity

In the document domain, there were three activities: 'recordings', 'crafts', and 'user interface (UI) interaction and non-ink traces'. 'Recordings' appeared in the editing results and the applications of such results, and 'crafts' occurred mostly when editing a document by using gestures. In the document domain, the document editing activity, its process, and the recording of its results were completed simultaneously. In other words, 'recordings' and 'crafts' appeared at the same time in most cases. The stylus allowed the user to perform document editing via natural gestures, and thoughts could be recorded immediately in the document editing results [63-65]. Furthermore, the stylus has advantages in that figures or irregular records can be easily edited in addition to text, and the document editing processes can be recorded [63]. The results derived from recording and editing a document are intermediate artifacts, and can be used for communication between users; and, because document writing and editing were carried out on a digital device in a way similar to that of pen-and-paper, users were able to experience familiar materials [63-65]. Furthermore, in a study that evaluated letter acquisition with a stylus on a tablet PC by children diagnosed with a developmental disability, a written letter became an immediate record (recording), and using the records for evaluation facilitated effective inspection [66].

'UI interaction and non-ink traces' is an activity that is carried out in the process of using convenient functions, such as immediate edit and undo, when writing and editing a document using a stylus [63-65]. Moreover, an auxiliary system was proposed to facilitate direct writing on the screen using a stylus when presenting with Microsoft PowerPoint. Because the stylus could be used to draw figures or to use editing functions (e.g., the useful function of drawing a mark) for better explanation during a lecture, knowledge delivery efficiency was increased [65]. In addition, Matulic et al. [64] proposed two-handed document editing that uses finger touch and stylus simultaneously.

3.4.2 Affordance

Applicable affordances in the document domain were 'rich personal expression', 'accuracy for pointing and dragging', and 'dynamic editing and history'. 'Rich personal expression' is an affordance that emerged as it became possible to input various expressions beyond the text that a soft keyboard facilitates. Individual thoughts and information that are difficult to express in text could be drawn with various marks, including figures, and this facilitated rich information delivery [64]. The stylus, which facilitates inputs of shape, gesture, and text, can be used to implement the various rich expressions of children [66].

'Accuracy for pointing and dragging' means that, when performing an interaction (e.g., dragging and tapping) using a stylus, accurate target assignment and position movement are possible. The accuracy of the stylus tip, which is greater than that of the fingertip, is a very important affordance in the document domain [64]. In particular, accurate records are essential in letter inspection of young children with disabilities. Owing to the 'accuracy for pointing and dragging' of the stylus, children were able to input letters accurately; thus accurate inspection became possible [65].

'Dynamic editing and history' appeared when editing a document on a tablet PC using a stylus and in lectures using a stylus. The marks used during a presentation facilitated immediate concretisation and recording (annotation) of the rich expressions of individuals [65]. Furthermore, document editing with gesture interaction using the stylus facilitated immediate and dynamic editing. User satisfaction was high when using a system that facilitated editing on a limited screen (e.g., a tablet PC) without a separate input application [63].

3.5 Summary

The stylus was used in the four domains of education, inspection, signature, and document, and in this study, activities and affordances used extensively in each domain were derived (Table 2). In summary, in the education domain, the educator used the stylus when leaving traces to deliver his/her thoughts to the students. The traces written by the educator were delivered to the students along with the learning materials, and the students studied by referencing the traces and the learning materials simultaneously. It can be said that, when a stylus is used, learning materials are used more effectively than with traditional education methods. In the inspection domain, the subjects used styluses as input tools to perform the inspections. In the inspection process, the stylus was used as a tool to extract the physical and mental characteristics of inspectees, rather than as a tool simply to check marking. This was possible because using the stylus required few cognitive resources. In the signature domain, the stylus was used when people attempted to verify their identities with digital signatures. A digital signature produced using a stylus is written accurately because of the sharp tip of the stylus, which is why a digital signature written with a stylus has high reliability. In the document domain, the stylus was extensively used in document editing through gesture interaction, and the editing results were also frequently used. In addition, it was found that the stylus can be used for editing and recording input information other than text.

4 IMPROVING USABILITY BY PROVIDING SENSORY FEEDBACK

Although the touchscreen is used extensively in human-computer interaction (HCI) systems, there is a problem in that, when a user interacts with a touchscreen using a finger, a part of the interface is covered, which is known as the 'fat finger problem' [67]. As a solution, users began to use a stylus, which solved the fat finger problem by minimising the touching area of the interface. Furthermore, when a user is performing an interaction, feedback can be absent owing to the characteristics of the touchscreen, and the absence of feedback can decrease the device's reliability for the user [68]. Thus various studies have been conducted to improve usability by providing proper sensory feedback when the stylus is used. Since Fiorentino, Uva, and Monno [69] conducted a study on the development of Senstylus for three-dimensional (3D) computer-aided design (CAD) in virtual reality, various virtual environment-based studies have been carried out on tasks performed in 3D space. In other words, studies on stylus system development to improve usability by providing sensory feedback were carried out mainly in two use environments, touchscreen and virtual, and the majority were in the touchscreen environment.

Among sensory forms of feedback, visual, auditory, and haptic can be used for information delivery [70], and so the stylus system development studies were examined to determine which combinations of the three forms of sensory feedback were provided. First, the studies providing a single sensory feedback used only the haptic feedback (no study provided only visual feedback or auditory feedback). Next, the studies that provided multiple sensory feedback were carried out in only the touchscreen environment: four studies provided visual and haptic feedback together, and two studies provided auditory and haptic feedback together. In all the studies that provided sensory feedback, haptic feedback was always included, which indicates that haptic feedback is crucial to improving the usability of the stylus system.

According to psychological and physiological definitions, the term 'haptic' refers to an ability to experience the environment through exploration with the hand, such as when estimating an object's shape and physical property by touching it [71]; such haptic-based interfaces have grown into a general HCI field [72-74]. The lack of haptic feedback was pointed out as a problem when interacting with touch and pen devices [75]. Because human fingertips are the most sensitive tactile sensors [76], the experiences of controllability and interaction are improved only when proper haptic feedback is provided [77]. Therefore, in this study, previous studies were examined to investigate which kinds of haptic feedback were provided to improve the usability of each developed stylus system.

4.1 Tasks/activities for touchscreen

The studies of stylus systems that provided sensory feedback were divided according to various tasks performed in the touchscreen environment. They were examined to determine which sensory feedback was provided for each task, and what effects such sensory feedback had on improving usability.

4.1.1 Button clicking

Kyung, Lee and Park [78] said that one of the most frequently raised complaints when using a touchscreen was the uncertainty whether a button was successfully pressed by tapping the screen. To overcome this problem, studies were performed to provide proper feedback for 'button clicking' [71, 78-87]. In these studies, haptic feedback was provided such that the user would know whether or not the button had been pressed. In most studies using haptic feedback, a sensation similar to that of a mechanical button that was familiar to the users was provided, and the clicking sensation improved user confidence and reduced the errors in and the completion time for test tasks, while facilitating easier control of the stylus system.

4.1.2 Handwriting

Because handwriting is usually carried out with the interaction of paper and pen, it is familiar to many users; and so, when writing on the smooth surface of a touchscreen with a stylus, they want to have an experience similar to writing on real paper with a pen. To satisfy this desire, studies have been performed to improve usability by providing sensory feedback when users write with a stylus [71, 88-90]. In most studies, a sensation similar to that of writing on paper was provided by haptic feedback, and when the feeling of real paper was produced, writing could be performed more accurately, and better results were shown in respect of efficiency, accuracy, and satisfaction than in the case of no haptic feedback.

4.1.3 Interactive drawing

A similar context to handwriting is that of interactive drawing. The studies on interactive drawing were carried out with the objective of letting users feel the textures they expected by providing haptic feedback for drawing and stroking gestures [67, 71, 80, 81, 87-89]. However, when drawing, if the haptic feedback that is provided is not similar to real paper or other textures, it can confuse the user. Therefore, to improve usability, haptic feedback similar to the real texture must be provided [71, 87]. To this end, the temporal and spatial correlation of haptic feedback for dynamic gestures must be thoroughly investigated, and a technique that deals with delay is important [87]. Furthermore, in a study that provided haptic and auditory feedback together [81], the intensity of the haptic feedback was designed according to the drawing speed, and auditory feedback was also provided. When haptic feedback similar to a real texture was provided during the drawing process, user performance was significantly improved compared with the case of no haptic feedback, and the benefit increased as the difficulty of the test task increased.
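To make the idea of speed-dependent haptic intensity concrete, the following sketch derives the drawing speed from two consecutive stylus samples and maps it to a normalised vibration amplitude. The linear, clamped mapping, the direction of the mapping (faster strokes give stronger feedback), the `max_speed` value, and the function names are all assumptions for illustration; the source states only that intensity was designed according to drawing speed [81].

```python
import math


def drawing_speed(p0, p1, dt):
    """Speed in pixels per second between two stylus samples dt seconds apart."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return math.dist(p0, p1) / dt


def haptic_intensity(speed, max_speed=1000.0):
    """Map speed to an amplitude in [0, 1] with an assumed linear, clamped rule."""
    return max(0.0, min(1.0, speed / max_speed))


# Hypothetical samples: the tip moves 5 pixels in 10 ms.
speed = drawing_speed((0, 0), (3, 4), 0.01)
print(haptic_intensity(speed))
```

Any monotonic mapping could be substituted for the linear rule; the point of the sketch is only that the amplitude is recomputed from the stroke dynamics at each sample.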

4.1.4 Object manipulation

'Object manipulation' corresponds to dragging, dropping, scrolling, enlarging, and rotating tasks for files or objects in the graphical user interface (GUI), and studies were carried out to provide proper sensory feedback when performing object manipulation [67, 81, 83-85, 88-90]. These studies improved usability by providing haptic feedback. In the study of Kyung et al. [83], when performing a selection/movement task for an object such as an icon or file, haptic feedback is provided when the object is first selected and again when it is dropped; while the selected object is being moved, a haptic bit is generated immediately according to the movement of the object, so that the user can move the object one pixel unit at a time on the touchscreen. This solved the problem of the difficulty of accurately arranging the position of an object on a touchscreen, and increased the accuracy of object control. The haptic bit, which is provided during a movement task, can be applied to window scrolling and window size control in the same way. In the above study, different feedback was provided for closing a window and for maximising and minimising; when closing a window, a short vibration of 50 ms was provided to notify the user; and when maximising/minimising, the vibration was gradually strengthened or weakened for 100 ms, so that the user knew that the window was either maximised or minimised. If such haptic feedback is provided for object manipulation tasks, the GUI can be controlled more precisely and comfortably, and control speed also improves. The users' higher levels of satisfaction with object manipulation were due to the accuracy and comfort provided by the haptic feedback rather than to the control speed.
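The window feedback described above (a short 50 ms vibration for closing, and a vibration that is gradually strengthened or weakened over 100 ms for maximising/minimising) can be sketched as amplitude envelopes. This is only an illustrative sketch: the 10 ms sampling step, the normalised peak amplitude, the linear ramp, and the pairing of 'maximise' with strengthening and 'minimise' with weakening are assumptions, and `vibration_envelope` is a hypothetical name.

```python
def vibration_envelope(event, step_ms=10, peak=1.0):
    """Return (time_ms, amplitude) samples for a window event.

    Durations (50 ms and 100 ms) follow the description in the text;
    the step size, peak value, and linear ramp shape are assumptions.
    """
    if event == "close":
        # Short, constant vibration of 50 ms.
        return [(t, peak) for t in range(0, 50, step_ms)]
    if event in ("maximise", "minimise"):
        n = 100 // step_ms
        # Linear ramp up to the peak over 100 ms.
        ramp = [peak * (i + 1) / n for i in range(n)]
        if event == "minimise":
            # Assumed pairing: minimising weakens the vibration.
            ramp.reverse()
        return [(i * step_ms, a) for i, a in enumerate(ramp)]
    raise ValueError(f"unknown event: {event}")

close = vibration_envelope("close")        # five samples at constant amplitude
grow = vibration_envelope("maximise")      # amplitude rises over 100 ms
shrink = vibration_envelope("minimise")    # amplitude falls over 100 ms
```

Keeping the three events distinguishable by duration and envelope shape, rather than by amplitude alone, reflects the point that the user should be able to tell which action occurred without looking at the screen.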

4.1.5 Text editing

'Text editing' is another task performed in the GUI environment, and several studies were carried out [83, 85, 87]. Text editing corresponds with the 'copy and paste' or 'cut and paste' tasks performed by highlighting, dragging, and dropping text or by moving the cursor between texts. Because the usability of text editing functions is considerably lower in a touchscreen environment, they are generally not used [83]. However, Kyung et al. [83] facilitated more convenient text editing by providing the same haptic feedback as in object manipulation. When a text is highlighted using a stylus, haptic feedback is provided to notify the user that the corresponding text has been objectified, and when it is dragged and cut or pasted to a different location, haptic bits are generated according to the movement of the text, so that the user can finish the task accurately. When such haptic feedback was provided, the completion time of the test task was considerably shorter than without haptic feedback; as a result, accurate and fast text editing was facilitated on the touchscreen.

4.1.6 Information visualisation

The tasks discussed above occur regularly in a GUI environment. However, in addition to regular tasks, special tasks can be facilitated through combinations of stylus and sensory feedback. An example of such tasks is 'information visualisation', and studies on the subject have been carried out [91, 92]. Most users extract information from data visually, and if the data set has a large number of dimensions or is expressed in relation to another data set, the information that can be acquired visually alone is limited [92]. The above studies provided haptic feedback in the climate visualisation domain - i.e., a typical example of information visualisation in which it is difficult to acquire information only visually. In general, haptic feedback is known to increase the ability to discover the cause-and-effect relationship in multi-dimensional climate data and data learning [93]. Arasan et al. [92] conveyed vorticity, a climate-related form of data, through a haptic rotation effect, so that the rotational direction of air could be experienced; they also conveyed the rising and falling of wind levels through a haptic movement effect flowing up or down along the axis of the stylus, to help the user understand. The haptic rotation effect can be produced by switching a high-torque direct current (DC) motor on and off; however, to produce the haptic flow effect, multiple actuators have to be used. By changing the actuator's stimulation continuation time and the inter-stimulus onset interval (ISOI), the tactile illusion of continuous movement can be created [94]; and by changing the stimulation intensity of the actuators, a 'phantom' sensation can be produced, in which the user perceives an illusory vibrating actuator located between the real actuators [95]. Arasan et al. [92] performed a test for guessing rotational direction under two conditions: a visual channel only, and visual and tactile channels combined. In the results, no significant difference was observed in the rate of correct answers; however, user satisfaction was higher when haptic feedback was provided.
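The two tactile illusions mentioned above can be made concrete with a small sketch. It is not the model used in the cited studies [94, 95]: the linear weighting of intensities between two actuators and the equally spaced onsets are simplifying assumptions, and the function names are hypothetical.

```python
def phantom_amplitudes(position, target=1.0):
    """Split a target amplitude between two real actuators.

    position = 0.0 places the illusory actuator at actuator A,
    position = 1.0 places it at actuator B.
    Assumption: simple linear weighting, used here for illustration only.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie between 0 and 1")
    return (target * (1.0 - position), target * position)

def onset_times(n_actuators, isoi_ms):
    """Onset time (ms) of each actuator when fired in sequence, one ISOI apart."""
    if n_actuators < 1 or isoi_ms <= 0:
        raise ValueError("need at least one actuator and a positive ISOI")
    return [i * isoi_ms for i in range(n_actuators)]

# Illusory actuator midway between two real ones.
amp_a, amp_b = phantom_amplitudes(0.5)
# Four actuators along the stylus axis, fired 30 ms apart (hypothetical value).
schedule = onset_times(4, 30)
```

Changing `position` over time moves the perceived vibration between the two real actuators, while shortening or lengthening the ISOI changes how the sequence of onsets is perceived as movement along the stylus.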

4.1.7 Texture and image rendering

If proper sensory feedback is provided with the stylus, texture and image rendering is possible, as demonstrated by several studies [71, 78-80, 82, 84, 87-89, 96-100]. These studies not only showed the texture and image visually when interacting with the stylus on a touchscreen, but also provided haptic feedback so that the texture and image could be felt. For example, some studies facilitated the recognition of images and patterns through Braille-type haptic feedback from a tactile display [78, 79, 82]. A study was also carried out in which the blocking and interval pattern of object borders could be felt through haptic feedback [84]. A tactile display facilitated simple pattern recognition, and visually impaired people performed better than sighted people [78]. In addition, a study was carried out to recognise an image by performing haptic modelling with three elements: the height and the border of the image, and the rigidity of the virtual object on the image [98]. In this study, it was noted that, when interacting with an image of stone with large and irregular internal contours, the border contour should not be used to represent the contour of the image; further, additional haptic attributes such as texture and roughness have to be considered besides the image's height, border, and rigidity. To recognise the roughness of the texture, normal force - which is the pressure on a touchscreen - should be considered [97]. Taking these factors comprehensively into consideration, studies were performed on texture rendering with haptic feedback [96, 100]. Because a user anticipates the texture to be felt on the corresponding surface, and uses auditory, visual, and tactile elements as auxiliary measures, it is important to provide the haptic feedback expected by the user [101].

4.1.8 Direct touch

Studies were carried out on direct touch that allowed the user to touch an object directly with a stylus when using a virtual reality application in a two-dimensional (2D) touchscreen environment [102-105]. Only limited interactions can be performed on a touchscreen because the screen surface is touched, not the digital world behind the screen [106]. Such an indirect touch approach is generally used in haptic systems [74, 107]; however, it is not natural because the sources of visual information and haptic information do not coincide spatially [108]. Unlike the indirect touch approach, the tangible user interface is a concept that facilitates direct touch by blurring the boundary between the physical environment and cyber space [109]. This concept was proposed in the field of augmented reality to combine visual and tactile feedback [107]. Studies related to direct touch have primarily combined visual feedback and haptic feedback. Most studies used a length-adjustable stylus to provide haptic feedback that made the stylus feel as if it were going into the touchscreen; at the same time, a virtual stylus was rendered on the touchscreen as visual feedback, showing the real stylus as if it were inserted into the screen. Thus the user experiences the feeling of directly touching a virtual object [102, 103, 105]. Currently, according to studies on direct touch, the best method uses a length-adjustable stylus; however, the depth of the direct touching is limited owing to the limited length of the stylus. The above studies demonstrated excellent usability through haptic rendering of characteristics such as hardness and roughness when touching a virtual object.

4.1.9 Digital rubbing

Last, a study was carried out on digital rubbing [110]. Rubbing is a drawing technique similar to frottage, which reveals the texture hidden in the background by rubbing with a tool [111]. In the above digital rubbing study, the task of placing a piece of paper on an object and rubbing it with a pencil was extended to the digital domain. In other words, a digital image was displayed on a touchscreen, and after a piece of paper had been placed on the touchscreen, the rubbing task was facilitated simply by rubbing it with a stylus. For this task, when the stylus passed over the image, the stylus received the output signal, and the picture was drawn as the stylus was pushed away owing to the haptic feedback produced.

4.2 Task/activity for virtual environment

The studies on the development of the stylus system were carried out to provide sensory feedback in the virtual environment of a 3D CAD modelling domain. They were examined to see which sensory feedback was provided when performing 3D CAD modelling, and which effects were the result of the improvements to usability.

4.2.1 3D CAD modelling

Studies were carried out on 3D CAD modelling in the virtual environment [69, 107, 112]. These studies addressed the limitations that commonly appear when performing 3D modelling with a mouse and keyboard, which are two-degrees-of-freedom (2DOF) devices, by facilitating the ability to design freely with a stylus in 3D space. Fiorentino et al. [69] implemented the first stylus hardware to perform 3D CAD. It produced a rumble feedback when it collided with a virtual object in 3D composition and logo sketch tasks, so that depth information could be perceived, thereby improving its usability. In later studies, kinesthetic feedback was provided with the stylus so that a sensation of touching a virtual object was felt, thereby facilitating intuitive input and control [107, 112]. These studies improved usability by constructing a 3D haptic interaction system to perform 3D sketches freely without a virtual canvas.

4.3 Summary

The studies of the stylus system that improved usability by providing sensory feedback were carried out in two environments: touchscreen and virtual environment. The studies in the touchscreen environment were divided specifically into button clicking, handwriting, interactive drawing, object manipulation, text editing, information visualisation, texture and image rendering, direct touch, and digital rubbing. By contrast, the studies in the virtual environment were carried out only on 3D CAD modelling. The sensory feedback recommended for improved usability when performing each task/activity is summarised in Table 3.

5 DISCUSSION AND CONCLUSION

By summarising existing studies on improving the usability of styluses, sensory feedback that was suitable for certain tasks or activities was identified. It was applied to each task or activity that frequently occurs in the education and inspection domains. Applicability was judged by checking whether each task with sensory feedback was described in studies in the domain. In the education domain, button clicking, interactive drawing, object manipulation, text editing, and digital rubbing tasks were applicable. Button clicking can be applied when generating a radial menu that involves mathematical operations [44, 49] or selecting a mathematical operation of a radial menu after its generation. In this task, if haptic feedback similar to real button clicking is provided, user confidence increases because the occurrence or non-occurrence of button clicking can be identified without any visual cues. Interactive drawing can be applied to experience geological features [35] via the stylus. When attempting to feel the texture of geological features, haptic and auditory feedback similar to the real texture can be provided, taking the drawing speed into account. Object manipulation can be applied when controlling a small icon. Because icons on a tablet PC are small, they are difficult to control [40]. Therefore, to solve the problem of controlling icons, an immediate haptic bit is provided so that the user does not need to rely on visual cues only. Text editing can be applied when inserting an annotation in a document or editing a mathematical equation [34, 44]. When adding a highlight in a document or moving a character of a mathematical equation, if proper haptic feedback is provided, editing can be completed quickly. Digital rubbing can be applied when performing a gesture on a page that creates a new page [44]. When an existing page and a new page overlap, haptic feedback similar to the friction sensation of paper can be provided so that a feeling of handling real paper is given.

In the inspection domain, button clicking, handwriting, direct touch, and texture and image rendering are applicable. Button clicking can be applied to tapping tests for the diagnosis of Parkinson's disease [55]. When tapping a button, if proper haptic feedback is provided, the inspectee's errors in button tapping will be reduced and a more accurate inspection will be possible. Handwriting and direct touch can be applied to TTCT, which is used in testing creativity. When TTCT is performed using a stylus, a physical distance discrepancy problem occurs owing to the use of a glossy screen and the absence of the friction found between paper and a physical pen [50]. This problem can be solved by appropriately providing haptic and visual feedback (e.g., with a virtual stylus) that takes the friction and physical distance into account. Texture and image rendering can be applied when performing a digital pain drawing test [53]. Because this test is performed by shading a body chart, more accurate test results can be obtained if appropriate haptic feedback is provided at the borders so that the body parts can be easily distinguished.

In this study, a literature review of stylus-related studies was carried out. Studies investigating the stylus used as an input device on digital devices are mainly classified into those that use the stylus in certain domains, and those that focus on improving the usability of the stylus. By analysing 28 studies using the stylus in certain domains, the domains were classified into education, inspection, security, and document. The activities and affordances of the stylus corresponding with each domain were summarised, and implications were identified. The results obtained by summarising the roles and characteristics of the stylus in each domain could be useful data when extending the stylus into new fields. Through the analysis of 30 studies that dealt with improving stylus usability, the sensory feedback used for such improvement of the stylus was discussed. Proper sensory feedback was summarised systematically according to the environments and tasks in which styluses are used. The summary could be a guide when attempting to improve the usability of the stylus. In addition, based on the results, applicable sensory feedback was proposed for the education and inspection domains by considering the tasks or activities. Various applicable combinations were derived; and we believe that these stylus-related studies and developments will be a valuable reference when developing new applications in the future.

ACKNOWLEDGEMENTS

This work was partially supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2020R1F1A1049180).

REFERENCES

[1] Holzinger, A., Searle, G., Peischl, B. & Debevc, M. 2012. An answer to "Who needs a stylus?" On handwriting recognition on mobile devices. In E-Business and Telecommunications, 314, (pp. 156-167). Berlin, Heidelberg: Springer.

[2] Cockburn, A., Ahlström, D. & Gutwin, C. 2012. Understanding performance in touch selections: Tap, drag and radial pointing drag with finger, stylus and mouse. International Journal of Human-Computer Studies, 70(3), 218-233.

[3] Zabramski, S. 2011. Quickly touched: Shape replication with use of mouse, pen- and touch-input. Proceedings of the Conference: Interfejs uzytkownika - Kansei w praktyce, Warszawa 2011 (pp. 134-141). Warsaw: Wydawnictwo PJWSTK.

[4] Zabramski, S. & Stuerzlinger, W. 2012. The effect of shape properties on ad-hoc shape replication with mouse, pen, and touch input. In MindTrek '12: Proceeding of the 16th International Academic MindTrek Conference (pp. 275-278). New York: ACM Digital Library.

[5] Zabramski, S., Shrestha, S. & Stuerzlinger, W. 2013. Easy vs. tricky: The shape effect in tracing, selecting, and steering with mouse, stylus, and touch. In Academic MindTrek '13: Proceedings of International Conference on Making Sense of Converging Media (pp. 99-103). New York: ACM Digital Library.

[6] Lee, S. & Zhai, S. 2009. The performance of touch screen soft buttons. In CHI '09: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 309-318). New York: ACM Digital Library.

[7] Arif, A.S. & Sylla, C. 2013. A comparative evaluation of touch and pen gestures for adult and child users. In IDC '13: Proceedings of the 12th International Conference on Interaction Design and Children (pp. 392-395). New York: ACM Digital Library.

[8] Tu, H., Ren, X. & Zhai, S. 2015. Differences and similarities between finger and pen stroke gestures on stationary and mobile devices. ACM Transactions on Computer-Human Interaction (TOCHI), 22(5).

[9] Ahn, J., Ahn, J., Lee, J.I. & Kim, K. 2016. Comparison of soft keyboard types for stylus pen and finger-based interaction on Tablet PCs. Journal of the Korean Institute of Industrial Engineers, 42(1), 57-64.

[10] Tullis, T. & Albert, B. 2013. Measuring the user experience: Collecting, analyzing, and presenting usability metrics 2nd ed. Burlington MASS: Morgan Kaufmann Publishers.

[11] ISO. ISO 9241-11:2018: Ergonomics of human-system interaction-part 11: Usability: definitions and concepts. Geneva, Switzerland: International Organization for Standardization.

[12] Riche, Y., Riche, N.H., Hinckley, K., Panabaker, S., Fuelling, S. & Williams, S. 2017. As we may ink? Learning from everyday analog pen use to improve digital ink experiences. In CHI '17: CHI Conference on Human Factors in Computing Systems (pp. 3241-3253). New York: ACM Digital Library.

[13] Taveira, A.D. & Choi, S.D. 2009. Review study of computer input devices and older users. Intl. Journal of Human-Computer Interaction, 25(5), 455-474.

[14] Bruno Garza, J.L. & Young, J.G. 2015. A literature review of the effects of computer input device design on biomechanical loading and musculoskeletal outcomes during computer work. Work, 52(2), 217-230.

[15] Petrie, H. & Darzentas, J.S. 2017. Older people's use of tablets and smartphones: A review of research. In International Conference on Universal Access in Human-Computer Interaction (pp. 85-104). Springer, Cham.

[16] Wolfe, J. 2002. Annotation technologies: A software and research review. Computers and Composition, 19(4), 471-497.

[17] Kudale, A.E. & Wanjale, K. 2015. Human computer interaction model based virtual whiteboard: A review. International Journal of Computer Applications, 975, 8887.

[18] Punchoojit, L. & Hongwarittorrn, N. 2017. Usability studies on mobile user interface design patterns: A systematic literature review. Advances in Human-Computer Interaction, 2017.

[19] Orphanides, A.K. & Nam, C.S. 2017. Touchscreen interfaces in context: A systematic review of research into touchscreens across settings, populations, and implementations. Applied Ergonomics, 61, 116-143.

[20] Wigdor, D. 2011. 57.4: Invited paper: The breadth-depth dichotomy: Opportunities and crises in expanding sensing capabilities. In SID Symposium Digest of Technical Papers (Vol. 42, No. 1, pp. 845-848). Oxford, UK: Blackwell Publishing Ltd.

[21] Hinckley, K., Pahud, M., Benko, H., Irani, P., Guimbretière, F., Gavriliu, M., 'Anthony' Chen, X., Matulic, F., Buxton, W. & Wilson, A. (2014, October). Sensing techniques for tablet+stylus interaction. In Proceedings of the 27th annual ACM symposium on User interface software and technology (pp. 605-614). ACM.

[22] Jahagirdar, K., Raleigh, E., Alnizami, H., Kao, K. & Corriveau, P.J. (2016, July). A comprehensive stylus evaluation methodology and design guidelines. In International Conference of Design, User Experience, and Usability (pp. 424-433). Cham: Springer.

[23] Mohr, A., Xu, D.Y. & Read, J. 2010. Evaluation of digital drawing devices with primary school children: A pilot study. In Proc. of ICL (pp. 830-833). Hasselt.

[24] Park, E., Del Pobil, A. & Kwon, S. 2015. Usability of the stylus pen in mobile electronic documentation. Electronics, 4(4), 922-932.

[25] Forlines, C. & Balakrishnan, R. (2008, April). Evaluating tactile feedback and direct vs. indirect stylus input in pointing and crossing selection tasks. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1563-1572). ACM.

[26] Ng, A., Annett, M., Dietz, P., Gupta, A. & Bischof, W.F. (2014, April). In the blink of an eye: investigating latency perception during stylus interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1103-1112). ACM.

[27] Matulic, F. & Norrie, M. (2012, November). Empirical evaluation of uni- and bimodal pen and touch interaction properties on digital tabletops. In Proceedings of the 2012 ACM international conference on Interactive tabletops and surfaces (pp. 143-152). ACM.

[28] Havgar, P.A., Schwitalla, T., Valen, J., Eide, A.W. & Reutz, B.A. (2014, October). Usability on a shareable interface in a multiuser setting. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction: Fun, Fast, Foundational (pp. 561-564). ACM.

[29] Annett, M., Gupta, A. & Bischof, W.F. 2014. Exploring and understanding unintended touch during direct pen interaction. ACM Transactions on Computer-Human Interaction (TOCHI), 21(5), 28.

[30] Kitani, A., Kimura, T. & Nakatani, T. 2017. Toward the reduction of incorrect drawn ink retrieval. Human-centric Computing and Information Sciences, 7(18).

[31] Norman, D.A. 1988. The psychology of everyday things. NY: Basic Books.

[32] Isabwe, G.M.N. (2012, June). Investigating the usability of iPad mobile tablet in formative assessment of a mathematics course. In Information Society (i-Society), 2012 International Conference on (pp. 39-44). IEEE.

[33] Runge, P. 2017. Technology in IVC classes. Journal on Empowering Teaching Excellence, 1(1), 50-62.

[34] Cao, H. 2014. A tablet based learning environment. arXiv preprint arXiv:1403.6006.

[35] Santamarta, J.C., Hernández-Gutiérrez, L.E., Tomás, R., Cano, M., Rodríguez-Martín, J. & Arraiza, M.P. 2015. Use of tablet PCs in higher education: A new strategy for training engineers in European bachelors and masters programmes. Procedia-Social and Behavioral Sciences, 191, 2753-2757.

[36] Van Hove, S., Vanderhoven, E. & Cornillie, F. 2017. The tablet for second language vocabulary learning: Keyboard, stylus or multiple choice/La tablet para el aprendizaje de vocabulario en segundas lenguas: teclado, lápiz digital u opción multiple. Comunicar, 25(1), 53-62.

[37] Maclaren, P., Wilson, D.I. & Klymchuk, S. 2018. Making the point: The place of gesture and annotation in teaching STEM subjects using pen-enabled Tablet PCs. Teaching Mathematics and its Applications: An International Journal of the IMA, 37(1), 17-36.

[38] Galligan, L., Loch, B., McDonald, C. & Taylor, J.A. 2010. The use of tablet and related technologies in mathematics teaching. Australian Senior Mathematics Journal, 24(1), 38-51.

[39] Fister, K.R. & McCarthy, M.L. 2008. Mathematics instruction and the tablet PC. International Journal of Mathematical Education in Science and Technology, 39(3), 285-292.

[40] Percival, J. & Claydon, T. 2015. A study of student and instructor perceptions of tablet PCs in higher education contexts. Higher Education In Transformation, 250-264.

[41] Cao, H. & Kot, A.C. (2009, April). Lossless data hiding for electronic ink. In Acoustics, Speech and Signal Processing, 2009. ICASSP 2009. IEEE International Conference on (pp. 1381-1384). IEEE.

[42] Cao, H. & Kot, A.C. 2010. Lossless data embedding in electronic inks. IEEE Transactions on Information Forensics and Security, 5(2), 314-323.

[43] Jelemenská, K., Cicák, P. & Koine, P. (2010, July). The pen-based technology towards the lecture improvement. In Proc. of International Conference on Education and New Learning Technologies (EDULEARN 2010) (pp. 6703-6712).

[44] Zeleznik, R., Bragdon, A., Adeputra, F. & Ko, H.S. (2010, October). Hands-on math: A page-based multi-touch and pen desktop for technical work and problem solving. In Proceedings of the 23nd annual ACM symposium on User interface software and technology (pp. 17-26). ACM.

[45] Fujii, S., Onishi, R. & Yoshida, K. 2011. Development of e-learning system using handwriting on screen. Knowledge-Based and Intelligent Information and Engineering Systems, 6883, 144-152.

[46] Nelson, M.K. 2015. Teaching educators how to integrate tablet PCs into their classrooms. 20th Annual Technology, Colleges, and Community Worldwide Online Conference.

[47] Nye, S.B. 2010. Tablet PCs: A physical educator's new clipboard. Strategies, 23(4), 21-23.

[48] Lau, A.P.T. & Ho, S.L. (2012, August). Using iPad 2 with note-taking apps to enhance traditional blackboard-style pedagogy for mathematics-heavy subjects: A case study. In Proceedings of IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE) 2012 (pp. H3C-4). IEEE.

[49] Cossairt, T.J. & LaViola, J.J. Jr. (2012, June). SetPad: a sketch-based tool for exploring discrete math set problems. In Proceedings of the International Symposium on Sketch-Based Interfaces and Modeling (pp. 47-56). Eurographics Association.

[50] Zabramski, S. & Neelakannan, S. (2011, October). Paper equals screen: a comparison of a pen-based figural creativity test in computerized and paper form. In Proceedings of the Second Conference on Creativity and Innovation in Design (pp. 47-50). ACM.

[51] Zabramski, S., Gkouskos, D. & Lind, M. 2011. A comparative evaluation of mouse, pen- and touch-input in computerized version of the Torrance tests of creative thinking. In DESIRE '11 Conference: Creativity and Innovation in Design, October 19-21, 2011, Eindhoven, Netherlands (pp. 383-386). The Association for Computing Machinery.

[52] Kim, H., Cho, Y.S. & Do, E.Y.L. (2011, July). Using pen-based computing in technology for health. In International Conference on Human-Computer Interaction (pp. 192-201). Springer, Berlin, Heidelberg.

[53] Cruder, C., Falla, D., Mangili, F., Azzimonti, L., Araújo, L.S., Williamon, A. & Barbero, M. 2018. Profiling the location and extent of musicians' pain using digital pain drawings. Pain Practice, 18(1), 53-66.

[54] Surangsrirat, D. & Thanawattano, C. (2012, March). Android application for spiral analysis in Parkinson's Disease. In 2012 Proceedings of IEEE Southeastcon (pp. 1-6). IEEE.

[55] Westin, J., Dougherty, M., Nyholm, D. & Groth, T. 2010. A home environment test battery for status assessment in patients with advanced Parkinson's disease. Computer Methods and Programs in Biomedicine, 98(1), 27-35.

[56] Kim, K.H. 2006. Can we trust creativity tests? A review of the Torrance tests of creative thinking (TTCT). Creativity Research Journal, 18(1), 3-14.

[57] Ismail, Z., Rajji, T.K. & Shulman, K.I. 2010. Brief cognitive screening instruments: An update. International Journal of Geriatric Psychiatry, 25(2), 111-120.

[58] Robertson, J. & Guest, R. 2015. A feature based comparison of pen and swipe based signature characteristics. Human Movement Science, 43, 169-182.

[59] Shridhar, M., Houle, G., Bakker, R. & Kimura, F. (2006, October). Real-time feature-based automatic signature verification. In Tenth International Workshop on Frontiers in Handwriting Recognition, Université de Rennes 1, La Baule (France).

[60] Subpratatsavee, P., Pudtuan, P., Charoensuk, J., Sondee, T. & Vejchasetthanon, T. (2014, May). The authentication of handwriting signature by using motion detection and qr code. In Information Science and Applications (ICISA), 2014 International Conference on (pp. 1-4). IEEE.

[61] Vera-Rodriguez, R., Tolosana, R., Ortega-Garcia, J. & Fierrez, J. (2015, March). e-BioSign: Stylus-and finger- input multi-device database for dynamic signature recognition. In Bio-metrics and Forensics (IWBF), 2015 International Workshop on (pp. 1-6). IEEE. [ Links ]

[62] Suh, J. & Bae, S.M. 2015. Veri-Pen: Digital pen system for authenticating digital authorship. In Proceedings of International Design Conference of KSDS and ADADA with Cumulus, 82-85. [ Links ]

[63] Costagliola, G., De Rosa, M. & Fuccella, V. 2017. The design and evaluation of a text editing technique for stylus- based tablets. In Proceedings-DMSVLSS 2017: 23rd International Conference on Distributed Multimedia Systems, Visual Languages and Sentient Systems. [ Links ]

[64] Matulic, F. & Norrie, M.C. (2013, October). Pen and touch gestural environment for document editing on interactive tabletops. In Proceedings of the 2013 ACM international conference on Interactive tabletops and surfaces (pp. 41-50). ACM. [ Links ]

[65] Ueda, K. & Murota, M. 2010. Presentation support software using mobile device for interactive lectures. In The 18th International Conference on Computers in Education (p. 28). [ Links ]

[66] Lorah, E.R. & Parnell, A. 2014. The acquisition of letter writing using a portable multi-media player in young children with developmental disabilities. Journal of Developmental and Physical Disabilities, 26(6), 655-666. [ Links ]

[67] Wintergerst, G., Jagodzinski, R., Hemmert, F., Müller, A. & Joost, G. (2010, July). Reflective haptics: enhancing stylus-based interactions on touch screens. In International Confer-ence on Human Haptic Sensing and Touch Enabled Computer Applications (pp. 360-366). Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-14064-8_52 [ Links ]

[68] Wigdor, D., Williams, S., Cronin, M., Levy, R., White, K., Mazeev, M. & Benko, H. (2009, October). Ripples: utilizing per-contact visualizations to improve user interaction with touch displays. In Proceedings of the 22nd annual ACM symposium on User interface software and technology (pp. 3-12). ACM. https://doi.org/10.1145/1622176.1622180 [ Links ]

[69] Fiorentino, M., Uva, A.E. & Monno, G. 2005. The Senstylus: A novel rumble-feedback pen device for CAD application in Virtual Reality. WSCG '2005: Full Papers: The 13-th International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision 2005 in co-operation with EUROGRAPHICS: University of West Bohemia, Plzen, Czech Republic, 131-138. [ Links ]

[70] Iqbal, S.T. & Horvitz, E. (2010, February). Notifications and awareness: A field study of alert usage and preferences. In Proceedings of the 2010 ACM conference on Computer supported cooperative work (pp. 27-30). ACM. https://doi.org/10.1145/1718918.1718926

[71] Boscaglia, N.S., Gaudio, L.A. & Ribeiro, M.R. (2011, January). A low cost prototype for an optical and haptic pen. In Biosignals and Biorobotics Conference (BRC), 2011 ISSNIP (pp. 1-4). IEEE. https://doi.org/10.1109/BRC.2011.5740660

[72] Minsky, M.D.R. 1995. Computational haptics: The sandpaper system for synthesizing texture for a force-feedback display. (Doctoral dissertation, Massachusetts Institute of Technology).

[73] Ando, H., Miki, T., Inami, M. & Maeda, T. (2002, July). The nail-mounted tactile display for the behavior modeling. In ACM SIGGRAPH 2002 conference abstracts and applications (pp. 264-264). ACM. https://doi.org/10.1145/1242073.1242277

[74] Massie, T.H. 1996. Initial haptic explorations with the phantom: Virtual touch through point interaction. (Doctoral dissertation, Massachusetts Institute of Technology).

[75] Buxton, W., Hill, R. & Rowley, P. 1985. Issues and techniques in touch-sensitive tablet input. ACM SIGGRAPH Computer Graphics, 19(3), 215-224. https://doi.org/10.1145/325165.325239

[76] Johansson, R.S. & Vallbo, A.B. 1979. Tactile sensibility in the human hand: Relative and absolute densities of four types of mechanoreceptive units in glabrous skin. The Journal of Physiology, 286(1), 283-300. https://doi.org/10.1113/jphysiol.1979.sp012619

[77] Choi, S. & Tan, H.Z. (2005, July). Toward realistic haptic rendering of surface textures. In ACM SIGGRAPH 2005 Courses (p. 125). ACM. https://doi.org/10.1145/1198555.1198612

[78] Kyung, K.U., Lee, J.Y. & Park, J. 2008. Haptic stylus and empirical studies on braille, button, and texture display. Journal of Biomedicine and Biotechnology, 2008, 369651.

[79] Kyung, K.U. & Park, J.S. (2007, March). Ubi-Pen: Development of a compact tactile display module and its application to a haptic stylus. In EuroHaptics Conference, 2007 and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. World Haptics 2007. Second Joint (pp. 109-114). IEEE. https://doi.org/10.1109/WHC.2007.121

[80] Kyung, K.U., Lee, J.Y. & Park, J. (2007, October). Design and applications of a pen-like haptic interface with texture and vibrotactile display. In Frontiers in the Convergence of Bioscience and Information Technologies, 2007. FBIT 2007 (pp. 543-548). IEEE. https://doi.org/10.1109/FBIT.2007.92

[81] Kyung, K.U. & Lee, J.Y. (2008, August). wUbi-Pen: windows graphical user interface interacting with haptic feedback stylus. In ACM SIGGRAPH 2008 new tech demos (p. 42). ACM. https://doi.org/10.1145/1401615.1401657

[82] Kyung, K.U. & Lee, J.Y. (2009). Ubi-Pen: A haptic interface with texture and vibrotactile display. IEEE Computer Graphics and Applications, 29(1). https://doi.org/10.1109/MCG.2009.17

[83] Kyung, K.U., Lee, J.Y. & Srinivasan, M.A. (2009, March). Precise manipulation of GUI on a touch screen with haptic cues. In EuroHaptics conference, 2009 and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. World Haptics 2009. Third Joint (pp. 202-207). IEEE. https://doi.org/10.1109/WHC.2009.4810865

[84] Park, S.M., Lee, K. & Kyung, K.U. (2011, January). A new stylus for touchscreen devices. In Consumer Electronics (ICCE), 2011 IEEE International Conference on (pp. 491-492). IEEE. https://doi.org/10.1109/ICCE.2011.5722700

[85] Kyung, K.U., Lee, J.Y., Park, J. & Srinivasan, M.A. 2012. wUbi-pen: Sensory feedback stylus interacting with graphical user interface. PRESENCE: Teleoperators and Virtual Environments, 21(2), 142-155. https://doi.org/10.1162/PRES_a_00088

[86] Lee, J.C., Dietz, P.H., Leigh, D., Yerazunis, W.S. & Hudson, S.E. (2004, October). Haptic pen: a tactile feedback stylus for touch screens. In Proceedings of the 17th annual ACM symposium on User interface software and technology (pp. 291-294). ACM. https://doi.org/10.1145/1029632.1029682

[87] Poupyrev, I., Okabe, M. & Maruyama, S. (2004, April). Haptic feedback for pen computing: directions and strategies. In CHI'04 extended abstracts on Human factors in computing systems (pp. 1309-1312). ACM. https://doi.org/10.1145/985921.986051

[88] Wang, Q., Ren, X. & Sun, X. (2016, November). EV-pen: an electrovibration haptic feedback pen for touchscreens. In SIGGRAPH ASIA 2016 Emerging Technologies (p. 8). ACM. https://doi.org/10.1145/2988240.2988241

[89] Wang, Q., Ren, X., Sarcar, S. & Sun, X. (2016, November). EV-Pen: Leveraging Electrovibration Haptic Feedback in Pen Interaction. In Proceedings of the 2016 ACM on Interactive Surfaces and Spaces (pp. 57-66). ACM. https://doi.org/10.1145/2992154.2992161

[90] Wang, Q., Ren, X. & Sun, X. (2017, May). Enhancing Pen-based Interaction using Electrovibration and Vibration Haptic Feedback. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 3746-3750). ACM. https://doi.org/10.1145/3025453.3025555

[91] Arasan, A., Basdogan, C. & Sezgin, T.M. (2013, April). Haptic stylus with inertial and vibro-tactile feedback. In World Haptics Conference (WHC), 2013 (pp. 425-430). IEEE. https://doi.org/10.1109/WHC.2013.6548446

[92] Arasan, A., Basdogan, C. & Sezgin, T.M. 2016. HaptiStylus: A novel stylus for conveying movement and rotational torque effects. IEEE Computer Graphics and Applications, 36(1), 30-41. https://doi.org/10.1109/MCG.2015.48

[93] Yannier, N., Basdogan, C., Tasiran, S. & Sen, O.L. (2008). Using haptics to convey cause-and-effect relations in climate visualization. IEEE Transactions on Haptics, 1(2), 130-141. https://doi.org/10.1109/TOH.2008.16

[94] Sherrick, C.E. & Rogers, R. 1966. Apparent haptic movement. Perception & Psychophysics, 1(3), 175-180. https://doi.org/10.3758/BF03210054

[95] Alles, D.S. 1970. Information transmission by phantom sensations. IEEE Transactions on Man-machine Systems, 11(1), 85-91. https://doi.org/10.1109/TMMS.1970.299967

[96] Romano, J.M. & Kuchenbecker, K.J. 2012. Creating realistic virtual textures from contact acceleration data. IEEE Transactions on Haptics, 5(2), 109-119. https://doi.org/10.1109/TOH.2011.38

[97] Deng, P., Wu, J. & Zhong, X. (2016, July). The roughness display with pen-like tactile device for touchscreen device. In International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (pp. 165-176). Springer International Publishing. https://doi.org/10.1007/978-3-319-42324-1_17

[98] Chen, D., Song, A. & Tian, L. (2015, October). A novel miniature multi-mode haptic pen for image interaction on mobile terminal. In Haptic, Audio and Visual Environments and Games (HAVE), 2015 IEEE International Symposium on (pp. 1-6). IEEE. https://doi.org/10.1109/HAVE.2015.7359445

[99] Takeda, Y. & Sawada, H. (2013, November). Tactile actuators using SMA micro-wires and the generation of texture sensation from images. In Intelligent Robots and Systems (IROS), 2013 IEEE/RSJ International Conference on (pp. 2017-2022). IEEE. https://doi.org/10.1109/IROS.2013.6696625

[100] Culbertson, H., Unwin, J. & Kuchenbecker, K.J. 2014. Modeling and rendering realistic textures from unconstrained tool-surface interactions. IEEE Transactions on Haptics, 7(3), 381-393. https://doi.org/10.1109/TOH.2014.2316797

[101] Rocchesso, D., Delle Monache, S. & Papetti, S. 2016. Multisensory texture exploration at the tip of the pen. International Journal of Human-Computer Studies, 85, 47-56. https://doi.org/10.1016/j.ijhcs.2015.07.005

[102] Withana, A., Kondo, M., Makino, Y., Kakehi, G., Sugimoto, M. & Inami, M. 2010. ImpAct: Immersive haptic stylus to enable direct touch and manipulation for surface computing. Computers in Entertainment (CIE), 8(2), 9. https://doi.org/10.1145/1899687.1899691

[103] Nagasaka, S., Uranishi, Y., Yoshimoto, S., Imura, M. & Oshiro, O. (2014, November). Haptylus: haptic stylus for interaction with virtual objects behind a touch screen. In SIGGRAPH Asia 2014 Emerging Technologies (p. 9). ACM. https://doi.org/10.1145/2669047.2669054

[104] Takagi, M., Arata, J., Sano, A. & Fujimoto, H. (2011, December). A new encounter type haptic device with an actively driven pen-tablet LCD panel. In Robotics and Biomimetics (ROBIO), 2011 IEEE International Conference on (pp. 2453-2458). IEEE. https://doi.org/10.1109/ROBIO.2011.6181673

[105] Lee, J. & Ishii, H. (2010, April). Beyond: Collapsible tools and gestures for computational design. In CHI'10 Extended Abstracts on Human Factors in Computing Systems (pp. 3931-3936). ACM. https://doi.org/10.1145/1753846.1754081

[106] Wang, F. & Ren, X. (2009, April). Empirical evaluation for finger input properties in multi-touch interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1063-1072). ACM. https://doi.org/10.1145/1518701.1518864

[107] Kamuro, S., Minamizawa, K., Kawakami, N. & Tachi, S. (2009, September). Ungrounded kinesthetic pen for haptic interaction with virtual environments. In Robot and Human Interactive Communication, 2009. RO-MAN 2009. The 18th IEEE International Symposium on (pp. 436-441). IEEE. https://doi.org/10.1109/ROMAN.2009.5326217

[108] Minsky, M., Ming, O.Y., Steele, O., Brooks, F.P. Jr, & Behensky, M. (1990, February). Feeling and seeing: Issues in force display. In ACM SIGGRAPH Computer Graphics (Vol. 24, No. 2, pp. 235-241). ACM. https://doi.org/10.1145/146022.146092

[109] Ishii, H. & Ullmer, B. (1997, March). Tangible bits: Towards seamless interfaces between people, bits and atoms. In Proceedings of the ACM SIGCHI Conference on Human factors in computing systems (pp. 234-241). ACM. https://doi.org/10.1145/258549.258715

[110] Lee, W., Pak, J., Kim, S., Kim, H. & Lee, G. (2007, August). TransPen & MimeoPad: A playful interface for transferring a graphic image to paper by digital rubbing. In ACM SIGGRAPH 2007 emerging technologies (p. 23). ACM. https://doi.org/10.1145/1278280.1278304

[111] Simpson, I. & Wood, L. 1987. The encyclopedia of drawing techniques. London: Headline.

[112] Kamuro, S., Minamizawa, K. & Tachi, S. (2011, June). An ungrounded pen-shaped kinesthetic display: Device construction and applications. In World Haptics Conference (WHC), 2011 IEEE (pp. 557-562). IEEE. https://doi.org/10.1109/WHC.2011.5945546

Submitted by authors 16 Dec 2019

Accepted for publication 11 Dec 2020

Available online 28 May 2021

# J. Sim and Y. Yim should be considered co-first authors

* Corresponding author: kyungdoh.kim@hongik.ac.kr