South African Journal of Industrial Engineering

On-line version ISSN 2224-7890

Print version ISSN 1012-277X

S. Afr. J. Ind. Eng. vol.30 no.3 Pretoria Nov. 2019

http://dx.doi.org/10.7166/30-3-2241

SPECIAL EDITION

A Patient-centric Six-sigma Decision Support System Framework for Continuous Quality Improvement in Clinics

S.N. Hlongwane (I); C.N. Ngongoni (I); S.S. Grobbelaar (I, II, *)

(I) Department of Industrial Engineering, Stellenbosch University, South Africa

(II) DST-NRF CoE in Scientometrics and Science, Technology and Innovation Policy, Stellenbosch University, South Africa

ABSTRACT

Primary health care facilities are widely regarded as the backbone of the South African healthcare system. For this reason, formalised standards such as the 'ideal clinic' and 'national core standards' dictate expected service levels for clinics. Although this is a big step towards the improvement of service delivery at the facilities, the level of uptake of and adherence to these standards is concerning. Service quality plays a huge role in the level of patient satisfaction, and emphasis is placed on the features of quality that are of importance to the patient. To this end, the focus on the patient is an important dimension in healthcare quality management in order to improve the service quality in healthcare facilities. This article provides an overview of quality and how it is managed in the context of clinics in South Africa. It outlines the gaps, aligned with how well quality is managed, from a patient perspective. The paper proposes a decision support framework aimed at continuous improvement of quality in clinics. The tool was developed using the Six Sigma methodology, complemented by service quality assessment instruments. The structure of the tool provides an integrated systematic approach that can assist the healthcare decision-maker in tracking the continuous improvement of processes and activities in clinics. The tool also takes the first step towards digitising a typical paper-based system.

OPSOMMING

Primêre gesondheidsorgfasiliteite word wyd beskou as die ruggraat van die Suid-Afrikaanse gesondheidsorgstelsel. Om hierdie rede word formele standaarde deur die 'ideale kliniek' en 'Nasionale kernstandaarde' bepaal. Alhoewel dit 'n groot stap is vir die verbetering van dienslewering by die fasiliteite, is die vlak van opname en nakoming van hierdie standaarde kommerwekkend. Diensgehalte speel 'n groot rol in die vlak van pasiëntbevrediging, en klem word geplaas op die eienskappe van kwaliteit wat van belang is vir die pasiënt. Vir hierdie doel is die fokus op die pasiënt 'n belangrike dimensie in gesondheidsorgkwaliteitsbestuur ten einde die diensgehalte in gesondheidsorgfasiliteite te verbeter. Hierdie artikel bied 'n oorsig oor kwaliteit en hoe dit in die konteks van klinieke in Suid-Afrika bestuur word. Dit beskryf die gapings van hoe goed kwaliteit bestuur word, uit 'n pasiëntperspektief. Die artikel stel 'n besluitsteunraamwerk voor wat op deurlopende verbetering van gehalte in klinieke gemik is. Die instrument is ontwikkel met behulp van die Ses-Sigma metodologie, aangevul deur dienskwaliteit assesseringsinstrumente. Die struktuur van die instrument bied 'n geïntegreerde sistematiese benadering wat die gesondheidsorgbesluitnemer kan help om die deurlopende verbetering van prosesse en aktiwiteite in klinieke te monitor. Die instrument neem ook die eerste stap in die rigting van digitalisering van 'n tipiese papiergebaseerde stelsel.

1 INTRODUCTION

In 2013 the National Department of Health (NDoH) initiated the 'ideal clinic' (IC) initiative as a way of systematically reducing the inefficiencies in primary health care (PHC) facilities. By the end of 2018, 1,507 facilities had qualified as ICs, with this milestone being attributed to a collaboration between the NDoH and the National Treasury in resolving supply chain anomalies around equipment, essential supplies, and infrastructure [1]. Nevertheless, attaining IC status does not guarantee that the standard will be sustained; maintenance and improvement of quality in the service delivery process at clinics is thus important for the overall strengthening of health systems.

When it comes to the healthcare sector, service quality consists of numerous characteristics that shape the experience of the care received by the patient. Service quality is a measure of an organisation's ability to meet and potentially exceed the expectations of the customer, and is formulated from personal needs, past service experience, and word-of-mouth [2]. Contextually, patients assess the healthcare that they receive by evaluating dimensions that reflect what they personally value. This has given rise to patient-centric service delivery, resulting from the increasing quality consciousness of patients [3], [4]. Patient-centred care is an important aspect of quality service, as it is a method that involves placing the patient first by providing care that is respectful and responsive to their needs and preferences [5].

A series of distinct gaps are created when a patient's perception of the delivered service does not meet expectations. The gaps between perception and expectation that occur in service delivery institutions, adapted from Ramseook-Munhurrun [2], are listed as:

Gap 1. The difference between patient expectations and management perceptions of patient expectations.

Gap 2. The difference between management perceptions of patient expectations and service quality specifications.

Gap 3. The difference between service quality specifications and the service delivered.

Gap 4. The difference between service delivery and what is communicated about the service to patients.

2 PURPOSE OF THE PAPER

The primary objective of this paper is to present a generic decision support system (DSS) framework to guide the continuous improvement of quality in clinics. To develop the framework effectively, it is necessary to identify the aspects that are required for continuous improvement in healthcare, as well as the dynamics of the South African health system. Since the geographical context of the tool is South Africa, the standards aligned with South African PHC will be outlined and integrated into the tool. The resulting DSS framework consists of different criteria to evaluate service quality dimensions in ongoing clinic projects. The main aim of the framework is to provide an integrated referral point for PHC quality management. This offers a base for the digitisation of a paper-centric process and allows the development of assistive tools (such as mobile applications) that can reduce the workload of the health workforce in the South African public healthcare system. The framework is intended to serve as a tool that can assist clinics in managing quality through continuously improving processes and activities to ensure that the decisions made are in the best interest of the patients. It ultimately encourages the decision-maker to consider the various factors that influence quality management in order to improve service quality. The framework consists of five phases, which reflect a systematic approach. The processes are generic and can be applied to various clinics in South Africa.

3 A BRIEF LITERATURE REVIEW

3.1 Continuous improvement in South African healthcare

There is a widespread perception that the service in public healthcare facilities in South Africa has deteriorated over the years. In 2009, public healthcare in South Africa was characterised as being inefficient and ineffective [6]. Facilities that lack the human resources to provide care for the growing population cause strenuous working conditions for workers; this, in turn, is shown to have a positive correlation with job dissatisfaction, and also contributes to the high turnover and absenteeism of employees, as shown in Table 1 [6], [7]. A failure to establish standards and norms in these healthcare facilities contributes to poor service delivery [8]. The level of service dissatisfaction is more prominent in government health facilities than in private facilities. Areas of patient dissatisfaction include: long waiting times, staff attitudes, medicine stock-outs, and staff shortages [9]. This inadvertently affects quality management in healthcare institutions, as the satisfaction of the doctors, nurses, and support staff and supply chain actors directly contributes to the service delivered to patients [10]. The low level of satisfaction serves as an indicator for taking corrective action to achieve positive results [10].

3.2 Quality management dimensions and standards in healthcare

According to the list of national core standards developed by the NDoH's Quality Assurance Directorate in 2000, healthcare facilities are required to have medicine, supplies, and a mechanism to obtain emergency supplies [12]. The standards also stipulate that these facilities are required to have electricity, cold and hot water, and reliable communication in the form of telephones or a two-way radio [8], [13]. However, although there are standards, PHC facilities still have unresolved issues, such as a lack of piped water, inadequate infrastructure, and staff shortages [8]. Many of these public healthcare facilities are still considered to be in a state of crisis due to the run-down, dysfunctional infrastructure that prevails as a result of underfunding, mismanagement, and neglect [7].

According to the national core standards (NCS), an expected functional and quality level exists for healthcare facilities to ensure adequate satisfaction levels.

Ideal clinic (IC) standards for PHCs form the basis for the assessment of all healthcare establishments in South Africa, including clinics [13]. An 'ideal clinic' is one that functions optimally through the presence of a combination of elements. The checklist embodies a strategy that was developed to respond to the inadequacies of the quality of primary health care services in South Africa. This is achieved by determining the status of a clinic's performance against carefully selected elements pertaining to quality and safety regulations [13], [14]. The effect of inadequate quality management on the service quality in these facilities is examined. This is essentially done to identify the prominent features of quality that affect the service that is delivered to patients. To achieve this, aspects of service, waiting time, and medicine availability in these healthcare facilities are observed. In this study, the ideal clinic checklist, deduced from the NCS, is used to ensure that clinics address and adhere to all standards.

Quality in healthcare is defined as

doing the right thing for the right patient, at the right time, in the right way to achieve the best possible results [15, p. 1635].

Five important dimensions highlight the importance of quality service delivery to the patient, and form the basis for their expectations. These dimensions are: tangibles, reliability, responsiveness, assurance, and empathy (see Table 2) [16]-[19]. It is important to note that the significance of the different dimensions differs, depending on the context [16].

Quality in healthcare should be viewed as a process that aims to minimise external variability in order to maximise efficiency and effectiveness, thus ensuring that patient expectations are continuously met [15], [16]. This can be done by integrating process management tools such as Six Sigma.

4 METHODOLOGY AND CONCEPTUALISATION

In this study, three DSS frameworks were reviewed and compared to provide the baseline structure for the integration of quality management tools into continuous process improvement at clinics. These were the benchmarking framework [20], multiple criteria decision analysis (MCDA) [21], and Six Sigma [19], [22].

The benchmarking framework consists of two stages that involve a focus on improving internal effectiveness of an organisation, and a focus on improving external competitiveness [20]. This framework involves an internal comparison of the best practices from high-performing departments in the organisation. These best practices are identified and adopted by other departments to improve the overall performance of the organisation. The benchmarking framework requires organisations to identify their strengths and weaknesses before the benchmarking tool is adopted [20].

'MCDA' is an umbrella term describing a collection of approaches that seek to assist individuals or organisations in exploring decisions that matter, by using multiple dimensions [21], [23]. MCDA approaches are classified broadly into value measurement models, outranking models, and reference level models. For the purposes of this study, only the value measurement approach is considered, as the other techniques are rarely used in the healthcare industry [21]. The MCDA framework supports complex decision-making using problem identification, problem structuring, model development, model approval, and action plan development. In the broader sense, this entails defining the problem, selecting criteria, measuring alternatives, scoring alternatives, weighting criteria, aggregation, uncertainty analysis, and interpretation of results [21], [23]. In order to implement MCDA successfully, analysts, experts, stakeholders, and decision-makers are all required to be involved.
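The scoring, weighting, and aggregation steps of a value measurement model can be sketched as a simple weighted sum. This is a minimal illustration only, not the procedure used in the study: the criteria, weights, scores, and alternative names below are hypothetical.

```python
from typing import Dict, List


def weighted_value(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Aggregate criterion scores into one value using normalised weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[c] * w / total_weight for c, w in weights.items())


def rank_alternatives(alternatives: Dict[str, Dict[str, float]],
                      weights: Dict[str, float]) -> List[str]:
    """Return alternative names ordered from highest to lowest aggregate value."""
    values = {name: weighted_value(s, weights) for name, s in alternatives.items()}
    return sorted(values, key=values.get, reverse=True)


# Hypothetical criteria weights and 0-10 scores, for illustration only.
weights = {"waiting_time": 0.5, "cost": 0.3, "staff_effort": 0.2}
alternatives = {
    "extend_hours": {"waiting_time": 8, "cost": 4, "staff_effort": 3},
    "appointment_system": {"waiting_time": 7, "cost": 8, "staff_effort": 6},
}

print(rank_alternatives(alternatives, weights))
```

A full MCDA exercise would also include the uncertainty analysis and stakeholder involvement described above, which this sketch omits.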

Six Sigma is a methodology that can be applied to processes to eliminate the root cause of problems through problem-solving and improvement techniques [22]. To achieve the goal of Six Sigma, the 'define, measure, analyse, improve, and control' (DMAIC) methodology must be implemented to ensure the continuous improvement of processes by continuously reducing errors [19]. The Six Sigma philosophy centres on the need to improve what an organisation is currently doing by measuring the outcomes and moving to a more productive state [19]. In the context of this study, this implies consideration of the PHC facility and alignment of the strategic objectives of the NDoH with patient and employee satisfaction. To this end, Taner, Sezen and Antony [19] identified the following key concepts for consideration in the healthcare context:

1. Critical to quality: attributes most important to the patient.

2. Defect: failing to deliver what the patient wants. In terms of impact on the patient, a defect in the delivery of healthcare can range from relatively minor to significant. In a worst-case scenario, the defect can be fatal, as when a medication error results in the patient's death.

3. Process capability: what the healthcare process can deliver.

4. Variation: what the patient sees and feels.

5. Stable operations: ensuring consistent and predictable processes to improve what the patient experiences.

6. Design for Six Sigma: designing to meet patients' needs and the facility's process capability.

7. Lean Six Sigma: integration of lean thinking to address speed and better flow of the processes by eliminating waste.
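The 'defect' and 'process capability' concepts above are commonly quantified in Six Sigma practice as defects per million opportunities (DPMO) and a corresponding sigma level. The paper does not give these formulas; the sketch below uses the conventional definitions, including the customary 1.5 sigma shift, and the visit and defect counts are hypothetical.

```python
from statistics import NormalDist


def dpmo(defects: int, units: int, opportunities_per_unit: int) -> float:
    """Defects per million opportunities for a process."""
    if units <= 0 or opportunities_per_unit <= 0:
        raise ValueError("units and opportunities_per_unit must be positive")
    return defects / (units * opportunities_per_unit) * 1_000_000


def sigma_level(dpmo_value: float, shift: float = 1.5) -> float:
    """Convert DPMO to a sigma level using the conventional 1.5 sigma shift."""
    if not 0 < dpmo_value < 1_000_000:
        raise ValueError("dpmo_value must lie strictly between 0 and 1,000,000")
    yield_fraction = 1 - dpmo_value / 1_000_000
    return NormalDist().inv_cdf(yield_fraction) + shift


# Hypothetical example: 30 defects over 500 patient visits,
# with 4 opportunities for a defect per visit.
clinic_dpmo = dpmo(defects=30, units=500, opportunities_per_unit=4)
print(round(clinic_dpmo), round(sigma_level(clinic_dpmo), 2))
```

What counts as an 'opportunity' (for example, a dispensing step or a waiting-time target) is a modelling choice that the clinic would need to define.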

The three frameworks were evaluated against criteria developed by the researchers, based on the research objectives and on alignment with continuous improvement (see Table 3). Six Sigma was selected as the most applicable framework for the study.

5 TOWARDS A HEALTHCARE CONTINUOUS IMPROVEMENT DECISION SUPPORT FRAMEWORK

This section presents the development of the DSS framework that will act as a tool to guide the decision-making process along a series of eleven sequential steps that are guided by the Six Sigma process (see Figure 1). This DSS framework for continuous quality improvement in clinics incorporates the five dimensions of quality that were explained earlier: tangibles, reliability, responsiveness, assurance, and empathy [16]-[18].

The phases, shown in Figure 1 and explained below, encompass the Six Sigma aspects of defining the problem, measuring current performance, and analysing, improving, and controlling processes in PHC facilities [19].

5.1 Phase 1: Define the problem

Step 1: Measure and map patient expectations and perceptions of quality care; & Step 2: Identify problem area: The five quality dimensions are arranged in the form of a questionnaire for the patients to fill in before and after being served [16]-[18]. The questionnaire uses a rating scale from 1 to 5, where 5 is the highest value that can be achieved. The questionnaire is essentially used to identify problem areas that are relevant to patients.
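One way to turn the before-and-after questionnaire into a problem area is to compute a gap score per dimension (mean perception minus mean expectation) and flag the most negative gap. The sketch below assumes this gap-score approach and uses the dimension names listed in Section 5; the responses are hypothetical and the function names are illustrative.

```python
from statistics import mean
from typing import Dict, List

DIMENSIONS = ("tangibles", "reliability", "responsiveness", "assurance", "empathy")

Response = Dict[str, int]  # one patient's 1-5 ratings, keyed by dimension


def gap_scores(expectations: List[Response], perceptions: List[Response]) -> Dict[str, float]:
    """Mean perception minus mean expectation for each quality dimension."""
    return {
        dim: mean(r[dim] for r in perceptions) - mean(r[dim] for r in expectations)
        for dim in DIMENSIONS
    }


def problem_area(gaps: Dict[str, float]) -> str:
    """The dimension with the most negative gap, i.e. furthest below expectation."""
    return min(gaps, key=gaps.get)


# Hypothetical responses from two patients, before (expectations)
# and after (perceptions) being served.
expectations = [
    {"tangibles": 4, "reliability": 5, "responsiveness": 5, "assurance": 4, "empathy": 4},
    {"tangibles": 4, "reliability": 4, "responsiveness": 5, "assurance": 4, "empathy": 5},
]
perceptions = [
    {"tangibles": 4, "reliability": 4, "responsiveness": 2, "assurance": 4, "empathy": 4},
    {"tangibles": 3, "reliability": 4, "responsiveness": 3, "assurance": 4, "empathy": 4},
]

gaps = gap_scores(expectations, perceptions)
print(problem_area(gaps), gaps)
```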

Step 3: Define goals and objectives aligned with continuous improvement in the clinic: The goals and objectives of the clinic need to be set to provide a focus on the identified problem area, and to make priorities clear to all employees involved. The breakdown of goals into objectives is demonstrated in the 'specific, measurable, attainable, relevant, time-bound' (SMART) tool. Effective goal setting is important, as it has a major influence on the success of the healthcare facility [24].

5.2 Phase 2: Measure current performance

Step 4: Align standards with identified problem area (NCS + IC standards): In this phase, the current performance of the clinic is measured, using the NCS and the IC standards form, against the basic quality and safety requirements for these facilities [13], [25]. The standards are considered to be a reflection of international best practices that have been tailored to the needs of South Africa [12].

Step 5: Use integrated standards to measure current performance: Once the standards are aligned, measurements of the current clinic performance are mapped against the NCS and IC checklists. The structure of the NCS consists of seven domains that reflect the health systems approach. The first three domains (domain 1: patient rights; domain 2: patient safety, clinical governance and care; domain 3: clinical support services) relate to the business aspect of the health system, while the final four domains (domain 4: public health; domain 5: leadership and corporate governance; domain 6: operational management; domain 7: facilities and infrastructure) act as a support system to ensure that the former are delivered. The domains are broken down into sub-domains, which are associated with measurement criteria [12]. To complete the IC checklist, the PHC administrator enters either a Y (for yes) or an N (for no) under the performance column for the domain(s) in question. Once completed, a colour coding system is used to indicate whether the clinic has achieved, partially achieved, or not achieved the domain(s) being examined. The suggested layout is shown in Figure 2.
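The Y/N checklist and colour coding could be digitised along the following lines. This is a sketch under stated assumptions: the thresholds (80% and 50%) and the green/amber/red colours are hypothetical choices for illustration, not the official IC scoring rules, and the answers are invented.

```python
from typing import Dict, Iterable, Tuple

# Assumed mapping from status to colour; the actual IC colours may differ.
COLOURS = {"achieved": "green", "partially achieved": "amber", "not achieved": "red"}


def domain_status(answers: Iterable[str],
                  achieved_at: float = 0.8,
                  partial_at: float = 0.5) -> Tuple[float, str]:
    """Return the share of 'Y' answers and the resulting status for one domain."""
    marks = [a.strip().upper() for a in answers]
    if not marks or any(m not in ("Y", "N") for m in marks):
        raise ValueError("answers must be a non-empty sequence of 'Y' or 'N'")
    share = marks.count("Y") / len(marks)
    if share >= achieved_at:
        status = "achieved"
    elif share >= partial_at:
        status = "partially achieved"
    else:
        status = "not achieved"
    return share, status


# Hypothetical checklist entries for three NCS domains.
checklist: Dict[str, list] = {
    "patient rights": ["Y", "Y", "Y", "Y", "Y"],
    "facilities and infrastructure": ["Y", "N", "Y", "N"],
    "clinical support services": ["N", "N", "Y", "N"],
}

for domain, answers in checklist.items():
    share, status = domain_status(answers)
    print(f"{domain}: {share:.0%} {status} ({COLOURS[status]})")
```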

5.3 Phase 3: Analyse

Step 6: Identify root cause categories: A cause-and-effect diagram is used to identify each root cause of the problem areas that were identified in Phases 1 and 2. For the development of the tool, the physical structure of the diagram was reconstructed into a network diagram. This was done mainly to make the tool more user-friendly for the decision-makers. The main factors that contribute to poor service delivery in healthcare facilities, as identified above, are broken down into the main causes, sub-causes and, in some cases, sub-sub-causes.

Step 7: Identify root causes: In this step, elements of the cause-and-effect diagram are used to identify the root causes of poor service quality in clinics. It is important to note that these categories consist of the most common causes and sub-causes of poor service delivery that clinics experience [6], [11]. This approach is illustrated in Figure 3, using an extract that represents the dissatisfied workers' category.
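The network form of the cause-and-effect diagram can be represented as a nested mapping, with the leaves taken as candidate root causes. In the sketch below, only the 'dissatisfied workers' category comes from the text; the causes and sub-causes beneath it are hypothetical placeholders rather than the contents of Figure 3.

```python
from typing import Dict, Iterator, Tuple

CauseTree = Dict[str, "CauseTree"]  # cause -> its sub-causes (empty dict for a leaf)


def root_causes(tree: CauseTree, path: Tuple[str, ...] = ()) -> Iterator[Tuple[str, ...]]:
    """Yield the full path from category to each leaf (candidate root cause)."""
    for cause, children in tree.items():
        here = path + (cause,)
        if children:
            yield from root_causes(children, here)
        else:
            yield here


# Illustrative tree; the entries below the category are assumptions.
tree: CauseTree = {
    "dissatisfied workers": {
        "heavy workload": {
            "staff shortages": {},
            "high patient-to-nurse ratio": {},
        },
        "poor working conditions": {
            "lack of equipment": {},
        },
    },
}

for chain in root_causes(tree):
    print(" -> ".join(chain))
```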

5.4 Phase 4: Improve

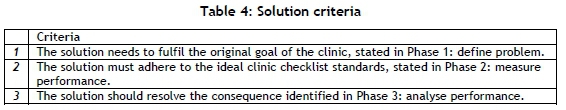

Step 8: Test potential solutions: The purpose of this phase is to develop solutions that eliminate the root causes of poor service quality in clinics. Potential solutions are tested during a trial period to determine their effectiveness. This involves evaluating the solutions in real-world conditions, where the new procedures are tried under typical clinic conditions. In order for solutions to reach the trial stage, they must meet the criteria described in Table 4.

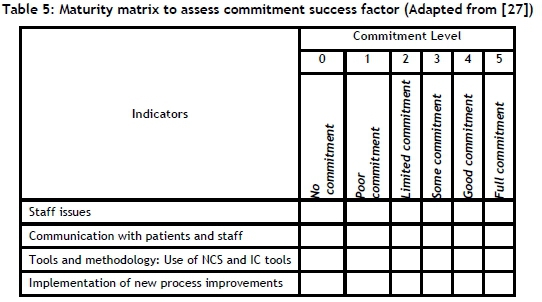

Step 9: Benchmark for successful and sustainable integration of identified solutions: Solutions that fit the criteria and that have proven to be effective then need to be standardised to ensure better service quality for patients. However, this is not an easy task, as employee resistance to change is the most frequently cited implementation problem that is encountered when change is introduced [26]. For this reason, technical change is not the only aspect of change that is focused on in this phase; the role of the human dimension in implementing change is also considered by using the benchmarking tool. This aspect is addressed by a maturity matrix, which is the first step of the benchmarking tool. The matrix measures the clinic's change management performance using the five success factors, to determine whether the change management practices of the clinic are effective. The matrix consists of a rating system between zero and five that assesses the clinic's level of maturity in change management. Indicators comprising each of the success factors are used to ensure accurate assessment of the facility's change management practices.

The indicators for commitment are used to demonstrate the clinic assessment process. Using the rating system shown in Table 5, the indicators test the level of commitment of clinic employees. The same procedure is followed for the remaining success factors. These commitment levels are filled in as a maturity matrix, as outlined in Table 4. The results of this benchmarking process can be represented as a radar chart that integrates the display of multivariate data into an action plan.
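The maturity matrix can be sketched as a mapping from each success factor to its indicator ratings on the zero-to-five scale, averaged into a profile that a radar chart could display. Only 'commitment' is named in the text; the other factor names, the ratings, and the target level of 3 are hypothetical placeholders.

```python
from statistics import mean
from typing import Dict, List


def maturity_profile(ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Average the 0-5 indicator ratings for each success factor."""
    profile = {}
    for factor, values in ratings.items():
        if not values or any(not 0 <= v <= 5 for v in values):
            raise ValueError(f"ratings for {factor!r} must be non-empty and within 0-5")
        profile[factor] = mean(values)
    return profile


def weakest_factors(profile: Dict[str, float], target: float = 3.0) -> List[str]:
    """Factors scoring below the target level, lowest first."""
    return sorted((f for f, s in profile.items() if s < target), key=profile.get)


# Hypothetical indicator ratings; factor_b to factor_e stand in for the
# remaining four success factors, which are not named here.
ratings = {
    "commitment": [4, 3, 5],
    "factor_b": [2, 2, 3],
    "factor_c": [1, 2, 0],
    "factor_d": [3, 4, 3],
    "factor_e": [5, 4, 4],
}

profile = maturity_profile(ratings)
print(profile)
print(weakest_factors(profile))
```

The profile values are the radial coordinates a radar chart would plot, one axis per success factor; the plotting itself is left to whichever charting tool the clinic uses.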

5.5 Phase 5: Control

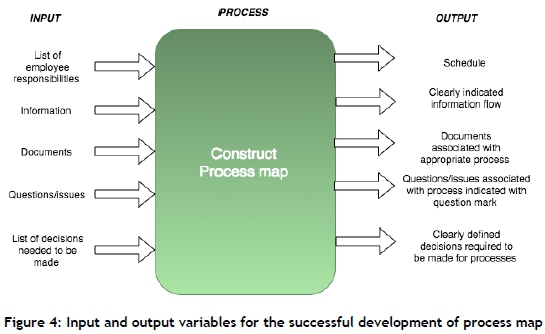

Step 10: Document procedures for standardised quality: The fifth and final phase of the framework involves controlling the performance of the clinic. In this phase, the improved process, as developed in Phase 4, is documented using a process mapping tool. This is done to ensure that all workers are aware of their new responsibilities, and to provide detail on how the improved process is expected to be delivered. Figure 4 shows the input and output variables that are required for the successful development of the process map.

Step 11: Repeat phases 1-5: The second part of this phase focuses on developing a mechanism that will ensure that the processes in clinics are continuously monitored and improved. The idea is to systematically maintain the gains of the newly standardised solutions that were developed in Phase 4 of the framework, and to improve other poorly performing sections within the clinic. This is achieved using a feedback loop; this involves measuring the newly standardised procedures and comparing the results with the required performance standards. The synthesised DSS framework is depicted in Appendix A.
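The feedback loop amounts to comparing measured indicators with their required levels and sending any shortfalls back into the next cycle of phases 1-5. A minimal sketch follows, assuming indicators are expressed as fractions where higher is better; the indicator names and target values are hypothetical.

```python
from typing import Dict, List


def review_cycle(measured: Dict[str, float], required: Dict[str, float]) -> List[str]:
    """Return indicators that fall short of the required level, largest shortfall first.

    An indicator missing from `measured` is treated as 0.0, so it is always flagged.
    """
    shortfalls = {k: required[k] - measured.get(k, 0.0) for k in required}
    flagged = [k for k, gap in shortfalls.items() if gap > 0]
    return sorted(flagged, key=lambda k: shortfalls[k], reverse=True)


# Hypothetical required standards and measurements after standardisation.
required = {
    "medicine_availability": 0.95,
    "patient_satisfaction": 0.80,
    "checklist_compliance": 0.90,
}
measured = {
    "medicine_availability": 0.97,
    "patient_satisfaction": 0.70,
    "checklist_compliance": 0.60,
}

# Indicators returned here would become the problem areas for the next cycle.
print(review_cycle(measured, required))
```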

6 FRAMEWORK EVALUATION

The DSS framework formulated in the study was evaluated through a three-part process, summarised in Table 6. This was done to ensure that the framework was aligned to the standards, was applicable to the PHC context, and was relevant to the current PHC facility processes.

Standards adherence: According to the quality improvement (QI) guide, five foundation stones are needed to build a quality improvement process; these align with teamwork, data, systems, patients, and communication [25]. The foundation stones formed the specifications that were used to validate the DSS framework, as can be seen in Table 7. Even though the framework met the majority of the specifications, those that were not met indicated an opportunity for future improvement of the tool. The table describes in detail the improvements that are to be implemented in the respective phases of the DSS framework.

It was also assessed whether the elements of the framework are consistent with the methodology of the Operation Phakisa initiative [28], a government initiative to assist targeted sectors in developing sector-wide solutions to pressing concerns, and in implementing priority programmes better, faster, and more effectively. The framework reflects highlighted features of the methodology of the Operation Phakisa initiative (see Figure 4).

Context validation: For this part, a clinic was selected, the current process flows were observed, and interviews with the PHC facility administrator and regional administrator were undertaken. The main focus was to determine whether the framework can support and assist public clinics to improve the quality of service that patients receive through the continuous improvement of processes and activities. The three significant indicators observed were: an understaffed facility, a lack of electronic equipment (such as computers), and a large patient-to-nurse ratio. These constraints implied that a significant amount of data was filled in on paper before being entered into an electronic system by data entry clerks. Notably, some aspects that were included in the framework are already being undertaken by the clinic, such as a general patient satisfaction survey, monthly brainstorming sessions for different problems in the PHC facility, and use of the IC checklist. However, the nature and extent of data analysis was unclear.

Framework validation: A survey was used to validate whether the framework could assist in the continuous improvement of processes and/or activities in public clinics, in order to provide patients with a quality service. The survey assessed the importance of the content in each of the phases of the DSS framework. To achieve this, the survey required that the criteria of each of the phases be rated on a scale of 1 (not important) to 5 (very important). The results of the survey from the administrator are shown in Table 8.

The results of the survey indicate that the criteria in each of the phases are all equally essential, with a rating of 5 associated with each phase. However, the practicality of the tool was rated at 3, indicating that certain aspects of the tool need to be improved for efficiency.

7 FURTHER WORK

This study aimed to integrate fragmented tools into a single one, and to serve as a first step towards digitising the continuous improvement process in clinics. Digitisation assists in understanding health system data through analysing process variations. The authors suggest that a case study of several other clinics be undertaken to get a holistic overview of tool efficiency. Some points of note for further work are to:

• make use of rating systems that patients can easily understand when constructing surveys;

• improve the efficiency of the framework by migrating from paper-based decision-making tools to automated systems; and

• create a framework that is more predictive in nature to further support the decision-making process of the user.

8 CONCLUSION

The nature of the tool allows it to be easily accessible to all clinics in under-developed areas. The many challenges faced by these clinics impede their ability to perform efficiently and effectively, which in turn affects the service delivered to patients. By using the DSS framework, the continuous improvement of processes and activities in clinics can strengthen their capacity to improve the health and well-being of patients. For this reason, it is evident that the development of the DSS framework supports features of the sustainable development goals (SDG) - specifically, those of good health and well-being (SDG 3) and industry innovation and infrastructure (SDG 9)*.

Using the DSS framework, decision-makers can follow the systematic steps of the tool to manage quality through the continuous improvement of processes in the clinic. The improvement of service quality is a by-product of the development of the DSS framework, and essentially enhances the lives of people who live in low-income areas.

REFERENCES

[1] Department of Health. 2018. Annual Report 2017/2018. Pretoria: South African Government.

[2] Ramseook-Munhurrun, P. 2010. Service quality in the public service. Int. J. Manag. Mark. Res. IJMMR, 3(1), pp. 37-51.

[3] Aman, B. & Abbas, F. 2016. Patient's perceptions about the service quality of public hospitals located at District Kohat. JPMA J. Pak. Med. Assoc., 66(1), pp. 72-75.

[4] Kenagy, J.W., Berwick, D.M. & Shore, M.F. 1999. Service quality in health care: The scenario. JAMA, 281(7), pp. 661-665.

[5] Wardman, B., Kelly, L. & Weideman, M. 2013. Voice of the customer. In eCrime Researchers Summit (eCRS), pp. 1-7.

[6] Pillay, R. 2009. Work satisfaction of professional nurses in South Africa: A comparative analysis of the public and private sectors. Hum. Resour. Health, 7(1), p. 15.

[7] Mayosi, B.M. & Benatar, S.R. 2014. Health and health care in South Africa: 20 years after Mandela. N. Engl. J. Med., 371(14), pp. 1344-1353.

[8] Cullinan, K. 2006. Health services in South Africa: A basic introduction. South Afr. Health News Serv., January, p. 38.

[9] Peltzer, K. & Phaswana-Mafuya, N. 2013. Depression and associated factors in older adults in South Africa. Global Health Action, 6, pp. 1-9.

[10] Talib, F., Rahman, Z. & Azam, M. 2011. Best practices of total quality management implementation in health care settings. Health Mark Q., 28(3), pp. 232-252.

[11] Mudaly, P. & Nkosi, Z.Z. 2015. Factors influencing nurse absenteeism in a general hospital in Durban, South Africa. J. Nurs. Manag., 23(5), pp. 623-631.

[12] Whittaker, S., Shaw, C., Spieker, N. & Linegar, A. 2011. Quality standards for healthcare establishments in South Africa. SAHR, 48(4), pp. 59-67.

[13] South African National Department of Health. 2016. Ideal Clinic Definitions, Components and Checklists. Retrieved from https://www.idealhealthfacility.org.za/docs/guidelines/Booklet%20-%20Ideal%20Clinic%20Dashboard%20-%20revision%2016%20published%20Feb%202017.pdf

* https://sustainabledevelopment.un.org/?menu=1300

[14] Wright, C., Street, R., Cele, N., Kunene, Z., Balakrishna, Y., Albers, P. & Mathee, A. 2017. Indoor temperatures in patient waiting rooms in eight rural primary health care centers in northern South Africa and the related potential risks to human health and wellbeing. Int. J. Environ. Res. Public. Health, 14(1), p. 43.

[15] Yuan, F. & Chung, K.C. 2016. Defining quality in health care and measuring quality in surgery. Plast. Reconstr. Surg., 137(5), pp. 1635-1644.

[16] Sewell, N. 1997. Continuous quality improvement in acute health care: Creating a holistic and integrated approach. Int. J. Health Care Qual. Assur., 10(1), pp. 20-26.

[17] Guesalaga, R. & Pitta, D. 2014. The importance and formalization of service quality dimensions: A comparison of Chile and the USA. J. Consum. Mark., 31(2), pp. 145-151.

[18] Jiang, L., Jun, M. & Yang, Z. 2016. Customer-perceived value and loyalty: How do key service quality dimensions matter in the context of B2C e-commerce? Serv. Bus., 10(2), pp. 301-317.

[19] Taner, M.T., Sezen, B. & Antony, J. 2007. An overview of six sigma applications in healthcare industry. Int. J. Health Care Qual. Assur., 20(4), pp. 329-340.

[20] Deros, B.M., Yusof, S.M. & Azhari, S. 2006. A benchmarking implementation framework for automotive manufacturing SMEs. Benchmarking Int. J., 13(4), pp. 396-430.

[21] Thokala, P., Devlin, N., Marsh, K., Baltussen, R., Boysen, M., Kalo, Z., Longrenn, T., Mussen, F., Peacock, S., Watkins, J. & Ijzerman, M. 2016. Multiple criteria decision analysis for health care decision making: An introduction: Report 1 of the ISPOR MCDA emerging good practices task force. Value Health J. Int. Soc. Pharmacoeconomics Outcomes Res., 19(1), pp. 1-13.

[22] Girmanová, L., Sole, M., Kliment, J., Divoková, A. & Miklos, V. 2017. Application of Six Sigma using DMAIC methodology in the process of product quality control in metallurgical operation. Acta Technol. Agric., 20(4), pp. 104-109.

[23] Saarikoski, H., Barton, D.N., Mustajoki, J., Keune, H., Gomez-Baggethun, E. & Langemeyer, J. 2015. Multi-criteria decision analysis (MCDA) in ecosystem service valuation. OpenNESS Ecosyst. Serv. Ref. Book, 1(17), pp. 1-5.

[24] MacLeod, L. 2012. Making SMART goals smarter. Physicians Exec., 38(2), pp. 68-72.

[25] South African National Department of Health. 2012. Quality Improvement: the key to providing improved quality of care. Ideal Health Facility. [Online]. Available: https://www.idealhealthfacility.org.za/. [Accessed: 23 April 2018].

[26] Bovey, W.H. & Hede, A. 2001. Resistance to organisational change: The role of defence mechanisms. J. Manag. Psychol., 16(7), pp. 534-548.

[27] Clarke, A. & Manton, S. 1997. A benchmarking tool for change management. Bus. Process Manag. J., 3(3), pp. 248-255.

[28] Department of Health. 2014. Operation Phakisa. [Online]. Available: https://www.operationphakisa.gov.za/Pages/Home.aspx. [Accessed: 14-Oct-2018].

* Corresponding author: ssgrobbelaar@sun.ac.za