South African Journal of Childhood Education

Online version ISSN 2223-7682

Print version ISSN 2223-7674

SAJCE vol.9 no.1 Johannesburg 2019

http://dx.doi.org/10.4102/sajce.v9i1.677

ORIGINAL RESEARCH

Measuring the outcomes of a literacy programme in no-fee schools in Cape Town

Mlungisi Zuma; Adiilah Boodhoo; Joha Louw-Potgieter

Section of Organisational Psychology, School of Management Studies, University of Cape Town, Cape Town, Rondebosch, South Africa

ABSTRACT

BACKGROUND: Most funders require non-governmental organisations to evaluate the effectiveness of their programmes. However, in our experience, funders seldom fund evaluation endeavours and organisational staff often lack evaluation skills.

AIM: In this outcome evaluation of Living through Learning's (LTL) class-based English-medium Coronation Reading Adventure Room programme, we addressed two evaluation questions: whether Grade 1 learners who participated in the programme attained LTL's and the Department of Basic Education's (DBE) literacy standards at the end of the programme, and whether teacher attributes contributed to learners' literacy performance.

SETTING: The evaluation was conducted in 18 different no-fee schools in Cape Town. Participants comprised 1090 Grade 1 learners and 54 teachers.

METHODS: We used Level 2 (programme design and theory) and part of Level 4 (outcome) of an evaluation hierarchy to assess the effectiveness of the programme.

RESULTS: Evaluation results showed that most schools, except three, attained the 60% performance standard set by the LTL on all quarterly assessments. Most schools, except two, attained the 50% performance standard of the DBE for English first language on all quarterly assessments. We also found that in terms of teacher attributes, only teacher experience in literacy teaching was significant in predicting learner performance in literacy in the first term of school.

CONCLUSION: We explain why our results should be interpreted with caution and make recommendations for future evaluations in terms of design, data collection and levels of evaluation.

Keywords: literacy programme; no-fee schools; outcome evaluation; reading rooms; teacher attributes; theory evaluation.

Introduction

Various national and international assessments have shown the poor state of reading ability of South African learners (see the Department of Basic Education's [DBE] Annual National Assessments [ANAs] 2013; the Southern and Eastern Africa Consortium for Monitoring Educational Quality report - SACMEQ [Moloi & Chetty 2011] and the Progress in International Reading Literacy Study - PIRLS [Howie et al. 2011; Mullis et al. 2016]). Grigg et al. (2016) described how non-governmental organisations (NGOs) have implemented programmes to remedy this state of affairs. Increasingly, funders require these NGOs to evaluate the effectiveness of their programmes. However, in our experience, little, if any, funding is provided for monitoring and evaluating programmes. Compounding this lack of funds, programme staff members, while highly expert in the content and implementation of their own programmes, often lack the necessary monitoring and evaluation skills. Grigg et al. (2016) attempted to address the latter shortcoming by proposing core evaluation questions and research designs for evaluating reading programmes. These evaluation questions focused on problem definition, theories of change, programme implementation, programme outcomes and impact, and programme cost. The research designs mainly focused on how change is measured, as all reading programmes rest on the assumption that learners will be better off after the programme than before.

In the study by Grigg et al. (2016), we noted that Living through Learning (LTL) provided its external evaluator with high-quality data. Living through Learning monitored Grade 1 learners' literacy performance four times per year by using their own assessments and the teachers' Curriculum Assessment Policy Statements (CAPS) assessments. These data enabled the evaluator to track over time whether a large group of children who were exposed to the programme improved their English literacy skills. In this study, we show how we used LTL's data for an evaluation of its Coronation Reading Adventure Room (CRAR) programme, an English-medium literacy development programme. We also address how data, collected by programme staff, influence the independence of the evaluation and the selection of an appropriate evaluation design. Finally, we show how to guide NGO staff towards more complex research designs, thus addressing a knowledge gap in collaborative evaluation.

Programme description of the Coronation Reading Adventure Room

Living through Learning, an NGO located in Wynberg, Cape Town, started implementing the CRAR programme in 2012 (S. Botha, pers. comm., 27 February 2016). The main aim of the programme is to empower children from poor communities through education, specifically by developing and improving learners' English literacy, by building learners' confidence in reading and writing, and by equipping teachers with effective teaching skills to administer the programme successfully in schools (S. Adams, pers. comm., 04 March 2016). Current programme sites are Athlone, Belhar, Bishop Lavis, Delft, Grassy Park, Gugulethu, Lotus River, Mitchell's Plain, Nyanga, Parow, Stellenbosch and Strandfontein (S. Adams, pers. comm., 04 March 2016).

Any no-fee primary school close to the premises of LTL is eligible to apply for the programme (S. Adams, pers. comm., 04 March 2016). After receiving an application, LTL visits the school and conducts interviews and assessments to ensure that the school meets all the requirements of the programme.

The CRAR programme consists of two main activities: firstly, it builds teachers' skills to teach English literacy and manage a classroom; secondly, it assists teachers to implement a child literacy programme for Grade 1 learners in the classroom. Teachers from participating schools attend all-day workshops on three consecutive Saturdays. Teachers receive training in classroom management, class discipline, barriers to learning, the role of the educator and the literacy programme. They also learn how to equip a reading adventure room and develop learning content for targeted learners (S. Adams, pers. comm., 04 March 2016). During and after the training, each teacher works with an LTL facilitator. These facilitators, who act as teaching assistants, work closely with the teachers to set up the programme and the reading room. They also support the teachers throughout the programme.

Within each participating school, a specific classroom is allocated to CRAR and the room is decorated with vibrantly coloured educational images, letters and toys. Living through Learning provides learners' workbooks, a teacher's manual, board games, toys and stationery.

Teachers who deliver the programme are trained in the CRAR literacy method, which is an easy, systematic and phonics-based way to learn to read and write in English. The majority of children on the programme do not speak English as a first language. Before learners start the programme, their basic knowledge of English is assessed through tasks such as forming sounds, filling in missing sounds, matching pictures, doing puzzles and following mazes. During the programme, learners start with recognition of familiar sounds (phonics) and then use these sounds to form three-letter words (blends using sliding); thereafter, they progress to recognising less familiar sounds and, lastly, use these unfamiliar sounds to form three-letter words. For each English lesson, teachers follow a CRAR lesson plan to ensure that the literacy programme is implemented with fidelity and that active learning is taking place (S. Adams, pers. comm., 04 March 2016). The CRAR curriculum is aligned with CAPS, which is the national curriculum for public schools in South Africa.

Teachers provide LTL with regular feedback on learners' progress in the form of attendance registers, weekly assessments and end of term reports (S. Adams, pers. comm., 04 March 2016). At the end of each term, learners are assessed by means of both CRAR and CAPS assessments. Living through Learning designs the four CRAR assessments and the teachers design the four CAPS assessments (S. Adams, pers. comm., 04 March 2016).

Evaluation framework

Here we introduce the evaluation hierarchy that served as the framework for this evaluation. Rossi, Lipsey and Freeman (2004) outlined five levels in their evaluation hierarchy, and we have added the core evaluation questions to each of these levels (Table 1).

In consultation with the programme staff, we used Level 2 (design and programme theory) and part of Level 4 (outcome) for this evaluation. These are described in detail in the following section.

Programme theory

Like all development programmes, the CRAR programme is based on the assumption that it will change the programme beneficiaries. In this section, we explore the programme theory (also called the theory of change) that underlies the CRAR programme and assess whether it is plausible. This is Level 2 of the evaluation hierarchy.

A programme theory is a sensible and plausible model of how the programme activities will change the beneficiaries (Bickman 1987). It is basically the 'story of change' for the programme. The CRAR programme has a one-page document depicting its goals and outcomes and a detailed two-page logical framework consisting of programme elements, evaluative questions, indicators, targets and measures. We simplified these two documents into a diagram that would enable programme staff to understand the programme linkages at a glance. This simplified model for the Grade 1 programme is presented in Figure 1.

From the programme goals and outcomes document, the logical framework and Figure 1, it can be concluded that three change assumptions underlie the CRAR programme: firstly, the activities of the CRAR programme may lead to improved foundation phase literacy; secondly, successful acquisition of early literacy may influence later academic performance; and thirdly, classroom resources may contribute to literacy acquisition. To test the plausibility of these assumptions, we used EBSCOhost and Google Scholar to source relevant literature for review, with the following search terms: 'literacy programmes', 'primary school literacy interventions', 'literacy programme evaluations', 'conducive classrooms' and 'tutoring primary school learners'.

The first assumption is that the content of the CRAR programme may lead to the short-term outcome of early literacy acquisition. The CRAR programme consists of the following activities, namely, identifying sounds, identifying letters, blending words, writing and comprehension. It is assumed that these activities may result in improvement in fluency, familiarity with words and vocabulary, comprehension and word recognition skills.

Reynolds, Wheldall and Madelaine (2010) systematically reviewed major national reports, such as those of the National Reading Panel (NRP), the Independent Review of the Teaching of Early Reading (IRTER) and the National Inquiry into the Teaching of Literacy (NITL), and found that effective literacy programmes contain all of the following components: phonological awareness, phonics, fluency, reading, writing, vocabulary and a range of reading material. Stahl and Murray (1994) noted that activities such as sound manipulation, recognition of rhyming words and the matching of consonants are important for early acquisition of phonological awareness. Torgesen (2000) found that literacy interventions that emphasised phonological awareness in primary schools were more effective in promoting fluent reading than interventions that did not emphasise the phonological component.

Apart from sound and word recognition, fluency and comprehension (Reynolds et al. 2010) also constitute important elements of reading performance. Reading with fluency is critical for young children because it serves as a connector between comprehension and word recognition. Interventions that seek to improve reading fluency teach learners how to read text accurately, quickly and with expression (Reynolds et al. 2010).

Studies have also emphasised vocabulary skills as another important aspect of an effective literacy intervention (Krashen 1989; Reynolds et al. 2010). Vocabulary skills can be taught through reading storybooks, conversations in class, listening tasks, word recognition and task restructuring (Reynolds et al. 2010). In addition to vocabulary, a writing component is also considered important in a literacy intervention programme. Reading and writing are interlinked and therefore should be taught in conjunction (Reynolds et al. 2010).

From the literature cited here, it is plausible to assume that the CRAR programme's components could lead to improved foundation phase literacy.

The second assumption is that early literacy interventions may influence later school performance. According to Nel and Swanepoel (2010), it is important to address literacy problems early in order for learners to attain academic success. A learner's ability to read is a strong predictor of later academic success (Van der Berg 2008). Reading provides building blocks to learning, and it is therefore important for children to master reading and gain the necessary skills early in their development. Torgesen (2000) stated that it is important for children to be competent in literacy during the first years of school as children who fail to master reading at that stage are at high risk of academic problems. The works of Spira, Bracken and Fischel (2005) and Reynolds et al. (2010) support the link between poor early literacy and later academic and psychological problems. The relationship between low literacy and low academic achievement in South Africa is well documented (Macdonald 2002; Matjila & Pretorius 2004; Pretorius 2002; Pretorius & Mampuru 2007).

The foundation phase is the ideal place to start introducing literacy intervention programmes. In this phase, children are provided with fundamental building blocks that will be useful throughout their academic life (Slavin et al. 2009). Sound recognition, converting sounds into letters and combining letters into words provide children with stepping stones to later grades, when they expand their vocabulary, build fluency in English and develop the ability to understand texts (Slavin et al. 2009).

From the literature cited here, it can be concluded that the optimal entry point for literacy programmes would be Grade R, the first grade of the foundation phase, but that Grade 1, the second grade, would still be early enough for effective intervention.

The third assumption is that sufficient classroom resources may be necessary for early literacy acquisition. Competent literacy teachers who are supported by trained facilitators and make optimal use of the dedicated reading room are essential inputs for the CRAR programme.

Day and Bamford (1998) and Pressley et al. (2001) identified effective teaching skills of literacy teachers, active engagement of learners and classrooms that foster a positive learning environment as important factors in literacy acquisition. A positive learning environment is welcoming, supportive and attractive, and provides instructions (Conroy et al. 2009). Furthermore, Fraser (1998) noted that primary school learners perform better in classrooms that are well organised, cohesive, well resourced and goal-orientated.

Krashen (1981) supported the idea of a dedicated classroom containing visual aids. Kennedy (2005) noted that children find bright and high-contrast colours stimulating and that such stimulation may help them to focus on the task at hand.

Coronation Reading Adventure Room programme teachers and facilitators are trained in classroom management and literacy teaching and fully resourced with teaching manuals, lesson plans and workbooks for learners. A dedicated reading room that is decorated in brightly coloured alphabets, words, pictures and toys contributes to creating a setting that is conducive to learning to read and write. Therefore, it is plausible to assume that sufficient resources, in the form of trained teachers, supportive facilitators, teaching and learning materials and a special room for reading, may contribute to successful acquisition of early literacy.

In summary, when tested against relevant social science literature, we can conclude that the assumptions underlying the CRAR programme are plausible and it is reasonable to expect that the programme will deliver its intended outcomes.

Evaluation questions

The LTL programme staff indicated that an outcome evaluation would be useful for them. An outcome evaluation is concerned with change in the state of affairs or the state of the programme participants; in this case, it focused on whether the literacy skills of the Grade 1 learners changed during the school year. We used Level 4 of Rossi et al.'s (2004) hierarchy of evaluation plus the programme theory (Level 2) to formulate a single, outcome-focused evaluation question for the programme:

1. Are the Grade 1 learners who participated in the CRAR programme able to read and write according to LTL and DBE standards?

Here, LTL standards refer to the 60% performance standard that LTL used as a yardstick for adequate performance. Living through Learning initially set a standard of 85%, but soon realised that it was too high for the programme participants and reduced it to 60%. Learners' performance on the CAPS assessments was compared with the DBE's pass marks at the time of the evaluation for English first language (50%) and English additional language (40%). Although all learners in the sample learned to read in English, most of them were not English first-language speakers; therefore, the English additional language standard was added. These pass marks, rather than the national CAPS assessment levels, were used because teachers generally find the CAPS assessment levels difficult to implement and of limited use for tracking reading improvement.

As the CRAR programme focuses on providing competent teachers and classroom resources for literacy teaching, we also added an evaluation question which would assess the influence of these resources:

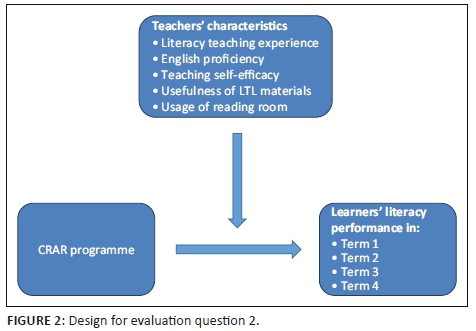

2. Did the teachers' language teaching experience, English language proficiency, self-efficacy, perceptions of usefulness of the LTL materials and usage of the reading room have any influence on the learners' literacy performance?

Method

Ideally, evaluators should be involved at programme conceptualisation. In real life, this seldom happens. Quite often, funders or programme staff request an affordable evaluation based on existing data collected by programme staff. While pre-existing data certainly save costs, such data also raise other dilemmas. Firstly, such data are often of poor quality (e.g. incomplete, mainly binary attendance data, sometimes reams of qualitative participant opinions about the programme, etc.). Secondly, pre-existing data may mean that evaluators often have to surmise the evaluation design which guided the data collection, as programme staff may not have made this explicit. Thirdly, external evaluators cannot claim an independent evaluation when using such data. In the case of the CRAR programme, the data quality was good (i.e. two sets of repeated measures which were complete and systematically reported). However, the data restricted us to utilising a rather weak evaluation design and although we were external evaluators, we cannot claim that our evaluation was independent.

Design

The design for this evaluation will be presented in terms of the two evaluation questions.

1. Are the Grade 1 learners who participated in the CRAR programme able to read and write according to LTL and DBE standards?

To assess the outcomes for the learners in the literacy programme, a single-group quasi-experimental design with two sets (i.e. CRAR and CAPS) of four repeated post-test performance measures was employed. Here the single-group quasi-experiment refers to Grade 1 learners on the programme and implies the absence of a control group of learners who did not get the programme. The two sets of four repeated measures are the CRAR and CAPS literacy assessments that are administered quarterly. A post-test-only design was chosen (in other words, no pre-test was included), as the pre-programme diagnostic test dealt with different outcome variables than the post-tests.

While performance on the two sets of assessments cannot be compared directly because they are different and measure different outcomes, we thought it would be interesting to examine whether learners participating in the CRAR programme also improved on their CAPS assessments.

2. Did the teachers' language teaching experience, English language proficiency, self-efficacy, perceptions of usefulness of the LTL materials and usage of the reading room have any influence on the learners' literacy performance?

To assess the attributes of the teachers, a descriptive design was employed. Descriptive research describes the current state of affairs (Salkind 2009) and for this evaluation the aim was to examine whether or not specific characteristics of teachers influenced the literacy performance of the learners. Figure 2 shows the details of this design.

Setting and participants

There were two sets of participants in this evaluation.

The first set consisted of Grade 1 learners from 18 different schools. For ethical reasons, the names of the schools were not disclosed; instead, we refer to schools 1-18. All schools were public schools located in low-income areas around Cape Town in the Western Cape. Within the selected schools, the unit of analysis was the Grade 1 learners who received the CRAR programme in 2015. In total, the sample consisted of 1090 learners in 54 classes. Class sizes ranged from 30 to 45 learners.

The second set of participants consisted of 54 teachers in 18 different schools who implemented the CRAR programme. Each class had one teacher and one LTL facilitator.

Instruments and data collection

Living through Learning signed a contract with each school to provide teacher training, facilitator provision and quarterly assessments of learners. The assessment data remained the intellectual property of LTL. These secondary data were used to answer evaluation question 1.

Living through Learning staff designed the four CRAR assessments. The evaluators did not have access to the details of these assessments. However, the programme staff disclosed the main literacy skills that were assessed in each term. These quarterly tests included (1) finding a picture and stating what it is and matching a letter to the picture (marked out of 20 marks); (2) word search, reading and circling words (marked out of 30 marks); (3) filling in the missing words, spelling, matching words to a picture, writing and comprehension (marked out of 55 marks); and (4) matching words to a picture, writing, comprehension, spelling and reading (marked out of 50 marks). The teachers and facilitators administered the measures.
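Because the four CRAR assessments carry different maximum marks, a raw mark has to be converted to a percentage before it can be compared with the 60% LTL standard. The following is a minimal Python sketch of that conversion using the maximum marks listed above. The function names are hypothetical, and treating a score of exactly 60% as attaining the standard is our assumption rather than a documented LTL rule.

```python
# Maximum marks for the four quarterly CRAR assessments, as listed above.
MAX_MARKS = {1: 20, 2: 30, 3: 55, 4: 50}
LTL_STANDARD = 60.0  # LTL performance standard, in percent


def to_percentage(raw_mark: float, term: int) -> float:
    """Convert a raw CRAR mark for the given term (1-4) into a percentage."""
    max_mark = MAX_MARKS[term]
    if not 0 <= raw_mark <= max_mark:
        raise ValueError(f"mark {raw_mark} is outside 0-{max_mark} for term {term}")
    return 100.0 * raw_mark / max_mark


def meets_ltl_standard(raw_mark: float, term: int) -> bool:
    """Assumption: a score of exactly 60% counts as attaining the standard."""
    return to_percentage(raw_mark, term) >= LTL_STANDARD


print(to_percentage(12, 1))       # 60.0
print(meets_ltl_standard(32, 3))  # 32/55 is about 58.2%, so False
```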

In addition to the CRAR quarterly assessments, learners were also assessed by quarterly CAPS assessments which were developed and administered by each teacher.

For evaluation question 2, the influence of teacher characteristics on learner performance, an eight-item questionnaire was used. The first five items, relating to teaching self-efficacy, were adapted from a scale developed by Midgley, Feldlaufer and Eccles (1989). These items were phrased as follows: If I try really hard, I can get through to even the most difficult and unmotivated learner; If some learners in my class are not doing well in reading, I feel that I should change how I teach them; I use different teaching methods to help a learner to read; I can motivate learners who show low interest in their school work; I can provide an alternative explanation or example when learners are confused. At the time of development, Midgley et al. (1989) reported an alpha coefficient of 0.65 for this brief scale; for the current evaluation, the alpha coefficient was 0.57. Item 6 of the questionnaire assessed the teachers' perceptions of the usefulness of LTL's literacy teaching materials, whereas item 7 measured self-reported usage of the reading room. The first seven items used a five-point Likert response format. Item 8 was a text box in which teachers indicated their literacy teaching experience in years. Living through Learning supplied the home language of each teacher, which was used as a proxy measure of English proficiency.
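Assuming the alpha coefficients reported above are Cronbach's alpha, the statistic is α = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The sketch below computes it, together with the composite self-efficacy score (the average of the five items) that is later used as a predictor. The response data are hypothetical and the function names are illustrative only.

```python
from statistics import mean, variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of Likert scores."""
    k = len(responses[0])  # number of items on the scale
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])  # variance of scale totals
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


def self_efficacy_score(row: list[float]) -> float:
    """Composite self-efficacy score: the mean of one teacher's five item scores."""
    return mean(row)


# Hypothetical responses from four teachers to the five self-efficacy items (1-5).
responses = [
    [4, 4, 5, 3, 4],
    [3, 4, 4, 3, 3],
    [5, 5, 5, 4, 5],
    [2, 3, 3, 2, 3],
]
print(round(cronbach_alpha(responses), 2))
print([self_efficacy_score(r) for r in responses])
```

Sample variances are used throughout; because alpha is a ratio of variances, using population variances consistently would give the same value.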

Data analysis

Descriptive statistics (means and standard deviations) and one-sample t-tests were used to answer evaluation question 1. Learners' performance on the CRAR assessments was compared with the LTL performance standard (60%). Learners' performance on the CAPS assessments was compared with the DBE's pass marks for English first language (50%) and English additional language (40%).
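The comparison of descriptive statistics with the standards can be sketched as follows. This is an illustration under stated assumptions, not the evaluators' actual analysis: the scores are hypothetical percentages, the dictionary keys are illustrative names, and a mean counts as meeting a standard when it is at or above the threshold.

```python
from statistics import mean, stdev

# Standards used in this evaluation (percent); key names are illustrative.
STANDARDS = {
    "LTL": 60.0,                      # applied to CRAR assessments
    "DBE_first_language": 50.0,       # applied to CAPS assessments
    "DBE_additional_language": 40.0,  # applied to CAPS assessments
}


def summarise(scores: list[float], standard: float) -> dict:
    """Mean and SD of percentage scores, and whether the mean reaches the standard."""
    m = mean(scores)
    return {"mean": m, "sd": stdev(scores), "standard": standard, "meets": m >= standard}


# Hypothetical CAPS percentages for one assessment.
caps_term1 = [45.0, 52.0, 61.0, 38.0, 70.0]
for name in ("DBE_first_language", "DBE_additional_language"):
    print(name, summarise(caps_term1, STANDARDS[name]))
```

This only compares a mean with a standard; whether a school's mean lies significantly above or below the standard is a separate question, which the one-sample t-tests address.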

Regression analysis was used to analyse the data for the second evaluation question. The outcome measure was learners' performance on the four NGO assessments. The predictor variables and their levels were language teaching experience (number of years), English proficiency (yes/no English first language), self-efficacy (average score on five Likert-type items), usefulness of LTL materials (score on a Likert-type item) and use of reading room (score on a Likert-type item). As we had multiple predictors and a continuous outcome variable, a multiple linear regression was employed. Where our categorical predictors had more than two levels, they were dummy coded for the linear regression model.

To ensure that the assumptions of multiple regression were met, the data were examined for outliers, normality, homoscedasticity, linearity and multicollinearity.

Ethical considerations

The Director of LTL gave written permission to conduct the evaluation of the CRAR programme. The Commerce Ethics in Research Committee of the University of Cape Town granted permission to use secondary data and collect primary data.

Results

The evaluation results are presented according to the two evaluation questions.

Evaluation question 1: Are the Grade 1 learners who participated in the CRAR programme able to read and write according to LTL and DBE standards?

Measures used in this analysis were the quarterly NGO and CAPS assessments. The four assessments in the two sets are independent of each other as they measure different aspects of literacy.

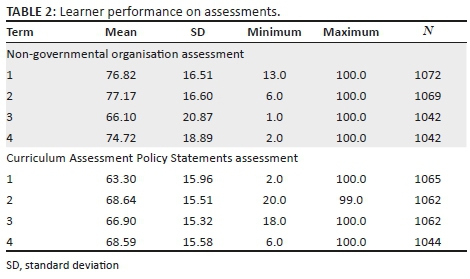

Initially, 1090 learners in 18 schools started on the programme. However, as the year progressed, some attrition occurred. In Table 2, an initial snapshot of quarterly performance on the two sets of assessments is expressed as mean scores. The number of learners who completed the assessments is also indicated.

It is clear from Table 2 that the mean scores on the four different NGO assessments exceeded the 60% LTL standard and the mean scores on the four different CAPS assessments exceeded the 50% English first language DBE standard.

We disaggregated the performance results by school, and Tables 3 and 4 display the mean scores of the NGO and CAPS assessments across the 18 schools for each of the four assessments. These tables also include the results of a one-sample t-test that compared the performance of each school with the specified standards.
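A one-sample t-test of a school's scores against a fixed standard uses t = (x̄ − μ₀)/(s/√n) with n − 1 degrees of freedom, where μ₀ is the standard (60% for the CRAR assessments, 50% or 40% for CAPS). A minimal sketch, with hypothetical scores and an illustrative function name:

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(scores: list[float], standard: float) -> tuple[float, int]:
    """t statistic and degrees of freedom for H0: the school's mean equals the standard."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    standard_error = stdev(scores) / sqrt(n)  # standard error of the mean
    return (mean(scores) - standard) / standard_error, n - 1


# Hypothetical CRAR percentages for one school in one term.
school_scores = [55.0, 72.0, 64.0, 58.0, 81.0, 69.0]
t, df = one_sample_t(school_scores, 60.0)
print(f"t({df}) = {t:.2f}")
```

To obtain a p-value, the statistic is referred to a t distribution with n − 1 degrees of freedom; if SciPy is available, `scipy.stats.ttest_1samp(school_scores, popmean=60.0)` returns both the statistic and a two-sided p-value.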

From Table 3, it is clear that most schools reached the 60% LTL standard in all four terms. The exceptions, however, were school 5 (this level of performance not attained in term 3), school 8 (this level of performance not attained in terms 1 and 3) and school 13 (this level of performance not attained in term 3). These schools scored significantly lower than the 60% LTL standard in these terms.

From Table 4, it is clear that most schools significantly exceeded the DBE standard of 50% for English first language, except for school 8 in term 4 and school 9 in term 1.

We disaggregated the data in Tables 3 and 4 further by examining what proportion of learners in each school attained the set standards for each NGO assessment. We used an arbitrary cut-off point of 50% (at least half of the learners) for this analysis. Instances where fewer than half of the learners attained the 60% standard on at least one of the NGO assessments were identified in six schools (schools 1, 5, 7, 8, 13 and 15). School 8 stands out again in this analysis, with fewer than half of its learners attaining the 60% standard in two terms.
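The proportional analysis amounts to counting, per school, the share of learners at or above the standard and flagging schools where that share falls below the 50% cut-off. A sketch with hypothetical data and illustrative function names:

```python
def share_meeting(scores: list[float], standard: float) -> float:
    """Proportion of learners whose percentage score is at or above the standard."""
    return sum(score >= standard for score in scores) / len(scores)


def flag_schools(scores_by_school: dict[int, list[float]],
                 standard: float = 60.0, cutoff: float = 0.5) -> list[int]:
    """Schools in which fewer than `cutoff` of learners attained `standard`."""
    return sorted(school for school, scores in scores_by_school.items()
                  if share_meeting(scores, standard) < cutoff)


# Hypothetical percentages for three schools on one CRAR assessment.
term_scores = {
    1: [55.0, 58.0, 62.0, 70.0],  # 2 of 4 meet 60%: exactly half, not flagged
    2: [61.0, 65.0, 40.0, 72.0],  # 3 of 4 meet 60%
    3: [50.0, 52.0, 61.0],        # 1 of 3 meets 60%: flagged
}
print(flag_schools(term_scores))  # [3]
```

Running the same function per term and per standard (60% for CRAR, 50% for CAPS) reproduces the structure of the analysis described above.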

The same proportional analyses were performed for performance on the CAPS assessments. Only the English first language standard (50%) was used, as more than 50% of learners attained the English additional language standard (40%) in all schools. Our analysis showed that in all schools except school 1 (in terms 3 and 4), more than 50% of learners attained the English first language standard on all four CAPS assessments. This also holds for school 8, even though its mean score did not significantly exceed the 50% standard in term 4.

In summary, most schools, except schools 5, 8 and 13, attained the LTL standard (60%) in their NGO literacy assessments in all four terms. Furthermore, all 18 schools attained the 40% DBE standard in the CAPS assessments, while all schools except schools 8 and 9 attained the 50% DBE standard in all four terms. In the proportional analyses, more than 50% of learners attained the 60% LTL standard on all four assessments in 12 schools, whereas more than 50% of learners attained the DBE's 50% English first language standard on all four CAPS assessments in 17 schools.

Evaluation question 2: Did the teachers' language teaching experience, English language proficiency, teaching self-efficacy, perceptions of usefulness of the LTL materials and usage of the reading room have any influence on the learners' literacy performance?

Complete data were available for 40 teachers.

To address evaluation question 2, we employed hierarchical multiple regression with performance on each of the four NGO assessments as the dependent variable. In this type of regression, sample size, the presence of outliers and various aspects of the relationships among the independent variables should be considered (Field 2013). Our sample size (N = 40) was smaller than recommended for five independent variables (a ratio of 10 cases per predictor, i.e. 50 cases, is recommended), but was deemed adequate for the analysis as we were expecting a medium effect size. Cook's distance, a technique for identifying influential outliers, showed that none of the 40 cases screened had a value greater than 1.00. With regard to the relationships among the independent variables, tests showed:

1. low correlations among the independent variables (no perfect multicollinearity)

2. a normal distribution of errors

3. linear relationships between independent and dependent variables

4. similar variances for predicted scores (homoscedasticity); see Zuma (2016:52-55) for details of these four tests.
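The outlier and multicollinearity screening described above can be sketched in Python with NumPy. This is an illustration only, since the original analysis was run in SPSS: Cook's distance is computed from the residuals and leverages of an ordinary least squares fit and cases with D > 1 are flagged, while variance inflation factors (VIF) are shown as one common way of checking multicollinearity (the evaluation itself reports correlations among predictors). The data are simulated and the function names are hypothetical.

```python
import numpy as np


def design_matrix(X: np.ndarray) -> np.ndarray:
    """Prepend an intercept column to an (n, k) predictor matrix."""
    X = np.asarray(X, dtype=float)
    return np.column_stack([np.ones(X.shape[0]), X])


def cooks_distance(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Cook's distance for each case of an OLS fit of y on X (with intercept)."""
    Xd = design_matrix(X)
    y = np.asarray(y, dtype=float)
    n, p = Xd.shape  # p counts the intercept
    beta, *_ = np.linalg.lstsq(Xd, y, rcond=None)
    resid = y - Xd @ beta
    mse = resid @ resid / (n - p)
    # Leverages: diagonal of the hat matrix H = X (X'X)^-1 X'.
    h = np.diag(Xd @ np.linalg.pinv(Xd.T @ Xd) @ Xd.T)
    return resid**2 / (p * mse) * h / (1 - h) ** 2


def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF_j = 1 / (1 - R_j^2), regressing predictor j on the other predictors."""
    X = np.asarray(X, dtype=float)
    vifs = []
    for j in range(X.shape[1]):
        others = design_matrix(np.delete(X, j, axis=1))
        target = X[:, j]
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1 - resid @ resid / ((target - target.mean()) ** 2).sum()
        vifs.append(1 / (1 - r2))
    return np.array(vifs)


# Simulated data: 40 teachers, 5 predictors, one class-level outcome each.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 5))
y = X @ np.array([0.5, 0.0, 0.2, 0.0, 0.1]) + rng.normal(size=40)
d = cooks_distance(X, y)
print("cases with Cook's D > 1:", np.flatnonzero(d > 1))
print("VIFs:", np.round(variance_inflation_factors(X), 2))
```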

To determine if the independent variables (i.e. the teacher characteristics) predicted literacy outcomes, four independent multiple regressions, based on the NGO assessment data, were performed. CAPS assessment data were not included in the regression model, as these data were not based on the workbook and measured different aspects than the NGO measures. The outcome variables were performance on each of the four independent NGO assessments, while the five predictor variables were language teaching experience, English proficiency, teacher self-efficacy, perceived usefulness of LTL materials and usage of reading room (Figure 2).

In hierarchical regression, variables are entered into SPSS in blocks, in a predetermined order (Pallant 2013). In the first block, language teaching experience was entered into the model; in the second block, the remaining four predictor variables were added. Table 5 presents the regression models, with the coefficients and their p-values.
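The two-block entry order can be illustrated outside SPSS. The sketch below assumes NumPy and uses simulated data with hypothetical variable names; it fits the predictors cumulatively, block by block, and reports R², the change in R² and the F test for that change, F = (ΔR²/df₁) / ((1 − R²)/df₂). It shows the logic of hierarchical entry only and does not reproduce the values in Table 5.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an OLS fit of y on X (intercept added)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

def hierarchical_regression(blocks, y):
    """Enter predictor blocks cumulatively, in the given order."""
    n = len(y)
    steps, entered = [], []
    prev_r2, prev_k = 0.0, 0
    for block in blocks:
        entered.append(block)
        X = np.column_stack(entered)
        k = X.shape[1]                       # predictors in the model so far
        r2 = r_squared(X, y)
        df1, df2 = k - prev_k, n - k - 1
        f_change = ((r2 - prev_r2) / df1) / ((1.0 - r2) / df2)
        steps.append({"R2": r2, "R2_change": r2 - prev_r2,
                      "F_change": f_change, "df": (df1, df2)})
        prev_r2, prev_k = r2, k
    return steps

# Hypothetical data for 40 teachers' classes
rng = np.random.default_rng(1)
n = 40
experience = rng.uniform(1, 30, size=(n, 1))   # block 1: teaching experience
others = rng.normal(size=(n, 4))               # block 2: the four remaining predictors
scores = 60 + 0.5 * experience[:, 0] + rng.normal(scale=8, size=n)

for i, step in enumerate(hierarchical_regression([experience, others], scores), 1):
    print(f"Model {i}: R2={step['R2']:.3f}, dR2={step['R2_change']:.3f}, "
          f"F_change{step['df']}={step['F_change']:.2f}")
```

Because the models are nested, R² can only stay the same or rise when the second block is added; the F-change test asks whether that rise is larger than chance.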

In Model 1 for the NGO1 assessment, teaching experience explained a significant proportion of the variance in LTL literacy scores (R² = 0.114, F(1, 35) = 4.37, p < 0.05).

In Model 2 for the NGO1 assessment, with all the predictors included, the total variance explained by the model was R² = 0.152, which was not significant (F(6, 35) = 0.868, p > 0.05). When the contribution of each added predictor in Model 2 was considered individually, none was significant: English proficiency, β = 0.006, p = 0.975; teacher self-efficacy, β = 0.205, p = 0.278; usefulness of LTL materials, β = −0.056, p = 0.780; and usage of the reading room, β = 0.020, p = 0.914.

Therefore, Model 1, in which teaching experience was the only predictor, was the better model, and teaching experience contributed significantly to the outcome (β = 0.34, p < 0.05).
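Whether a reported F statistic clears a given significance threshold can be checked from its value and degrees of freedom alone. A minimal, dependency-free sketch follows; it evaluates the upper tail of the F distribution through the regularised incomplete beta function, using the continued-fraction method described in Numerical Recipes. The function names are illustrative; in practice `scipy.stats.f.sf(F, df1, df2)` returns the same quantity.

```python
import math

def _betacf(a, b, x, max_iter=200, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the recurrence
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the recurrence
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h

def reg_incomplete_beta(a, b, x):
    """Regularised incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b

def f_pvalue(f_stat, df1, df2):
    """Upper-tail probability P(F > f_stat) for an F(df1, df2) distribution."""
    if f_stat <= 0:
        return 1.0
    return reg_incomplete_beta(df2 / 2.0, df1 / 2.0, df2 / (df2 + df1 * f_stat))

# The two F statistics reported for the NGO1 assessment
for f, d1, d2 in [(4.37, 1, 35), (0.868, 6, 35)]:
    p = f_pvalue(f, d1, d2)
    print(f"F({d1}, {d2}) = {f}: p = {p:.3f}, below 0.05: {p < 0.05}")
```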

For the NGO2, NGO3 and NGO4 assessments, none of the five predictors contributed significantly in either Model 1 or Model 2.

In conclusion, the hierarchical multiple regression revealed that only teaching experience predicted performance on the NGO1 reading assessment during the first term.

Discussion

From the results reported in this study, it can be concluded that learners who received the CRAR programme in addition to school-based literacy teaching were able to read and write according to LTL and DBE standards at the end of Grade 1. When the data were disaggregated by school, we found that 15 schools attained the LTL standard in all four terms, while 16 schools significantly exceeded the 50% DBE standard in all four terms. Further analysis showed that in 12 schools, more than half of the learners in the class attained the LTL standard on all four assessments, while in 17 schools, more than half of the learners attained the DBE English first language standard on all four assessments.

In an analysis of teacher attributes, teacher experience in literacy teaching was the only variable that significantly predicted learner performance in literacy during the first term.

These results will be discussed in more detail under the relevant evaluation questions below.

Evaluation question 1: Are the Grade 1 learners who participated in the Coronation Reading Adventure Room programme able to read and write according to Living through Learning and Department of Basic Education's standards?

The results of the evaluation will be discussed in terms of learner performance (all learners' performance on each of the two sets of assessments during the full year), school performance (each of the 18 schools on each of the quarterly assessments) and school proportional performance (the number of schools where more than 50% of learners in a class attained the relevant literacy standards).
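The difference between school performance (the class mean against the standard) and proportional performance (the share of learners at or above the standard) can be made concrete with a short sketch. It uses only the Python standard library; the data layout, the school labels and the scores are hypothetical, and it assumes that "attaining" a standard means scoring at or above the cut-off.

```python
from statistics import mean

def school_summary(scores_by_school, standard):
    """Summarise one quarterly assessment per school.

    scores_by_school: {school_id: [learner scores in %]} (hypothetical layout)
    standard: cut-off in %, e.g. 60 (LTL) or 50 (DBE English first language)
    """
    summary = {}
    for school, scores in scores_by_school.items():
        school_mean = mean(scores)
        share_at_standard = sum(s >= standard for s in scores) / len(scores)
        summary[school] = {
            "mean": school_mean,
            "mean_meets_standard": school_mean >= standard,   # school performance
            "share_at_standard": share_at_standard,
            "majority_at_standard": share_at_standard > 0.5,  # proportional performance
        }
    return summary

# Hypothetical scores for two classes on one assessment
scores = {"A": [72, 65, 58, 80], "B": [55, 62, 48, 59, 90]}
for school, s in school_summary(scores, standard=60).items():
    print(school, s)
```

School B shows why the two measures can disagree: its class mean (62.8%) clears the 60% cut-off, yet only two of its five learners do.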

Learner performance

Analysis of the NGO data revealed that learners attained the 60% standard in all the quarterly assessments. However, there was a slight decline in the mean NGO test score at year end (total assessment score for term 4 = 74.72%) compared with the beginning of the year (total assessment score for term 1 = 76.82%). More notably, there was a sharp decline in the mean NGO assessment score in term 3 (total assessment score for term 3 = 66.1%). A comparison of the NGO literacy activities assessed in terms 2 and 3 may explain this decline. According to the programme manager, the term 2 assessment measured the learners' ability to read three-letter words, while the term 3 assessment focused on their ability to read simple sentences and to know words that start with certain blends (fl, cl, bl, sh and th). At this stage, it is unclear whether the step from reading three-letter words to reading simple sentences is too large. What is interesting here is that there was no decline in performance on Assessment 4, taken at year end, which measured a relatively complex operation, namely writing one's own sentences.

The CAPS analysis showed that learners attained the standard of 50% for English first language in all four assessments. The learners' mean scores on the four CAPS assessments improved from year start (63.3%) to year end (68.59%), but mean scores also dipped in term 3 (66.9%). Again, it is difficult to explain this decline. At this stage, we simply do not know whether the literacy operation that is assessed at this time of the school year is inherently difficult, whether the assessment tool is flawed or whether something peculiar happens in schools in this term.

What is of interest here is that the learners who received CRAR support plus school-based literacy teaching could read and write according to LTL and DBE standards at the end of their first school year.

School performance

From the analysis that examined each school's mean performance on each quarterly NGO assessment, it is clear that most schools were able to reach the 60% LTL standard, except for school 5 in term 3, school 8 in terms 1 and 3, and school 13 in term 3. Here we note again that performance on term 3 assessments was problematic in these three schools. School 8, however, also performed poorly at year start and is the only school that performed below the 60% standard in two terms. We can only conclude that other factors may have affected performance in this school. As the NGO data were anonymised, the evaluators could not investigate this further. The programme manager was alerted to the school's poor performance, and we suggested that the NGO staff, who could identify the school, investigate why the learners in this school did not benefit from the programme to the same extent as learners in the other programme schools.

From the analysis that examined each school's mean performance on each quarterly CAPS assessment, it is clear that all schools were able to reach the 50% DBE English first language standard, except for school 8 in term 4. What is of interest here is that this school also performed below the LTL standard of 60%. The programme manager was alerted to the fact that two different measures indicated that literacy levels at this school were not up to standard.

Proportional school performance

When we examined the quarterly assessments and isolated the schools where fewer than 50% of learners in a class attained the set standards, some variation appeared in the NGO scores. The schools with proportions below 50% showed no clear pattern and comprised schools 1, 5, 7, 8, 13 and 15, in term 2, 3 or 4.

For the DBE English first language standard, we found that at least 50% of learners in all schools in all four terms (except school 1 in the third and fourth terms) attained the required standard of literacy.

We suggest that LTL alert literacy teachers to this proportional measure. While teachers usually attend to individual learners who perform poorly, they may not always be aware of class under-performance. Living through Learning itself may want to investigate the possibility of a special intervention for a class where more than half of the children cannot read and write according to set standards.

Strengths and limitations

When we tested the plausibility of the programme theory earlier, we found that the programme activities (i.e. what the learners do on the programme) were consistent with those of literacy programmes that have shown positive results (Krashen 1981; Reynolds et al. 2010; Stahl & Murray 1994; Torgesen 2000). We could thus expect the programme to work. From our analyses and results, we can conclude that the CRAR literacy programme, as a proven and well-designed programme, works. Overall, the findings were positive, but they must be interpreted with extreme caution. We cannot claim that the improvement in literacy can be attributed to the CRAR programme alone, as the programme was embedded in the CAPS literacy curriculum, which could have influenced the results. Additionally, we were not able to compare learners who received the programme with those who did not. A design involving a control group would support stronger causal claims about the benefit of the programme.

Recommendations

For this evaluation, we depended on secondary data collected by means of assessments designed by teachers and programme staff. This constrained the choice of evaluation design, and we suggest that future evaluators use a stronger design that would enable them to make causal inferences about the programme. Ideally, a randomised controlled design should be used, but a relatively strong quasi-experimental pre- and post-test design using treatment groups (schools that receive the programme) and relevant comparison groups (schools that do not receive the programme) would also support causal claims.

Furthermore, we suggest that future evaluators design independent assessments and use these in conjunction with CAPS (teacher) and LTL (programme staff) assessments. We also suggest that future evaluators design an assessment of emergent English literacy which should be administered as a pre-test before the programme starts. Although such a pre-test would be independent of the quarterly English literacy measures, evaluators could examine whether better performance on the pre-test predicts better performance on the post-tests.

This outcome evaluation was the first evaluation of the CRAR programme and focused on programme theory and outcomes. We recommend that future evaluations focus on implementation, outcome and impact. Adding an implementation-level evaluation would allow evaluators to assess programme utilisation by learners (e.g. actual participants, dosage and attrition) and whether learners' demographic variables play any role in their English literacy performance. The number of books in the home and exposure to pre-school and/or Grade R could be added to these variables. We specifically recommend investigating the influence of learners' home language on their performance in the English-medium CRAR programme. Teaching and learning in a language other than the learners' home language is a contentious issue that deserves systematic investigation.

Evaluation question 2: Did the teachers' language teaching experience, English language proficiency, teaching self-efficacy, perceptions of usefulness of the Living through Learning materials and usage of the reading room have any influence on learners' performance?

We tested whether five teacher attributes contributed to learners' literacy acquisition and found that the only attribute that contributed significantly to learner performance was experience in literacy teaching. Furthermore, this attribute played a role only during the first term.

Specific teacher attributes, for instance experience, qualifications and the ability to manage a classroom, may influence the quality of teaching. In turn, quality of teaching is an important factor in attaining literacy outcomes (Conroy et al. 2009; Day & Bamford 1998; Fraser 1998; Pressley et al. 2001). The CRAR programme equipped the teachers with literacy teaching skills and supplied a dedicated classroom and learning materials. The NGO1 assessment measured performance at the very beginning of literacy learning and tested the ability to recognise familiar sounds and write them. In this evaluation, experienced literacy teachers (and, by implication, older teachers) were better able to instil this fundamental literacy skill in learners than less experienced teachers. This finding contradicts research by Armstrong (2015), who found that less experienced (and, by implication, younger) teachers are often more successful in teaching children to read and write than older teachers. Our results showed that teacher experience contributed significantly to learner performance at year start. However, it could be that more experienced teachers were simply better at managing discipline in class and therefore at creating a conducive atmosphere for foundational literacy learning.

Recommendations

We suggested earlier that an implementation level, specifically the sub-level of programme utilisation, should be added to future evaluations. An additional implementation level, namely, programme delivery, would enable future evaluators to assess English literacy teaching competency of the teachers who deliver the programme, before and after the CRAR training. This would provide more objective answers to questions regarding teacher competency. Apart from objective competency measures, we also suggest an implementation quality measure. Even if teachers are competent after the training, it is unclear whether they are implementing the programme as intended in their classrooms and the dedicated reading rooms.

Elbaum et al. (2000) stated that learners learn more effectively when a facilitator is present. Teaching assistants play a major role in the CRAR programme, and we suggest that future evaluators assess whether and how teachers utilise this resource. Furthermore, the competency of these teaching assistants should be assessed before and after training, as should the possible influence of their demographic characteristics on how they are utilised and how effective they are.

Conclusion

In this study, we showed how significant stakeholders set the agenda for an evaluation of an English-medium literacy development programme. We pointed out how this limited the evaluation in terms of independence, design and levels of evaluation. Despite the limitations of the evaluation, there is evidence that programme participants can read and write in English at LTL and DBE standards at the end of Grade 1. This outcome is probably the result of a combination of several interventions (teaching, teaching assistant support, the programme and its stimulating reading room). Should future evaluators have more resources (funds and time), they could develop and use these features of the programme as independent measures and assess the contribution of each to literacy performance. There is a dearth of evaluations of medium-sized English literacy programmes that are presented in the classroom in addition to the usual classroom activities. Evaluations that follow the recommendations we have made here will contribute significantly to knowledge of what works in terms of literacy in the classroom.

Acknowledgements

The authors would like to thank Living through Learning's Sonja Botha and Salma Adams for access to the programme, support during the evaluation and clean programme data.

Competing interests

The authors declare that they have no financial or personal relationships which may have inappropriately influenced them in writing this article.

Authors' contributions

M.Z. completed this evaluation as a dissertation requirement for an MPhil in Programme Evaluation at the University of Cape Town. A.B. and J.L.-P. served as the supervisors of the study. J.L.-P. prepared the first draft of the manuscript for publication, and M.Z. and A.B. provided input for the final draft.

Funding information

The authors would like to acknowledge and thank the funders: M.Z. - UCT Master's Need Scholarship; J.L.-P. - the National Research Foundation's Incentive Fund for Rated Researchers (IFR150126113160).

Data availability statement

Data sharing is not applicable to this article as no new data were created or analysed in this study.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of any affiliated agency of the authors.

References

Armstrong, P., 2015, 'Teacher characteristics and student performance: An analysis using hierarchical linear modelling', South African Journal of Childhood Education 5(2), 123-145. https://doi.org/10.4102/sajce.v5i2.385

Bickman, L., 1987, 'The functions of program theory', New Directions for Program Evaluation 33, 5-18. https://doi.org/10.1002/ev.1443

Conroy, M.A., Sutherland, K.S., Snyder, A., Al-Hendawi, M. & Vo, A., 2009, 'Creating a positive classroom atmosphere: Teachers' use of effective praise and feedback', Beyond Behavior 18(2), 18-26.

Day, R.R. & Bamford, J., 1998, Extensive reading in the second language classroom, Cambridge University Press, New York.

Department of Basic Education, 2013, Report on the Annual National Assessment of 2013, Department of Basic Education, Pretoria.

Elbaum, B., Vaughn, S., Tejero Hughes, M. & Moody, S.W., 2000, 'How effective are one-to-one tutoring programs in reading for elementary students at risk for reading failure? A meta-analysis of the intervention research', Journal of Educational Psychology 92(4), 605-619. https://doi.org/10.1037/0022-0663.92.4.605

Field, A., 2013, Discovering statistics using SPSS, Sage, Thousand Oaks, CA.

Fraser, B.J., 1998, 'Classroom environment instruments: Development, validity, and applications', Learning Environments Research 1(1), 7-33. https://doi.org/10.1023/A:1009932514731

Grigg, D., Joffe, J., Okeyo, A., Schkolne, D., Van der Merwe, N., Zuma, M. et al., 2016, 'The role of monitoring and evaluation in six South African reading programmes', Southern African Linguistics and Applied Language Studies 34, 359-370. https://doi.org/10.2989/16073614.2016.1262270

Howie, S., Van Staden, S., Tshele, M., Dowse, C. & Zimmerman, L., 2011, Progress in International Reading Literacy Study 2011: South African children's reading literacy achievement, viewed 07 March 2016, from http://web.up.ac.za/sitefiles/File/publications/2013/PIRLS_2011_Report_12_Dec.PDF.

Kennedy, M., 2005, Classroom colors, viewed 07 September 2016, from http://go.galegroup.com/ps/i.do?id=GALE%7CA132350598&sid=AONE&v=2.1&u=unict&it=r&p=AONE&sw=w&asid=c975dc152e1ee6565662324a11218b24.

Krashen, S.D., 1981, Principles and practice in second language acquisition, Pergamon, Oxford.

Krashen, S.D., 1989, 'We acquire vocabulary and spelling by reading: Additional evidence for the input hypothesis', The Modern Language Journal 73(4), 440-464. https://doi.org/10.1111/j.1540-4781.1989.tb05325.x

Living Through Learning, n.d., Home, viewed 07 March 2016, from http://livingthroughlearning.org.za.

Macdonald, C.A., 2002, 'Are children still swimming up the waterfall? A look at literacy development in the new curriculum', Language Matters 33, 111-141. https://doi.org/10.1080/10228190208566181

Matjila, D.S. & Pretorius, E.J., 2004, 'Bilingual and biliterate? An exploratory study of Grade 8 reading skills in Setswana and English', Per Linguam 20, 1-21. https://doi.org/10.5785/20-1-77

Midgley, C., Feldlaufer, H. & Eccles, J.S., 1989, 'Change in teacher efficacy and student self-and task-related beliefs in mathematics during the transition to junior high school', Journal of Educational Psychology 81(2), 247-258. https://doi.org/10.1037/0022-0663.81.2.247

Moloi, M. & Chetty, M., 2011, 'Trends in achievement of Grade 6 pupils in South Africa', SACMEQ III, Policy Brief, Pretoria.

Mullis, I.V.S., Martin, M.O., Foy, P. & Hooper, M., 2016, PIRLS 2016 international results in reading, International Association for the Evaluation of Educational Achievement, Chestnut Hill, MA.

Nel, N. & Swanepoel, E., 2010, 'Do the language errors of ESL teachers affect their learners?', Per Linguam 26(1), 47-60. https://doi.org/10.5785/26-1-13

Pallant, J., 2013, SPSS survival manual, Open University Press, Maidenhead.

Pressley, M., Wharton-McDonald, R., Allington, R., Block, C.C., Morrow, L., Tracey, D. & Woo, D., 2001, 'A study of effective first-grade literacy instruction', Scientific Studies of Reading 5(1), 35-58. https://doi.org/10.1207/S1532799XSSR0501_2

Pretorius, E.J., 2002, 'Reading ability and academic performance in South Africa: Are we fiddling while Rome is burning?', Language Matters 33, 169-196. https://doi.org/10.1080/10228190208566183

Pretorius, E.J. & Mampuru, D.M., 2007, 'Playing football without the ball: Language, reading and academic performance in a high-poverty school', Journal of Research in Reading 30(1), 24-37. https://doi.org/10.1111/j.1467-9817.2006.00333.x

Reynolds, M., Wheldall, K. & Madelaine, A., 2010, 'Components of effective early reading interventions for young struggling readers', Australian Journal of Learning Difficulties 15(2), 171-192. https://doi.org/10.1080/19404150903579055

Rossi, P., Lipsey, M.W. & Freeman, H.E., 2004, Evaluation: A systematic approach, 7th edn., Sage, Thousand Oaks, CA.

Salkind, N.J., 2009, Exploring research, Pearson Education, Upper Saddle River, NJ.

Slavin, R.E., Lake, C., Chambers, B., Cheung, A. & Davis, S., 2009, 'Effective reading programs for the elementary grades: A best-evidence synthesis', Review of Educational Research 79(4), 1391-1466. https://doi.org/10.3102/0034654309341374

Spira, E.G., Bracken, S.S. & Fischel, J.E., 2005, 'Predicting improvement after first-grade reading difficulties: The effects of oral language, emergent literacy, and behaviour skills', Developmental Psychology 41(1), 225-234. https://doi.org/10.1037/0012-1649.41.1.225

Stahl, S.A. & Murray, B.A., 1994, 'Defining phonological awareness and its relationship to early reading', Journal of Educational Psychology 86(2), 221-234. https://doi.org/10.1037/0022-0663.86.2.221

Torgesen, J.K., 2000, 'Individual differences in response to early interventions in reading: The lingering problem of treatment resisters', Learning Disabilities Research and Practice 15(1), 55-64. https://doi.org/10.1207/SLDRP1501_6

Van der Berg, S., 2008, 'How effective are poor schools? Poverty and educational outcomes in South Africa', Studies in Educational Evaluation 34(3), 145-154. https://doi.org/10.1016/j.stueduc.2008.07.005

Zuma, M., 2016, 'An outcome evaluation of Living through Learning's Coronation Reading Adventure Room Programme', MPhil dissertation, University of Cape Town.

Correspondence:

Joha Louw-Potgieter

Joha.Louw-Potgieter@uct.ac.za

Received: 13 July 2018

Accepted: 27 Aug. 2019

Published: 07 Nov. 2019