Yesterday and Today

On-line version ISSN 2309-9003

Print version ISSN 2223-0386

Y&T n.25 Vanderbijlpark 2021

http://dx.doi.org/10.17159/2223-0386/2021/n25a2

ARTICLES

A Comparative Analysis of the Zambian Senior Secondary History Examination between the Old and Revised Curriculum using Bloom's Taxonomy

Y Kabombwe; N Machila; P Sikayomya

University of Zambia, Lusaka, Zambia, yvonnekmalambo@gmail.com; nisbertmachila@gmail.com; sikayaomya@gmail.com; ORCID: Yvonne Kabombwe - 0000-002-1844-7797; Nisbert Machila - 0000-0001-5552-7510; Patrick Sikayomya - 0000-0001-7522-7486

ABSTRACT

The 2013 education reform in Zambia is one of the significant changes that brought about a shift in assessment. To understand the changes introduced by the 2013 revised curriculum, and to test the Ministry of General Education's claim that the revised curriculum is based on higher order thinking, this study evaluated the Examination Council of Zambia's Grade 12 History examination past papers. Qualitative content analysis and document study were used as research methods. A descriptive content analysis style was used to describe precisely how often the coding categories occurred. Content analysis was used to make replicable and valid inferences by interpreting and coding textual material in the Grade 12 examination questions. The sample comprised 10 History examination papers from the new and old curricula, selected purposively. The findings suggest that the analysed examination papers lacked questions targeting the higher-level cognitive skills contained in Bloom's taxonomy. It is recommended that examiners follow the guidelines for setting outcome-based assessments so that the intended learning goals for learners can be achieved.

Keywords: Bloom's Taxonomy; History; exam questions; Assessment; Outcome-Based Assessment; Outcome-Based Curriculum/Education.

Background and Introduction

Educational taxonomies describing the cognitive domain are used to establish educational goals and "are especially useful for establishing objectives and developing test items" (McDonald, 2002:34). Bloom's taxonomy of cognitive processes classifies learning into six levels that can be measured through assessment (Bloom, 1956): knowledge, comprehension, application, analysis, synthesis, and evaluation. The use of taxonomies allows teaching strategies and assessment to be aligned. Imrie (1995) notes that it is customary to use a systematic taxonomy in teaching and assessment, and argues that without such a system, outcomes may not be evident or verifiable and there could be a mismatch between the stated (intended) outcomes and the actual behaviour of learners. Biggs and Tang (2007) have argued that curriculum alignment is crucial for the quality of teaching. Thus, outcomes should be clear and measurable if quality education is to be provided to learners. Amua-Sekyi (2016) contended that education is largely controlled by assessment and that concerns about the quality of education relate to teaching and learning, the nature of assessment, and particularly summative assessment.

Hall and Sheehy (2010:4) argued that "assessment means different things in different contexts." For these two scholars, assessment involves the knowledge, attitudes, values, beliefs, and sometimes prejudices of teachers and learners. Assessment has been categorised as formative or summative depending on how the results are used (Dunn & Mulvenon, 2009). Formative assessment is embedded in the teaching and learning process and gives the teacher feedback during teaching, enabling him or her to judge how well students are learning. Summative assessment takes place at the end of a course or program to determine the level of students' achievement or how well a program has performed. It often takes the form of external examinations or tests.

The Senior Secondary School History examination papers fall under the category of summative assessment, which typically occurs at the end of an educational activity. Summative assessment takes the form of tests, marks, academic reports, and qualifications that are socially highly valued (Biggs, 2003; Awoniyi & Fletcher, 2014). An exam paper is a traditional form of assessment and a common choice for teachers evaluating learners' degree of success in a particular lesson, with students' cognitive ability determined through their exam scores (Koksal & Ulum, 2018). Senior Secondary School History examinations are therefore very important, since they count towards the final results that learners need in order to progress to tertiary level.

The current Zambian Senior Secondary School History curriculum is outcomes-based, in contrast to the previous curriculum, which was content-based. A content-based curriculum focuses on rote memorisation of factual knowledge. Wangeleja (2010:10) argued that "a Knowledge-Based Curriculum (KBC) focuses on the grasp of knowledge and thus the curriculum is content-driven." An outcomes-based curriculum, on the other hand, like other outcomes-based models across the world, is anchored on learners' outcomes. The Zambian outcome-based curriculum focuses on learners acquiring the higher levels of Bloom's taxonomy. To enable learners to make appropriate use of knowledge, concepts, skills, and principles in solving various problems in daily life, the higher levels of Bloom's taxonomy should be applied in teaching and assessment (MoGE, 2013). The emphasis is therefore on the four higher levels of Bloom's taxonomy, that is, application, analysis, synthesis, and evaluation, and teachers are required to take a creative or innovative approach when teaching (MoGE, 2013; Kabombwe, 2019). It is important that teachers are aware of the level of Bloom's taxonomy they should be assessing, so that they achieve their intended goals. After completing senior secondary school, learners of History are expected to have acquired competences such as historical knowledge, historical concepts, and historical skills (Kabombwe, Machila & Sikayomya, 2020; Kabombwe, 2019; Mazabow, 2005).

Research on the implementation of outcomes-based curricula across the world indicates that the nature of assessment was problematic and not very practical (Brindley, 2005; Jansen, 1999; Donnelly, 2007; Kabombwe, 2019). For instance, in Australia and the United States of America, educators found outcome-based assessments very time-consuming (Killen, 2005; Donnelly, 2007). In South Africa, Jansen (1999) argued that the pressure of examinations compromised the logic of outcome-based approaches. In Tanzania and Zambia, the literature shows that teachers were still using traditional methods of assessment instead of outcomes-based assessment methods (Komba & Mwandanji, 2015; Makunja, 2016; Kabombwe, 2019).

Harris and Ormond (2019) noted that curriculum reforms emphasising transferable competences and generic skills are based on the belief that a knowledge economy requires citizens who are adaptive and able to use knowledge derived from diverse sources effectively to innovate, progress, and enrich society. Historical knowledge has been differentiated into two main forms: substantive and disciplinary knowledge. Substantive knowledge refers to knowledge of events, ideas, and people, while disciplinary knowledge includes procedural and conceptual dimensions. Procedural thinking involves the processes required to work effectively with evidence, develop interpretations, and construct arguments (Bertram, 2008; Harris & Ormond, 2019).

Historical knowledge has not been spared from the debate over which form of knowledge is more valuable. For instance, Cain and Chapman (2014), Young and Muller (2010), and Chisholm (1999) have criticised the knowledge model because learners are expected to comply with and accept preordained bundles of knowledge as valuable and uncontested, and are not expected to examine or understand the knowledge presented to them. On the other hand, McPhail and Rata (2016) and Young and Muller (2010) also critique the emphasis on competences and skills. For them, this type of curriculum risks providing isolated, random areas of content within a sea of competences, in which young people are not taught to distinguish between different types of knowledge and forms of thinking but instead regard knowledge as information. Similarly, Counsell (2003) contended that genericism is redundant, as it adds nothing to strong disciplinary practice in fostering thinking, reflection, criticality, and motivation. What constitutes historical knowledge is thus highly contested among History educators around the world.

Research on assessment in History under the outcomes-based approach in Africa reveals that educators were not familiar with how to assess learners, and that interventions would be needed for learners to be assessed in a meaningful way (Kabombwe & Mulenga, 2019; Machila, Sompa, Muleya, & Pitsoe, 2018; Alabi, 2017; Warnich, Meyer & Van Eeden, 2014; Bertram, 2007; Au, 2009). Bertram (2008) argued that there were signs that learners were not being assessed on this historical knowledge and these skills. They appeared to have been replaced by generic skills and comprehension, so that learners could not develop higher order skills. A candidate could pass the examination without attempting the optional source-based question, which developed higher order skills (Kudakwashe, Jeriphanos & Tasara, 2012). In addition, Oppong (2019) found that learners in Ghana could not apply sourcing, contextualisation, and corroboration appropriately in History. It is therefore important to continue analysing History assessment tasks to establish whether this trend persists.

Statement of the problem

Studies in Zambia indicate that most Zambian examinations assessed low order skills and that educators did not know whether or not they used Bloom's taxonomy when preparing examinations (Sichone, Chigunta, Kalungia, Nankonde & Banda, 2020; Banda, Phiri & Nyirenda, 2020; Ngungu, 2016). Killen (2005) explained that thinking about broad groupings of outcomes helps educators to see that different types of learning require different approaches to teaching and assessment. Since the Zambian education system focuses on developing the higher order skills described in Bloom's taxonomy, and teaching, learning, and assessment should be aligned, assessment should be directed at learners' ability to apply, analyse, synthesise, and evaluate. Olson (2003) argued that when an examination question is used to measure the achievement of curriculum standards, it is essential to evaluate and document both the relevance of the examination to the standards and the extent to which it represents those standards. It is on this basis that this study sought to analyse the levels of Bloom's taxonomy in the Zambian Senior Secondary School History examination papers.

Purpose of the Study

The main aim of the study was to investigate the extent to which Bloom's taxonomy was applied in the preparation of Senior Secondary History Examinations in Zambia. The study was guided by the following research questions:

1. What level of Bloom's taxonomy was applied in the preparation of Senior Secondary School History examination papers under the knowledge-based curriculum?

2. What level of Bloom's taxonomy was applied in the preparation of Senior Secondary School History examination papers under the outcome-based curriculum?

3. What was the difference between the papers prepared under the knowledge-based curriculum and those prepared under the outcomes-based curriculum in terms of the application of Bloom's taxonomy?

Methodology

Qualitative content analysis was used as the research method. Specifically, the study employed summative content analysis, which is one approach to qualitative content analysis. The content of the examination questions was interpreted through the systematic classification process of coding and identifying themes or patterns (Hsieh & Shannon, 2005). This qualitative content analysis went beyond merely counting words to examining content closely, in order to classify large amounts of text into an efficient number of categories that represent similar meanings (Weber, 1990). A coding scheme for classifying and evaluating the examination questions using the revised Bloom's taxonomy was developed. The research used a descriptive content analysis style that records precisely how often each coding category occurs.

The results are based on data collected from Grade 12 Secondary School History past papers. The target past papers for this content analysis were five (5) papers from before 2013 and five (5) papers from 2014 onwards, that is, five (5) from the previous curriculum and five (5) from the new curriculum. Various themes emerged from the data and were aligned with the research questions according to the cognitive levels of Bloom's taxonomy. In this regard, following Motlhabane (2017), the analysis used the hierarchy of thinking skills proposed in Bloom's taxonomy.

The papers were coded according to the six (6) levels of Bloom's taxonomy: knowledge, comprehension, application, analysis, synthesis, and evaluation (Bloom, 1956). The knowledge level involves the recall of data (Bloom, 1956); Scott (2003) refers to it as 'rote learning' or 'memorization'. It is the lowest, or beginning, level of the hierarchy, at which students remember or memorise facts or recall knowledge they have learnt before. Bloom describes the comprehension level as grasping the meaning of information (Bloom, 1956). The abilities to interpret, translate, extrapolate, classify, and explain belong to this level (Thompson, Luxton-Reilly, Whalley, Hu & Robbins, 2008). The application level involves applying a concept to a particular scenario (Starr, Manaris & Stalvey, 2008). The analysis level requires students to break information down into simpler parts and analyse each part. This may involve drawing relationships, making assumptions, and distinguishing or classifying the parts. At the synthesis level, the student should be able to integrate and combine ideas or concepts by rearranging components into a new whole (a product, plan, pattern, or proposal) (Bloom, 1956). Finally, the evaluation level involves making judgements or criticisms and supporting or defending one's own position.

Coding, a process of organising and sorting data, was used. The codes served to label, compile, and organise the data. Initial coding and marginal remarks were made on hard copies of the Grade 12 examination papers. Content analysis was used to make replicable and valid inferences by interpreting and coding the textual material of the Grade 12 examination questions. The most important aspects of the examination questions were identified and presented clearly, which helped to guide the coding and analysis. Themes and patterns were identified to describe the situation, and the cognitive levels of Bloom's taxonomy were used to categorise the examination questions.

Examples of coding

Questions that required recall of historical facts, observations, or definitions were coded as knowledge-based, while questions that required the organisation of facts, signalled by command words such as 'describe', 'explain', 'compare' or 'contrast', and 'state', were coded as Comprehension of knowledge. Questions that encouraged learners to apply information taught or learnt to solve a problem were coded as Application of historical knowledge. Questions that required learners to establish underlying reasons, such as causes and effects, were coded as Analysis of historical knowledge. Questions drawing on understanding of the subject matter and relationships, such as those asking learners to 'write' or 'develop', were coded as Synthesis of historical knowledge, while questions that required judgement or a choice between options were coded as Evaluation of historical facts. Some Examination Council of Zambia questions from History Papers 1 and 2 are given below.
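The coding rules above pair command words with Bloom levels. As a minimal sketch of how such rules could be made explicit and repeatable, the Python below assigns a question the highest level whose cue word it contains. The cue-word lists, the names `Bloom`, `CUES`, and `code_question`, and the "highest matching level wins" rule are illustrative assumptions, not the study's codebook; the questions in this study were coded by hand, with judgement about what each task actually demanded.

```python
import re
from enum import IntEnum
from typing import Optional


class Bloom(IntEnum):
    """Bloom's (1956) six cognitive levels, ordered from lowest to highest."""
    KNOWLEDGE = 1
    COMPREHENSION = 2
    APPLICATION = 3
    ANALYSIS = 4
    SYNTHESIS = 5
    EVALUATION = 6


# Illustrative cue words per level (an assumption, not the study's codebook).
CUES = {
    Bloom.KNOWLEDGE: {"name", "identify", "define", "list"},
    Bloom.COMPREHENSION: {"describe", "explain", "compare", "contrast"},
    Bloom.APPLICATION: {"apply", "solve"},
    Bloom.ANALYSIS: {"distinguish", "causes", "effects", "analyse"},
    Bloom.SYNTHESIS: {"write", "develop", "design", "propose"},
    Bloom.EVALUATION: {"choose", "judge", "assess", "evaluate"},
}


def code_question(text: str) -> Optional[Bloom]:
    """Return the highest Bloom level whose cue word appears in `text`.

    Returns None when no cue word matches, which flags the question
    for manual coding.
    """
    words = set(re.findall(r"[a-z]+", text.lower()))
    matched = [level for level, cues in CUES.items() if words & cues]
    return max(matched) if matched else None
```

Applied to the wording of Examples D and E that follow, this sketch returns analysis and evaluation respectively, matching the codes given in the study. A question with no cue word, such as the first part of Example C, returns `None`, which illustrates why keyword rules can only support, not replace, manual coding.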

Example A- In Image 1, question (i), where learners were required to 'name', was coded as knowledge-based, because learners appeared to need only basic information about the region (Rhineland). Questions (iii), (iv), (v), and (vi) were also coded as knowledge, since learners appeared to be required to recall. These tasks required learners to give one word, or to state or identify. Questions of this type centre on testing memorisation.

Example B- In Image 1, question (iii) was coded as application of historical knowledge, since learners were required to employ some factual knowledge.


Example C- "What were the terms of the treaty signed by Mwenemutapa Negoma in 1573? How did it affect the history of Mwenemutapa Kingdom?" The first part of this task was coded as historical knowledge and the second part as comprehension, because to answer it learners need to provide information that they have acquired, accumulated, and understood.

Example D- "Distinguish between primary and secondary resistance. Discuss specific incidents of primary resistance in Zambia and Malawi." This question was coded as analysis of historical facts, since it required learners to establish reasons and to think critically.

Example E- "Choose the mission and explain its work paying particular attention to the benefits of its work to the Africans." This question was coded as evaluation, since learners were required to choose among alternatives and make judgements, with various possible answers.

Findings

The aim of this research was to establish the levels of Bloom's taxonomy, in terms of lower and higher order thinking skills, used by the Examination Council of Zambia in Grade 12 History final examination questions. The analysis produced the following results. The 2001 examination consisted of two papers: Paper 1 had 20 questions and Paper 2 had 18. In Paper 1, the number of questions at each level was: five (5); 25% testing knowledge, seven (7); 35% testing comprehension, one (1); 5% testing application, four (4); 19% testing analysis, zero (0) testing synthesis, and two (2); 10% testing evaluation. Of the 18 questions in the 2001 History examination Paper 2, seven (7) were based on knowledge and six (6) tested comprehension. No question in the paper tested application, while two (2); 11% focused on analysis, one (1); 6% on synthesis, and two (2); 11% on evaluation. Table 1 summarises the levels of cognition in the 2001 Grade 12 History examination papers.
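The percentages reported in this section come from dividing each level's count by the number of questions in the paper. A minimal sketch of that arithmetic follows; it assumes whole-number percentages rounded half up (the article does not state its rounding rule) and also checks that the per-level counts add up to the paper's total. The names `level_shares` and `percent_half_up` are hypothetical.

```python
from typing import Dict, Sequence

LEVELS = ("knowledge", "comprehension", "application",
          "analysis", "synthesis", "evaluation")


def percent_half_up(count: int, total: int) -> int:
    """Whole-number percentage of `total`, rounding halves up (integer maths avoids float error)."""
    return (200 * count + total) // (2 * total)


def level_shares(counts: Sequence[int], n_questions: int) -> Dict[str, int]:
    """Map each Bloom level to its percentage of the questions in one paper.

    `counts` lists the number of questions per level in the order of LEVELS.
    Raises ValueError if the counts do not add up to `n_questions`.
    """
    if len(counts) != len(LEVELS):
        raise ValueError("expected one count per Bloom level")
    if sum(counts) != n_questions:
        raise ValueError(f"counts sum to {sum(counts)}, expected {n_questions}")
    return {level: percent_half_up(c, n_questions) for level, c in zip(LEVELS, counts)}


# 2001 Paper 2 counts as reported in the text (18 questions).
print(level_shares([7, 6, 0, 2, 1, 2], 18))
```

For the 2001 Paper 2 counts this gives 39%, 33%, 0%, 11%, 6%, and 11%, in line with the figures reported for analysis, synthesis, and evaluation. The total check is the main design choice: run on the Paper 1 counts as reported (5, 7, 1, 4, 0, 2), it would raise an error, because they sum to 19 rather than 20.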

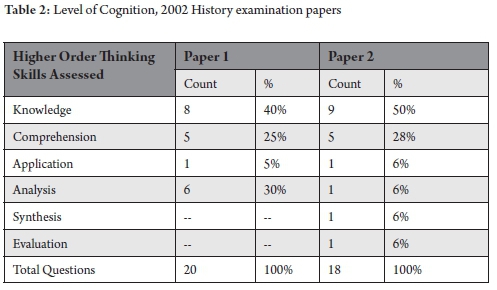

The 2002 examination comprised two papers, with Paper 1 containing 20 questions and Paper 2 containing 18. In Paper 1, the distribution of questions across the levels of Bloom's taxonomy was: knowledge, eight (8); 40%, comprehension, five (5); 25%, application, one (1); 5%, analysis, six (6); 30%, and zero (0) for both synthesis and evaluation. In contrast, of the 18 questions in the 2002 Grade 12 History Paper 2, nine (9), or 50% of the total, were set to test knowledge skills. Of the remaining questions, five (5); 28% tested comprehension, while one (1) question each, that is, 6% each, tested application, analysis, synthesis, and evaluation. Table 2 summarises the levels of cognition in the 2002 Grade 12 History examination papers.

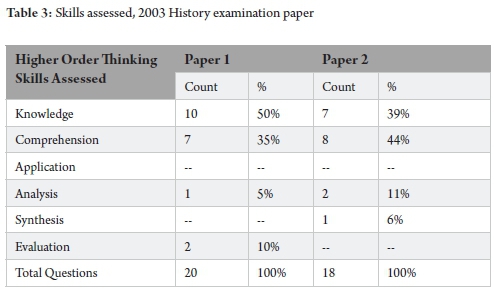

The Examination Council of Zambia 2003 Grade 12 History final examination comprised two papers, with Paper 1 containing 20 questions and Paper 2 containing 18. The analysis shows that most of the questions in the two papers targeted two levels, namely knowledge and comprehension. For example, in Paper 1, 10 questions (50% of the total) tested knowledge, while seven (7) tested comprehension skills. No questions tested application or synthesis skills, while one (1); 5% of the questions was on analysis and two (2); 10% tested evaluation skills.

Of the 18 questions in Paper 2 of the 2003 Grade 12 History examination, seven (7); 39% tested knowledge, eight (8); 44% tested comprehension, and two (2); 11% tested analysis skills. One (1); 6% of the questions targeted synthesis skills, and no questions tested application or evaluation skills. The skills assessed in the 2003 Grade 12 History examination are summarised in Table 3.

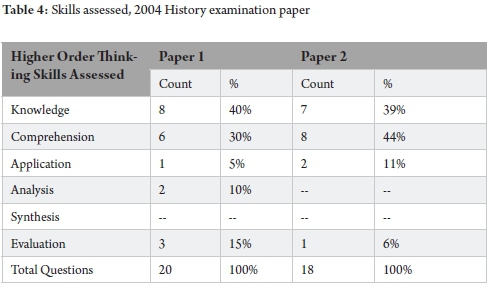

Unlike the examinations from previous years, the 2004 Grade 12 History examination had 20 questions in both Paper 1 and Paper 2. As in the 2001, 2002, and 2003 examinations, many of the questions in the 2004 History examination tested knowledge and comprehension skills. For instance, the analysis of Paper 1 showed that eight (8); 40% of the questions tested knowledge skills, six (6); 30% tested comprehension skills, and one (1); 5% tested application skills. Analysis skills were represented by two (2); 10% of the questions, no questions assessed synthesis skills, and three (3); 15% of the questions tested evaluation skills.

In the same vein, the analysis of the 20 questions in the 2004 Grade 12 History final examination Paper 2 shows that they were distributed across the levels as follows: knowledge, eight (8); 40%, comprehension, six (6); 30%, application, one (1); 5%, analysis, two (2); 10%, synthesis, none, and evaluation, three (3); 15%. Table 4 presents the skills assessed in the 2004 Grade 12 History examination.

In 2005, the Examination Council of Zambia Grade 12 History final examination comprised Paper 1 with 20 questions and Paper 2 with 18 questions. Like the previous examinations, it contained a majority of questions testing knowledge and comprehension skills. The analysis of Paper 1 shows that half of the questions, 10; 50%, targeted knowledge skills, three (3); 15% tested comprehension skills, and one (1); 5% tested application skills. Additionally, five (5); 25% tested analysis skills. Surprisingly, no question tested synthesis skills, while one (1); 5% of the questions tested evaluation skills.

Of the 18 questions in the 2005 History final examination Paper 2, six (6); 33% probed knowledge skills, seven (7); 39% tested comprehension skills, and one (1); 6% each tested application, analysis, and synthesis skills. In addition, two (2); 11% of the questions tested evaluation skills. Table 5 summarises the results in this section.

The results of the analysis of Grade 12 History examination papers under the old syllabus for the five (5) years from 2001 to 2005 are presented in image 2. The results show variations across the six (6) levels of the revised Bloom's taxonomy.

1.1 Construction of Secondary School Grade 12 History Examination Content in the Revised Curriculum

Since 2014, when the new curriculum was introduced in Zambian secondary schools, the Grade 12 examination has consisted of two (2) papers. Paper 1, African History, lasts 1½ hours and consists of two (2) sections: Section A has ten (10) essay questions and Section B also has ten (10) questions. Candidates are expected to answer three (3) questions, attempting not more than two (2) from any one section. Each question carries twenty (20) marks.

Paper 2, World History, also lasts 1½ hours and likewise consists of two (2) sections: Section A has ten (10) essay questions and Section B also has ten (10) questions. Candidates are expected to answer three (3) questions, attempting not more than two (2) from any one section. Each question carries twenty (20) marks.
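The answering rules for each paper can be stated precisely as a check on a candidate's selection. The sketch below is hypothetical: the function name and the (section, question number) representation are assumptions for illustration. It encodes only the constraints described above: three questions, no more than two from any one section, and twenty marks per question, which gives a maximum of 60 marks per paper.

```python
from collections import Counter
from typing import Iterable, Tuple

QUESTIONS_TO_ANSWER = 3   # candidates answer three questions
MAX_PER_SECTION = 2       # no more than two from any one section
MARKS_PER_QUESTION = 20   # each question carries twenty marks
MAX_MARKS = QUESTIONS_TO_ANSWER * MARKS_PER_QUESTION  # 60 marks per paper


def is_valid_selection(choices: Iterable[Tuple[str, int]]) -> bool:
    """Check a candidate's choices, given as hypothetical (section, question number) pairs.

    Valid when exactly three distinct questions are chosen and no section
    contributes more than two of them.
    """
    picked = set(choices)
    if len(picked) != QUESTIONS_TO_ANSWER:
        return False
    per_section = Counter(section for section, _ in picked)
    return max(per_section.values()) <= MAX_PER_SECTION


print(is_valid_selection([("A", 1), ("A", 4), ("B", 2)]))  # True
print(is_valid_selection([("A", 1), ("A", 2), ("A", 3)]))  # False: three from Section A
```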

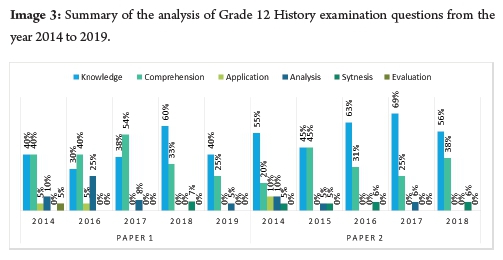

The evaluation of Grade 12 History final examination papers from 2014 to 2019 was based on Bloom's taxonomy, guided by the higher order skills set out in the objectives of this inquiry. The Grade 12 History Examinations of 2014, 2016, 2017, 2018, and 2019 followed a similar pattern, with most questions focused on knowledge and comprehension skills.

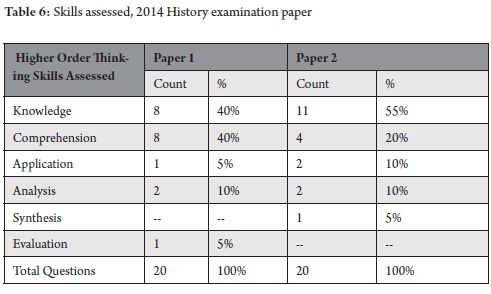

For instance, of the 20 questions set in Paper 1 of the 2014 Grade 12 History Examination, eight (8); 40% tested knowledge skills, four (4); 20% tested comprehension skills, two (2); 10% tested application skills, and two (2); 10% tested analysis skills. No question tested synthesis skills, and one (1); 5% of the questions tested evaluation skills. Table 6 presents the skills assessed in the 2014 Grade 12 History examination paper.

Paper 2 of the 2014 Grade 12 History Examination, however, showed a different pattern in the cognitive levels emphasised by the Examination Council of Zambia. The results were as follows: knowledge skills recorded eleven (11); 55%, comprehension skills eight (8); 40%, application skills one (1); 5%, and analysis skills five (5); 25%. Furthermore, one (1); 10% question tested synthesis skills, and none of the questions tested evaluation skills.

The Examination Council of Zambia Grade 12 History examination of 2016 comprised two papers, each with 20 questions. The analysis of Paper 1 gave the following results: knowledge skills recorded six (6); 30%, comprehension skills eight (8); 40%, application skills one (1); 5%, and analysis skills two (2); 10%. No questions tested synthesis or evaluation skills.

The 2016 Grade 12 History Examination Paper 2 produced the following results: knowledge, nine (9); 45%, and comprehension, also nine (9); 45% of the questions, while application and evaluation skills were not represented by any questions in the paper. Synthesis and analysis skills each recorded one (1); 5%. Table 7 summarises the skills assessed in the 2016 Grade 12 History examination paper.

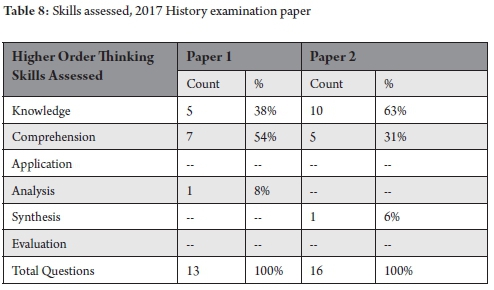

The 2017 Grade 12 History Examination also consisted of two papers, although Paper 1 had 14 questions and Paper 2 had 16 questions. After analysing whether the questions conformed to the higher order skills, the results for Paper 1 emerged as follows: knowledge, five (5); 36%, comprehension, application (1) 13%; seven (7); 50% of the questions, while application, synthesis and evaluation skills were not represented by any questions in the paper.

Paper 2 of the 2017 Grade 12 History Examination, like previous History examination papers, focused strongly on knowledge and comprehension skills. The analysis of Paper 2 showed that knowledge accounted for the majority of the questions, ten (10); 63%, followed by comprehension, five (5); 31%, while synthesis accounted for one (1); 6%. Application, analysis, and evaluation skills were not represented in the paper. Table 8 summarises the skills assessed in the 2017 Grade 12 History examination paper.

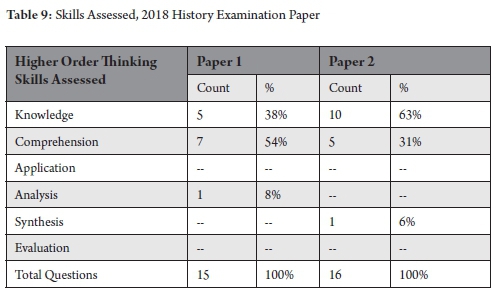

In 2018, the Grade 12 History Examination also comprised two papers: Paper 1 consisted of 15 questions and Paper 2 of 16 questions. Paper 1 covered African History and had a duration of 1½ hours. It comprised two (2) sections: Section A had ten (10) essay questions and Section B also had ten (10) questions. Candidates were expected to answer three (3) questions, attempting not more than two (2) from any one section. Each question carried twenty (20) marks.

The analysis of the 2018 History examination Paper 1 gave the following results: nine (9); 60% of the questions represented knowledge skills, five (5); 33% tested comprehension skills, and one (1); 7% tested analysis skills. No question focused on application or evaluation skills. The analysis of Paper 2, on the other hand, showed that the majority of the questions, 11; 69%, were based on knowledge, while four (4); 25% covered comprehension skills. One (1) question; (6%) tested synthesis, while application, analysis, and evaluation skills were not included in the paper. Table 9 summarises the skills assessed in the 2018 Grade 12 History Examination Paper.

To probe the validity of the Ministry of General Education's claim that the current secondary school curriculum applies higher order skills in the formulation of History final examination questions, this study went on to analyse the 2019 examination questions. The 2019 Grade 12 History Examination comprised two papers: Paper 1 had 14 questions and Paper 2 had 16. To keep the analysis reliable, the six cognitive levels proposed by Bloom were applied consistently.

Of the 14 questions in Paper 1 of the 2019 History Examination, eight (8); 57% tested knowledge skills, while five (5); 36% tested comprehension skills. No questions tested application, synthesis, or evaluation, whereas one (1) question; (7%) tested analysis skills.

Paper 2 of the 2019 Grade 12 History Examination revealed a pattern similar to that of previous years, with most questions focused on knowledge skills. Nine (9); 56% of the questions tested knowledge, while six (6); 38% were comprehension-based. One (1) question; (6%) tested synthesis, and application, analysis, and evaluation were not represented in the paper. Table 10 summarises the results in this section.

In summary, the analysis found a consistent pattern in the setting of the Examination Council of Zambia Grade 12 History final examination questions across all the years analysed in this study. Most of the questions were based on knowledge and comprehension, and many scholars consider knowledge the most basic level of the taxonomy. Within any subject area, a learner can possess mere knowledge and may demonstrate the ability to recall it in an assessment. Such learners may nevertheless not understand the meaning of this knowledge. Furthermore, they may not be able to apply it in situations other than the one in which it was learnt, or to combine it with additional knowledge to create new insights. Image 3 summarises the analysis of examination questions for the five years after the introduction of the 2014 revised curriculum.
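The contrast between the old and revised curricula can be summarised as the share of questions coded at the higher-order levels. The sketch below treats application, analysis, synthesis, and evaluation as higher order, following the curriculum's emphasis described earlier (MoGE, 2013), and uses the 2003 and 2019 per-level counts reported above; the function name `higher_order_share` is hypothetical, and the comparison is an illustration of the arithmetic rather than an additional finding.

```python
from typing import Dict, List

# Levels treated as higher order, following the curriculum's stated emphasis
# on application, analysis, synthesis and evaluation (MoGE, 2013).
HIGHER_ORDER = {"application", "analysis", "synthesis", "evaluation"}


def higher_order_share(papers: List[Dict[str, int]]) -> float:
    """Fraction of all questions across `papers` coded at a higher-order level."""
    total = sum(sum(paper.values()) for paper in papers)
    higher = sum(n for paper in papers
                 for level, n in paper.items() if level in HIGHER_ORDER)
    return higher / total


# Per-level counts as reported in the text for Paper 1 and Paper 2.
exam_2003 = [
    {"knowledge": 10, "comprehension": 7, "application": 0,
     "analysis": 1, "synthesis": 0, "evaluation": 2},
    {"knowledge": 7, "comprehension": 8, "application": 0,
     "analysis": 2, "synthesis": 1, "evaluation": 0},
]
exam_2019 = [
    {"knowledge": 8, "comprehension": 5, "application": 0,
     "analysis": 1, "synthesis": 0, "evaluation": 0},
    {"knowledge": 9, "comprehension": 6, "application": 0,
     "analysis": 0, "synthesis": 1, "evaluation": 0},
]

for year, papers in (("2003", exam_2003), ("2019", exam_2019)):
    print(year, f"{higher_order_share(papers):.1%}")
```

On these reported counts, 6 of the 38 questions in 2003 (about 15.8%) and 2 of the 30 questions in 2019 (about 6.7%) were coded at a higher-order level.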

Discussion of Findings

Bloom's taxonomy was used because it provides a sound benchmark for assessing learning and teaching activities across the levels of the cognitive domain: remembering, understanding, applying, analysing, evaluating and creating. When assessment is aligned with the standards (Herman & Webb, 2007), an analysis such as this can provide sound information about how well learners are doing and how well schools and teachers are helping students to attain those standards.

The results of this study revealed that the examinations focused on questions measuring the lower levels of Bloom's taxonomy, that is, knowledge, comprehension and application, more than the other cognitive levels. Such questions are not bad in themselves, but using them all the time is not good practice; it is preferable to include higher order questions, as these require much more "brain power" and more extensive and elaborate answers (Jones, Harland, Reid & Bartlett, 2009). These findings are consistent with Azar (2005) and Cepni (2003), who also found that most questions in their studies were at the application level. The implication is that the Senior History Examinations under both the previous and the revised curriculum did not focus much on the higher order skills of Bloom's taxonomy.

The findings of this study indicate that History learners were not assessed on the skills that an outcome-based curriculum requires. This is consistent with the findings of Oppong (2019), Kudakwashe, Jeriphanos and Tasara (2012), Warnich et al. (2014), Bertram (2007) and Jansen (2009), who argued that learners were not assessed in the skills required by an outcome-based curriculum. Educationists therefore need to be clear about the outcomes they want learners to achieve and to focus on the relevant skills in both teaching and assessment.

There was not much difference between the levels of cognition measured under the old and the new curriculum; the lower levels of Bloom's taxonomy were still the ones assessed. This means that the questions in these final examinations were mostly aimed at eliciting the knowledge students had accumulated before the examination. Concentrating on the lower levels is a real problem because it motivates learners merely to remember. The results of this study show that higher cognitive questions, which are interpretive, evaluative, inquiry, inferential and synthesis questions (Cotton, 1988), were rarely used. Questions at the lower levels are suitable only for evaluating students' preparation and comprehension, diagnosing students' strengths and weaknesses, and revising or summarising content. Hence, educators in Zambia need to prepare examination questions that focus on higher cognitive levels so that the outcome-based curriculum can be implemented effectively.

Conclusion

In conclusion, it could be argued that Zambian Secondary School History Examination papers still focus on lower order thinking skills instead of the higher order skills prescribed in the outcomes-based curriculum. The danger of dwelling on lower order skills is that History learners may not acquire the competences they need after secondary school education. It is therefore important that History Examination papers balance lower and higher order thinking skills so that learners' achievement can be enhanced. The curriculum guidelines for the outcomes-based curriculum should also be followed and implemented effectively. Swart (2010) argues that an effective examination paper must balance lower- and higher-level questions.

References

Alabi, AJ 2017. Challenges Facing Teaching and Learning of History in Senior Secondary Schools in Ilorin West Local Government Area, Kwara State. Al-Hikmah Journal of Education, 4(2):249-265.

Amua-Sekyi, ET 2016. Assessment, Student Learning and Classroom Practice: A Review. Journal of Education and Practice, 7(21,2):1-6.

Arshad, I, Razali, S & Mohamed, Z 2012. Programme Outcomes Assessment for Civil & Structural Engineering Courses at Universiti Kebangsaan Malaysia. Procedia - Social and Behavioral Sciences, 60(1):98-102.

Au, W 2009. Social Studies, Social Justice: Whither the Social Studies in High-Stakes Testing? Teacher Education Quarterly, 36(1):43-58.

Awoniyi, FC & Fletcher, JA 2014. The Relationship between Senior High School Mathematics Teacher Characteristics and Assessment Practices. Journal of Educational Development and Practice, 4:21-36.

Azar, A 2005. Analysis of Turkish High-School Physics-Examination Questions and University Entrance Exams Questions According to Blooms' Taxonomy. Turkish Science Education Journal, 2(2):144-150.

Banda, R, Phiri, J & Nyirenda, M 2020. Model Development of a Recommender System for Cognitive Domain Assessment Based on Bloom's Taxonomies. International Journal of Scientific & Technology Research, 9(6):401-407.

Bertram, C 2008. Doing history? Assessment in History Classrooms at a Time of Curriculum Reform. Journal of Education, 45:155-177.

Bertram, C 2007. An Analysis of Grade 10 History Assessment Tasks. Yesterday & Today, 1:145-160.

Bertram, C 2019. What is Powerful Knowledge in School History? Learning from South Africa and Rwanda School Curriculum Documents. The Curriculum Journal, 30(2):125-143.

Biggs, J & Tang, C 2007. Teaching for Quality Learning at University. New York, NY: Society for Research into Higher Education & Open University Press.

Bloom, BS 1956. Taxonomy of Educational Objectives. Vol. 1: Cognitive Domain. New York: McKay.

Bloom, BS, Engelhart, MD, Fürst, EJ, Hill, WH & Krathwohl, DR (Eds.) 1956. Taxonomy of Educational Objectives. The Classification of Educational Goals. Handbook I: Cognitive Domain. New York: Longmans.

Brindley, G 1998. Outcomes-Based Assessment and Reporting in Language Programs: A Review of the Issues. Language Testing, 15:45-85.

Cain, T & Chapman, A 2014. Dysfunctional Dichotomies? Deflating Bipolar Constructions of Curriculum and Pedagogy through Case Studies from Music and History. The Curriculum Journal, 25:111-129.

Cepni, S 2003. An Analysis of University Science Instructors' Examination Questions According to the Cognitive Levels. Educational Sciences: Theory & Practice, 3(1):65-84.

Chisholm, L 1999. The Transition to a Knowledge Society and Its Implications for the European Social Model. Paper presented at the XVII European Symposium on Science and Culture, Bruges, 30 September-1 October.

Cotton, K 1988. Classroom Questioning. Available at www.Kurwongbss.Eq.Edu.Au/Thinking/Bloom/Bloomspres.Ppt. Accessed 11 April 2021.

Counsell, C 2003. Fulfilling History's Potential: Nurturing Tradition and Renewal in a Subject Community. In Riley, M & Harris, R (eds), Past Forward: A Vision for School History 2002-2012, pp. 6-11. London: Historical Association.

Darling-Hammond, L 2006. Assessing Teacher Education: The Usefulness of Multiple Measures for Assessing Program Outcomes. Journal of Teacher Education, 57(2):120-138.

Deneen, C & Boud, D 2014. Patterns of Resistance in Managing Assessment Change. Assessment & Evaluation in Higher Education, 39(5):577-591.

Dunn, KE & Mulvenon, SW 2009. A Critical Review of Research on Formative Assessments: The Limited Scientific Evidence of the Impact of Formative Assessments in Education. Practical Assessment, Research and Evaluation, 14(7).

Hall, K & Sheehy, K 2010. Assessment and Learning: Summative Approaches. In Arthur, J & Cremin, T (eds), Learning to Teach in the Primary School. London: Routledge.

Harris, R & Ormond, B 2019. Historical Knowledge in a Knowledge Economy - What Types of Knowledge Matter? Educational Review, 71(5):564-580.

Imrie, BW 1995. Assessment for Learning: Quality and Taxonomies. Assessment and Evaluation in Higher Education, 20(2):175-189.

Jansen, J 1999b. Why Outcomes-Based Education Will Fail: An Elaboration. In Jansen, J & Christie, P (eds), Changing Curriculum: Studies on Outcomes-Based Education in South Africa, pp. 145-156. Cape Town, South Africa: Juta.

Jones, KO, Harland, J, Reid, MVJ & Bartlett, R 2009. Relationship Between Examination Questions and Bloom's Taxonomy. 39th ASEE/IEEE Frontiers in Education Conference, W1G-4. October 18-21, 2009, San Antonio, TX.

Kabombwe, MY 2019. Implementation of the Competency-Based Curriculum in the Teaching of History in Selected Secondary Schools in Lusaka, Zambia. Unpublished MEd Dissertation. Lusaka: University of Zambia.

Kabombwe, YM & Mulenga, IM 2019. Implementation of the Competency-Based Curriculum by Teachers of History in Selected Secondary Schools in Lusaka District, Zambia. Yesterday & Today, 22:19-41.

Kabombwe, MY, Machila, N & Sikayomya, P 2020. Implementing a History Competency Based Curriculum: Teaching and Learning Activities for a Zambian School. Multidisciplinary Journal of Language and Social Sciences Education, 3(3):18-50.

Killen, R 2005. Programming and Assessment for Quality Teaching and Learning. Southbank, Australia: Thomson.

Komba, SC & Mwandanji, M 2015. Reflections on the Implementation of Competence Based Curriculum in Tanzanian Secondary Schools. Journal of Education and Learning, 4:73-80.

Donnelly, K 2007. Australia's Adoption of Outcomes Based Education: A Critique. Educational Research 17(2). Available at: Https://Www.Ied.Edu.Hk/Obl/Files/164891.Pdf. Accessed 10 April 2021.

Kudakwashe, M, Jeriphanos, M & Tasara, M 2012. Teachers' Perceptions of the Assessment Structure of the Level History Syllabus [2167] in Zimbabwe: A Case Study of Zaka District. Greener Journal of Educational Research, 2(4):100-104.

Machila, N, Sompa, M, Muleya, G & Pitsoe, VJ 2018. Teachers' Understanding and Attitudes Towards Inductive and Deductive Approaches to Teaching Social Sciences. Multidisciplinary Journal of Language and Social Sciences Education, 2:120-137.

Makunja, G 2016. Challenges Facing Teachers in Implementing Competence-Based Curriculum in Tanzania: The Case of Community Secondary Schools in Morogoro Municipality. International Journal of Education and Social Science, 3(5):30-37.

Mazabow, G 2003. The Development of Historical Consciousness in the Teaching of History in South African Schools. PhD Thesis. Pretoria: University of South Africa.

McPhail, G & Rata, E 2016. Comparing Curriculum Types: 'Powerful Knowledge' and '21st Century Learning'. New Zealand Journal of Educational Studies, 51:53-68.

McDonald, ME 2002. Systematic Assessment of Learning Outcomes: Developing Multiple-Choice Exams. Sudbury, MA: Jones and Bartlett Publishers.

Ministry of General Education 2013. The Zambia Curriculum Framework. Lusaka: Curriculum Development Center.

Motlhabane, A 2017. Unpacking the South African Physics-Examination Questions According to Blooms' Revised Taxonomy. Journal of Baltic Science Education, 16(6):919-930.

Ngungu, J 2016. Formal Education and Critical Thinking Skills for Knowledge Economy in Zambia. MPhil thesis. Stellenbosch: Stellenbosch University.

Oppong, CA 2019. Assessing History Students' Historical Skills in the Cape Coast Metropolis of Ghana. The Councillor: A Journal of Social Studies, 80(1):1-19.

Scott, T 2003. Bloom's Taxonomy Applied to Testing in Computer Science Classes. Consortium for Computing Science in Colleges: Rocky Mountain Conference, October 2003, pp. 267-274.

Shanableh, A 2014. Alignment of Course Contents and Student Assessment with Course and Programme Outcomes - A Mathematical Approach. Engineering Education, 9(1):48-61.

Sichone, JM, Chigunta, M, Kalungia, AC, Nankonde, P & Banda, S 2020. Addressing Radiography Workforce Competence Gaps in Zambia: Insights into the Radiography Diploma Training Programme Using a Curriculum Mapping Approach. International Journal of Sciences: Basic and Applied Research, 49(2):225-232.

Starr, CW, Manaris, B & Stalvey, RH 2008. Bloom's Taxonomy Revisited: Specifying Assessable Learning Objectives in Computer Science. ACM SIGCSE Bulletin, 40(1):261-265.

Swart, AJ 2010. Evaluation of Final Examination Papers in Engineering: A Case Study Using Bloom's Taxonomy. IEEE Transactions on Education, 53(2):257-264.

Thompson, E, Luxton-Reilly, A, Whalley, JL, Hu, M & Robbins, P 2008. Bloom's Taxonomy for CS Assessment. Proceedings of the Tenth Australasian Computing Education Conference (ACE 2008), Wollongong, Australia, pp. 155-162.

Wangeleja, M 2010. The Teaching and Learning of Competency-Based Mathematics Curriculum. Paper presented at the Annual Seminar of the Mathematical Association of Tanzania at Mazimbu Campus. Morogoro: Sokoine University of Agriculture.

Warnich, P, Meyer, L & Van Eeden, E 2014. Exploring History Teachers' Perceptions of Outcomes-Based Assessment in South Africa. Journal for Educational Studies, 13(1):1-25.

Young, M & Muller, J 2010. Three Educational Scenarios for the Future: Lessons from the Sociology of Knowledge. European Journal of Education, 45(1):11-27.