Literator (Potchefstroom. Online)

On-line version ISSN 2219-8237

Print version ISSN 0258-2279

Literator vol.38 n.1 Mafikeng 2017

http://dx.doi.org/10.4102/lit.v38i1.1319

ORIGINAL RESEARCH

Diversity, variation and fairness: Equivalence in national level language assessments

Verskeidenheid, variasie en regverdigheid: Ekwivalensie by taalassesserings op nasionale vlak

Albert Weideman (I); Colleen Du Plessis (II); Sanet Steyn (III)

(I) Office of the Dean, Humanities, University of the Free State, South Africa

(II) Department of English, University of the Free State, South Africa

(III) Centre for Academic and Professional Language Practice, School of Languages, North-West University, South Africa

ABSTRACT

The post-1994 South African constitution proudly affirms the language diversity of the country, as do subsequent laws, while ministerial policies, both at further and higher education level, similarly promote the use of all 11 official languages in education. However, such recognition of diversity presents several challenges to accommodate potential variation. In language education at secondary school, which is nationally assessed, the variety being promoted immediately raises issues of fairness and equivalence. The final high-stakes examination of learners' ability in home language at the exit level of their pre-tertiary education is currently contentious in South Africa. It is known, for example, that in certain indigenous languages, the exit level assessments barely discriminate among learners with different abilities, while in other languages they do. For that reason, the Council for Quality Assurance in General and Further Education, Umalusi, has commissioned several reports to attempt to understand the nature of the problem. This article will deal with a discussion of a fourth attempt by Umalusi to solve the problem. That attempt, undertaken by a consortium of four universities, has already delivered six interim reports to this statutory body, and the article will consider some of their content and methodology. In their reconceptualisation of the problem, the applied linguists involved first sought to identify the theoretical roots of the current curriculum in order to articulate more sharply the construct being assessed. That provides the basis for a theoretical justification of the several solutions being proposed, as well as for the preliminary designs of modifications to current, and the introduction of new assessments. The impact of equivalence of measurement as a design requirement will be specifically discussed, with reference to the empirical analyses of results of a number of pilots of equivalent tests in different languages.

OPSOMMING

Suid-Afrika se post-1994 grondwet herbeklemtoon met trots die taalverskeidenheid van die land; so ook daaropvolgende wette. Ministeriële beleidsverklarings op beide verdere en hoëronderwysvlak bevorder insgelyks die gebruik van al 11 amptelike tale in die onderwys. Tog bied die erkenning van diversiteit verskeie uitdagings wat betref die akkommodering van potensiële variasie. In die geval van taalonderrig op sekondêre skoolvlak, wat nasionaal geassesseer word, lei die klem op verskeidenheid onmiddellik tot vrae oor regverdigheid en ekwivalensie. Die finale hoë impak-eksamen in Suid-Afrika van leerders se bemeestering van tuistale, wat geskied aan die einde van hul pre-tersiêre onderrig, is tans kontroversieel. Dit is byvoorbeeld bekend dat in die geval van sekere inheemse tale die uittreevlak-eksamens dit skaars regkry om te diskrimineer tussen leerlinge met verskillend vermoëns, terwyl in ander gevalle dit wel geskied. Om hierdie rede het die Raad vir Gehalteversekering in Algemene en Verdere Onderwys, Umalusi, reeds verskeie verslae aangevra ten einde die aard van die probleem beter te kan begryp. Hierdie stuk bespreek 'n vierde poging van Umalusi om die probleem te ondervang. As deel van daardie poging, wat onderneem is deur 'n konsortium van vier universiteite, is reeds ses tussentydse verslae aan hierdie statutêre liggaam voorgelê, en hierdie bydrae oorweeg die inhoud en metodologie daarvan. Deur die probleem te herkonseptualiseer, het die betrokke toegepaste taalkundiges eerstens gepoog om die teoretiese wortels van die huidige kurrikulum te identifiseer ten einde die konstruk wat geassesseer moet word, skerper te kan artikuleer. Daardie konstruk bied die basis vir die teoretiese begronding van die onderskeie oplossings wat voorgestel word, asook vir die voorlopige ontwerpe van modifikasies aan die huidige, en die invoer van nuwe assesserings. 
Die impak van ekwivalente meting as 'n ontwerpvoorwaarde sal spesifiek bespreek word met verwysing na die empiriese analises van resultate wat verkry is uit die voortoetse van ekwivalente toetse in verskillende tale.

Diversity recognised in law and policy

Some ambiguity attaches to the term 'home language' in South Africa. It refers not only to the constitutional and legal enshrinement after 1994 of 11 primary languages as official languages (Republic of South Africa 1996a) but also, in the context of this article, more specifically to the place and role of 'home language' as a subject in the secondary school curriculum (Department of Education 1997; Republic of South Africa 1996b). In the first case, 'home language' may therefore be any of the 11 officially recognised languages, used by various citizens as first languages. For the second purpose, its role is determined by policies of the national Department of Education (now Basic Education). The current curriculum, called the Curriculum and assessment policy statement (CAPS) (Department of Basic Education 2011), views the mastery of those languages taught at school as 'home languages' primarily as a passport to the world of work.

What are taught as home languages at school, however, may not be the learners' first language, as the term suggests. Firstly, urbanisation has thrown together people who seek education for their children, who come from many of the different legally recognised language backgrounds, and who are not necessarily from a single, homogeneous language community. Pressures of both urbanisation and the scarcity of state resources allocated to education make the provision of single-language schools for every language community virtually impossible. Though there may be many first languages represented among the learners in one secondary school, usually - though there are exceptions - only one 'home language' is taught in it. So 'home language' at school must not be confused with 'first language' or mother tongue, because not all learners in a particular school come from single-language households, nor do they necessarily have as first language the language that is being taught as home language in the school they attend. What is more, even before the end of apartheid, intermarrying between different language groups, especially in urban communities, was widespread. It is, therefore, possible that a secondary school learner whose father's language is Sesotho, and whose mother speaks Xhosa, may have received his or her initial primary education in a Setswana medium of instruction township school, but may eventually find himself or herself in an English medium secondary school, one where English is taught as the only 'home language'.

To accommodate this reality, the Department of Basic Education, whose responsibilities include providing for the teaching of the 11 languages at school, makes little to no reference to native speaker fluency or competence in its policies. Rather, it specifically underwrites the principle of 'high knowledge and high skills', while emphasising, in setting out the requirements in CAPS for the school subject of home language instruction, that the minimum criteria to be met are 'high … standards in all subjects' (Department of Basic Education 2011:4). The term 'high', as it is used in CAPS, anticipates the distinction the education authorities wish to make between the different levels that apply to the teaching and assessment of each of the 11 official languages, possibly harking back to the time when languages at school were taught at two levels, standard and higher grade, which arrangement no longer holds. So, two of the levels at which languages can currently be taught are home language (HL) level and first additional language (FAL) level. In this definition, however, HL refers neither to the language used at home, nor to the mother tongue or to first language proficiency but specifically to a higher level or standard of instruction. By extension, it also refers to a higher degree of mastery by learners of that language and of the assessment of the extent of that mastery.

CAPS identifies both a social command of language ('the mastery of basic interpersonal communication skills required in social situations') and an ability to use language for academic, educational or professional purposes ('cognitive academic skills essential for learning across the curriculum'; being able to use a sufficiently high standard of language in order to be able to gain access to 'further or higher education or the world of work') before also referring to aesthetic ends ('literary, aesthetic and imaginative ability') (Department of Basic Education 2011:8-9). There is no doubt that the levels of mastery identified, on the one hand, for social purposes and, on the other hand, for meeting academic and professional goals derive from the distinctions of Cummins (Cummins & Davison 2007:353), who sees a distinction between the proficiency of first language and second language speakers in bilingual educational settings, and, consequently, the differences in ability required for 'basic interpersonal communicative skills (BICS), or conversational language, and cognitive academic language proficiency (CALP), or academic language'. It is clear, however, that the 'high' level of mastery required for HL must refer primarily not to the social goal of competence in conversation in it, but rather and more pointedly to its use for academic and professional purposes. 'Home language' ability, therefore, refers to a high level of ability and assessment, and not necessarily to first language.

So, while language diversity is recognised in law and in policy, and while the promotion of a substantial number of official languages is both lauded by civil society and officially supported by government through the establishment of semi-statutory bodies such as the Pan-South African Language Board, the factual educational environment has to accommodate a language arrangement that is faced with challenges. Learners enrolled for the subject of a HL at secondary school cannot be assumed to have first language level mastery of it. This article will, for the sake of focussing on the assessment of ability in HLs, not even begin to touch on other and further potential complications, such as the blurring of the distinctions between languages within the instructional environment, as they may, for example, be used interchangeably in a single instructional episode.

One of the main challenges yielded by the accommodation of a diversity of languages as HLs lies in the potential variation in the assessment of the extent to which they have been mastered by learners. That variation may be exacerbated especially when these learners are compelled by organisational arrangements at secondary school level to be assessed in a HL that is different from their primary or first language.

Recognising diversity makes accommodation of variation problematic

The challenge of accommodating language diversity becomes acute when one considers that secondary school exit level examinations are, as a rule, high-stakes assessments and, in South Africa's case, are nationally assessed, not provincially, regionally or locally. Taken at the end of Grade 12, they are known as the National Senior Certificate (NSC) examinations (Department of Education 2008a, 2008b). For many learners about to leave secondary school, these Grade 12 exit level examinations (sometimes still referred to as 'matric' or the 'matriculation examination', the terms used in a previous dispensation) are among the highest stakes assessments most of them will ever submit to. Their results determine to a significant degree not only the learners' post-school employability but also their access to higher education. The latter gives those successfully completing their tertiary studies a disproportionate competitive advantage in earning power that will benefit them for all of their working lives. It is the type of examination in which there can be little allowance for any kind of variation that results in unfair treatment of those submitting to these high-stakes assessments.

To define the problem more precisely, one needs to note, furthermore, that in deciding on access to university, tertiary institutions generally employ an index to assess learners' performance before applications are considered, whereupon access is granted or denied. Formerly called the M-score (for average matric performance across a number of compulsory subjects for entry into university), and now known as the Admission Point Score (APS), the index is a weighted measure of average performance across various subjects on the NSC examinations. Employed by university administrators, the index makes the biggest single contribution to decisions of access to tertiary institutions (Nel & Kistner 2009). Pertinent to the current discussion, moreover, is that the results of HL examinations contribute substantially, and proportionately more than the remaining subjects, to the APS. It is, therefore, apparent why the statutory body tasked to oversee these examinations, the Council for Quality Assurance in General and Further Education and Training (Umalusi), would be concerned that the 11 languages for which examinations are written have assessments with comparable results across the various papers (three in total) in each. Should these show variation or lack comparability, their equivalence would be questionable, and their results would be unfair (Kunnan 2000, 2004). In a high-stakes assessment, this is unacceptable, for it would indicate that some sitting for the examination are being advantaged over others just because they wrote a different set of papers belonging to a particular language.

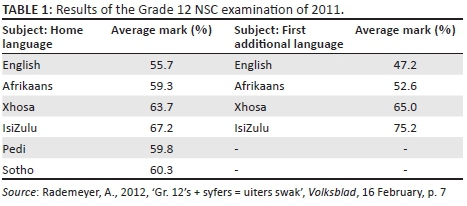

It is known, for example, that in certain indigenous languages, the exit level assessments barely discriminate among learners with different abilities, while in other languages they do (Du Plessis, Steyn & Weideman 2016). This was first highlighted in the daily press, with typical figures such as those in Table 1, which survey the results of the 2011 HL and FAL examinations for some of the languages (Steyn 2014).

As the table shows, the results of this selection of various HLs vary with regard to average marks between a low of close to 56% and a high of above 67%, while the average marks obtained nationally for FALs cover an even larger range in the selection given in the table, from 47% to 75%. The credibility of the examinations is undermined when one language indisputably treats those assessed more favourably than is the case for other languages. As Van Rooy and Coetzee-Van Rooy (2015) have pointed out, school language results are neither a good predictor of performance at university nor a good measure of academic literacy, one of the 'high level' goals set by the curriculum for language instruction at school (though there is potentially some contradictory evidence in this regard: cf. Myburgh 2015).

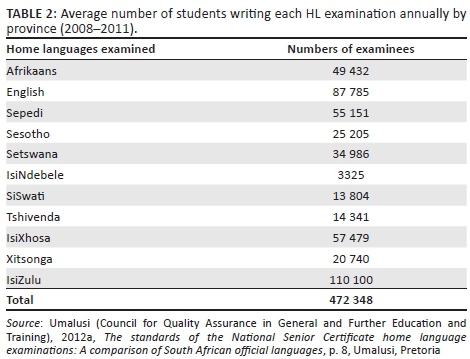

The numbers of pupils involved are also large: almost half a million Grade 12 learners on average wrote the examinations in the various HLs in the four years between 2008 and 2011, according to Umalusi (Table 2).

As Du Plessis (2014) points out, more than 80% of these pupils wrote a language examination other than English at HL level. The latest census figures show, however, that less than 10% of the South African population are first language speakers of English (see Figure 1). This means that perhaps half of the close to 19% of learners who wrote English at this level (HL) may be speakers of English as an additional language, confirming a trend of (mainly middle class) pupils drifting towards schools offering English as home language.
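The 'perhaps half' estimate above can be retraced with a line or two of arithmetic. The sketch below is only an illustration: it rounds the two figures from the text to 19% and 10%, and it assumes (our assumption, not a claim made by the sources cited) that the census share of first language English speakers carries over to the Grade 12 cohort and that all such learners write English at HL level.

```python
# Approximate figures from the text; both are rounded upper bounds.
english_hl_share = 0.19   # "close to 19%" of learners wrote English at HL level
english_l1_share = 0.10   # "less than 10%" of the population are first language English speakers

# Assumption (ours): the census share applies unchanged to the Grade 12 cohort,
# and every first language English learner writes English at HL level.
additional_language_share = english_hl_share - english_l1_share

# Fraction of English HL candidates who would then be additional language speakers.
fraction_of_english_hl = additional_language_share / english_hl_share

print(f"{fraction_of_english_hl:.0%} of English HL candidates")
```

Under these assumptions the fraction comes to a little under one half, which is consistent with the hedged wording in the text. Because the census share is stated only as a ceiling, the true fraction could be higher.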

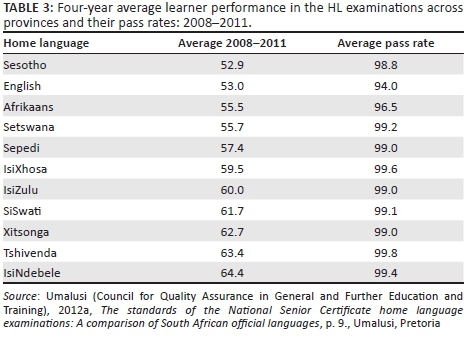

In a population as large as that of the pupils writing examinations at HL level, composed of segments that (with the exception of Ndebele) are equally substantial, one would expect a normal distribution of marks, and perhaps comparable averages. The high-stakes assessment that this examination forms part of would not only expect comparability, but in fact require it. However, a look at the average marks obtained for the various home languages in the four years between 2008 and 2011 shows that this is not the case (Table 3).

The average marks differ across languages by about 12 percentage points between lowest and highest, ranging from a low of under 53% to a high of over 64%. That is not the only concern. When one considers pass rates in this high-stakes examination, one finds that if pupils sit for the examination in one of the African languages, their chance of failing is less than 1%. Pupils writing examinations in the other HLs (English and Afrikaans) have a much greater chance of failing. Furthermore, when one compares the HL results with non-language school subjects, their averages are exceptionally high (Umalusi 2012b:61; Department of Basic Education 2012:5): between 2009 and 2012, the subjects other than languages have a low average pass rate of 48.4% for mathematics, for example, with higher pass rates for history (77.5%) and mathematical literacy (Department of Basic Education 2012:5), but nowhere near those for languages, which are close to 100%.

Problem awareness: Seeking to understand variation

The levels of variation observed among non-language and language examinations, as well as among the individual languages involved, are evidently undesirable. Such variation not only undermines the quest for equitable and fair treatment for all in assessment but also creates an unfavourable climate in an environment that wishes to celebrate diversity in an emerging, recently established democracy. The diversity that a constitutional democracy recognises in law is intended to ensure fair treatment of all. Where there is unfair treatment, it jeopardises such recognition.

Two observations on the potentially unfair treatment of some individuals or groups may be made. Firstly, the average marks, as well as pass rates, indicate that merely sitting for an examination in a certain language places some at a disadvantage and gives an undeserved advantage to others. These examinations across languages are patently and indefensibly different. Secondly, for at least some of those who write the examinations in either English or Afrikaans (even though these may not be their HLs), there is a much greater chance of failing than if they had written these in what is indeed their first language.

For these reasons, Umalusi has commissioned several reports to attempt to gain a more thorough understanding of the nature of the problem outlined above. Those papers, and a number of others that preceded them, are summarised and discussed in more detail by Du Plessis (2014). The concerns they raise include the lack of comparability across languages because assessment guidelines had not been adhered to, the low levels of cognitive demand resulting from certain methods of setting questions in some languages, and a number of other factors. Rather than reviewing them again here, this article will deal with a discussion of a fourth major attempt by Umalusi to address the problem: to bring in a range of expertise in language assessment from a collaborative venture among four universities, the Inter-Institutional Centre for Language Development and Assessment (ICELDA 2014). This project was initially known as the HLs project, and, though a number of reports have been made about it to Umalusi, it is now an independent investigation by the partnership first tasked to research the problem from the specific point of view of identifying and then articulating the construct of what is being assessed in these examinations.

From problem awareness to problem identification

The initial brief of the HLs project was to determine whether the assessment conformed to one of the basic principles of language testing, namely whether the construct being assessed was clear and well defined (Weideman 2011, 2012). This was in fact the angle suggested by Umalusi in their initial briefing of the applied linguists commissioned to tackle the problem. In their approach to identify the problem at a level that would most likely yield insight into the eventual design of a feasible solution, the language assessment experts involved soon realised that, in order to define the construct of what was being assessed, there was no alternative but to start with the curriculum - the same one is employed for all the HLs and in policy terms was the only officially recognised common prescription and, hence, a shared point of orientation. As one reviewer has pointed out, defining a construct for a supposedly general course of language ability is not unproblematic, as will become evident in the subsequent discussion.

One immediate problem was that, at the stage that the project was commissioned, the education system was in a transition to a new curriculum (CAPS). Upon examination of the proposed curriculum for HL instruction, however, the core research team concluded that the new formulations not only echoed the old but were at most an attempt to simplify the interpretation of the previous curriculum as well (Du Plessis et al. 2016). This is an unsurprising finding, since education change is rarely revolutionary, and the various modifications to language curricula since the initial introduction, in 1984, of communicative language teaching (CLT) substantiate that the process of change has been incremental rather than disruptive. The researchers, therefore, concluded that it would be more worthwhile to focus their attention on designs for future assessments of HL ability (under CAPS) than to attempt to consider only the past and merely come up with an understanding of the variation observed. In addition, they felt justified to assume that, given the slight simplification of the curriculum, the examinations would be even more conservatively approached and would remain largely similar to what went before. This is an assumption that needs to be confirmed as more data continue to become available on examinations done after the recent introduction of the new curriculum, but so far that assumption seems to hold.

In their reconceptualisation of the problem, the applied linguists involved therefore first investigated the theoretical roots of the current curriculum in order to articulate more sharply the construct being assessed. We turn to a discussion of that in the next section.

A productive reconceptualisation

As a design for language teaching, any sophisticated curriculum for language instruction relies on its alignment with views on language that can be justified with reference to theoretical insights. As regards HLs, there are several cues in both CAPS and in the curriculum it replaced as to the theoretical roots of the ideas on language, language pedagogy and language mastery that underlie it. These underlying perspectives on language and language learning both support and enrich its content, and provide an interpretive basis for making sense of its various specific prescriptions. The details become interpretable more effectively and responsibly when they are given relief by the theoretical views on which they are based.

The first clue for the responsible interpretation of the curriculum for HL instruction (Department of Basic Education 2011:section 2.5) can be found in its declaration of subscribing to a 'text-based' and communicative approach. Combined with the specific aims of the curriculum, namely to enable a mastery of language specifically for academic and educational purposes, for its enjoyment and stimulation of the imagination and creativity, for social purposes, and for its use in ethical and political discourse, as well as a number of general aims relating to gathering and analysing information, expressing opinions and so on (Department of Basic Education 2011:9), this approach clearly has a number of theoretical starting points. Firstly, its emphasis on the mastery of specific discourse types, genres and registers, also identifiable as material lingual spheres (Patterson & Weideman 2013), that is, as kinds of discourse that are distinct in content as well as in type, is notable. Secondly, there is reference to more generally defined, functional uses of language. These observations are borne out by and reflected in many - though not all - detailed specifications on language instruction in the rest of the curriculum, as our previous analyses have shown (Du Plessis et al. 2016). One commentator on a previous version of this article is of the opinion that the old distinction between 'curriculum' and 'syllabus' still holds; our analysis is that a content analysis of CAPS would show that one might interpret it as either a list of things to be done (the traditional understanding of 'syllabus') or as a document that has the status of what the 'C' in its name indicates. Whatever the case may be, it would not have influenced our interpretation of the content of the instructional prescription it entails, so we did not mull over the question as a problem in its own right, even though educationists may want to do so. We therefore use the terms 'curriculum' and 'syllabus' interchangeably.

It is important for the purposes of correctly interpreting the curriculum, however, to note that both angles of the approach, the specific and the general, define language functionally. Language is viewed not only as an instrument of social interaction in various spheres of discourse but also as a general, and functionally definable, communicative tool. These views patently derive from those that characterise the turn effected in language teaching designs especially by sociolinguistic perspectives in the last 30 years of the previous century and in the development of which the work and analyses of, among others, Halliday (1978), Habermas (1970) and Hymes (1972) are prominent. These all reflect a concern with a differentiated communicative competence (an ability to use language in a variety of lingual spheres of discourse or repertoires), as well as a general ability to use language acts that are functionally defined (Searle 1969; Wilkins 1976).

Taking such an open view of language as is embodied in these socially disclosed ideas of what constitutes language has several implications for how one conceives both of language mastery and of the language instruction that is aimed at achieving that mastery. The first implication probably is that a command of language cannot solely be defined with reference to the grammatical control learners have of the language. In any kind of interaction through language, be it political, academic, ethical, aesthetic or economic, the functional uses and purposes of language have precedence over language structure. They also in fact determine the structure, in Halliday's view: meaning is expressed or encoded in wording. Another issue concerns the age and depth of these perspectives - especially when one considers that their origins lie in views that emerged a good 40 years ago. In this respect, we may observe, however, that these theoretical perspectives have stood the test of time: the dependence on them not only of the South African language curricula but also of the cutting-edge language teaching syllabi in Australia, for example, shows that they offer the theoretical rationale for an impressive range of genre-based approaches to language teaching as well as to the teaching of writing (Carstens 2009).

By identifying these as the theoretical starting points of the HL curriculum in question, we are able to rediscover the direction that the syllabus encourages language teaching and language assessment to take. Though it forms part of what one reviewer calls a 'general education of children', the prescriptions for instruction in language that it sets are specific to language ability in a primary language. Our analysis shows that these prescriptions can potentially be beneficially interpreted if their goals are as stated: to allow language ability to develop both generally and specifically. The curriculum sets the conditions, in other words, for a productive reconceptualisation of the assessment problem that HL examinations at Grade 12 level face.

Misalignment of purpose and assessment

Having identified the theoretical views at the basis of the curriculum, one needs to examine, firstly, the further details of the syllabus to see whether these provide a consistent further working out of these views. Secondly, one would need to consider whether the actual examination papers (three in total for each language), and the way that they and the rest of the assessment are organised, are aligned with these views.

The short answer in both cases is negative (Du Plessis 2014; Du Plessis et al. 2016; Steyn 2014). Further, in working out the ideas on which the curriculum is based, those who drafted it evidently tried to accommodate a range of traditional approaches to language teaching. That compromise allows teachers who do not subscribe to the new perspectives, both on language and on language learning, to continue as before (Weideman 2002; Weideman, Tesfamariam & Shaalukeni 2003). Evidence for this accommodation can be found both in the continuing strong emphasis on 'sentence structures', grammar and grammatical conventions and language 'structure', and in statements that encourage the combination of the 'skills' of listening, speaking, reading and writing, the conventional components of traditional approaches, with new, functional ways of interacting through language. For example, the syllabus specifies a combination of listening and speaking for different purposes, as well as 'reading and viewing' and 'writing and presenting' (Department of Basic Education 2011:10), presumably for the sake of presenting these in a functional perspective. The exhortation to 'integrate' the supposedly separable skills does not consider that they are in the first instance not distinct at all (Weideman 2013). Rather than echoing and reinforcing the skill separation that dates back to views of more than a century ago, the syllabus might have embraced a more functional view of language that takes into account the well-known criticisms and shortcomings of a skills-based starting point (Bachman & Palmer 1996:75f.; Kumaravadivelu 2003:226). In those and in several other senses, then, the detailed syllabus contained in CAPS is not an entirely consistent working out of CLT, the approach which it claims to subscribe to. 
What is more, the extensive work suggested in the emphasis that is retained (as in previous policy dispensations) on the appreciation of language used for aesthetic purposes and the enjoyment of literature indicates a much larger role for literature study than is conventional in most interpretations of CLT. That emphasis on literature, and especially the study of 'good' literature, is associated not with the 21st century, but rather with 19th century conceptions and arrangements. In terms of styles of language teaching, the grammar translation method that accompanied this emphasis reinforced the bias towards studying what were then considered to be worthwhile texts: the canonical literature of a language. Some may be of the opinion that the 'text-based' approach that is promoted in CAPS can be equated with the genre approaches and also the emphasis on Halliday's systemic functional grammar (SFG) that have now been adopted in Australia (cf. Carstens 2009). Our examination and analysis of CAPS has shown, however, that while the curriculum certainly depends on earlier notions of communicative competence and associated concepts, there is no reference in CAPS to SFG, or its current interpretations within genre approaches. Rather, the emphases that we do find in the curriculum, especially on the study of literature, are indicative of a mismatch between the theoretical justification of the policy and the policy itself. That mismatch is reflected especially in the allocation of marks to the various components of the assessment, which will be discussed below.

In developing contexts as well as developed ones, there may be a further mismatch between policy specification in the curriculum (in this case: CAPS), teaching practice (Weideman 2002; Weideman et al. 2003) and assessment. In order to make changes in policy effect changes in practice, education authorities in a developing context may attempt to achieve a new alignment between policy prescription (curriculum) and practice (language teaching) simply by changing the assessment. The rationale for this strategy is that, rather than attempting to interpret the syllabus independently, teachers will teach to the testing that will follow. As our earlier reports and analyses have pointed out, however, the division of the assessment into three papers does not succeed in assessing the mastery of language articulated in the curriculum. As was assumed, even after a change in curriculum (to CAPS), the assessments have remained largely similar to the ones in the previous dispensation. The papers are divided as follows: Paper 1, 'Language in context' (including comprehension and summary writing), is allocated 70 marks, of which in some papers almost half (30 marks) go to grammar, though there is again inconsistency across languages in this respect; Paper 2, 'Literature' (three genres: novel, poetry and drama), is allocated 80 marks; and Paper 3, 'Writing' (somewhat confusingly, the second paper to assess writing), is allocated 100 marks. Apart from these three papers, the assessment includes an internal assessment of oral proficiency in the HL (50 marks). The various components of the assessment neither promote the views of language underlying the curriculum nor bring about an alignment of curriculum and assessment.
There is little clear evidence either of how these components align in content with the curriculum and its main tenets, or of whether they in fact deviate much from those in previous policy regimes, of which there have been three since 1994: a first attempt that closely followed the advice of the National Education Policy Initiative (NEPI) of the early 1990s; a second attempt, the contested foray into outcomes-based education; and the third attempt, the revised CAPS. A good fit between construct and specification of the assessment (Weideman 2011) is missing.

Construct articulation as a key to solving misalignment

A responsible analysis of the curriculum indicates that the construct on which the assessment needs to be based should be defined as a differentiated language ability in a number of discourse types involving typically different texts and a generic ability incorporating task-based functional and formal aspects of language (Du Plessis et al. 2016). That analytical basis furthermore provides a platform from which a theoretical justification of several possible solutions can be pursued.

In allowing this construct to influence their solution, the project team has been able to come up with a preliminary set of designs for the assessment that would eventually help to solve both the problem of mismatch between curriculum and its theoretical foundations, and that of the misalignment between the curriculum and the assessment.

Firstly, in order to advance the analysis, a doctoral level study anchoring the project will take the process of construct identification a step further by analysing a number of current and past examination papers in several HLs in detail. These analyses are likely to provide empirical backing for the preliminary conclusions about the misalignment of construct and assessment. Initial analyses (Du Plessis 2014; Du Plessis & Weideman 2014) show a large and indefensible range of discrepancies across languages, both in the kinds of question and in the level of the questions. The papers also test mastery of an inappropriate and largely irrelevant range of texts for learners at this level (though some of these are indeed mentioned in the curriculum). These detailed analyses, combined with an insight into the key elements of the curriculum, may enable the test designers to propose a new set of assessment components. These proposals may seek to redivide the allocation of marks in the first instance not to papers, but to the assessment of the mastery of various spheres of discourse (such as academic, political, ethical, aesthetic and economic discourse). That kind of re-allocation should be able to do justice to the emphasis of the syllabus on the required command of a differentiated variety of discourse types and to correct some of the current biases, such as that towards the aesthetic appreciation of literary language.

Secondly, there needs to be an acknowledgement that the differential command of language, identified as a key component of the construct to be assessed, does not operate in isolation. It is intertwined with and made possible by a general ability to use language for interactional purposes, and these functional uses of language would occur across discourse types. Nonetheless, the mastery of language functions such as explaining, interpreting, distinguishing, defining, inferring and so forth can be tested as so-called high-level uses of language that occur across discourse types. Therefore, in addition to the planned re-allocation of marks, provision would also have to be made for assessing the generic, functional abilities that underlie and are intertwined with the mastery of a variety of discourse types.

It is likely that the changes to the assessment that are being proposed, as well as the designs of modifications to current assessments, will also include the introduction of new assessments, and specifically an assessment of an advanced level language ability. In the final section, below, we turn to a discussion of progress on a preliminary design for such an assessment.

A Test of Advanced Language Ability across all languages

The possible re-allocation of marks referred to above may make it possible to reserve 20% of the marks allocated to the assessment (i.e. 60 marks out of a total of 300) to assess learners' command of an advanced level of ability in the various HLs.
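The arithmetic behind this figure can be checked against the mark allocations given earlier for the current assessment. The short sketch below is only an illustration: the dictionary and variable names are hypothetical, and it says nothing about how the remaining 240 marks would be divided under any new proposal.

```python
# Mark allocations for the current HL assessment, as listed in the text.
current_marks = {
    "Paper 1: Language in context": 70,
    "Paper 2: Literature": 80,
    "Paper 3: Writing": 100,
    "Oral proficiency (internal assessment)": 50,
}

total_marks = sum(current_marks.values())

# 20% of the total, computed with integer arithmetic to avoid rounding noise.
advanced_ability_marks = total_marks * 20 // 100
remaining_marks = total_marks - advanced_ability_marks

print("total marks:", total_marks)
print("reserved for advanced language ability:", advanced_ability_marks)
print("left for the other components:", remaining_marks)

# Share of each current component, to show where the present weighting lies.
for component, marks in current_marks.items():
    print(f"{component}: {marks / total_marks:.1%}")
```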

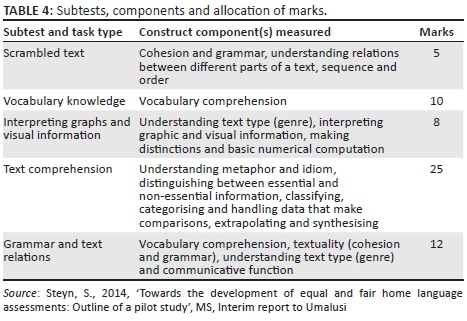

For this, equivalent tests of advanced language ability across the various languages will be needed. The team tasked to design such a set of tests has drawn specifically from the specifications in the curriculum of functional uses of language to come up with a Test of Advanced Language Ability (TALA) that has the following components and specifications (cf. Steyn 2014, for a more complete explanation) (Table 4).

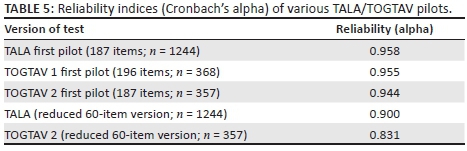

The test was designed first in English and then translated into Afrikaans (in which the test is known as the Toets van Gevorderde Taalvermoë [TOGTAV version 1]). A second Afrikaans version (TOGTAV 2), equivalent in respect of construct to these, was also developed, before all three were piloted. The intention was to see which of the two methods of ensuring equivalence (translation or similar construct) would give better results. It turned out that all of the tests were highly reliable (on Cronbach's alpha, as below, but also on other measures) (Table 5).

Even when the longer versions (which should, by virtue of their length, give higher reliability readings) were reduced to the desired 60-item versions of TALA and TOGTAV, the reliability indices remained well above the required 0.7 benchmark for high-stakes tests. Though these are overall reliability measures at test level, they also indicate that, at item level, the test designers are likely to be left with a sufficient number of productive items to select from in either the construct-equivalent (TOGTAV 2) or the translated (TOGTAV 1) version of the two tests derived from TALA.
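For readers less familiar with the reliability index referred to here, the following is a minimal sketch of how Cronbach's alpha is conventionally computed from a matrix of item scores, together with the Spearman-Brown formula, which predicts how reliability changes when a test is lengthened or shortened. It is not the project team's analysis: the function names and the toy response data are invented for illustration, and the reliability value passed to the second function is hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows (one row of item scores per test taker)."""
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across test takers, and variance of the total scores.
    item_variances = [pvariance([row[i] for row in scores]) for i in range(k)]
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def spearman_brown(reliability, length_factor):
    """Predicted reliability when test length is multiplied by length_factor."""
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


# Hypothetical right/wrong (1/0) responses of six test takers to four items.
toy_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(toy_scores)
print(f"alpha for the toy data: {alpha:.2f}")
print("meets the 0.7 benchmark:", alpha >= 0.7)

# Hypothetical case: a test with reliability 0.90 cut to half its length.
print(f"predicted reliability after halving: {spearman_brown(0.90, 0.5):.2f}")
```

The Spearman-Brown function makes the point in the text concrete: shortening a test lowers the predicted reliability, so an index that stays above the benchmark after the reduction to 60 items suggests that the retained items are doing their work.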

Conclusion

These initial attempts must now be followed up by further designs in a handful of other languages. Specifically, the design team is starting to work on Sesotho versions of TALA, while work in Sepedi and Tswana may also be possible. A challenge that remains is to involve an Nguni language (e.g. Xhosa or IsiZulu) as well. As one reviewer has pointed out, there is a potential problem translating "the tests from languages that have been used in higher functions for 40 years or more, while others are still in the process of being standardised and used in public, professional and scholarly functions". That problem is authenticity: one may ask how authentic such texts will be in other languages. There is no easy answer to this, but the fact remains that the curriculum for the HLs currently requires that mastery of such a range of texts be assessed. So unless changes and exceptions are made for some HLs, but not for others, that challenge will remain. The alternative would be to suspend the curriculum requirements for mastery of a range of contexts, but that would surely result in some languages then being examined differently from others. It would also send out a message that certain languages indeed 'lack' certain so-called higher functions, which surely is not only untenable, but would also discourage their development to employ and be employed in such functions. Moreover, according to one of the Umalusi officials who briefed us for this project, the texts used in examination papers across the HLs are currently already being translated as a matter of course. So if there is a problem with authenticity, it is not one that is unfamiliar to those struggling to make the assessment fairer to everyone.

If equivalence of measurement as a design requirement for the high-stakes assessments in HLs is taken seriously, in order to yield results that are fair to everyone involved, the tests that are being proposed will serve to benchmark more than the 60-mark measurement of advanced language ability across languages. Since they constitute a substantial part (20%) of the total assessment, they may also be used to draw inferences about the equivalence (or continuing lack of equivalence) in the remaining components of the assessment of HLs. They will, in other words, also be able to serve as an indication of how fair the rest of the assessment is likely to be, since results on the remaining components could be compared with results on these tests. Concurrent validity of the remaining examination sections could also be determined using TALA scores as a basis.
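One conventional way of estimating concurrent validity is to correlate scores on the benchmark test with scores on the other components for the same learners. The sketch below computes a Pearson correlation coefficient in plain Python. The choice of Pearson's r is an assumption, since the text does not say which statistic would be used, and the two score lists are invented purely for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length with at least two scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sums of cross-products and of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores in one list do not vary; r is undefined")
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for the same eight learners (not data from the pilots):
# the advanced language ability test (out of 60) and the remaining components
# of the assessment (out of 240).
advanced_test_scores = [52, 47, 41, 38, 33, 29, 24, 18]
other_component_scores = [201, 188, 170, 175, 150, 141, 139, 120]

r = pearson_r(advanced_test_scores, other_component_scores)
print(f"correlation between the two sets of scores: {r:.2f}")
```

A high positive coefficient for a given language would be consistent with the two parts of the assessment ranking learners similarly; a markedly lower coefficient in one language than in others would flag the remaining components of that language for closer scrutiny.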

All of this endeavour is a first step towards equivalence and fair assessment. Whether the designs will be successfully implemented is, in the end, a political rather than an educational question. If these solutions are accepted and are indeed implemented, they are certain to ensure greater fairness. The goal that any democratic arrangement should have is to recognise variety. It is our hope that these proposals may assist in overcoming some of the challenges that such recognition implies.

Acknowledgements

Competing interests

The authors declare that they have no financial or personal relationship(s) that may have inappropriately influenced them in writing this article.

Authors' contributions

A.W. was the coordinator of the project and primary author of this article. C.d.P. and S.S. are both completing studies, under the supervision of A.W., that contributed to the content of this article.

References

Bachman, L.F. & Palmer, A.S., 1996, Language testing in practice: Designing and developing useful language tests, Oxford University Press, Oxford.

Carstens, A., 2009, The effectiveness of genre-based approaches in teaching academic writing: Subject-specific versus cross-disciplinary emphases, University of Pretoria, Pretoria.

Cummins, J. & Davison, C. (eds.), 2007, International handbook of English language teaching Part 1, Springer Science & Business Media LLC, New York.

Department of Basic Education, 2011, Curriculum and assessment policy statement: Grades 10-12 English home language, Department of Basic Education, Pretoria.

Department of Basic Education, 2012, National Senior Certificate examination schools subject report 2012, Department of Basic Education, Pretoria.

Department of Education, 1997, 'Language-in-education policy 14 July 1997, published in terms of section 3(4)(m) of the National Education Policy Act 1996 (Act 27 of 1996)', Government Gazette 18546, 19 December 1997.

Department of Education, 2008a, National curriculum statement Grades 10-12 (General): Subject assessment guidelines: Languages: Home language, first additional language, second additional language, Department of Basic Education, Pretoria.

Department of Education, 2008b, National Senior Certificate report, Department of Education, Pretoria.

Du Plessis, C.L., 2014, A historical and contextual discussion of Umalusi's involvement in improving the standards and quality of the examination of home languages in Grade 12 [Interim report to Umalusi], Inter-Institutional Centre for Language Development and Assessment (ICELDA), Bloemfontein.

Du Plessis, C.L., Steyn, S. & Weideman, A., 2016, 'Die assessering van huistale in die Suid-Afrikaanse Nasionale Seniorsertifikaateksamen - Die strewe na regverdigheid en groter geloofwaardigheid', LitNet Akademies 13(1), 425-443, viewed 28 April 2016, from http://www.litnet.co.za/die-assessering-van-huistale-in-die-suid-afrikaanse-nasionale-seniorsertifikaateksamen-die-strewe-na-regverdigheid-en-groter-geloofwaardigheid/

Du Plessis, C.L. & Weideman, A., 2014, 'Writing as construct in the Grade 12 home language curriculum and examination', Journal for Language Teaching 48(2), 121-141. http://dx.doi.org/10.4314/jlt.v48i42.6

Habermas, J., 1970, 'Toward a theory of communicative competence', in H.P. Dreitzel (ed.), Recent sociology, vol. 2, pp. 115-148, Collier-Macmillan, London.

Halliday, M.A.K., 1978, Language as social semiotic: The social interpretation of language and meaning, Edward Arnold, London.

Hymes, D., 1972, 'On communicative competence', in J.B. Pride & J. Holmes (eds.), Sociolinguistics: Selected readings, pp. 269-293, Penguin, Harmondsworth.

ICELDA, 2014, Inter-Institutional Centre for Language Development and Assessment, viewed 18 November 2013, from http://icelda.sun.ac.za

Kumaravadivelu, B., 2003, Beyond methods: Macrostrategies for language teaching, Yale University Press, London.

Kunnan, A.J. (ed.), 2000, Studies in language testing 9: Fairness and validation in language assessment, Cambridge University Press, Cambridge.

Kunnan, A.J., 2004, 'Test fairness', in M. Milanovic & C. Weir (eds.), Studies in language testing, vol. 18, pp. 27-45, Cambridge University Press, Cambridge.

Myburgh, J., 2015, 'The assessment of academic literacy at pre-university level: A comparison of the utility of academic literacy tests and Grade 10 home language results', MA dissertation, University of the Free State, Bloemfontein.

Nel, C. & Kistner, L., 2009, 'The National Senior Certificate: Implications for access to higher education', South African Journal for Higher Education 23(5), 953-973. http://dx.doi.org/10.4314/sajhe.v23i5.48810

Patterson, R. & Weideman, A., 2013, 'The typicality of academic discourse and its relevance for constructs of academic literacy', Journal for Language Teaching 47(1), 107-123. http://dx.doi.org/10.4314/jlt.v47i1.5

Rademeyer, A., 2012, 'Gr. 12's + syfers = uiters swak', Volksblad, 16 February, p. 7.

Republic of South Africa, 1996a, Constitution of the Republic of South Africa, Act No. 108 of 1996, Government Printer, Pretoria.

Republic of South Africa, 1996b, South African Schools Act No. 84 of 1996, as amended, Government Gazette, Pretoria.

Searle, J.R., 1969, Speech acts: An essay in the philosophy of language, Cambridge University Press, Cambridge, UK.

Statistics South Africa, 2012, Census 2011 Census in brief, Statistics South Africa, Pretoria.

Steyn, S., 2014, 'Towards the development of equal and fair home language assessments: Outline of a pilot study', MS, Interim report to Umalusi.

Umalusi (Council for Quality Assurance in General and Further Education and Training), 2012a, The standards of the National Senior Certificate home language examinations: A comparison of South African official languages, Umalusi, Pretoria.

Umalusi (Council for Quality Assurance in General and Further Education and Training), 2012b, Technical report on the quality assurance of the examinations and assessment of the National Senior Certificate (NSC) 2012, Umalusi, Pretoria.

Van Rooy, B. & Coetzee-Van Rooy, S., 2015, 'The language issue and academic performance at a South African university', Southern African Linguistics and Applied Language Studies 33(1), 31-46. http://dx.doi.org/10.2989/16073614.2015.1012691

Weideman, A., 2002, 'Overcoming resistance to innovation: Suggestions for encouraging change in language teaching', Per Linguam 18(1), 27-40.

Weideman, A., 2011, 'Academic literacy tests: Design, development, piloting and refinement', Journal for Language Teaching 45(2), 100-113.

Weideman, A., 2012, 'Validation and validity beyond Messick', Per Linguam 28(2), 1-14.

Weideman, A., 2013, 'Academic literacy interventions: What are we not yet doing, or not yet doing right?', Journal for Language Teaching 47(2), 11-23. http://dx.doi.org/10.4314/jlt.v47i2.1

Weideman, A., Tesfamariam, H. & Shaalukeni, L., 2003, 'Resistance to change in language teaching: Some African case studies', Southern African Linguistics and Applied Language Studies 21(1&2), 67-76. http://dx.doi.org/10.2989/16073610309486329

Wilkins, D., 1976, Notional syllabuses: A taxonomy and its relevance to foreign language curriculum development, Oxford University Press, London.

Correspondence:

Sanet Steyn

sanet.steyn@nwu.ac.za

Received: 25 June 2016

Accepted: 27 Oct. 2016

Published: 30 Mar. 2017