African Journal of Health Professions Education

On-line version ISSN 2078-5127

Afr. J. Health Prof. Educ. (Online) vol.13 n.2 Pretoria Jun. 2021

http://dx.doi.org/10.7196/ajhpe.2021.v13i2.1247

RESEARCH

Do we assess what we set out to teach in obstetrics: An action research study

S Adam (I); I Lubbe (II); M van Rooyen (III)

(I) MB ChB, FCOG (SA), MMed (O&G), Cert Maternal and Fetal Medicine (SA), PhD (O&G); Department of Obstetrics and Gynaecology, Faculty of Health Sciences, University of Pretoria, South Africa

(II) BSocSc, MSocSc, MEd, PhD (Higher Education Studies); Department for Education Innovation, Faculty of Health Sciences, University of Pretoria, South Africa

(III) MB ChB, MMed (Fam Med); Department of Family Medicine, Faculty of Health Sciences, University of Pretoria, South Africa

ABSTRACT

BACKGROUND: Medical education empowers students to transform theoretical knowledge into practice. Assessment of knowledge, skills and attitudes determines students' competency to practise. Assessment methods have been adapted to accommodate educational challenges, but these adaptations have not been evaluated.

OBJECTIVES: To evaluate whether assessment criteria align with obstetrics learning outcomes.

METHODS: We conducted a collaborative action research study in which we reviewed and analysed learning outcomes and assessments according to Biggs' model of constructive alignment. Data were analysed according to the levels of Bloom's taxonomy.

RESULTS: Final-year students have two 3-week modules in obstetrics, with 75% overlap in learning outcomes and assessments. Ninety-five percent of learning outcomes were poorly defined, and 11 - 22% were inappropriately assessed. Summative assessments were comprehensive, but continuous assessments were rudimentary, without clear educational benefit. There was a deficiency in the assessment of clinical skills and competencies, as assessments had been adapted to accommodate patient confidentiality and increasing student numbers. The lack of good assessment practice compromises the validity of assessments, resulting in assessments that do not focus on higher levels of thinking.

CONCLUSION: There was poor alignment between assessment and outcomes. Combining the obstetrics modules, and reviewing learning outcomes and assessments as a single entity, will improve the authenticity of assessments.

Assessment defines what should be learnt and assesses the quality of the knowledge obtained. Different outcomes may require different assessment methods. It is often necessary to integrate various methods of assessment to evaluate the attainment of a learning outcome. Conversely, several learning outcomes can be evaluated with a single assessment method. The assessment results generated by the assessor consist of information regarding the students' performance and their achievement of the learning outcomes.[1,2]

With the shift from teacher-centred learning to outcomes-based, student-centred learning, the focus has moved away from what will be taught towards outlining the outcomes that students are expected to achieve by the end of the course. In outcomes-based learning, outcomes are based on the knowledge, skills and competencies that need to be achieved by the student.[3] All teaching activities and resources need to be related to the learning outcomes to help students achieve them. Therefore, outcomes-based assessments need to be aligned with learning outcomes.[4]

Learning outcomes refer to statements of what the learner is expected to know, understand and comprehend by the completion of the learning process. A unit of learning includes knowledge, skill and methodological outcomes.[5] Ideally, the learning outcomes should be written in a way in which these competencies can be assessed.

The aim of medical education is to educate students so that they can transform knowledge into practice as junior doctors. To achieve this, the lecturer defines the knowledge that needs to be obtained and how that will be achieved. Therefore, the lecturer has to structure this in such a way that the student knows what is required to obtain that knowledge and translate it into the appropriate actions. This is best achieved if the verbs from Bloom's taxonomy are used to describe the learning outcomes.[6,7]

Assessment should assist students to validate their achievement of outcomes.[6,8] It is a challenging task to ensure that assessments are valid, reliable and fair, and that teaching methods and assessment tools are aligned with learning outcomes. Poor assessment practice and misalignment between outcomes and assessment result in negative feedback, student dissatisfaction and poor performance.[8]

Assessment in medical education is vital to the medical student and the public, as it helps to produce competent, capable doctors. Assessment is the driving force that ensures that students learn, and students will learn what they think will be assessed. Assessment tools must support the course and allow students to demonstrate, in a fair, valid and reliable manner, that they have achieved the defined learning outcomes. Assessments should allow students to demonstrate the breadth of their knowledge and skills.[9]

Courses at medical schools should employ a range of assessment tools that are appropriate for testing the curricular outcomes. The intended assessment tools should not disadvantage the medical students or the patients who may be used in clinical examinations.[10-12] As student numbers increase and issues pertaining to patient privacy and confidentiality come to the fore, medical schools need to review their current assessment practices.

Assessments should be conducted across the teaching period, not just at the end, as the process and product of learning need to be assessed continuously. Formative assessments are for learning, assisting students to take control of their learning by assessing their own work. Summative assessments occur after teaching has taken place. Medical students, who are adult learners, are responsible for their own self-regulated learning.[13] A balance between formative and summative assessments increases student engagement and assists in developing self-regulated learners.[14]

Assessment tools should be valid, reliable and of an equal standard, as high-stakes decisions are based on them.[12] The grading of assessments needs to be standardised and as objective as possible. Other components are adequate and timeous feedback to students, and the use of different types of assessments to accommodate different learning styles,[15-17] as this provides students with various ways to demonstrate their knowledge and skills.

When students are close to graduating, their knowledge, skills and attitudes must be thoroughly assessed to determine their fitness to practise.[18] Hence, the appropriateness of learning outcomes and assessment practices, their alignment with each other, and their validity and reliability need to be constantly reviewed and adapted.[19]

The data obtained from assessments are evidence of learning, which require analysis and interpretation (Fig. 1).[14] Data can be qualitative or quantitative, and the way we analyse data depends on the purpose of the assessment. Analysis of the data can identify students' strengths and weaknesses, and can inform the revision of questions, the modification of teaching, or the review of course content and learning outcomes. Furthermore, if the information obtained is discussed in a community of practice, different perspectives are shared, resulting in greater understanding.[14] Good assessment practice drives student learning, and informs the lecturer about the quality of the teaching and the learning experience of the student.

In this study, we evaluated whether the obstetrics assessments align with the learning outcomes of the course offered at the University of Pretoria, South Africa (SA).

Methods

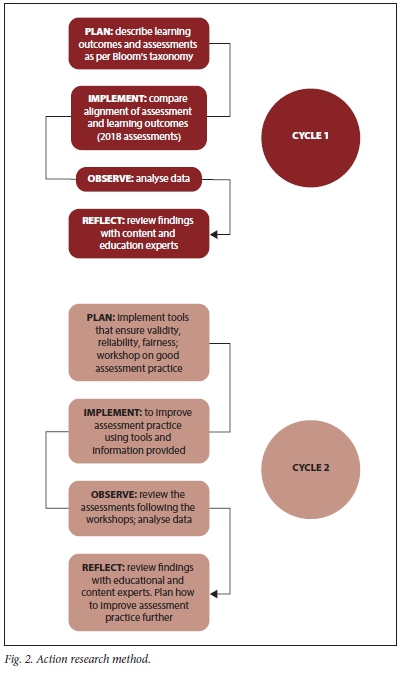

The study design was collaborative action research (Fig. 2),[20-22] which reviewed the current undergraduate obstetrics assessment practices at the University of Pretoria. This study focused on the high-stakes obstetrics examination for final-year medical students.

Medical students have two 3-week workplace-based rotations in obstetrics in the final year of their undergraduate training. One rotation is spent at a tertiary-level hospital in an academic department (referred to as obstetrics), while the other is spent at a district hospital (referred to as community obstetrics). These rotations function independently and each has its own high-stakes examination. In both rotations, formative assessments contribute to the final mark.

The formative assessment for the obstetrics rotation consists of a logbook, essay and single best answer (SBA)-type questions, and 2 spot scenario-based questions (objective structured clinical examination (OSCE)). The summative assessment consists of 5 scenario-based questions (OSCEs) and an oral discussion based on a virtual patient (objective structured patient examination (OSPE)).

The community obstetrics formative assessment consists of a portfolio of patients managed at a district-level hospital. The summative assessment comprises SBA-type questions and 'fire-drills'/simulations of obstetrics emergencies (students are aware of the 5 possible scenarios for the simulations).

First action research cycle

Data were obtained from a review of assessments conducted in the 6 student group rotations (obstetrics and community obstetrics) in the 2018 academic year. As no learning outcomes had been defined, these were formulated together with other content experts as per Bloom's taxonomy[7] and Miller's pyramid of clinical competence,[23] in keeping with the first-day competencies.[18] The learning outcomes were therefore defined after the assessments had taken place, but, as part of this study, before the analysis.

• Plan 1: The components of the obstetrics assessments were described with regard to their structure and the level of Bloom's taxonomy tested.[7]

• Implement 1: Thereafter, the alignment of assessment and outcomes was evaluated using Biggs' model of constructive alignment.[6] The data were analysed with the aid of tick-sheets and a Microsoft Excel 2019 spreadsheet (Microsoft Corp., USA).

• Observe 1: The results were analysed collaboratively with educational and content specialists.

• Reflect 1: Recommendations for improvement of the assessment practice were communicated to the course co-ordinator.
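The alignment check in the first cycle amounts to tallying, per level of Bloom's taxonomy, how many learning outcomes and how many assessment items sit at that level, and comparing the two distributions. The Python sketch below illustrates one way such a tally could be computed. It is not the authors' procedure (they used tick-sheets and an Excel spreadsheet); the level names, the functions `level_shares` and `alignment_gaps`, and the example counts are hypothetical and chosen only for illustration.

```python
from collections import Counter

# Level names follow the revised Bloom's taxonomy (an assumption for this sketch),
# ordered from lower-order to higher-order thinking.
BLOOM_LEVELS = ["remember", "understand", "apply", "analyse", "evaluate", "create"]


def level_shares(tags):
    """Return the proportion of tagged items (outcomes or questions) at each Bloom level."""
    if not tags:
        raise ValueError("no items to tally")
    unknown = set(tags) - set(BLOOM_LEVELS)
    if unknown:
        raise ValueError(f"unrecognised Bloom levels: {sorted(unknown)}")
    counts = Counter(tags)
    return {level: counts[level] / len(tags) for level in BLOOM_LEVELS}


def alignment_gaps(outcome_tags, assessment_tags):
    """Assessment share minus outcome share for each Bloom level.

    A positive value suggests that level is over-assessed relative to the
    learning outcomes; a negative value suggests it is under-assessed.
    """
    outcomes = level_shares(outcome_tags)
    assessments = level_shares(assessment_tags)
    return {level: assessments[level] - outcomes[level] for level in BLOOM_LEVELS}


if __name__ == "__main__":
    # Hypothetical tick-sheet tallies for illustration only; NOT the study's data.
    outcome_tags = BLOOM_LEVELS * 2  # outcomes spread evenly across all six levels
    question_tags = ["remember"] * 4 + ["understand"] * 3 + ["apply"] * 2 + ["analyse"]
    for level, gap in alignment_gaps(outcome_tags, question_tags).items():
        print(f"{level:<10} {gap:+.0%}")
```

With these made-up tallies, the gaps are positive for remember, understand and apply, and negative for analyse, evaluate and create. Comparing proportions rather than raw counts keeps the comparison meaningful when the number of outcomes and the number of assessment items differ.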

Second action research cycle

• Plan 2: The training of facilitators in good assessment practice was identified as a significant gap.

• Implement 2: All facilitators involved in obstetrics teaching, learning and assessment were invited to a workshop on good assessment practice, which was hosted by the educational consultant. Furthermore, tools to improve validity, reliability and fairness of assessments (e.g. blueprints, rubrics, moderation) were implemented during March - April 2019.

• Observe 2: The third assessment of the first semester was evaluated for content and construct validity.

• Reflect 2: The findings were again discussed with educational and content experts, and strengths and weaknesses identified.

Third action research cycle

A plan was devised to further improve the assessment practice and address problem areas.

Ethical approval

Ethical approval for this study was obtained from the Faculty of Health Sciences Research Ethics Committee (ref. no. 164/2018), University of Pretoria.

Results

The two 3-week modules, viz. obstetrics and community obstetrics, function as separate entities. Ninety-five percent of the learning outcomes were poorly defined and there was a 75% overlap in learning outcomes and assessment practices between the modules.

Summative assessments were comprehensive, but formative assessments were rudimentary, without a clear educational benefit. A deficiency in the assessment of clinical skills and competencies was also identified. The lack of rubrics, blueprinting and moderation decreased the validity of the assessments. As a result, assessment did not focus appropriately on the higher levels of thinking and doing.

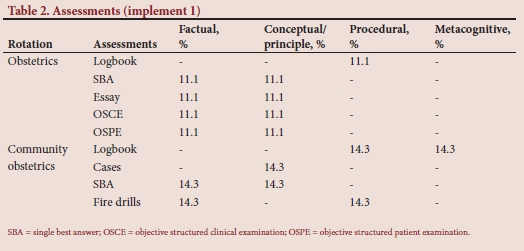

The learning outcomes for the obstetrics and community obstetrics rotations were similar, thus leading to an overlap in assessments. Therefore, the assessments for both rotations were combined and analysed together (Table 1 (http://ajhpe.org.za/public/files/1247-table.pdf) and Table 2 refer to the first action research cycle). Lower-order-thinking outcomes,[7] such as knowledge, understanding and application, were assessed comprehensively, but higher-order-thinking outcomes,[7] such as analysing, evaluating and creating, were inadequately assessed, even though this was a high-stakes assessment of an SA National Qualifications Framework (NQF) level 8 qualification.[18,24]

Furthermore, most of the assessments focused on factual and conceptual principles. Even though obstetrics is a practical discipline, the assessment of procedural skills was deficient (Table 2). Graduate attributes include being a self-regulated, reflective learner.[18,25] However, metacognition was not assessed adequately (Tables 1 and 2).

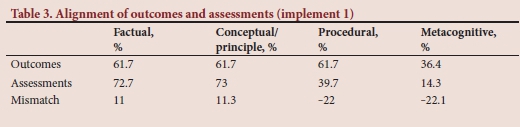

The alignment of assessments and outcomes was poor (Table 3). While the outcomes were fairly distributed across the knowledge dimension,[26] the assessments focused on the knowledge and cognitive domains rather than on procedural and metacognitive knowledge. This led to a 22% over-assessment of lower domains and a 44% under-assessment of higher domains (Table 3).

The results of this action research cycle were discussed with education and content experts and communicated to the Department of Obstetrics and Gynaecology, in particular the head of department and the course co-ordinator.

Areas for improvement that were identified included review of the learning outcomes, education of facilitators of learning regarding good assessment practice and use of tools to ensure a valid, reliable and fair assessment of students, especially as they were assessed in their rotation groups every 7 weeks, i.e. 6 rotation assessments per year.

In the next cycle, the learning outcomes were reviewed by the Department of Obstetrics and Gynaecology, a study guide outlining the curriculum and expectations was made available to students, and all facilitators of learning were invited to a departmental workshop on good assessment practice. The workshop included discussions on constructive alignment, Bloom's taxonomy,[7] how to construct SBA-type questions, analysis of assessments, and tools to ensure fair, reliable and valid assessments, such as blueprinting, rubrics and moderation. At the same time, the Academic Quality Assurance Committee of the School of Medicine, University of Pretoria, proposed that all high-stakes exit assessments in the various disciplines should be audited to ensure validity, reliability and fairness.

The third assessment of the first semester in 2019 was reviewed for fairness, validity and reliability. There was little or no improvement in the SBA questions, with only 6/15 (40%) questions assessing higher-order thinking. The questions were sometimes inappropriate, with ambiguous distractors and poor structure. One essay question was well constructed, while the other was vague. The memorandum for the essay question did not provide enough detail to ensure objectivity in mark allocation.

The current OSCE is a paper-based scenario-based assessment. Thus, knowledge and cognition,[26] rather than clinical skills, are assessed. The questions in this component of the assessment included a fair distribution of lower-order- and higher-order-thinking questions.[7]

The OSPE is a paper-based assessment of an approach to a clinical scenario. A virtual patient is used to ensure fairness (all students have the same case) and to circumvent issues of patient privacy. Students have 15 minutes to prepare a scenario, followed by a discussion of their approach to the case with the examiner. Again, there was a good balance of lower-order and higher-order thinking,[7] but clinical skills[26] were not assessed.

Rubrics and blueprinting were not used in planning this assessment. Although internal moderation did occur, it was superficial: only the OSCE and OSPE were reviewed, without access to the study guide or learning outcomes.

These findings were again discussed with educational and content experts, and will be discussed with the course co-ordinator to identify problems in adhering to good assessment practice.

Discussion

Assessment in medical education is important to the student, the programme and the public. Assessment needs to be continuous and frequent, workplace based where possible, aligned with expected learning outcomes, using tools that meet minimum requirements for quality, and involving the wisdom and experience of multiple facilitators to assess students' progress.[27]

The assessment of clinical competence in medical education is becoming increasingly complex, with larger student numbers, fewer clinical training sites and issues pertaining to patient privacy and confidentiality. Traditionally, clinical assessment methods consisted primarily of lecturer observations during clinical rotations (workplace-based assessment), oral assessments (usually with live patients), and multiple-choice assessments. Increased clinical workload, discontent among students and facilitators of learning with traditional methods of clinical skills assessment, and developments in the fields of psychology and education have led to the development of new modalities that do not employ live patients for clinical assessments. Instead, standardised patients (simulated or virtual) are used to assess performance.[28]

However, this approach needs to be evaluated and improved so that all expected graduate competencies are adequately assessed,[18] especially procedural skills.[26] The current assessment practice needs to be evidence based, locally developed and student driven, with an understanding of educational outcomes and non-cognitive assessment factors.

A major problem identified in this action research is that facilitators of learning in medical education are content experts, but are not trained in good educational practice. The current curriculum is therefore executed in the manner in which the facilitators may have been taught, or as it was passed on to them. They are usually not reflective facilitators of learning, as teaching and learning are not their key areas of interest. This is contrary to the attributes of an educator.[9,25] Instructional planning, delivery and assessment are probably sub-optimal owing to this lack of educational knowledge. Professionalism as an educator may be compromised by competing interests, such as clinical work, service delivery or research.

Collaborative action research is required to address these major challenges in medical education, especially in high-stakes assessments. Best practices in the context of systems and institutional culture and how to best train staff to be better assessors need to be instituted to ensure that graduates meet expected outcomes and possess attributes such as being a lifelong learner, self-reflective practitioner and contributing member of society.[18,25] Finally, we must remember that expertise in medical graduates, not merely competence, is the ultimate goal. Medical education does not end with graduation from a training programme, but should represent a career that includes ongoing learning and reflective practice.[18,25]

Conclusion

The assessment practice in medical education has evolved. However, there is poor alignment between assessment and outcomes, and no assurance that assessment practice is valid and reliable. The employment of good educational practice will improve the authenticity of assessments, but this will require a change in institutional educational culture and compulsory training of facilitators of teaching and learning.

Declaration. None.

Acknowledgements. We would like to acknowledge Prof. Dianne Manning, the Sub-Saharan Africa-FAIMER Regional Institute (SAFRI) and the Department of Obstetrics and Gynaecology, University of Pretoria for their support.

Author contributions. SA and MvR conceptualised the study and developed the research protocol. SA and IL were responsible for data collection and analysis. SA, MvR and IL contributed to compiling and editing the final manuscript.

Funding. None.

Conflicts of interest. None.

References

1. Dochy F, McDowell L. Assessment as a tool for learning. Stud Educ Eval 1997;23:279-298. https://doi.org/10.1016/S0191-491X(97)86211-6

2. Yorke M. Formative assessment in higher education: Moves towards theory and the enhancement of pedagogic practice. High Educ 2003;45:477-501. https://doi.org/10.1023/A:1023967026413

3. Spencer J. Learner centred approaches in medical education. BMJ 1999;318(7193):1280-1283. https://doi.org/10.1136/bmj.318.7193.1280

4. Crespo R, Najjar J, Derntl M, et al. Aligning assessment with learning outcomes in outcomes-based education. IEEE Education Conference 2010:1239-1246. https://doi.org/10.1109/EDUCON.2010.5492385

5. IEEE Learning Technology Standards Committee. IEEE Reusable Competency Definitions (RCD). 2007. http://www.ieeeltsc.org/working-groups/wg20Comp/ (accessed 19 May 2019).

6. Biggs J. Aligning teaching and assessing to course objectives. Teaching and learning in higher education: New trends and innovations, University of Aveiro, 13 - 17 April 2003. https://www.dkit.ie/system/files/Aligning_Reaching_and_Assessing_to_Course_Objectives_John_Biggs.pdf (accessed 16 May 2020).

7. Bloom B. Taxonomy of Educational Objectives: The Classification of Educational Goals. 1st ed. New York: Longmans, Green, 1956.

8. Biggs J, Tang CS. Teaching for Quality Learning at University: What the Student Does. 2nd ed. Philadelphia: McGraw-Hill/Society for Research into Higher Education, 2011.

9. Shumway J, Harden R. AMEE Guide No. 25: The assessment of learning outcomes for the competent and reflective physician. Med Teach 2003;25(6):569-584. https://doi.org/10.1080/0142159032000151907

10. Varkey P, Karlapudi S, Rose S, et al. A systems approach for implementing practice-based learning and improvement and systems-based practice in graduate medical education. Acad Med 2009;84(3):335-339. https://doi.org/10.1097/ACM.0b013e31819731fb

11. Lurie S, Mooney C, Lyness J. Measurement of the general competencies of the accreditation council for graduate medical education: A systematic review. Acad Med 2009;84(3):301-309. https://doi.org/10.1097/ACM.0b013e3181971f08

12. Norcini J, Anderson B, Bollela V, et al. Criteria for good assessment: Consensus statement and recommendations from the Ottawa 2010 Conference. Med Teach 2011;33(3):206-214. https://doi.org/10.3109/0142159X.2011.551559

13. David L. Summaries of learning theories and models. In: Learning Theories. 2019. https://www.learning-theories.com/ (accessed 9 March 2019).

14. EDU 5033. Principles and practice of assessment: L2:(I) learning theories; (II) assessment of learning. https://sites.google.com/site/wongyauhsiung/edu-5033-principles-and-practice-of-assessment/l2-i-learning-theories-ii-assessment-of-learning (accessed 31 May 2019).

15. Gardner H, Hatch T. Educational implications of the theory of multiple intelligences. Educ Res 1989;18(8):4-10. https://doi.org/10.3102/0013189X018008004

16. Herrmann N. The theory behind the HBDI and whole brain technology. 2000. www.herrmann.com.au/pdfs/articles/TheTheoryBehindHBDI.pdf (accessed 26 February 2019).

17. Hughes M, Hughes P, Hodgkinson I. In pursuit of a 'whole brain' approach to undergraduate teaching: Implications of the Herrmann brain dominance model. Stud High Educ 2017;42(12):2389-2405. https://doi.org/10.1080/03075079.2016.1152463

18. Health Professions Council of South Africa. Core competencies for undergraduate students in clinical associate, dentistry and medical teaching and learning programmes in South Africa. 2014. https://www.hpcsa-blogs.co.za/wp-content/uploads/2017/04/MDB-Core-Competencies-ENGLISH-FINAL-2014.pdf (accessed 25 May 2021).

19. Biggs J. The reflective institution: Assuring and enhancing the quality of teaching and learning. High Educ 2001;41(3):221-238. https://doi.org/10.1023/A:1004181331049

20. McNiff J. Action research: A generative model for in-service. Br J In-Service Educ 1984;10(3):40-46. https://doi.org/10.1080/0305763840100307

21. MacDonald C. Understanding participatory action research: A qualitative research methodology option. Can J Action Res 2012;13(2):34-50. https://doi.org/10.33524/cjar.v13i2.37

22. Kemmis S, McTaggart R, Nixon R. The Action Research Planner: Doing Critical Participatory Action Research. 1st ed. Singapore: Springer, 2014. https://doi.org/10.1007/978-981-4560-67-2

23. Miller G. The assessment of clinical skills/competence/performance. Acad Med 1990;65(9):S63-S67. https://doi.org/10.1097/00001888-199009000-00045

24. Department of Higher Education and Training. National Qualifications Framework: Sub-frameworks and qualification types. 2015. http://www.saqa.org.za/docs/brochures/2015/updated%20nqf%20levevl%20descriptors.pdf (accessed 26 May 2019).

25. Shukla S, Limaye R. Graduate attributes desired for 21st century. 2016. http://www.srku.edu.in/pdf/3%20%20Graduate%20Attributes%20%20desired%20for%2021st%20century(1).pdf (accessed 12 March 2019).

26. Macmillan Education. The knowledge dimension. https://www.ucdenver.edu/docs/Ubrariesprovider279/default-document-library/blooms_taxonomy_worksheet6cfe02e6302864d9a5bfff0a001ce385.pdf?sfvrsn=a3d666ba_0 (accessed 25 May 2021).

27. Holmboe E, Sherbino J, Long DM, et al. The role of assessment in competency-based medical education. Med Teach 2010;32(8):676-682. https://doi.org/10.3109/0142159X.2010.500704

28. Howley L. Performance assessment in medical education: Where we've been and where we're going. Eval Health Prof 2004;27(3):285-303. https://doi.org/10.1177/0163278704267044

Correspondence:

S Adam

sumaiya.adam@up.ac.za

Accepted 28 May 2020