African Journal of Health Professions Education

On-line version ISSN 2078-5127

Afr. J. Health Prof. Educ. (Online) vol.12 no.4 Pretoria Nov. 2020

http://dx.doi.org/10.7196/AJHPE.2020.v12i4.1350

RESEARCH

A competence assessment tool that links thinking operations with knowledge types

Y Botma (I); N Janse van Rensburg (II); J Raubenheimer (III)

(I) PhD, RN, RM, FANSA; School of Nursing, Faculty of Health Sciences, University of the Free State, Bloemfontein, South Africa

(II) Master of Nursing, RN, RM, Operating Theatre Nurse; School of Nursing, Faculty of Health Sciences, University of the Free State, Bloemfontein, South Africa

(III) PhD (Research Psychology); Discipline of Pharmacology, School of Medical Sciences, Faculty of Medicine and Health, University of Sydney, Australia

ABSTRACT

BACKGROUND. Although there is a need for a greater number of nurses to meet the demands for universal health coverage, these trained nurses should also be competent. However, assessment of nurses' competence remains a challenge, as the available instruments do not focus on identifying the knowledge level that is lacking.

OBJECTIVES. To report on the development and reliability of an instrument that can be used to assess undergraduate student nurses' competence.

METHODS. A methodological research design was used. The authors extracted items from existing competence assessment instruments, inductively analysed the items and categorised them into themes. The extracted items were used to draft a new instrument. Review by an expert panel strengthened the content and face validity of the instrument. Twenty assessors used the developed assessment instrument to assess 15 student nurses' competence via video footage.

RESULTS. The Cronbach alpha coefficient of 0.90 and intraclass correlation coefficient of 0.85 indicate that the instrument is reliable and comparable with other instruments that assess competence.

CONCLUSIONS. Nurse educators can use the developed instrument to assess the competence of a student and identify the type of knowledge that is lacking. The student, in collaboration with the educator, can then plan specific remedial action.

Healthcare in Africa faces a substantial human resource crisis nested in poverty, a high burden of disease and emerging epidemics and pandemics.[1,2] There is consensus in the literature that not only more nurses are needed but that they should be competent.[2]

Globally, as in Africa, there is an educational shift from content-based curricula to competency-based education. However, interpretations of competency-based education differ.[3] A competent nurse is someone who is 'able to integrate knowledge from all disciplines to identify the problem, understand the theory related to the problem, as well as the appropriate response, treatment and care of the patient ... in real-life'.[4] Nurses should reflect on their practice in order to think about their thinking processes and develop metacognition.[5] Reflective practitioners become lifelong learners.

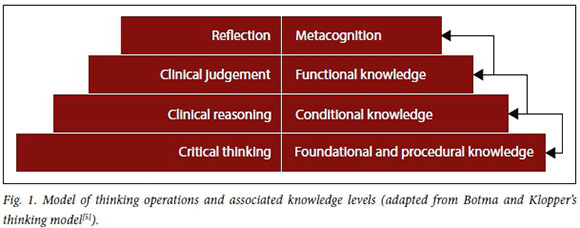

Nurses demonstrate competence in critical thinking, clinical reasoning, clinical judgement and metacognition through assessing patients, diagnosing, and implementing optimal care plans.[6] Fig. 1 shows the conceptual representation of the association between the thinking process and knowledge levels based on Botma and Klopper's thinking model.[5]

According to this model, a person should have foundational and procedural knowledge to be able to think critically, resulting in noticing or identifying a problem.[7-9]

Clinical reasoning would therefore flow from critical thinking when clinical data of a patient are collected and evaluated in a specific context to make a diagnosis,[9] i.e. conditional knowledge.[10] Conditional knowledge portrays insight into the patient's condition and associated circumstances.[11]

Critical thinking and clinical reasoning are in turn building blocks for clinical judgement, i.e. when a nurse formulates a decision about interventions.[5,7] Nurses demonstrate functional knowledge when they decide about treatment options that will be advantageous for a patient.[12] Clinical judgement and the demonstration of functional knowledge indicate the level of understanding of a specific situation and whether the response is appropriate.[10,12] Metacognition involves the assessment of thought processes, referred to as 'thinking about thinking'.[13] During metacognition, nurses analyse their personal clinical performance to identify weaknesses in the reasoning process, and to plan and monitor actions for improvement.[13,14] Metacognitive knowledge is constructed through the process of critical reflection and is referred to as 'new knowledge built on previous knowledge'.[13] It can be argued that a nurse is competent when they can demonstrate all four knowledge levels and associated thinking operations in different contexts.

Botma and Klopper's thinking model[5] suggests that, without adequate foundational knowledge, clinical reasoning and clinical judgement may be compromised. It is, therefore, the responsibility of nursing education institutions to train competent nurses who are 'ready to run' and are competent on all four levels when they have completed their training. However, there is no single definitive tool for assessing the competence of nurses.[6] Becoming competent is a process, and nursing students therefore need support throughout their training as the content and complexity of learning opportunities increase.[6] The developed competency assessment instrument may allow nurse educators to shed light on the thinking operations and levels of knowledge that a student has mastered and what needs to be developed. This article reports on the development and reliability of such an instrument that can be used to assess undergraduate student nurses' competence and development throughout their training.

Methods

A quantitative methodological research approach was used. The design involved defining the construct or behaviour to be measured, identifying competence assessment tools, inductively analysing items from the identified tools, formulating items, developing instructions for users and assessors, and testing the reliability and validity of the instrument.[5]

Development of the assessment instrument

With the assistance of a librarian, the authors identified 16 publications (from 2000 to 2017) of instruments that assessed competence in health sciences. Only 9 of the 16 instruments had comparable definitions of competence and were used for item extraction. The authors inductively analysed and classified the extracted items under the following themes:

• critical thinking (noticing)

• clinical reasoning (interpreting)

• clinical judgement (responding)

• attitude

• communication

• metacognition.

The identified themes correspond with the types of decision-making, as described by Marques[16] and Botma and Klopper's thinking model.[5] Elimination of vague items and arranging items in a logical sequence contributed to the refinement of the draft instrument.

The authors used a 5-point Likert scale that was weighted as follows:

0 = not done (student does not demonstrate any aspect of expected behaviour)

1 = incompetent (student demonstrates some aspects of expected behaviour haphazardly)

2 = competent (student demonstrates most of the aspects of expected behaviour orderly)

3 = exceptionally competent (student demonstrates all of the aspects of expected behaviour orderly and consistently)

na = not applicable (no opportunity to demonstrate expected behaviour during simulated patient scenario).
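The article does not state how item ratings are combined into an overall score. As a purely illustrative sketch, one possible approach is to express the points earned as a percentage of the points achievable, leaving out items rated 'na'. The function name `percentage_score` and the aggregation rule itself are assumptions, not part of the published instrument.

```python
from typing import Optional, Sequence, Union

Rating = Union[int, str]  # 0, 1, 2, 3 or the string "na"


def percentage_score(ratings: Sequence[Rating]) -> Optional[float]:
    """Return points earned as a percentage of achievable points.

    Items rated "na" are excluded from both numerator and denominator.
    This aggregation rule is an assumption made for illustration only.
    """
    scored = [r for r in ratings if r != "na"]
    for r in scored:
        if r not in (0, 1, 2, 3):
            raise ValueError(f"invalid rating: {r!r}")
    if not scored:
        return None  # no item could be assessed
    # The maximum per item is 3 (exceptionally competent).
    return 100.0 * sum(scored) / (3 * len(scored))
```

Under this rule, a student rated 3, 2, 'na' and 1 would earn 6 of 9 achievable points.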

Botma and Van Rensburg's competence assessment tool is available as an appendix (http://ajhpe.org.za/public/files/1350.doc) to this article.

An expert panel consisting of 7 nurse educators in the field of transfer of learning and nurse competence, and who were knowledgeable regarding instrument construction, evaluated the draft instrument for face and content validity.

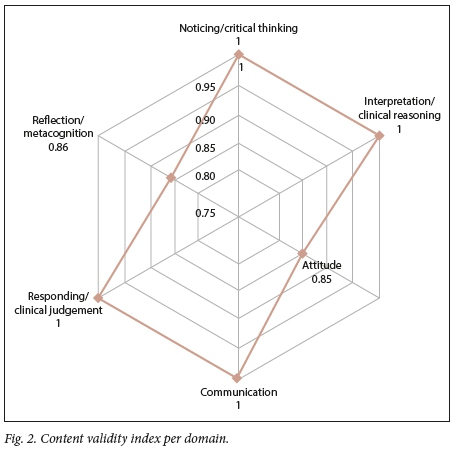

The agreement regarding the abovementioned 6 categories and individual items contributed to the content validity of the draft instrument, as the expert panel evaluated each item against the following criteria: (i) applicable to the category; (ii) measurable; (iii) unambiguously phrased; and (iv) understandable. Fig. 2 gives the content validity index per domain. Four domains have the ideal value of 1 and the lowest value is 0.85, which exceeds the cut-off value of 0.83.[17]
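The article does not describe how the content validity index was calculated. A common convention is to take the proportion of experts who endorse an item as the item-level index, and to average those proportions within a domain. The sketch below assumes that convention. The function names and the True/False coding of expert judgements are hypothetical.

```python
from statistics import mean
from typing import Sequence


def item_cvi(endorsements: Sequence[bool]) -> float:
    """Proportion of experts who endorsed one item (True = endorsed)."""
    if not endorsements:
        raise ValueError("at least one expert judgement is required")
    return sum(1 for e in endorsements if e) / len(endorsements)


def domain_cvi(items: Sequence[Sequence[bool]]) -> float:
    """Average of the item-level indices within one domain (assumed rule)."""
    return mean([item_cvi(item) for item in items])


def meets_cutoff(cvi: float, cutoff: float = 0.83) -> bool:
    """Compare an index with the cut-off value quoted in the text."""
    return cvi >= cutoff
```

With a panel of 7, an item endorsed by 6 experts would have an item-level index of 6/7 under this convention.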

A pilot study was done after the instrument had been validated, during which 7 assessors evaluated archived video footage of students interacting with standardised patients. The assessment instrument was regarded as easy to use, but the quality of the sound and visibility of the footage were poor. Additional cameras, audio-recording machines and positioning of the standardised patient improved the quality of the video footage for the real study.

Population and sample

Two groups, i.e. assessors and students, participated in the study. The student population consisted of 60 second-year undergraduate student nurses, who participated in the compulsory standardised patient simulation activities. These simulation activities are compulsory for educational purposes, but the students could withdraw the footage that captured their interactions for the purposes of research. The biostatistician used simple random sampling to select 15 students who consented to participate in the study.
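Simple random sampling gives every eligible student the same chance of selection. A minimal sketch of such a draw follows, assuming students are identified by hypothetical numeric IDs. The article does not report the software or procedure the biostatistician used.

```python
import random
from typing import Iterable, List, Optional


def draw_sample(student_ids: Iterable[int], n: int,
                seed: Optional[int] = None) -> List[int]:
    """Draw n students without replacement, each with equal probability."""
    population = list(student_ids)
    if n > len(population):
        raise ValueError("sample size exceeds population size")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return sorted(rng.sample(population, n))


# Hypothetical IDs 1..60 standing in for the consenting students.
selected = draw_sample(range(1, 61), 15, seed=2020)
```

Recording the seed allows the same selection to be reproduced for audit purposes.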

The inclusion criteria for the 20 purposefully selected assessors, who all agreed to participate in the study, were that they should be educators or registered nurses who are interested in the facilitation of clinical learning, transfer of learning, clinical judgement or primary healthcare.

Data collection

The second author trained all the assessors and provided opportunities for them to practise using the instrument. Each assessor received a demographic sheet, 15 copies of the assessment instrument, simulation footage of students and patient records written by each student. Assessors completed the instrument at their convenience for all 15 students while they watched the footage of the interaction between a student and a standardised patient. Each assessor couriered the package back to the first author at the cost of the author.

Simulation activities are routine and compulsory learning experiences for undergraduate student nurses after they have completed a learning unit at the relevant school. All simulated learning experiences are routinely captured, because the facilitators use the footage during debriefing. For this research, each student engaged with an aged standardised patient at a primary healthcare clinic. The patient's main complaint was earache and loss of appetite. Students had to assess the patient, link the assessment findings with theory by stating the differential diagnoses, and make a final diagnosis through a process of elimination. They then had to use the adult primary healthcare guidelines to decide, in collaboration with the patient, on the best acceptable treatment option. After they recorded the treatment, the students had to reflect on their performance and state how they would improve their performance in similar circumstances.

Data analysis

The second author coded and captured the data on an Excel spreadsheet (Microsoft Corp., USA).

A biostatistician used SAS/STAT software, version 12.3, SAS system for Windows (SAS, USA), to analyse the data. Cronbach's alpha coefficient test determined the internal consistency of the developed instrument, and the intraclass correlation coefficient test measured the inter-rater reliability of the respondents who used the instrument.
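For readers who want to see what the internal consistency statistic measures, the standard formula for Cronbach's alpha is k/(k-1) multiplied by (1 minus the sum of the item variances divided by the variance of the total scores), where k is the number of items. The sketch below implements that formula in plain Python. It is not the SAS procedure used in the study, and the data layout (one row per completed instrument, one column per item) is an assumption.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for rows of observations and columns of items."""
    if len(scores) < 2:
        raise ValueError("at least two observations are required")
    k = len(scores[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in scores):
        raise ValueError("every observation must rate every item")
    # Sample variance of each item (column) and of the row totals.
    item_variances = [variance(col) for col in zip(*scores)]
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

This version requires complete data, so items rated 'na' would have to be handled before the matrix is passed in.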

Ethical approval

The Health Sciences Research Ethics Committee (HSREC), University of the Free State, approved the research (ref. no. ECUFS NR 49/2014), and all the relevant authorities granted permission to conduct the study. All participants gave informed consent.

Results

Most assessors (80%) were degree qualified - 30% of the 80% had a Master's degree and 5% of the 80% had a doctoral degree. All the assessors were registered as professional nurses for >5 years and therefore had good foundational knowledge of nursing as a science. The majority (80%) had a postgraduate qualification in nursing education that covers assessment of students, among other topics. Furthermore, all the assessors had received additional training on the assessment of students, either through assessment-specific courses or through mentor/preceptor training programmes before this study.

Reliability

The internal consistency of the final assessment instrument, as measured by the Cronbach alpha coefficient, tested 0.90. The intraclass correlation coefficient value measuring the inter-rater reliability tested 0.85.
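The article does not state which form of the intraclass correlation coefficient was calculated. As an illustration only, the sketch below computes one widely used form, the two-way random-effects, absolute-agreement, single-rater coefficient, often written ICC(2,1). The choice of this form and the data layout (one row per student, one column per assessor) are assumptions.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1) where ratings[i][j] is the score rater j gave subject i."""
    n = len(ratings)  # subjects (students)
    if n < 2:
        raise ValueError("at least two subjects are required")
    k = len(ratings[0])  # raters (assessors)
    if k < 2:
        raise ValueError("at least two raters are required")
    if any(len(row) != k for row in ratings):
        raise ValueError("every rater must score every subject")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(col) / n for col in zip(*ratings)]

    # Two-way ANOVA sums of squares without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ratings have no variance")
    return (ms_rows - ms_error) / denominator
```

In the study's design the input would be a matrix of 15 students by 20 assessors. A value near 1 indicates that assessors rank and score students almost identically.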

Discussion

Immonen et al.[18] concluded that assessors should use reliable and valid assessment instruments and that the need to develop consistent and systematic approaches in assessment continues. Clear assessment criteria alleviate the stress that clinical learning facilitators experience during assessment.[18] The developed assessment instrument has a high internal consistency, with a Cronbach alpha value of 0.90, and is comparable with other instruments that measure competence. For example, Juntasopeepun et al.[19] found that the internal consistency of the nurse competence scale varied between 0.85 and 0.88 for the 6 factors. The intraclass correlation coefficient value of 0.85 is remarkable, considering that 20 assessors participated, while most inter-rater studies report on 2 - 3 assessors. Fig. 2 shows that the content validity index per domain is high; thus, the assessment tool is reliable and valid.

An advantage of the developed tool is that it is structured according to the thinking process and knowledge levels. The assessor was able to identify the level where the students struggled and could plan remedial activities with the student. For example, students lack foundational knowledge when they are unable to link the theory to the assessment findings of a patient and cannot identify deviations from the norm. Furthermore, using the developed instrument throughout the training programme may ensure that the thinking operations become automated knowledge.

Conclusions

The internal consistency of the developed instrument and inter-rater reliability are comparable with the Lasater clinical judgement rubric, the competency inventory for nursing students and the nurse competence scale. The developed instrument is unique because it is set according to the knowledge levels and associated thinking operations. Furthermore, the assessor could identify the type of knowledge that is lacking to achieve competence and guide the student in rectifying the identified gap.

Study limitations

The small student sample and the homogeneity of the sample are limitations of the study. The simulated environment and the use of video footage also influenced the results, because the assessors could not validate their observations with the students. In clinical practice, by contrast, assessors can clarify what students mean and position themselves to optimise observation.

The psychometric properties of the instrument should be tested through an exploratory and confirmatory factor analysis. Other health sciences student populations could be included in further studies, as the thinking operations are generic for all students in the health sciences. The instrument should also be tested in the clinical learning environment, as it could be used for integrated assessments.

Declaration. None.

Acknowledgements. The authors acknowledge the effort and time of the 20 assessors and the contributions of the critical reader and language editor.

Author contributions. NJvR did the fieldwork and drafted the manuscript. JR wrote the methods and results sections, while YB edited the manuscript for submission to the journal.

Funding. The authors acknowledge the financial assistance of the National Research Foundation (NRF).

Conflicts of interest. None.

References

1. Asamani JA, Akogun OB, Nyoni J, Ahmat A, Nabyonga-Orem J, Tumusiime P. Towards a regional strategy for resolving the human resources for health challenges in Africa. BMJ Glob Health 2019;4(Suppl 9):e001533. https://doi.org/10.1136/bmjgh-2019-001533

2. Agyepong I, Sewankambo N, Binagwaho A, Piot P. The path to longer and healthier lives for all Africans by 2030: The Lancet Commission on the future of health in sub-Saharan Africa. Lancet 2017;390(10114):2803-2859. https://doi.org/10.1016/s0140-6736(17)31509-x

3. Fernandez N, Dory V, Chaput M, Charlin B, Boucher A. Varying conceptions of competence: An analysis of how health sciences educators define competence. Med Educ 2012;46:357-365. https://doi.org/10.1111/j.1365-2923.2011.04183.x

4. Nursing Education Stakeholders (NES) Group. A proposed model for clinical nursing education and training in South Africa. Trends Nursing 2012;1(1):49-58.

5. Botma Y, Klopper H. Thinking. In: Bruce J, Klopper H, eds. Teaching and Learning the Practice of Nursing. 6th ed. Pretoria: Pearson, 2017:189-210.

6. Lourenço T, Gamara P, Abreu Figueiredo R. The relevance of critical thinking for the selection of the appropriate nursing diagnosis. EC Nurs Healthcare 2020;2(2):1-3.

7. Bruce J, Klopper H, eds. Teaching and Learning the Practice of Nursing. 7th ed. Pretoria: Pearson, 2017.

8. Tanner CA. Thinking like a nurse: A research-based model of clinical judgment in nursing. J Nurs Educ 2006;45(6):204-211. https://doi.org/10.3928/01484834-20060601-04

9. De Carvalho EC, Oliveira-Kumakura AR de S, Morais SCRV. Clinical reasoning in nursing: Teaching strategies and assessment tools. Rev Bras Enferm 2017;70(3):662-668. https://doi.org/10.1590/0034-7167-2016-0509

10. Biggs J, Tang C. Teaching for Quality Learning at University. 4th ed. Berkshire: McGraw-Hill Education, 2011.

11. Botma Y, van Rensburg GH, Coetzee IM, Heyns T. A conceptual framework for educational design at modular level to promote transfer of learning. Innov Educ Teach Int 2013:1-11. https://doi.org/10.1080/14703297.2013.866051

12. Sedgwick MG, Grigg L, Dersch S. Deepening the quality of clinical reasoning and decision-making in rural hospital nursing practice. Rural Remote Health 2014;14(3):1-12.

13. Frith CD. The role of metacognition in human social interactions. Philos Trans R Soc Lond B Biol Sci 2012;367(1599):2213-2223. https://doi.org/10.1098/rstb.2012.0123

14. Lasater K. Clinical judgment development: Using simulation to create an assessment rubric. J Nurs Educ 2007;46(11):496-503. https://doi.org/10.3928/01484834-20071101-04

15. LoBiondo-Wood G, Haber J. Nursing Research. Methods and Critical Appraisal for Evidence-based Practice. 7th ed. St. Louis: Mosby-Elsevier, 2010.

16. Marques M de FM. Decision making from the perspective of nursing students. Rev Bras Enferm 2019;72(4):1102-1108. https://doi.org/10.1590/0034-7167-2018-0311

17. Polit D, Beck C. Nursing Research: Generating and Assessing Evidence for Nursing Practice. 10th ed. Philadelphia: Wolters Kluwer, 2017.

18. Immonen K, Oikarainen A, Tomietto M, et al. Assessment of nursing students' competence in clinical practice: A systematic review of reviews. Int J Nurs Stud 2019;100:103414. https://doi.org/10.1016/j.ijnurstu.2019.103414

19. Juntasopeepun P, Turale S, Kawabata H, Thientong H, Uesugi Y, Matsuo H. Psychometric evaluation of the nurse competence scale: A cross-sectional study. Nurs Health Sci 2019;21(4):487-493. https://doi.org/10.1111/nhs.12627

Correspondence:

Y Botma

botmay@ufs.ac.za

Accepted 30 July 2020