African Journal of Health Professions Education

On-line version ISSN 2078-5127

Afr. J. Health Prof. Educ. (Online) vol.12 n.1 Pretoria Mar. 2020

http://dx.doi.org/10.7196/AJHPE.2020.v12i1.1135

RESEARCH

Assessment consolidates undergraduate students' learning of community-based education

I Moodley; S Singh

PhD; Discipline of Dentistry, School of Health Sciences, University of KwaZulu-Natal, Durban, South Africa

ABSTRACT

BACKGROUND: Community-based education (CBE) is an empirical education experience that shifts clinical education from traditional to community settings to provide health sciences students with meaningful learning opportunities. However, assessing the effectiveness of these learning opportunities is a challenge.

OBJECTIVES: To describe the methods used for assessment of CBE by the various disciplines in the School of Health Sciences, University of KwaZulu-Natal (UKZN), Durban, South Africa, and to determine how they were aligned to the anticipated learning outcomes.

METHODS: This qualitative study consisted of a purposively selected sample of 9 academics who participated in audio-taped interviews and focus group discussions, with the data being thematically analysed. Ethical approval was obtained from UKZN.

RESULTS: The disciplines in the School of Health Sciences used various assessment methods, ranging from simple tests, assignments and case presentations to more complex clinical assessments, blogging and portfolio assessments. Multiple methods were required to meet the anticipated learning outcomes of CBE, as a single assessment would not achieve this.

CONCLUSION: The study findings indicated that assessment plays an important role in consolidating student learning at CBE sites, with multiple assessment methods being required to achieve graduate competencies in preparation for the workplace. Choice of assessment methods must be contextual and fit for purpose to allow for overall student development.

Community-based education (CBE) is an empirical learning experience that shifts clinical education from traditional to community settings to provide students with meaningful opportunities for self-development, improving their clinical skills, problem solving and lifelong learning.[1-3] Health sciences students are exposed to real-life situations that can contribute to a deeper understanding of social determinants of health and various cultures, improved communication skills and a more positive, compassionate attitude towards patients.[4,5]

The University of KwaZulu-Natal (UKZN), Durban, South Africa, in its effort to be more community engaged, strives to transform health professionals' education from a traditional structure to one that is more competency based, which adds value to the communities it serves. The School of Health Sciences drives this agenda by embracing CBE with similar aims and objectives into current curricula across all disciplines, including audiology, biokinetics, exercise and leisure sciences, dentistry, occupational therapy, optometry, pharmaceutical sciences, physiotherapy and speech language pathology.

Clinical training in these disciplines usually occurs at campus clinics and designated off-campus academic training sites.[6] However, with CBE being introduced in 2016, part of this clinical training has now shifted to various sites, such as primary and community healthcare centres and decentralised sites, including regional and district hospitals. At the decentralised sites, students have an extended clinical exposure for 2 - 6 weeks, depending on discipline-specific requirements for clinical training. The university provides support for this type of training by ensuring transport to the sites and accommodation for students. Academics prepare students for the decentralised sites by ensuring that they have attained an adequate level of competency in terms of clinical exposure and theoretical knowledge before they depart. Clinical staff at decentralised sites are responsible for monitoring and supervising students on a daily basis as an informal part of their work.

The main outcomes of CBE are to provide students with clinical skills in primary healthcare and to equip them with graduate competencies (Table 1) to be empathic healthcare practitioners, communicators, collaborators, leaders, managers, health advocates, scholars and professionals - able to function effectively in a variety of health and social contexts, as noted in the institution's business plan.[6] A key component of CBE is reflection on learning, which facilitates the connection between practice and theory, thought and action, and fosters critical thinking.[1,5] From the literature, three main theories explain how learning occurs in a community environment: social constructivism, Kolb's experiential learning theory[7] and situated learning theory,[8,9] in which students learn through observation, experience and reflection and construct their own understanding.

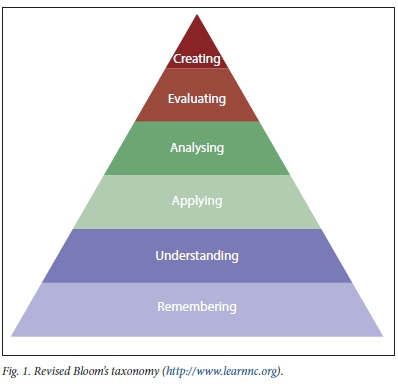

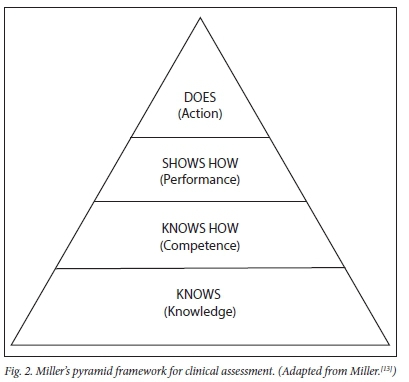

While students are at a distant learning site, academics need to determine if learning does in fact occur, and if CBE has achieved its intended outcomes. Therefore, assessment of CBE is important, as it drives learning[10] and inspires students to set higher standards for themselves.[11] The main reasons for assessments in health sciences education are to optimise student capabilities and protect the public against incompetent clinicians.[11] Assessments should therefore test knowledge, technical skills, clinical reasoning, professionalism, communication skills and reflection.[11] In an academic setting, assessments are formal and well structured, taking the form of tests and assignments. To test knowledge, academics in various disciplines use Bloom's model for written assessments, starting with testing lower-order thinking levels of remembering, understanding and applying, and continuing to higher-order thinking levels of analysing, evaluating and creating (Fig. 1).[12] Similarly, academics use Miller's model to assess clinical competence, starting with cognition-testing knowledge (knows), competence (knows how), performance (shows how) and action (does) (Fig. 2)[13] - conducted within a closed academic clinical training environment. However, development of competence in different contexts may occur at different rates, depending on a student's ability to adapt to various clinical settings.[11] The question then arises: can the methods used in formal academic settings be applied to test learning that occurs in community-based settings?

The literature indicates that there is a trend towards continuous assessment in the form of small formative evaluations throughout the year rather than a single summative one at the end of the year.[14] However, designing assessments in CBE settings may prove challenging for several reasons: the learning environments are not standardised, making them difficult to control,[15] and students are assessed by a number of tutors with varied levels of educational skills.[16] Moreover, it can be challenging to assess learning experiences that do not require memorising facts.[17] Personal growth and change in attitude towards others with greater needs are also hard to measure. In addition, the principles of assessment must be followed when deciding on which methods to use, i.e. assessments should be valid (measuring what is intended to be measured), reliable (consistency in marks obtained by several examiners), transparent (able to match the learning outcomes) and authentic (student's original work - no signs of plagiarism). Appropriate assessments are therefore required to measure both clinical skills and personal attributes that are truly reflective of the social context of the learning experience. In the literature, clinical supervisors' observations and impressions of students are the most common assessment method used in community-based settings.[11] However, this method is often criticised as being subjective, especially if there are no clearly articulated standards.[11] Multisource assessment, in which a student is assessed by different members in a clinical setting, such as peers, nurses and patients, to provide feedback on a student's work habits, ability to function in a team and patient sensitivity, is another assessment method.[11] Self-assessment in the form of portfolios, in which reflections on technical skills and personal development are documented, is also used.[11]

Disciplines in the School of Health Sciences conduct CBE individually and collaboratively, with different levels of implementation and assessment. The researcher (IM), a lecturer in the Discipline of Dentistry with >10 years of experience in training dental therapists and oral hygienists and a PhD promotor, aimed to determine the methods used by other disciplines with a view to building capacity and learning from colleagues. Therefore, the objectives of this study were to describe the assessment methods used in CBE in the School of Health Sciences at UKZN and to determine how these assessments were aligned to the learning outcomes.

Methods

Research design

This was a qualitative, descriptive and explorative study. The theoretical orientation is underpinned by the constructivism paradigm. This orientation facilitated the exploration of CBE using various data sources and methods to ensure that the phenomenon was not viewed through a single lens but through multiple lenses to obtain a deeper understanding of it. In constructivism, knowledge is obtained through social construction, mainly through subjective understandings of people's experiences and their interactions with each other. This study used different qualitative methods, such as interviews and focus group discussions, to obtain information on assessment methods used for CBE, and explored the extent to which each method achieved its learning outcomes.

Participants

A purposive sampling method was used to select the study sample. The academic leader of teaching and learning in the School of Health Sciences was selected for an interview for expert opinion on the roll-out of CBE in the School. The researcher sent emails to the academic leaders of each of the health sciences disciplines, asking them to nominate one academic currently involved with CBE to participate in a focus group discussion. The researcher, who has a professional working relationship with each participant, sent email invitations to the identified academics who agreed to participate in the study. A total of 9 participants (A1 - A9), comprising the academic leader and one academic from each discipline, agreed to participate and provided written informed consent. The participants comprised 8 women and 1 man, ranging in rank from lecturer to professor and having 5 - 25 years of experience. An information sheet was given to each participant before data collection, outlining the reason for the study.

Data collection

Data were collected using two methods. Firstly, a semi-structured, face-to-face interview using mainly open-ended questions was conducted with the School's academic leader of teaching and learning to gain a better understanding of CBE at managerial level. Sample questions included: What is the role of assessment in community-based teaching? Who should be involved in the assessment? The interview took place in the office of the academic leader and lasted ~30 minutes.

Secondly, the researcher facilitated focus group discussions in the relaxed environment of the boardroom in the Discipline of Dentistry in the presence of a research consultant, who made field notes during the discussions. As all academics were not available simultaneously owing to work commitments, two focus group discussions were conducted separately on different days, each with 4 participants. The researcher, with the assistance of the consultant, developed a set of open-ended questions to guide the focus group discussions, focusing on their current CBE projects, their experiences and the role of assessment. Sample questions included: What are your views on assessing community-based training? Can you suggest appropriate methods you use in assessing community-based training? The focus group discussions lasted ~65 minutes and the same set of questions was used in both discussions.

The interview and focus group discussions were conducted in English and audio recorded. A research assistant transcribed the recordings verbatim, after which the data were cleaned before analysis. The researcher engaged the services of the research consultant to assist with the thematic data analysis, which followed the 6-phase process of Braun and Clarke.[18] The researcher and consultant independently read through the transcripts several times to identify familiar patterns. Initial coding was undertaken by identifying segments that could be organised into meaningful categories relating to the objectives. Open coding was done manually by writing notes on the transcripts. Several codes were linked together in axial coding and the core categories were collated through selective coding. The different codes were then sorted and collated into large overarching themes and subthemes. The researcher and consultant compared the themes that were worked on independently and collated them. The collated extracts were reviewed to check whether they formed a coherent pattern and then refined, discarding certain extracts not falling into themes, until data saturation was reached.[18]

Furthermore, the researcher conducted a review of the assessment methods, using an inductive analysis process and tabling the results.

Credibility, a form of internal validity in qualitative research, was established by using varied research methods, i.e. interviews and focus group discussions, to obtain the data. Credibility was further established through peer debriefing, which was undertaken by another member of the research team who reviewed the data collection methods and processes, transcripts and data-analysis procedures, and provided guidance to enhance the quality of the research findings.[19] Transferability, which relates to external validity, was facilitated by the use of a purposively selected sample, thereby providing a thick description of the context of the enquiry.[20] This aspect was further enhanced by comparing research findings with those in the current literature. Dependability, which determines consistency in research findings of the same participants and context, was achieved through the use of member checks. The analysed data were sent to 2 participants from each focus group to evaluate the interpretations made by the researcher and to provide feedback.[20] Dependability was further enhanced by having the researcher and the research consultant, as a co-coder, analyse the same data and compare the results. Confirmability was established through quotations of actual dialogue of the respondents. Participant confidentiality and anonymity were maintained.

Ethical approval

This study was part of a larger study on CBE conducted in the School of Health Sciences, UKZN. The larger study explored the intended role of CBE being rolled out in the School, described the perceptions of academics from the different disciplines on this teaching strategy, explored interprofessional learning opportunities for dental therapy students in public, private and non-governmental organisations and obtained the perceptions of final-year dental therapy students participating in CBE projects. This study forms the part concerned with academics' perceptions of CBE. Ethical approval was obtained from the Humanities and Social Sciences Research Committee, UKZN (ref. no. HSS/1060/015D).

Results

Based on the responses of the interviews and focus group discussions, two main themes emerged regarding assessment in CBE: the assessment process and the methods of assessment used. Furthermore, a review of assessment methods is given and discussed.

Theme 1: The assessment process

Under this theme, three subthemes arose: the relevance of assessments in CBE, who should perform the assessment and how assessments should be done.

The relevance of assessments in CBE

All respondents in this study agreed that assessment was an integral part of CBE to ensure that students remain engaged, as supported by the following quotes:

'Yes, definitely ... assessment and education for me goes hand in hand. I cannot split the two.' (A1)

'The good thing about assessment is that it gives them an opportunity to reflect on their practice, ... so you can see the learning, the growth and to ensure that this is something they do as a lifelong practice, not just in this module.' (A2)

'The assessment actually forces them to understand the different professions that they are working with ... when they are out there, you do not know what they are imbibing.' (A3)

'Assessment plays a very big role and is taken very seriously.' (A5)

'There is no student who will take the training seriously if they know that there is no sort of assessment.' (A7)

Who should perform the assessment?

Generally, academics in higher-education institutions are responsible for teaching the course content and ensuring that skills are transferred through assessment. In this study, the academics in the focus groups raised concerns about who should conduct assessments, as the competence of clinical supervisors varied among the programmes, as did the participation of academics at such learning sites:

'Now with the shift towards decentralised training because it is going to be community based, my problem is our students are going to go to these complexes that are further away from us. Who is responsible for the assessment? What framework or tools are available? The way we assess right now is a very objective assessment, but are the people who are supervising our students equipped to use that assessment tool?' (A8)

While some respondents believed that both academic staff and clinical supervisors should be equally involved with assessment, given their differing roles in imparting knowledge and skills, others believed that clinical staff could be empowered, through training, to assess students, as illustrated by the following quotes:

'It will have to be both because you need the academic side of it, as you know about the assessment, you understand it better, how assessment works. The clinical supervisor has not been trained formally in assessment, but from their experience, they can be roped in. They cannot assess a student only, but you need them as well, because they have worked with the student. So ... we need to get those people comfortable with assessing this student.' (A1)

'Can be done if staff at these sites are trained and willing to do it. However, they should be monitored by university staff.' (A4)

'Yes, the supervisors are there. They [students] get a clinical evaluation mark and that will be what the supervisor gives them over the 6 weeks. There can be certain criteria they follow to allocate marks.' (A2)

However, some participants in the focus group argued that clinical supervisors are reluctant to become actively involved with student supervision and assessment, as highlighted by the following remarks:

'The other challenge is that there are perceptions from the Department of Health that this is an outside programme or an outside responsibility that is being imposed on them.' (A7)

'... many of the health professionals in the hospital facility are young, as this is a fairly new profession in the public sector. They are still finding their feet. They are in no position to clinically supervise.' (A6)

Some participants of the focus group offered solutions to overcome the challenges of supervision and assessments, as suggested by the following quotations:

'The one solution that we had was train the trainer. We bring all the clinical staff into university, we get a workshop going and then we do programmes with them and then we do sessions at the end where we get them to watch. We were thinking of getting videos and getting them to watch and assess, so there is inter-reliability.' (A8)

'I agree that was also a strategy that all those who would be involved in the training should be trained first by the College of Health Sciences.' (A7)

How assessments should be done

Senior management of teaching and learning believed that assessment should be formative (ongoing) rather than summative:

'... there are very interesting ways of looking at assessment ... it must be continuous assessment. You cannot have exams on something like this. It is continuous assessment where every step of the way a student is taught something; it is assessed if he knows it. If he does not know it, you go a step back and you teach him.' (A1)

Theme 2: Methods of assessment

From the focus group discussion, it was established that only 1 of the 8 disciplines had very limited participation in CBE, with no assessments being conducted. Academics in the other 7 disciplines conducted their assessments using varied methods that included oral, written, clinical, online, peer and multiple modes, as described below.

Oral assessment

The academics from 7 disciplines used some form of oral assessment, which included seminars, case and handover presentations and oral examinations.

• Seminars, case and handover presentations

The seminars were oral presentations on topics that were well researched and presented, using Microsoft PowerPoint, to an audience of peers and academics. Case presentations on particular patients attended to were also a common method of oral assessment. Academics in the School of Health Sciences used this type of assessment to assess knowledge and communication skills. Some of these assessments are conducted summatively at the end of the block at the clinical site by academics from individual disciplines, while others are conducted as group assessments by academics from different disciplines, with written documentation to support the oral presentation, as illustrated by the quotes below:

'The whole team is expected to see 1 patient - then all students across the professions present the case and each student is expected to present from their professional perspective, and they each get a mark for this.' (A5)

'Handover presentation - where they talk about all the projects and all the clients they have seen, do a verbal presentation and also hand in a written document which is e-filed and stored as an information base for future rotations, and they get a mark for this.' (A5)

'Case presentations on the patients they have managed to an audience of clinical, academic staff and fellow students at the end of a clinical block at the community site.' (A8)

The academics reflected that the main advantages of case presentations were teamwork and the promotion of interprofessional collaboration:

'We really grow them in those case presentations because they do it, they plan it collaboratively and they present it collaboratively and they do not necessarily present on the audio part, they may present on the OT [occupational therapy] part. It actually forces them to really understand to give value ...' (A2)

However, they found that in group case presentations, weaker students might go undetected:

'The disadvantage of what we do at the moment with case presentations, and handovers particularly, is that when they are doing group work, we have very little opportunity to hone in on the weak individual student until the exam, and a student can slide, based on competent group members who do not want their mark to be compromised. So, they will pick up the slacker, they will work harder to make sure this group gets a good mark.' (A2)

• Oral examination

Some disciplines conducted an oral examination, as it enabled assessment of knowledge and communication skills with academics:

'We also have an individual oral exam that covers a lot of the theory behind their thinking about why they are doing it, what primary healthcare principles are evident in the programme ...' (A2)

Written assessments

This study showed that disciplines also used written assessments, such as assignments, essay writing and portfolios of evidence, to test knowledge and writing skills.

• Assignments and essay writing

Some academics reported using assignments as an assessment method, with varying levels of success. Assignments test a student's ability to present a clear, concise summary of evidence of experiential learning:

'An assignment that is huge, some of them are 50 pages long, is submitted. Essays too are used. A set topic is given to them, which asks them to unpack through theory what they are seeing and engaging with and to think things through using a very rich theoretical focus.' (A5)

• Portfolio of evidence

In this study, academics showed strong support for the use of portfolios as an effective means of assessing CBE. A portfolio is a compilation of work over time, which they regarded as providing a good overview of a student's abilities:

'Students need to produce a portfolio of evidence of their experience of what they learnt at these sites, their weaknesses and their strengths.' (A4)

'The best, the most efficient way of assessing is a portfolio.' (A2)

'A detailed written report of work done and their observations in a workplace.' (A7)

Some participants in the focus group argued that a disadvantage of portfolios was the time taken to mark them:

'The portfolio assessment in itself is a nightmare in terms of managing it with the limited resources we have and the time as staff.' (A2)

Clinical assessment

This involved assessment of a clinical procedure and logbook entry.

• Assessment of a clinical procedure

Three of the 7 disciplines used this assessment, which academics conducted at clinical sites. It entailed direct observation of a student's interview with a new patient, history taking, diagnosing and treatment planning, which were then presented, as well as performing a clinical procedure on a patient observed by a lecturer, for which a mark was allocated:

'They get a clinical evaluation mark and that will be what the supervisor awards them over the 6 weeks ... There are certain criteria they allocate. This makes up 50% of their clinical assessment mark and is based on what the supervisors are saying on a daily basis when they are out there. The other 50% comes from group work, which is the case presentation.' (A2)

'Clinical assessment by trained health professionals in hospitals.' (A6)

• Logbook entry

Academics also reported using logbooks to assess CBE. A logbook provides students with a list of clinical procedures or tasks that must be completed, with clinical staff verifying by signature that these tasks were adequately performed. It also documents the range of patient care and learning experiences undertaken by students as a means of self-reflection. Students also had an opportunity to comment on their own work, with the staff rating them according to level of competency:

'Daily assessments and entry into logbook at the site, which is marked by the clinical supervisors.' (A4)

Online assessment

The advancement of information and communication technology has expanded the learning environment to allow students to learn anytime and anywhere. Academics in the health sciences made use of the university's e-learning platform to assess their students. They indicated that it provided a communication platform for academics when students are at a decentralised learning site and promoted self-learning, as students reflected on their work progress.

'They have got the weekly blog and before they go in ... they know in advance what they are going to be doing. They submit a plan at the beginning of the week, which is reviewed and assessed in terms of have they allocated their time to do whatever they are doing and then from the blog we get a better idea of how the week went.' (A2)

'... that blog gives them a chance to reflect on their practice, ... so you can see the learning, the growth and development and even in the exam they are usually questioned about the blog and also to ensure that this is something they do as a lifelong practice, not just in this module.' (A3)

'Blogging - they need to blog every week. It is about writing and reflecting and thinking things through using Kolb's learning cycle during the blogs.' (A5)

Peer assessment

The academics indicated that peer assessment was a useful tool to assess CBE, as it enabled students to evaluate their colleagues, alongside academics, thereby ensuring fairness and consistency of assessment. It also encourages reflection by students as they become more aware of how their work is evaluated:

'We also have peer assessment on the last day, which is very strong, because when the students get their feedback they pull up their socks. They will say this person does not come for equipment, they just pitch up late on the bus ... so they get quite brutal ... They just say it as it is, so it does actually make students reflect also on their performance.' (A2)

The disadvantage raised in this study was that students tend to be biased towards friends:

'Sometimes the students are biased towards their friends with the peer assessments, because the students will inflate the marks.' (A4)

Review of assessment methods

A review of the assessment methods indicated by the academics in theme 2 was conducted using inductive analysis. Each assessment strategy was examined for its strengths and limitations, and how it related to learning outcome and development of graduate competencies. It also showed how each method ranked against the revised Bloom's[12] taxonomy and Miller's[13] framework of clinical competence (Table 2).

Discussion

In this study, academics from the School of Health Sciences considered assessment an important aspect of CBE, inculcating a habit for self-reflection that can contribute to lifelong learning. It serves as a means of determining whether a student can progress to the next level and exit the programme with key professional competencies and relevant technical and non-technical skills in communication, collaboration, scholarship, leadership and advocacy, as outlined in the business plan of the School.[6] This is supported by Epstein,[11] who asserts that assessment drives students, motivates and directs future learning to incrementally improve their capabilities from a student to graduate to health professional.

In institutions of higher education, academics are responsible for assessments, with the literature indicating a change from single-test methods to multisource assessments.[14] In this study, academics were of the opinion that part of this responsibility should be shared with clinical supervisors at community-based sites. They were also willing to conduct training workshops to empower clinical supervisors. This is supported by Doherty,[21] who views the clinical supervisor as a personal mentor and role model who contributes towards improving student clinical and communication skills. According to Doherty,[21] clinical supervisors should be actively involved with assessment, but where they are unable to do so, they need to be mentored. Ferris and O'Flynn[14] assert that students should be given a chance to judge their own work and that of fellow students, as self-reflection and self-assessment ensure active engagement with theory and practice and a deeper learning experience that promotes lifelong learning.

In support of this, the literature affirms that formative assessment is the most appropriate way to assess CBE, as it guides future learning, promotes self-reflection and instils values.[11,22] Competence is developmental, as students start off as novice learners, only knowing theory, then progress to applying it in clinical situations. By engaging with patients on repetitive rotations, they reflect and learn through trial and error to effectively manage patients in a professional manner.[11] Formative assessment therefore aids in the progress of a student from novice to competent professional, with feedback from assessors to guide the learning.[11]

It was observed that academics used a variety of methods to assess CBE, ranging from simple tests, assignments and essays, which test lower-order thinking, to complex case presentations, clinical assessments, blogging and portfolio assessments, which test higher-order thinking. Table 2 indicates that most of the methods used by academics tested higher-order thinking, according to Bloom[12] and Miller.[13] This is relevant, as those who participate in CBE programmes are exit-level students who need to meet graduate competencies in preparation for the work environment. Most of the methods meet >2 of these competencies, but not all, while assessment of the roles of leader, manager and advocate is lacking.

One of the common methods of assessment used by academics in this study was oral case presentations. Green et al.[23] support this type of assessment, as it tests a student's clinical reasoning, decision-making and organisational skills, and establishes their ability to determine what information is required for a good presentation. More importantly, it is the primary mode of communication between healthcare professionals and facilitates efficient patient care.[23] The added value of case presentations is that they afford students the opportunity to learn and master this mode of communication while in training.[23] Furthermore, oral assessments test students' knowledge and communication skills, as well as their ability to work with other health professional students, thus contributing towards graduate competencies in the roles of healthcare practitioner, communicator, collaborator, scholar and professional. In terms of Bloom's[12] taxonomy, this method tests the understanding of knowledge, while in Miller's[13] model of clinical competence, it tests competence (knows how).

In our study, oral examinations were also used to assess CBE. However, they have been criticised in the literature for being unreliable and biased, with inconsistencies in questioning and marking.[24] Moreover, students are tested under pressure within a limited time, which could be particularly difficult for students for whom English is a second language.

Written assessments were also commonly used by academics in this study. This method is supported in the literature, as Al-Wardy[22] affirms that assignments and essays are good for processing and summarising information, as well as applying it to new situations. However, Epstein[11] argues that written assessments have little value unless they are contextual and include clinical scenarios and questioning. Written assessments rank low on Bloom's[12] taxonomy, as they test remembering and understanding of knowledge, while in Miller's[13] framework they test only cognition (knows) and competence (knows how). In terms of graduate competencies, written assessment can contribute to developing the student in the roles of healthcare practitioner and scholar.

In this study, some academics believed that a portfolio of evidence was the best method to assess CBE. This is supported in the literature, as a portfolio is a collection of students' work that shows their effort, progress and achievement over time through self-reflection.[24] Friedman et al.[25] are of the opinion that portfolios are an authentic way to assess a student's personal real-world experience of integrating learning across a wide range of personal, academic and professional development. A portfolio directs students to develop self-learning and autonomy, transferring responsibility for learning from the teacher to the student. Turnbull[26] also finds it to be a reliable, valid and feasible form of assessment. However, Al-Wardy[22] argues that it is not very practical, because it is difficult for students to compile and time-consuming for academics to mark.

Many academics in this study used clinical assessment in CBE. In the literature, assessment of a clinical procedure is the most common tool and is ranked high, as it is a valid evaluation of clinical competence.[11] Such assessments give students the experience necessary to manage patients on a day-to-day basis.[11] They groom a student to meet graduate competencies of being a caring healthcare professional and cross-cultural communicator, as they provide detailed information on student-patient interaction in making a diagnosis and designing a treatment plan in the patient's best interest. This type of assessment also ranks high in Bloom's[12] taxonomy (applying, analysing and creating) and Miller's[13] framework (action/does). However, Al-Wardy[22] argues that clinical assessment cannot be very reliable, because it lacks standardisation and there is a limited sampling of skills,[2] as students are normally assessed on a single patient and may not perform at their best on that given occasion.

In our study, it was found that keeping a logbook of all the clinical procedures at a community-based site is an effective way of assessing CBE. According to Blake,[27] keeping a logbook is very useful for focusing a student's attention on attaining important objectives within a specific time period. This is a practical way of assessing a student at a decentralised site, and aligns directly with the graduate competencies of healthcare practitioner, communicator, scholar and professional. However, for logbooks to be effective, Al-Wardy[22] recommends the use of checklists or rating scales for assessing specific behaviours, actions and attitudes, which will also ensure standardisation of marks allocated by the clinical supervisor and academic.

Blogging is one of the newer methods used by academics in this study. It is well documented in the literature as providing a rich situated learning environment that encourages knowledge creation, sharing of thoughts and opinions, creativity, interpretation of materials and reflection, which are more often applied than the structured exercises in a classroom setting.[28,29] However, Boulos et al.[30] note that blogging does not support learning when used in an unplanned manner. While there may be many advantages to blogging, access to computers and the internet may be a problem for students at UKZN, as there may be limited resources at decentralised community-based sites. Blogging has the potential to mould a student into being a good healthcare practitioner, scholar and professional if it is used for knowledge generation and application. When used appropriately, it ranks high in Bloom's[12] taxonomy, as it tests application and analysis of knowledge, while in Miller's[13] framework it tests performance (shows how).

Another method used was peer assessment. In the literature, peer evaluation demonstrates many strengths and is noted as being effective for assessing skills acquisition and attitudinal learning, such as integrity and respect.[30] Students perceived this form of assessment as a non-threatening exercise, as it is done by colleagues, and it offers them an opportunity to compare their own work with the standards achieved by others.[31] While this can contribute to developing reflective practices and deeper learning, some students were sceptical, and questioned the credentials of peers.[14] Moreover, this type of assessment is based on trust, and in its absence, this exercise can be undermining and destructive.[14] Peer assessment ranks high in Bloom's[12] taxonomy, as it tests the analysis of knowledge, while in Miller's[13] framework, it demonstrates action (does) and develops graduate competencies of the healthcare practitioner, scholar and professional. To avoid bias, Race et al.[32] suggest the use of designated criteria, and that a mark be allocated to each item before the assessment process begins. In addition, they contend that the final grade of a student being assessed should be a combined percentage of scores of their peers and the academics.

In the deductive analysis of assessments, it was observed that academics in the study considered using multiple methods when designing assessments, as a single method is inadequate to assess a range of competencies. Furthermore, it was noted that assessment must be fit for purpose, i.e. tests, assignments and essays to test knowledge and its application (knows); case presentations to test competence (knows how); portfolios, blogging and peer assessment to test performance (shows how); and clinical assessments and logbooks to test action (does).[13] This is supported by Al-Wardy,[22] who contends that each assessment has its own strengths and flaws, and that by using a variety of methods, the advantages of one may overcome the disadvantages of the other.

Implication of findings

The findings show that academics in this study consider assessment an important aspect of educational practice in health professionals' education, particularly in CBE. The study provided useful data regarding assessment methods used in CBE by academics in the School of Health Sciences, UKZN, and how they can contribute to preparing graduates for the work environment. The findings may be applicable to academics in other universities, where students undertake community-based training.

Study limitations

The study only explored the opinion of one academic in each discipline, and did not take into account their experience with assessing such situations. As CBE in this institution is fairly new for most academics, their opinions may change over time as they modify the content to address perceived limitations of choice and context of assessment methods they may use.

Recommendations

For disciplines that rely on academics to conduct assessments at community sites, this skill needs to be transferred to clinical supervisors who have the competence to undertake the evaluations. This can be done by empowering clinical staff at community-based clinical training platforms by running training workshops or as a continuing professional development (CPD) activity. Research needs to be conducted to establish the extent to which students have taken ownership of their learning, and whether the opportunities for self-reflection and peer assessment are useful or could be improved. Academics should always take into consideration ethical and moral principles around patient confidentiality when clinical cases are presented in the presence of an audience of peers. More importantly, assessment must relate to the context of the disease prevalence and socioeconomic status of the community setting, so that it can reflect students' personal, professional and social growth. More innovative assessments are required to establish graduate competencies in the roles of leader, manager and advocate.

Conclusion

The study findings indicated that assessment plays an important role in consolidating student learning at CBE sites, with multiple assessment methods being required to achieve graduate competencies in preparation for the workplace. The choice of assessment methods must be contextual and fit for purpose to allow for overall student development. Greater emphasis should be placed on enabling clinical supervisors to perform student assessment at these sites and engaging students with self-reflective assessment practices to promote lifelong learning.

Declaration. None.

Acknowledgements. None.

Author contributions. IM was responsible for data collection, data analysis and conceptualisation. SS was responsible for refining the methodology and overseeing the write-up.

Funding. None.

Conflicts of interest. None.

References

1. Deogade SC, Naitam D. Reflective learning in community-based dental education. Educ Health 2016;29(2):119-123. https://doi.org/10.4103/1357-6283.188752

2. Mabuza LH, Diab P, Reid SJ, et al. Communities' views, attitudes and recommendations on community-based education of undergraduate health sciences students in South Africa: A qualitative study. Afr J Prim Health Care Fam Med 2013;5(1). https://doi.org/10.4102/phcfm.v5i1.456

3. Bean CY. Community-based dental education at the Ohio State University: The OHIO project. J Dent Educ 2011;75(10):S25-S35.

4. Mofidi M, Strauss R, Pitner LL, et al. Dental students' reflections of their community-based experiences: The use of critical incidents. J Dent Educ 2003;67(5):515-523. https://doi.org/10:1.1.508.8022

5. Holland B. Components of successful service-learning programs. Int J Case Method Res Appl 2006;18(2):120-129.

6. Essack S. Draft Business Plan: Community Based Training in Primary Health Care Model in School of Health Science. Durban: University of KwaZulu-Natal, 2014.

7. Kolb AY, Kolb DA. Experiential Learning Theory: A Dynamic, Holistic Approach to Management Learning, Education and Development. London: Sage, 1984:1-59.

8. Lave J, Wenger E. Situated Learning: Legitimate Peripheral Participation. Cambridge, UK: Cambridge University Press, 1990.

9. Kelly L, Walters L, Rosenthal D. Community-based medical education: Is success a result of meaningful personal learning experiences? Educ Health 2014;27(1):47-50. https://doi.org/10.4103/1357-6283.134311

10. Wormald BW, Schoeman S, Somasunderman A, et al. Assessment drives learning: An unavoidable truth? Anat Sci Educ 2009;2(5):199-204.

11. Epstein RM. Assessment in medical education. N Engl J Med 2007;356(4):387-396. https://doi.org/10.1056/NEJMra054784

12. Bloom BS. Taxonomy of Educational Objectives. Handbook I: Cognitive Domain. New York: David McKay, 1956.

13. Miller GE. The assessment of clinical skills/competence/performance. Acad Med 1990;65(9 Suppl):S63-S67. https://doi.org/10.1097/00001888-199009000-00045

14. Ferris H, O'Flynn D. Assessment in medical education: What are we trying to achieve. Int J Higher Educ 2015;4(2):139-144. https://doi.org/10.5430/ijhe.v4n2p139

15. Magzoub MEMA. Studies in Community-based Education: Programme Implementation and Student Assessment at the Faculty of Medicine, University of Gezira, Sudan. PhD thesis. Sudan: University of Gezira, 1994.

16. Kaye DK, Muhwezi WW, Kasozi AN, et al. Lessons learnt from comprehensive evaluation of community-based education in Uganda: A proposal for an ideal model community-based education for health professional training institute. BMC Med Educ 2011;11(7):1-9. https://doi.org/10.1186/1472-6920-11-7

17. Cameron D. Community-based education in a South African context. Was Socrates right? S Afr Fam Pract 2000;22(2):17-20.

18. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3(2):77-101. https://doi.org/10.1191/1478088706qp063oa

19. Pitney WA, Parker J. Qualitative Research in Physical Activity and the Health Professions. Auckland, New Zealand: Human Kinetics, 2009.

20. Bitsch V. Qualitative research: A grounded theory example and evaluation criteria. J Agribus 2005;23(1):75-91. https://doi.org/10.22004/ag.econ.59612

21. Doherty JE. Strengthening rural health placements for medical students: Lessons for South Africa from international experience. S Afr Med J 2016;106(5):524-527. https://doi.org/10.7196/SAMJ.2016.v106i5.10216

22. Al-Wardy NM. Assessment methods in undergraduate medical education. SQU Med J 2010;10(2):203-209.

23. Green EH, Durning SJ, DeCherrie L, et al. Expectations for oral case presentations for clinical clerks: Opinions of internal medicine clerkship directors. J Gen Intern Med 2009;24(3):370-373. https://doi.org/10.1007/s11606-008-0900-x

24. Gisselle O, Martin-Kneip GO. Becoming a better teacher. Med Teach 2001;23(6).

25. Friedman BDM, Davis MH, Harden RM, et al. Portfolios as a method of student assessment. AMEE Med Educ Guide 2001;(24).

26. Turnbull J. Clinical work sampling. J Gen Intern Med 2000;15(8):556-561. https://doi.org/10.1046/j.1525-1497.2000.06099.x

27. Blake K. The daily grind - use of logbooks and portfolios for documenting undergraduates' activities. J Med Educ 2001;35(12):1097-1098. https://doi.org/10.1046/j.1365-2923.2001.01085.x

28. Yang C, Chang YS. Assessing the effects of interactive blogging on student attitudes towards peer interaction, learning motivation, and academic achievements. J Com Assist Learn 2012;28(2):126-135. https://doi.org/10.1111/j.1365-2729.2011.00423.x

29. Land SM. Cognitive requirements for learning with open-ended learning environments. Educ Technol Res Develop 2000;48:61-78. https://doi.org/10.1007/BF02319858

30. Boulos MN, Maramba I, Wheeler S. Wikis, blogs and podcasts: A new generation of web-based tools for virtual collaborative clinical practice and education. BMC Med Educ 2006;6(41). https://doi.org/10.1186/1472-6920-6-41

31. Van Rosenthal GMA, Jennet PA. Comparing peer and faculty evaluations in an internal medicine residency. Acad Med 1994;69(4):299-303. https://doi.org/10.1097/00001888-199404000-00014

32. Race P, Brown S, Smith B. 500 Tips on Assessment. 2nd ed. London: Routledge, 2005.

Correspondence:

I Moodley

moodleyil@ukzn.ac.za

Accepted 8 July 2019