The African Journal of Information and Communication

On-line version ISSN 2077-7213

Print version ISSN 2077-7205

AJIC vol.20 Johannesburg 2017

http://dx.doi.org/10.23962/10539/23577

ARTICLES

Development of a first aid smartphone app for use by untrained healthcare workers

Chel-Mari Spies (I); Abdelbaset Khalaf (II); Yskandar Hamam (III)

(I) Junior Lecturer, Department of Computer Systems Engineering, Tshwane University of Technology (TUT), Pretoria

(II) Senior Lecturer, Department of Computer Systems Engineering, Tshwane University of Technology (TUT), Pretoria

(III) Professor, Department of Electrical Engineering, Tshwane University of Technology (TUT), Pretoria

ABSTRACT

In the sub-Saharan African context, there is an enormous shortage of healthcare workers, causing communities to experience major deficiencies in basic healthcare. The improvement of basic emergency healthcare can alleviate the lack of assistance to people in emergency situations and improve services to rural communities. The study described in this article, which took place in South Africa, was the first phase of development and testing of an automated clinical decision support system (CDSS) tool for first aid. The aim of the tool, a mobile smartphone app, is to assist untrained healthcare workers in delivering basic emergency care to patients who do not have access to, or cannot urgently get to, a medical facility. The tool also seeks to provide assistance that does not require the user to have diagnostic knowledge, i.e., the app guides the diagnostic process as well as the treatment options.

Keywords: first aid, emergency treatment, m-health, smartphone app, clinical decision support system (CDSS), rural healthcare, resuscitation, rule-based algorithms, artificial intelligence (AI), Africa, South Africa

1. Introduction

Medical emergencies are a daily occurrence in human life the world over. More often than not, other people are nearby and the onus of lending a helping hand then falls on them. If these bystanders were trained in first aid, defined as "emergency care or treatment given to an ill or injured person before regular medical aid can be obtained",1 they would be able to assist with the necessary knowledge and urgency required to meet the needs of the patient.

The British Red Cross reported in 2009 that only 1 in 13 (i.e., only 7.7% of) trained first-aiders in Britain felt comfortable in carrying out first aid (BBC News, 2009). If these unsure first-aiders could have something to reassure them, they would more likely be willing to help instead of simply being bystanders.

Companies such as the American Red Cross (2016b), Phoneflips (2015), SusaSoftX (Google Play, 2016a), and St John Ambulance (Google Play, 2016b) have developed first aid applications that run on at least one of the more readily available mobile phone platforms (Android, BlackBerry, and iOS). One characteristic these applications have in common is that their use assumes a certain degree of prior medical knowledge. The user is required to make a diagnosis based on what he/she sees before the application provides steps to be followed in an emergency situation. It is assumed that the user can identify symptoms or diagnose medical conditions. These assumptions limit the efficacy of the applications in line with the limitations of the users' medical expertise. If the user makes an incorrect diagnosis, incorrect treatment will be applied, which may have negative effects on the patient's health.

Even though past research clearly indicates the advantages of training people in first aid, as well as the value of applying correct procedures in first aid situations, there appears to be a gap in the literature regarding research into mobile first aid applications that do not require the user to make a diagnosis and instead use the patient's symptoms to guide the user in appropriate and safe assistance.

In the study that is the focus of this article, which took place in South Africa, we developed the prototype of an automated clinical decision support system (CDSS) smartphone app for use by untrained healthcare workers in emergency medical situations, particularly in under-served rural areas. The study aims to achieve the following research objectives:

- to evaluate the WHO IMAI guidelines as a basis for algorithm creation and investigate various algorithms for use in the proposed system;
- to investigate and identify the most appropriate software specification(s) to develop an m-health first aid system to be used as a guide in medical emergencies; and
- to verify the validity of the outcomes proposed by the system by comparing its outcomes with the actions of medical professionals.

The article is structured as follows: The next section reviews literature on first aid and communication technology in healthcare (including artificial intelligence and CDSSs), and describes the workings of two existing first aid smartphone apps. Section 3 describes the study: the development, testing, and refinement of the prototype first aid mobile app. Section 4 provides results from an evaluation questionnaire on the tool, as completed by medical professionals. Section 5 provides conclusions and future research directions.

2. First aid and the role of communication technologies

According to the St John Ambulance Association's statement about the purpose of first aid, it should (1) preserve life, (2) prevent deterioration, and (3) promote recovery (St John Ambulance, 2016). Two of the many possible examples available in the literature, of cases where bystander first aid was needed, are the following:

- a 17-year-old boy was in a motorcycle accident with no serious injuries, his heart stopped, and no bystander performed CPR, resulting in the boy's death (St John Ambulance, 2010); and
- a 4-year-old boy suffered a swimming pool accident and was resuscitated by young, off-duty lifeguards (American Red Cross, 2016a).

Shortages of healthcare workers

It is not only the lack of first aid training and confidence among the general public that poses a risk. There are also significant shortages of healthcare workers in many parts of the world, particularly in developing countries. According to the Global Health Council (GHC, 2011), 15 sub-Saharan African countries do not have the recommended 20 physicians per 100,000 people. These countries have 5 or fewer physicians for every 100,000 people. And 17 sub-Saharan African countries have 50 or fewer nurses for every 100,000 people, far below the recommended minimum of 100 for every 100,000 people.

In South Africa, the "shortage of trained human resources" (Cherry, 2012) was highlighted by the National Health Research Committee at a summit in July 2011 as one of seven possible areas in which healthcare in South Africa can be improved. Research shows that nearly 25% of doctors trained in South Africa work in other countries (Gevers, 2011).

The lack of healthcare provided by trained and qualified medical personnel in African countries (Health-e News, 2012), particularly in rural areas where access to trained personnel and hospitals or other medical facilities is particularly scarce, poses great risks for the local people. The simple process of symptom recognition and resuscitation can often mean the difference between life and death (Razzak & Kellerman, 2002) if properly, expertly and timeously delivered.

Communication technology in healthcare

e-Health refers to healthcare practices that make use of electronic processes and information and communication technology (ICT) in a cost-effective and secure manner (Hockstein, 2013). Besides use in emergency medicine, other examples of uses for e-health systems include patient treatment, diagnosis, prognosis, image-processing, health management, and health worker education (WHO, n.d.c).

Professionally trained healthcare workers in first world countries by and large welcome and adopt e-health and its implementation (Shekar, 2012); however, its use across Africa is still minimal. One example is the study done in Nigeria to determine the use of mobile technology in the distribution of reproductive health information (Ezema, 2016). In contrast, countries such as Kenya, Uganda and Zambia are eager to try new technologies that support healthcare, and are open-minded about technologies that might share a common platform in future (Shekar, 2012). Even with this in mind, the use of e-health technologies in developing countries is not widely accepted. It has been met with scepticism, as it is not perceived to offer the same personal interaction as a human healthcare worker. In some instances it is seen as a technological replacement of the human factor and only an aid in training and development of healthcare workers, rather than a trusted aid in actual emergency situations (Van Gemert-Pijnen, Wynchank, Covvey, & Ossebaard, 2012).

Mobile technology

In Africa, the number of mobile phone users was 557 million in 2015, and is expected to reach 725 million by 2020 (GSMA, 2016). When considering mobile technology in healthcare, it is noted that key goals are to improve access to, and the quality of, care (Qiang, Yamamichi, Hausman, Miller, & Altman, 2012). Treatment of people in rural areas where there is a great shortage of healthcare workers (Lemaire, 2011) links directly to these goals.

Artificial intelligence (AI)

The use of artificial intelligence (AI) in healthcare has been gaining momentum over the last decade, and while there are human aspects which cannot be replaced by a computer, machines are able to analyse massive amounts of data and recognise patterns that are impossible for humans to detect (Hernandez, 2014). To take a simple example, storing and retrieving medical record details via machines is far more reliable than human mental recollection (Hernandez, 2014). So it is evident that, even though machines are not able to function independently in all scenarios of healthcare, AI does have its rightful place in the healthcare industry.

Clinical decision support systems (CDSSs)

Decision support systems (DSS) are "computerized information system[s] that support decision-making activities" (Power, Sharda, & Burstein, 2015). In the clinical decision support system (CDSS) context, Perreault and Metzger (1999) state that one of the core functions of a CDSS is to support diagnosis and a treatment plan, and that it should aim to promote "best practices, condition-specific guidelines, and population-based management". By implementing such a system, a healthcare worker can start working independently sooner, allowing for an increase in the number of active healthcare workers in the field. Distinguishable advantages provided by a CDSS include less time spent on training and fewer mistakes when a healthcare worker is unsure about procedures (Van der Walt, 2016).

Existing first aid mobile applications

We now provide a basic comparison between two of the available mobile first aid applications.

St John Ambulance First Aid app

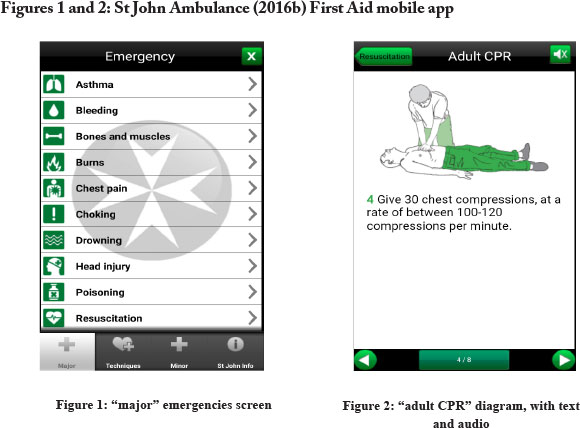

Figures 1 and 2 show two screens from the St John Ambulance First Aid app as it existed in mid-2016. The screen in Figure 1 gives the user options on treatments for what the app classifies as "major" emergencies. There are 12 options, 10 of which are available at first glance. The remaining two options can be reached by scrolling down. On the same screen, links can be found to treatment "techniques" (6 sub-sections), "minor" emergencies (6 sub-sections) and "St John Info" (with information on the company, training, calling for help, and tips on a proper first aid kit).

The user is expected to decide whether an emergency qualifies as "major" or "minor", and then identify treatments under the "techniques" tab, such as "treating shock", "opening airways" and "recovery position". The initial steps for "assessing the situation" (danger, response, airway, breathing, circulation) are also available under "techniques". Various diagrams are included to make the instructions easy to follow, with the diagrams accompanied by text and audio (as shown in Figure 2).

The St John Ambulance First Aid mobile app is available on the Android, BlackBerry and iOS platforms. No registration is necessary before the application can be used.

Netcare Assist App

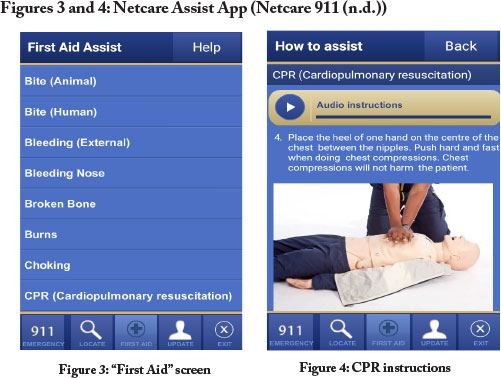

Figures 3 and 4 show two screens from the Netcare Assist App as it existed in mid-2016. (Netcare is a South African private hospital network.) On the home screen, there is a slider button that can be used to call the Netcare emergency line. The user can also document potential "Personal Emergencies", e.g., issues such as asthma or epilepsy can be stated, along with the contact person(s) and contact numbers to call in each instance. Figure 3 (the "First Aid" screen) shows a listing of a variety of situations requiring first aid, for which instructions (as per the example in Figure 4) are given.

There are also tabs located along the bottom of every screen, with which the user can navigate to "locate", "first aid", and "update" pages. With "locate", the user can find the physical location of hospitals, pharmacies, medical centres, and doctors' rooms. On the update page, personal information can be changed and stored. Under "first aid", the user can select from a list of 13 treatments. Each treatment is explained by means of text and pictures, and audio instructions are also available. The application is available for Android, BlackBerry and iOS phones. The user needs to register before the application can be used.

As with the St John Ambulance app, the Netcare app is dependent on the user making a diagnosis before any treatment steps can be requested.

3. The research: Development and testing of a CDSS emergency first aid app

The first core component of our research was development of an automated CDSS tool - an electronic emergency treatment guide app - which can communicate to healthcare workers the procedures and steps to follow in an emergency situation. Our aim was to identify AI protocols similar to protocols applied by healthcare workers during emergency medical assistance and build them into the app, so that such protocols could be imitated by the app.

It must be noted that this study did not include development of an interface to enter patient information and vital readings into the system. For the purpose of this study, that data entry was done manually. Also, a specific database for electronic health records was not developed as part of the study, and data security was not considered. Other parameters decided upon were:

- that there must be provision for limitations found in rural areas (e.g., lack of equipment availability): the tool must not exclude the user from using it if blood pressure cannot be measured at the time; and
- that no patient data would be stored.

Choice of medical guidelines

Various guidelines were considered for this project, and it was ultimately decided that the guide for emergency medical treatment set up by the World Health Organisation (WHO) should be the guidance document. These WHO guidelines are the Integrated Management of Adolescent and Adult Illness (IMAI) Quick Check and Emergency Treatments for Adolescents and Adults (WHO, 2011). The IMAI guidelines are already implemented in more than 30 countries (WHO, n.d.b) and extension to several other countries, including India, China and Vietnam (WHO, n.d.a) was underway at the time of our research. After it was determined that the WHO IMAI guidelines were the most appropriate to use as a foundation for the tool, a basic "decision tree" structure was followed in the development of the tool.

Feedback from validators

Based on recommendations from validators, the IMAI procedure for the treatment of a pneumothorax was revised, and basic cardiopulmonary resuscitation guidelines were added. Additional recommendations from validators were to: make the display of emergency and priority signs more easily readable, e.g., via the use of bulleted points; revise medications to suit the South African market; and add diagrams to illustrate the procedures.

The appropriate app model was prototyped, using rules-based algorithms, AI protocols, and ontology modelling.

Selecting a viable testing platform

The two most likely options identified for testing the electronic emergency treatment guide were: 1) to create an application with a database that must be stored on a user's smart device; or 2) to use a web-based platform with a database that the user must connect to in order for it to be used. The advantages and disadvantages of each, and the eventual platform selection, are now discussed.

A smart-device application

The advantage of a smart-device application with a connected database is that no Internet connectivity is needed, but the disadvantages - which, in our analysis, outweigh the advantages - are: that the application must be installed on a device prior to use, and it needs to store information in a database. Also, for testing purposes, the stored database must be retrieved or, in cases where users do not have devices, such devices must be provided to users, and later collected for data to be accessed.

An Internet platform

Advantages of running an Internet application are that the information can be uploaded immediately, and no storage of information is necessary on the user's device, so none of the user's storage space is occupied. Alternatively, if it is a device lent to the user for the means of inputting data, there is no need to retrieve the device at a later stage. The one major drawback of this route is that the user will need Internet connectivity.

Selection of platform

After considering the advantages and disadvantages, the decision was made to use the Google Forms Internet platform to create the emergency treatment guide tool for users to use during the testing of the concept. We determined that the advantages of using Google Forms are that: no website is necessary; no database needs to be created; live updates can be done without having to recall older versions; a full report is generated containing all the options exercised by the user; the report is accessible by the creator in the form of an Excel spreadsheet; a high maximum number of questions (255) is allowed; and an unlimited number of users is allowed.

Stages of development and testing of the tool

We decided on the following stages:

- develop the first version of the tool on the Internet platform;
- conduct a field visit to observe trained emergency medical technicians in action, and address shortcomings of the tool;
- enter actual, documented medical emergency cases from literature to test the tool, and address deficiencies;
- have healthcare professionals test the tool and evaluate it by means of a questionnaire, and then make adjustments where necessary;
- have untrained healthcare workers use the tool for data collection purposes; and
- have the inputs from the untrained healthcare workers validated by medical professionals to verify the validity of the tool.

The final two stages just listed were not performed in the first phase that is the subject of this article.

Basic operation of the tool

In order to place users into categories according to their qualifications (and thus the medical procedures they could be allowed to perform), the user is first asked to indicate her or his level of qualification. The options are:

- No formal medical training
- First Aid Level 1 or 2 / Medical student
- First Aid Level 3
- Basic Ambulance Assistant / Basic Life Support
- Ambulance Emergency Assistant / Intermediate Life Support
- Emergency Care Technician
- Advanced Life Support / Critical Care Assistant / Emergency Care Practitioner / Nurse / Doctor

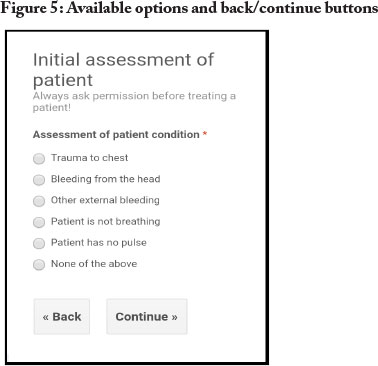

By selecting an option, the user is screened and only receives instructions for procedures that she or he is permitted to perform. Once the user is screened, she or he is guided through a series of questions and steps according to her or his choice of answers. After each instruction or question, the user must give an indication that she or he is ready to continue to the next step (or return to the previous step), as shown in Figure 5 below.
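As a minimal sketch only, the screening step described above might be expressed as a filter over procedures. The sketch assumes that the seven qualification options form a strictly increasing scale, which the article does not state explicitly, and the procedure names and minimum levels in `MIN_LEVEL` are hypothetical placeholders rather than the tool's actual rules or any clinical guidance.

```python
from enum import IntEnum


class Qualification(IntEnum):
    """The seven options offered by the tool, assumed here to be ordered."""
    NO_FORMAL_TRAINING = 0
    FIRST_AID_1_2_OR_MEDICAL_STUDENT = 1
    FIRST_AID_3 = 2
    BASIC_LIFE_SUPPORT = 3
    INTERMEDIATE_LIFE_SUPPORT = 4
    EMERGENCY_CARE_TECHNICIAN = 5
    ADVANCED_LIFE_SUPPORT = 6


# Hypothetical mapping of procedure -> lowest qualification allowed to perform it.
# Placeholder values for illustration; not taken from the article or from IMAI.
MIN_LEVEL = {
    "call for help": Qualification.NO_FORMAL_TRAINING,
    "apply direct pressure to external bleeding": Qualification.NO_FORMAL_TRAINING,
    "place patient in recovery position": Qualification.FIRST_AID_1_2_OR_MEDICAL_STUDENT,
    "administer medication": Qualification.INTERMEDIATE_LIFE_SUPPORT,
}


def permitted_procedures(level, min_level=MIN_LEVEL):
    """Return the procedures a user at `level` may be shown, in mapping order."""
    return [name for name, required in min_level.items() if level >= required]
```

Under these assumptions, a user who selects "No formal medical training" would be shown only the first two placeholder procedures, while a user at the highest level would be shown all four.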

The first prompt is for the user to identify any primary symptoms from a list: "trauma to the chest"; "bleeding from the head"; "other external bleeding"; "patient is not breathing"; "patient has no pulse"; or "none of the above". The user must choose the appropriate response in order to receive further instructions relating to that specific condition. If none of the primary signs can be identified, the user is offered the choice of other emergency signs, e.g., "respiratory distress"; "circulation/shock"; "trauma (and neglected trauma)"; "convulsions/unconsciousness". If none of the emergency signs prevail, the user can choose from a list of priority signs, e.g., "pale/fainting/very weak"; "bleeding"; "fracture/deformity".
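The three-tier prompt sequence just described can be illustrated with a short rule-based sketch. It is an assumption-laden simplification: the emergency and priority lists contain only the examples quoted in the text (the tool's full lists are not given), the function name `first_matching_tier` is hypothetical, and the real tool asks the user to pick an option on screen rather than receiving a set of observations.

```python
# Signs quoted in the article; the emergency and priority lists are partial.
PRIMARY_SIGNS = [
    "trauma to the chest",
    "bleeding from the head",
    "other external bleeding",
    "patient is not breathing",
    "patient has no pulse",
]
EMERGENCY_SIGNS = [
    "respiratory distress",
    "circulation/shock",
    "trauma (and neglected trauma)",
    "convulsions/unconsciousness",
]
PRIORITY_SIGNS = [
    "pale/fainting/very weak",
    "bleeding",
    "fracture/deformity",
]

# Tiers are checked in this order, mirroring the order of the prompts.
TIERS = [
    ("primary", PRIMARY_SIGNS),
    ("emergency", EMERGENCY_SIGNS),
    ("priority", PRIORITY_SIGNS),
]


def first_matching_tier(observed):
    """Return (tier, sign) for the first listed sign that was observed.

    Returns None when no listed sign matches, which corresponds to the user
    answering "none of the above" at every prompt.
    """
    observed = set(observed)
    for tier, signs in TIERS:
        for sign in signs:
            if sign in observed:
                return tier, sign
    return None
```

For example, an input containing both "bleeding" and "respiratory distress" would be routed to the emergency tier, because that tier is checked before the priority tier.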

Refinement of the tool

As development of the app continued, several issues emerged and were addressed. It was decided that the user would give input by means of selecting one or more appropriate options. Checkboxes were initially used in a limited number of questions, but the questions were later revised to allow for radio buttons only. Dropdown lists proved to be too small for handheld devices and as a result were not implemented. The decision was made to keep text input questions to a minimum, due to the limited screen size of certain models of smart devices. Instead of requiring text inputs, the needed information in all but three questions was divided into viable sections, such as a range depicting low values, normal values, and high values representing the possibilities. In the app in its current form, the only questions requiring text input are: (1) "enter diastolic blood pressure (lower value) {optional}"; (2) "enter patient's age {optional}"; and (3) "an identification code the health worker assigns to himself/herself". (The third question just listed is one of the six questions included for research data organisation purposes, and all six of these questions will be removed from the final version of the tool.)
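The idea of replacing typed values with selectable ranges can be sketched as a small classification helper. This is an illustration under stated assumptions: the function name `classify_reading` and its cut-off parameters are hypothetical, the cut-offs must be supplied by the caller, and the numbers in the usage note are arbitrary placeholders with no clinical meaning. The "unknown" branch reflects the stated requirement that the tool must remain usable when a reading such as blood pressure cannot be measured.

```python
from typing import Optional


def classify_reading(value: Optional[float], low_below: float, high_above: float) -> str:
    """Map a reading onto one of the selectable options.

    `low_below` and `high_above` are caller-supplied cut-offs (hypothetical
    parameters); a missing reading yields "unknown" instead of blocking the user.
    """
    if low_below > high_above:
        raise ValueError("low_below must not exceed high_above")
    if value is None:
        return "unknown"
    if value < low_below:
        return "low"
    if value > high_above:
        return "high"
    return "normal"
```

With placeholder cut-offs of 10 and 20, `classify_reading(5, 10, 20)` gives "low", `classify_reading(15, 10, 20)` gives "normal", and `classify_reading(None, 10, 20)` gives "unknown".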

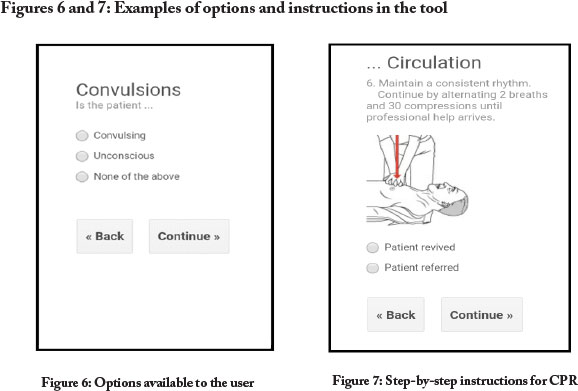

The manner in which the user is guided, by means of a series of options to select from and instructions based on the options selected, is shown in Figures 6 and 7 below.

Improvements after field visit

A field visit was conducted in order to observe trained paramedics. The major concern expressed was that multiple medical issues might present themselves simultaneously in certain situations, and that, accordingly, there had to be clearer distinctions made in the options given in the tool so that the user can choose, and attend to, the condition that is most threatening. It was thus required that additional options be given throughout the instructional process to allow the user to return to other issues (after treating the most pressing conditions) that need to be addressed before referring the patient to a medical professional or medical facility. It was also noted that verbal permission must be obtained from the patient (if he/she is conscious) before any treatment can be given. An instruction to do so was included in the tool.
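One way to picture the "most threatening condition first, then return to the others" behaviour is a priority queue. The sketch below is not the tool's implementation: the function name `treatment_order` and the numeric severity ranks are hypothetical, with lower numbers assumed to mean more threatening.

```python
import heapq


def treatment_order(conditions):
    """Yield condition names, most threatening first.

    `conditions` is an iterable of (severity_rank, name) pairs, where the rank
    is a hypothetical number and a lower rank means a more pressing condition.
    """
    heap = list(conditions)
    heapq.heapify(heap)
    while heap:
        _, name = heapq.heappop(heap)
        yield name
```

Given the pairs `(2, "fracture/deformity")`, `(0, "patient is not breathing")` and `(1, "other external bleeding")`, the generator yields the breathing problem first, then the bleeding, then the fracture, so that no outstanding issue is lost before referral.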

Testing of tool against documented cases

A key step in testing of the tool was to use specific documented cases to compare the tool's IMAI-based suggested steps with steps actually followed by medical professionals. We used 20 documented cases for this testing, and after comparing the elements of each procedure, a 69.5% correlation was found between the steps suggested by the tool and the steps actually followed by medical professionals. It was found that:

- the lowest correlation for steps in a single case was 33%;
- the highest correlation for a single case was 100%; and
- the order in which the steps were performed had a 100% correlation across the 20 cases.
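The article does not specify how the per-case correlation figures were calculated. One plausible reading, offered purely as an assumption, is the percentage of the professionals' documented steps that the tool also suggested, together with a separate check of whether the shared steps appear in the same order. The function names `step_agreement` and `same_order` are hypothetical.

```python
def step_agreement(tool_steps, actual_steps):
    """Percentage of the professionals' steps that the tool also suggested."""
    if not actual_steps:
        raise ValueError("actual_steps must not be empty")
    suggested = set(tool_steps)
    matched = sum(1 for step in actual_steps if step in suggested)
    return 100.0 * matched / len(actual_steps)


def same_order(tool_steps, actual_steps):
    """True if the steps common to both lists occur in the same relative order."""
    common = set(tool_steps) & set(actual_steps)
    tool_common = [step for step in tool_steps if step in common]
    actual_common = [step for step in actual_steps if step in common]
    return tool_common == actual_common
```

Under this reading, a case in which the tool suggested one of three documented steps would score roughly 33%, and the overall figure would be the mean of the per-case scores. Other definitions (for example, dividing by the number of tool steps) would give different values.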

However, in the process of entering the 20 cases, some difficulties were encountered. It was noticed that too many unnecessary steps were required to reach certain procedures. (As a result, more options were provided in earlier steps in order to reach said procedures directly.) It was also found that two of the methods for administering medication were too vague. And three instances that called for vital signs to be entered became a "dead-end" if the vitals were not known, e.g., if they couldn't be measured (and those instances thus needed an "unknown" option). These deficiencies were addressed.

It was then decided that more cases, as well as cases of a more diverse nature, should be utilised in order to get a more comprehensive idea of the scope of efficiency of the application. The number of cases drawn from the literature was increased from 20 to 30. It was found that this larger case load, with increased diversity of medical cases, produced a slightly lower correlation than with 20 cases - 65.6% instead of 69.5% - and that:

- the lowest correlation for steps in a single case was 17% (down from 33% when there were 20 cases);
- the highest correlation for a single case was 100%; and
- the order in which the steps were to be performed was matched in 28 of the 30 cases (a 93% correlation, down from the 100% correlation with 20 cases).

Testing by healthcare professionals

The tool was also given to two doctors, three nurses, three emergency medical technicians (EMTs) and two pharmacists, so that they could scrutinise the tool and look for inconsistencies between the tool's information and IMAI guidelines. Feedback was received from two of the doctors, one nurse, and two EMTs. The feedback from the responding professionals called for refinement of the approaches provided by the app to: certain medical procedures; restrictions on which medical personnel could perform certain procedures; administering of certain medications; certain locally available medications; and provision of appropriate scales for inputting vital signs (instead of having the user type the values). Based on this feedback, the tool was further refined - with the help and input of one doctor (Van Aswegen, 2016) and one EMT (Rossouw, 2016). It was decided that incorporating limitations into the tool, in terms of the procedures healthcare workers would be allowed to perform, was of particular urgency, and these were duly built into the app before any further testing was done.

4. Evaluation survey

For a more formal method of feedback collection, a questionnaire was drawn up to gather input from medical professionals. The questionnaire was administered electronically, and feedback from three medical doctors, three EMTs, two pharmacists and one emergency care practitioner (ECP) was received. All the feedback was considered and the tool was adjusted to reflect the recommendations. The core areas of interest in this questionnaire were: the IMAI guidelines; the medical procedures; usability; opinions on assistance offered by the app, efficiency in training, healthcare delivery, and suitability of the tool; security; completeness; participant demographics; and comments on overall perception. The feedback is discussed below.

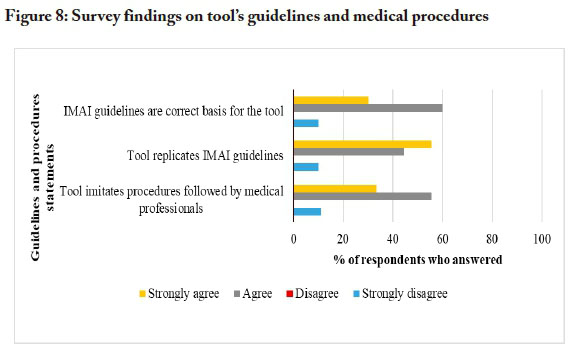

The tool's guidelines and medical procedures

Figure 8 below shows the survey data from the medical professionals' responses to the questions regarding: (1) the choice of IMAI guidelines as the basis for the guidance provided by the app; (2) whether the app succeeds in replicating the IMAI guidelines; and (3) whether the tool succeeds in imitating the procedures followed by medical professionals.

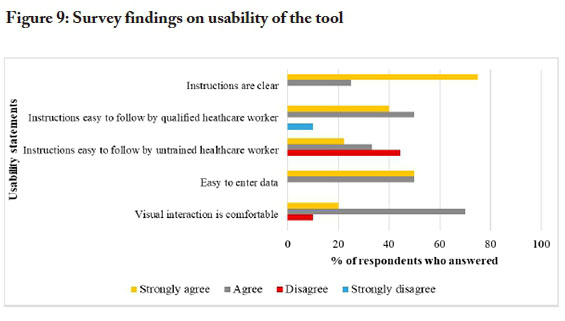

Usability of the tool

Figure 9 below represents the medical professionals' inputs on the usability of the tool, in terms of: (1) clarity of instructions; (2) ease of following instructions for qualified healthcare workers; (3) ease of following the instructions for untrained healthcare workers; (4) ease of data entry; and (5) comfort of visual interaction.

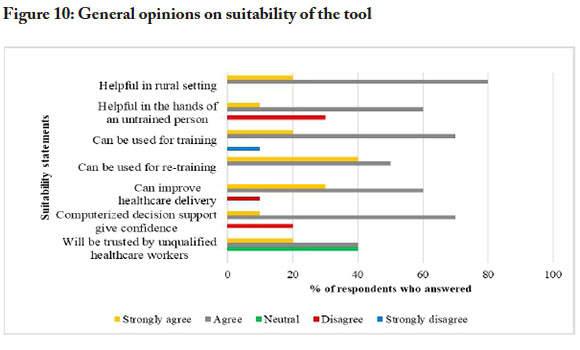

General opinions on suitability of the tool

Figure 10 shows the opinions of the respondents on the following aspects: (1) the degree to which the tool can be helpful in a rural setting where there are no trained medical professionals nearby; (2) the degree to which the tool can be helpful in the hands of an untrained person; (3) usefulness in training of healthcare workers; (4) usefulness in re-training of healthcare workers; (5) usefulness for improvement of healthcare delivery; (6) enhancement of user confidence via computerised clinical decision support system (CCDS); and (7) the trust that an unqualified healthcare worker might place in the outcome proposed by the tool.

At the general level, the survey found that 90% of the respondents felt the tool was complete and that no additions needed to be made. Among the 10% of respondents who felt additions were needed, suggestions included: addition of diagrams and pictures to assist people in rural areas who may have low levels of English comprehension or be illiterate; and revision of clinical procedures in line with the most recent version of the IMAI guidelines. There was also a suggestion that all the CPR instructions be on the same page, instead of the user having to choose "continue" or "back" to move between instructions.

5. Conclusions and future work

The core aims of this initial study were to develop and test the first version of an m-health first aid tool that makes procedural recommendations in response to symptoms - instead of expecting the user to diagnose the patient and treat according to said diagnosis.

In this first phase of testing, relatively good correlations were found between the steps recommended by the tool and the steps taken by medical professionals in documented cases. The tool was refined on the basis of inputs from - and with direct help from - a small group of medical professionals. After refinement of the instrument, a broader survey of medical professionals' views on the tool was performed, and the tool received relatively strong scores in terms of its ability to replicate IMAI guidelines, its ability to imitate the procedures followed by medical professionals, and in terms of usability and general suitability matters.

But even after refinement, the tool in its current form is not without limitations. Perhaps the most significant of these limitations is the one alluded to near the end of the previous section: the lack of visual elements such as pictures and diagrams. For the application to be usable by people who are not fluent in English or who may even be illiterate, descriptions of procedures, no matter how accurate, will not suffice. This element will be addressed at a later stage. Another likely limitation in use of the tool is that it consumes valuable time to refer to the screen during use, i.e., when actually treating a patient. Inclusion of voice commands may be a positive addition to the final version. Additionally, rollout and convincing people to choose this tool over other smartphone applications may be difficult as it is a new product.

The next phase will be to validate the system against cases in the field. Untrained healthcare workers will have to participate in the study, to test the guidance aspect of the tool. After untrained healthcare workers have entered their responses, the responses will need to be checked by professionally trained medical personnel to confirm the correctness of the actions suggested by the tool. Correlation factors, relevance and accuracy will then be studied to determine the significance of the data and the support lent by the tool. The ultimate goal, after all development and testing are done, is to generate a viable product.

References

American Red Cross. (2016a, February 29). CPR saves: Stories from the Red Cross. Retrieved from http://www.redcross.org/news

American Red Cross. (2016b). First aid - American Red Cross. Android application. Retrieved from https://play.google.com/store/apps/details?id=com.cube.arc.fa

BBC News. (2009, September 10). Many "lacking first aid skills". Retrieved from http://news.bbc.co.uk/2/hi/health/8246912.stm

Cherry, M. (2012). Purposeful support for health research in South Africa. South African Journal of Science, 108(5/6), 1. https://doi.org/10.4102/sajs.v108i5/6.1268

Ezema, I. J. (2016). Reproductive health information needs and access among rural women in Nigeria: A study of Nsukka Zone in Enugu State. The African Journal of Information and Communication (AJIC), 18, 117-133. https://doi.org/10.23962/10539/21788

Gevers, W. (2011). Fleeing the beloved country. South African Journal of Science, 107(3/4). https://doi.org/10.4102/sajs.v107i3/4.649

Global Health Council (GHC). (2011). Health care workers. Retrieved from http://globalhealth.ie

Google Play. (2016a). First aid. Retrieved from https://play.google.com/store/apps/details?id=com.max.firstaid

Google Play. (2016b). St John Ambulance first aid. Retrieved from https://play.google.com/store/apps/details?id=an.sc.sja

GSM Association (GSMA). (2016). Number of unique mobile subscribers in Africa surpasses half a billion, finds new GSMA study. Retrieved from https://www.gsma.com/newsroom

Health-e News. (2012, July 9). World needs 3.5-m health workers. Retrieved from http://www.health-e.org.za/2012/07/09/world-needs-3-5-m-health-workers

Hernandez, D. (2014, June 2). Artificial intelligence is now telling doctors how to treat you. Wired. Retrieved from http://www.wired.com/2014/06/ai-healthcare

Hockstein, E. (2013, Sept. 4). The promise of eHealth in the African region. Press release. Retrieved from http://www.afro.who.int/en/media-centre/pressreleases/item/5816-the-promise-of-ehealth-in-the-african-region.html

Lemaire, J. (2011). Scaling up mobile health elements necessary for the mHealth in developing countries. Actevis Consulting Group. Retrieved from https://www.k4health.org/sites/default/files/ADAmHealth%20White%20Paper.pdf

Netcare 911. (n.d.). Netcare apps. Retrieved from http://www.netcare911.co.za/Netcare-Apps

Perreault, L., & Metzger, J. (1999). A pragmatic framework for understanding clinical decision support. Journal of Healthcare Information Management, 13(2), 5-21. Retrieved from http://www.openclinical.org/dss.html#perreault

Phoneflips. (2015). Emergency first aid & treatment guide. Retrieved from http://www.phoneflips.com

Power, D. J., Sharda, R., & Burstein, F. (2015). Decision support systems. In Wiley (Ed.), Wiley encyclopedia of management. doi: 10.1002/9781118785317.weom070211

Qiang, C. Z., Yamamichi, M., Hausman, V., Miller, R., & Altman, D. (2012). Mobile applications for the health sector. World Bank. Retrieved from http://documents.worldbank.org/curated/en/751411468157784302/pdf/726040WP0Box370th0report00Apr020120.pdf

Razzak, L. A., & Kellerman, J. A. (2002). Emergency medical care in developing countries: Is it worthwhile? Bulletin of the World Health Organization, 90(11), 900-905.

Rossouw, V. (2016, April 4). Personal communication. Interviewed by C. Spies.

Shekar, M. (2012, June 18). ICTs to transform health in Africa: Can we scale up governance and accountability? Retrieved from http://blogs.worldbank.org/health/print/icts-to-transform-health-in-africa-can-we-scale-up-accountability-in-health-care

St John. (2016a). What is first aid? Retrieved from http://www.stjohn.org.za/First-Aid/First-Aid-Tips

St John Ambulance. (2016b). Free mobile first aid apps. Retrieved from http://www.sja.org.uk/sja/first-aid-advice/free-mobile-first-aid-app.aspx

St John Ambulance. (2010). Dramatic numbers dying from lack of first aid. Retrieved from https://www.sja.org.uk/sja/about-us/latest-news/news-archive/news-stories-from-2010/april/lack-of-first-aid-costs-lives.aspx

Statistics Portal. (2016). Number of smartphones sold to end users worldwide from 2007 to 2015. Retrieved from http://www.statista.com/statistics/263437/global-smartphone-sales-to-end-users-since-2007/

Van Aswegen, P. (2016, Feb.). Personal communication. Interviewed by C. Spies.

Van der Walt, L. (2016). Mobile decision support system. South African Journal of Science and Technology, 35(1), 1409-1410. https://doi.org/10.4102/satnt.v35i1.1409

Van Gemert-Pinjen, J. E. W. C., Wynchank, S., Covvey, H. D., & Ossebaard, H. C. (2012). Improving the credibility of electronic health technologies. Bulletin of the World Health Organization, 90(5), 321-400. https://doi.org/10.2471/blt.11.099804

World Health Organisation (WHO). (2011). Quick check and emergency treatments for adolescents and adults. WHO IMAI Project. Retrieved from http://www.who.int/influenza/patientcare/clinical/IMAIWall chart.pdf

WHO. (n.d.a). Countries showcase benefits of scaling up HIV/AIDS services using WHO approach. Retrieved from http://www.who.int/hiv/capacity/IMAI-ICASA/en/

WHO. (n.d.b). How IMAI (and IMCI) support national adaptation and implementation of task shifting. Retrieved from http://www.who.int/hiv/pub/imai/IMAIIMCItaskshiftingbrochure.pdf

WHO. (n.d.c). eHealth. Retrieved from http://www.who.int/topics/ehealth/en