African Journal of Primary Health Care & Family Medicine

On-line version ISSN 2071-2936

Print version ISSN 2071-2928

Afr. j. prim. health care fam. med. (Online) vol.14 n.1 Cape Town 2022

http://dx.doi.org/10.4102/phcfm.v14i1.3744

ORIGINAL RESEARCH

Evaluating postgraduate family medicine supervisor feedback in registrars' learning portfolios

Neetha J. Erumeda (I, II); Ann Z. George (III); Louis S. Jenkins (IV, V, VI)

I. Department of Family Medicine and Primary Care, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

II. Gauteng Department of Health, Ekurhuleni District Health Services, Germiston, South Africa

III. Centre of Health Science Education, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

IV. Division of Family Medicine and Primary Care, Department of Family and Emergency Medicine, Faculty of Health Sciences, Stellenbosch University, Cape Town, South Africa

V. George Hospital, Western Cape Department of Health, George, South Africa

VI. Primary Health Care Directorate, Department of Family, Community and Emergency Care, Faculty of Health Sciences, University of Cape Town, Cape Town, South Africa

ABSTRACT

BACKGROUND: Postgraduate supervision forms a vital component of decentralised family medicine training. While the components of effective supervisory feedback have been explored in high-income countries, how this construct is delivered in resource-constrained low- to middle-income countries has not been investigated adequately.

AIM: This article evaluated supervisory feedback in family medicine registrars' learning portfolios (LPs) as captured in their learning plans and mini-Clinical Evaluation Exercise (mini-CEX) forms and whether the training district or the year of training affected the nature of the feedback.

SETTING: Registrars' LPs from 2020 across five decentralised sites affiliated with the University of the Witwatersrand in South Africa were analysed.

METHODS: Two modified tools were used to evaluate the quantity and quality of the written feedback in 36 learning plans and 57 mini-CEX forms. Descriptive statistics, Fisher's exact and Wilcoxon rank-sum tests were used for analysis. Content analysis was used to derive counts of areas of feedback.

RESULTS: Most learning plans (61.2%) did not refer to registrars' clinical knowledge or skills, and 86.1% did not offer an improvement strategy. In the mini-CEX forms, the 'extent of supervisors' feedback' was mostly rated as 'poor' (63.2%), with only 14.0% rated as 'good'. The 'some' and 'no' feedback categories in the mini-CEX competencies (p < 0.001 to p = 0.014) and the 'extent of supervisors' feedback' (p < 0.001) were significantly associated with training district. Feedback focused less on clinical reasoning and negotiation skills.

CONCLUSION: Supervisors should provide specific and constructive narrative feedback and an action plan to improve registrars' future performance.

CONTRIBUTION: Supervisory feedback in postgraduate family medicine training needs overall improvement to develop skilled family physicians.

Keywords: decentralised training; family physician; feedback; individualised learning plan; learning portfolio; postgraduate supervision; mini-Clinical Evaluation Exercise.

Introduction

Feedback is a vital aspect of supervision in clinical settings.1,2 Feedback is defined as 'a way the trainee comes to know about the gaps in their current level of knowledge and the desired goal'.3 For maximum effectiveness, feedback should be provided timeously and in small, manageable quantities, should relate to observable behaviours,4 and should be non-judgemental and tailored to the trainee's learning needs.5,6 Feedback must also be clearly articulated and based on the trainee's observed performance,7 promote self-assessment,8 be context-specific, enable interactions between the trainee and supervisor3 and focus on both adequate performance and areas for improvement.9,10 Supervisors should assist trainees in developing written action plans specifying the supervisor's role in supporting trainees to achieve the goals outlined in the learning plans.9,10 More recent definitions regard feedback as a coaching activity where supervisors facilitate trainees' self-reflection to improve performance.10,11 However, the acceptability of feedback by trainees still depends on who provides it, whether it is constructive, the trainee's assessment literacy and the nature of supervisor-trainee relationships.5,11

The role of feedback in postgraduate family medicine training

Decentralised postgraduate clinical training of family physicians (FPs) involves educational and clinical supervision, each of which can be demonstrated in trainees' learning portfolios (LPs). Educational supervision includes identifying trainees' learning objectives and providing feedback on their personal development plans and progress in their LPs.12,13 Clinical supervision involves giving feedback on trainees' relationships with staff and patients, their management of clinical conditions and their professionalism.14 The LPs provide evidence of trainees' performance as observed by clinical supervisors during workplace-based assessments (WPBAs),15 which use tools such as the mini-Clinical Evaluation Exercise (mini-CEX) form.16

Educational supervision in learning plans

Individualised learning plans in LPs are recognised as essential components of self-regulated lifelong learning,17 which refers to trainees' ability to modify their thinking, behaviour and level of motivation.18 Learning plans are essential tools for postgraduate trainees, assisting them with understanding their strengths, professional goals and the family medicine speciality requirements needed to make individual adjustments and identify resources to progress.19 The educational supervisor's role in the learning plans is to orientate trainees to the learning environment, help them set goals and objectives and plan feedback sessions.6

Learning plans can also promote self-directed learning, which differs from self-regulated learning. In self-directed learning, trainees develop their learning goals, identify activities and resources to achieve them and get external feedback to modify learning. By comparison, in self-regulated learning, the trainers set the goals, and trainees regulate their learning, influenced by their cognitive domain, which primarily happens in an academic environment.20 However, there is a lack of literature on self-directed learning,20 especially on the potential for learning plans to promote self-directed learning based on the quality of the feedback provided. The self-directed learning goals trainees develop in these plans must be meaningful, specific, measurable, accountable, realistic and include specified timelines.21 While internal accountability facilitates self-reflection, trainees' external accountability requires regular supervisor feedback.21

Clinical supervision in the mini-Clinical Evaluation Exercise

The mini-CEX tool16 is widely used in high-income countries (HICs) to assess clinical competencies.22,23,24 Although this tool has been modified to accommodate several scoring systems, clinical disciplines and settings,5,22 more contextualised studies are needed to understand its effectiveness in resource-limited settings. One of the primary purposes of the mini-CEX form is to provide structured feedback on observed performance.5,22 The educational impact of this tool24 is enhanced when combined with well-written feedback25 and negatively impacted by inadequate feedback.5,23 Supervisors often provide inadequate feedback because, for example, they do not appreciate or are unaware of the role of feedback as an educational tool, are not skilled enough to provide helpful feedback, or tend to give high numerical scores (superior or satisfactory) on each mini-CEX competency25 without explaining why the score is justified. Trainees value narrative feedback more than numerical scores: narrative feedback helps them to distinguish between performance deemed 'good' and 'not so good', promotes reflection on their performance,26 and helps them to develop an action plan.27

In sub-Saharan Africa, clinical supervisors play a crucial role in postgraduate training in the workplace.28 Clinical supervisors are expected to provide constructive, good-quality verbal and written feedback during WPBAs. An evaluation of supervisors' feedback in registrars' LPs at a South African university showed that feedback was better documented in e-portfolios than in paper-based ones but was still not sufficiently specific.29 A satisfactory LP is a prerequisite for registrars (trainees) to sit the national exit examination to qualify as an FP.30 One of the challenges of tools such as learning plans or the mini-CEX used in LPs is that they assess both 'hard' skills (history-taking, physical examination and management) and 'soft' skills (problem-solving, communication, collaboration and professionalism).20 While some FPs in South Africa have received training in providing feedback,31 many may not know what constitutes adequate feedback, which is more complex for 'soft' skills.

Evaluating feedback in learning plans or the mini-CEX tool in clinical settings is still in its infancy in both high-income and low- to middle-income countries, including those in sub-Saharan Africa, and has been identified as an area for further research.20,27 This article evaluated both the quantity and quality of supervisory feedback and the scores given in registrars' LPs as captured in their learning plans and mini-CEX forms. The article also reports whether the training district or year of training affected the nature of the feedback across the decentralised clinical training sites. The results could help develop recommendations for improving educational and clinical supervision feedback in similar settings nationally and across sub-Saharan Africa.

Research method and design

Study design and setting

This study is part of a broader cross-sectional, convergent mixed-methods study that evaluated postgraduate family medicine training at five decentralised training sites affiliated with the University of the Witwatersrand in South Africa, using the logic model. The logic model is causal, based on the reductionist theory that links the inputs, processes, outputs and outcomes.32 A previous publication from the broader study reported evaluating postgraduate supervision as a process in the logic model.33 This article reports evaluating supervisors' feedback as a proxy measure for effective supervision as an output in the logic model. In the setting for this study, registrar training is primarily based in primary or community healthcare clinics or a district hospital. During clinical rotations, registrars join various disciplines for 3 months or less in regional hospitals to gain specific knowledge and skills. Family medicine registrars are primarily supervised by FPs but may be supervised by non-family physician (non-FP) supervisors during clinical rotations. The non-FP supervisors could be specialists, registrars in other disciplines, or medical officers. Medical officers are general practitioners working at hospitals or clinics without any specialist registration.

Family physician training for their supervisory role varies. Some FP supervisors had attended 'train the trainer' courses conducted by the South African Academy of FPs. Others had opportunities to attend the courses organised by Wits University but were not trained formally in medical education. The background of the non-FP supervisors in medical education training was not well understood at the time of this study. Family physicians act as educational supervisors and mentors, assess registrars' learning plans and provide feedback during clinical rotations. In addition, as part of WPBAs, FP supervisors complete mini-CEX forms during directly observed consultations by registrars with patients in clinical settings. Supervisors' written feedback in the learning plans and mini-CEX forms provides evidence for the quality of clinical and educational supervision.

This study evaluated the written feedback in registrars' LPs from the five decentralised training sites, four of which are in Gauteng province and the fifth in the North West province.

Study population and sampling

All LPs submitted in 2020 by the 20 registrars across all 3 years of training were eligible for evaluation, constituting a purposive sample.34 Thirty-six learning plans and 57 mini-CEX forms were evaluated from 19 eligible LPs. One portfolio was excluded as there was no consent. The learning plans and mini-CEX forms represented all 5 training districts (D1-D5) and 3 years of training (Y1-Y3).

Data collection

The authors evaluated the learning plans and mini-CEX forms between October and December 2020.

Learning plans

At the beginning of a clinical rotation, the registrar develops goals in their learning plan. The supervisor then provides initial feedback on these goals. At the end of the rotation, the supervisor assigns a global score for the registrar's knowledge, skills, professional values, and attitudes and provides final written feedback. A validated tool developed at a South African university29 was modified to assess supervisors' final feedback in the learning plans. The tool was adapted in consultation with one of the co-authors, who developed the validated tool: variables were added to make it more comprehensive on each of the feedback categories extracted from the data, thus increasing the construct validity of the tool.

The modified tool consists of seven categories:

• feedback on registrars' professional behaviour

• feedback on registrars' knowledge and skills

• the valence of feedback

• the presence of an improvement strategy

• a rating of the extent of supervisors' feedback

• the signatory (FP or other clinical supervisors) on the initial feedback

• the signatory (FP or other clinical supervisors) on the final feedback.

The written feedback given by supervisors in the general feedback section was captured in a Microsoft Excel spreadsheet to analyse its quality. The training district, year of training and global numerical scores from each learning plan were also recorded in the spreadsheet. The extent of feedback was rated as 'poor', 'average' or 'good' by the researchers, depending on the feedback aspects provided. The feedback was rated 'poor' if (1) feedback was absent or irrelevant, (2) specific feedback was not provided for any of the following categories in the tool: professional behaviour, knowledge and skills, valence of feedback and improvement strategy, or (3) the form was not signed and completed by the supervisor. A rating of 'average' was assigned when specific feedback was provided on one or two of the categories (registrar professional behaviour, knowledge and skills, valence of feedback or improvement strategy) and the form was signed and completed. The rating was 'good' when feedback was provided for three or more categories (registrar professional behaviour, knowledge and skills, valence of feedback and improvement strategy) and the form was signed and completed.
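The rating rule described above can be sketched in a few lines of Python. This is an illustration only, not the tool used in the study: the class name, field names and function name are hypothetical, and the sketch assumes that any one of the three 'poor' conditions is sufficient for a 'poor' rating and that absent or irrelevant feedback corresponds to none of the four categories being addressed.

```python
from dataclasses import dataclass


@dataclass
class LearningPlanFeedback:
    """Hypothetical record of the final feedback in one learning plan."""
    professional_behaviour: bool   # specific feedback on professional behaviour
    knowledge_and_skills: bool     # specific feedback on knowledge and skills
    valence: bool                  # valence of feedback could be identified
    improvement_strategy: bool     # an improvement strategy was present
    signed_and_completed: bool     # form signed and completed by the supervisor


def rate_extent(fb: LearningPlanFeedback) -> str:
    """Return 'poor', 'average' or 'good' following the rule in the text."""
    categories_addressed = sum([
        fb.professional_behaviour,
        fb.knowledge_and_skills,
        fb.valence,
        fb.improvement_strategy,
    ])
    # Assumption: an unsigned or incomplete form, or no category addressed,
    # is enough on its own for a 'poor' rating.
    if not fb.signed_and_completed or categories_addressed == 0:
        return "poor"
    if categories_addressed <= 2:
        return "average"
    return "good"


example = LearningPlanFeedback(True, True, False, False, True)
print(rate_extent(example))
```

Encoding the rule this way makes the boundary cases explicit, for instance that a form addressing all four categories would still be rated 'poor' if it was unsigned.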

Mini-Clinical Evaluation Exercise forms

The mini-CEX form requires supervisors to allocate individual scores for the competencies and provide a total score. The form includes sections for the supervisor's feedback and supervisor and registrar signatures.

We developed a feedback assessment tool to evaluate written feedback in the mini-CEX forms, whether explicitly related to the seven competencies or appearing in the general feedback section. The tool assessed the extent and adequacy of the supervisors' feedback, the training district, the year of training, and whether both FPs and registrars signed the form. The 'extent of feedback' rating was classified as 'poor', 'average', or 'good' depending on the areas of mini-CEX competencies addressed, whether supervisors had completed the general feedback section and whether the supervisor and registrar signed the forms. The scores for each of the seven competencies and total scores given in mini-CEX forms were recorded in Microsoft Excel. The narrative feedback provided in the general feedback section was captured in a separate MS Excel spreadsheet to analyse the quality.

Data analysis

The data were analysed for descriptive statistics using STATISTICA 13.5.0.17 and for associations using STATA 14.2. For the inferential statistics, the feedback categories were collapsed into 'some feedback' and 'no feedback' in both learning plans and mini-CEX forms. For both tools, the absence of feedback was categorised as 'no feedback', and all other feedback categories were grouped as 'some feedback'. Similarly, if the rating of 'extent of supervisor's feedback' was 'poor', this was categorised as 'no feedback', and the 'average' and 'good' categories were grouped into a single category labelled 'some feedback'. All associations between 'no feedback' and 'some feedback' categories and the training district or the year of training were determined using Fisher's exact tests (p < 0.05). Fisher's exact tests were used rather than chi-squared tests because of the small sample size.35 Where there was a statistically significant association, Fisher's exact tests were used to test each training district and year with the 'some feedback' and 'no feedback' categories to determine the strength of association.
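As an illustration of the collapsing step and of a pairwise comparison, the sketch below recodes ratings into 'some feedback' and 'no feedback' and computes a two-sided Fisher's exact p-value for a single 2 x 2 table using only the Python standard library. It is not the STATA procedure used in the study; the function names are hypothetical and the counts in the usage line are invented for demonstration, not study data.

```python
from math import comb


def collapse_rating(rating: str) -> str:
    """Collapse 'poor' into 'no feedback'; 'average' and 'good' into 'some feedback'."""
    return "no feedback" if rating == "poor" else "some feedback"


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_value = sum(
        prob(x) for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)  # small tolerance for float ties
    )
    return min(1.0, p_value)


# Invented counts: rows are two districts, columns are 'some' and 'no' feedback.
print(fisher_exact_2x2(12, 1, 2, 9))
```

The exact test is preferred here for the same reason given in the text: with few forms per district, the expected cell counts are too small for a chi-squared approximation to be reliable.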

Kruskal-Wallis tests were used to compare the global and total scores in the learning plans and mini-CEX forms across the training district and year of training. Two-sample Wilcoxon rank-sum tests were used to test the strength of association. Bonferroni correction was applied to the pairwise (two-by-two) comparisons of training districts and of years of training with the feedback categories and scores, with the significance threshold for the p-value set at < 0.005 and < 0.017, respectively.
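The two thresholds are consistent with dividing an overall significance level of 0.05 by the number of pairwise comparisons: 10 pairs among five districts and 3 pairs among three years of training. The text does not spell out this derivation, so the short sketch below is one reading of it, with a hypothetical function name.

```python
from math import comb


def bonferroni_threshold(n_groups: int, alpha: float = 0.05) -> float:
    """Per-comparison threshold when every pair of groups is compared once."""
    n_pairs = comb(n_groups, 2)  # number of distinct pairs of groups
    return alpha / n_pairs


print(round(bonferroni_threshold(5), 3))  # five districts, 10 pairs -> 0.005
print(round(bonferroni_threshold(3), 3))  # three years, 3 pairs -> 0.017
```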

To evaluate the quality of written narrative feedback in both forms, the feedback was first characterised according to length (the number of words), overall quality and specificity. The characteristics assessed for the overall feedback quality in this study were extracted from validated tools used in previous studies. The following feedback quality characteristics were adapted from De Swardt et al. (2019): the number of words in the feedback, whether proper sentences were used, whether strengths and weaknesses were highlighted and whether areas of improvement were specified.29 The following were derived from Pilgrim et al.: whether an action plan was provided and whether the feedback was specific.36 For example, if the feedback simply read 'satisfactory' or 'good', it was coded as non-specific feedback. The second level of analysis involved content analysis37 to derive counts for specific feedback areas. For example, the feedback 'the joint examination was performed well' was coded as 'used proper sentences' and 'provided strengths' under the overall quality. This feedback example was coded in the category 'physical examination' during the content analysis. The main author and co-authors initially coded the data set separately, then compared their coding systems. Any differences were discussed until agreement on the naming of codes and categories and the categorisation of the codes was reached. The coding process was iterative, involving several cycles of discussions until the data analysis was completed. The iterative process improved the interrater reliability of the findings.38
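The first-level characterisation lends itself to a simple illustration. The sketch below counts words and flags single-word judgements as non-specific; it is not the coding procedure used in the study, which relied on the researchers' judgement. The function name is hypothetical, and the set of non-specific terms contains only the two examples given in the text.

```python
# The two non-specific examples named in the text; a real coding frame
# would be agreed by the coders rather than fixed in advance.
NON_SPECIFIC_TERMS = {"satisfactory", "good"}


def characterise_feedback(text: str) -> dict:
    """Return the word count and a non-specific flag for one feedback entry."""
    words = text.split()
    normalised = text.strip().strip(".!").lower()
    return {
        "word_count": len(words),
        "non_specific": normalised in NON_SPECIFIC_TERMS,
    }


print(characterise_feedback("The joint examination was performed well"))
print(characterise_feedback("Satisfactory."))
```

A flag of this kind could only pre-screen entries; deciding whether a longer comment highlights strengths or specifies an action plan still needs a human coder.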

Ethical considerations

Ethical approval for the broader project was obtained from the Human Research Ethics Committee (Medical) of the University of the Witwatersrand (certificate number: M191140). Permission to conduct the research was obtained from the University Registrar and the Head of the Department of Family Medicine. Informed written consent to access the LPs was obtained from registrars and supervisors.

Results

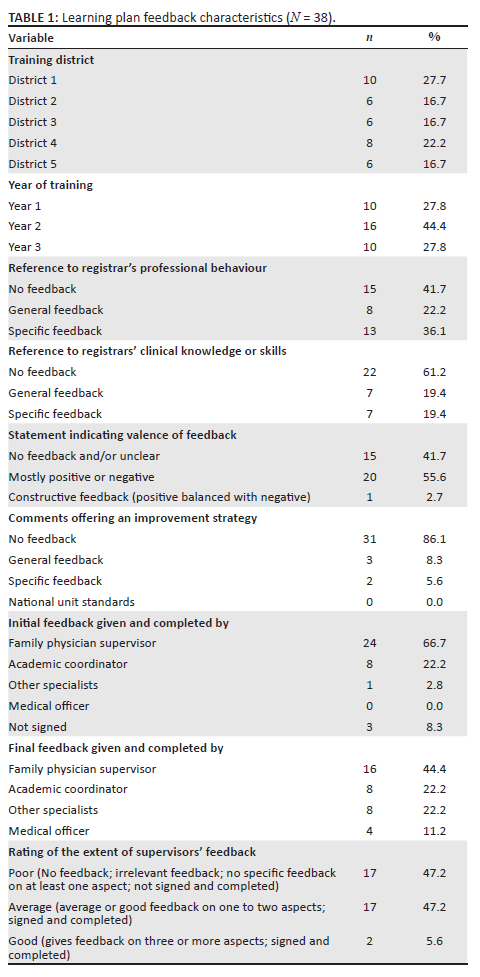

When the feedback in the 36 learning plans was analysed, most (61.2%) did not refer to registrars' clinical knowledge or skills, and 86.1% did not offer an improvement strategy. The 'extent of supervisors' feedback' was mainly rated as 'poor' (47.2%) or 'average' (47.2%); only a few (5.6%) were 'good'. In one-third of the forms, the final feedback was provided by non-FP supervisors from other clinical departments (Table 1). The data were not normally distributed, so the median global score in the learning plans was calculated: 8.0 (7.7-9.0) out of 10.0.

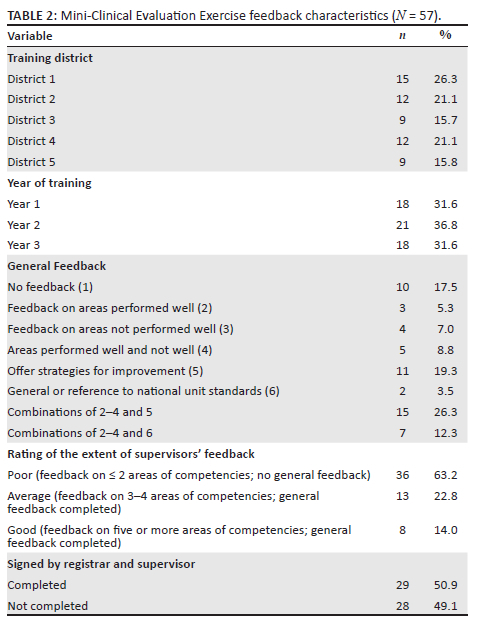

In 17 of the 19 LPs, the mini-CEX forms were completed by more than one FP from the same district, while the same FP completed the remaining two. For the 'extent of supervisors' feedback', 63.2% were classified as 'poor' and only 14.0% as 'good' (Table 2). The median of the total scores in the mini-CEX forms was 6.8 (range: 6.1-7.3). Most of the mini-CEX competency scores given by FP supervisors ranged between 5-7 or 8-10, with very few scores of 1-4 (Table 3).

Further analysis showed that the 'some feedback' and 'no feedback' categories for all mini-CEX competencies (p < 0.001 to p = 0.014), the rating of 'extent of supervisors' feedback' (p < 0.001) and the 'signed by registrar and supervisor' category (p < 0.001) were significantly associated with the training district (Table 4). However, there was no association with the year of training. There was no significant association of feedback categories in the learning plans with either the training district or year of training.

When the strength of association of D1-D5 with the feedback categories was tested further, D3 feedback was rated significantly higher than that of the other districts in all mini-CEX competencies (p < 0.001 to p = 0.003). The 'extent of supervisors' feedback' was also rated significantly higher in D2 and D3 than in D1, D4 and D5 (p < 0.001 to p = 0.002). D1 had significantly more signed and completed forms than D5 (p < 0.001).

Comparing the global scores in learning plans and total scores in mini-CEX forms with the year of training revealed significant differences in the median values of the global score (p = 0.012) and the total score (p = 0.049). On further analysis with two-sample Wilcoxon rank-sum tests, the differences were between Y1 and Y3 in both global score (p = 0.003) and the total score (p = 0.016). There were no significant differences between Y1 and Y2, or Y2 and Y3, across both forms. There were no significant differences in the median global and total scores across D1-D5.

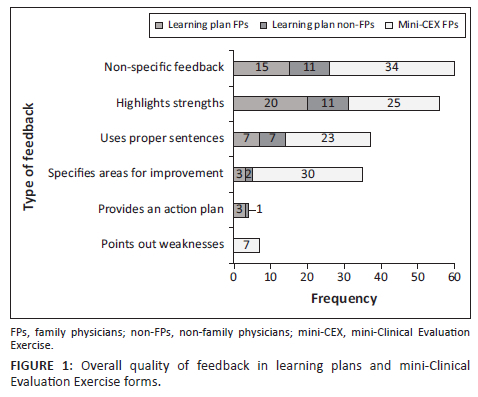

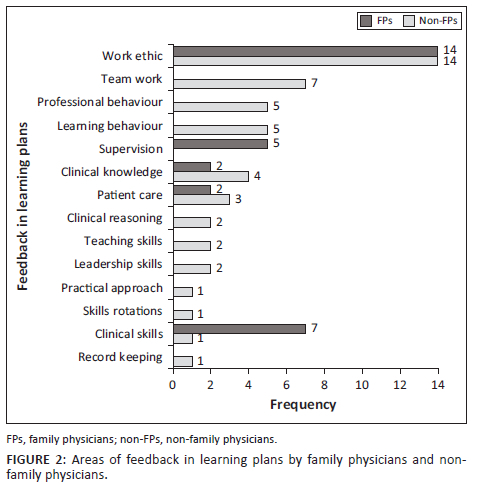

The FP supervisors had provided the feedback in 22/36 learning plans and non-FP supervisors in 12/36; 2/36 had no feedback. Non-FP supervisors provided longer feedback (average of 25.8 words) than FP supervisors (average of 13.7 words). The feedback focused mainly on 'highlighting strengths' or was 'non-specific', with less emphasis on 'specific areas for improvement' and 'providing action plans' (Figure 1). The FP feedback focused only on registrars' 'work ethic' and 'clinical skills'. In contrast, non-FP feedback was more comprehensive because it referred to various areas, including 'professional behaviour' and 'teamwork' (Figure 2).

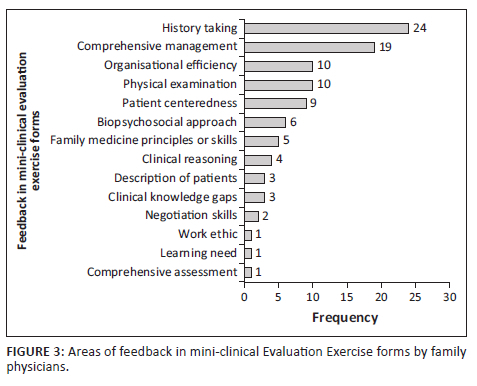

The FPs' mini-CEX feedback focused on history-taking, comprehensive management and organisational efficiency. There was less emphasis on negotiation skills and clinical reasoning (Figure 3). The average feedback by FPs in the mini-CEX forms (16.3 words) was longer than in the learning plans (13.7 words). There was more emphasis on specifying 'areas of improvement' (per the overall quality evaluation) compared with the learning plans (Figure 1).

Discussion

This is one of the first sub-Saharan studies evaluating feedback in registrars' learning plans and mini-CEX forms as evidence of educational and clinical supervision. The general quantity and quality of the feedback across both forms were inadequate, which has implications for the registrars' ability to engage in self-directed learning.

The feedback in the learning plans lacked elements of effectiveness such as context specificity, being tailored to the trainee's needs and documented action plans. Other deficiencies were failure to focus on areas needing improvement, such as gaps in clinical knowledge, skills and professional behaviour and not specifying how the supervisor would assist the registrar in addressing these gaps. These findings were similar to previous studies where supervisors struggled to point out registrars' weaknesses or offer suggestions for improvement or action plans.29 Given feedback's vital role in promoting self-directed learning,18 the lack of focus on registrars' learning needs deprives them of opportunities to reflect on how they could improve their performance. As found previously, the written narrative feedback also minimally reflected registrar responses to feedback.9,18

Several studies evaluating mini-CEX forms in HICs23,39 found that feedback focused more on adequately and less on inadequately performed areas and did not always include documented action plans. The findings of this study were similar, with a tendency for feedback to focus on adequate performance, highlighting strengths and neglecting areas that needed improvement, with few action plans included. In addition, written feedback was lacking in many of the forms. Mini-CEX forms have descriptors that guide structured feedback on observed performance,8 but these were not used effectively. Possible reasons for supervisors not providing effective feedback could be their multiple roles and responsibilities,33 the lack of protected time for educational activities,40 especially for direct observations, or their lack of skills or training.5,17,39 The supervisors in this study often appeared reluctant to provide honest and negative feedback and to grade trainee performance when it was poor, as has been seen in previous studies.1,5 Other possible reasons for the poor supervisory feedback reported in this study could be deficiencies in trainers' knowledge and clinical competence, facilitation or interpersonal skills, and awareness of trainees' learning needs - all factors that have been described in other studies.2,33 Organisational factors such as work demands, poor recognition for teaching activities and a lack of a conducive environment could also negatively affect feedback provision.1 Some FP supervisors participated in training courses conducted by the academy or the university, but ongoing training opportunities could capacitate them better than a once-off training course.41

In the mini-CEX forms, instead of using competency descriptors, supervisors mainly provided global feedback or non-specific judgements such as 'satisfactory', which are vague and do not offer any educational value, as reported previously.22,23,42 The inadequate space for written feedback on each competency resulted in supervisors recording feedback anywhere on the forms. The WPBA forms can be modified to so-called 'supervised learning events', as done in the United Kingdom,43 in which text boxes can replace rating scales with tick boxes to provide narrative feedback. Modified mini-CEX forms with separate spaces to record areas performed well, those that need improvement, and action plans improve educational impact because they act as reminders for supervisors.36,44 Although general descriptors offer a rough guide for providing feedback, modifying the forms to include the categories 'competent', 'not competent', and 'good' can guide supervisors to provide more specific feedback.45

Most FP supervisors gave high scores on all mini-CEX competencies and on the total and global scores across all years of training. The lenient scoring was evident from the first year of training, and scores did not show the regular progression seen in previous studies.45,46 Progression in the global and total scores was evident only between the first and third years, in contrast to previous studies demonstrating score progression across all 3 years.46 Ideally, feedback should be provided in the areas where registrars scored low. Insufficient supervisor feedback on the areas that scored low could lead to registrars over- or under-estimating their performance against expected standards when self-assessing.8 Feedback should improve and become more holistic during the later years compared with the first year, where it could be limited to fewer competencies and be more specific. This avoids overloading junior registrars with feedback, allows them to apply it in similar situations and enhances transferability.6 More than half of the mini-CEX forms were not signed by FPs or registrars, suggesting a lack of accountability from supervisors and registrars.

At the end of each clinical rotation, FPs, rather than non-FPs, are expected to assess and provide feedback on whether registrars met the initial learning needs identified in their learning plans. Feedback provided by specialists and medical officers who functioned as educational supervisors may not have been aligned with training expectations, possibly because non-FP supervisors were unclear about the learning outcomes initially identified by the registrar. A previous South African study also reported that registrars received more feedback from non-FP supervisors, which they found useful.47 The role of non-FP supervisors in registrar supervision needs further research.

The reliability of WPBAs increases with multiple assessments conducted at various times by different assessors.22,45 In this study, registrars had multiple assessors in some districts, but, in others, the mini-CEX forms were completed only by their immediate supervisor. Clinicians functioning as supervisors in clinical settings are not always trained in medical education8 or equipped to give meaningful feedback.5,6 This could negatively impact registrar training, even more so in districts where only one supervisor provides feedback. Regular faculty development is needed so that supervisors understand the educational value of feedback.22,27 Identifying a core group of well-trained supervisors to provide feedback when conducting WPBAs5 could be another strategy to address the reliability of mini-CEX assessments.27

Feedback in the learning plans focused on ethics, professionalism, clinical knowledge and skills and learning behaviours. This was in contrast to a study where professionalism was the learning goal least identified by trainees.48 Feedback provided by specialists and medical officers was much more comprehensive, covered many competencies and was aligned to registrars' learning needs (Figure 2). Supervisors' ability to provide feedback on appropriate history-taking and physical examination skills is critical in workplace learning, as clinical diagnosis depends on these registrar skills.5 Mini-CEX feedback mostly covered history-taking, physical examination, comprehensive management and organisational efficiency. Clinical reasoning was the least covered. This differed from previous studies where feedback focused least on professionalism and organisational efficiency.49 As reported previously, there was no alignment of many feedback areas to the registrars' learning needs.49 Family physicians provided scanty feedback on soft skills, such as clinical reasoning or patient negotiation skills (Figure 3). High scores given by FP supervisors on all competencies suggested insufficient skills to evaluate registrars critically, especially for soft skills. Ideally, more narrative feedback should be offered on identified learning needs where supervisors gave low scores.

This is the first sub-Saharan study to explore the significance of training sites and supervisor characteristics on the quality of feedback. While one district (D3) had fewer FPs involved in training, its registrars received more comprehensive feedback than those in other districts with more FPs. This implies that feedback does not depend on the number of supervisors but is context-specific, dependent on willingness and effort, understanding of feedback characteristics5 and assessment literacy.7,22 All supervisors and registrars involved in decentralised clinical training require ongoing faculty development training on what constitutes adequate feedback to maximise this essential element of workplace-based training and assessments in decentralised settings. There are several training options that could supplement the existing training. One option is to conduct practical sessions using videos of actual registrar consultations and the supervisory feedback, which could be critiqued by pairs or groups of FPs, as done in other settings.50 Another strategy could capitalise on the peer coaching or co-teaching1 opportunities provided by observed consultations - pairs of junior and senior FPs should provide feedback to registrars after these sessions. Similarly, feedback sessions between FPs and registrars in combined sessions with medical students or interns at clinics or hospitals provide opportunities for registrars to practise and internalise effective feedback. Finally, and most importantly, FPs should promote an empathic learning culture based on constructive feedback, even at the lowest family medicine training level, at the primary healthcare facilities.

Limitations

Only written feedback was evaluated; verbal feedback during direct observations was not included. Scores and feedback were compared across the different sets of registrars in the three years but not for the same registrar across training years. Although it is difficult to make high-quality assessments in postgraduate training because of low numbers,27 all registrars' portfolios were included to maximise participation. The principal author constantly engaged with co-authors on coding and analysis, improving internal and external validity. The study was conducted in the decentralised sites of one university, which could affect the generalisability of the results.

Recommendations

Given the integral role of feedback in effective clinical and educational supervision, we suggest there is a need to improve both the feedback supervisors provide to trainees and how trainees utilise it. Supervisors and registrars need regular faculty development on various aspects of effective feedback. Training for supervisors should focus on how to give specific feedback according to registrar performance, covering softer skills such as clinical reasoning and professionalism as well as hard skills such as history-taking and physical examination, and on how to develop action plans and assist in implementing them. For the registrars, the training should focus on utilising the written feedback to develop as self-directed lifelong learners.

Conclusion

This study evaluated supervisors' feedback in family medicine registrars' LPs. Supervisor feedback was shown to be inadequate, often very general and not very helpful in developing action plans to improve registrars' skills. The lack of detail tailored to individual needs could negatively impact the training of health workers. Family physicians' supervisory role is vital to ensure the training of skilled family physicians to strengthen primary healthcare and district health systems, which should translate to better health outcomes in communities. Future research on feedback in postgraduate family medicine training programmes in similar contexts could help to improve the quality of this cadre of health professionals.

Acknowledgements

The authors would like to express their special thanks to the registrars and family physicians of the University of the Witwatersrand, who consented to the authors accessing their learning plans and mini-CEX forms. The authors acknowledge the statistical assistance from Prof E.L. and Dr Z.M.Z. from the University of the Witwatersrand.

Competing interests

The authors declare that they have no financial or personal relationships that may have inappropriately influenced them in writing this article.

Authors' contributions

N.J.E. conceptualised the research, collected and analysed the data and wrote the first draft of the manuscript. N.J.E., A.Z.G. and L.S.J. contributed to the data analysis and to revising the subsequent drafts. All authors contributed to the development of the manuscript and approved the final version.

Funding information

This research work was supported by the Faculty Research Committee Individual Research Grants 2021, University of the Witwatersrand.

Data availability

The data that support the findings of this study can be made available by the corresponding author, N.J.E., upon reasonable request.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of any affiliated agency of the authors.

References

1. Ramani S, Leinster S. AMEE Guide No. 34: Teaching in the clinical environment. Med Teach. 2008;30(4):347-364. https://doi.org/10.1080/01421590802061613

2. Kilminster S, Cottrell D, Grant J, Jolly B. AMEE Guide No. 27: Effective educational and clinical supervision. Med Teach. 2007;29(1):2-19. https://doi.org/10.1080/01421590701210907

3. Wood DF. Formative assessment: Assessment for learning. In: Swanwick T, Forrest K, O'Brien BC, editors. Understanding medical education: Evidence, theory and practice. 3rd ed. Hoboken, NJ: Wiley-Blackwell; 2019, p. 366-369.

4. Kogan JR, Hatala R, Hauer KE, Holmboe E. Guidelines: The do's, don'ts and don't knows of direct observation of clinical skills in medical education. Perspect Med Educ. 2017;6(5):286-305. https://doi.org/10.1007/s40037-017-0376-7

5. Norcini J, Burch V. Workplace-based assessment as an educational tool: AMEE Guide No. 31. Med Teach. 2007;29(9-10):855-871. https://doi.org/10.1080/01421590701775453

6. Wood BP. Feedback: A key feature of medical training. Radiology. 2000;215(1):17-19. http://doi.org/10.1148/radiology.215.1.r00ap5917

7. Prentice S, Benson J, Kirkpatrick E, Schuwirth L. Workplace-based assessments in postgraduate medical education: A hermeneutic review. Med Educ. 2020;54(11):981-992. https://doi.org/10.1111/medu.14221

8. Burgess A, Mellis C. Feedback and assessment for clinical placements: Achieving the right balance. Adv Med Educ Pract [serial online]. 2015 [cited 2021 Jun 12];6:373-381. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4445314/

9. Weallans J, Roberts C, Hamilton S, Parker S. Guidance for providing effective feedback in clinical supervision in postgraduate medical education: A systematic review. Postgrad Med J. 2022;98(1156):138-149. https://doi.org/10.1136/postgradmedj-2020-139566

10. Lefroy J, Watling C, Teunissen PW, Brand P. Guidelines: The do's, don'ts and don't knows of feedback for clinical education. Perspect Med Educ. 2015;4(6):284-299. https://doi.org/10.1007/s40037-015-0231-7

11. Ekpenyong A, Zetkulic M, Edgar L, Holmboe ES. Reimagining feedback for the milestones era. J Grad Med Educ. 2021;13(2s):109-112. https://doi.org/10.4300/JGME-D-20-00840.1

12. Bartlett J, Silver ND, Rughani A, Rushforth B, Selby M, Mehay R. MRCGP in nutshell. In: Mehay R, editor. The essential handbook for GP training and education. London: Radcliff Publishing; 2013, p. 365-367.

13. Launer J. Supervision, mentoring, and coaching. In: Swanwick T, Forrest K, O'Brien BC, editors. Understanding medical education: Evidence, theory and practice. 3rd ed. Hoboken, NJ: Wiley-Blackwell; 2019, p. 179-189.

14. Kumar M, Charlton R, Mehay R. Effective clinical supervision. In: Mehay R, editor. The essential handbook for GP training and education. London: Radcliff Publishing; 2013, p. 370-374.

15. Tartwijk JV, Driessen EW. Portfolios for assessment and learning: AMEE Guide no. 45. Med Teach. 2009;31(9):790-801. https://doi.org/10.1080/01421590903139201

16. Norcini JJ, Blank LL, Arnold GK, Kimball HR. The mini-CEX (clinical evaluation exercise): A preliminary investigation. Ann Intern Med. 1995;123(10):795-799. http://doi.org/10.7326/0003-4819-123-10-199511150-00008

17. Lockspeiser TM, Kaul P. Using individualized learning plans to facilitate learner-centered teaching. J Pediatr Adolesc Gynecol. 2016;29(3):214-217. https://doi.org/10.1016/j.jpag.2015.10.020

18. Nicol DJ, Macfarlane-Dick D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud High Educ. 2006;31(2):199-218. https://doi.org/10.1080/03075070600572090

19. Edgar L, McLean S, Hogan SO, Hamstra S, Holmboe ES. The milestones guidebook [homepage on the Internet]. Accreditation Council for Graduate Medical Education; 2020 [cited 2021 Jun 12]. Available from: https://www.acgme.org/What-We-Do/Accreditation/Milestones/Resources

20. Robinson JD, Persky AM. Developing self-directed learners. Am J Pharm Educ. 2020;84(3):847512. https://doi.org/10.5688/ajpe847512

21. Li S-TT, Paterniti DA, Co JPT, West DC. Successful self-directed lifelong learning in medicine: A conceptual model derived from qualitative analysis of a national survey of pediatric residents. Acad Med. 2010;85(7):1229-1236. http://doi.org/10.1097/ACM.0b013e3181e1931c

22. Burch VC. The changing landscape of workplace-based assessment. J Appl Test Technol [serial online]. 2019 [cited 2021 Aug 20];20(S2):37-59. Available from: http://www.jattjournal.net/index.php/atp/article/view/143675

23. Saedon H, Salleh S, Balakrishnan A, Imray CH, Saedon M. The role of feedback in improving the effectiveness of workplace based assessments: A systematic review. BMC Med Educ. 2012;12(1):25. https://doi.org/10.1186/1472-6920-12-25

24. Lörwald AC, Lahner F-M, Nouns ZM, Berendonk C, Norcini J, Greif R, et al. The educational impact of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) and its association with implementation: A systematic review and meta-analysis. PLoS One. 2018;13(6):e0198009. https://doi.org/10.1371/journal.pone.0198009

25. Weller JM, Jolly B, Misur MP, Merry AF, Jones A, Crossley JGM, et al. Mini-clinical evaluation exercise in anaesthesia training. Br J Anaesth. 2009;102(5):633-641. https://doi.org/10.1093/bja/aep055

26. Jenkins LS, Von Pressentin K. Family medicine training in Africa: Views of clinical trainers and trainees. Afr J Prim Health Care Fam Med. 2018;10(1):e1-e4. https://doi.org/10.4102/phcfm.v10i1.1638

27. Norcini JJ, Zaidi Z. Workplace assessment. In: Swanwick T, Forrest K, O'Brien BC, editors. Understanding medical education: Evidence, theory and practice. 3rd ed. Hoboken, NJ: Wiley-Blackwell; 2019, p. 319-333.

28. Mash RJ, Ogunbanjo G, Naidoo SS, Hellenberg D. The contribution of family physicians to district health services: A national position paper for South Africa. S Afr Fam Pract [serial online]. 2015 [cited 2021 May 05];57(3):54-56. Available from: https://scholar.sun.ac.za/handle/10019.1/99785

29. De Swardt M, Jenkins LS, Von Pressentin KB, Mash R. Implementing and evaluating an e-portfolio for postgraduate family medicine training in the Western Cape, South Africa. BMC Med Educ. 2019;19(1):251. https://doi.org/10.1186/s12909-019-1692-x

30. The Colleges of Medicine of South Africa: Fellowship of the College of Family Physicians of South Africa [home page on internet]. 2022 [cited 2022 Jul 15]. Available from: https://www.cmsa.co.za/view_exam.aspx?QualificationID=9

31. Blitz J, Edwards J, Mash B, Mowle S. Training the trainers: Beyond providing a well-received course. Educ Prim Care. 2016;27(5):375-379. https://doi.org/10.1080/14739879.2016.1220237

32. Lovato C, Peterson L. Programme evaluation. In: Swanwick T, Forrest K, O'Brien BC, editors. Understanding medical education: Evidence, theory and practice. 3rd ed. Hoboken, NJ: Wiley-Blackwell; 2019, p. 443-455.

33. Erumeda NJ, Jenkins LS, George AZ. Perceptions of postgraduate family medicine supervision at decentralised training sites, South Africa. Afr J Prim Health Care Fam Med. 2022;14(1):a3111. https://doi.org/10.4102/phcfm.v14i1.3111

34. Rowley J. Designing and using research questionnaires. Manag Res Rev. 2014;37(3):308-330. https://doi.org/10.1108/MRR-02-2013-0027

35. Kim H-Y. Statistical notes for clinical researchers: Chi-squared test and Fisher's exact test. Restor Dent Endod. 2017;42(2):152-155. https://doi.org/10.5395/rde.2017.42.2.152

36. Pelgrim EA, Kramer AW, Mokkink HG, Van der Vleuten CP. Quality of written narrative feedback and reflection in a modified mini-clinical evaluation exercise: An observational study. BMC Med Educ. 2012;12(1):97. https://doi.org/10.1186/1472-6920-12-97

37. Krippendorf K. Content analysis: An introduction to its methodology. 3rd ed. Thousand Oaks, CA: Sage; 2013.

38. Cohen L, Lawrence M, Morrison K. Research methods in education. 8th ed. New York, NY: Routledge; 2018, p. 269.

39. Gauthier S, Cavalcanti R, Goguen J, Sibbald M. Deliberate practice as a framework for evaluating feedback in residency training. Med Teach. 2015;37(6):551-557. https://doi.org/10.3109/0142159X.2014.956059

40. Gawne S, Fish R, Machin L. Developing a workplace-based learning culture in the NHS: Aspirations and challenges. J Med Educ Curric Dev. 2020;7:2382120520947063. https://doi.org/10.1177/2382120520947063

41. Mash R, Blitz J, Edwards J, Mowle S. Training of workplace-based clinical trainers in family medicine, South Africa: Before-and-after evaluation. Afr J Prim Health Care Fam Med. 2018;10(1):1589. https://doi.org/10.4102/phcfm.v10i1.1589

42. Canavan C, Holtman MC, Richmond M, Katsufrakis PJ. The quality of written comments on professional behaviors in a developmental multisource feedback program. Acad Med. 2010;85(10 Suppl.):S106-S109. https://doi.org/10.1097/ACM.0b013e3181ed4cdb

43. Recommendations for specialty trainee assessment and review: Incorporating lessons learnt from the workplace-based assessment pilot. Joint Royal College of Physicians Training Board [home page on Internet]. 2014 [cited 2021 Jun 20]. Available from: https://www.jrcptb.org.uk/sites/default/files/April%202014%20Recommendations%20for%20specialty%20trainee%20assessment%20and%20review.pdf

44. Djajadi RM, Claramita M, Rahayu GR. Quantity and quality of written feedback, action plans, and student reflections before and after the introduction of a modified mini-CEX assessment form. Afr J Health Prof Educ. 2017;9(3):148. http://doi.org/10.7196/AJHPE.2017.v9i3.804

45. Tartwijk JV, Driessen EW. Portfolios in personal and professional development. In: Swanwick T, Forrest K, O'Brien BC, editors. Understanding medical education: Evidence, theory and practice. 3rd ed. Hoboken, NJ: Wiley-Blackwell; 2018, p. 255-262.

46. Hejri SM, Jalili M, Masoomi R, Shirazi M, Nedjat S, Norcini J. The utility of mini-clinical evaluation exercise in undergraduate and postgraduate medical education: A BEME review: BEME Guide No. 59. Med Teach. 2020;42(2):125-142. https://doi.org/10.1080/0142159X.2019.1652732

47. Jenkins L, Mash B, Derese A. The national portfolio for postgraduate family medicine training in South Africa: A descriptive study of acceptability, educational impact, and usefulness for assessment. BMC Med Educ. 2013;13(1):1-11. https://doi.org/10.1186/1472-6920-13-101

48. Chitkara MB, Satnick D, Lu W-H, Fleit H, Go RA, Chandran L. Can individualised learning plans in an advanced clinical experience course for fourth year medical students foster self-directed learning? BMC Med Educ. 2016;16(1):232. https://doi.org/10.1186/s12909-016-0744-8

49. Montagne S, Rogausch A, Gemperli A, Berendonk C, Jucker-Kupper P, Beyeler C. The mini-clinical evaluation exercise during medical clerkships: Are learning needs and learning goals aligned? Med Educ. 2014;48(10):1008-1019. https://doi.org/10.1111/medu.12513

50. Holmboe ES, Yepes M, Williams F, Huot SJ. Feedback and the mini clinical evaluation exercise. J Gen Intern Med. 2004;19(5):558-561. https://doi.org/10.1111/j.1525-1497.2004.30134.x

Correspondence:

Neetha Erumeda

Neetha.Erumeda@wits.ac.za

Received: 12 July 2022

Accepted: 25 Oct. 2022

Published: 20 Dec. 2022