South African Journal of Child Health

On-line version ISSN 1999-7671

Print version ISSN 1994-3032

S. Afr. j. child health vol.15 no.3 Pretoria Sep. 2021

http://dx.doi.org/10.7196/SAJCH.2021.v15.i3.1764

RESEARCH

Developing a validated instrument to assess paediatric interns in South Africa

K L Naidoo (I); J M van Wyk (II)

(I) PhD; King Edward VIII Hospital, KwaZulu-Natal Department of Health, and Department of Paediatrics and Child Health, Nelson R Mandela School of Medicine, University of KwaZulu-Natal, Durban, South Africa

(II) PhD; Department of Clinical and Professional Practice, Nelson R Mandela School of Medicine, University of KwaZulu-Natal, Durban, South Africa

ABSTRACT

BACKGROUND. In South Africa (SA), recently graduated medical practitioners (interns) are expected to be efficient and resilient in limited-resource contexts with multiple disease burdens. Work-based assessment in SA internship focuses on clinical skills and neglects the evaluation of non-clinical skills, which creates a daunting task for clinician supervisors expected to certify interns for independent practice.

OBJECTIVE. To develop a set of observable clinical activities as the basis for an instrument for evaluating interns during the paediatric rotation at hospitals.

METHODS. The core set of competencies was determined through a modified Delphi process. Focus group discussions were used in content validation and the proposed instrument was tested in a survey among 415 paediatric interns. The proposed instrument included several competencies and was further subjected to factor analysis.

RESULTS. This tool was found to be reliable, with an overall Cronbach's alpha coefficient of 0.927. Four major factors emerged from factor analysis performed on the final list of 61 observable clinical activities, aggregated into entrustable professional activities. The factors are associated with procedural clinical skills, holistic-care skills, and emotional skills related to social competence and self-management.

CONCLUSION. A locally relevant competency-based assessment tool was developed to assess paediatric interns in the SA setting. The use of validated tools as multidimensional instruments to assess both clinical and non-clinical skills is largely neglected. The study serves as a model for developing validated work-based assessment instruments responsive to local needs and supports the development of holistic clinicians in healthcare contexts with high disease burdens.

In South Africa (SA), recently graduated medical practitioners are expected to manage excessive clinical service loads, often in limited-resource settings.[1,2] This introduction to independent medical practice is termed internship and plays itself out in the context of a country with multiple disease burdens, including a high burden of childhood disease.[3,4] In SA, medical interns are expected to be highly skilled and resilient from early on in their careers, including when caring for children.[2,6] Growing concerns over patient safety and litigation increase stress during this period for both interns and their supervisors.[6-8] The 24-month period of internship consists of rotations in various specialities, including a compulsory paediatric rotation, and offers the last formal opportunity to assess the competence of medical practitioners before their entry into independent practice. Although both undergraduate and postgraduate medical education programmes in SA are monitored and endorsed at higher education institutions, the internship period is sandwiched between these programmes and regarded as a service-learning programme. The assessment of the intern is the responsibility of the Health Professions Council of South Africa (HPCSA), which is the professional accreditation body in SA.[9] Assessment practices during the internship period have a strong clinical focus and do not reflect the competency-based framework of other training programmes offered at SA higher education institutions. As such, assessment focuses predominantly on assessing the intern's ability to master several procedural clinical skills, without other competencies being monitored as closely.[8] The use of non-validated assessment instruments and the lack of formative holistic assessment strategies during the local internship period have also been a reason for concern.[8]

Competency-based medical education is an important pedagogical framework and has greatly influenced the course of medical education over the past two decades.[10] In this context, work-based assessment (WBA) serves as a critical component for operationalising learning as it lends itself to the assessment of an intern's readiness for independent practice.[11] Discipline-specific postgraduate programmes are therefore increasingly moving towards using more appropriate assessment instruments, which include tangible and intuitive professional clinical activities.[12-14] Such 'observable clinical activities' not only form the core of clinical work but also resonate with clinical supervisors because they authentically reflect the activities required in the workplace.[15] Activities such as taking a history from the parent of a sick child or performing a lumbar puncture on a baby are viewed as substantial and measurable professional activities. The significance of assessing such activities relates to the premise that evidence of successful completion during the internship will allow the supervisor to certify the trainee as 'ready for independent practice'.

This decision to entrust a trainee with professional tasks can be taken ad hoc or form part of a formal programme, and forms the basis of WBA. The concept of 'entrustment' has been a notable paradigm shift in workplace assessment, as it signifies that the trainee has reached an appropriate level of accountability and responsibility.[15]

Patient safety and improved quality of clinical service in healthcare settings ultimately rely on valid assessment opportunities and processes to support decisions of entrustability.[15] The use of observable clinical activities and their formulation into so-called entrustable professional activities (EPAs) thus underpin current trends in WBA practices in medical internship programmes.[13,15] EPAs therefore help to create concrete assessment opportunities in competency-based education frameworks practised in authentic clinical environments.

Certifying interns for independent practice in SA rests on many entrustment decisions that need to be performed in busy hospital settings, which often have limited resources and deal with high disease burdens. There is a paucity of research on the use of EPAs for assessing newly graduated health professionals in such contexts.[16,17] In addition, a holistic approach to assessment framed in a competency-based system is currently lacking in the internship period in SA.[2,8] Thus, there is a need for a locally developed, clearly defined assessment instrument that is responsive to the specific needs of the SA context.

Method

The study set out to develop a set of observable clinical activities as the basis for an instrument that can be used to evaluate interns during the paediatric rotation at hospitals. Evaluating the validity of the instrument for use with a representative group of interns in KwaZulu-Natal, SA, formed part of the development process.

Research design

This cross-sectional study used a mixed-method approach, which involved a modified Delphi process to reach consensus on a list of appropriate observable clinical activities. The instrument was subsequently trialled for validation in the study setting.

Setting

The study was conducted at the seven major hospitals where interns are trained in Durban and Pietermaritzburg, KwaZulu-Natal. These hospitals have a combined catchment population of ~6 million and serve communities with some of the highest HIV disease burdens globally, together with high rates of tuberculosis (TB) and common childhood diseases.[3,18,19]

Subjects

The study sample consisted of paediatricians, interns and their supervising clinicians working in the identified hospitals. Specific criteria were used to ensure that participants were experienced in both paediatric clinical care and intern supervision in the described healthcare context.

Procedure

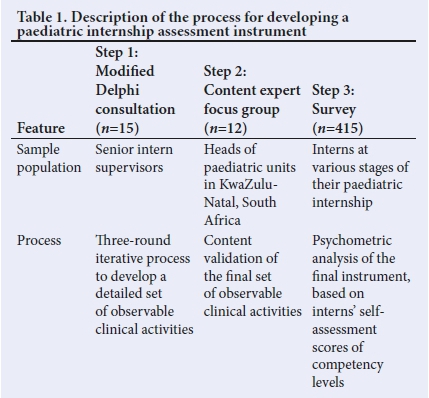

Table 1 summarises the three steps in developing the assessment instrument.

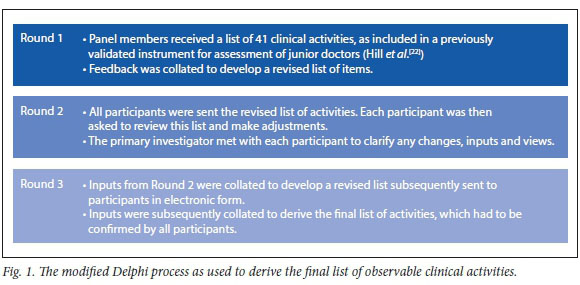

Step 1: Modified Delphi process

A modified Delphi approach, which involved iterative consultations between the authors and a panel of 15 senior intern supervisors (Fig. 1), was used to create the final list of items included in the assessment instrument. This process allowed for a locally determined, consensual list of observable clinical activities to be established, appropriate for evaluating candidates for independent clinical practice in paediatrics.[20-22] All the participating supervisors were actively involved in paediatric intern training and had more than 10 years' clinical experience in the SA public health system.

The principles of the modified Delphi process were explained to the participants in this step prior to commencement of the study, specifically with regard to anonymised consultation and the steps required to ensure consensus being reached.[20] An initial list of suggested observable clinical activities, which had previously been validated with junior medical practitioners, was used in the first round of consultations to create a template on which the new instrument could be built.[22] Supplementary Table 1 (http://www.sajch.org.za/public/files/1764.pdf) lists the 41 items from the original questionnaire proposed by Hill et al.[22]

Step 2: Content validation process

Step 2 involved a focus group consultation with 12 content experts, invited based on their being the head of a paediatric department in a hospital in KwaZulu-Natal (including the sampled hospitals) as well as a head of paediatrics and child health at local government level (district and provincial). All content experts had more than 10 years' clinical experience in paediatrics in SA and were also involved with programmes to improve paediatric care at an international, national or provincial level.

After a formal introduction to the study, the panel of content experts were asked to evaluate the relevance of the items included in the final list of observable clinical activities as determined in Step 1. A four-point Likert-scale rubric (1 = not relevant; 2 = somewhat relevant; 3 = relevant; 4 = very relevant) was used to score each item in the overall construct of paediatric intern competency in SA.[23] A content validity index (CVI) was subsequently calculated for each item, to dichotomise an item as either relevant or not relevant. Items with a CVI >0.9 were accepted for inclusion in the assessment instrument.[24]
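The item-level CVI calculation can be illustrated with a minimal sketch. It assumes the commonly used definition of the item-level CVI (the proportion of experts who rate an item 3 or 4 on the four-point scale); the article does not spell out the formula, and the function names and example ratings below are hypothetical.

```python
def item_cvi(ratings):
    """Item-level CVI: share of experts rating the item 3 or 4.

    Assumes the common definition of the index; the ratings use the
    four-point relevance scale (1 = not relevant ... 4 = very relevant).
    """
    if not ratings:
        raise ValueError("at least one expert rating is required")
    relevant = sum(1 for rating in ratings if rating >= 3)
    return relevant / len(ratings)


def accepted_items(ratings_by_item, threshold=0.9):
    """Return the names of items whose CVI exceeds the threshold."""
    return [
        name
        for name, ratings in ratings_by_item.items()
        if item_cvi(ratings) > threshold
    ]


# Hypothetical ratings from a 12-member panel (not data from the study).
example_ratings = {
    "lumbar puncture": [4, 4, 3, 4, 4, 3, 4, 4, 4, 3, 4, 4],
    "hypothetical low-relevance item": [4, 3, 2, 2, 4, 3, 1, 3, 4, 2, 3, 3],
}

if __name__ == "__main__":
    for name, ratings in example_ratings.items():
        print(name, round(item_cvi(ratings), 3))
    print(accepted_items(example_ratings))
```

Under this definition, with 12 experts an item clears the >0.9 threshold only if at least 11 of them rate it as relevant or very relevant.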

Step 3: Survey

The final list of items was collated into an assessment instrument to be used in a survey among interns (n=415) representing the seven regional training hospitals. Each participant was asked to self-assess their level of competency using a five-point Likert scale, which was based on the five-stage entrustment scale developed by Ten Cate et al.[13] The survey was group administered to interns at all sampled hospitals. Demographic data (gender, age, university of origin and year of internship) were also obtained for each participant.

Data analysis

Data analysis was performed using SAS version 9.4 for Windows.[25] Descriptive statistics were calculated based on the overall score for each item. Means were calculated based on available data and provided that no more than 20% of the items had missing data. Continuous variables were summarised using means, standard deviations and medians.
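The missing-data rule for means can be sketched as follows. This is an illustration in Python rather than the SAS code used in the study, and it assumes the rule is applied per respondent, with missing answers represented as `None`; the function name is hypothetical.

```python
def available_item_mean(scores, max_missing_fraction=0.2):
    """Mean of the non-missing scores, or None if too many are missing.

    `scores` is one respondent's list of item scores, with None marking
    a missing answer. The 20% cut-off follows the rule described above;
    applying it per respondent is an assumption of this sketch.
    """
    if not scores:
        return None
    observed = [score for score in scores if score is not None]
    missing = len(scores) - len(observed)
    if not observed or missing > max_missing_fraction * len(scores):
        return None
    return sum(observed) / len(observed)


if __name__ == "__main__":
    print(available_item_mean([4, 5, None, 3, 4]))     # one of five missing
    print(available_item_mean([4, None, None, 3, 4]))  # two of five missing
```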

Factor analysis

The proposed assessment instrument was validated for use in the described context by studying its psychometric characteristics and internal consistency. For reliable factor analysis, the sample size had to exceed 100 to be representative of the general intern population in the province. The sample size achieved for the survey was 415. The required ratio of 5 - 10 observations per item equated to 5.5 observations per item for a 75-item scale.

We investigated the internal structure, especially the construct validity, by applying factor analysis with varimax (orthogonal) rotation to determine the underlying dimensions of the data. The Kaiser-Guttman criterion of eigenvalues >1, the Cattell criterion for accepting factors above the point of flexion on a scree plot, and the proportion of the total variance explained (60%) were used to determine the underlying factors. Factor loadings >0.4 were interpreted. Reliability and internal consistency of the instrument were assessed according to Cronbach's alpha coefficient.
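Two of the three retention criteria are simple counting rules over the eigenvalues of the item correlation matrix, which the following sketch illustrates. It takes the eigenvalues as given (computing them is outside its scope), the function names and example eigenvalues are hypothetical, and the scree-plot criterion is omitted because it relies on visual inspection.

```python
def kaiser_guttman_count(eigenvalues):
    """Number of factors with an eigenvalue greater than 1."""
    return sum(1 for value in eigenvalues if value > 1.0)


def factors_for_variance(eigenvalues, target=0.60):
    """Smallest number of factors whose cumulative share of the total
    variance reaches the target proportion."""
    total = sum(eigenvalues)
    if total <= 0:
        raise ValueError("eigenvalues must sum to a positive number")
    cumulative = 0.0
    ordered = sorted(eigenvalues, reverse=True)
    for count, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= target:
            return count
    return len(ordered)


# Hypothetical eigenvalues for a six-item scale (not data from the study).
example_eigenvalues = [3.0, 1.5, 1.2, 0.9, 0.8, 0.6]

if __name__ == "__main__":
    print(kaiser_guttman_count(example_eigenvalues))
    print(factors_for_variance(example_eigenvalues))
```

The criteria need not agree with one another, which is why the number of factors suggested by each is reported separately in the results.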

Ethical considerations

Ethical approval for the study was obtained from the University of KwaZulu-Natal Biomedical Research Ethics Committee (ref. no. BE 177/15). Permission to conduct the study was also obtained from the various participating hospitals and the Health Research and Knowledge Management subcomponent of the KwaZulu-Natal Department of Health. Written informed consent was obtained from all participants in the respective steps of the study.

Results

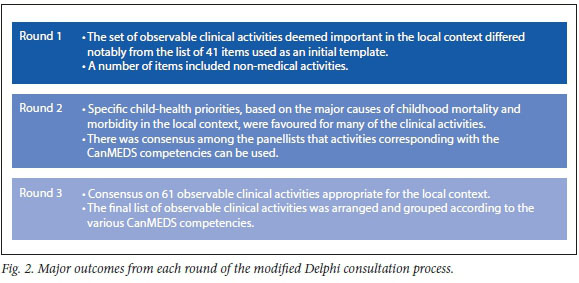

Modified Delphi consultation

Of the 15 intern supervisors invited to participate in the Delphi process, 11 contributed to all three rounds of the consultation. These participants represented the sampled hospitals in the Durban (n=6) and Pietermaritzburg (n=5) regions, respectively. A period of four weeks was allowed for each round of consultations. Fig. 2 summarises the findings from each round of this process.

Analysis of content validity

A focus group consisting of content experts participated in the content validity process. All 75 observable clinical activities identified in Step 1 (http://www.sajch.org.za/public/files/1764.pdf) were associated with a CVI value >0.9, with most deemed relevant or very relevant to be achieved in a paediatric internship in SA. Some changes to wording and categorisation were suggested by the focus group, which were incorporated in the final instrument to be used in the subsequent survey.

Survey results and psychometric analysis

A total of 415 interns across seven regional hospitals participated in the survey. The interns were at various stages of their two-year internship programme, with 36.3% of the participants being in their first year and 63.7% in their second year. The mean (standard deviation) age of the interns was 25.4 (2.1) years (range 20 - 37). The participants were predominantly female (59.6%). Interns who participated graduated from various medical schools either in or outside SA. Fig. 3 illustrates the distribution of the undergraduate medical schools attended by the surveyed interns.

An initial factor analysis suggested items to be related to 14 factors according to the Kaiser-Guttman criterion, or 6 factors according to the scree plot; 13 factors were identified to explain 60% of variance. Only items with a loading >0.5 were accepted, which resulted in eight items being removed before the factor analysis was rerun. Four factors emerged from the scree plot following this analysis; six items in this analysis had a loading <0.5. Removing these items yielded a list of 61 items for the final round of factor analysis, from which ten factors emerged according to both the Kaiser-Guttman criterion and the explanation of variance. Four factors emerged from the analysis of the scree plot (see http://www.sajch.org.za/public/files/1764.pdf for the results of the full factor analysis process and final factor solution).
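The item-pruning step between rounds can be sketched as below. The sketch assumes that an item's "loading" refers to its largest absolute loading on any factor, which the article does not state explicitly; the function name and example loadings are hypothetical, while the item counts are those reported above.

```python
def split_by_loading(loadings, threshold=0.5):
    """Separate items into kept and removed by their strongest loading.

    `loadings` maps an item name to its loadings on each factor. An item
    is kept when its largest absolute loading exceeds the threshold
    (an assumption of this sketch).
    """
    kept, removed = {}, []
    for item, row in loadings.items():
        if max(abs(value) for value in row) > threshold:
            kept[item] = row
        else:
            removed.append(item)
    return kept, removed


# Hypothetical loadings on two factors (not data from the study).
example_loadings = {
    "item A": [0.72, 0.10],
    "item B": [0.31, -0.64],
    "item C": [0.42, 0.38],
}

# Item counts reported in the text: 75 items, then 8 and 6 removed.
items_after_pruning = 75 - 8 - 6

if __name__ == "__main__":
    kept, removed = split_by_loading(example_loadings)
    print(sorted(kept), removed, items_after_pruning)
```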

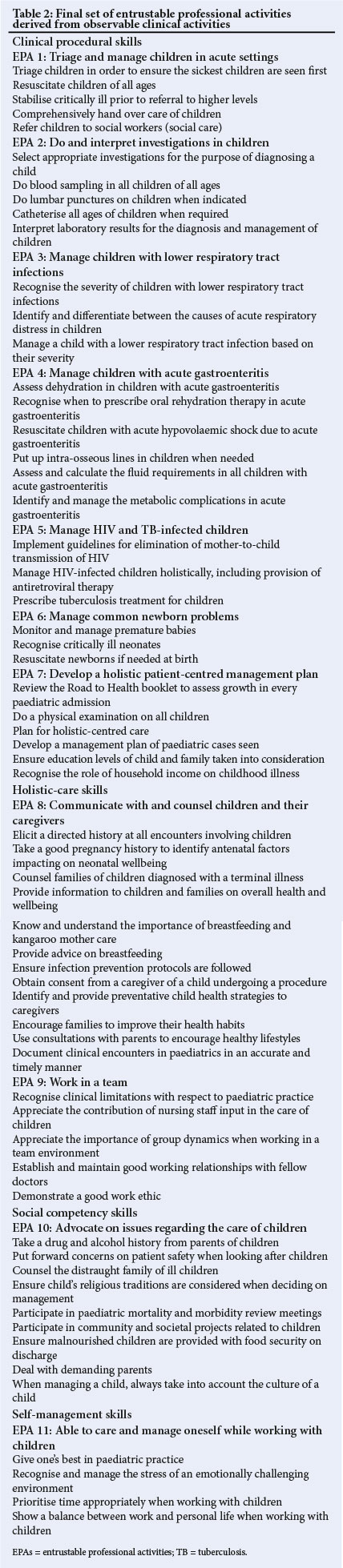

Two factors that emerged from the analysis of the scree plot corresponded to constructs relating to skills that focus on performing clinical procedures (procedural clinical skills) and providing holistic care (holistic-care skills), respectively. The remaining two factors corresponded to constructs and activities relating to emotional skills. The items to assess emotional intelligence skills were grouped according to being focused either on the patient or the environment of the patient (social competency skills) and skills related to the clinician's self-care while caring for paediatric patients (self-management skills).

Internal consistency

Internal consistency for the overall instrument was found to be high, as shown by a Cronbach's alpha coefficient of 0.927. The final list of 61 observable clinical activities was collated from the final round of factor analysis and the items were organised into EPAs based on the framework of Ten Cate et al.[13] to form the final instrument (Table 2). The instrument is organised according to the four major internal constructs revealed by the factor analysis.
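For readers unfamiliar with the coefficient, Cronbach's alpha for a k-item scale is k/(k-1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The sketch below implements this standard formula with the Python standard library; it is not the SAS procedure used in the study, and the example responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for complete responses.

    `responses` is a list of respondents, each a list of k item scores.
    Uses sample variances throughout; missing values are not handled.
    """
    if len(responses) < 2:
        raise ValueError("at least two respondents are required")
    k = len(responses[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer all items")
    item_variances = [
        variance([row[i] for row in responses]) for i in range(k)
    ]
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical five-point self-ratings from four respondents on two items.
example_responses = [[1, 2], [2, 1], [3, 3], [4, 4]]

if __name__ == "__main__":
    print(round(cronbach_alpha(example_responses), 3))
```

Values close to 1 indicate that the items vary together across respondents, which is the sense in which the reported coefficient of 0.927 is described as high.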

Discussion

A validated instrument was developed to assess interns' competencies when working in paediatrics in a setting with a high disease burden. Local expertise was used to identify and define measurable clinical activities relevant to the SA context, as clinical activities used by clinical personnel for entrustment decisions have been shown to indicate the safe transfer of responsibility[12] and can form the basis of certification for practice. The instrument developed in this study points to a paradigm shift in framing the assessment of interns for independent practice in the SA context.

The findings are in line with three fundamental principles, namely: (i) the need for assessment instruments that are responsive to prioritised health needs in the local context;[26] (ii) the need for a formalised competency-based framework that forms the basis of assessment;[27] and (iii) the importance of a holistic approach to assessment of multiple competencies, including those associated with the development of emotional skills.[28,29] The finding that health professionals need to be responsive to current societal needs was identified as a major driver for determining assessment criteria during the design of this study.

Observable clinical activities that relate to the major health challenges facing children predominated among the core assessment criteria. Such contextual responsiveness reflects a patient-centred and responsive healthcare system.[26] The expert panel's selection of clinical activities for assessment reflected the major paediatric health challenges in SA, namely: acute emergency care of sick children with acute gastroenteritis; lower respiratory tract infections; HIV; and tuberculosis. There was also a clear mandate to ensure that generic skills in all competencies were assessed holistically.

Resistance to competency-based education due to a perceived additional bureaucratic burden associated with assessing vague concepts or behaviours has been documented.[30] Implementation of competency-based frameworks can thus be problematic in resource-poor contexts where supervision during the internship period is deemed inadequate.[21] In the present study, experts reaffirmed the need to assess interns across multiple competencies, and the need for a framework to house these 'granular' observable clinical activities emerged. The use of the CanMEDS framework for teaching, learning and assessment, both in the undergraduate and the postgraduate sectors in SA, possibly influenced this trend among intern supervisors. (The CanMEDS framework encompasses multiple competencies and was adopted by the Royal College of Physicians and Surgeons of Canada in 1996.) Such a framework might be well accepted in an internship programme as most interns' supervisors have experience using such an approach in undergraduate training programmes. The recognition that multiple facets require assessment during the clinical experience is gratifying: it reflects acceptance and understanding of competency-based education in the SA context and the increasing need for a more holistic approach to assessment. Using EPAs to put into practice an assessment framework that does not require excessive resources helps to demystify competency-based education. The use of locally constructed EPAs for focused assessment allows for a more integrated, relevant and holistic evaluation.[13]

Although the need to respond to priority health challenges in paediatrics was noted, the study also revealed the need to assess interns' abilities to develop skills beyond priority diseases. The major constructs determined in the final factor analysis include both clinical procedural and holistic-care skills deemed fundamental in providing quality healthcare. These holistic-care skills include clear communication, accurate documentation and interprofessional collaboration when managing paediatric patients. The finding not only reflects the increased complexity of modern medical care but also responds to the need for greater accountability and entrustment in the context of increasing concerns for patient safety.[31]

The emergence of constructs related to activities associated with the development of emotional skills is a notable finding of the final factor analysis. These are viewed as skills related to social competency and self-management, and reflect a growing trend in medical education to respond to and develop an awareness of emotions.[32] Leadership, advocacy and teamwork are notoriously difficult to assess in the context of acute clinical care in a busy hospital setting, despite being deemed critically important. Identifying concrete activities that encompass these non-clinical competencies therefore contributes to holistic assessment and adds to the strength of the instrument developed here.

The focus on time management and professional and ethical standards related to clinical care reflects a holistic assessment paradigm by including the assessment of self-management skills. The ability to manage stress and balance personal and work life has generally not been included in EPAs, as their measurement remains poorly defined.[13] These were identified as important skills that need to be assessed, which reflects concerns associated with increasing rates of burnout, depersonalisation and poor accountability reported among interns and other medical practitioners.[33] Our finding that emotional skills (variously defined as emotional intelligence) emerged as a major construct reflects a growing trend to focus on developing this aspect of education in health professions.[32] Although clear definitions of what constitutes emotional intelligence are lacking and the debate on whether it should be positioned as a trait or an ability is still confounding, our findings emphasise the importance of these skills and their value in making important entrustment decisions in the assessment of interns.[32,34]

Study limitations

The participants in steps 1 and 2 of the study (modified Delphi process and content validation) were drawn from one province and affiliated with a single university milieu. This may point to a potential bias in opinion and may not reflect experiences elsewhere in the country. The considerable HIV and poverty burden specific to this study setting could cloud decisions around healthcare priorities, which may not transfer to other sites where interns receive their training. Furthermore, this study focused specifically on paediatric clinical activities at a specific time, and it should be kept in mind that WBA needs constant refreshing and review to be relevant to changing needs.

Conclusion

Locally relevant and responsive assessment instruments can be developed with a systematic approach in contexts with a high disease burden. The use of EPAs allows for competency-based assessment frameworks to be put into practice in clinical environments. A locally developed instrument to assess interns in hospitals in KwaZulu-Natal, which represents a setting with a high burden of childhood disease, proved reliable in this context. Multiple competencies were assessed, which clustered into four major skill categories required of interns working in busy clinical contexts. The instrument emphasised clinical skills related to procedural activities, generic holistic care and emotional skills needed to ensure the development of self-management and social competence for junior doctors.

Structured, robust and holistic assessment of both clinical and emotional skills is well overdue, and this study provides an example of such an approach and one that considers WBA principles relevant to the clinical context. Although the study focused on the skills pertaining to paediatrics and had a limited geographical reach, it offers a template that could also be used in other disciplines. Further studies in other disciplines that use observable clinical activities that can be aggregated into EPAs are required. The observable clinical activities identified in this study provide a departure point for formalising these daily clinical activities as entry-level descriptors for WBA and the process outlined for developing the instrument offers a structure for formal assessment of agreed-upon milestones. Accreditation bodies such as the HPCSA may find these validated processes valuable templates for revising assessment systems of interns.

Declaration. None.

Acknowledgements. The data sets used and analysed during the current study are available from the corresponding author on reasonable request. The authors wish to thank the participants in the study, as well as Dr Petra Gaylard for assistance with data management and statistical analysis.

Author contributions. KLN was responsible for the study design, data collection and analysis, and drafting the manuscript. JMvW supervised the study and contributed to study design and manuscript review.

Funding. This study was conducted with funding from the Medical Education Partnership Initiative, under grant number R24TW008863, from the Office of the US Global AIDS Coordinator and the US Department of Health and Human Services, National Institutes of Health (NIH OAR and NIH ORWH). The contents of the study are the sole responsibility of the authors and do not necessarily represent the official views of the SA government.

Conflicts of interest. None.

References

1. Van Rensburg HCJ. South Africa's protracted struggle for equal distribution and equitable access - still not there. Hum Resour Health 2014;12(1):26. https://doi.org/10.1186/1478-4491-12-26

2. Bola S, Trollip E, Parkinson F. The state of South African internships: A national survey against HPCSA guidelines. S Afr Med J 2015;105(7):535-539. https://doi.org/10.7196/SAMJnew.7923

3. Coovadia H, Jewkes R, Barron P, Sanders D, McIntyre D. The health and health system of South Africa: Historical roots of current public health challenges. Lancet 2009;374(9692):817-834. https://doi.org/10.1016/s0140-6736(09)60951-x

4. Mayosi BM, Flisher AJ, Lalloo UG, Sitas F, Tollman SM, Bradshaw D. The burden of non-communicable diseases in South Africa. Lancet 2009;374(9693):934-947. https://doi.org/10.1016/s0140-6736(09)61087-4

5. Naidoo K, Van Wyk J, Adhikari M. Comparing international and South African work-based assessment of medical interns' practice. Afr J Health Professions Educ 2018;10(1):44-49. https://doi.org/10.7196/AJHPE.2018.v10i1.9556

6. Bateman C. System burning out our doctors - study. S Afr Med J 2012;102(7):593-594. https://doi.org/10.7196/samj.6040

7. Rispel L. Analysing the progress and fault lines of health sector transformation in South Africa. S Afr Health Rev 2016;2016(1):17-23.

8. Naidoo K, Van Wyk J, Adhikari M. Impact of the learning environment on career intentions of paediatric interns. S Afr Med J 2017;107(11):987-993. https://doi.org/10.7196/samj.2017.v107i11.12589

9. Burch V, McKinley D, Van Wyk J, et al. Career intentions of medical students trained in six sub-Saharan African countries. Educ Health 2011;24(3):614.

10. Frank JR, Snell LS, Ten Cate O, et al. Competency-based medical education: Theory to practice. Med Teach 2010;32(8):638-645. https://doi.org/10.3109/0142159x.2010.501190

11. Ten Cate O, Scheele F. Competency-based postgraduate training: Can we bridge the gap between theory and clinical practice? Acad Med 2007;82(6):542-547. https://doi.org/10.1097/acm.0b013e31805559c7

12. Hamui-Sutton A, Monterrosas-Rojas AM, Ortiz-Montalvo A, et al. Specific entrustable professional activities for undergraduate medical internships: A method compatible with the academic curriculum. BMC Med Educ 2017;17(1):143. https://doi.org/10.1186/s12909-017-0980-6

13. Ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, Van der Schaaf M. Curriculum development for the workplace using entrustable professional activities (EPAs): AMEE Guide no. 99. Med Teach 2015;37(11):983-1002. https://doi.org/10.3109/0142159x.2015.1060308

14. Warm EJ, Mathis BR, Held JD, et al. Entrustment and mapping of observable practice activities for resident assessment. J Gen Intern Med 2014;29(8):1177-1182. https://doi.org/10.1007/s11606-014-2801-5

15. Ten Cate O, Snell L, Carraccio C. Medical competence: The interplay between individual ability and the health care environment. Med Teach 2010;32(8):669-675. https://doi.org/10.3109/0142159x.2010.500897

16. Tutarel O. Geographical distribution of publications in the field of medical education. BMC Med Educ 2002;2(1):1-7. https://doi.org/10.1186/1472-6920-2-3

17. Scott J, Revera Morales D, McRitchie A, Riviello R, Smink D, Yule S. Nontechnical skills and health care provision in low- and middle-income countries: A systematic review. Med Educ 2016;50(4):441-455. https://doi.org/10.1111/medu.12939

18. Chopra M, Daviaud E, Pattinson R, Fonn S, Lawn JE. Saving the lives of South Africa's mothers, babies, and children: Can the health system deliver? Lancet 2009;374:835-846. https://doi.org/10.1016/s0140-6736(09)61123-5

19. Pattinson R. Saving Babies 2010-2011 Eighth report on perinatal care in South Africa. Pretoria: Tshepesa Press, 2013.

20. Wisman-Zwarter N, Van der Schaaf M, Ten Cate O, Jonker G, Van Klei WA, Hoff RG. Transforming the learning outcomes of anaesthesiology training into entrustable professional activities: A Delphi study. Eur J Anaesthesiol 2016;33(8):559-567. https://doi.org/10.1097/eja.0000000000000474

21. Ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ 2013;5(1):157-158. https://doi.org/10.4300/JGME-D-12-00380.1

22. Hill J, Rolfe IE, Pearson S-A, Heathcote A. Do junior doctors feel they are prepared for hospital practice? A study of graduates from traditional and non-traditional medical schools. Med Educ 1998;32:19-24. https://doi.org/10.1046/j.1365-2923.1998.00152.x

23. Davis LL. Instrument review: Getting the most from a panel of experts. Applied Nurs Res 1992;5(4):194-197. https://doi.org/10.1016/S0897-1897(05)80008-4

24. Polit DF, Beck CT. The content validity index: Are you sure you know what's being reported? Critique and recommendations. Res Nurs Health 2006;29(5):489-497. https://doi.org/10.1002/nur.20147

25. Hair JF, Anderson RE, Babin BJ, Black WC. Multivariate data analysis: A global perspective. 7th ed. Upper Saddle River: Pearson, 2010.

26. Frenk J, Chen L, Bhutta ZA, et al. Health professionals for a new century: Transforming education to strengthen health systems in an interdependent world. Lancet 2010;376:1923-1958. https://doi.org/10.1016/s0140-6736(10)61854-5

27. Ross S, Preston R, Lindemann IC, et al. The training for health equity network evaluation framework: A pilot study at five health professional schools. Educ Health 2014;27(2):116-126. https://doi.org/10.4103/1357-6283.143727

28. Boelen C. Coordinating medical education and health care systems: The power of the social accountability approach. Med Educ 2018;52(1):96-102. https://doi.org/10.1111/medu.13394

29. Asumeng M. Managerial competency models: A critical review and proposed holistic-domain model. J Manage Res 2014;6(4):1-21. https://doi.org/10.5296/JMR.V6I4.5596

30. Malone K, Supri S. A critical time for medical education: The perils of competence-based reform of the curriculum. Adv Health Sci Educ Theory Pract 2012;17(2):241-246. https://doi.org/10.1007/s10459-010-9247-2

31. Fletcher KE, Davis SQ, Underwood W, Mangrulkar RS, McMahon Jr LF, Saint S. Systematic review: Effects of resident work hours on patient safety. Ann Intern Med 2004;141(11):851-857. https://doi.org/10.7326/0003-4819-141-11-200412070-00009

32. Cherry MG, Fletcher I, O'Sullivan H, Dornan T. Emotional intelligence in medical education: A critical review. Med Educ 2014;48(5):468-478. https://doi.org/10.1111/medu.12406

33. Rossouw L, Seedat S, Emsley R, Suliman S, Hagemeister D. The prevalence of burnout and depression in medical doctors working in the Cape Town Metropolitan Municipality community healthcare clinics and district hospitals of the Provincial Government of the Western Cape: A cross-sectional study. S Afr Fam Pract 2013;55(6):567-573. https://doi.org/10.1080/20786204.2013.10874418

34. Arora S, Ashrafian H, Davis R, Athanasiou T, Darzi A, Sevdalis N. Emotional intelligence in medicine: A systematic review through the context of the ACGME competencies. Med Educ 2010;44(8):749-764. https://doi.org/10.1111/j.1365-2923.2010.03709.x

Correspondence:

K L Naidoo

naidook9@ukzn.ac.za

Accepted 7 December 2020