SAIEE Africa Research Journal

On-line version ISSN 1991-1696

Print version ISSN 0038-2221

SAIEE ARJ vol.109 n.1 Observatory, Johannesburg Mar. 2018

Enhanced congestion management for minimizing network performance degradation in OBS networks

B. Nleya (I); A. Mutsvangwa (II)

(I) Department of Electronic Engineering, Steve Biko Campus, Durban University of Technology, Durban 4001, South Africa. E-mail: bmnleya@ieee.org

(II) Faculty of Education, Mafikeng Campus, North West University, Mmabatho, 2735, South Africa. E-mail: andrew.mutsvangwa@nwu.ac.za

ABSTRACT

Current global data traffic is increasingly dominated by delay- and loss-intolerant IP traffic, which generally displays structural self-similarity. This has necessitated the introduction of optical burst switching (OBS) as a supporting switching technology for optical backbone networks. Because OBS networks are buffer-less, contention and congestion in the core network can quickly degrade overall network performance at moderate to high traffic levels through heavy burst losses. Several approaches have been explored to address this problem, notably measures that minimize burstification delays, congestion and blocking while enhancing end-to-end throughput and promoting rational and fair utilization of the links. The aim is to achieve a consistent quality of service (QoS). Noting that congestion minimization is key to consistent QoS provisioning, in this paper we propose a congestion management approach called enhanced congestion management (ECM) that seeks to guarantee a consistent QoS as well as rational and fair use of available links. It is primarily a service-differentiation-based scheme that aims at minimizing congestion, blocking and latency by combining time-averaged-delay segmented burstification with deflection routing and wavelength assignment based on random shortest path selection. Simulation results show that ECM can effectively minimise congestion and at the same time improve both throughput and effective utilization under moderate to high network traffic conditions. Overall, we show that the approach guarantees a consistent QoS.

Keywords: Optical burst switching (OBS), service differentiation, streamline effect (SLE), effective utilization, ineffective utilization, segmentation, random shortest path selection

1 INTRODUCTION

Optical networks based on dense wavelength-division multiplexing (DWDM) technology have enabled increased data transmission capacities to terabits per second ranges. End users exchange data with one another through end to end all-optical channels, called lightpaths in which wavelength continuity is maintained.

The ever-surging global traffic levels brought about the need for a switching paradigm that would match both DWDM transmission speeds and the desired QoS demands. Traditional switching approaches have not kept pace with transmission speeds, hence the current mismatch between switching and DWDM transmission speeds, with the former causing a serious bottleneck [1]. Of the three primary candidates, optical packet switching (OPS), optical circuit switching (OCS) and optical burst switching (OBS), the last has emerged as the more promising solution because of its relative advantages over the other two. These include high bandwidth utilisation, moderate set-up latencies and processing complexity, traffic adaptiveness, and no requirement for optical buffering in the core network nodes [2].

The fundamental architecture of a communications network infrastructure supported by an OBS backbone is shown in Figure 1. It primarily comprises edge and core nodes interconnected via DWDM optical fibre links. It interconnects residential access networks, home networks (wired and wireless), metro core/optical access rings, data centers and microgrids, all of which connect directly to its edge nodes [1], [2]. The functions of edge and core nodes are summarized in Figure 2. The edge (ingress) node aggregates data tapped from the access networks and destined for a common edge (egress) node into large optical packets generally referred to as data bursts.

Once a data burst has been assembled, an associated burst control packet (BCP) is generated, transmitted along a dedicated control channel and delivered a carefully set, small relative offset time ahead of the data burst's own arrival. The offset time allows for electronic processing of the BCP at a subsequent core node, so that a wavelength can be reserved on that node's output port/link and the switching fabric reconfigured accordingly [1]. The burst thus cuts through this node shortly afterwards, and immediately after that the reserved wavelength is released and made available for new connection demands. This removes the need for optical buffering at core nodes, which would otherwise complicate network design and escalate both operational costs and end-to-end delays. In addition, this temporary usage of wavelengths promotes enhanced resource utilization as well as improved adaptation to highly variable source traffic [4]. Proper routing and wavelength assignment (RWA) at the edge nodes reduces the chances of data burst contention at core nodes. A limited set of wavelength converters (WCs) and fiber delay lines (FDLs) will normally be provisioned in core nodes to help resolve contention. Upon reaching the destination edge node, the burst is disassembled into packets, which are then routed to their final destinations [5].

In practice, deploying an OBS backbone network in a cost-effective manner remains a challenge. The RWA problem entails finding a physical route for each lightpath demand, assigning a wavelength to each route and ensuring end-to-end wavelength continuity, subject to a set of constraints. The wavelengths must be assigned such that no two lightpaths that simultaneously share a fibre link use the same wavelength.
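
The wavelength-clash and continuity constraints can be illustrated with a minimal sketch. It assumes a first-fit assignment rule, which is a common heuristic chosen here only for illustration and is not the assignment rule of this paper; the names `first_fit_wavelength`, `establish` and the link labels are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

Link = Tuple[str, str]

def first_fit_wavelength(route: List[Link],
                         usage: Dict[Link, Set[int]],
                         num_wavelengths: int) -> Optional[int]:
    """Return the lowest wavelength index that is free on every link of the
    route (wavelength continuity), or None if no such wavelength exists."""
    for w in range(num_wavelengths):
        if all(w not in usage.get(link, set()) for link in route):
            return w
    return None

def establish(route: List[Link],
              usage: Dict[Link, Set[int]],
              num_wavelengths: int) -> Optional[int]:
    """Reserve a wavelength on all links of the route, if one is available.
    Two lightpaths sharing a link can then never hold the same wavelength."""
    w = first_fit_wavelength(route, usage, num_wavelengths)
    if w is not None:
        for link in route:
            usage.setdefault(link, set()).add(w)
    return w
```

With two wavelengths per link, a lightpath over A-B and B-C takes wavelength 0, a second lightpath over B-C and C-D is pushed to wavelength 1, and a further request over B-C is blocked.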

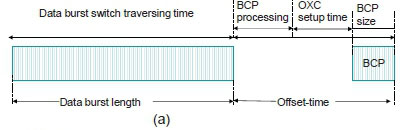

Core nodes do not directly interface with access networks. As shown in Figure 3(b) a core node comprises functional elements such as: a set of input/output ports, an optical cross-connect (OXC), a BCP processor, WCs, and FDLs [1]. Input ports extract BCPs and forward them to optical-to-electrical (O/E) converters before electronic processing by a BCP processor. The offset timing between a data burst and a BCP is shown in Figure 3(a).

In practical implementations, core nodes (Figure 3(b)) are not provisioned with buffering capabilities and thus cannot store data bursts in the optical domain. As a consequence, some data bursts are discarded whenever contention occurs among multiple bursts that arrive simultaneously at such a node on the same wavelength and are intended for the same output port.

The resulting contention losses may occur frequently, but are not necessarily an indicator of congestion or its onset [2]. The well-known performance metrics of traditional packet-switched networks, such as throughput, mean queue length, burst loss probability, link utilization, fairness among different traffic types and end-to-end delay, are not necessarily all applicable to OBS networks as indicators of congestion onset. For example, packet losses may result either from the inevitable random burst contention in core nodes or from network congestion at the periphery, within the access networks.

It is therefore emphasized that network congestion differs from random burst contention in that it causes high resource utilization states for relatively long periods, during which round-trip times (RTTs) are relatively high; RTTs reduce drastically in the low-utilization state [6]. This is attributed to longer queuing delays within the access sections of the network during high utilization, as well as to delays caused by contention burst losses that necessitate burst retransmissions [6]. Note that high utilization within the backbone network increases contention probabilities as well as the burst retransmissions and deflections associated with contention resolution, and this can ultimately lead to network congestion. Overall, minimizing contention (losses) is therefore key to minimizing congestion [6], [7].

1.1. Contribution of this paper

Building on previous studies, in this work we integrate various schemes geared towards improving performance by minimizing congestion or its occurrence, so as to guarantee a consistent QoS in both light and heavy traffic conditions. Overall burst loss probability (BLP) and end-to-end delay are the primary performance metrics of interest; they adequately represent the congestion state of the entire network and at the same time dictate the renderable QoS. Provisioning QoS consistently and fairly for diverse applications with varying handling demands remains a problematic task. The paper therefore introduces the ECM approach. We carry out a comparative performance evaluation that considers fairness and efficiency trade-offs, in the spirit of providing a consistent QoS to all applications irrespective of class.

1.2. Organization of this paper

The remainder of the paper is organized as follows. Studies related to congestion and its management in OBS networks are briefly reviewed in the next section. The proposed approach is described in section 3, which also discusses key performance measures such as blocking probabilities, utilization, throughput, link loads, link congestion level and effective utilization. Section 4 presents the experimental setup, and section 5 discusses the results. Section 6 concludes the paper.

2 RELATED WORK

In general, congestion can be caused by unpredictable statistical fluctuations of traffic flows or by fault conditions within the network. In an OBS backbone network, congestion can occur at link level (more traffic than the assigned wavelength(s) can handle), at wavelength level (non-availability of a wavelength, hence continuity failure), at switch level (e.g. insufficient WCs or FDLs), and at BCP processor level (i.e. the processor being unable to process all incoming BCPs in real time). Contention increases BLPs and thus triggers re-transmissions. Thus, as emphasised in section 1, minimizing contention (losses) is key to minimizing congestion.

Generally, contentions are resolved by one or more contention resolution techniques such as optical buffering, deflection routing, wavelength conversion and segment discarding. The magnitude of the contention losses in a given network depends on factors such as the number of nodes, path length, traffic matrix, and route and wavelength selection criteria [11]. Because shortest paths are generally preferred, losses, bottlenecks and congestion are likely to be more pronounced on them than on longer ones [11]. Identifying a bottleneck link a priori helps in dynamically changing the route, or at least in exerting some control on the burst generation rate at edge nodes, so as to avoid exacerbating existing congestion along those routes. Various path-wise congestion notification mechanisms have been suggested in the literature. These normally measure the burst loss rate or peak loads at the nodes preceding the affected paths and propagate the information to the edge nodes. Such proactive congestion control mechanisms immediately isolate the affected links or paths. A drawback of this feedback approach is that core nodes are designed exclusively to schedule and forward data bursts to the next hop along the path to the egress node [1]. Thus, communicating in the reverse direction, i.e. generating feedback packets that carry the measured peak load or BLP to the edge nodes, is not supported. Furthermore, acquiring per-flow burst loss rates at the core nodes and propagating the information results in additional management overhead, which can worsen both contention and congestion. Hence, estimating the loss rate at the interior nodes using only end-to-end measurements between edge node pairs would be a better approach, as it would result in less overhead. In addition, since the traffic is typically bursty, this critical information may be outdated by the time it reaches the targeted edge node; ideally, therefore, the estimation of losses should use measurements acquired at the edge nodes.

In [11], the authors propose measuring the burst loss rate on a per-path basis and reducing traffic accordingly, only on affected paths, so as to avoid losses. This per-path traffic shaping and flow control, exerted at the edge nodes, often leads to underutilization of the paths other than the congested one. Some mechanisms adjust the burst assembly time, the burst length, or both, so as to reduce the overall burst sending rate along the path. In that way the traffic load along the targeted paths is lowered, and with it the risk of both congestion and contention.

A network tomography based approach [9] has also been considered. Its basis is that any path-level parameter is estimated from a measurement of the parameter along various points in the network since there exists a correlation in the losses experienced along different paths passing through a given link. All the paths between different source-destination pairs passing through a link perceive loss on a link in the same way. By collecting the measurements of the parameter in question at different destinations, the link-level parameters are estimated with acceptable accuracy.

Network implementation of carefully designed routing and wavelength assignment algorithms will drastically reduce burst losses, enhance link utilization and promote acceptable fairness to all traffic types. This is likely to improve the chances of a guaranteed consistent QoS. Several studies have focused on end-to-end blocking probability as well as utilization in such networks [10]. In [11], utilisation of the links is studied in detail, and the concepts of effective and ineffective utilization are used as key performance measures for OBS backbone networks. Effective utilisation relates to channels used by bursts that eventually reach their destinations, whereas ineffective utilization relates to channels used by bursts that are discarded before reaching their ultimate destinations. Reducing the latter improves both the performance and the efficiency of the network. Ineffective utilization is particularly pronounced in periods of heavy traffic load. It is further shown in [11] that under heavy traffic load conditions, the effective utilization and network throughput (y) consequently suffer congestion collapse. In this case, the total network utilization tends to be quite high even though ineffective utilization dominates, i.e. most of the utilized capacity is used by bursts that are eventually discarded before reaching the intended destinations.

Likewise, in [12], the authors seek to concurrently reduce burst loss probability and ineffective utilisation by implementing algorithms that reduce the likelihood of both contention and congestion for bursts already in the core network. In this way the overall network throughput improves and consequently QoS is better guaranteed. In the event of contention, selective re-routing of both low and high priority segments of the contending burst on alternate shortest path routes is carried out.

3 MATERIALS AND METHODS

In this section, the ECM congestion and contention resolution approach is described. The approach couples a wavelength reservation algorithm of low computational cost, dual-priority burst segmentation, and the Least Remaining Hop-count First (LRHF) scheme described in [12]. It will be demonstrated that, compared with conventional OBS approaches, it significantly lowers both contention and congestion and reduces ineffective utilization, consequently improving QoS guarantees under moderate to heavy network traffic loads.

With this approach, segments of bursts with fewer remaining hops have higher priority, so they pre-empt overlapping segments of contending bursts, and the pre-empted segments are deflected to alternate routes. Segments are discarded only when alternate routes are unavailable. Once in the core network, the pre-emption or total discarding of overlapping segments is thus determined by the least remaining hops. Precaution is taken to limit pre-emption of lower priority segments (according to the least remaining hops), so as to protect long-distance traffic traversing the same network. We evaluate the performance of the approach and compare the results with those of conventional OBS approaches.

The improvement is achieved by minimising congestion, or its frequency of occurrence, in the core network. In the OBS domain, congestion occurs both at edge nodes (edge congestion) and in the core nodes (path congestion). Edge congestion is likely to occur when the packet arrival rate is unexpectedly so high that the edge node's limited buffering cannot cope with the burstification process. Similarly, an excessive arrival rate of data bursts for disassembly may lead to edge congestion. Path congestion is caused by contention, traffic overload and RWA.

3.1. Key Model Features

The key design and operational features of the ECM approach are summarily outlined as follows: At the edge nodes, segmented burst assembling is assumed [13], the fundamental concept being to initially assemble data segments using traffic from multiple traffic classes with different priorities. Segments with high priority are placed towards the head end of each burst and those with relatively low priorities tail it. Selective deflections of sections of the composite segmented burst in case of contention can be carried out, with the segments at the tail-end more likely to be dropped than those at the head-end.

Figure 4 illustrates the burstification process, in which several delay-sensitive high priority (HP) and delay-insensitive low priority (LP) sources are served at an edge node.

The first stage of the burstification creates equal-sized, class-based data segments for each traffic class. Three types of burst assembly algorithm were explored: length (Lmax), time (Tmax) [1], and time-averaged (Tav) [3] based algorithms. The overall burst assembly process model is both time and size constrained. The HP segments may not be delayed beyond Tav. The assembled segmented burst should itself be of acceptable length, Lmin < L(t) < Lmax, before it is placed for scheduling.

However, if the time constraint (Tav) has expired and the burst size is still less than Lmin, an LP segment block of minimum size Lmin is appended. A void between the two segment classes allows for separation in the core network should contention or congestion be experienced.
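
The release and padding rules described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: sizes are in arbitrary units, the function names `should_release` and `close_burst` are hypothetical, and the padding rule follows a literal reading of the text (an LP block of size Lmin is appended when the timer expires on an undersized burst).

```python
from typing import Dict, List

def should_release(total_size: float, elapsed: float,
                   l_max: float, t_av: float) -> bool:
    """A burst is released when it is full (size constraint) or when the
    time constraint Tav has expired, whichever comes first."""
    return total_size >= l_max or elapsed >= t_av

def close_burst(hp_sizes: List[float], lp_sizes: List[float],
                l_min: float) -> Dict[str, List[float]]:
    """Build the composite burst: HP segments at the head, LP at the tail.
    If the burst is still shorter than Lmin, append an LP block of size Lmin
    (assumed reading of the padding rule)."""
    tail = list(lp_sizes)
    if sum(hp_sizes) + sum(tail) < l_min:
        tail.append(l_min)
    return {"head_hp": list(hp_sizes), "tail_lp": tail}
```

Keeping the HP segments at the head means that a truncation from the tail, as used later for contention resolution, removes LP data first.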

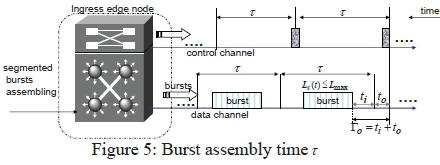

3.2. Channel Reservation and Scheduling

For channel reservation, we assume a one-way reservation protocol called Just-Enough-Time (JET) [13], in which a data burst is transmitted after an offset time To = ti + to seconds. The burst thus lags a control packet that is sent on a separate dedicated wavelength channel to reserve resources along the entire route in advance. The control packet carries two values: the offset time To, indicating the expected burst arrival time, and the actual burst length Ls(t). To comprises two components, a fixed base offset time to and an additional variable offset time ti, as illustrated in Figure 5.

The first component, to, is an allowance for control packet processing along the intended lightpath, whereas the variable offset time ti caters for contention/congestion resolution if encountered along the path. Note that JET uses the offset time information transmitted in each control packet to achieve higher wavelength utilization, owing to its delayed reservation feature.

Furthermore, JET can identify situations where no transmission conflict occurs even though the start time of a new burst is earlier than the finishing time of an already accepted burst. The burst length information carried in the preceding control packet enables close-ended reservation instead of open-ended reservation, which would otherwise require explicit release of the configured resources [13]. Close-ended reservation helps nodes make intelligent decisions about whether another newly arriving burst can be scheduled. The burstification approach described in section 3.1 inevitably generates bursts of variable sizes and offset times. The bursts may not necessarily arrive at all subsequent nodes along the selected path in the same sequence as their associated control packets. For that reason, channel occupancy is expected to become fragmented, with voids of different sizes between data bursts. We therefore opt for a JET-based scheduling algorithm called latest available unused channel with void filling (LAUC-VF), which is designed to use such voids for scheduling newly arriving bursts [13]. As such, it provides relatively better bandwidth utilization and BLPs.
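
The void-filling idea can be sketched as below. This is a simplified illustration, assuming each wavelength channel is represented as a list of close-ended reservations `(start, end)` and that time starts at 0; the function names are hypothetical and the sketch ignores wavelength conversion and FDLs.

```python
from typing import List, Optional, Tuple

Interval = Tuple[float, float]

def latest_free_before(reservations: List[Interval],
                       start: float, end: float) -> Optional[float]:
    """If the burst [start, end) fits on the channel, return the end time of
    the last reservation preceding it (0.0 if none); otherwise return None."""
    prev_end = 0.0
    for a, b in reservations:
        if a < end and start < b:      # overlaps an existing reservation
            return None
        if b <= start:
            prev_end = max(prev_end, b)
    return prev_end

def lauc_vf(channels: List[List[Interval]],
            start: float, end: float) -> Optional[int]:
    """Pick the feasible channel whose preceding reservation ends latest,
    i.e. the one leaving the smallest void before the new burst, and
    reserve it. Returns the channel index, or None if the burst is blocked."""
    best, best_t = None, -1.0
    for idx, reservations in enumerate(channels):
        t = latest_free_before(reservations, start, end)
        if t is not None and t > best_t:
            best, best_t = idx, t
    if best is not None:
        channels[best].append((start, end))
    return best
```

Because reservations are close-ended, a burst can be placed in the void between two existing reservations on the same channel instead of occupying an otherwise idle channel.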

3.3. Random Shortest Path Searching

With this approach, all minimum-hop routes between an edge node and the desired destination edge node are the candidate routes. However, given the numerous links in a typical network, a key challenge is to compute quickly the shortest distance(s) between all pairs of communicating nodes, taking into account metrics such as congestion, wavelength availability and continuity, traffic load, etc. In this section, we refer to these metrics collectively as weights. Thus, the weight of each path can be determined by the number of nodes to be traversed, the current traffic intensity on the path, the blocking probability, etc. The entire web of nodes can be modelled as a weighted directed graph [14]. Our task is to implement a search algorithm that quickly computes the distances between all pairs of vertices (nodes) on that graph (network), with the ultimate goal of finding the shortest path from any given vertex (edge node) to another (destination). Initially, a matrix of all possible distances of a given network graph is drawn up, and thereafter we compute its distance product [15]. The distance product is defined as follows. Let A be an n x m matrix and B be an m x n matrix. Their distance product C = A * B is an n x n matrix such that

$$c_{ij} = \min_{1 \le k \le m} \{ a_{ik} + b_{kj} \}, \qquad 1 \le i, j \le n.$$

To construct shortest paths, the notion of witnesses is used. An n x n matrix V is a witness matrix for the distance product C = A * B provided that for every 1 ≤ i, j ≤ n we have

$$1 \le v_{ij} \le m \quad \text{and} \quad a_{i v_{ij}} + b_{v_{ij} j} = c_{ij}.$$
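
A distance product with witnesses can be computed directly from its usual min-plus definition. The sketch below is a naive cubic-time illustration, assuming the standard definition of the distance product and zero-based indices; it is not the fast matrix-multiplication method of [15], and the function name is hypothetical.

```python
import math
from typing import List, Optional, Tuple

INF = math.inf

def distance_product(A: List[List[float]], B: List[List[float]]
                     ) -> Tuple[List[List[float]], List[List[Optional[int]]]]:
    """Return (C, W) where C[i][j] = min over k of A[i][k] + B[k][j] and
    W[i][j] is an index k attaining that minimum (None if C[i][j] is INF)."""
    n, m, q = len(A), len(B), len(B[0])
    C = [[INF] * q for _ in range(n)]
    W: List[List[Optional[int]]] = [[None] * q for _ in range(n)]
    for i in range(n):
        for j in range(q):
            for k in range(m):
                candidate = A[i][k] + B[k][j]
                if candidate < C[i][j]:
                    C[i][j], W[i][j] = candidate, k
    return C, W
```

The witness entry records which intermediate index produced each minimum, which is what allows a shortest path to be rebuilt hop by hop afterwards.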

We can now use a random shortest path algorithm to compute the shortest path(s) between all pairs of vertices of the directed graph of n vertices in which all edge weights are taken from a set {-M ,...,0,..., M}.

The algorithm receives an n x n matrix E containing the weights (lengths) of the edges of the directed graph (representing the OBS network). The vertex set of the graph is V = {1, 2, ..., n}. The weight of the directed edge from i to j is eij if such an edge exists; otherwise it is +∞. The algorithm initially lets F <- E and then runs log_{3/2} n iterations, setting s <- (3/2)^l in the l-th iteration. A function rand then generates a subset B of V = {1, 2, ..., n} whose elements are each selected independently with a probability p. F[*, B] is the matrix comprising the columns of F that correspond to the vertices of B.

F[B, *] is the matrix comprising the rows of F that correspond to the vertices of B. Finally, the distance product F' of F[*, B] and F[B, *] is compared with F. When an entry in F' is smaller, it is copied to F and its corresponding witness is copied to V. The search algorithm is summarized as follows [14]:
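
The iteration described above can be sketched as follows. This is a hedged illustration rather than the listing of [14]: it tracks distances only (witnesses are omitted), uses a naive min-plus product, uses zero-based vertex indices, and assumes a sampling probability p = min(1, 9 ln n / s), which the text does not specify. The names `min_plus` and `rand_short_path` are hypothetical.

```python
import math
import random
from typing import List, Optional

INF = math.inf

def min_plus(A: List[List[float]], B: List[List[float]]) -> List[List[float]]:
    """Naive distance (min-plus) product of an n x m and an m x q matrix."""
    return [[min((A[i][k] + B[k][j] for k in range(len(B))), default=INF)
             for j in range(len(B[0]))] for i in range(len(A))]

def rand_short_path(E: List[List[float]],
                    rng: Optional[random.Random] = None) -> List[List[float]]:
    """Randomised all-pairs distances on a weighted directed graph given by
    the n x n matrix E (INF where no edge exists, 0 on the diagonal)."""
    rng = rng or random.Random(0)
    n = len(E)
    F = [row[:] for row in E]
    iterations = math.ceil(math.log(n) / math.log(1.5)) if n > 1 else 0
    for l in range(1, iterations + 1):
        s = 1.5 ** l
        p = min(1.0, 9.0 * math.log(n) / s)        # assumed sampling probability
        B = [v for v in range(n) if rng.random() < p]
        if not B:
            continue
        cols = [[F[i][b] for b in B] for i in range(n)]   # F[*, B]
        rows = [F[b][:] for b in B]                       # F[B, *]
        F_new = min_plus(cols, rows)
        for i in range(n):
            for j in range(n):
                if F_new[i][j] < F[i][j]:                 # keep the smaller entry
                    F[i][j] = F_new[i][j]
    return F
```

For small graphs the assumed probability evaluates to 1, so every vertex is sampled and each iteration reduces to a plain min-plus squaring of F.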

Next, we implement this algorithm in conjunction with RWA. We begin by defining a connected undirected graph G = (V, E) of a network consisting of V nodes and E edges [14]. We let g = (gij) be the N x N matrix such that gij = 1 if there exists a path between nodes i and j, and gij = 0 otherwise.

We also let V = (vi), with vi = 1 if node i has already been visited and vi = 0 otherwise.

Our objective is to maximise the number of lightpaths that can be established with a limited number of wavelengths Nλ, i.e., to maximise the objective function F of equation (1). The terms of equation (1) comprise the total number of lightpaths in the network g and an associated vector.

We summarise the random shortest path selection algorithm considering RWA as follows [15]:

3.4. Contention Resolution and Deflection Route Choice

With regard to the inevitable contention occurrences in the core network, which are the primary cause of burst losses, we adopt burst segmentation and deflection routing as our contention resolution method.

Our primary goal is to segment any contending burst and deflect the overlapping segments to a non-congested route rather than discarding them completely. Care is taken not to trigger or escalate congestion and contention on the deflection route. In that way, both burst losses and congestion are minimised.

The segmentation and subsequent deflection process is illustrated in Figure 6. As an example, in Figure 6(i) a 50% overlap is considered, i.e. the LP segments of burst a' overlap with the HP segments of burst b; the former are therefore truncated and deflected to an alternate route. In Figure 6(ii), a nearly 100% overlap leads to the HP segments of one of the bursts being deflected to an alternate path. Any further deadlock is resolved using the least remaining hops criterion [4].

The route choice, however, is critical. Note that the following factors are considered both in normal (global) network routing and in deflection routing.
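
The tail truncation and the remaining-hops tie-break can be sketched as follows. The sketch assumes equal-length segments ordered from head to tail and a contending burst that arrives at a known time; the function names and the representation of a burst as a list of labels are hypothetical.

```python
from typing import List, Tuple

def split_on_contention(segments: List[str], seg_len: float,
                        start: float, contender_start: float
                        ) -> Tuple[List[str], List[str]]:
    """Split a burst that starts at `start` into the segments that finish
    before the contending burst arrives (kept) and the overlapping tail
    segments (to be deflected)."""
    if contender_start <= start:
        return [], list(segments)
    keep = min(len(segments), int((contender_start - start) // seg_len))
    return segments[:keep], segments[keep:]

def preempts(remaining_hops_new: int, remaining_hops_current: int) -> bool:
    """Least remaining hops rule: the burst closer to its egress node wins."""
    return remaining_hops_new < remaining_hops_current
```

For a burst of two HP and two LP segments with a contender arriving halfway through, the two LP segments form the deflected tail, matching the 50% overlap case of Figure 6(i).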

3.4.1 Wavelength and Link Utilization Factors

To dimension the available network resources optimally and effectively, it is necessary to take into account the prevailing wavelength and link utilisation of the chosen link(s). Wavelength utilization is the fraction of time that a link is utilised, whereas link utilization is the proportion of all available wavelengths on each link that are in use. Overall utilisation along a selected path from source s to destination d, Usd, can be split into two components [16]:

Effective utilisation (Ueff), accounting for the fraction of wavelengths/links/paths utilised by bursts that successfully reach the intended destination.

Ineffective utilisation (Uineff), accounting for bursts that are discarded before reaching their destination. Uineff tends to dominate in periods of heavy traffic. The goal is to dimension the network resources such that Ueff is always maximised and Uineff minimised.

To accomplish this, key related information is acquired at each core node. This includes [8], [16]:

l(i, j): possible links between two nodes along the route s to d.

t: fixed interval over which the load of the links is calculated.

succ(l, t): set of bursts that successfully traversed link l in each set interval t.

Ti: length of burst i.

Using the information above, the effective utilization of a given link, Ueff(l, t), is equivalent to the fractional total occupation time, where Nl,λ is the total number of wavelengths comprising that link. We assume that Ueff(l, 0) = 0. We now consider the set of possible paths from s to d. At time t, the chosen alternate path is the one whose index is obtained from a formula given in [7].
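
One plausible reading of "fractional total occupation time" is the summed length of the bursts in succ(l, t), divided by the capacity-time product of the link over the interval. The sketch below implements that reading only; it is an assumption, not the formula of [7] or [16], and the function name is hypothetical. The same function applied to the discarded bursts gives the ineffective share.

```python
from typing import Iterable

def utilisation(burst_lengths: Iterable[float], interval: float,
                n_wavelengths: int) -> float:
    """Fraction of the link's capacity-time (interval x wavelengths) that is
    occupied by the given bursts. Burst lengths are in the same time units
    as the interval."""
    if interval <= 0 or n_wavelengths <= 0:
        return 0.0          # mirrors the convention Ueff(l, 0) = 0
    return sum(burst_lengths) / (interval * n_wavelengths)
```

For instance, bursts of total length 5 that succeed on a 4-wavelength link over an interval of 10 give an effective utilisation of 0.125.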

3.4.2. Weighted Least Load Criteria

If we let PlMAX be the maximum load threshold which, when exceeded, will cause congestion on the link, then:

If the load on the link exceeds PlMAX, the link is overloaded and hence will not be chosen.

Otherwise, the path will be chosen.

3.4.3. Weighted Least congestion & distance Criteria

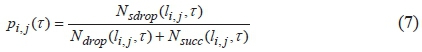

The primary objective of this criterion is to deflect and reroute the truncated bursts on the route with the least congestion as well as the least number of hops. From [16], the load on a single selected link is given as:

where,

Ndrop(li,j, t): the number of dropped bursts/segments on link li,j over the observation interval t.

Nsucc(li,j, t): the number of successfully transmitted bursts on link li,j over the observation interval t.

The route choice selection can now be based on a weighted function of both congestion as well as extra distance.
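
A weighted route choice of this kind can be sketched as below. Several parts are assumptions made only for illustration: the link congestion level is taken as Ndrop / (Ndrop + Nsucc), a path's congestion is that of its worst link, the extra distance is normalised by the shortest hop count, and `alpha` and `max_load` are hypothetical tuning parameters rather than values from the paper.

```python
from typing import Dict, List, Optional

def link_congestion(n_drop: int, n_succ: int) -> float:
    """Assumed congestion level of a link over one observation interval."""
    total = n_drop + n_succ
    return n_drop / total if total else 0.0

def choose_route(candidates: List[Dict], alpha: float = 0.5,
                 max_load: float = 0.8) -> Optional[str]:
    """Pick the candidate with the lowest weighted cost of congestion and
    extra distance, skipping any path with a link above the load threshold."""
    shortest = min(c["hops"] for c in candidates)
    best_name, best_cost = None, float("inf")
    for c in candidates:
        congestion = max(link_congestion(d, s) for d, s in c["links"])
        if congestion > max_load:
            continue                      # overloaded: not eligible
        extra = (c["hops"] - shortest) / shortest
        cost = alpha * congestion + (1 - alpha) * extra
        if cost < best_cost:
            best_name, best_cost = c["name"], cost
    return best_name
```

Raising `alpha` favours lightly loaded detours, while lowering it favours short routes even when they are busier.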

3.5. Stream Line Effect

To conclude this section, we also take into consideration the "streamline effect" in the overall network design. This is a phenomenon wherein bursts on a common link are streamlined and do not contend with each other (no intra-stream contention) until they diverge or the link merges with other links [18].

The significance of this streamline effect is two-fold. Firstly, since bursts within an input stream only contend with those from other input streams but not among themselves, their loss probability is lower than the one estimated by the well-known M/M/k/k model. Secondly, the burst loss probability is not uniform among the input streams. The higher the burst rate of the input stream, the lower its loss probability.
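
For reference, the M/M/k/k loss probability mentioned above is the Erlang B formula, which can be evaluated with its standard recursion. The sketch below computes only this baseline; it does not model the streamline effect itself, and the function name is hypothetical.

```python
def erlang_b(offered_load: float, servers: int) -> float:
    """Blocking probability of an M/M/k/k system with `servers` channels
    (wavelengths) and the given offered load in erlangs, using the
    recursion B(0) = 1, B(k) = a*B(k-1) / (k + a*B(k-1))."""
    blocking = 1.0
    for k in range(1, servers + 1):
        blocking = offered_load * blocking / (k + offered_load * blocking)
    return blocking
```

Under the streamline effect, the loss probability seen by bursts of an input stream is expected to lie below the value this function returns for the same load and wavelength count.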

4 SIMULATION SETUP

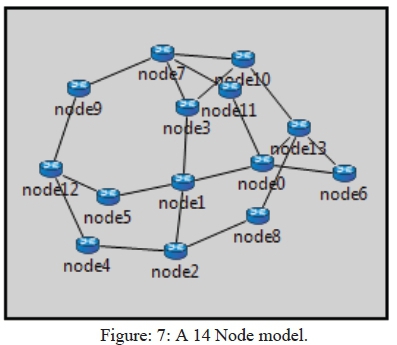

In this section the effectiveness of the proposed approach is evaluated through simulation on a 14-node network using OMNeT++ (version 5.2), with the necessary OBS and INET (version 3.6.2) modules and the incorporated algorithms. We chose this platform because it is not designed as a network simulator as such, but rather as a generic discrete event simulation framework with which to create simulators for different scenarios. Furthermore, its topologies are stored in editable plain text files. We have therefore relied on the OBS protocol models available in its library for our evaluations.

In Figure 7, edge nodes 2, 4 and 12 are designated as ingress nodes, whereas nodes 6, 10 and 13 are egress nodes. The rest are core nodes. Source-based routing at the ingress node is assumed, with the same ingress node selecting the best route using the random shortest path first algorithm. We make several other assumptions pertaining to this simulation evaluation, as follows:

• At the edge nodes, all traffic sources are categorized as either HP or LP, and segmented burstification is assumed.

• The links throughout the network are of varying lengths and bidirectional, i.e. each link has two fibres, and each fibre in turn operates on 32 wavelengths, 4 of which are reserved for control purposes.

• The maximum data rate on each wavelength is limited to 10 Gbps.

• BCP processing and switching fabric configuration time (switching latency) in the core nodes is 1.5 μs.

• JET, one-way signalling and LAUC-VF scheduling are assumed.

• Random shortest path route selection using a modified version of the algorithm in [15] is used to find the shortest path between all node pairs, subject to all other RWA constraints being met.

• Physical impairments and their effects are not considered.

• Each node handles both transit and locally generated traffic flows, in which case the SLE is considered.

• Network-wise, all bursts are assumed to be uniformly distributed over all sender-receiver pairs.

• There is no buffering in the core nodes; contention is therefore resolved by segmenting the contending (overlapping) sections and deflecting them.

• The least remaining hops policy is implemented during contention resolution.
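As a quick check on what these assumptions imply, the sketch below derives the number of data wavelengths and the aggregate data capacity per fibre from the figures listed above. It also computes a lower bound on the JET offset, under the commonly used assumption (not stated explicitly in this section) that the offset must at least cover the BCP processing latency at every node that handles the BCP. The names `LinkParams` and `min_jet_offset_us`, and the 4-hop example, are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkParams:
    """Per-fibre parameters taken from the simulation assumptions."""
    wavelengths_per_fibre: int = 32   # total wavelengths per fibre
    control_wavelengths: int = 4      # reserved for control purposes
    rate_gbps: float = 10.0           # maximum data rate per wavelength
    bcp_latency_us: float = 1.5       # BCP processing + switch configuration

    @property
    def data_wavelengths(self) -> int:
        # Wavelengths left over for data bursts.
        return self.wavelengths_per_fibre - self.control_wavelengths

    @property
    def data_capacity_gbps(self) -> float:
        # Aggregate data capacity of one fibre.
        return self.data_wavelengths * self.rate_gbps


def min_jet_offset_us(hops: int, params: LinkParams) -> float:
    """Lower bound on the JET offset (assumed rule: one latency per hop)."""
    if hops < 0:
        raise ValueError("hops must be non-negative")
    return hops * params.bcp_latency_us


params = LinkParams()
print(params.data_wavelengths)       # 28
print(params.data_capacity_gbps)     # 280.0
print(min_jet_offset_us(4, params))  # 6.0 (hypothetical 4-hop path)
```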

We have chosen segmented burstification, as outlined in section 2, to facilitate easier separation of tail-end LP sections when contention occurs at core nodes.
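The tail separation described here can be illustrated with a minimal sketch. It assumes equal-length segments, HP segments placed at the head of the burst, and that any segment not fully transmitted when the contending burst arrives is treated as overlapping. The function name `split_on_contention` and the timing values are hypothetical, and the least remaining hops policy that decides which burst yields is not modelled.

```python
from typing import List, Tuple


def split_on_contention(
    start_us: float,
    segment_us: float,
    labels: List[str],
    contender_start_us: float,
) -> Tuple[List[str], List[str]]:
    """Split a burst into (kept, deflected) segments.

    Segments fully transmitted before the contending burst arrives are
    kept on the original channel; the overlapping tail is separated so
    that it can be deflected.
    """
    if segment_us <= 0:
        raise ValueError("segment_us must be positive")
    elapsed = contender_start_us - start_us
    # Number of whole segments already sent when the contender arrives.
    intact = int(elapsed // segment_us) if elapsed > 0 else 0
    intact = min(intact, len(labels))
    return labels[:intact], labels[intact:]


# Hypothetical burst: 5 segments of 80 us each, HP at the head.
kept, deflected = split_on_contention(
    0.0, 80.0, ["HP", "HP", "LP", "LP", "LP"], 250.0
)
print(kept)       # ['HP', 'HP', 'LP']
print(deflected)  # ['LP', 'LP']
```

With the HP segments at the head, only tail-end LP segments are separated in this example, which is the behaviour the segmented format is meant to make easy.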

5. RESULTS AND DISCUSSION

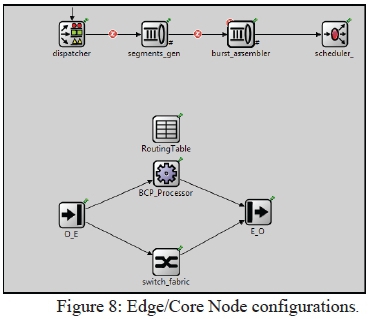

In this section we provide simulation results that compare the ECM and conventional OBS approaches. Data packets are fed directly to the dispatcher and thereafter to the segment generator (Figure 8). The segmented burst size is set to 100 kB, and the traffic generation load is set to a moderate p = 0.35. As can be observed from Figure 9, for a fixed packet delay value, the size-based burstification algorithm, Lmax, generates more segments than the other two, namely Tmax and Tave. This is partly because the traffic intensity is moderate, hence enough packets are received to assemble the required segment size/volume (Lmax). In both Tmax and Tave, time dictates segment assembly. As such, when large average packet delays cannot be tolerated, more segmented bursts are generated; conversely, if longer delays can be tolerated, fewer are generated.

The smaller the segment size, the better the assembly algorithm performs, as the individual packets incur shorter delays. This enhances QoS for delay-sensitive (HP) traffic. However, smaller segments are generated more frequently, and lowering the frequency at which the segmented bursts are generated reduces the risk of congestion at the BCP processors.
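To make the three closing rules concrete, the following sketch counts the segments each one produces for the same packet arrival sequence. It is a simplified illustration and not the simulation model used for Figure 9: packets have a fixed size, arrivals are deterministic, and all function names, thresholds and packet sizes are assumptions chosen for the example. The average-delay rule closes a segment once the mean waiting time of the queued packets reaches Tave.

```python
from statistics import mean
from typing import List


def count_segments_size(arrivals: List[float], pkt_bytes: int, l_max: int) -> int:
    """Lmax rule: close a segment once the queued bytes reach l_max."""
    segments, queued = 0, 0
    for _ in arrivals:
        queued += pkt_bytes
        if queued >= l_max:
            segments += 1
            queued = 0
    return segments  # a partially filled segment never closes


def count_segments_timer(arrivals: List[float], t_max: float) -> int:
    """Tmax rule: close a segment t_max after its first packet arrived."""
    segments, first = 0, None
    for t in arrivals:
        if first is not None and t >= first + t_max:
            segments += 1
            first = None
        if first is None:
            first = t
    # The timer of the last open segment eventually expires as well.
    return segments + (1 if first is not None else 0)


def count_segments_avg_delay(arrivals: List[float], t_ave: float) -> int:
    """Tave rule: close once the mean queueing delay reaches t_ave."""
    segments, queue = 0, []
    for t in arrivals:
        # Mean delay at time x is x - mean(queue), so the segment
        # closes at mean(queue) + t_ave, before this packet if due.
        if queue and t >= mean(queue) + t_ave:
            segments += 1
            queue = []
        queue.append(t)
    return segments + (1 if queue else 0)


# Hypothetical input: 100 packets of 1250 bytes, one every 10 us.
arrivals = [10.0 * i for i in range(100)]
print(count_segments_size(arrivals, 1250, 12500))  # 10
print(count_segments_timer(arrivals, 200.0))       # 5
print(count_segments_avg_delay(arrivals, 100.0))   # 6
```

For these particular values the size-based rule yields the most segments, but the ordering depends entirely on the chosen thresholds and arrival rate.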

Figure 10 plots the segment loss probability for both HP and LP segments. This is compared with conventional OBS approaches in which all traffic types have the same priority. The segment size is fixed at 40 packets for both HP and LP traffic classes; 30% of the traffic is HP and the rest is LP. Node 2 is the source, and node 10 is the destination. The RWA and random shortest path selection algorithm described in section 3.2 is implemented, with the weights expressed in terms of traffic load.

We observe from the same graph that the HP segments have a lower blocking probability, partly because of their default priority. In general, the packet/segment loss probability for segmented data bursts is lower, as their sizes are bounded and contention resolution techniques are thus much easier to implement. The traditional non-segmented burst approach, however, has exponentially distributed burst sizes, which complicates the implementation of contention resolution measures, hence the higher loss probabilities.

At higher traffic loads, both HP and LP blocking probabilities tend to increase more rapidly because of the increasing contentions as well as congestion on deflected links/routes.

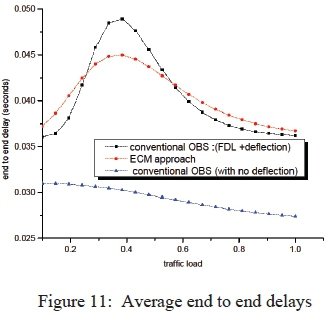

In Figure 11, the average end-to-end delay is plotted against traffic load. Three cases are compared, namely: (1) conventional OBS in which contention is resolved by using FDLs as well as deflection, (2) conventional OBS with no deflection and (3) the ECM approach. For each of the three cases, the delay peaks at about p ≈ 0.4 before gradually decreasing. This is partly because of reduced SLE effects at higher network loads, and because bursts traversing more hops are likely to be discarded along the way (prior to reaching the destination) as the input load increases. Further, it is observed that for low-to-medium network traffic loads, deflection routing increases the overall number of hops.

Overall, the average end-to-end delays of the proposed approach decrease moderately at high network loads. Conventional OBS incurs lower delays, as the bursts only traverse the chosen shortest path to the destination, even though BLPs would be very high. The ECM yields relatively improved QoS in terms of delay constraints, as its delay peaks at a much lower threshold. We further evaluate the extent to which our proposed approach promotes efficient use of available network resources. The throughput in this case is the average number of bursts that successfully traverse the end-to-end path per unit time.

Figure 12 plots the normalised average effective throughput as a function of the network traffic load. In general, as expected, the average effective throughput in the proposed approach decreases gradually as network traffic increases, but at a lower rate than with conventional OBS with deflection routing.

For ECM, however, the effective throughput still hovers above 60% at high traffic loads. This is because, at high loads, all deflection routes are chosen by considering network conditions on the candidate routes, which is not the case with conventional OBS. The approach therefore still enables the network to utilise resources efficiently at high traffic loads.
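The throughput metric defined above can be written out as a short sketch. The normalisation by the number of offered bursts is an assumption made here for illustration, since the exact normalisation behind Figure 12 is not spelled out in this section; the function names and the burst counts are hypothetical and are not simulation results.

```python
def burst_throughput(delivered_bursts: int, window_s: float) -> float:
    """Bursts that completed the end-to-end path per unit time."""
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    return delivered_bursts / window_s


def normalised_throughput(delivered_bursts: int, offered_bursts: int) -> float:
    """Delivered bursts as a fraction of offered bursts (assumed form)."""
    if offered_bursts <= 0:
        raise ValueError("offered_bursts must be positive")
    return delivered_bursts / offered_bursts


# Made-up counts: 5000 bursts offered and 3200 delivered in 2 seconds.
print(burst_throughput(3200, 2.0))        # 1600.0
print(normalised_throughput(3200, 5000))  # 0.64
```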

6. CONCLUSION

In this paper we have proposed and evaluated a congestion management approach, referred to as "enhanced congestion management" (ECM), which is geared towards guaranteeing a consistent QoS as well as rational and fair use of available links. This is primarily achieved by combining priority service differentiation, time-averaged delay segmented burst assembly, and load-based random shortest deflection path selection. The proposed approach does not add any extra hardware complexity to existing OBS network interior nodes, but provisions a variable offset time ti, which facilitates priority-based contention resolution in the interior network, hence minimising its effects as well as congestion. In that way, the available network resources are appropriated more fairly and efficiently. In comparison to similar conventional OBS approaches, the proposed approach improves the network performance in terms of loss probability, throughput and end-to-end delays when the network is not heavily loaded.

7. REFERENCES

[1] W. M. Gaballah, A.S. Samra and A.A. tale, "International Journal of Electronics & Data Communication", Vol. 5, No. 1, pp. 195-203, 2017.

[2] Richa Awasthi, Lokesh Singh and Asif Ullah Khan: "Estimation of bursts length and design of a Fiber Delay Line based OBS Router", Journal of Engineering Science and Technology, Vol. 12, No. 3, pp. 834-846, March 2017.

[3] K. Christodoulopoulo and E.V.K Vlachos: "A new burst assembly scheme based on the average packet delay and its performance for TCP traffic", Optical Switching and Networking, Vol. 4, pp. 200-212, 2007.

[4] D.H Patel, K. Batt and V. Dwivedi: "The efficient scheme for Contention reduction in buffer-less OBS network", Proceedings of International Conference on Communication and Networks, Advances in Intelligent networks and Computing, Springer Nature PV, LTD, pp. 95-106, Singapore, 2017.

[5] H. Zang, J. P. Jue and B. Mukherjee: "A Review of routing and wavelength assignment approaches for wavelength-routed optical WDM networks", Optical Networks Magazine, Vol. 1, No. 1, pp. 47-60, January 2000.

[6] S. Ahmad, A. Mustafa, B. Ahmad, A. Bano and H. Al-Sammarraie: "Comparative study of congestion control techniques in High Speed Networks", International Journal of Computer Science and Information Security, Vol. 6, No. 2, February 2009.

[7] W.S. Park, M. Shin, H.W. Lee and S. Chong: "A joint design of congestion control and burst contention resolution for Optical Burst Switching network", Journal of Lightwave Technology, Vol. 21, No. 17, 2009.

[8] Li Yang and N. G. Rouskas: "Adaptive path selection in OBS Networks", Journal of Lightwave Technology, Vol. 24, No. 8, pp. 3002-, August 2006.

[9] Y. Vardi: "Network Tomography: Estimating source-destination traffic intensities from link data", Journal of the American Statistical Association, March 1, 1996.

[10] Shuo Li, M. Zukerman, M. Wang and E.W.M. Wong: "Improving throughput and effective utilization in OBS networks", Optical Switching and Networking, Vol. 18, Part 3, pp. 222-234, November 2015.

[11] Meiqian Wang, Shuo Li, Eric W. M. Wong and Moshe Zukerman: "Evaluating OBS by Effective Utilization", IEEE Communications Letters, Vol. 17, No. 3, March 2013.

[12] V. Kavitha and V. Palanisamy: "New Burst Assembly and scheduling technique for Optical Burst Switching Networks", Journal of Computer Science, Vol. 9, No. 8, pp. 1030-1040, 2013.

[13] Jinhu Xu, Chunming Qiao, Jikai Li, and Guang Xu: "Efficient channel scheduling algorithms in Optical Burst Switched Networks", IEEE Xplore, 2003.

[14] Madkour, W. G. Aref, F. Ur Rehman, M. A. Rahman and S. Basalamah: "Survey of Shortest-Path Algorithms", 8 May, 2017, https://arxiv.org/pdf/1705.02044.pdf, [accessed on 16 June, 2017].

[15] F. Kharroubi: Random Search Algorithms for Solving the Routing and Wavelength Assignment in WDM Networks, PhD Dissertation, Changsha, June 2014. https://www.academia.edu/9462731/. [accessed: 12/06/2015].

[16] G.P. Thaodine, V.M Vokraine and J.P Jue: "Dynamic congestion based load balanced routing in optical burst switched networks", in Proceeding Globecom, Vol. 5, pp. 2628-2632, December 2003.

[17] M. zaka, B. Jaumard and E. Boha: "On the efficiency of StreamLine Effect for contention avoidance in Optical Burst Switching Networks", Optical Switching and Networking, Vol. 18, Part 1, pp. 35-50, November 2015.