South African Journal of Higher Education

On-line version ISSN 1753-5913

S. Afr. J. High. Educ. vol.35 n.3 Stellenbosch Jul. 2021

http://dx.doi.org/10.20853/35-3-3962

GENERAL ARTICLES

Evaluation of quality assurance instruments in higher education institutions: a case of Oman

A. S. Al-Amri (I, II); Y. Z. Zubairi (III); R. Jani (IV); S. Naqvi (V)

I Institute of Advanced Studies, University of Malaya, Kuala Lumpur, Malaysia. E-mail: amaloman@siswa.um.edu.my / https://orcid.org/0000-0001-7197-5428

II Planning and Development Department, Middle East College, Muscat, Sultanate of Oman. E-mail: amals@mec.edu.om

III Centre for Foundation Studies in Science, University of Malaya, Kuala Lumpur, Malaysia. E-mail: yzulina@um.edu.my / https://orcid.org/0000-0002-6174-7285

IV Faculty of Economics and Administration, University of Malaya, Kuala Lumpur, Malaysia. E-mail: rohanaj@um.edu.my / https://orcid.org/0000-0002-1388-0620

V Center for Foundation Studies, Middle East College, Muscat, Sultanate of Oman. E-mail: snaqvi@mec.edu.om / https://orcid.org/0000-0002-6033-9351

ABSTRACT

The use of a variety of instruments for quality assurance, management, and enhancement in higher education is well recognized. This article investigated the instruments used by Higher Education Institutions (HEIs) in Oman to measure, control, and manage the quality of their services in alignment with the standards set by Oman Academic Accreditation Authority (OAAA). Quality Assurance Managers (QAMs) from five HEIs were interviewed to identify the instruments used by them to fulfil the requirements of each standard and the way they make use of the data gathered by using these instruments. Findings from the study reveal that questionnaires and meetings are the most common instruments used by these institutions to measure, control and assure the efficacy of their current quality activities. In addition, HEIs use summary statistics to analyse data and then present them in meetings or through reports. On the other hand, it was found that substantial efforts are made to collect data but the efficient usage of data is missing. The QAMs reported a lack of awareness among the staff on the importance of collecting data since the staff members believe that these data are collected for documentation purposes only. This study emphasizes the importance of using the data gathered from different instruments in decision making and enhancing the quality of HEIs.

Keywords: data usage, higher education, instruments for quality assurance, quality assurance, Sultanate of Oman

INTRODUCTION

Quality Assurance (QA) supports organizations in ensuring that their services or products meet the required standards. QA is defined by the American Society for Quality (ASQ) as "the planned and systematic activities implemented within the quality system that can be demonstrated to provide confidence that a product or service will fulfill requirements for quality" (ASQ 2017). However, it is difficult to define quality in the higher education sector since it is complex, dynamic, and contextual (Brockerhoff, Huisman, and Laufer 2015; Singh, Sandeep, and Kumar 2008). According to UNESCO, it is "a multidimensional concept which must involve all its functions and activities" (da Costa Vieira and Raguenet Troccoli 2012). Hamad and Hammadi (2011) report that there are five approaches to QA in HE, namely exceptional, perfection, fit for purpose, value for money, and transformation. In addition, it is important to highlight that stakeholders of HEIs have varying perceptions of what constitutes quality. These stakeholders comprise students, staff, alumni, employers of alumni, and funding agencies. Each stakeholder has different perceptions and interests (Schindler et al. 2015; Al Tobi and Duque 2015; Sunder 2016; Akhter and Ibrahim 2016).

HEIs around the world use different instruments1 to control, assure, and manage the quality of their academic and administrative services. Many HEIs believe that instruments and measurements are the cornerstone of any quality enhancement, which resonates with observations made by Widrick, Mergen, and Grant (2010). In fact, there is public interest in the performance evaluation of HEIs since HEIs rely on public resources, as stated by Goos and Salomons (2017). Moreover, the increase in the number of students in HEIs has made the field of Higher Education (HE) more competitive and customer focused (Duzevic, Ceh Casni, and Lazibat 2015). Countries around the world have established accreditation bodies to evaluate the quality of HEIs by setting different standards. Similarly, HEIs have established internal quality mechanisms to strengthen their systems and prepare for external quality evaluation.

Hence, different instruments are used by HEIs for quality purposes and a lot of data is collected. However, the question is whether HEIs effectively use this information for QA. Loukkola and Zhang (2010) raised this point in the context of European HEIs and mentioned that there is uncertainty regarding the usage of the information and data gathered for institutional QA, planning, and improvements. Limited research has been conducted on the way HEIs in Oman gather and harness their data; therefore, studies such as this one are needed.

The Sultanate of Oman is a relatively young nation in terms of its development in the higher education sector, and quality assessment is a recent exercise taken up by the government as a regulatory requirement to maintain standards and ensure quality enhancement in higher education. In an effort to maintain high standards of quality in tertiary education in the Sultanate, the Oman Academic Accreditation Council was established in 2001 and was later renamed the Oman Academic Accreditation Authority (OAAA). OAAA was established with a vision "to provide efficient, effective and internationally recognized services for accreditation in order to promote quality in higher education in Oman and meet the needs of the public and other stakeholders" (OAAA 2016, 14). HEIs in Oman are undergoing the second stage of accreditation, called "Institutional Standard Assessment" (ISA), which plays an important role in assuring the quality of each HEI. The results of ISA accreditation can lead to far-reaching decisions, such as terminating an HEI that has not met the required standards. Hence, HEIs as well as the Omani community look forward to the results of ISA.

OAAA has set nine standards and each HEI in Oman is required to fulfill the requirements set for each standard. With an assumption that the nature of each standard requires different types of methods to assure, measure, and manage the quality, this study investigated the instruments frequently used by HEIs for each standard, types of analyses used for the gathered data, the usage of generated data in making improvements, and the barriers faced by HEIs while making use of this data. The main objectives of the study are:

1) to explore the common instruments used by HEIs in Oman to measure quality,

2) to investigate how HEIs make use of the data gathered by using these instruments for quality improvement,

3) to examine the types of analyses used for the data gathered to measure the quality of HEIs, and

4) to identify the barriers that HEIs face when using the data gathered by these instruments to measure their quality.

The rest of the article is organized as follows: the second section presents the literature review, the third describes the research methods, the fourth and fifth sections present and discuss the findings, and the last section is the conclusion.

LITERATURE REVIEW

The literature presented in this section covers the methods and techniques used by a number of HEIs across the world to measure the quality of their services, with a focus on the instruments used to collect and analyze data and related issues.

The need for QA in the service sector was identified in the 1980s, with the realization that the service sector was becoming the dominant element of the economy in industrialized countries. This encouraged the development of SERVQUAL, which was the first quality measurement tool for the service sector (Parasuraman, Zeithaml, and Berry 1988; Parasuraman, Zeithaml, and Berry 1985). SERVQUAL was adopted by HEIs for QA purposes and is still commonly used by HEIs across the globe. A similar method, HEdPERF, was developed by Abdullah (2006) specifically for HEIs to collect data from students, which helps institutions to evaluate the quality of their services. This method has also been adopted in different parts of the world (Duzevic, Ceh Casni, and Lazibat 2015). Ranking is another technique commonly used in the HE sector, in line with the "new managerialism" at HEIs (Lynch 2015, 190). However, around 40 per cent of the weight is based on survey data in global rankings like QS2 and THE.3 The key point is that rankings depend on numbers, and numbers have a strong influence on the public. When HEIs focus on Key Performance Indicators (KPIs), they direct their attention to "measured outputs rather than processes and inputs within education" (Lynch 2015, 194).

Generally, in the world of QA, there are Seven Basic Quality Tools (SBQT), which include: cause and effect diagram, check sheet, control charts, histogram, Pareto chart, scatter diagram, and stratification/flow chart (ASQ 2017). In the HE sector, the SBQT are also used for quality purposes (Foster 2010), in addition to other instruments such as focus group analyses, in-depth interviews, trend analyses, matrices, surveys, bar charts, benchmarking, tree diagrams, quality function deployment, conformation check-sheets, affinity diagrams, operational definitions, and peer review (Widrick et al. 2010); frequency diagrams and pie charts, statistical quality control (SQC) and statistical process control (SPC) charts, and correlation analyses (Iyer 2018); and questionnaires (structured or unstructured), meetings (formal or informal/ structured or unstructured/ focus or non-directive), focus group discussion, observations, and other experiment and research techniques (Annum 2017). The checklist, or check sheet, is one of the SBQT and was used by McGahan, Jackson, and Premer (2015) for the evaluation of courses.

A database titled "Measuring Quality in Higher Education" was established by the National Institute for Learning Outcomes, United States, to assess the quality in HE which "includes four categories: assessment instruments; software tools and platforms; benchmarking systems and other data resources; and assessment initiatives, collaborations, and custom services" (National Research Council 2012, 31). In addition, Miller (2016) provided an in-depth explanation of different methods used in assessing the organizational performance in HE. These methods are used for measuring, evaluating, or conveying performance results. These methods are either direct like surveys, interviews, focus groups discussions or study documents which measure the levels of knowledge of students like assessment results, or indirect methods such as documents, databases, and published survey reports. She also explained the use of technology in assessments such as different software and platforms used by HEIs around the world. The results and output of these methods are normally written reports.

Furthermore, a structured mechanism is followed at HEIs for continuous review and development of teaching and learning processes such as teachers' diary, attendance register, student feedback, performance appraisal, result analysis, and management meetings with the faculty and other stakeholders (Aithal 2015). Many HEIs use peer observation method for different purposes, for example, QA, training and course development, or teaching improvement and innovation (Jones and Gallen 2016). Surveys or questionnaires are commonly used in HEIs for decision making, research, and to identify the opinions of the public (Barge and Gehlbach 2012; Mijić and Janković 2014). Like other business organizations, HEIs also use performance measures like Key Performance Indicators (KPIs) for planning and QA purposes. This covers evaluation of the individual staff, academic and non-academic programmes, departments, and courses or modules. It also covers competitive and internal data related to students or staff or other services like student assessments (National Institute of Standards and Technology 2015; Varouchas, Sicilia, and Sánchez-Alonso 2018). Moreover, HEIs use software and platforms for data collection, data management, and data analytics. Learning analytics is also used as a tool for QA and quality improvement, and to assess and act based on student data, and for learning development (Sclater, Peasgood, and Mullan 2016).

The above details reveal that there is a lot of emphasis on data collection; however, the usage of collected data in making improvements is overlooked. In the report of the project "Examining Quality Culture in European Higher Education Institutions", the authors raised this concern, stating that HEIs are "good at collecting information, but not always using it", which means that there is uncertainty regarding the usage of the information and data gathered for institutional QA, planning, and improvements (Loukkola and Zhang 2010, 37-38).

In the Sultanate of Oman, Al Sarmi and Al-Hemyari (2014) conducted a national project titled "Performance indicators for private HEIs" under the supervision of the Ministry of Higher Education (MOHE). They discovered that there is a lack of clarity in assessing the performance of HEIs by using indicators. They also raised the issue of the unavailability of the data needed to calculate the indicators in Omani HEIs. At that stage, HEIs may have had the data in general but not in the specific format and classification required to calculate the performance indicators. In terms of the instruments used for QA, Al Amri, Jani, and Zubairi (2015) found that surveys are the most common instruments used by HEIs in Oman and that data analysis is mainly limited to descriptive statistics. There is limited usage of quality tools like check sheets, control charts, and histograms. It was also found that institutions mainly focused on the quality standards related to student learning by coursework programme, academic support, student and student support services, and staff and staff support services. The interviewees rated the performance of the measurement tools currently used at their institutions4 as fair or good, with no one rating them as very good. Moreover, Alsarmi and Al-Hemyari (2015, 1) claim that there are some other methods used to ensure the quality of the HE sector, like "accreditation standards and performative evaluations".

Although the above literature gave an overview of the available studies on the implementation of techniques used in measuring the quality of HEIs, there is no specific study that investigated the instruments used by HEIs to meet the requirements of accreditation standards specifically in Oman. In addition, there is no explanation of the usage of the data gathered as well as the type of analysis done.

RESEARCH METHODS

Two instruments, in-depth interviews and checklists, were used to collect data from QAMs from Omani HEIs. Due to the nature of the questions used in the interviews, only staff members involved in QA activities could answer them clearly and precisely. The criteria for selecting the interviewees included seniority, teaching background, and involvement in the preparation for ISA. Hence, the sample included QAMs at the Deanery level as well. The sample comprised QAMs from five Omani HEIs. According to Dworkin (2012), the acceptable sample size for qualitative studies using in-depth interviews is from 5 to 50 participants. One HEI from each classification type of Omani HEIs was selected on the basis of the highest number of active students.5 This ensured representation from all classifications of Omani HEIs. The classifications are: Private College (PRV-C), Private University College (PRV-UC), Private University (PRV-U), Public College (PUB-C), and Public University (PUB-U). For the sake of maintaining consistency, only HEIs which are directly under the supervision of MOHE, Oman, and have gone through an OAAA audit (OAAA 2016) were considered for this study. The interviews were voice recorded after taking permission from each interviewee. The language used was English. The interview recordings were then transcribed. The interviews took place in January 2018 and each one lasted between 1 and 2 hours.

The qualitative data were collected using a semi-structured interview. The quantitative data were collected using a checklist which was designed for this purpose and included the list of instruments used at HEIs for QA, which are: questionnaire, meeting, observation, focus group discussion, experiment and research, check sheet, chart and diagram, student assessment, performance evaluation (staff/programs/courses), software tools and platforms, benchmarking systems, and other instruments. Both methods were implemented together. The first part of the interview was devoted to the explanation of the checklist by the first author for accuracy, the filling of the checklist by the interviewee, and then the calculation of the frequency of each instrument stated by the QAM of each institution. During this part, the first research objective "to explore the common instruments used by HEIs in Oman to measure quality" was considered. Afterwards, the interviewees were asked to explain how they make use of the results/output that are generated by these instruments in assuring quality at their institution. This helped the researchers in meeting the remaining objectives of the study. It is important to highlight that this article presents and discusses the results of some questions only and not all the interview questions and ensuing discussions.

The interview questions and checklist were reviewed and validated by two experts from the related field in Oman. In addition, a pilot interview was conducted with a QAM of another HEI.6 The main aim of the pilot was to test the relevance of the instruments used and to assess the time required to conduct each interview. It was noticed from the pilot study that it would be difficult for the QAMs to recall all the instruments used during the interview. Therefore, the researchers decided to send a copy of the checklist prior to the interview.

The data analysis methods differed for each instrument. The checklist was analyzed using descriptive statistics, where the frequencies of each instrument were counted separately to find out the commonly used instruments at HEIs. This method helped in answering the first research question. The interview responses were analyzed by using thematic text analysis.
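
The frequency count described above can be illustrated with a minimal sketch. The institution labels, the checklist entries, and the function name `instrument_frequencies` are hypothetical and are used only for illustration; they are not the data or the procedure of this study.

```python
from collections import Counter

# Hypothetical checklist responses (not the study's data):
# for each HEI, the instruments ticked under each standard.
checklist = {
    "HEI-A": {
        "Standard 1": ["meeting", "questionnaire"],
        "Standard 6": ["questionnaire"],
    },
    "HEI-B": {
        "Standard 1": ["meeting"],
        "Standard 6": ["questionnaire", "observation"],
    },
}


def instrument_frequencies(responses):
    """Count how often each instrument is ticked across all HEIs and standards."""
    counts = Counter()
    for standards in responses.values():
        for instruments in standards.values():
            counts.update(instruments)
    return counts


frequencies = instrument_frequencies(checklist)

# List instruments from the most to the least frequently ticked.
for instrument, count in frequencies.most_common():
    print(f"{instrument}: {count}")
```

Counting per standard instead of overall would only require keeping one `Counter` for each standard rather than a single shared one.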

FINDINGS

This section summarizes the results gathered from the interviews and the checklist.

Common instruments used by HEIs to measure quality

The QAMs identified the instruments they use for each standard in the checklist designed for this purpose. The full list of the most frequent instruments used by HEIs for each standard is available in Table 1. In general, it was found that HEIs mostly use meetings to measure quality in the areas related to Governance and Management (Standard 1). For Student Learning by Coursework (Standard 2), HEIs use various instruments. Student Learning by Research (Standard 3) is only applicable to HEIs offering research programmes. Hence, only the QAMs of PUB-U and PRV-U responded and they use different instruments for this standard. The quality of areas under Staff Research and Consultancy (Standard 4) is measured by using different kinds of instruments in each HEI except PUB-C where this standard is not applicable.7 Similarly, it was found that different instruments are used by these five HEIs to measure the quality of Industry and Community Engagement (Standard 5). The QAMs stated that they mainly use questionnaires to ensure the quality of Academic Support Services (Standard 6) except the QAM of PRV-U who mentioned that they use observation. For Students and Student Support Services (Standard 7), questionnaire is the most frequently used instrument in PUB-U, PRV-UC, and PUB-C. To measure the quality of Staff and Staff Support Services (Standard 8), HEIs use questionnaires most frequently, except PRV-U where meetings are used the most. Similar instruments are used to measure the quality of the last standard which focusses on General Support Services and Facilities (Standard 9).

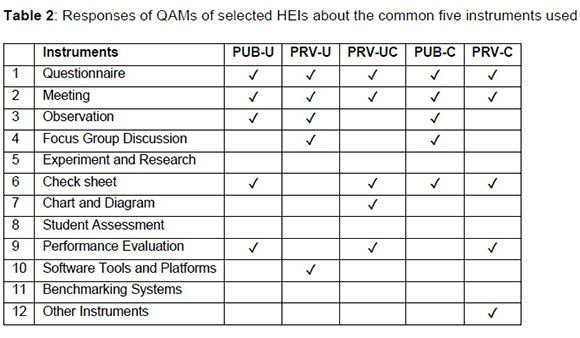

Moreover, the five most commonly used instruments at these five HEIs were determined by identifying the instruments which have the highest frequencies irrespective of the standard. The results are summarized in Table 2 below. It is clear from the responses that questionnaires and meetings are the most frequently used instruments in all HEIs, followed by checklists, which are commonly used in four HEIs out of five for QA purposes. Observations, performance evaluations, and focus group discussions are frequently used by three HEIs, and charts and diagrams are used in two HEIs only. To sum up, the five most common instruments used by HEIs are: questionnaire, meeting, check sheet, observation, and performance evaluation.

Approaches adopted by HEIs to make use of the data gathered by using these instruments for quality improvement

The most popular instrument used by the HEIs to evaluate most of the educational and other administrative services was the questionnaire. It is used "to collect feedback from faculty, administrative staff, and students, in addition to other stakeholders and representatives from the community" (PRV-UC). The HEIs prefer to use questionnaires because they can be used to track the improvements made over the years. It was found that all five HEIs follow broadly similar processes for questionnaire design and implementation. However, not all the QAMs confirmed that the loop is closed by informing the stakeholders about the results and actions taken. Some good practices were also identified; for instance, one HEI responded to the stakeholders' feedback by using IPTVs8 placed in different parts of the campus projecting the "You said, we did" campaign, which meant that staff and students gave suggestions and the HEI took the required actions. This assured their students and staff that their voice is heard (PRV-C). The QAMs of some HEIs clarified that steps are taken to ensure that students' comments are given appropriate attention and are taken to higher authorities. They inform students about the action points drawn up in response to their feedback during the student council meeting.

The second most popular instrument used by all the HEIs for QA is meetings. It is a platform for discussions based on which decisions are made with specified accountability and time frame. These decisions are used "to introduce changes or implement suggestions and recommendations" (PUB-U). The first point in the agenda to be discussed is usually the action points on the decisions made in the previous meeting. The follow up on the implementation of the actions is normally the responsibility of the QA team. One good practice followed at the PUB-U is the decision tracking system so that the institution can find out the number of action points targeted as well as actions implemented in a stipulated timeframe.
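
A decision tracking system of the kind mentioned above could be sketched as follows. This is an illustrative assumption only: the interviews did not describe how the PUB-U system is built, and the `ActionPoint` class, its fields, the `tracking_summary` function, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ActionPoint:
    """One decision recorded in meeting minutes (hypothetical structure)."""
    description: str
    owner: str
    deadline: date
    completed_on: Optional[date] = None

    def implemented_on_time(self) -> bool:
        # An action counts only if it was completed on or before its deadline.
        return self.completed_on is not None and self.completed_on <= self.deadline


def tracking_summary(points):
    """Report how many action points were targeted and how many were met in time."""
    targeted = len(points)
    on_time = sum(p.implemented_on_time() for p in points)
    return {
        "targeted": targeted,
        "implemented_on_time": on_time,
        "late_or_open": targeted - on_time,
    }


# Hypothetical entries for illustration only.
points = [
    ActionPoint("Revise course survey", "QA office", date(2018, 3, 1), date(2018, 2, 20)),
    ActionPoint("Update induction checklist", "HR", date(2018, 3, 15)),
    ActionPoint("Publish survey results", "QA office", date(2018, 4, 1), date(2018, 4, 10)),
]

summary = tracking_summary(points)
print(summary)
```

Recording an owner and a deadline with each decision mirrors the "specified accountability and time frame" that the QAMs described for meeting decisions.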

The next instrument in order of popularity is the checklist. The HEIs use it for activities such as staff induction, academic integrity, assessment approval, classroom observations, laboratory activities, and performance measurements. The benefit of using a checklist is that it ensures that all the relevant areas are covered and that the process is moving in the planned direction. It is also used "to measure certain performance or satisfaction of certain services provided" (PUB-U).

Observation is also used quite often by HEIs, specifically for teaching evaluation such as Head of the Department (HoD) and peer reviews. These observations are conducted to ensure that the quality of teaching is maintained. They are also used for performance evaluation: "it helps us to know the shortcomings which are discussed in meetings and action plans are set" (PRV-U). Regarding the follow-up mechanism for improvement, if there are any negative remarks, there is a re-observation.

Performance evaluation is implemented in HEIs by setting KPIs which is done at different layers comprising individuals, departments, programs, as well as the institution. It is introduced as an "assessment method in all areas. The results are used to take decisions to reach the targeted objectives" (PUB-U). The HEIs also have annual, biannual, and semester wise systems of tracking the performance and accomplishments in various areas. It is done for programmes as well. Each HEI has its own system for evaluating its academic programmes. For example, PRV-UC evaluates its performance with the affiliated university as their personnel come once a year for review.

There are some other instruments which are used frequently by a few HEIs, such as Focus Group Discussion (FGD), charts and diagrams, software tools, and reports. FGDs are used by HEIs for discussions on important topics whenever there is a need. Some HEIs prefer to use charts and diagrams "rather than writing long paragraphs. It helps especially for presenting information to the authorities at the top management level because they do not have time to go into details. It gives precise information in a few minutes" (PRV-UC). Software tools and platforms are also commonly used in HEIs to extract reports and to "analyze the statistics online" (PRV-U). The QAM of one HEI stated that other instruments such as reports are also used. These reports are submitted to internal and external parties, and there are also external review reports from partner institutions.

The type of analysis used for the data gathered

HEIs mainly discuss the data and information collected from different instruments during meetings. They discuss important points and make decisions at meetings. These decisions are written in the minutes of the meetings along with action points, responsibility, follow-ups, and deadlines - "accountability of the responsible person is established. Each meeting has a follow up meeting to make sure that the actions were implemented, and the systems and procedures are in place" (PRV-U). Usually, the QA departments within the HEI are responsible for the follow-up of the implementation. However, for specific issues, they tend to have FGDs.

It was also noticed that HEIs prepare various kinds of reports from the outputs of these instruments, such as reports of survey results. Each unit or committee prepares its annual report and the QA Office prepares the institutional annual report. On being asked about the steps taken on any serious observations or recommendations made in the reports, one of the QAMs said, "we again discuss it in the meetings ... where everybody is involved and knows what is happening" (PRV-U).

In addition to the discussion of results in formal meetings and preparing regular reports, the researchers also investigated the type of analysis used for the data gathered by these instruments to measure the quality at the chosen five HEIs. They studied the complete responses of the interviewees to identify the methods used for data analysis by these institutions. It was found that HEIs use graphs and diagrams to present the results gathered by using the mentioned instruments. For example, they prefer to show the results in graphs as they "try to analyze the student or staff profile or research performance and present results in the form of charts and diagrams rather than writing long paragraphs" (PRV-UC). It was also noticed that the results are presented as numbers and frequencies. They identify the services which received a high level of dissatisfaction by looking into the frequencies. "We do the analysis and then send results to the concerned departments and ask them to send action plans for the areas which were rated as unsatisfactory", mentioned a QAM (PRV-C). It is worth mentioning that some HEIs have set a benchmark score of satisfaction for the results attained through questionnaires. If the score is below 3.5 (out of 5), they prepare follow up action plans and make the desired changes (PUB-C).
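
The benchmark rule reported by PUB-C can be illustrated with a minimal sketch. Only the 3.5 (out of 5) threshold comes from the interviews; the service names, the ratings, and the function name `services_needing_action` are hypothetical, and the use of a simple mean score is an assumption, since the interviewees did not specify how the score is calculated.

```python
from statistics import mean

# Benchmark reported by one QAM (PUB-C): satisfaction scores below 3.5
# out of 5 trigger a follow-up action plan.
BENCHMARK = 3.5

# Hypothetical questionnaire ratings on a 1-5 scale (not the study's data).
ratings = {
    "library": [4, 5, 4, 3],
    "cafeteria": [3, 2, 4, 3],
    "it_support": [4, 3, 4, 4],
}


def services_needing_action(ratings_by_service, benchmark=BENCHMARK):
    """Return the mean score of every service rated below the benchmark."""
    means = {service: mean(scores) for service, scores in ratings_by_service.items()}
    return {service: score for service, score in means.items() if score < benchmark}


flagged = services_needing_action(ratings)

for service, score in flagged.items():
    print(f"{service}: mean {score:.2f} is below {BENCHMARK}, action plan required")
```

A rule of this kind relies on a single summary statistic, which is the level of analysis that the QAMs described.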

The barriers that HEIs faced to make use of the data gathered by these instruments

Though the QAMs agree on the importance of using the results gathered by these instruments, they mentioned that they are not able to accomplish much in this regard due to the difficulties they face. For example, one QAM reported, "we are facing a challenge in persuading people to make use of the available statistics. They believe that it is only for documentation purposes" (PRV-UC). Some QAMs mentioned that other staff members at their institutions do not have much awareness of the importance of these instruments and their results for evaluating and managing quality. Another QAM stated, "there is not much awareness among our staff including the management on how the information gathered by the instruments could effectively be used to ensure quality at our institution" (PUB-C).

DISCUSSION

The analysis of the findings revealed that some data collection instruments are more popular than others. For example, questionnaires for students, staff, alumni, and employer surveys are used quite frequently in Omani HEIs, which is similar to the trend observed in other countries. Around 60 per cent of published HE research used survey data (Fosnacht et al. 2017). The questionnaire is the tool used to evaluate many services and assess the satisfaction level of different stakeholders. It was also found that HEIs conduct regular meetings as part of QA activities. Normally, during these meetings, staff members discuss issues, make decisions, and assign responsibilities which should be completed within the stipulated time frame. This finding supports the observation made by Aithal (2015), who mentioned that QA meetings are organized to discuss related matters with different stakeholders. In addition, HEIs use checklists quite frequently. They are used to ensure that the process is going in the right direction, all the requirements are met, all the required documents are submitted, and nothing is missed out. The QAMs also confirmed that the checklist is used for academic support activities. The results of other studies also confirm the use of checklists in academic support activities such as course evaluation (Krause et al. 2015). Observations were also found to be one of the important instruments used for quality monitoring. In the HE sector, they are mainly used for classroom or peer observation. According to Annum (2017), observation is an important method to collect qualitative data which requires visual and oral information. The discussion with the QAMs indicated that HoD and peer classroom observations are used for several reasons. These results support previous research stating that peer observations in HEIs could be used as a method for development or for QA evaluation (Jones and Gallen 2016).

HEIs also use performance evaluation methods, which are conducted at different levels of the institutions. This finding was discussed earlier by Miller (2016), who stated that educational institutions set different performance indicators. However, Lynch (2015) argued that institutions which rely on KPIs focus more on the results than on the process. Hence, it can be assumed that the focus of these HEIs might not be on values and other human factors but on the results. It was also found that HEIs use FGDs. According to the interviewed QAMs, FGDs are used to get detailed insights into stakeholders' perceptions of various academic and non-academic issues. Miller (2016) also stated that it is a method used to collect the attitudes and perceptions of stakeholders. It was observed that charts and diagrams are used by one HEI, which confirms the results found by the authors during the preliminary study (Al Amri, Jani, and Zubairi 2015). Miller (2016) also found that HEIs use flowcharts to draw the processes and charts to depict the organizational structure. However, they do not use the other types of charts and diagrams which are used in Total Quality Management (TQM), as stated by Iyer (2018), namely fishbone diagrams, Pareto charts, statistical process control charts, and scatter diagrams and plots. The QAMs mentioned that they use software tools and platforms to monitor the institutional data and produce statistical reports, which concurs with the findings of Miller (2016). Some QAMs stated that they use reports as a tool or an instrument for quality purposes, which was also discussed by Miller (2016).

By putting the results, data, and information together, HEIs end up with a large amount of data. All this information should provide strong support for decision making and quality enhancement. All QAMs stated that the results of the data gathered are discussed in formal meetings or presented as reports. Moreover, the data gathered by these instruments are analysed using summary statistics only, which include frequencies and standardized scoring methods, to measure the quality of HEIs. Therefore, the usage of the data collected appears to be rather basic, and HEIs could make better use of this information for planning and improvement. Hence, it can reasonably be assumed that the situation in some HEIs in Oman is similar to that described by Loukkola and Zhang (2010), where HEIs are good at collecting information but do not use it efficiently to make the required improvements.
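
To make concrete what an analysis limited to summary statistics looks like, the following minimal Python sketch computes a frequency table and standardized scores for a set of ratings. The response values are invented purely for illustration, the five-point coding (1 = very poor to 5 = very good) is an assumed numeric mapping of a five-point rating scale, and reading "standardized scoring" as z-scores is also an assumption, since the exact scoring method is not specified here.

```python
from collections import Counter
from statistics import mean, pstdev

# Hypothetical responses on a five-point scale (1 = very poor ... 5 = very good).
# These values are illustrative only and are not data from the study.
responses = [4, 5, 3, 4, 2, 5, 4, 3, 4, 5]


def frequency_table(values):
    """Return {rating: (count, percentage)} for every rating from 1 to 5."""
    counts = Counter(values)
    total = len(values)
    return {
        rating: (counts.get(rating, 0), 100 * counts.get(rating, 0) / total)
        for rating in range(1, 6)
    }


def standardized_scores(values):
    """Return z-scores: distance of each value from the mean, in standard deviations.

    Assumption: "standardized scoring" is interpreted here as z-scores.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All responses identical: no spread, so every z-score is zero.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


freq = frequency_table(responses)
z = standardized_scores(responses)

for rating, (count, pct) in freq.items():
    print(f"rating {rating}: n={count} ({pct:.0f}%)")
print(f"mean rating = {mean(responses):.2f}")
```

Output of this kind describes the distribution of responses but does not, on its own, connect the results to decisions or improvement actions, which is the gap in data usage noted above.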

CONCLUSION

This article presented part of the results obtained from interviewing the QAMs of five Omani HEIs. It identified the instruments used by HEIs in Oman to meet OAAA standards and examined their level of usage, the nature of the analysis, and the barriers faced. It was found that questionnaires and meetings are the most common instruments used to measure the efficacy of current practices. Additionally, checklists, observations, and performance evaluations are used for QA purposes. The QAMs also explained the methods followed at their HEIs to make use of the data gathered by each instrument. In terms of the type of analysis applied to these data to measure the quality of HEIs, it was found that the results are discussed in formal meetings and presented in reports. In addition, most of the HEIs seem to use only summary statistics and do not engage in deeper analyses and follow-ups. QAMs also mentioned that they face difficulties in making people understand the importance of using the results of these instruments for quality enhancement.

The study provides an in-depth analysis of the instruments used by HEIs for QA purposes in Oman, along with a discussion of the methods adopted for data analysis and the usage of data to enhance quality, an area which has received little attention, especially in the context of Oman. However, due to time and resource constraints, the sample of QAMs, which comprised five participants, was rather small. Despite this limitation, the study is unique as it evaluated the usefulness of the instruments used by QA professionals in the HE sector. It also presented some good practices in HEIs in Oman which can be used by other HEIs to enhance the quality of education and other support services. The findings of this study will add value to the enhancement of quality in HEIs in Oman, as they give insights into the common instruments used to meet the standards set by OAAA, the methods adopted to analyse data, and the barriers faced by HEIs.

In future, it will be worthwhile to examine the effectiveness of these instruments by linking them with the accreditation results of the targeted HEIs. This will help in finding out which instrument is most appropriate to manage, measure, and assure quality under each standard; such instruments could then be used uniformly by all HEIs in Oman and even by HEIs across the globe.

NOTES

1 . The technical definition of instrument in the context of this study refers to any tool, method, technique, or instrument used by HEIs to measure, control, manage, or enhance the quality of their academic or administrative services.

2 . QS World University Rankings.

3 . Times Higher Education World University Rankings.

4 . On a five-point rating scale ranging from very poor to very good.

5 . Except in one classification where the second highest HEI was selected and not the first highest to avoid biases as the first author worked as Head of Quality Assurance Office at this HEI.

6 . It is a HEI with a good standard and accredited by OAAA. It is not targeted in this study.

7 . It is a technical public college under the supervision of Oman Ministry of Manpower (MOMP).

8 . Internet Protocol Television.

REFERENCES

Abdullah, Firdaus. 2006. "The development of HEdPERF: A new measuring instrument of service quality for the higher education sector." International Journal of Consumer Studies 30(6): 569-581.

Aithal, P. S. 2015. "Internal Quality Assurance Cell and its Contribution to Quality Improvement in Higher Education Institutions: A Case of SIMS." GE-International Journal of Management Research 3: 70-83.

Akhter, Fahim and Yasser Ibrahim. 2016. "Intelligent Accreditation System: A Survey of the Issues, Challenges, and Solution." International Journal of Advanced Computer Science and Applications 7(1): 477-484.

Al Amri, Amal, Rohana Jani, and Yong Zubairi. 2015. "Measurement Tools used in Quality Assurance in Higher Education Institutions." OQNHE Conference 2015: Quality Management & Enhancement in Higher Education, Muscat, 24-25 February, 2015.

Al Sarmi, Abdullah M. and Zuhair A. Al-Hemyari. 2014. "Some statistical characteristics of performance indicators for continued advancement of HEIs." International Journal of Quality and Innovation 2(3/4): 285-309. doi: 10.1504/IJQI.2014.066385.

Al-Sarmi, A. M. and Z. A. Al-Hemyari. 2015. "Performance Measurement in Private HEIs: Performance Indicators, Data Collection and Analysis." Paper presented at the Proceedings of the Conference "The Future of Education".

Al Tobi, Abdullah Saif and Solane Duque. 2015. "Approaches to quality assurance and accreditation in higher education: A comparison between the Sultanate of Oman and the Philippines." Perspectives of Innovations, Economics and Business 15(1): 41-49.

ASQ see American Society for Quality.

American Society for Quality. 2017. "What Are Quality Assurance and Quality Control?" ASQ. http://asq.org/learn-about-quality/quality-assurance-quality-control/overview/overview.html.

Annum, Godfred. 2017. "Research Instruments for Data Collection." Educadium. http://campus.educadium.com/newmediart/file.php/137/Thesis_Repository/recds/assets/TWs/UgradResearch/ResMethgen/files/notes/resInstrsem1.pdf.

Barge, Scott and Hunter Gehlbach. 2012. "Using the theory of satisficing to evaluate the quality of survey data." Research in Higher Education 53(2): 182-200.

Brockerhoff, Lisa, Jeroen Huisman, and Melissa Laufer. 2015. "Quality in Higher Education: A literature review." CHEGG. Ghent University. Ghent. https://www.onderwijsraad.nl/publicaties/2015/quality-in-higher-education-a-literature-review/item7280.

da Costa Vieira, Roberto and Irene Raguenet Troccoli. 2012. "Perceived quality in higher education service in Brazil: the importance of physical evidence." InterSciencePlace 1(20).

Dužević, Ines, Anita Čeh Časni, and Tonći Lazibat. 2015. "Students' perception of the higher education service quality." Croatian Journal of Education: Hrvatski časopis za odgoj i obrazovanje 17(4): 37-67.

Dworkin, Shari L. 2012. "Sample size policy for qualitative studies using in-depth interviews." Archives of Sexual Behavior 41: 1319-1320.

Fosnacht, Kevin, Shimon Sarraf, Elijah Howe, and Leah K Peck. 2017. "How important are high response rates for college surveys?" The Review of Higher Education 40(2): 245-265.

Foster, S. Thomas. 2010. Managing Quality: Integrating the Supply Chain. 4th Edition. Pearson.

Goos, Maarten and Anna Salomons. 2017. "Measuring teaching quality in higher education: Assessing selection bias in course evaluations." Research in Higher Education 58(4): 341-364. doi: 10.1007/s11162-016-9429-8.

Hamad, Murtadha M. and Shumos T. Hammadi. 2011. "Quality Assurance Evaluation for Higher Education Institutions." International Journal of Database Management Systems (IJDMS): 88-98.

Iyer, Vijayan Gurumurthy. 2018. "Total Quality Management (TQM) or Continuous Improvement System (CIS) in Education Sector and Its Implementation Framework towards Sustainable International Development." 2018 International Conference on Computer Science, Electronics and Communication Engineering (CSECE 2018).

Jones, Mark H. and Anne-Marie Gallen. 2016. "Peer observation, feedback and reflection for development of practice in synchronous online teaching." Innovations in Education and Teaching International 53(6): 616-626.

Krause, Jackie, Laura Portolese Dias, and Chris Schedler. 2015. "Competency-based education: A framework for measuring quality courses." Online Journal of Distance Learning Administration 18(1): 1-9.

Loukkola, Tia and Thérèse Zhang. 2010. Examining quality culture: Part 1-Quality assurance processes in higher education institutions. Brussels: European University Association.

Lynch, Kathleen. 2015. "Control by numbers: New managerialism and ranking in higher education." Critical Studies in Education 56(2): 190-207.

McGahan, Steven J., Christina M. Jackson, and Karen Premer. 2015. "Online course quality assurance: Development of a quality checklist." InSight: A Journal of Scholarly Teaching 10: 126-140.

Mijić, Danijel, and Dragan Janković. 2014. "Using ICT to Support Alumni Data Collection in Higher Education." Croatian Journal of Education: Hrvatski časopis za odgoj i obrazovanje 16(4): 1147-1172.

Miller, Barbara A. 2016. Assessing organizational performance in higher education. John Wiley & Sons.

National Institute of Standards and Technology. 2015. Baldrige Performance Excellence Program (Education) 2015-2016.

National Research Council. 2012. Improving measurement of productivity in higher education. Washington, DC: National Academies Press.

OAAA see Oman Academic Accreditation Authority.

Oman Academic Accreditation Authority. 2016. "Institutional Standards Assessment Manual."

Oman Academic Accreditation Authority. 2016. "Review Schedule." http://www.oaaa.gov.om/Institution.aspx#Inst_Review. (Accessed 2 January 2020).

Parasuraman, Ananthanarayanan, Valarie A. Zeithaml, and Leonard L. Berry. 1988. "Servqual: A multiple-item scale for measuring consumer perc." Journal of Retailing 64(1): 12.

Parasuraman, Ananthanarayanan, Valarie A. Zeithaml, and Leonard L. Berry. 1985. "A conceptual model of service quality and its implications for future research." Journal of Marketing 49(4): 41-50.

Schindler, Laura, Sarah Puls-Elvidge, Heather Welzant, and Linda Crawford. 2015. "Definitions of quality in higher education: A synthesis of the literature." Journal of Higher Learning Research Communications 5(3): 3-13.

Sclater, Niall, Alice Peasgood, and Joel Mullan. 2016. "Learning analytics in higher education." London: Jisc. (Accessed 8 February 2017).

Singh, Vikram, Grover Sandeep, and Ashok Kumar. 2008. "Evaluation of quality in an educational institute: A quality function deployment approach." Educational Research and Review 3(4): 162-168.

Sunder M, Vijaya. 2016. "Constructs of quality in higher education services." International Journal of Productivity and Performance Management 65(8): 1091-1111.

Varouchas, Emmanouil, Miguel-Ángel Sicilia, and Salvador Sánchez-Alonso. 2018. "Academics' Perceptions on Quality in Higher Education Shaping Key Performance Indicators." Sustainability 10(12): 4752.

Widrick, Stanley M., Erhan Mergen, and Delvin Grant. 2010. "Measuring the dimensions of quality in higher education." Total Quality Management 13(1): 123-131. doi: 10.1080/09544120120098609.