South African Journal of Higher Education

On-line version ISSN 1753-5913

S. Afr. J. High. Educ. vol.34 no.4 Stellenbosch 2020

http://dx.doi.org/10.20853/34-4-3607

GENERAL ARTICLES

Early assessment as a predictor of academic performance: an analysis of the interaction between early assessment and academic performance by first-year accounting students at a South African university

A. Bruwer (I); J. M. Ontong (II)

(I) School of Accountancy, Stellenbosch University, Stellenbosch, South Africa; e-mail: bruwera@sun.ac.za / https://orcid.org/0000-0002-0951-8397

(II) School of Accountancy, Stellenbosch University, Stellenbosch, South Africa; e-mail: ontongj@sun.ac.za / https://orcid.org/0000-0001-5097-8988

ABSTRACT

Current emphasis on students' academic adaptation in higher education necessitates the evaluation of predictors of successful preparation of first-year students. This study evaluated the implementation of early assessment (EA) in two first-year financial accounting courses at a South African university, namely an introductory financial accounting course, aimed at students without prior exposure to accounting, and a professional body accredited accounting course, aimed at students with prior exposure to accounting. This module-specific benchmark assessment, early in the academic year, is often used as a predictor of preparation, adaptation and potential future academic performance. Given the discontinuation of a university-wide EA protocol within a faculty, the academic contribution of the EA has been questioned. The study's focus comprised two research questions, namely whether the EA can be used as a predictor of future academic performance of two different academic performance groups and whether students in a lower academic performance group are able to achieve success despite a low result in the EA. The research methodology included an analysis of variances to determine the correlation between the early assessment and either mid-year or final marks, as well as significance evaluation of the measured variance analysis using the Bonferroni test. The findings suggest that whilst the EA could potentially be used as an early warning sign for at-risk academically low performing students, the EA could also result in a misleading representation to students in the high academic performance category. Principally, the EA was found not to be a reliable predictor of future academic performance. In addition, the mixed results obtained from the evaluation of the effect of the nature and format of the assessment suggested that it had a low and non-meaningful effect on the predictive value of the early assessment. The fact that students in the academically low performance group were largely able to pass the module, however, suggests the success of intervention utilising the EA as an early warning. Higher education module developers could therefore consider the implementation of an appropriate EA in various undergraduate modules, based on the findings.

Keywords: early assessment, first-years, financial accounting, academic success, predictor

INTRODUCTION

Within the first year of their studies at a South African university, students face various challenges, including adapting to the university environment (Mahlangu and Fraser 2017, 104). Students cannot comprehensively prepare to face every challenge expected on their journey in higher education (Sander et al. 2000, 309). Moreover, each student experiences and reacts to the multitude and uncertainty of the challenges differently (Steenkamp, Baard and Frick 2009, 113). Some of the main factors considered by students to be of help in their attempt to face these challenges are their study habits and motivation (Steenkamp et al. 2009, 113). In order to better prepare first-year students for these challenges in higher education and create an expectation as to what the future in a specific module might hold, Stellenbosch University implemented the early assessment (EA) system across all faculties within the University (Stellenbosch University 2017). The EA is an assessment that takes place early in the academic year, the results of which aim to support the development of appropriate study habits. The main function of the EA is to give students an indication of what to expect in a module regarding possible future academic performance. The mark achieved in the EA therefore acts as a potential predictor of future academic performance, everything else remaining constant. The EA further aims to serve as a potential early warning to lower academic performing students to seek academic intervention, as well as a confirmation of potential ability to higher academic performing students.

Although the EA protocol is a university-wide protocol within Stellenbosch University, the academic contribution of the EA within higher education has been questioned. As a result, the Faculty of Engineering at Stellenbosch University discontinued the use of the EA as an assessment tool. The continued use of the EA within the other faculties has also been noted as being under consideration for revision. The discontinuation of the EA by the Faculty of Engineering and the prospective view of other faculties further indicate that there are mixed views on the efficiency of an EA. This study will therefore attempt to add to the literature on whether the EA should be used as an efficient predictor of future academic performance. As part of its research questions, this study will analyse whether students in the lower academic performance category are able to utilise academic resources and/or interventions to promote success within the module. Within the Financial Accounting (FA) first-year modules at Stellenbosch University, the EA has historically taken place approximately six weeks into the academic year. Although the timing of the EA may be considered early, with a limited scope of the content of the year module covered, the EA policy suggests that the results of the EA should provide students with a significant understanding of their potential academic ability and could act as an early warning system.

The focus of this study was two first-year FA modules within Stellenbosch University, namely Financial Accounting 178 (FA178) and Financial Accounting 188 (FA188). FA178 forms part of the SAICA accredited Bachelor of Accounting programme, whilst FA188 forms part of all other undergraduate degree programmes offered by the Economic and Management Sciences faculty. Students enrolled in FA178 are expected to have prior accounting exposure whilst this is not a requirement for FA188. The period covered in this study is 2016, 2017 and 2018 and the sample includes all students registered for the two respective modules.

For purposes of the analysis, consistent with the notion that the EA acts as an early warning mechanism for low academic performers and a confirming tool for high academic performers, the data were analysed per module, per year, and further divided into two academic performance categories, namely:

• Low academic performing students - This classification includes students with an EA mark of less than 50 per cent. Students in this category are expected to apply the available academic resources and/or interventions within the university or outside, such as external tutoring, in order to increase their performance. The expectation is that the EA does not act as a predictor of future academic performance but as a catalyst to induce the use of academic aids and programmes to adapt to the higher education model.

• High academic performing students - This classification includes students with an EA mark of 50 per cent or more. Students in this category are expected to make use of the EA mark as an indication of how they are adapting to higher education and the pace applied in the course. The EA is expected to be a predictor of future academic performance for these students.
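The two-way classification above can be sketched in a few lines of Python. This is only an illustration of the 50 per cent threshold described in the text; the function name `classify_by_ea` and the sample marks are hypothetical and are not taken from the study's data.

```python
PASS_MARK = 50.0  # threshold stated in the text (per cent)


def classify_by_ea(ea_mark: float) -> str:
    """Return the performance category implied by an EA mark.

    Below 50 per cent is 'low'; 50 per cent or more is 'high'.
    """
    return "low" if ea_mark < PASS_MARK else "high"


# Hypothetical marks, for illustration only (not data from the study).
sample_marks = [32.0, 49.9, 50.0, 78.5]
categories = [classify_by_ea(mark) for mark in sample_marks]
print(categories)
```

Note that a mark of exactly 50 per cent falls into the high academic performance category, in line with the definitions above.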

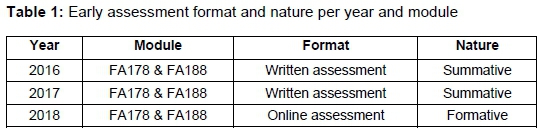

For the EA to fulfil its goal for the two groups, it is imperative that it presents accurate and reliable predictive results. The reliability of the EA is influenced by the format and nature of the assessment. For the past three years (2016-2018), the EAs within the FA178 and FA188 modules took various forms in both format and nature, as shown in Table 1.

Regarding format, the EA changed from written assessments in 2016 and 2017 to an online assessment in 2018. In terms of the nature of the assessments, changes entailed being summative in 2016 and 2017 and formative in 2018. The implementation of a new flexible assessment regulation within the Economic and Management Sciences Faculty, effective from the 2018 academic year, prompted a review of the assessment method within the module and motivated the changes in the EA. The reliability of the EA results may be influenced by its format (electronic or written) and nature (formative or summative).

The effectiveness of the EA as risk identifier or predictor and its reliability based on the format and nature have been unclear and thus provided the platform for the research study performed by the team. Consequently, two research questions were identified:

• Research question 1: Is the EA mark, based on performance category classification, a predictor of future academic performance in the module, and could the format of the assessment influence the predictability?

• Research question 2: Does a low performance in the EA motivate students to seek intervention to improve their marks and achieve success in the module?

The study aims to contribute to the existing literature by providing evidence on whether early assessment can be utilised as a true reflection or predictor of either the actual mid-year June progress mark or final year-end marks achieved by students. This took into consideration the limited nature of content covered, as well as the characteristics of the format and nature of the assessment. Further to this, the results of this study give more clarity on whether students' predicted performance based on early assessment marks can be outperformed. The aim is for the low performing student, as identified by the EA, to achieve academic success within the module. The findings of this study could assist other module and course developers in determining the application of early assessments in their modules, and give guidance regarding the nature and format of the EA.

Following the discussion on the research questions and background to the EA concept, the existing literature on the use of early assessments as a benchmark is reviewed. Subsequently the descriptive and statistical analysis of the data obtained is presented. The study concludes with a summary of the findings and suggestions for future research on the use of EA as predictor in higher education.

LITERATURE REVIEW

The existing literature on the use of a module-specific early assessment as a predictor and indicator of student academic performance within a module at a higher education institution is very limited. It is, however, noted that a multitude of prior research studies have highlighted the importance of assessments for students' learning (Watson et al. 2007, 1; Rebele et al. 1998, 179). Literature suggests that the assessment opportunities presented to students are two-fold: they could be regarded as an opportunity to induce learning as well as a motivation, inducing a commitment to learn (Yorke 2003, 477). The motivation factor mainly aims at the academically low performing groups where the EA is utilised as a risk identifier and consequently an early warning mechanism. The motivation obtained from the EA would aim to encourage students with a lower measured level of academic skill to seek opportunities to develop their skills and increase their chance of passing the module (Steenkamp et al. 2009, 113).

Furthermore, prior research indicates that early assessment in the form of benchmark tests could potentially act as an indicator of a student's academic readiness for higher education (Howell, Kurlaender, and Grodsky 2010, 726). The benchmark test research performed by Howell et al. (2010, 726) at an American university indicated that students with a higher level of measured academic skills have a bigger chance of graduating from higher education. However, the benchmark test has the limitation of being based on prior high school knowledge and not on the knowledge and adaptation required by the module (Howell et al. 2010, 726). To address this limitation, Stellenbosch University adopted and implemented an EA system in 2007 (Stellenbosch University 2017). To date, limited research has been conducted on the success of this type of assessment within the South African higher education milieu.

Within the South African context, it has previously been found that students' prior exposure to accounting related subjects, such as mathematics and economic sciences, could influence their performance in the introduction to accounting module (Steenkamp et al. 2009, 113). It has further become evident that changes in the South African primary and secondary education system have amplified the challenges of student adaptation when entering higher education (Basson 2004, 31). To identify the extent to which students need to adapt when entering from the school system, South African universities implemented the national benchmark tests (NBT). Stellenbosch University uses the NBT for selection purposes in specific programmes and placement purposes with regard to extended programmes. Of note is that previous research suggests that, whilst the NBT could complement the selection processes, it could not be used as an academic predictor of performance (Van Walbeek, Rankin, Sebastiao and Schöer 2012, 564). Van Walbeek et al. (2012, 564) showed that the use of the National Senior Certificate (NSC) marks was a more accurate predictor of performance in an economic module based on quantitative and academic literacy.

The research by Howell et al. (2010, 726) and Van Walbeek et al. (2012, 564) thus sets a foundation for this study and supports the objectives of an EA as envisioned by the EA regulation and implementation (Stellenbosch University 2017). The study focused on the academic readiness of students in accounting-specific modules, with a particular focus on readiness for analytical thinking rather than general academic skill assessed by a benchmark test. It therefore contributes to the existing literature by providing research on module-specific EA based on content of the first-year financial accounting students.

RESEARCH METHODOLOGY

The study utilised quantitative data obtained from study records of students enrolled in FA178 and FA188 modules. The records comprised EA marks, June progress marks, and final year-end marks for the years 2016, 2017 and 2018. The research performed combined a descriptive and statistical analysis approach on the population of students which was limited to only include those students who obtained an EA mark in the specific year, and excluded students who discontinued the module or obtained a zero June progress or final year-end mark due to insufficient assessment opportunities used.
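The inclusion and exclusion rules described above can be sketched as a simple filter. This is a minimal illustration under stated assumptions: the record layout (`StudentRecord`), the use of `None` to represent a missing mark or a discontinued module, and the sample records are all hypothetical; the study does not describe how its records were stored.

```python
from typing import NamedTuple, Optional


class StudentRecord(NamedTuple):
    # Hypothetical record layout; None marks a missing value (assumption).
    student_id: str
    ea: Optional[float]     # early assessment mark
    june: Optional[float]   # June progress mark
    final: Optional[float]  # final year-end mark


def in_population(record: StudentRecord) -> bool:
    """Apply the inclusion and exclusion rules described in the text."""
    if record.ea is None:
        return False  # no EA mark obtained in that year
    if record.june is None or record.final is None:
        return False  # treated here as having discontinued the module
    if record.june == 0 or record.final == 0:
        return False  # zero mark due to insufficient assessment opportunities
    return True


# Hypothetical records, for illustration only.
records = [
    StudentRecord("s1", 62.0, 58.0, 55.0),
    StudentRecord("s2", None, 40.0, 45.0),
    StudentRecord("s3", 35.0, 0.0, 0.0),
    StudentRecord("s4", 48.0, 52.0, None),
]
population = [r.student_id for r in records if in_population(r)]
print(population)
```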

To address the two research questions identified for purposes of this study, the following methodology was applied:

• Research question 1: Is the EA mark, based on performance category classification, a predictor of future academic performance in the module, and could the format of the assessment influence the predictability?

The study analysed the relationship between students' EA marks and their June progress marks, as well as between students' EA marks and their final year-end marks. This was done to evaluate EA as a predictor of future academic performance in terms of statistically significant relationships between the marks achieved. Statistical significance was determined by applying an analysis of variances (ANOVA) to the variance between EA marks and either June progress or year-end final marks for the 2016, 2017 and 2018 academic years in the FA178 and FA188 modules offered at Stellenbosch University. A 95 per cent confidence level was used and all items with p-values <0.05 were deemed statistically significant; these are indicated in bold throughout the study. Further statistical analysis was done using a Bonferroni test to determine the statistical significance of the variance of the EA mark and students' June progress marks or year-end final marks.
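To make the ANOVA step more concrete, the sketch below computes a one-way ANOVA F statistic using only the Python standard library. It rests on several assumptions that the study does not confirm: the three sets of marks are treated as independent groups (the study may have used a repeated-measures design, since the same students wrote all three assessments), the function name `one_way_anova_f` is hypothetical, and the marks are invented for illustration. The p-value is not computed here; in practice a statistical package would supply it from the F distribution.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists of marks, one list per assessment.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual marks around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical marks, for illustration only (not data from the study).
ea_marks = [60.0, 70.0, 80.0]
june_marks = [55.0, 65.0, 75.0]
final_marks = [50.0, 60.0, 70.0]
f_stat, df_b, df_w = one_way_anova_f([ea_marks, june_marks, final_marks])
print(f_stat, df_b, df_w)
```

A larger F value indicates that the mean marks of the three assessments differ more than would be expected from the spread of marks within each assessment.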

• Research question 2: Does a low performance in the EA motivate students to seek intervention to improve their marks and achieve success in the module?

All students with EA marks of less than 50 per cent had been included in the low academic performance group. The final marks of these students in the two FA modules were obtained and categorised into two groups, namely (1) students who passed the module (achieved a year-end final mark of 50% or more) and (2) students who did not pass the module. The number of students in each category was expressed as a percentage of the total low academic performance group to determine to what extent students who did not pass the EA were able to seek academic intervention and improve their marks.
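The pass-rate calculation for the low academic performance group can be sketched as follows. The function name `low_group_pass_rate` and the sample marks are hypothetical; only the 50 per cent thresholds come from the text.

```python
PASS_MARK = 50.0  # threshold stated in the text (per cent)


def low_group_pass_rate(marks):
    """Percentage of low-EA students who passed the module.

    `marks` is a list of (ea_mark, final_mark) pairs. Returns None when
    no student falls in the low academic performance group.
    """
    low_group = [(ea, final) for ea, final in marks if ea < PASS_MARK]
    if not low_group:
        return None
    passed = sum(1 for _, final in low_group if final >= PASS_MARK)
    return 100.0 * passed / len(low_group)


# Hypothetical (EA, final) pairs, for illustration only.
sample = [(30.0, 55.0), (45.0, 40.0), (48.0, 50.0), (20.0, 35.0), (70.0, 80.0)]
rate = low_group_pass_rate(sample)
print(rate)
```

In this invented sample, four students fall in the low group and two of them reach a final mark of 50 per cent or more, so the function reports a pass rate of 50 per cent for the group.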

FINDINGS

The summary of the findings commences with a descriptive analysis of the data, followed by an inferential statistical analysis per research question.

Descriptive analysis

Table 2 summarises the descriptive characteristics of students enrolled in the FA178 and FA188 modules, indicated per year and in terms of the mean EA mark, June progress mark (June) and year-end final mark (Final). The number of students included in the population of each category is also shown.

The descriptive analysis showed that, with the exception of 2016 for FA178, the mean EA mark is higher than the mean June progress mark and mean final mark. This suggests that students achieve higher mean marks in the EA than in later assessments. This trend was further analysed statistically.

Statistical analysis

The findings of the statistical analyses performed are presented per research question and further analysed and presented per module and year.

• Research question 1: Is the EA mark, based on performance category classification, a predictor of future academic performance in the module, and could the format of the assessment influence the predictability?

In order to determine if the EA mark serves as a potential predictor of future academic performance in the two modules, an ANOVA was performed on the variance between the EA marks and June progress marks as well as the variance between the EA mark and final year-end mark per module. This was done per year and per academic performance classification. The graphical depiction of the results of the ANOVA (Figures 1 and 2) and the results of the Bonferroni test (Tables 3, 4, 5 and 6) are shown below. The results are summarised and discussed per module, starting with FA178 and followed by FA188.
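As a rough illustration of how a Bonferroni adjustment works, the sketch below multiplies each raw p-value by the number of comparisons and caps the result at 1. The number of comparisons per family (three here), the raw p-values and the function name `bonferroni_adjust` are assumptions made for illustration; the study does not report these details.

```python
ALPHA = 0.05  # significance level stated in the text


def bonferroni_adjust(p_values):
    """Return Bonferroni-adjusted p-values for a family of comparisons.

    Each raw p-value is multiplied by the number of comparisons in the
    family and capped at 1.0.
    """
    m = len(p_values)
    return {name: min(1.0, p * m) for name, p in p_values.items()}


# Hypothetical raw p-values, for illustration only.
raw = {"EA vs June": 0.2, "EA vs Final": 0.004, "June vs Final": 0.5}
adjusted = bonferroni_adjust(raw)
significant = [name for name, p in adjusted.items() if p < ALPHA]
print(adjusted)
print(significant)
```

With these invented inputs only the comparison between the EA and final marks stays below the 0.05 threshold after adjustment.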

Results for FA178

The graphical representation of the ANOVA (Figure 1) below shows the findings in this module. Letters are assigned to the plot points on the ANOVA graph: plot points that do not share a letter differ significantly (p-value <0.05), whilst plot points with corresponding letters do not.

It is evident that in the majority of occurrences, the letter representation for the EA differed from the letter representations for the June progress and final year-end marks. This indicates that the mean EA marks differed significantly from the mean June progress and mean final year-end marks. However, significant statistical similarity was noted between the EA mark and June progress mark in 2016 for high academic performance and between the EA mark and June progress mark in 2017 for low academic performance group students. This was further analysed per academic performance category.

Results within the high academic performance category of FA178

Consistent with Figure 1, Table 3 shows that within the high academic performance category, the EA mark was statistically different to the June progress and final year-end mark for all years, with the exception of the EA and June progress in 2016. The mean EA mark was higher than the mean June progress mark and mean year-end final mark for all years, suggesting that the EA marks provided students in the high academic performance group with an unrealistic expectation of future academic performance.

The results of the Bonferroni test, included in Table 3, further support the effect of the EA reflected in the depiction of the ANOVA in Figure 1. The difference between the EA and June progress mark in 2016 had a p-value of 0.360, suggesting no significant difference. The similarity in the results of the EA and June progress mark in 2016 might suggest that written summative assessments provide a better predictor of academic performance, as no similarity was noted in the online formative assessments.

Results within the low academic performance category of FA178

Consistent with Figure 1, Table 4 shows mixed results within the low academic performance category. The EA mark is statistically different to the June progress and final year-end mark for 2016, whilst for 2017 the findings noted no statistically significant differences. The statistically significant difference between the EA marks and both the June progress mark and the year-end final mark, however, reappeared in 2018. It is further noted that the mean EA mark is lower than the mean June progress mark in all three years and lower than the mean year-end final mark in 2016 and 2018 (conversely, in 2017 the mean EA mark is higher than the mean final mark). The mean final mark, however, does not exceed 50 per cent, suggesting that a large number of these students were not able to achieve academic success in the module.

Given the statistical similarity in 2017, it is noteworthy that the EA, June progress and final year-end marks for 2017 were based on summative written assessments, suggesting that for the low academic performance group a written summative test provides a more accurate and reliable statistical predictor of future academic performance within a module.

Results for FA188

The findings of the ANOVA, represented in Figure 2 below, indicate the effect of the EA in the FA188 module. Letters are assigned to the plot points on the ANOVA graph: plot points that do not share a letter differ significantly (p-value <0.05), whilst plot points with corresponding letters do not.

It is noted that, for the high academic performance category, the letter representations of the EA marks differed from those of the June progress and final year-end marks in all three years. This indicates that the mean EA marks differed significantly from the mean June progress and mean final year-end marks. Within the low academic performance category, all occurrences had significant statistical similarity. These results were further analysed per academic performance category and are presented below. The study also evaluated the findings per academic group based on the results of the Bonferroni test as included in Table 5 and Table 6.

High academic performance category - FA188

Within the high academic performance category, the EA mark was statistically different to the June progress and final year-end marks for all years. The findings, consistent with Figure 2 above, support the results of the Bonferroni test, as included in Table 5 below. Consistent with the findings of FA178, it is noted that the mean EA mark was higher than the mean June progress and mean year-end final mark for all years.

The results shown in Figure 2 and Table 5 suggest that the nature and format of the EA do not have a significant influence on the reliability of the EA as a predictor of future academic performance, as consistent results were obtained in FA188 for all years.

Low academic performance category - FA188

Within the low academic category, the EA mark is consistently statistically similar to both the June progress mark and final year-end mark for all years. The findings, as shown in Figure 2 above, are supported by the results of the Bonferroni test, with p-values above 0.05, as included in Table 6 below. Consistent with the findings of FA178, the mean year-end final mark for students with an EA mark of below 50 per cent also suggests that a large number of students in this performance category were not able to pass the module.

It is noteworthy that the assessment nature and format of the EA were different in 2016 and 2017 from that in 2018, being summative and written in 2016 and 2017 whilst formative and online in 2018. Evidently, the results suggest that format and nature do not play a significant role in the reliability of the EA marks as potential predictor of future academic performance.

High-level analysis of overall findings to address research question 1

The findings are mixed on determining whether the EA can be used as a reliable predictor of future academic performance. In the FA178 module, there was a significant statistical difference for eleven out of the twelve instances, suggesting that the EA is not a reliable predictor of future academic performance for both the high and low academic performance categories. However, in the low academic performance category within FA188, the findings suggest that the EA is a potential predictor of future academic performance, with the EA mark being statistically similar to either the June progress or year-end final mark for five out of six instances. Yet, for the high academic performance category within FA188, the results of the EA differed statistically significantly in all six instances, suggesting that the EA is not a reliable predictor of future academic performance for the high academic performance category.

The findings further suggest that within the high academic performance category of both modules, the nature and format of the EA did not have an impact on improving the predictability of the assessment. Within the low academic performance category of both modules, increased instances of statistical similarity were noted in the findings between the EA and either June progress or final year-end marks. This finding therefore suggests no clear preference in nature or format of assessments for the low academic performance category. These findings further supported the need to analyse the data in terms of research question 2 to determine whether students who failed the EA were able to improve their marks in order to eventually achieve success in the module.

Analysis of data to answer research question 2

• Research question 2: Does a low performance in the EA motivate students to seek intervention to improve their marks and achieve success in the module?

Data of students in the low academic performance group were further analysed to answer the second research question. The final year-end marks of all students with EA marks lower than 50 per cent in the two FA modules were obtained and categorised into two groups, namely (1) students who passed the module and (2) students who did not pass the module. The number of students in each category was calculated and indicated in terms of a percentage of the total low academic performance group. This analysis, shown in Table 7, illustrates whether failure to pass the EA served as motivation for students to seek academic intervention to promote their success in the module.

Markedly, the percentage pass rate of 15 per cent in FA188 in 2018 is significantly lower than the other pass rates observed. A possible reason for this is that the mean EA mark in 2018 of 29.722 per cent differs significantly from the 2016 and 2017 mean EA marks of 36.868 per cent and 37.212 per cent. The mean lower performance category students therefore required a larger positive improvement in their subsequent marks in order to be academically successful in the module. The analysis further suggests that reduced mean EA marks may be a result of the change in nature and format of the EA from a written summative assessment to an online formative assessment in 2018.
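The size of the improvement implied by these mean EA marks can be worked out directly. The sketch below uses the three mean EA marks quoted above and the 50 per cent pass mark; comparing a group mean with the pass mark is a simplification made here for illustration, since the final mark is not simply a repeat of the EA.

```python
PASS_MARK = 50.0  # pass threshold stated in the text (per cent)

# Mean EA marks of the FA188 low academic performance group, as quoted above.
mean_ea_low_fa188 = {2016: 36.868, 2017: 37.212, 2018: 29.722}

# Percentage points the average low-group student would need to gain
# to reach the pass mark.
gap_to_pass = {
    year: round(PASS_MARK - mean_mark, 3)
    for year, mean_mark in mean_ea_low_fa188.items()
}
print(gap_to_pass)
```

On this reading, the average student in the 2018 low group needed to gain roughly 20 percentage points, compared with roughly 13 percentage points in 2016 and 2017.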

The results of this analysis, as shown in Table 7, suggest that certain students who had failed the EA were able to improve their marks in order to be academically successful in the module. The majority (more than 50 per cent) of students in the low academic performance category were still able to pass the module in all years except 2017 (FA178) and 2018 (FA188). This suggests that these students had been able to adopt academic interventions in a timely manner, based on their EA mark, in order to ensure academic success in their first year. The early warning provided by the EA mark had therefore been successful in assisting with future academic performance.

Further research is suggested to determine whether students who did not pass the module despite this early warning had made use of all the academic interventions available.

CONCLUSION

The findings of this study suggest, for both first-year accounting modules, that although there are instances where the early assessment serves as a reliable predictor of future academic performance, the results are mixed per module and per year. This suggests that, on an overall basis, the EA is not a reliable predictor of future academic performance and might not be a suitable benchmark test within higher education. It is, however, noteworthy that within the low academic performance category the EA marks largely serve as a reliable predictor of future performance as opposed to that within the high academic performance classification.

The high academic performance category on average experienced a decrease in future academic performance, observed mostly in the module that required prior exposure to accounting. However, for the most part, the EA was found to be a reliable predictor of future academic performance within the academically low performance group. The findings further indicate that in all instances the mean EA mark for the high academic performance group was higher than the mean June progress as well as the mean final year-end mark, whereas in the low academic performance group the movement varied. This suggests that whilst the EA can serve as an early warning mechanism within the low academic performance group, it could conversely create misleading expectations in students in the high academic performance category regarding their future academic performance within the module. Further analysis of the low academic performance group showed that the majority of students who had not passed the EA were still capable of passing the module. This could be due to various interventions available within the modules. As mentioned, this phenomenon presents opportunities for future research.

Finally, the nature and format of the EA, being either summative or formative, and either a written or an online assessment, were not found to determine the reliability of the predictive value of the EA for future academic performance. Assessment policies on the implementation of the EA and its nature and format could benefit from considering the variables found in the study in the rollout of this module-specific benchmark test within higher education. The implication of these findings for higher education is the need for the review and potential modification of current assessment policies and regulations. The findings suggest that module planners will need to critically analyse the tools used to measure student readiness and adaptation within the first year of higher education. In conclusion, it is critical that the EA, as an assessment tool, be properly explained to and understood by all relevant stakeholders in order to be utilised effectively.

REFERENCES

Basson, R. 2004. Interpreting an integrated curriculum in a non-racial, private, alternative secondary school in South Africa. South African Journal of Education 24(1): 31-41.

Howell, J., M. Kurlaender and E. Grodsky. 2010. Postsecondary preparation and remediation: Examining the effect of the early assessment program at California State University. Journal of Policy Analysis and Management 29(4): 726-748.

Mahlangu, T. P. and W. J. Fraser. 2017. The academic experiences of grade 12 top achievers in maintaining excellence in first-year university programmes. South African Journal of Higher Education 29(4): 104-118.

Rebele, J. E., B. A. Apostolou, F. A. Buckless, J. M. Hassell, L. R. Paquette and D. E. Stout. 1998. Accounting education literature review (1991-1997). Part II. Students, educational technology, assessment and faculty issues. Journal of Accounting Education 16(2): 179-245.

Sander, P., K. Stevenson, M. King and D. Coates. 2000. University students' expectations of teaching. Studies in Higher Education 25(3): 309-323.

Steenkamp, L. P., R. S. Baard and B. L. Frick. 2009. Factors influencing success in first-year Accounting at a South African university: A compromise comparison between lecturers' assumptions and students' perceptions. South African Journal of Accounting Research 23(1): 113-140.

Stellenbosch University. 2017. Early assessment: protocol. http://www.sun.ac.za/english/learning-teaching/ctl/Documents/Vroeë%20assessering%20protokol_Eng.pdf (Accessed June 2019).

Van Walbeek, C., N. Rankin, C. Sebastiao and V. Schöer. 2012. Predictors of academic performance: National Senior Certificate versus National Benchmark Test. South African Journal of Higher Education 26(3): 564-585.

Watson, S. F., B. Apostolou, J. M. Hassell and S. A. Webber. 2007. Accounting education literature review (2003-2005). Journal of Accounting Education 25(1-2): 1-58.

Yorke, M. 2003. Formative assessment in higher education: Moves towards theory and the enhancement of pedagogic practice. Higher Education 45(4): 477-501.