SA Orthopaedic Journal

On-line version ISSN 2309-8309

Print version ISSN 1681-150X

SA orthop. j. vol.17 no.3 Centurion Aug./Sep. 2018

http://dx.doi.org/10.17159/2309-8309/2018/v17n3a2

EDUCATION

The FC Orth(SA) final examination: how effective is the written component?

Swanepoel S (I); Dunn R (II); Klopper J (III); Held M (IV)

I. MBChB(UP), Registrar, Department of Orthopaedic Surgery, Faculty of Health Sciences, University of Cape Town, South Africa

II. MBChB(UCT), MMed(Orth), FC Orth(SA); Professor and Head of Department of Orthopaedic Surgery, Faculty of Health Sciences, University of Cape Town, South Africa

III. MBChB, FCS(SA); Department of Surgery, Faculty of Health Sciences, University of Cape Town, South Africa

IV. MD, PhD(Orth), FC Orth(SA), Department of Orthopaedic Surgery, Faculty of Health Sciences, University of Cape Town, South Africa

ABSTRACT

BACKGROUND: To determine the pass rate of the final exit examination of the College of Orthopaedic Surgeons of South Africa [FC Orth(SA)] and to assess the correlation of the written component with the clinical and oral component.

METHODS: Results of candidates who participated in the FC Orth(SA) final examination during a 12-year period from March 2005 through to November 2016 were assessed retrospectively. Pass rates and component averages were analysed using descriptive and inferential statistics. Spearman's rho test was used to determine the correlation between the components.

RESULTS: A total of 399 candidates made 541 attempts at the written component of the examination; 71.5% of attempts were successful and 387 candidates were invited to the clinical and oral component, of which 341 (88%) candidates were certified. The second-attempt pass rate for those candidates who wrote the written component again was 42%. The average annual increase in the number of certified candidates was 8.5%. The overall certifying rate increased by 1.5% for this period. Invited candidates who scored less than 54% for the written component were at significant risk of failing the clinical and oral component. The written component showed weak correlation with the clinical and oral component (r=0.48).

CONCLUSION: While the written component was found to be an effective gatekeeper, as evidenced by a high eventual certifying rate, the results of this component of the FC Orth(SA) final examination did not correlate strongly with the performance in the clinical and oral component. This finding confirms the value of the written component as part of a comprehensive assessment for the quality of orthopaedic surgeons.

Level of evidence: Level 4.

Key words: certification examinations, postgraduate training, orthopaedic surgery

Introduction and background

The urgent need to produce well-trained surgeons in low- and middle-income countries (LMICs) has recently been highlighted by the Lancet Commission on Global Surgery.1 A crucial requisite for evaluating the quality of surgeons produced is a comprehensive specialist exit examination which confirms that a candidate is fit to practise. In South Africa, orthopaedic surgical training is under the supervision of eight academic institutions. The Health Professions Council of South Africa (HPCSA) has appointed the Colleges of Medicine of South Africa as the designated unitary examination body to evaluate and certify successful candidates of the College of Orthopaedic Surgeons of South Africa [FC Orth(SA)] final examination. Candidates need to complete training time, produce a dissertation and pass the final composite examination to become a specialist.

Although this format seems well suited to assessing the complexity of surgical competence, few studies in the surgical domain describe examination processes; most of the literature is devoted to the psychometric adequacy of various assessment methods.2,3 Furthermore, the composite examination format is labour- and resource-intensive, and its feasibility depends largely on cost, examiners' time, facilities and funds, especially in LMICs.4,5 With resource limitations a reality in sub-Saharan Africa, the focus is on minimising the administrative burden for examination bodies, which requires constantly re-evaluating and choosing examination components that can still deliver the desired quality in selecting our surgeons.

The overall aim of this study was therefore to analyse and describe the results of the FC Orth(SA) final examination. Specific objectives were to assess the correlation of the written component with the clinical and oral components of the examination and to determine the overall certifying rate of those candidates who passed the written component. The written component functions as a gatekeeper, preventing candidates who fail this component from progressing to the clinical and oral component. In addition, the written component measures higher order cognitive skills, which differ from the more clinical skills required in the oral and clinical components.6

Methods

A retrospective review of the FC Orth(SA) final examination results was conducted and all test results of this specialist examination from March 2005 through to November 2016 were included. No demographic data was available and the results of all candidates who were admitted to the written component were included in the analysis.

Examination structure

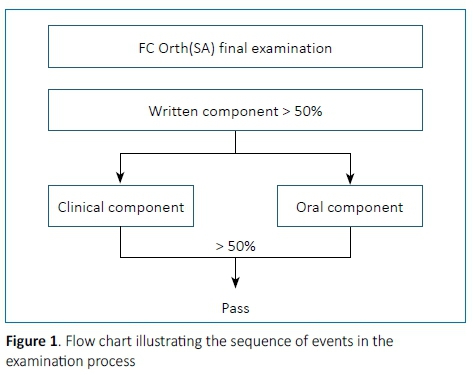

The FC Orth(SA) uses a composite test format to assess candidates' knowledge and clinical skills. This examination comprises written papers, clinical cases and oral examinations (Figure 1).

During the period of this review, the written component consisted of three 3-hour papers with short- and essay-style questions.

The clinical component was composed of a long case with 30 minutes to interview and examine a non-standardised patient. The candidate then presented the case in 15 minutes to the examiners with an additional 15 minutes allocated for discussion around the case. These questions were not standardised. Furthermore, candidates were to examine two sets of short clinical cases, pathological cases as well as radiological material.

The oral examination consisted of three 30-minute examinations. Each candidate was assessed separately by three teams of two examiners each. They covered orthopaedic trauma, reconstructive orthopaedic surgery and orthopaedic pathology.

The overall mark for each component reflected a score made up of marks from the three sub-components. All scores were expressed on a percentage scale. The set pass mark for the written examination was 50%; candidates who achieved it were allowed entry into the clinical and oral examination. The weighting of these two components was equal. Candidates were unsuccessful if they failed two or more sub-components or if their combined mark for the clinical and oral examination was less than 50%.
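The pass rules described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text does not say how sub-component marks were combined, so the sketch assumes each component mark is the simple mean of its three sub-components and that a sub-component is failed when its mark is below 50%. The function names `written_gate` and `is_certified` are hypothetical.

```python
from statistics import mean

PASS_MARK = 50.0  # pass mark (%) stated in the text


def written_gate(written_mark: float) -> bool:
    """Return True if the written mark allows entry to the clinical and oral examination."""
    return written_mark >= PASS_MARK


def is_certified(clinical_subs, oral_subs) -> bool:
    """Apply the two failure rules to the clinical and oral sub-component marks.

    Assumptions (not confirmed by the source): each component mark is the
    simple mean of its three sub-components, and a sub-component counts as
    failed when its mark is below PASS_MARK.
    """
    all_subs = list(clinical_subs) + list(oral_subs)
    failed_subs = sum(1 for mark in all_subs if mark < PASS_MARK)
    # Equal weighting of the clinical and oral components, as stated in the text.
    combined = (mean(clinical_subs) + mean(oral_subs)) / 2
    return failed_subs < 2 and combined >= PASS_MARK


# Illustrative marks only, not data from the study.
print(written_gate(54.0))
print(is_certified([55, 60, 48], [52, 58, 61]))
print(is_certified([45, 48, 70], [60, 62, 64]))
```

Under these assumptions a candidate with a single failed sub-component can still be certified, whereas two failed sub-components lead to failure even when the combined mark is above 50%.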

Statistical analysis

Descriptive and inferential statistics were performed using the Wolfram Programming Lab (Wolfram Research, Inc. Champaign, Illinois) to analyse the data. The Shapiro-Wilk test was used to determine the data distribution for the three components of the examination. Non-parametric tests were used to analyse and describe the various results of the examination components as the data was not normally distributed. Continuous variables were analysed using the Mann-Whitney test (when two sets of data were compared) or Kruskal-Wallis test (when more than two sets of data were compared). Spearman's rank correlation was used to describe the relationship between the different components of the examination. A p-value of <0.05 was accepted as statistically significant.
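Spearman's rank correlation, as used above, is Pearson's correlation computed on the ranks of the two variables. The following pure-Python sketch illustrates the calculation; it is not the authors' Wolfram code, the helper names `average_ranks` and `spearman_rho` are hypothetical, and the marks in the usage lines are made up for illustration.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the average ranks of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when all values in a series are tied")
    return cov / (sx * sy)


# Illustrative marks only, not data from the study.
written = [55, 60, 52, 58, 63]
clinical_oral = [50, 62, 57, 54, 66]
print(round(spearman_rho(written, clinical_oral), 2))
```

Because only ranks enter the calculation, the coefficient does not require normally distributed marks, which is consistent with the choice of non-parametric methods described above.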

Results

During the 12-year period, a total of 399 candidates made 541 attempts at the written part of the examination. At this written component, 71.5% of attempts were successful and 387 candidates were invited to the clinical and oral component, of which 341 (88%) candidates were certified.
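The headline figures can be cross-checked with simple arithmetic. The sketch below uses only the numbers reported above and assumes that each invited candidate corresponds to one successful attempt at the written component; the variable names are illustrative.

```python
attempts = 541             # attempts at the written component
written_pass_rate = 0.715  # reported proportion of successful attempts
invited = 387              # candidates invited to the clinical and oral component
certified = 341            # candidates certified

# Successful written attempts implied by the reported pass rate.
successful_attempts = attempts * written_pass_rate

# Certifying rate among invited candidates, as a percentage.
certifying_rate = 100 * certified / invited

print(round(successful_attempts))
print(round(certifying_rate))
```

Rounded to whole numbers, the implied count of successful attempts matches the 387 invited candidates and the certifying rate matches the reported 88%.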

Figure 2 gives details of the number of candidates admitted to the written component, invited candidates to the clinical and oral component, and number of certified candidates. An average annual increase of 8.5% was observed in the number of successful candidates during this period.

Figure 3 shows the pass rates of the three components and the overall certifying rate for each year. The overall certifying rate increased by 1.5% during the period of this study. Eighty-six candidates made 141 repeat attempts at the written component. Sixty-six candidates eventually passed the examination at an average of 2.5 attempts. The second-attempt pass rate for those candidates who attempted the written component again was 42%. Figure 4 shows the breakdown of the annual number of first-and second-attempt candidates who were successful in the FC Orth(SA) final examination.

The marks allocated for each component (written, clinical and oral) were analysed separately. Table I compares the average percentage scores of the sub-components. The average mark for the final examination was 60.1% (IQR 56-64%). There was a statistically significant difference when comparing the averages of the written, clinical and oral components, the three written papers, as well as the three clinical sub-components (p<0.05). The averages for the three sub-components of the oral examination were similar (p=0.97). Furthermore, the annual averages of the written, clinical and oral components showed marked variance (p<0.5).

Candidates who passed the clinical and oral component scored significantly higher marks in the written component compared to candidates who were unsuccessful in the oral or clinical component. The average marks were 59.6% (IQR 56-63.5%) compared to 54.1% (IQR 51-57%) respectively in the written component (p<0.05). Sixty-nine per cent of candidates who were unsuccessful in the clinical and oral component failed due to poor performance in the clinical component of the examination.

The components correlated poorly with each other (p<0.05). The highest correlation coefficient was between the written and oral component (r=0.49). The written component correlated poorly with the clinical component (r=0.33) and showed a weak correlation with the combined clinical and oral mark (r=0.48).

Discussion

This is the first reported study evaluating the outcomes of an orthopaedic surgery specialist examination in an LMIC. The present study shows that the results of the written component did not correlate with the clinical and oral components; however, the written component was an effective gatekeeper as evidenced by the high certifying rate for candidates who passed this component. This finding confirms that the written component is an essential part of the composite examination process.

The poor correlation between the components likely indicates that the components are testing different aspects of competency. The essay-style questions in the written component were aimed at testing candidates' knowledge base and higher order cognitive processes when dealing with common orthopaedic problems.6 The long- and short-case clinical component aims to assess candidates' competency holistically by examining real patients with actual problems.7 This format requires candidates to display their knowledge, skills and judgement in a given sub-discipline. A possible explanation could be that the knowledge base tested in the written component has little relation to the more clinically based skills required by candidates for performance in the clinical and oral component of the examination. Deterioration of clinical examination skills among medical practitioners has been attributed to improvements in technology and a lack of time to properly examine patients.8,9 However, especially in resource-restricted countries, a thorough clinical examination remains an important skill in the armamentarium of healthcare professionals. It is postulated that the clinical examination component of an examination allows for evaluation of the effectiveness of a training programme and acts as a screening device to identify inadequately trained candidates.7 In this study, 69% of candidates who were unsuccessful in the clinical and oral component of the examination failed due to poor performance in the clinical component of the examination. This finding might point out inefficiencies in the training programme and poor candidate preparation.

The FC Orth(SA) final examination uses the traditional pass mark of 50% for the written component. This pass mark appears to be generous given that candidates who scored less than 54% for this component were at significant risk of failing the clinical and oral component of this examination. This finding should be interpreted with caution due to the weak positive correlation found between the first and second parts of the examination. The significant variation observed in the annual average mark of the written component suggests differences in the cognitive ability levels between the groups of candidates for each examination sitting or could indicate a lack of standardisation of the examination between different hosting centres. The process of determining an appropriate pass mark to separate competent candidates from those who do not perform well enough is called standard setting. In the absence of formal standard setting methods to improve the fairness of the set pass mark, variations in the level of difficulty of each examination could potentially lead to the misclassification of candidates. The ideal pass mark is the one at which unsuccessful candidates are truly incompetent and successful candidates are truly competent. For this reason, the medical education literature strongly recommends formal standard setting procedures to improve the quality of high-stakes certifying examinations and to ensure that the pass mark is robust and defensible, especially in an era of increased litigation.10

There is limited literature on specialist certification processes and objective measures to improve them.11 Historically, assessment mainly focused on knowledge and know-how and less on skills and competencies. As the findings of our study were evident to the examination board, recent changes include the addition of an objective structured clinical examination (OSCE), multiple-choice questions (MCQ) with single best answers and extended matching questions. The introduction of an OSCE to the clinical component follows the international trend towards a more competency-based certification process.12 To our knowledge this will be the first postgraduate orthopaedic surgery exit examination in Africa to include an OSCE as part of the certifying process. Currently the essay-style questions are being phased out of the written component of the FC Orth(SA) final examination and replaced by the more reliable and reproducible MCQ format.6 The MCQ assessment format is well known for its superior objectivity and allows for a wider sampling of a subject, which results in a more reproducible assessment and reduces the perception of examiner bias. These changes also served to improve the cost-effectiveness of the written component given the superior efficiency of MCQ marking. The introduction of formal standard setting methods in the written and clinical components has also improved the credibility of pass/fail decisions.

More research is required to guide evaluation bodies in resource-constrained environments to ensure that their examination processes are evidence-based in order to provide a credible and defensible certifying examination.11 The cost of the two-day assessment in the second part of the FC Orth(SA) examination is enormous for the examination body and the candidates, and more research is required that will lead to cost-effective and goal-directed changes in the clinical and oral component. The limitations of this study include the lack of additional objective variables that may predict candidate performance in the FC Orth(SA) examination and future research could potentially include the appraisal of surgical logbooks, primary and intermediate examination results as well as annual in-training examination results. These predictors could potentially lead to the identification of inadequately trained candidates prior to the final examinations and the initiation of appropriate remedial action to improve their success rates.

Conclusion

This study confirms that the results of the written component did not correlate strongly with performance in the clinical and oral component. This finding highlights the importance of the various components of this examination. The written component was found to be an effective gatekeeper, as evidenced by a high eventual certifying rate for candidates who passed this component. This study contributes to the medical education literature by describing the value of the written component in the composite examination format of a high-stakes postgraduate certification examination.

Ethics statement

Ethical approval was obtained from the institution's Human Research Ethics Committee.

References

1. Meara JG, Greenberg SLM. The Lancet Commission on Global Surgery Global surgery 2030: Evidence and solutions for achieving health, welfare and economic development. Surgery. 2014;157(5):834-35. doi:10.1016/j.surg.2015.02.009

2. Chou S, Lockyer J, Cole G, et al. Assessing postgraduate trainees in Canada: Are we achieving diversity in methods. Medical Teacher. 2016 (Oct);31:2, e58-e63. doi:10.1080/01421590802512938

3. Hutchinson L, Aitken P, Hayes T. Are medical postgraduate certification processes valid? A systematic review of the published evidence. Med Educ 2002;36:73-91. doi:10.1046/j.1365-2923.2002.01120.x

4. Rahman G. Appropriateness of using oral examination as an assessment method in medical or dental education. Med Educ 2011;1(2). doi:10.4103/0974-7761.103674

5. Turner JL, Dankoski ME. Objective structured clinical exams: a critical review. Fam Med 2008;40(8):574-78.

6. Hift RJ. Should essays and other open-ended-type questions retain a place in written summative assessment in clinical medicine? BMC Medical Education 2014;14:249. doi:10.1186/s12909-014-0249-2

7. Ponnamperuma GG, Karunathilake IM, Mcaleer S, Davis MH. Medical education in review. The long case and its modifications: a literature review. Med Educ 2009:936-41. doi:10.1111/j.1365-2923.2009.03448.x

8. Feddock CA. The lost art of clinical skills. Am J Med 2007. doi:10.1016/j.amjmed.2007.01.023

9. Asif T, Mohiuddin A, Hasan B, Pauly RR. Importance of thorough physical examination: a lost art. Cureus 2017 (May);9(5):e1212. DOI 10.7759/cureus

10. Goldenberg MG, Garbens A, Szasz P, Hauer T, Grantcharov TP. Systematic review to establish absolute standards for technical performance in surgery. Br J Surg 2017:13-21. doi:10.1002/bjs.10313

11. Burch VC, Norman GR, Schmidt HG, van der Vleuten CPM. Are specialist certification examinations a reliable measure of physician competence? Adv in Health Sci Educ 2008:521-33. doi:10.1007/s10459-007-9063-5

12. Jonker G, Manders LA, Marty AP, et al. Variations in assessment and certification in postgraduate anaesthesia training: a European survey. Br J Anaesth. 2017 (Sept):1-6. doi:10.1093/bja/aex196

Correspondence:


Dr S Swanepoel

Department of Orthopaedic Surgery

Groote Schuur Hospital, Observatory

Cape Town 8000

cell: 083 227 8594

work: 021 404 5108

Email: swanepoeles@gmail.com

Received: December 2017

Accepted: February 2018

Published: August 2018

Editor: Prof LC Marais, University of KwaZulu-Natal, Durban

Funding: The authors declare they received no funding for this research.

Conflict of interest: The authors declare that they have no conflicts of interest that are directly or indirectly related to the research.