South African Journal of Agricultural Extension

On-line version ISSN 2413-3221

Print version ISSN 0301-603X

S Afr. Jnl. Agric. Ext. vol.37 n.1 Pretoria Jan. 2008

Towards the development of a monitoring and evaluation policy: an experience from Limpopo Province

E.M. Zwane (I); G.H. Düvel (II)

(I) Manager, Advisory Services for Capricorn District, Dept of Agriculture, Limpopo Province, 317 Marshall Street, Flora Park, Pietersburg 0700

(II) Professor emeritus, Department of Agricultural Economics, Extension and Rural Development, University of Pretoria, Pretoria 0002, Tel. 012-4203811, Fax. 012-4203247, E-mail: gustav.duvel@up.ac.za

ABSTRACT

The near collapse of the public extension service in South Africa, and the efforts currently under way to develop and implement recovery plans, call for actions that have significant and immediate results. Based on the assumed important role that monitoring and evaluation (M&E) can play in improving current and future extension, this article is concerned with the development of an appropriate policy in this regard.

For such a policy to be appropriate and acceptable at the operational level, 324 frontline extension workers and managers (representing a 30 percent sample) were involved in group interviews, in which their views were captured in semi-structured questionnaires after making use of nominal group and Delphi techniques.

The article gives an overview of the perceived need for and importance of monitoring and evaluation, as well as of the most important criteria and ingredients of an effective monitoring and evaluation policy for extension in the Limpopo Province. The most important of these pertain to the establishment of a unit (initially a working group) at provincial level that takes responsibility for the further development and fine-tuning of an M&E policy and for its implementation. Recommendations relating to specific issues of monitoring and evaluation include: increased monitoring through continuous evaluation of behaviour determinants, setting a maximum rather than a minimum of objectives, and encouraging accountability not only to management but also to local institutions and beneficiaries.

Keywords: Monitoring, evaluation, accountability, extension programme, experience.

1. INTRODUCTION

The admission of the near collapse of the public extension service in South Africa (Mankanzana, 2008), evident especially in the Department of Agriculture's failure to respond to the needs of the majority of small-scale and commercial farmers, has led to calls for urgent intervention. In response, national conferences and indabas have been convened and recovery plans designed. There is general agreement that large-scale changes will have to be made and reforms introduced over a wide spectrum of issues, something which is hardly possible in the short term.

In this context, the question arises as to which solutions or measures will make the biggest difference within the shortest period of time. The underlying assumption of this research is that an effective monitoring and evaluation programme is bound to have the biggest impact, irrespective of whether, and which, other measures are implemented. The theoretical basis for this reasoning is found, among other things, in the nature of monitoring and evaluation (M&E) and its influence on human motivation.

M&E is only meaningful and possible where clear and measurable goals or objectives have been formulated (Halim and Mozahar-Ali, 1997:141). These objectives have a motivating effect on human behaviour, which is by nature purposeful or intentional (Malle, Moses & Baldwin, 2001:27). This implies not only that behaviour is goal oriented, but also that, if individuals are confronted with acceptable goals, these goals tend to motivate or elicit movement towards them on the part of the individual on whom they are imposed or to whom they are introduced (in this case, the extension worker).

A further motivating effect associated with goals and an assessment of their accomplishment lies in the "activation" effect associated with the motivational experience that "success breeds success" (Garza & Neff, 2004). It is not subjective impressions, but reliable and valid evaluation results (obtained through surveys) measured against baseline information, that can give the sense of achievement and success which is likely to motivate the individual towards further success.

The potential value of M&E results is such that its implementation is generally regarded as non-negotiable and a must for any extension organisation wanting to be accountable, to justify budgetary allocations and to attract ongoing financial support. Particularly valuable is the ongoing monitoring for continuous adaptation and improvement of the extension approach, process and delivery.

Based on the assumed important role that M&E can play in the improvement of current and future extension, this article endeavours to contribute to the development of an effective M&E policy. Specific objectives of the research are:

- To determine extension workers' agreement with regard to the importance of M&E.

- To determine respondents' perceived importance of different solutions to improve extension efficiency.

- To determine to what extent the different activities are being evaluated.

- To determine respondents' views with regard to evaluation criteria.

- To make recommendations with regard to the development of an effective M&E policy.

2. METHODOLOGY

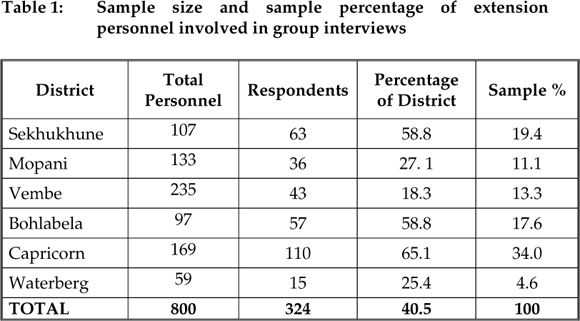

A discussion document or questionnaire developed by representatives from each of the nine South African provinces formed the basis of the survey, which took the shape of group interviews held at different localities throughout the Limpopo Province. The key issue guiding the investigation was the participatory condition, implying full involvement of role players, especially frontline extension workers and their managers. A total of 324 respondents, representing a sample of 30 percent, were involved in the survey. The degree to which extension staff from the various districts were involved is indicated in Table 1.
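The reported sample fraction also allows a rough estimate of the size of the staff population from which respondents were drawn. This is a back-of-the-envelope check only, assuming that the 30 percent refers to all frontline extension workers and managers in the province; the article does not report the population size itself. With $n$ the number of respondents and $f$ the sample fraction:

```latex
N \approx \frac{n}{f} = \frac{324}{0.30} = 1080
```

Because the 30 percent is presumably a rounded figure, the estimate should be read as approximate.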

Group interviews were conducted in such a way that every participant was given a discussion form or questionnaire to complete. Before doing so, participants were informed about the purpose of the exercise and the importance of everyone giving his or her own honest opinion. Emphasis was, nonetheless, placed on informed opinions. This was accomplished by the facilitator providing the necessary background reasoning and explanation, pointing out the pros and cons as well as the implications of many of the alternatives within the principles, and allowing as much interaction and exchange of viewpoints between the participants as possible (Düvel, 2002).

3. FINDINGS AND DISCUSSION

The degree to which M&E can bring about an improvement in the current effectiveness and efficiency of extension delivery in Limpopo will depend on the current level of implementation of M&E and the level to which its implementation can be improved. The latter in turn is dependent on whether M&E is perceived to be important. The findings therefore relate to the perceived importance of M&E, the current level of implementation and to proposals as to how it can be improved.

3.1 Perceived importance of evaluation

Accountability has become the major issue worldwide in view of widespread budgetary cutbacks and the increasing pressure to justify public extension funding (Düvel, 2002:155). The importance of M&E also lies in its potential to improve all current and future extension. Extension staff's level of agreement with the statement that M&E is one of the most important and effective instruments to improve current and future extension is reflected in Figure 1.

These findings leave little doubt that extension personnel understand the importance and value of M&E, since the average assessment varies between about 80 and 95 percentage scale points out of a possible 100. Waterberg district reported the lowest rating (81.4%), which is significantly lower than that of Mopani (94.8%). The latter's high rating could be attributed to the influence of the general extension system practised in the former homeland of Gazankulu, in which M&E was well supported and common practice.

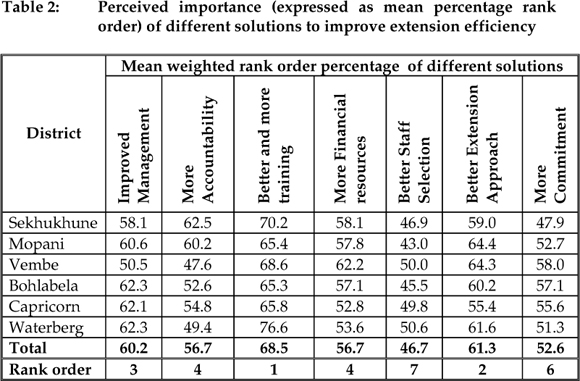

A further indication of the perceived importance of more accountability through effective monitoring and evaluation is given by respondents' rank order of solutions to the improvement of the effectiveness and efficiency of extension. The findings are presented in Table 2.

In general, more accountability occupies a middle position in terms of importance, while better and more training is almost without exception seen as the biggest potential contributor to an improvement of extension delivery. The perceived importance of accountability (as a form of M&E) varies significantly between the districts. In Sekhukhune it holds the second highest position (62.5%), while it is regarded as the least important method in Vembe (47.6%) and Waterberg (49.4%). This gives an indication of relative importance, but not necessarily of importance as such. For example, although Vembe had the lowest importance rank order, M&E nevertheless features very strongly when assessed as a means of improving extension (see Figure 1).

3.2 Current evaluation activities

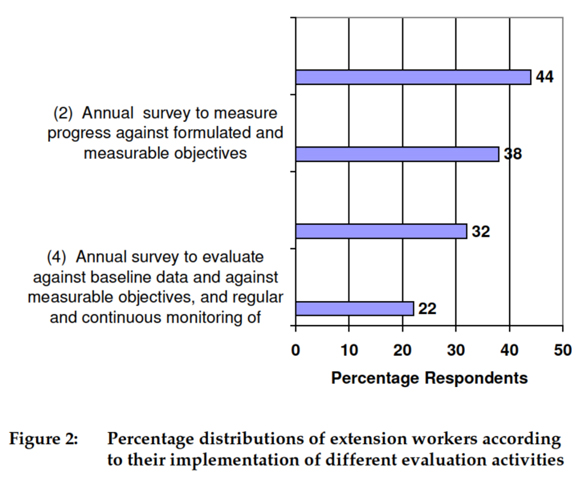

Monitoring and evaluation should be conducted regularly during programme implementation (Seepersad & Henderson, 1984:184). However, whether and to what degree M&E activities are carried out depends largely on how evaluation is interpreted. According to Düvel (2002:55), evaluation can vary from casual everyday assessment, as a form of subjective reflection, to rigorous scientific studies; from being purely 'summatory' (Van den Ban & Hawkins, 1996:209) in nature to evaluations that also focus on monitoring or are formative; and from being focussed only on input assessment to evaluations that are primarily output focussed. An impression of current evaluation practice was obtained by asking respondents what they did to evaluate their extension. They were requested to indicate their evaluation activities by selecting them from a list of alternatives provided (see Figure 2).

The findings are somewhat contradictory, which can be attributed to some confusion regarding the question, as it allowed more than one answer. One would have expected respondents meeting the conditions of alternative (4) to meet automatically the conditions of alternatives (1), (2) and (3) as well. However, the frequencies or percentage distributions do not reflect this. Perhaps the most accurate figure is the 44 percent claiming to complete and return monthly or quarterly report forms regularly. If this is accepted as the most basic, minimum form of evaluation (one which Seepersad & Henderson (1984:184) nevertheless regard as very important), then even 44 percent does not reflect a healthy situation. It might even be an inflated figure, given that since 1999 extensionists in Limpopo no longer submit the "General Statistical Report". The only report submitted is an ad hoc report based on the priority areas of a district's strategic plan (Department of Agriculture, 2006).

The other percentages appear to be highly inflated, something confirmed by leaders well acquainted with the situation. This can be attributed to a lack of understanding or to an attitude of wanting to provide pleasing or impressive answers. The latter is not far removed from purposeful deception in trying to create a positive but false picture, and it may be exacerbated by a perception that evaluations are primarily used as a control measure rather than as a tool to improve extension delivery.

According to Table 3, there are significant differences between the districts in the percentage of respondents performing the different M&E activities.

Again, the Waterberg district is among the poorest performers, but there does not seem to be any relationship between the perceived importance and the implementation of M&E. This could be partially attributed to the fact that only about 30 percent of the respondents answered this question, so that those who did may not form a representative sample. Differences between the districts can also be attributed to differences in management, which would suggest that management is, as earlier studies have already found (Mathabatha & Düvel, 2005), a definite weakness in the public extension service in South Africa.

3.3 Evaluation criteria and procedures

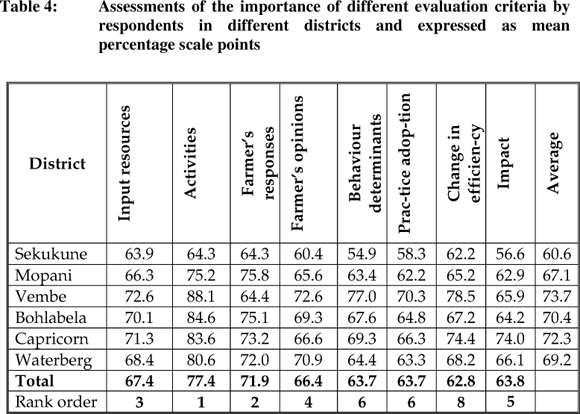

Effective monitoring and evaluation is only possible or meaningful against identified and formulated objectives and using appropriate criteria (Düvel, 2002). The question frequently asked is: what should be evaluated? There are different types of criteria, which have been hierarchically structured by Bennett (1975), as cited by Van den Ban & Hawkins (1990:235), and which extend from input to output and outcome criteria. The views of extension staff regarding the importance of the different criteria, based on Bennett's (1975) hierarchy, are summarised in Table 4.

Respondents clearly rate input criteria such as activities (77.4%), farmer responses (71.9%) and input resources (67.4%) more highly than the more important output criteria such as behaviour determinants (63.7%), practice adoption (63.7%) and change in efficiency (62.8%). This explains the emphasis still placed on input activities as the focus of evaluations.
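The contrast drawn above can be made concrete with a little arithmetic. The sketch below is a minimal illustration, not the authors' analysis: it takes the six mean importance scores quoted in the paragraph (from Table 4), groups them the way the text does, and compares the unweighted average of the three input criteria with that of the three output criteria. The names `input_criteria`, `output_criteria` and `group_mean` are hypothetical, and the unweighted averaging is an assumption made for illustration.

```python
from statistics import mean

# Mean perceived-importance scores (%) quoted in the text from Table 4,
# grouped as the text groups them (hypothetical names).
input_criteria = {
    "activities": 77.4,
    "farmer responses": 71.9,
    "input resources": 67.4,
}
output_criteria = {
    "behaviour determinants": 63.7,
    "practice adoption": 63.7,
    "change in efficiency": 62.8,
}


def group_mean(scores):
    """Unweighted mean of the scores in one group of criteria."""
    return mean(scores.values())


input_mean = group_mean(input_criteria)
output_mean = group_mean(output_criteria)
gap = input_mean - output_mean  # percentage points in favour of input criteria

print(f"input {input_mean:.1f}  output {output_mean:.1f}  gap {gap:.1f}")
```

Run as written, this prints `input 72.2  output 63.4  gap 8.8`. Even the lowest-rated input criterion (67.4%) scores above the highest-rated output criterion (63.7%), so the gap does not depend on the averaging method.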

By far the lowest importance assessments of the different criteria were made by the Sekhukhune District, followed by Mopani. The smallest discrepancy between input and output criteria is found in Vembe, while respondents from Capricorn also give a relatively high assessment to the output criteria. Behaviour determinants as criteria appear to be reasonably well appreciated in most districts, which implies that monitoring based on these criteria could be introduced without too much resistance.

A comparison of the perceived importance and the perceived current use efficiency of the various criteria, as shown in Figure 3, reveals that the two perceptions follow the same pattern. Being acquainted with the practical situation, the author had expected lower use efficiency for the outcome criteria. These findings can be attributed to unreliable assessments, due to a lack of understanding of what is really meant by the various criteria.

Closely related to the choice of criteria is the number of criteria to be used. The extreme positions are a preference for one or two criteria and a preference for as many criteria as possible. Viewpoints differ as to what is most appropriate, but according to Figure 4 there is a clear majority (about 70 to 75%) in favour of a maximum of objectives, with very little variation between the districts. Behind these perceptions is probably the realisation that extension has to be more accountable, which means providing management with accomplished results on an ongoing basis. In this regard, it is the specific objectives related to behaviour determinants that offer evaluation opportunities with just about every extension method input.

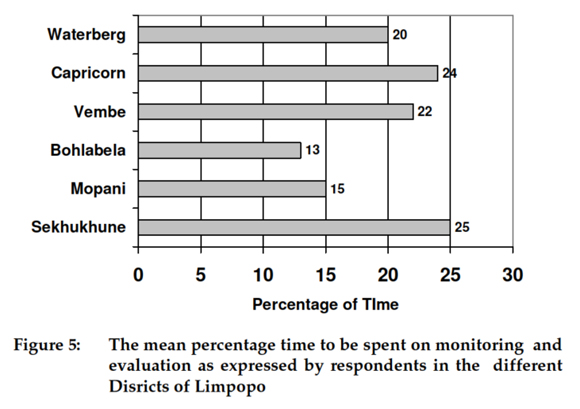

For an approach aimed at providing a maximum of evidence of impact achieved, more time will have to be budgeted for M&E. How much time can frontline extension workers afford to spend, or not to spend, on evaluation activities? Respondents' views in this regard are summarised in Figure 5.

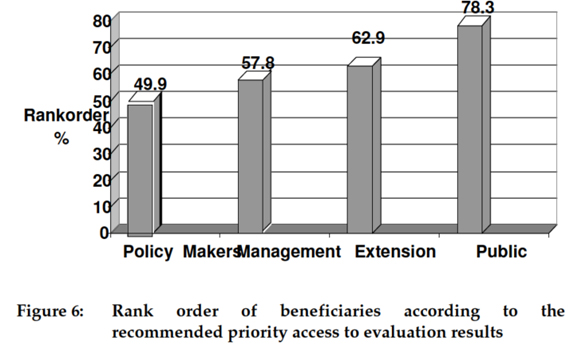

The differences between districts regarding the acceptable time to be spent on M&E are significant. For example, it varies from 13% (Bohlabela) to 25% (Sekhukhune). However, the overall mean of 20 percent reflects a recognition of the importance of more accountability if extension wants to fulfil the role expected of it. Expectations obviously differ, and an important question is who the recipients of the evaluation results should be or, in other words, to whom extension should be primarily accountable. Respondents' views in this regard are summarised in Figure 6.
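To give these percentages a practical scale, the sketch below converts the time shares quoted above into hours per week. The 40-hour working week is an assumption made purely for illustration; the article does not state working hours. The names `HOURS_PER_WEEK` and `weekly_me_hours` are hypothetical.

```python
# Hypothetical working week, assumed for illustration only (not from the article).
HOURS_PER_WEEK = 40.0

# Share of working time respondents considered acceptable for M&E,
# as quoted in the text (Figure 5).
shares = {"Bohlabela": 0.13, "overall mean": 0.20, "Sekhukhune": 0.25}


def weekly_me_hours(share, hours_per_week=HOURS_PER_WEEK):
    """Hours per week spent on M&E for a given share of working time."""
    return share * hours_per_week


for label, share in shares.items():
    print(f"{label}: {weekly_me_hours(share):.1f} h/week")
```

Under this assumption the loop prints 5.2, 8.0 and 10.0 hours per week for Bohlabela, the overall mean and Sekhukhune respectively; a different assumed working week scales all three figures proportionally.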

The major claim that managers and policy makers make on the results or outcome of evaluation seems to be reversed here, with public accountability (78.3%) and results for improvement of the extension process (62.9%) receiving the highest assessment or rank order. However, extension management and policy makers are similarly dependent on a regular flow of evaluation results to function effectively. In all cases, extension personnel will have to be convinced of the usefulness and necessity of evaluation results to ensure the submission of reliable results.

4. CONCLUSIONS AND RECOMMENDATIONS

The following recommendations emerge from the study and should be considered for inclusion in the Limpopo Department of Agriculture's policy:

- The implementation of a provincial, if not national, programme of monitoring and evaluation. The necessity for accountability, and therefore for monitoring and evaluation, is widely appreciated and is based on the realisation that the Department of Agriculture, in order to ensure ongoing budgetary allocation of public funds, must be accountable not only in terms of whether and how the budget is spent (inputs), but also in terms of outputs, measured against acceptable objectives. The information obtained from proper monitoring and evaluation is also essential for improving extension and provides essential information for policy makers, managers of extension and officials involved in the process and programmes of extension. The mere fact that respondents saw monitoring and evaluation as one of the most effective methods of improving current and future extension justifies such a programme.

- Objectives should be chosen to extend over the full spectrum of input and output criteria. They should focus on or include all the criteria ranging from resource and activity inputs to clients' responses and opinions, behaviour determinants, behaviour change (practice adoption), outcome or efficiency aspects and, where possible, the impact in terms of job creation, increase in living standard, etc.

- The number of objectives and criteria should be as large as possible, to provide as much evidence as possible.

- Sufficient time should be budgeted for evaluation and monitoring. Opinions vary considerably, but at least 10-20 percent of time should be budgeted for it.

- For monitoring purposes (monitoring being the most important tool for improving extension delivery), objectives and criteria should be chosen that focus on behaviour determinants, e.g. needs, perception and knowledge. These variables represent the actual focus of extension, and their positive change is a precondition for behaviour change and the consequent change in efficiency and the resulting financial and other outcomes. Since behaviour determinants are the focus of every encounter, they lend themselves to monitoring after every extension delivery or method used.

- Accountability should be as multi-focused as possible. Although priorities have to be set because different users need different kinds of evaluation information, beneficiaries, local communities and the public at large should also have access to evaluation and monitoring results.

REFERENCES

BENNETT, C., 1975. Up the hierarchy. Journal of Extension, 13(2):7-12.

DEPARTMENT OF AGRICULTURE, 2006. Management plan, priority areas.

DÜVEL, G.H., 2002. Towards developing an appropriate extension system for South Africa. National research project. National Department of Agriculture.

GARZA, M. & NEFF, R.S., 2004. Motivation activation: The Psychology of Goal Attainment. Custom Publishing.

GUIDELINES FOR EVALUATION OF DISTRICT EXTENSION WORK, 1976. Choosing between Objectives and Priorities. National Extension Workshop, New South Wales Department of Agriculture, Sydney.

HALIM, A. & MOZAHAR-ALI, M.D., 1997. Training and professional development. Types of Evaluation. In: Swanson, B.E. & Claar, J.B. (Eds). Agricultural Extension: A Reference Manual. Food and Agriculture Organization of the United Nations. Rome.

MALLE, F., MOSES, L.J. & BALDWIN, D.A., 2001. Intentions and intentionality: Foundations of social cognition. MIT Press.

MANKANZANA, M., 2008. Agricultural recovery plan. Presentation during the Ministerial Extension Indaba held in East London. Department of Agriculture, RSA.

MATHABATHA, M.C. & DÜVEL, G.H., 2005. Supervisory skills of extension managers in Sekhukhune District of Limpopo Province. S. Afr. J. Agric. Ext. 34(2):289-302.

SEEPERSAD, J. & HENDERSON, T.H., 1984. Evaluating extension programmes. In: Swanson, B.E. & Claar, J.B. (Eds). Agricultural Extension: A Reference Manual. Food and Agriculture Organization of the United Nations. Rome.

VAN DEN BAN, A.W. & HAWKINS, H.S., 1996. Agricultural Extension. 2nd Ed. Cambridge: Blackwell Science.

Correspondence:

Manager: Capricorn District, Dept of Agriculture, Limpopo Province, 317 Marshall Street, Flora Park, Pietersburg 0700

Tel. 015-6326652, Fax. 015-6324590

E-mail: zwaneme@agric.limpopo.gov.za