SAMJ: South African Medical Journal

Online version ISSN 2078-5135

Print version ISSN 0256-9574

SAMJ, S. Afr. med. j. vol.113 no.12 Pretoria Dec. 2023

http://dx.doi.org/10.7196/samj.2023.v113i12.1614

RESEARCH

District Health System performance in South Africa: Are current monitoring systems optimal?

P Barron (I); H Mahomed (II); TC Masilela (III); K Vallabhjee (IV); H Schneider (V)

(I) FFCH (SA); School of Public Health, University of the Witwatersrand, Johannesburg, South Africa

(II) PhD; Western Cape Government Health and Wellness and Division of Health Systems and Public Health, Department of Global Health, Stellenbosch University, Cape Town, South Africa

(III) MA; Department of Planning, Monitoring and Evaluation, Pretoria, South Africa

(IV) FFCH (SA); Clinton Health Access Initiative, Pretoria, South Africa

(V) PhD; School of Public Health, University of the Western Cape, Cape Town, South Africa

ABSTRACT

In this article, we review the monitoring and evaluation system that is used to measure the performance of primary healthcare delivered through the district health system and district management teams. We then review some global frameworks, especially linked to the World Health Organization, and look at some of the differences between what is internationally recommended and what we do in South Africa. We end with some recommendations to improve the system.

South Africa (SA)'s public health system is built on the foundation of the district health system (DHS) and the 1995 DHS policy framework.[1] Monitoring and evaluation (M&E) systems are aligned to the strategic goals and objectives of the health system, and serve to fine-tune the health system response on an ongoing basis. M&E systems must be regularly reviewed and adjusted to address challenges, and SA is facing some significant ones, with the fiscal crisis[2] being the most immediate. Budget cuts, starting in the 2023/24 financial year, are projected to intensify over the medium term. Another challenge is the implementation of the National Health Insurance (NHI) Bill,[3] which will require a fundamental reconfiguration of the way the DHS operates and is managed.

Internationally, there are multiple approaches, frameworks and guidelines in use to evaluate the performance of health systems as a whole.[4-6] However, we could not find clear, practical guidance on how to assess the performance of health systems at the primary level, i.e. the DHS.

This article provides an overview of the current approach to DHS performance monitoring in the SA context. It also gives an overview of the World Health Organization (WHO)'s mainstream approach to primary healthcare (PHC) monitoring, and ends with some suggestions as to how SA may move from its current way of monitoring to one that is more comprehensive and in line with global thinking.

Current system of assessing district performance in SA

The DHS is formally established through the National Health Act No. 61 of 2003,[7] and is expected to provide equitable and effective PHC to all residents in the district. Currently, the DHS infrastructure in SA includes community-based services, around 3 500 clinics and community health centres,[8] and 260 district hospitals, of various sizes, in the public sector alone. Approximately 43% of total provincial spending on health is for the DHS, of which district hospitals consume around one-third. The overall DHS expenditure proportion of the total public sector health budget has been steadily increasing over the past 20 years.[9] Given the fiscal crisis, it is important for these resources to be used efficiently and effectively. Health system performance measurement is central to tracking efficiency, effectiveness, quality and equity.

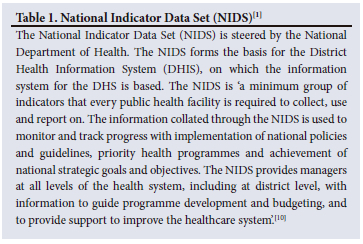

In this section we review how indicators are used in SA, and the information systems that underpin them. Formal DHS monitoring in SA combines annual planning and target setting with reporting on a National Indicator Data Set (NIDS) (Table 1). Before the beginning of the financial year, each district produces a district health plan with targets for various levels of performance as well as programmatic outputs, outcomes and impacts. Sometimes the targets set by districts are 'stretch' targets, influenced by a range of external factors, including the Minister of Health's Performance Agreement with the President, the National Development Plan's goals, the Medium-Term Strategic Framework, the 2030 Sustainable Development Goals (SDGs) and the National and Provincial Annual Performance Plans (APPs). Each of these puts pressure on the district to set targets that are unrealistic, given that public health improvements generally do not occur overnight. At other times, targets are based on historic performance and are too conservative. Neither the optimistic nor the conservative targets are helpful as tools for monitoring the performance of the health districts, as neither is realistic and neither helps to show whether a good job is being done.

There are approximately 250 indicators and several hundred data elements in the NIDS, categorised mainly by disease programme, such as HIV, tuberculosis (TB), maternal and neonatal, and non-communicable diseases (NCDs). There are also elements measuring managerial aspects of the health system such as management of PHC (e.g. number of clients seen in different aspects of the services) and chronic medicines dispensing and distribution. Service outcomes numbers (numerators) can be linked with service usage numbers (denominators) to create metrics of impact (e.g. stillbirth rate), outcome (e.g. treatment success rate), output (e.g. early breastfeeding coverage rate), process (e.g. complaint resolution rate) and inputs (e.g. doctor-to-patient ratio in a particular facility). A key problem with the NIDS indicators is that they are not weighted evenly across priority areas. HIV has over 70 indicators, compared with child health, which has 19, and NCDs, which have 6. These indicators, all quantitative, currently form the basis for DHS monitoring and evaluation.
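As a minimal sketch of how a numerator and a denominator combine into an indicator, and of how unevenly the indicator set is spread across programmes, consider the following. The `Indicator` class, the `indicator_share` helper and the facility counts are hypothetical and are not part of the NIDS or any national software; only the per-programme indicator counts (at least 70, 19 and 6 out of roughly 250) come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """A routine indicator built from two data elements (hypothetical structure)."""
    name: str
    numerator: float            # count of events of interest
    denominator: float          # count of clients, deliveries, etc.
    multiplier: float = 100.0   # 100 for a percentage, 1000 for a per-1 000 rate

    def value(self):
        # Return None rather than dividing by zero when nothing was reported.
        if self.denominator == 0:
            return None
        return self.numerator * self.multiplier / self.denominator


def indicator_share(counts, total):
    """Percentage of the whole indicator set contributed by each programme."""
    return {name: round(n * 100 / total, 1) for name, n in counts.items()}


# Hypothetical facility counts, for illustration only.
stillbirths = Indicator("stillbirth rate", numerator=18, denominator=1200, multiplier=1000)
print(stillbirths.value())  # 15.0 per 1 000 births

# Counts quoted in the text; 70 is a lower bound and 250 an approximate total.
shares = indicator_share({"HIV": 70, "child health": 19, "NCDs": 6}, total=250)
print(shares)  # {'HIV': 28.0, 'child health': 7.6, 'NCDs': 2.4}
```

On these figures, HIV alone accounts for more than a quarter of the indicator set, while NCDs account for under 3%.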

The NIDS is reviewed every second year in consultation with provinces. The selection of the specific NIDS indicators from the available data elements has improved over time. The health programmes at the national level, in conjunction with the provinces and other relevant national health departments, now do this in a single process. Previously, health programmes engaged directly with health districts about their individual data needs, which resulted in a chaotic system.

Since 2005 this reporting of the NIDS indicators to view aspects of district performance has been enhanced in a publication entitled the District Health Barometer (DHB).[10] The key purpose of the DHB is 'to monitor progress and support improvement of equitable provision of primary healthcare by:

• illustrating important aspects of the health system at district level through analysis of indicators;

• ranking, classifying and comparing health districts based on these indicators;

• comparing these indicators annually over time; and

• improving the quality of data collected.'

These indicators are sorted into league graphs by district and also categorised by province. An example is the caesarean section rate (Fig. 1),[10] where the 52 health districts, colour-coded by province, are compared with each other in a snapshot covering the single year 2004. The DHBs were produced annually between 2004 and 2020, with increasing complexity, improved analysis and trend data, as illustrated in Fig. 2,[11] where district hospital neonatal death rates are compared across districts, grouped by province, over a 5-year period.
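The ranking behind a league graph can be sketched in a few lines. The function name `league_table`, the district names and the rates below are hypothetical; the DHB's own methods are not described in this article, and whether a high or low value counts as 'better' is an assumption that must be set per indicator (for the caesarean section rate, for example, neither extreme is simply good).

```python
def league_table(rates, higher_is_better=False):
    """Rank districts on a single indicator; rank 1 is the best performer."""
    ordered = sorted(rates.items(), key=lambda item: item[1], reverse=higher_is_better)
    return [(rank, district, rate)
            for rank, (district, rate) in enumerate(ordered, start=1)]


# Hypothetical neonatal death rates per 1 000 live births (lower is better).
rates = {"District A": 12.4, "District B": 9.8, "District C": 15.1}
table = league_table(rates)
for rank, district, rate in table:
    print(rank, district, rate)
# 1 District B 9.8
# 2 District A 12.4
# 3 District C 15.1
```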

Global frameworks for assessment of performance of health systems

In 2000, Murray and Frenk[12] asserted that a health system's performance should be judged by the extent to which it improves health, is responsive to the expectations of the population and ensures fairness of financial contributions. They argue that 'variation in performance is a function of the way in which the health system organises four key functions: stewardship (a broader concept than regulation); financing (including revenue collection, fund pooling and purchasing); service provision (for personal and non-personal health services); and resource generation (including personnel, facilities and knowledge)'.

This is captured in Fig. 3 from the World Health Report of 2000,[13] which focused on health systems performance.

In 2007, another seminal WHO publication[14] ('Everybody's Business - Strengthening Health Systems') put forward a framework for the building blocks of a health system. The six building blocks were service delivery, health workforce, finances, health information systems and access to medicines, all underpinned by the sixth, leadership and governance. These building blocks, optimally implemented, lead to the intermediate goals of improved access, coverage, quality and safety, and to the final goals of improved health (level and equity), responsiveness, efficiency, and social and financial risk protection.

Building on this and other frameworks, and incorporating new thinking from the Sustainable Development Goals, and on universal health coverage (UHC) and health security, in 2020 WHO/the United Nations Children's Fund produced their theory of change (TOC) for PHC.[15] This TOC has three axes (Fig. 4): (i) integrated health services, with an emphasis on primary care, and essential public health functions; (ii) empowered people and communities; and (iii) multisectoral policy and actions. These axes, when applied to the PHC levers (under health systems determinants and service delivery) result in improved outcomes and impact, viz. UHC, health security, health status, responsiveness and equity. The TOC indicates four strategic levers. These are:

• political commitment and leadership

• governance and policy frameworks

• funding and allocation of resources

• engagement of communities and other stakeholders.

There are also 10 operational levers, which are:

• models of care

• PHC workforce

• physical infrastructure

• medicines and other health products

• engagement with private sector

• purchasing and payment systems

• digital technologies for health

• systems for improving quality of care

• PHC-oriented research

• monitoring and evaluation.

These 14 levers will culminate in improvements of PHC outputs through improved access, utilisation and quality; improved participation, health literacy and care seeking; and improved determinants of health.

The final operational lever, monitoring and evaluation, forms the basis for the 2022 technical output 'Primary health care measurement framework and indicators: monitoring health systems through a primary health care lens' (Fig. 4).[16] The figure clearly shows that the emphasis is on monitoring the capacity and performance of PHC through focusing on structures, inputs, processes and outputs, and less on outcomes and impacts. Each of the items in the PHC monitoring conceptual framework is unpacked into an indicator, labelled as tier 1 (39 indicators, which most countries will feasibly be able to collect) and tier 2 (48 indicators, designated as 'desirable', but not necessarily currently feasible). An example of a tier 1 indicator under inputs, medicines and other health products is 'availability of essential medicines', while an example of a tier 2 indicator in the same category is 'regulatory mechanisms for medicines'.

The sources of data for these indicators are mainly facility surveys, qualitative assessments and routine health information systems. These account for nearly 75% of the indicators; the remainder come from a variety of sources, including national health accounts, cancer registries, patient surveys and facility censuses.

Strengthening the current SA DHS monitoring system

In comparison with the global PHC monitoring framework, several observations can be made about the DHS M&E system in SA:

• There is a general lack of patient-centred and community responsiveness indicators.

• The quality and rigour of M&E processes at all levels have been variable. In the main, the focus is on meeting targets set in the APPs and District Health Plans, with very limited questioning of whether the assumptions underlying this system foster a culture of learning.[17]

• There is very limited triangulation of data from the routine information systems populating the NIDS with other sources, including quality assessments such as 'ideal' clinic and hospitals, Office of Health Standards Compliance (OHSC) assessments, complaints and compliments, programme-specific reviews and other research reports.

• The fundamentals of an electronic information system and modern M&E systems, including a unique patient identification number system and interoperability framework, have been defined. However, new digital information technologies have not been systematically implemented. Examples of datasets that could easily be integrated with programmatic data include laboratory information from the National Health Laboratory Services (NHLS) and birth and death data from the Department of Home Affairs. Pockets of good practice in integrating data from various systems have emerged in some provinces, such as the Western Cape.[18] This has occurred through improved health information exchange, and strengthening its use and value in clinical management of patients, as well as operational and strategic decision-making and research.

• However, large parts of the information system still remain manual. This places a time-consuming burden on frontline staff and limits the ability to optimally use the data.

There are a number of improvements to monitoring the performance of SA's health districts that could be considered, as suggested below.

• The system needs to be more patient-centred, and user experiences need to be more systematically solicited and considered to inform service improvements. Patient experience of care survey results and complaints and compliments should be integrated into a more cohesive approach to quality-of-care assessments. One example of data reflecting community and user perceptions and experiences of PHC services is that collected by a group of non-governmental organisations under the name 'Ritshidze' (meaning 'Saving our lives'). Through a community-led monitoring system, Ritshidze has produced key indicators and dashboards that give an indication of clinic and district performance, especially around quality of care and drug availability for TB and HIV services.[19] Ritshidze uses these results to engage management at all levels of the health system, from clinic to national, to drive improvements to the service. It is important from an accountability point of view to have such independent systems of assessment. Mechanisms would need to be in place to incorporate such assessments into the existing M&E processes for the DHS.

• To deepen community participation and social accountability, especially at local levels, transparency and sharing of information and performance results with communities and the public is important. Although the COVID experience was extremely negative for the health of South Africans, with nearly 300 000 excess deaths and substantially decreased performance of the overall health system,[20] there were some positive monitoring and evaluation features. These included COVID dashboards, information feedback and sharing through regular press briefings and social media. These have created a public appetite for health system information that should be sustained.

• In keeping with the NHI provisions, data from the private health sector should be integrated with that of the public sector, and M&E processes should include private provider voices. The COVID experience has been instructive in this regard in terms of test results, admissions and vaccination coverage from both sectors being aggregated.

• Another example of a data source in SA that could be added is the internal monitoring tool for assessing how well facilities (clinics, community health centres and district hospitals) in the DHS are working. This is an annual audit monitoring framework for 'an ideal clinic'.[21] A similar tool has been developed and is being applied to hospitals. In addition, SA has an independent OHSC. One of its functions is to inspect health facilities to assess compliance with national norms and standards and then certify health facilities as compliant or non-compliant. Over the 4-year period 2019/20 - 2022/23, the OHSC inspected a total of 2 410 facilities, of which only 473 (19.6%) were certified.[22] OHSC certification will be a prerequisite for accreditation as a health provider by the NHI fund. However, the proportion of compliant facilities as measured by the OHSC tool, and its norms, is very much lower than the proportion measured by means of the self-assessment of the 'ideal clinic'. This shows that measuring performance is neither straightforward nor easy. Ideally the OHSC and internal monitoring should agree on a single tool that is accepted by all parties to prevent overlap, duplication and wastage of scarce resources.[23]

• Steps must be taken to build a more cohesive and integrated approach to monitoring DHS performance. This requires not only the indicators to be reviewed, but also the processes and participants. Inclusive processes with a rich mix of participants provide multiple perspectives that better inform understanding and improvements. All aspects of health system performance should be brought into a unified M&E process to avoid a fragmented piecemeal approach. This should include human resource, financial, infrastructure and other support services, integrated with service delivery data.

• Technical capability, criteria and processes need to be established at national level to provide stricter gatekeeping and co-ordination of all requests for indicators and data elements, including priority programmes. The voice of frontline staff also needs to be represented in this central process. A prioritised limited number of core indicators that have demonstrated value should be put in place to reduce the burden of data collection on frontline staff. Alternative methods of collecting certain data items should be explored, such as surveys, sentinel data collection and digitising operational processes.

• A more realistic and meaningful approach to target setting needs to be explored.

• More intensive efforts must be made to create a culture of using data for decision-making at multiple levels. Training programmes and support to frontline staff and facility management should be prioritised.

• A culture of learning needs to be fostered within the DHS. Current monitoring and evaluation processes provide a substantial opportunity for inculcating a learning approach. However, this requires intentional reconfiguration of the way meetings and processes are undertaken. While the compliance focus on meeting of targets may be mandatory, structured spaces should be created for deeper dives into priority focus areas that include a good mix of participants and perspectives, routine data, ad hoc surveys, research findings and lived experience from the frontline, making for data-led and evidence-informed conversations. Lessons and learnings from these engagements must be fed into the improvement cycle through the relevant structures, and into daily practice.

• Different stakeholders probably need to monitor the DHS in different ways, with national and provincial stakeholders more interested in the health system objectives of Fig. 4, while those at district, subdistrict and facility level (as well as communities) will be more interested in the health system determinants and service delivery components.

• There is a range of health system-strengthening areas that are not quantifiable, but are important for building health system capability and sustainability in the long term. These include, among others, system cohesion and connectedness, resilience, organisational learning, community engagement, staff morale, and public confidence and trust. Qualitative reflection on progress and challenges, and tracking of these developments in a regular, structured manner, are also important.

Some of these suggestions represent low-hanging fruit which, if picked, could immediately enrich the monitoring of the performance of the DHS. Others, which include metrics and indicators that require specific surveys, are more complex and difficult, and the cost and time of collecting these data need to be weighed against their utility.
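The OHSC certification figure cited above, and the kind of triangulation between self-assessment and independent inspection that is suggested, can be illustrated with a short sketch. The function names and the facility records are hypothetical and for illustration only; just the counts 473 and 2 410 come from the text, and no real data format of the OHSC or the ideal clinic programme is implied.

```python
def certification_rate(certified, inspected):
    """Percentage of inspected facilities that were certified, to one decimal."""
    if inspected <= 0:
        raise ValueError("inspected must be a positive count")
    return round(certified * 100 / inspected, 1)


def discordant_facilities(records):
    """Facilities rated 'ideal' on self-assessment but not certified by the OHSC."""
    return [r["name"] for r in records
            if r["ideal_clinic"] and not r["ohsc_certified"]]


# Figures quoted in the text for 2019/20 to 2022/23.
print(certification_rate(473, 2410))  # 19.6

# Hypothetical facility records, for illustration only.
records = [
    {"name": "Clinic A", "ideal_clinic": True, "ohsc_certified": True},
    {"name": "Clinic B", "ideal_clinic": True, "ohsc_certified": False},
    {"name": "Clinic C", "ideal_clinic": False, "ohsc_certified": False},
]
print(discordant_facilities(records))  # ['Clinic B']
```

Listing the discordant facilities, rather than only comparing two headline percentages, shows where the two tools disagree and is one possible starting point for reconciling them.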

Conclusion

We suggest that the current way in which the DHS performance is measured needs to be modified. There are too many programmatic indicators, which are unbalanced and weighted in favour of specific diseases. In addition, there is a dearth of indicators, and therefore inadequate monitoring, for very important specific components of the health system, such as patient responsiveness, equity and governance.

In a subsequent article we will discuss and make the case for a selected number of specific indicators, which are realistically available, which will enhance the current monitoring of district performance. In addition, we will also argue for the accelerated implementation of digital innovations, better role definition of national, provincial and district spheres, and wider participation in monitoring processes, including specifically community-led voices. Furthermore, we will argue for the better use of data and M&E results so that managers and policy makers at all levels engage in objective and scientific decision-making.

Declaration. None.

Acknowledgements. We thank the Health Systems Trust and the District Health Barometer for the use and duplication of Figs 1 and 2, and the WHO for the use and duplication of Figs 3 and 4.

Author contributions. PB conceptualised the article, which was then reviewed and critiqued by all the other authors. The first draft was written by PB, which then went through two rounds of comments and editing from all the authors. All authors contributed significantly to the final article.

Funding. The publication costs are funded by the University of the Western Cape. There is no other funding involved.

Conflicts of interest. None.

References

1. Owen CP. A Policy for the Development of the District Health System for South Africa. Johannesburg: Health Policy Coordinating Unit, on behalf of the National District Health Systems Committee, 1995.

2. National Treasury, South Africa. 2024 Medium Term Expenditure Framework. Technical Guidelines. https://www.treasury.gov.za/publications/guidelines/MTEF%202024%20Guidelines.pdf (accessed 5 September 2023).

3. South Africa. National Health Insurance Bill. As amended by the Portfolio Committee on Health (National Assembly). https://www.parliament.gov.za/storage/app/media/Bills/2019/B11_2019_National_Health_Insurance_Bill/B11B_2019_National_Health_Insurance_Bill.pdf (accessed 5 September 2023).

4. Bitton A, Fifield J, Ratcliffe H, et al. Primary healthcare system performance in low-income and middle-income countries: A scoping review of the evidence from 2010 to 2017. BMJ Global Health 2019;4:e001551. https://doi.org/10.1136/bmjgh-2019-001551

5. Papanicolas I, Rajan D, Karanikolos M, Soucat A, Figueras J. Health system performance assessment: A framework for policy analysis. Geneva: World Health Organization, 2022.

6. Karamagi HC, Tumusiime P, Titi-Ofei R, et al. Towards universal health coverage in the WHO African Region: Assessing health system functionality, incorporating lessons from COVID-19. BMJ Global Health 2021;6:e004618. https://doi.org/10.1136/bmjgh-2020-004618

7. South Africa. National Health Act No. 61 of 2003.

8. Hunter J, Asmall S, Ravhengani N, Chandran M, Tucker J-M, Mokgalagadi Y. The ideal clinic in South Africa: Progress and challenges in implementation. In: Padarath A, Barron P, eds. South African Health Review. Durban: Health Systems Trust, 2017.

9. Davén J, Madela N, Khoele A, Wishnia J, Blecher M. Finance. In: Massyn N, Barron P, Day C, Ndlovu N, Padarath A, eds. District Health Barometer 2018/19. Durban: Health Systems Trust, 2020.

10. Barron P, Day C, Loveday M, Monticelli F. The District Health Barometer Year 1. January - December 2004. Durban: Health Systems Trust, 2005. https://www.hst.org.za/publications/District%20Health%20Barometers/DHB_Year1.pdf (accessed 3 September 2023).

11. Massyn N, Day C, Ndlovu N, Padayachee T, eds. District Health Barometer 2019/20. Durban: Health Systems Trust, 2020. https://www.hst.org.za/publications/Pages/DHB2019-20.aspx (accessed 3 September 2023).

12. Murray C, Frenk J. A framework for assessing the performance of health systems. Bull World Health Org 2000;78(6):717-731. https://apps.who.int/iris/bitstream/handle/10665/268164/PMC2560787.pdf (accessed 3 September 2023).

13. World Health Organization. The World Health Report 2000. Health Systems: Improving Performance. Geneva: WHO, 2000.

14. World Health Organization. Everybody's Business - Strengthening Health Systems to Improve Health Outcomes. WHO's Framework for Action. Geneva: WHO, 2007.

15. World Health Organization, United Nations Children's Fund. Operational framework for primary health care: Transforming vision into action. Geneva: WHO, 2020. https://www.who.int/publications/i/item/9789240017832 (accessed 21 July 2023).

16. World Health Organization, United Nations Children's Fund. Primary health care measurement framework and indicators: Monitoring health systems through a primary health care lens. Geneva: WHO, UNICEF, 2022.

17. Sheikh K, Abimbola S. Learning health systems: Pathways to Progress. Flagship Report of the Alliance for Health Policy and Systems Research. Geneva: World Health Organization, 2021.

18. Boulle A, de Vega I, Moodley M, et al. Data Centre Profile: The Provincial Health Data Centre of the Western Cape Province, South Africa. Int J Population Data Science 2019;4(2):1143. https://doi.org/10.23889/ijpds.v4i2.1143

19. Ritshidze. Community led clinic monitoring in South Africa. https://ritshidze.org.za/ (accessed 14 July 2023).

20. Pillay Y, Museriri H, Barron P, Zondi T. Recovering from COVID lockdowns: Routine public sector PHC services in South Africa, 2019 - 2021. S Afr Med J 2022;113(1):17-23. https://doi.org/10.7196/SAMJ.2022.v113i1.16619

21. National Department of Health, South Africa. Ideal clinic definitions, components and checklists. April 2020, version 19, updated April 2023. https://www.idealhealthfacility.org.za/users/common/documents.php (accessed 20 July 2023).

22. Office of Health Standards Compliance, South Africa. Biannual Report October 2022 to March 2023. https://ohsc.org.za/publications/bi-annual-report-certification-of-health-establishments-october-2022-to-march-2023/ (accessed 21 July 2023).

23. Rispel R, Shisana O, Dhai A, et al. Achieving high-quality and accountable universal health coverage in South Africa: A synopsis of the Lancet National Commission Report. In: Moeti T, Padarath A, eds. South African Health Review 2019. Durban: Health Systems Trust, 2019. http://www.hst.org.za/publications/Pages/SAHR2019 (accessed 1 September 2023).

Correspondence:

P Barron

pbarron@iafrica.com

Accepted 15 October 2023