
SAMJ: South African Medical Journal

On-line version ISSN 2078-5135

Print version ISSN 0256-9574

SAMJ, S. Afr. med. j. vol.107 n.10 Pretoria Oct. 2017

http://dx.doi.org/10.7196/samj.2017.v107i10.12822

CME

Gaps in monitoring systems for Implanon NXT services in South Africa: An assessment of 12 facilities in two districts

D Pillay(I); C Morroni(II, III, IV, V); M Pleaner(VI); O A Adeagbo(VII); M F Chersich(VIII); N Naidoo(I); S Mullick(IX); H Rees(X)

(I) MPH; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

(II) MB ChB, DFSRH, DTM&H, MPH, MSc, PhD; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

(III) MB ChB, DFSRH, DTM&H, MPH, MSc, PhD; Women's Health Research Unit, School of Public Health and Family Medicine, Faculty of Health Sciences, University of Cape Town, South Africa

(IV) MB ChB, DFSRH, DTM&H, MPH, MSc, PhD; EGA Institute for Women's Health and Institute for Global Health, University College London, UK

(V) MB ChB, DFSRH, DTM&H, MPH, MSc, PhD; The Botswana-UPenn Partnership, Gaborone, Botswana

(VI) MEd; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

(VII) MA, PhD; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

(VIII) MB BCh, PhD; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

(IX) MB ChB, MSc, MPH, PhD; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

(X) MB BChir, MA (Cantab), MRCGP, DCH, DRCOG; Wits Reproductive Health and HIV Institute, Faculty of Health Sciences, University of the Witwatersrand, Johannesburg, South Africa

ABSTRACT

BACKGROUND. Implanon NXT, a long-acting subdermal contraceptive implant, was introduced in South Africa (SA) in early 2014 as part of an expanded contraceptive method mix. After initial high levels of uptake, reports emerged of frequent early removals and declines in use. Monitoring of progress and challenges in implant service delivery could identify aspects of the programme that require strengthening.

OBJECTIVES. To assess data management and record keeping within implant services at primary care facilities.

METHODS. We developed a checklist to assess the tools used for monitoring implant services and data reporting to district offices. The checklist was piloted in seven facilities. An additional six high-volume and six low-volume implant insertion clinics in the City of Johannesburg (CoJ), Gauteng Province, and the Dr Kenneth Kaunda District, North West Province, were selected for assessment.

RESULTS. All 12 facilities completed a Daily Head Count Register, which tallied the number of clients attending the clinic, but not information about implant use. A more detailed Tick Register recorded services that clinic attendees received, with nine facilities documenting the number of implant insertions and six documenting implant removals. A more specific tool, an Insertion Checklist, collected data on insertion procedures and client characteristics, but was only used in CoJ (five of six facilities). Other registers, which were developed de novo by staff at individual facilities, captured more detailed information about insertions and removals, including reasons for removal. Five of six low-volume insertion facilities used these registers, but only three of six high-volume facilities. No facilities used the form specifically developed by the National Department of Health for implant pharmacovigilance. Nine of 12 clinics reported data on numbers of insertions to the district office, six reported removals and none provided data on reasons for removals.

CONCLUSION. For data to inform effective decision-making and quality improvement in implant services in SA, standardised reporting guidelines and data collection tools are needed, reinforced by staff training and quality assessment of data collection. Staff often took the initiative to fill gaps in reporting systems. Current systems are unable to accurately monitor uptake or discontinuation, or identify aspects of services requiring strengthening. Lack of pharmacovigilance data is especially concerning. Deficiencies noted in these monitoring systems may be common to family planning services more broadly, which warrants investigation.

In 2012, the National Department of Health (DoH) adopted the National Contraception and Fertility Planning Policy. The overarching goal of this policy was to expand the country's contraceptive method mix by promoting long-acting reversible contraceptive (LARC) methods, such as the subdermal contraceptive implant.[1] The policy notes that injectable contraceptives account for half of contraceptive use nationally and for up to 90% in some areas,[1] and that this predominance of short-term methods has several drawbacks. Many women discontinue injectable methods or return late for their next injection,[1,2] placing them at risk of unintended pregnancy. The introduction of Implanon NXT, a long-acting subdermal contraceptive implant, in the public sector in 2014 thus aimed to provide a more effective contraceptive alternative.[3] Contraceptive implants such as Implanon NXT, which offers 3 years of protection against pregnancy, are highly effective and acceptable across multiple settings.[4,5]

In the year after the launch of Implanon NXT, the DoH reported that ~800 000 implants had been inserted and that >6 000 healthcare providers had been trained in implant provision (T Zulu. Updates on the implant roll-out in South Africa: National perspective - unpublished paper presented at a meeting, Pretoria 2015). The DoH estimated that by April 2015 ~5 000 removals had been recorded, and that this figure had been rising steadily over time. The DoH, however, was increasingly concerned by negative media reports on the implant and by District Health Information System (DHIS) data showing a steady decline in uptake. Apart from a case report of a woman in South Africa (SA) who had the implant removed owing to bleeding irregularities 10 months after insertion,[6] and unconfirmed estimates from the DoH, the actual number of and reasons for implant removals are unknown. These gaps in data signal weaknesses in the monitoring systems of the family planning programme.

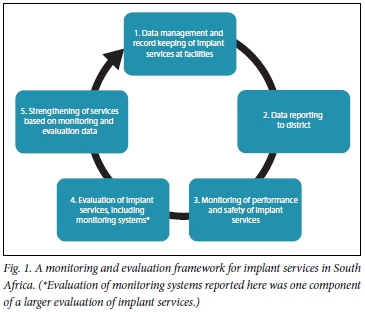

When new contraceptive methods are introduced, there may be delays in incorporating indicators for use and safety within existing information systems.[7] The analysis of routine monitoring data for new methods, such as the implant in SA, therefore needs to be supplemented by periodic evaluations and by more rigorous, independent assessments of specific aspects of the services, such as the quality of the routine monitoring systems. We therefore examined the data management and record-keeping systems used to monitor implant insertions and removals in two districts of SA, focusing on their quality and standardisation across facilities. Identifying and addressing gaps in these systems will enable the DoH and other stakeholders to monitor whether the goals of implant services are being achieved, and to identify aspects of the services that require strengthening, following the process shown in Fig. 1. These findings may also inform decisions around the selection of indicators for the programme and the design of data collection tools.

Methods

Study setting

This study, conducted in late 2016, formed one component of a larger evaluation in which we assessed the quality of implant services in SA. We selected six facilities in the City of Johannesburg (CoJ), Gauteng Province, and six in the Dr Kenneth Kaunda District (DKKD), North West Province. Sites were chosen based on the number of implant insertions recorded in the DHIS for 2015. The sampling frame in CoJ consisted of 17 primary care facilities, with a total of 1 045 insertions in 2015; in DKKD, sites were chosen from 40 facilities, with a total of 727 insertions. In each district, the three facilities with the highest and the three with the lowest number of insertions were included. In CoJ, high-volume clinics had inserted as many as 305 devices in 2015, while each of the three high-volume clinics in DKKD had inserted ~60. In both districts, most of the low-volume clinics had performed fewer than five insertions. All the selected facilities had been providing the implant since February 2014.
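The site-selection rule above (rank each district's sampling frame by 2015 DHIS insertion counts, then take the three highest and the three lowest) can be sketched in Python as follows. This is an illustrative sketch only: the facility names and counts are hypothetical, not study data, and breaking ties by facility name is our assumption, as the selection procedure for tied counts is not described.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Facility:
    name: str
    district: str
    insertions_2015: int  # implant insertions recorded in the DHIS for 2015


def select_sites(facilities, district, n_per_stratum=3):
    """Return (high_volume, low_volume) facilities for one district.

    Ranks the district's sampling frame from most to fewest insertions.
    Ties are broken alphabetically by name (an assumption for illustration).
    """
    frame = sorted(
        (f for f in facilities if f.district == district),
        key=lambda f: (-f.insertions_2015, f.name),
    )
    if len(frame) < 2 * n_per_stratum:
        raise ValueError("sampling frame too small for both strata")
    high = frame[:n_per_stratum]
    low = frame[-n_per_stratum:]
    return high, low


# Hypothetical sampling frame with illustrative counts only
frame = [
    Facility("Clinic A", "CoJ", 120),
    Facility("Clinic B", "CoJ", 90),
    Facility("Clinic C", "CoJ", 75),
    Facility("Clinic D", "CoJ", 20),
    Facility("Clinic E", "CoJ", 4),
    Facility("Clinic F", "CoJ", 2),
    Facility("Clinic G", "CoJ", 1),
]
high, low = select_sites(frame, "CoJ")
print([f.name for f in high])  # ['Clinic A', 'Clinic B', 'Clinic C']
print([f.name for f in low])   # ['Clinic E', 'Clinic F', 'Clinic G']
```

Facilities in the middle of the ranking (Clinic D here) are excluded, which is why the findings may not reflect clinics performing a moderate number of insertions.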

Development and piloting of a checklist tool

We developed a study checklist to assess the presence and content of monitoring tools for recording and reporting data on the implant at primary care level. The tool was designed in consultation with a clinician who had trained healthcare providers in implant provision and was familiar with implant services in SA and elsewhere. The checklist was then piloted in late 2015 in seven primary health facilities (three in CoJ and four in DKKD, distinct from the study sites). Pilot facilities were also selected based on the number of insertions done (three high- and four low-volume clinics). The research team used the pilot checklist to review the tools used to monitor implant use and the mechanisms for reporting data to district offices, and finalised the tool thereafter.

The final study checklist documented, for each of the five data collection tools identified during the pilot, whether the tool was present (captured as a binary variable: present or not), its purpose and the variables it collected (Table 1). The checklist also assessed the reporting of statistics from facility to district level.

Audit of monitoring tools in the study sites

During site visits, the study team used the study checklist to review the tools used for monitoring implant services (any tool used from February 2014 onwards). In addition, family planning providers and clinic data capturers were asked which data were reported to the district and how often. We present descriptive statistics for overall totals and for each district, and examine differences between high- and low-volume facilities.

Ethical approval

The study was approved by the University of the Witwatersrand Human Research Ethics Committee (ref. no. M151147).

Results

Data collection tools used for all facility attendees

All facilities had a Daily Reception Headcount Register, a standardised tool that was well established and maintained across all the facilities (Fig. 2). Although all clinics had a Primary Healthcare (PHC) Comprehensive Tick Register, different versions were being used in the two districts. The facilities in CoJ used the most recent version of the register, which included a section for recording both implant insertions and removals. DKKD facilities used an older version, which recorded only insertions. Overall, however, we observed that data on insertions were actually being captured in the PHC Comprehensive Tick Register in only nine of the 12 facilities, even though the registers in all clinics included implant insertion fields. Assessment of the Reception Headcount and PHC Tick registers during the pilot phase showed similar findings.

Data collection tools used only for implant insertions and removals

The Implant Insertion Checklist had been created specifically for CoJ and was present in all six facilities in the district, but was used in only five of them. No facilities in DKKD were using this or a similar checklist. However, in eight facilities (four in CoJ, four in DKKD), facility-based nurses had, on their own initiative, developed an Insertion and Removal Register to capture detailed data on implant insertions and removals. These registers consisted of either A4 sheets of paper or A5 books. While all eight captured data on insertions, only six captured data on removals. Such registers were also noted at several of the clinics visited during the pilot phase of the study.

The 'home-made' Insertion and Removal registers collected considerably more detailed information on insertions and removals than the other registers. The variables varied across facilities, but included the name of the provider who performed the insertion, the expected return date after insertion, the date of removal, the reason for removal and the name of the provider who removed the device. The registers also often contained data that could be used to identify aspects of the services requiring further attention or investigation. For example, one removal register showed that the implant had been removed in 14 clients who had presented with a broken implant. In these cases, which occurred over the course of a year, the broken devices had been discarded and the cases had not been communicated to the district or national DoH.

None of the 12 facilities was completing the Active Surveillance Reporting Form for Sub-Dermal Implant, and none of the staff was aware of the form's existence. Similarly, none of the seven sites in the pilot study was using this form.

Overall, 10 of the 12 facilities provided information to the district offices on the number of insertions done and nine reported removal numbers. No facilities reported the reasons for implant removals, or adverse events associated or potentially associated with the device.

Differences in monitoring of implant insertions and removals between high- and low-volume facilities

Recording of insertions in the PHC Comprehensive Tick Register was similar in high-volume (5/6) and low-volume (4/6) facilities (Fig. 3). Removals were recorded in the PHC Comprehensive Tick Register in all three high-volume and all three low-volume facilities in CoJ, and in none in DKKD. However, more low-volume facilities (5/6) than high-volume facilities (3/6) were using implant insertion registers. Half the high-volume and half the low-volume facilities used the implant removal register. All the high-volume facilities reported insertion statistics to the district, compared with only four of the six low-volume facilities. Likewise, all six high-volume facilities reported removal statistics to the district, using the PHC Comprehensive Tick Register and removal register as source data, compared with half of the low-volume facilities (3/6).

Discussion

The study shows major gaps in tools and standardisation of monitoring systems for implant services. Data are not systematically reported to district level; for example, a quarter of facilities do not submit numbers of removals. Overall, gaps in data collection and reporting, even in high-volume clinics, mean that the current DHIS underestimates the actual utilisation of the implant and gives little indication of the occurrence (and timing) of removals. The South African family planning programme is therefore unable to understand the true extent of, and reasons for, the decline in implant use. Notably, more high- than low-volume facilities were reporting both insertion and removal statistics to the district. Data are not available to guide the initiatives that are urgently needed to strengthen implant services in the country.[8] This is especially pressing given the importance of robust data for directing service delivery in the first years after the introduction of a contraceptive method.[9] Problems with new methods, if not corrected, often lead to withdrawal of methods from national programmes.[10]

The World Health Organization (WHO) framework for the introduction of new contraceptive methods suggests a three-stage process, which starts with determining a need for the new method according to end-user needs (Stage 1), conducting service delivery and end-user research (Stage 2), and exploring implications of research for utilisation of the method (Stage 3).[9] As per Stage 1, the DoH introduced the implant, recognising the need for an expanded method mix and methods that were not user dependent. This study addresses both Stage 2 (service-delivery research, monitoring in this instance) and Stage 3 (implications of assessment of monitoring for implant programmes). Poorly functioning monitoring systems hinder efforts of Stage 2 and compromise any efforts to strengthen services in Stage 3.

The Policy Project suggests that a performance-monitoring system should not be developed in a vacuum, but rather constitute an integral part of the overall service delivery system that is capable of identifying problems and taking corrective actions.[7] Traditionally, too much emphasis has been placed on mere recording of new acceptors of contraception, without consideration of other pertinent information, such as method continuation and reasons for discontinuation,[7] as noted in this study. Additional data sources, such as a repeat of this evaluation, may be needed in a few years to assess improvements in monitoring systems and other gaps in programming.[11]

Furthermore, there is a need for disaggregated data on implant insertions and removals, such as by age and by whether women are new contraceptive users or method switchers. DHIS data are sourced from the PHC Tick Register, which is constrained as a monitoring tool by the limited data it gathers; it does not record age, for example. It is, however, encouraging that the PHC Tick Register has evolved over time, with later versions including data on removals, although these versions were not yet being used in DKKD. Deficiencies in the data collection tools were evidently apparent to health providers, who then developed their own tools to capture pertinent information. This demonstrates considerable initiative and resourcefulness among nurses, and illustrates their awareness of the importance of data collection. Although these data are useful for fine-tuning services at individual facilities, standardising data collection and reporting across clinics could inform district and even national programming.

Commodity use for the implant does not appear to be captured in the DHIS; such data would show numbers of devices ordered and returned, analogous to how antiretroviral drugs are monitored. Antiretroviral stocks are monitored by the DoH at drug depots and at facility level. Stock delivered to individual facilities is also captured, and stock ordering and returns are accounted for at drug depots. The Handbook of Indicators for Family Planning Program Evaluation,[12] developed through the Evaluation Project, suggests that commodities need to be monitored to measure service delivery operations. It recommends tracking quantities of contraceptives procured annually, quantities in stock at central stores, amounts distributed from central stores, and inventory levels and stock-outs at service-delivery points. These data could complement facility-level reporting, and together provide useful insights to inform the fine-tuning, reorientation and planning of service improvements.

Of particular concern is that data on adverse events and other aspects of pharmacovigilance of the implant are not being collected. Removals, and also insertions, can have complications, which should be brought to the attention of district and provincial authorities. Although complications are rare, a review of clinical studies of Implanon NXT showed that they occur in ~1% of insertions.[13] These included deep insertions with fibrous adhesions, and non-palpable or broken implants.[13] The Active Surveillance Form for Sub-Dermal Implants has not been rolled out to facilities, possibly because the number of data points it contains (24 variables) makes its completion onerous for health providers. If the purpose of this form is solely pharmacovigilance, a smaller set of more relevant indicators should be selected, and the revised form should then be put into use.

Study limitations

The study is limited by not having examined the quality and completeness of the data. Furthermore, the findings may not reflect the monitoring systems in the entire country, or those in clinics that perform a moderate number of insertions. The generalisability of the findings is, however, enhanced by the inclusion of 19 sites (seven pilot study sites and 12 study sites) across two provinces, encompassing both urban and peri-urban locations. Also, sampling of both high- and low-volume clinics allowed for more detailed analysis of data systems.

Conclusion

This study is the first assessment of data management and reporting structures for monitoring the contraceptive implant in SA. The findings highlight aspects of the monitoring system that need to be strengthened to provide timely, actionable information to guide improvements in the country's implant services. Our study underscores the need for standardised tools, and data collection and reporting guidelines. A single nationally standardised data collection tool could be developed, which consolidates and replaces the insertion checklist, insertion and removal registers, and pharmacovigilance forms. This could facilitate collection of data for a few carefully selected indicators of performance and obstacles to service delivery. Indicators need to be carefully considered and prioritised, so as to collect sufficiently detailed information without overburdening healthcare providers.[7] Clearly, data monitoring needs to extend beyond absolute counts of utilisation and discontinuation, which themselves are currently poorly collected. Other important data include client characteristics (especially age and most recent contraceptive method), insertion and removal dates, reasons for removals, details of removal procedures (e.g. duration of the procedure and complications) and contraceptive choice after removal. As an immediate step, the reporting of data from the PHC Tick Register could be strengthened to encompass insertions, removals and reasons for removal. Lastly, it is possible that deficiencies noted in this study are common to family planning services in SA in general, a concern that warrants further investigation.
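To illustrate how the data items listed above could be held in one consolidated record and summarised into a few indicators, a minimal Python sketch follows. It is hypothetical throughout: the field names, the example records and the 365-day cut-off for an "early" removal are our assumptions for illustration, and do not describe any existing DoH tool or definition.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ImplantRecord:
    """One client's implant episode (hypothetical field names)."""
    client_age: int
    previous_method: str                      # most recent contraceptive method
    insertion_date: date
    removal_date: Optional[date] = None
    removal_reason: Optional[str] = None
    removal_minutes: Optional[int] = None     # duration of removal procedure
    complications: Optional[str] = None
    method_after_removal: Optional[str] = None


def summarise(records, early_days=365):
    """Count insertions and removals, tally removals by reason, and count
    removals within early_days of insertion (cut-off is an assumption)."""
    removed = [r for r in records if r.removal_date is not None]
    by_reason = Counter(r.removal_reason or "not recorded" for r in removed)
    early = sum(
        1 for r in removed
        if (r.removal_date - r.insertion_date).days < early_days
    )
    return {
        "insertions": len(records),
        "removals": len(removed),
        "early_removals": early,
        "removals_by_reason": dict(by_reason),
    }


# Hypothetical records for illustration only
records = [
    ImplantRecord(22, "injectable", date(2015, 3, 1),
                  removal_date=date(2015, 11, 2), removal_reason="bleeding"),
    ImplantRecord(30, "none", date(2015, 4, 10)),
    ImplantRecord(19, "oral pill", date(2014, 6, 5),
                  removal_date=date(2016, 1, 20),
                  removal_reason="wants pregnancy",
                  method_after_removal="none"),
]
print(summarise(records))
# {'insertions': 3, 'removals': 2, 'early_removals': 1,
#  'removals_by_reason': {'bleeding': 1, 'wants pregnancy': 1}}
```

Because age and removal reason sit on the same record, the same data could be disaggregated by age group, which the PHC Tick Register cannot currently support.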

Acknowledgements. We thank the Department of Health for allowing us access to the health facilities, the anonymous reviewers of this article, clinics that participated, and Ntombizethu Dumakude for her contribution to tool development and the pilot study. We would also like to acknowledge the data collectors (Fortunate Gombela, Iris Sishi, Lindiwe Mbuyisa, Ntombenhle Twala, Siziwe Sidabuka and Tebogo Mokoena).

Author contributions. DP: project leader and responsible for project design, implementation and write-up, and supported by CM, MP, OAA, NN, MFC, and SM. HR: senior author, providing oversight and review.

Funding. Funding was received from the United Nations Population Fund (UNFPA), which also provided technical assistance.

Conflicts of interest. None.

References

1. National Department of Health. National Contraception and Fertility Planning Policy and Service Delivery Guidelines: A Companion to the National Contraception Clinical Guidelines. Pretoria: DoH, 2012.

2. Baumgartner JN, Morroni C, Mlobeli RD, et al. Timeliness of contraceptive reinjections in South Africa and its relation to unintentional discontinuation. Int Fam Plan Perspect 2007;33(2):66-74.

3. Patel M. Contraception: Everyone's responsibility. S Afr Med J 2014;104(9):644. https://doi.org/10.7196/SAMJ.8764

4. Rowlands S, Searle S. Contraceptive implants: Current perspectives. Open Access J Contracept 2014;5:73-84. https://doi.org/10.2147/OAJC.S55968

5. Dickerson LM, Diaz VA, Jordon J, et al. Satisfaction, early removal, and side effects associated with long-acting reversible contraception. Fam Med 2013;45(10):701-707.

6. Adams B. Subdermal implants: A recent addition to the choice of South African contraceptives. Prof Nurs Today 2015;19(2):32-34.

7. United States Agency for International Development. The POLICY project - performance monitoring for family planning and reproductive health programs: An approach paper. Geneva: USAID, 1996. http://policyproject.com/pubs/workingpapers/wps-01.pdf (accessed 4 September 2017).

8. Adamchak SE, Okello FO, Kaboré I. Developing a system to monitor family planning and HIV service integration: Results from a pilot test of indicators. J Fam Plann Reprod Health Care 2016;42(1):24-29. https://doi.org/10.1136/jfprhc-2014-101047

9. Spicehandler JSR. Contraceptive Introduction Reconsidered: A Review and Conceptual Framework. Geneva: WHO, 1994. http://who.int/reproductivehealth/publications/family_planning/HRP_ITT_94_1/en/ (accessed 4 September 2017).

10. Pleaner M, Morroni C, Smit J, et al. Lessons learnt from the introduction of the contraceptive implant in South Africa. S Afr Med J 2017;107(11) (accepted for publication).

11. Igras S, Sinai I, Mukabatsinda M, Ngabo F, Jennings V, Lundgren R. Systems approach to monitoring and evaluation guides scale up of the Standard Days Method of family planning in Rwanda. Glob Health Sci Pract 2014;2(2):234-244. https://doi.org/10.9745/GHSP-D-13-00165

12. Bertrand JTMR, Rutenberg N. Handbook of Indicators for Family Planning Program Evaluation. Geneva: USAID, 1994.

13. Evans R, Holman R, Lindsay E. Migration of Implanon®: Two case reports. J Fam Plann Reprod Health Care 2005;31(1):71-72. https://doi.org/10.1783/0000000052973068

Correspondence:

D Pillay

dianthapillay@gmail.com

Accepted 23 August 2017