South African Journal of Education

Online version ISSN 2076-3433

Print version ISSN 0256-0100

S. Afr. j. educ. vol.43 no.4 Pretoria Nov. 2023

http://dx.doi.org/10.15700/saje.v43n4a2230

ARTICLES

Comparing the technological pedagogical content knowledge-practical proficiency of novice and experienced life sciences teachers

Umesh Ramnarain; Memory Malope

Department of Science and Technology Education, Faculty of Education, University of Johannesburg, Johannesburg, South Africa uramnarain@uj.ac.za

ABSTRACT

The purpose of this study was to assess and compare the technological pedagogical content knowledge-practical (TPACK-Practical, TPACK-P) proficiency of novice and experienced South African life sciences teachers. A quantitative design was followed and 155 life sciences teachers participated. A 17-item questionnaire was administered to establish the teachers' TPACK-P proficiency levels. Five TPACK-P proficiency levels were identified based on the questionnaire responses, namely: level 0 (lack of use of information communications technology [ICT]); level 1 (basic understanding of ICT use); level 2 (simple adoption of ICT use); level 3 (infusive application of ICT); and level 4 (reflective application of ICT use). The ANOVA test showed that there was no significant difference between the TPACK-P proficiency of novice and experienced teachers. Based on responses to the questionnaire, the majority of the teachers who participated in the study demonstrated level 3 proficiency. A small percentage of teachers in each group displayed level 1, 2, or 4 proficiency. We conclude with a recommendation for professional development to better support teachers' TPACK-P. Such a professional development programme should aim to instil in life sciences teachers a deeper appreciation of ICT and to capacitate them to integrate ICT in their classroom practice, so that they can conduct lessons that are more meaningful to learners.

Keywords: life sciences teacher; pedagogical content knowledge (PCK); Rasch analysis (RA); technological pedagogical content knowledge-practical (TPACK-P) proficiency level; technology integration

Introduction

The emergence of new educational technologies pushes teachers to understand and leverage these technologies for classroom use (Sharma, 2020). The Gauteng Member of the Executive Council (MEC), Panyaza Lesufi, told reporters on 20 July 2015 that the Gauteng Department of Education (GDE) had spent almost R2 billion on its "paperless classroom" programme, which entails a move towards learning using tablets (Matwadia, 2018:1). The GDE also aims to replace chalkboards with interactive whiteboards and to spend up to R37 billion on the project. The integration of information communications technology (ICT) for teaching and learning is, therefore, of considerable interest in teacher education.

Teachers need to realise the potential of technology for teaching and learning. Twining and Henry (2014) argue that teachers' use of digital resources in the classroom can play an important role in improving learning. Additionally, well-planned lessons in which technology is used help learners to acquire the skills they need to survive in a complex, highly technological, knowledge-based economy (Dudko, 2016). In the digital age, one of the key roles of teachers is to help learners become digitally literate and competent, and thereby meet the global demand for digital skills (Avidov-Ungar & Forkosh-Baruch, 2018). Technology has the potential to advance learner knowledge and skills through both cooperative and autonomous learning (Little & Throne, 2017). At the same time, the use of technology in the school curriculum can add value to curricula and transform learners into constructors of knowledge (Ilomäki & Lakkala, 2018). Yet numerous studies have concluded that integrating technology into classroom practice is a slow and complex process (e.g. Dele-Ajayi, Fasae & Okoli, 2021; Ramnarain & Penn, 2021; Tweed, 2013). Teachers' professional development needs in ICT skills and competencies have been flagged as critical for teaching and learning (Dlamini & Mbatha, 2018). The effective use of ICT in the classroom depends not only on teachers' ICT skills but also on their ability to understand and apply the pedagogy of using ICT as a learning tool. Such ability is often acquired through experience in using ICT. However, the literature reveals a gap in analyses of the role that experience plays in integrating ICT in teaching.

With this study we compared the competency level of novice and experienced life sciences teachers in the integration of ICT in their classroom practice.

Literature Review

Shulman introduced the pedagogical content knowledge (PCK) model to the field of education in the 1980s, after noting that educational policies ignored content and focused largely on basic pedagogy. PCK is the knowledge that teachers develop through experience about how to teach particular content in particular ways in order to enhance student understanding (Loughran, Berry & Mulhall, 2012). The introduction of technology led to a reconfiguration of PCK. Koehler, Mishra and Cain (2013) noticed that technological knowledge was treated as a knowledge set outside of, and unconnected to, PCK. Consequently, they added the element of technology to Shulman's PCK concept. After 5 years of research, Mishra and Koehler created a new framework. The concept of technological pedagogical content knowledge (TPCK) was introduced to scholarly research as a theoretical framework for understanding the knowledge that teachers require for effective technology integration (Mishra & Koehler, 2006). The term "TPCK" gained popularity in 2006 when Mishra and Koehler presented their seminal work outlining the model and describing each of its central variables (Graham, 2011). TPCK remained the acronym used in the literature until 2008, when educational researchers in the community of practice proposed the more easily pronounceable term TPACK (Graham, 2011). TPACK refers to a thoughtful understanding of how teaching and learning change when particular technologies are used. Koehler et al. (2013:16) defined TPACK as "the basis of effective teaching with technology requiring an understanding of the representation of concepts using technology."

Graham (2011) suggests that TPACK has the potential to build a strong foundation for planning and teaching technologically-integrated lessons. In the education community, the TPACK framework has become a popular construct for examining the sets of knowledge that teachers need to teach a subject effectively and achieve technology integration (Ghavifekr & Rosdy, 2015).

Van Driel, Verloop and De Vos (1998) regarded teaching experience as a major component of teachers' PCK, and the same may apply to TPACK. The experience of teachers in using ICTs can be an indicator of their proficiency in TPACK (Jang & Tsai, 2012). In this study we examined the relationship between teaching experience and TPACK proficiency by comparing novice and experienced life sciences teachers. According to Mathipa and Mukhari (2014), one of the factors at play in the implementation of ICT in teaching and learning is teachers' proficiency in ICT integration. Seasoned teachers who are comfortable with traditional, teacher-centred ways of teaching tend to be reluctant to apply new and innovative teaching methods (Makgato, 2012), which points to the need to investigate and compare the TPACK competency of novice and experienced teachers when integrating ICT.

Conceptual Framework

TPACK-P framework

Although various models of TPACK exist, the technological pedagogical content knowledge-practical (TPACK-P) model was considered appropriate for framing this investigation because it is based on both knowledge and experience, delineating the practical TPACK that teachers have developed from teaching practice (Yeh, Hsu, Wu, Hwang & Lin, 2014). TPACK-P is an extension of the TPACK model and is regarded as useful in understanding the knowledge construction that teachers in the high-tech era need to attain meaningful engagement in classroom teaching and learning (Srisawasdi, 2012). Moreover, knowledge of the skills and strategies to employ in the classroom for effective delivery of a topic and for assessing learners during classroom practice is essential for imparting knowledge that learners can relate to and apply outside the classroom (Yeh et al., 2014).

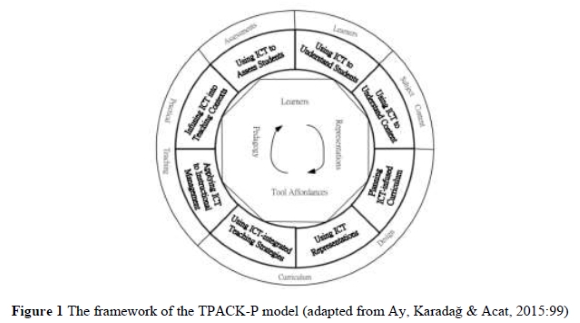

The TPACK-P model consists of three knowledge domains, namely, assessment, curriculum, and teaching practice. These three domains can be further refined into eight knowledge constructs, as shown in Table 1.

The three knowledge domains are categorised into five pedagogical areas in which teachers' competencies must be developed. The five pedagogical areas are learner content, subject content, curriculum design, practical teaching, and assessments. Figure 1 shows the eight knowledge dimensions of teachers' TPACK-P in five pedagogical areas.

The three knowledge domains of TPACK-P are discussed below.

Assessment domain

Assessment is an integral part of all teaching practice and is pivotal in monitoring learners' progress. It allows the teacher to determine whether the learners understand the topic that has been taught and can construct their own knowledge (Yeh, Hwang & Hsu, 2015). Huba and Freed (2000) define assessment as the process of gathering and discussing information from multiple and diverse sources in order to develop a deep understanding of what learners know and understand, and what they can do with their new knowledge. The process ends when assessment results are used to improve subsequent learning (Huba & Freed, 2000). Yeh et al. (2015:79) added that "formative assessment informs the teacher and empowers the process of teaching and learning since it provides checkpoints and feedback for teachers and students on their progress, as it is common to see students develop alternative concepts compared to those being taught to them, also referred to as faulty understanding."

Teachers' knowledge in ICT-infused assessment is critical because, with the use of technology, learners can be continuously assessed during the lesson (Yeh et al., 2015). The teacher can use video simulations, animations, or PowerPoint presentations that can demonstrate the concepts, probe the problem, and allow the learners to respond. This also allows the teacher to self-reflect on their own effectiveness in teaching the concept. They can then use the feedback from their reflection to adopt a better method of teaching and learning (Yeh et al., 2015).

Planning and design domain

Teachers are expected to plan and design upcoming lessons using their insights into pedagogy and content obtained from their professional training and prior experience, to match learners' needs with the intended outcomes (Sherin & Drake, 2009). Therefore, the teacher must investigate what the learners will be taught and how they will be taught. Shulman (1986) reminded us that the way in which the teacher plans and designs the lesson is an indication of the teacher's PCK. The teacher must have good knowledge and clear appreciation of the benefits of technology in lesson preparation in order to plan and design appropriate lessons that can meet the needs of learners and the curriculum (Angeli & Valanides, 2009). Good knowledge of available technology will also enable them to choose the most appropriate technological support.

Practical teaching domain

This domain can be viewed as the actual activities that teachers implement in the classroom. Shulman (1986:9) refers to this as the "wisdom of practice." Practical teaching occurs when the teacher interacts with the learners during the lesson. It should support and guide learners towards the desired objective while they explore nature and various concepts and develop an enquiring mind (Yeh et al., 2015). Yeh et al. (2015:80) explain that ICT-infused lessons "offer stimuli" such that successful integration of appropriate technology in classroom practice allows learners to explore and visualise nature or concepts while in the classroom.

Yeh et al. (2015) postulate that a teacher's proficiency in TPACK-P will improve with teaching experience. Therefore, developing TPACK-P is a gradual process that depends on the frequency with which teachers use technology in their practice. Thus, it is expected that experienced teachers have stronger TPACK-P than novice or pre-service teachers. Mulholland and Wallace (2005) agree, stating that science teachers' PCK requires the longitudinal development of experience as they develop from novice teachers into experienced teachers.

With this study we investigated this postulate by comparing the competency level of novice and experienced life sciences teachers in the integration of ICT in their classroom practice. The study was guided by the following question: How do the TPACK-P proficiencies of novice and experienced life sciences teachers compare?

Methodology

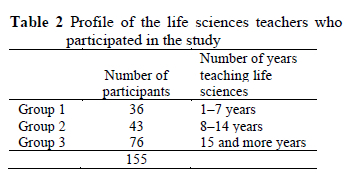

A quantitative design was followed whereby a TPACK-P questionnaire was distributed to 155 life sciences teachers in the vicinity of the schools where the researcher taught. The sample was therefore both purposive and convenient, as the teachers were easy to access and available when required. Table 2 shows the profile of the life sciences teachers who participated in the study.

A 17-item ordered multiple-choice questionnaire developed by Yeh et al. (2014) was adopted for this research. The items on the questionnaire were designed to determine life sciences teachers' understanding and integration of various technologies in classroom instruction (Yeh et al., 2014). The content validity of the questionnaire is therefore confirmed. The questionnaire was divided into three categories, namely, assessment, curriculum design, and teaching practice, which correspond with the three domains of TPACK-P (Yeh et al., 2014). Each item has four response options, each of which corresponds to a different level of competency in integrating ICT in classroom practice. For instance, teachers at level 4 apply ICT reflectively and are considered highly competent in the understanding and integration of technology. Level 3 participants infuse ICT into their classroom practice. Level 2 teachers merely adopt ICT in their classroom practice, and, lastly, level 1 indicates low levels or a lack of ICT use in classroom practice.
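To make the scoring concrete, the sketch below shows how an ordered multiple-choice response might be converted into a level score: each of an item's four options maps to one of levels 1 to 4, and an item left blank is scored 0 (as the article describes for non-responses). The option-to-level key `ITEM_KEYS` is entirely hypothetical and covers only two items; the actual key for the Yeh et al. (2014) items is not reproduced here.

```python
from typing import Optional

# Hypothetical option-to-level keys for two items (the real item keys are not shown here).
ITEM_KEYS: dict[int, dict[str, int]] = {
    1: {"A": 2, "B": 4, "C": 1, "D": 3},
    2: {"A": 3, "B": 1, "C": 4, "D": 2},
}


def score_response(item: int, choice: Optional[str]) -> int:
    """Level (1-4) signalled by the chosen option; 0 if the item was left blank."""
    if not choice:
        return 0
    return ITEM_KEYS[item][choice.upper()]


def score_teacher(answers: dict[int, Optional[str]]) -> list[int]:
    """Score every keyed item for one teacher, in item order."""
    return [score_response(item, answers.get(item)) for item in sorted(ITEM_KEYS)]


print(score_teacher({1: "b", 2: None}))  # [4, 0]
```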

The instrument has been used previously in studies involving science teachers. For example, in Taiwan, Jen, Yeh, Hsu, Wu and Chen (2016) conducted a study involving a sample of 99 participants (52 pre-service and 47 in-service science teachers) and found no significant differences between the TPACK-P of in-service and pre-service teachers. The instrument has also been administered in South Africa to a group of pre-service science teachers. Because its content validity had been established and it had previously been used in the South African context, the instrument was deemed appropriate for use in this study.

The data collected in this study were used to compute scores for individuals at each of the four levels. This was achieved by entering the scores into a frequency distribution table for each response option chosen. The mean and standard deviation of responses at each of the four levels were calculated. The normality in the distribution of scores was assessed using Rasch analysis (RA), a mathematical modelling approach based on a latent trait that achieves stochastic (probabilistic) conjoint additivity, where conjoint means that persons and items are measured on the same scale and additivity refers to the equal-interval property of the scale (Boone, 2016). In this process, item values are calibrated and individual abilities are measured on a shared continuum that represents the latent trait.
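One possible reading of this tabulation step, sketched in Python under stated assumptions: for each teacher, count how many of their item scores fall at each level, then report the mean and sample standard deviation of those counts across teachers. The article does not specify the exact table layout, so `level_counts` and `level_summary` are illustrative helpers, and the three score vectors in the usage line are invented.

```python
from collections import Counter
from statistics import mean, stdev

LEVELS = (1, 2, 3, 4)


def level_counts(item_scores: list[int]) -> dict[int, int]:
    """How many of one teacher's item scores fall at each level."""
    counts = Counter(item_scores)
    return {level: counts.get(level, 0) for level in LEVELS}


def level_summary(all_scores: list[list[int]]) -> dict[int, tuple[float, float]]:
    """Mean and sample SD, across teachers, of the per-level counts."""
    tables = [level_counts(scores) for scores in all_scores]
    summary = {}
    for level in LEVELS:
        column = [table[level] for table in tables]
        summary[level] = (mean(column), stdev(column))
    return summary


# Three invented teachers with four item scores each (not study data).
summary = level_summary([[3, 3, 4, 2], [3, 1, 3, 3], [4, 4, 3, 3]])
print(summary[3])
```

Here `summary[3]` holds the mean and SD of how many level-3 responses each teacher gave.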

The sampled teachers were then grouped by years of teaching experience: group 1 consisted of life sciences teachers with 1 to 7 years of teaching experience; group 2, of those with 8 to 14 years; and group 3, of those with 15 or more years. The teachers in the three groups were then profiled (levels of competency) according to their scores on the questionnaire.
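The grouping rule can be stated as a small function. The name `experience_group` is hypothetical; the bands (1 to 7, 8 to 14, and 15 or more years) are the ones given in the text.

```python
def experience_group(years: int) -> int:
    """Experience band: 1 (1-7 years), 2 (8-14 years) or 3 (15 or more years)."""
    if years < 1:
        raise ValueError("the bands start at 1 year of teaching experience")
    if years <= 7:
        return 1
    if years <= 14:
        return 2
    return 3


print([experience_group(y) for y in (1, 7, 8, 14, 15, 30)])  # [1, 1, 2, 2, 3, 3]
```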

The data collected were analysed using Rasch modelling, which belongs to the family of item response theory (IRT) models. IRT is a way to analyse responses to tests or questionnaires. Multidimensional IRT is described as a generalisation of interactions between a person and a task in which the characteristics of the person are described by a vector of constructs (Yao & Schwarz, 2006).

Benjamin Wright and Mike Linacre devised the Wright map analysis (Boone, Staver & Yale, 2014). Multidimensional IRT is a suitable model for producing a Wright map. Using Rasch modelling, the thresholds of the 17 questions were established, and a Wright map was then generated from these thresholds. The data collected were used to compute scores for individuals at the five proficiency levels. The scores were entered into a frequency distribution table for each response option chosen, and the mean and standard deviation of responses at each of the five levels were calculated. The normality in the distribution of scores was assessed by calculating the Kolmogorov-Smirnov statistic.
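For reference, a minimal sketch of the one-sample Kolmogorov-Smirnov statistic D, comparing the empirical distribution of scores with a normal distribution whose mean and standard deviation are estimated from the same scores. It is an illustration, not the software the authors used. When the normal parameters are estimated from the sample, standard Kolmogorov-Smirnov critical values make the test conservative (the Lilliefors variant corrects for this), so only the statistic is computed here.

```python
import math
from statistics import mean, stdev


def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """CDF of a normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def ks_statistic_normal(scores: list[float]) -> float:
    """Largest gap between the empirical CDF and a normal CDF fitted to the scores."""
    xs = sorted(scores)
    n = len(xs)
    mu, sigma = mean(xs), stdev(xs)
    d = 0.0
    for i, x in enumerate(xs):
        f = normal_cdf(x, mu, sigma)
        # The empirical CDF jumps from i/n to (i+1)/n at x; check both sides of the jump.
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


print(round(ks_statistic_normal([-1.0, 0.0, 1.0]), 4))  # 0.1747
```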

The ANOVA test is a parametric test. Parametric analysis is used to test group means (Altman & Bland, 2009) because it relies on calculating and estimating the parameters of the groups' score distributions. These estimated parameters were used to compare the means of the three groups. The ANOVA test was used to determine whether there were any statistically significant differences between the means of the three groups (Altman & Bland, 2009). This test is considered appropriate for assessing the "statistical significance of the difference between three sample means for a single dependent variable" (McMillan & Schumacher, 1993:442). In this case, the dependent variable was TPACK-P competency (as determined by the instrument) and the independent variable was years of teaching experience (the three experience groups, from novice to experienced).

The three groups of teachers were clustered into profiles (levels of competency) according to their scores on the questionnaire. Reliability (internal consistency) was measured by calculating Cronbach's alpha. To confirm the factor structure of the scores obtained from the 17-item questionnaire (construct validity), confirmatory factor analysis was employed. The development of a construct map provides an empirical description of TPACK-P and of what it means to have more or less proficiency in TPACK-P.
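Cronbach's alpha compares the sum of the item variances with the variance of the total score: for k items, alpha = k/(k - 1) multiplied by (1 - sum of item variances / total-score variance). A minimal sketch follows, assuming a complete person-by-item score matrix with no missing values; the example matrix is invented.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha; scores[p][i] is person p's score on item i."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across persons, and of each person's total score.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 4))  # 0.6667
```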

Results

Reliability and Validity of Rasch Framework

An important consideration within a Rasch framework is "fit." As a quality control mechanism, fit evaluates how well the data conform to the Rasch model. If data deviate greatly from the Rasch model, the causes need to be investigated. Moreover, fit assists in investigating whether the items of an instrument measure one trait and whether the responses of individuals agree with that trait. In simpler terms, the fit analysis evaluates the validity of the instrument.

Mean-square statistics were used to evaluate the validity of the assessment, and two fit indices were computed. Infit, an inlier-pattern-sensitive fit statistic, is more sensitive to unexpected patterns of observations by persons on items that are roughly targeted on them (Linacre, 2012). Outfit, an outlier-sensitive fit statistic, is more sensitive to unexpected observations by persons on items that are relatively easy or difficult for them (Linacre, 2012). These indices "represent the differences between the Rasch model's theoretical expectation of the item performance and the performance actually encountered for that item in the data matrix" (Bond & Fox, 2015:57). The mean-square (MNSQ) values of the items should be close to 1 (Linacre, 2012). Values greater than 1.0 (underfit) indicate that the data are less predictable than the model expects, whereas values less than 1.0 (overfit) indicate that the data are more predictable than the model expects. The outfit value was 0.160144 and the infit value was 0.872. If these two values are added together, the infit and outfit MNSQ equal 1.03, which is read as 1. For all 17 questions of the instrument, the infit and outfit MNSQ were equal to 1.00.
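The two mean squares can be illustrated for a single item with ordered response categories. The sketch below assumes the partial credit model (the article does not name the Rasch variant used) and takes person measures `thetas` and item step difficulties `deltas` as already estimated, as Rasch software would supply them. Outfit is the unweighted mean of squared standardised residuals; infit weights each squared residual by its model variance, which makes it less affected by persons located far from the item.

```python
import math


def pcm_probs(theta: float, deltas: list[float]) -> list[float]:
    """Partial credit model probabilities for scores 0..len(deltas)."""
    # Category k has log-numerator sum_{j<=k} (theta - delta_j); category 0 has 0.
    log_num = [0.0]
    for delta in deltas:
        log_num.append(log_num[-1] + (theta - delta))
    top = max(log_num)  # subtract the max for numerical stability
    weights = [math.exp(v - top) for v in log_num]
    total = sum(weights)
    return [w / total for w in weights]


def item_fit(observed: list[int], thetas: list[float], deltas: list[float]) -> tuple[float, float]:
    """Return (infit MNSQ, outfit MNSQ) for one item across persons."""
    sum_sq_resid = 0.0
    sum_var = 0.0
    z_squared = []
    for x, theta in zip(observed, thetas):
        probs = pcm_probs(theta, deltas)
        expected = sum(k * p for k, p in enumerate(probs))
        var = sum((k - expected) ** 2 * p for k, p in enumerate(probs))
        resid = x - expected
        sum_sq_resid += resid ** 2
        sum_var += var
        z_squared.append(resid ** 2 / var)  # squared standardised residual
    infit = sum_sq_resid / sum_var            # information-weighted mean square
    outfit = sum(z_squared) / len(z_squared)  # unweighted mean square
    return infit, outfit


infit, outfit = item_fit(observed=[0, 1], thetas=[0.0, 0.0], deltas=[0.0])
print(infit, outfit)  # 1.0 1.0
```

With a single step the model reduces to the dichotomous Rasch model; in the toy usage line, both indices equal exactly 1.0, the value the model expects.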

The weighted likelihood estimation (WLE) reliability for the 17 items was 0.64, which is regarded as acceptable (Linacre, 2012).

TPACK-P proficiency levels of teachers

By applying Rasch modelling, the thresholds of the 17 questions were established by generating a Wright map (cf. Figure 2). The Wright map is arranged as a vertical histogram, with candidates on the left and items on the right. The left side shows the distribution of the measured ability of the candidates, from most able at the top to least able at the bottom. The items on the right side are distributed from the most difficult at the top to the least difficult at the bottom. In simpler terms, a Rasch item map aligns the distribution of item difficulties in logits with the distribution of person abilities in logits. A logit is defined as the natural logarithm of an odds ratio (Ludlow & Haley, 1995). Logits are interval-level units in which the Rasch-derived estimates of ability and difficulty are expressed.
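The logit scale can be illustrated with the dichotomous Rasch model, in which the probability of success depends only on the difference between person ability and item difficulty, both in logits. This is a simplified sketch: the TPACK-P items have four ordered options, so a polytomous extension of the model would be needed for the actual data.

```python
import math


def logit(p: float) -> float:
    """Natural log of the odds p / (1 - p)."""
    return math.log(p / (1.0 - p))


def rasch_probability(ability: float, difficulty: float) -> float:
    """Dichotomous Rasch probability of success; both arguments are in logits."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# A person located at the same point as an item has even odds of success.
print(rasch_probability(0.0, 0.0))                    # 0.5
# The logit of the success probability recovers the ability-difficulty gap.
print(round(logit(rasch_probability(1.2, 0.4)), 6))   # 0.8
```

Each additional logit of ability relative to an item multiplies the odds of success by e (about 2.72).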

Each item had four options that individually represent typical performances of teachers at levels 1 to 4. The Wright map denotes the overall distribution of the four options for each item and therefore shows life sciences teachers' TPACK-P proficiency levels distributed according to the teachers' individual measures. Figure 2 indicates that items requiring a high conceptual understanding of ICT integration were in the upper portion of the map, whereas items requiring a low level of conceptual understanding were at the bottom. As can be seen from the map, the majority of the items related to the teachers' ability to integrate ICT are situated below the average value (0.0 logit). This shows that the life sciences teachers had a good conceptual understanding of ICT integration in the classroom for lesson preparation, presentation, and assessment of learners. Items situated above the 0.0-logit mark were those that the life sciences teachers found very challenging, and the teachers experienced difficulties in conceptualising these types of items. It can be deduced from the frequency distribution on the Wright map that the life sciences teachers had a comprehensive conceptual understanding of ICT integration for classroom practice.

Based on their WLE scores, the teachers were allocated to proficiency levels 0, 1, 2, 3 and 4. To do so, the threshold bracket (average) of each proficiency level had to be determined. A threshold is defined as a level or point at which something would happen or cease to happen or take effect (Boone, 2016). The thresholds of proficiency levels 1, 2, 3 and 4 were located for the dimension of knowledge about TPACK-P at -1.46, -1.13, -0.34 and 0.48 logits (Wright map), respectively. These values were obtained by averaging the thresholds across the items. For instance, the level 1 threshold of -1.46 was calculated by adding the level 1 thresholds of questions 1 to 17 and dividing the total by 17: the total of -24.88 divided by 17 gives -1.46. The same process was followed for proficiency levels 2, 3 and 4. The level 1 threshold bracket was therefore estimated to be -1.46 to -1.13, and a teacher whose WLE fell in this range was allocated to level 1. The level 2 bracket was estimated to be -1.12 to -0.35, and a teacher whose WLE fell in this range was allocated to level 2. The level 3 bracket was estimated to be -0.34 to 0.47 and, lastly, the level 4 bracket was estimated to be 0.48 and greater; teachers with WLE scores of 0.48 or greater were therefore allocated to level 4.
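The averaging and allocation steps can be sketched as follows. `CUTS` holds the four reported thresholds; `average_threshold` and `proficiency_level` are hypothetical helper names. Two assumptions are made: WLE scores below -1.46 are placed at level 0, and a score exactly equal to a cut point is placed in the higher level (the reported brackets handle the boundaries slightly differently).

```python
from bisect import bisect_right
from statistics import mean

# Reported thresholds (logits): lower bounds of levels 1, 2, 3 and 4.
CUTS = [-1.46, -1.13, -0.34, 0.48]


def average_threshold(item_thresholds: list[float]) -> float:
    """Average one level's item thresholds across items, rounded to two decimals."""
    return round(mean(item_thresholds), 2)


def proficiency_level(wle: float) -> int:
    """Level 0-4 = number of cut points at or below the WLE (assumption: ties go up)."""
    return bisect_right(CUTS, wle)


# The level 1 arithmetic from the text: a total of -24.88 over 17 items.
print(round(-24.88 / 17, 2))  # -1.46
print([proficiency_level(w) for w in (-1.50, -1.20, -0.50, 0.10, 0.90)])  # [0, 1, 2, 3, 4]
```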

Level 4 indicates life sciences teachers' ability to integrate ICT, that is, having the necessary knowledge and skill to design a lesson, teach it, and then assess the learners on the lesson taught. Level 3 refers to life sciences teachers who infuse ICT in their classroom practice simply to guide the life sciences content. Life sciences teachers classified at this level seldom reflect on their ICT skills. At level 2, teachers simply adopt ICT during classroom practice, and no reflection is noted at this level; their teaching strategies that allow learners to explore the learning material are not well developed. Life sciences teachers at level 1 show low or no competency in using technology in classroom practice. This could be due to a lack of experience or a lack of exposure to the use of ICT, resulting in teaching and learning that are more teacher-centred. Lastly, teachers who failed to respond to an item were scored 0 for that item, a score taken to indicate the participant's inability to use ICT in classroom settings (Jen et al., 2016).

Table 3 shows the distribution of teachers in the three groups across proficiency levels. The highest proportion of teachers in all three groups was concentrated at level 3. This was determined by allocating teachers' responses to levels based on the TPACK-P thresholds explained earlier. Level 3 signifies the teachers' ability to infuse ICTs during classroom practice, so that learners are guided to discover and autonomously construct their scientific knowledge. The teachers in group 3 (15 or more years of teaching experience), however, showed a higher percentage (85.3%) at level 3 than the other two groups. Group 2 (8 to 14 years of teaching experience) had a higher percentage at level 4 (16.3%) than group 1 (13.9%) and group 3 (4%); group 3 had the lowest percentage at level 4. For proficiency level 2, the three groups differed by roughly 1%, with group 3 showing a slightly higher percentage (2.3%) than group 2.

Comparison of life sciences teachers' proficiency levels using the ANOVA test

The one-way analysis of variance (ANOVA) is used to determine whether there are any statistically significant differences between the means of three or more independent groups (Kishore, Jaswal & Mahajan, 2022). In this study, ANOVA was applied to the questionnaire results to compare the proficiency of the three groups.
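A minimal from-scratch sketch of the one-way ANOVA F-statistic: the between-group mean square (variation of group means around the grand mean) divided by the within-group mean square (variation of scores around their own group mean). It is illustrative only; the example groups are invented, not the study's data.

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for k independent groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)
    # Between-group sum of squares: group means around the grand mean, weighted by size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: each score around its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return ms_between / ms_within, df_between, df_within


f, df_b, df_w = one_way_anova([[1, 2, 3], [4, 5, 6]])
print(f, df_b, df_w)  # 13.5 1 4
```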

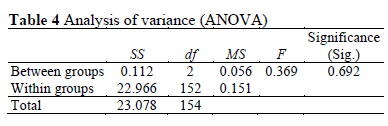

According to the analysis of variance (ANOVA) shown in Table 3, the differences among the three groups were not significant (p = 0.692). Teachers with 8 to 14 years of teaching experience had the highest mean TPACK-P of the three groups, but by a very small margin (group 1 [1-7 years] = 0.03 logits; group 2 [8-14 years] = 0.06 logits; group 3 [15+ years] = 0.00 logits).

The group means of 0.03 logits (group 1), 0.06 logits (group 2) and 0.00 logits (group 3) therefore differ by at most 0.06 logits. Table 4 indicates the statistical value of the difference between the three groups of life sciences teachers. The F-statistic is a ratio of variances. Variances are a measure of dispersion (how far the data are scattered from the mean), with larger values representing greater dispersion. The F-statistic is the ratio of the between-group mean square to the within-group mean square, where a "mean square" is an estimate of population variance that accounts for the degrees of freedom (df) used to calculate that estimate. According to Table 4, the F-statistic is 0.369. The difference between the group means is so small that it is not significant. Therefore, there was no significant difference between the three groups of life sciences teachers regarding their integration of ICT during classroom practice.
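For a one-way ANOVA with three groups, the numerator has 2 degrees of freedom, and in that special case the upper-tail probability of the F distribution has the closed form (1 + 2F/df2)^(-df2/2). The sketch below uses it as a consistency check, assuming that all 155 teachers entered the analysis so that df = (2, 152); the article does not report the degrees of freedom. Under that assumption, the reported F = 0.369 corresponds to p ≈ 0.692, in line with the reported p-value.

```python
def f_pvalue_df1_2(f: float, df2: int) -> float:
    """Upper-tail p-value of an F statistic with 2 numerator degrees of freedom.

    For df1 = 2 the F survival function is exactly (1 + 2F/df2) ** (-df2/2).
    """
    return (1.0 + 2.0 * f / df2) ** (-df2 / 2.0)


# Assumption: 155 teachers in 3 groups, so df = (2, 155 - 3) = (2, 152).
print(round(f_pvalue_df1_2(0.369, 152), 3))  # 0.692
```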

Discussion

The findings show that both novice and experienced life sciences teachers involved in this study were knowledgeable about the integration of ICT in their lesson plans and classroom practice, as indicated by the Rasch analyses, in which most teachers scored at proficiency level 3. Level 3 proficiency was reported as "infusive application." At this level, life sciences teachers are able to explain and describe technology integration during classroom practice. Moreover, the ANOVA indicated that there was no significant difference between the teachers' proficiency levels in ICT integration (p = 0.692).

This finding agrees with that of a study by Jen et al. (2016) conducted with science teachers in Taiwan, where no significant TPACK-P differences were found between teachers with little experience (pre-service) and those with more than 5 years' experience (in-service). Most of the participants displayed knowledge about TPACK-P at levels 2 and 3, but their application was at level 1. However, experienced teachers displayed a greater collection of representations and more flexible teaching strategies in their PCK (Jen et al., 2016). The findings of our study therefore contribute to the emerging worldwide body of knowledge on the enactment of TPACK in practice. A similar finding emerged in a study with South African pre-service science teachers, in which the great majority of pre-service science teachers at a university had a proficiency level of 3 for their knowledge of TPACK-P (Ramnarain, Pieters & Wu, 2021).

Two limitations were identified. First, 200 life sciences teachers in Soweto schools were initially selected to participate in the study, but only 155 participated. In future, a larger sample including schools from different districts may produce results that are more generalisable to the population. Second, classroom observation was excluded as a means of collecting qualitative data because of the restrictions imposed on schools during the coronavirus (COVID-19) pandemic. Classroom observation would have allowed us to explore the TPACK-P domains in practice and could have helped us to obtain an in-depth understanding of the life sciences teachers' TPACK-P proficiency levels.

The findings of this study reveal that across the three groups, a small percentage of life sciences teachers demonstrated proficiency levels of 1 and 2 (cf. Table 3). Table 3 shows that 8.3% of the teachers in group 1 operated at proficiency level 2, 9.3% of those in group 2 had a proficiency level of 1 or 2, and 10.6% of those in group 3 had a proficiency level of 1 or 2. These teachers either lacked the skills to integrate technology in their classrooms (proficiency level 1) or possessed only basic skills in integrating technology in their classrooms (proficiency level 2). They were lacking in the curriculum design and teaching practice domains of the TPACK-P model. They managed to indicate a basic understanding of ICT in assessments but could not describe how to use ICT to design a lesson or present a whole lesson. They were able to indicate universal principles of ICT usage (for example, they could identify the strengths and weaknesses of ICT) but failed to give a detailed description of how they would implement ICT in the class. It is therefore vital that teachers are trained to design lessons and teach topics using technological tools; otherwise, learners taught by teachers with low proficiency levels might be disadvantaged. Professional development is one way for teachers to gain knowledge about ICT integration.

Conclusion

In their study cross-validating the ranking of proficiency levels, Jen et al. (2016) noted that the four validated proficiency levels (levels 1, 2, 3, and 4), together with the typical performances of teachers at each level, can be viewed as a roadmap of science teachers' TPACK-P development. The gap between knowledge about TPACK-P and its application suggests that science teacher education programmes need to provide further practical experiences in supportive environments. Only when teachers gain practical experience of using technology to support science education, and learn from that experience, can their TPACK-P be further developed and strengthened (Jen et al., 2016).

To cultivate a culture in which ICT integration becomes the main pedagogical strategy in classroom practice, teachers need to develop a vision of teaching and learning (Ghavifekr & Rosdy, 2015). Poorly trained teachers tend to be fearful and to have low self-confidence about integrating technology in the classroom, and may therefore avoid using it (Jamieson-Proctor, Burnett, Finger & Watson, 2006). Johnson, Jacovina, Russell and Soto (2016) identified the absence of sustained professional development activities as one of the obstacles hindering the implementation of ICT in the classroom.

It is worth emphasising that TPACK-P develops over time: the more frequently teachers use technology in their classroom practice, the better developed their TPACK-P will be. We recommend that future studies collect qualitative data from classroom observations to establish how teachers' measured TPACK-P competencies are reflected in their classroom practice.

Authors' Contributions

UR wrote the article and contributed to the data analysis and interpretation of the findings. MM collected data and did the data analysis.

Notes

i. This article is based on the dissertation of Memory Malope.

ii. Published under a Creative Commons Attribution Licence.

References

Altman DG & Bland JM 2009. Parametric v non-parametric methods for data analysis. BMJ, 338:a3167. https://doi.org/10.1136/bmj.a3167

Angeli C & Valanides N 2009. Epistemological and methodological issues for the conceptualization, development, and assessment of ICT-TPCK: Advances in technological pedagogical content knowledge (TPCK). Computers & Education, 52(1):154-168. https://doi.org/10.1016/j.compedu.2008.07.006

Avidov-Ungar O & Forkosh-Baruch A 2018. Professional identity of teacher educators in the digital era in light of demands of pedagogical innovation. Teaching and Teacher Education, 73:183-191. https://doi.org/10.1016/j.tate.2018.03.017

Ay Y, Karadağ E & Acat MB 2015. The Technological Pedagogical Content Knowledge-practical (TPACK-Practical) model: Examination of its validity in the Turkish culture via structural equation modeling. Computers & Education, 88:97-108. https://doi.org/10.1016/j.compedu.2015.04.017

Bond TG & Fox CM 2015. Applying the Rasch model: Fundamental measurement in the human sciences (3rd ed). Mahwah, NJ: Lawrence Erlbaum Associates.

Boone WJ 2016. Rasch analysis for instrument development: Why, when, and how? CBE-Life Sciences Education, 15(4):rm4, 2-rm4, 7. https://doi.org/10.1187/cbe.16-04-0148

Boone WJ, Staver JR & Yale MS 2014. Rasch analysis in the human sciences. London, England: Springer.

Dele-Ajayi O, Fasae OD & Okoli A 2021. Teachers' concerns about integrating information and communication technologies in the classrooms. PLoS ONE, 16(5):e0249703. https://doi.org/10.1371/journal.pone.0249703

Dlamini R & Mbatha K 2018. The discourse on ICT teacher professional development needs: The case of a South African teachers' union. International Journal of Education and Development using Information and Communication Technology, 14(2):17-37. Available at https://www.learntechlib.org/p/184684/. Accessed 30 November 2023.

Dudko SA 2016. The role of information technologies in lifelong learning development. In SV Ivanova & EV Nikulchev (eds). SHS Web of Conferences (Vol. 29). Les Ulis, France: EDP Sciences. https://doi.org/10.1051/shsconf/20162901019

Ghavifekr S & Rosdy WAW 2015. Teaching and learning with technology: Effectiveness of ICT integration in schools. International Journal of Research in Education and Science, 1(2):175-191. Available at https://files.eric.ed.gov/fulltext/EJ1105224.pdf. Accessed 30 November 2023.

Graham CR 2011. Theoretical considerations for understanding technological pedagogical content knowledge (TPACK). Computers & Education, 57(3):1953-1960. https://doi.org/10.1016/j.compedu.2011.04.010

Huba ME & Freed JE 2000. Learner-centered assessment on college campuses: Shifting the focus from teaching to learning. Boston, MA: Allyn & Bacon.

Ilomäki L & Lakkala M 2018. Digital technology and practices for school improvement: Innovative digital school model. Research and Practice in Technology Enhanced Learning, 13:25. https://doi.org/10.1186/s41039-018-0094-8

Jamieson-Proctor R, Burnett PC, Finger G & Watson G 2006. ICT integration and teachers' confidence in using ICT for teaching and learning in Queensland state schools. Australasian Journal of Educational Technology, 22(4):511-530. https://doi.org/10.14742/ajet.1283

Jang SJ & Tsai MF 2012. Exploring the TPACK of Taiwanese elementary mathematics and science teachers with respect to use of interactive whiteboards. Computers & Education, 59(2):327-338. https://doi.org/10.1016/j.compedu.2012.02.003

Jen TH, Yeh YF, Hsu YS, Wu HK & Chen KM 2016. Science teachers' TPACK-Practical: Standard-setting using an evidence-based approach. Computers & Education, 95:45-62. https://doi.org/10.1016/j.compedu.2015.12.009

Johnson AM, Jacovina ME, Russell DG & Soto CM 2016. Challenges and solutions when using technologies in the classroom. In SA Crossley & DS McNamara (eds). Adaptive educational technologies for literacy instruction. New York, NY: Routledge.

Kishore K, Jaswal V & Mahajan R 2022. The challenges of interpreting ANOVA by dermatologists. Indian Dermatology Online Journal, 13(1):109-113. https://doi.org/10.4103/idoj.idoj_307_21

Koehler MJ, Mishra P & Cain W 2013. What is technological pedagogical content knowledge (TPACK)? Journal of Education, 193(3):13-19. https://doi.org/10.1177/002205741319300303

Linacre JM 2012. Many-facet Rasch measurement: Facets tutorial. Available at http://winsteps.com/tutorials.htm. Accessed 13 June 2021.

Little D & Thorne S 2017. From learner autonomy to rewilding: A discussion. In M Cappellini, T Lewis & A Rivens Mompean (eds). Learner autonomy and Web 2.0. Bristol, CT: Equinox.

Loughran J, Berry A & Mulhall P 2012. Understanding and developing science teachers' pedagogical content knowledge (2nd ed). Rotterdam, The Netherlands: Sense.

Ludlow LH & Haley SM 1995. Rasch model logits: Interpretation, use, and transformation. Educational and Psychological Measurement, 55(6):967-975. https://doi.org/10.1177/0013164495055006005

Makgato M 2012. Identifying constructivist methodologies and pedagogic content knowledge in the teaching and learning of technology. Procedia - Social and Behavioral Sciences, 47:1398-1402. https://doi.org/10.1016/j.sbspro.2012.06.832

Mathipa ER & Mukhari S 2014. Teacher factors influencing the use of ICT in teaching and learning in South African urban schools. Mediterranean Journal of Social Sciences, 5(23):1213-1220. https://doi.org/10.5901/mjss.2014.v5n23p1213

Matwadia Z 2018. The influence of digital media use in classrooms on teacher stress in Gauteng schools. MEd dissertation. Pretoria, South Africa: University of South Africa. Available at https://core.ac.uk/download/pdf/219609609.pdf. Accessed 30 November 2023.

McMillan JH & Schumacher S 1993. Research in education: A conceptual introduction (3rd ed). New York, NY: Harper Collins College.

Mishra P & Koehler MJ 2006. Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6):1017-1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Mulholland J & Wallace J 2005. Growing the tree of teacher knowledge: Ten years of learning to teach elementary science. Journal of Research in Science Teaching, 42(7):767-790. https://doi.org/10.1002/tea.20073

Ramnarain U & Penn M 2021. An investigation of South African pre-service teachers' use of simulations in virtual physical sciences learning: Process, attitudes and reflections. In Y Cai, W van Joolingen & K Veermans (eds). Virtual and augmented reality, simulation and serious games for education. Singapore: Springer. https://doi.org/10.1007/978-981-16-1361-6_7

Ramnarain U, Pieters A & Wu HK 2021. Assessing the technological pedagogical content knowledge of pre-service science teachers at a South African university. International Journal of Information and Communication Technology Education, 17(3):123-136. https://doi.org/10.4018/IJICTE.20210701.oa8

Sharma V 2020. A literature review on the effective use of ICT in education. Journal of Computer Science and Technology Studies, 2(1):9-17. Available at https://www.alkindipublisher.com/index.php/jcsts/article/view/41/34. Accessed 30 November 2023.

Sherin MG & Drake C 2009. Curriculum strategy framework: Investigating patterns in teachers' use of a reform-based elementary mathematics curriculum. Journal of Curriculum Studies, 41(4):467-500. https://doi.org/10.1080/00220270802696115

Shulman LS 1986. Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2):4-14. https://doi.org/10.3102/0013189X015002004

Srisawasdi N 2012. Student teachers' perceptions of computerized laboratory practice for science teaching: A comparative analysis. Procedia - Social and Behavioral Sciences, 46:4031-4038. https://doi.org/10.1016/j.sbspro.2012.06.192

Tweed S 2013. Technology implementation: Teacher age, experience, self-efficacy, and professional development as related to classroom technology integration. PhD dissertation. Johnson City, TN: East Tennessee State University. Available at https://dc.etsu.edu/etd/1109. Accessed 27 June 2021.

Twining P & Henry F 2014. Enhancing 'ICT teaching' in English schools: Vital lessons. World Journal of Education, 4(2):12-36. https://doi.org/10.5430/wje.v4n2p12

Van Driel JH, Verloop N & De Vos W 1998. Developing science teachers' pedagogical content knowledge. Journal of Research in Science Teaching, 35(6):673-695.

Yao L & Schwarz RD 2006. A multidimensional partial credit model with associated item and test statistics: An application to mixed-format tests. Applied Psychological Measurement, 30(6):469-492. https://doi.org/10.1177/0146621605284537

Yeh YF, Hsu YS, Wu HK, Hwang FK & Lin TC 2014. Developing and validating technological pedagogical content knowledge-practical (TPACK-practical) through the Delphi survey technique. British Journal of Educational Technology, 45(4):707-722. https://doi.org/10.1111/bjet.12078

Yeh YF, Hwang FK & Hsu YS 2015. Applying TPACK-P to a teacher education program. In YS Hsu (ed). Development of science teachers' TPACK. Singapore: Springer. https://doi.org/10.1007/978-981-287-441-2_5

Received: 5 October 2021

Revised: 7 March 2023

Accepted: 13 September 2023

Published: 30 November 2023