South African Journal of Education

Online version ISSN 2076-3433

Print version ISSN 0256-0100

S. Afr. j. educ. vol.41 suppl.2 Pretoria Dec. 2021

http://dx.doi.org/10.15700/saje.v41ns2a1883

The curriculum tracker: A tool to improve curriculum coverage or just a tick-box exercise?

T Mkhwanazi; AZ Ngcobo; S Ngema; S Bansilal

School of Education, University of KwaZulu-Natal, Pinetown, South Africa. mkhwanazit2@ukzn.ac.za

ABSTRACT

Research on teacher professional development generally indicates that teachers do not readily take innovations on board. The study reported on here focused on the uptake of a curriculum tracker tool designed to improve curriculum coverage by mathematics teachers. The tool formed part of the Jika iMfundo (JiM) programme launched by the KwaZulu-Natal Department of Education and a partner organisation. The purpose of this study was to explore the extent to which secondary mathematics teachers and heads of department (HoDs) used the tools for their intended purposes. The study was carried out with teachers and HoDs from 14 schools located in two districts of KwaZulu-Natal. Data were generated from 21 interviews, supplemented by secondary data sourced from responses to previous surveys conducted by JiM. The findings show that most teachers regarded the tool as a tick-box activity rather than using it to guide their planning in a meaningful manner. Furthermore, there was misalignment between the planning undertaken by the provincial education department and that of JiM. It is crucial that teachers on the ground are consulted first, so that they can jointly identify how particular problems can be addressed, before any professional development activity is implemented.

Keywords: curriculum coverage; curriculum tracker; heads of department; Jika iMfundo; mathematics teachers

Introduction

There has been much concern about the low learning outcomes attained by South African learners. The Trends in International Mathematics and Science Study results from 1999 to 2015 show that South African learners' performance is consistently close to the lowest amongst the countries assessed (Reddy, Visser, Winnaar, Arends, Juan, Prinsloo & Isdale, 2016). At national level, the results of the Grade 9 Annual National Assessment (ANA) are similarly low: from 2012 to 2014 the national average for the mathematics ANA hovered between 11% and 14% (Department of Basic Education [DBE], Republic of South Africa [RSA], 2014). At Grade 12 level a similar trend of very low pass rates is evident in the National Senior Certificate examinations, where no more than 37% of mathematics learners obtained 40% or higher in any of the years 2015 to 2019 (DBE, RSA, 2020).

One of the reasons for the low outcomes appears to be that not all aspects of the curriculum are covered (DBE, RSA, 2016, 2020; Taylor, 2011). To target this problem, the JiM programme was launched in the King Cetshwayo and Pinetown districts by an implementing agency together with the KwaZulu-Natal Department of Education (KZN DoE), with the intention of improving the quality of curriculum coverage. Metcalfe and Witten (2019:338) define curriculum coverage improvement as "more learners learning more, and more at the appropriate depth." The belief was that improved curriculum coverage would lead to improved learning outcomes over the long term. Mathematics teachers were given a curriculum planner and tracker (CT), while heads of department (HoDs) were trained to use tools that could assist them in their support and monitoring functions. The intervention was intended to help mathematics teachers focus on tracking curriculum coverage, and to develop them professionally by encouraging professional conversations within groups. It was hoped that HoDs would engage in the monitoring process while also holding professional conversations with teachers. However, initial findings from JiM feedback instruments indicated that in the Pinetown district fewer than half of the mathematics teachers used the tracker routinely, and 37% of the HoDs had no curriculum management plans at all.

This study was designed to investigate this issue further by exploring the extent to which secondary mathematics teachers and HoDs used these tools for their intended purposes.

Literature Review

Some studies have identified low levels of curriculum coverage in mathematics as an explanation for persistently poor learning outcomes (Makhubele & Luneta, 2014; Reeves & Muller, 2005; Stols, 2013; Taylor, 2011). Reeves and Muller (2005) found that on average only 29% and 22% of the important mathematics topics were covered in Grade 5 and Grade 6 respectively. The study by Makhubele and Luneta (2014) shows that during terms two and three, Grade 9 mathematics teachers completed only 40% of the topics they were supposed to teach. In a similar study with Grade 12 mathematics teachers, Stols (2013) found that they spent only half of the recommended time on most topics.

Research studies also point to a strong and logical relationship between curriculum coverage and learner performance. Taylor (2011) reports that, for Grade 6 learners who completed more than 25 of the mathematics topics, there was a significant positive influence on their mathematics scores. Although annual teaching plans (ATPs) and pace setters (work programmes) were provided to teachers, Makhubele and Luneta (2014) found that the teachers did not use these resources for their lesson preparation and presentations. The ATP is a planner designed by the KZN DoE to advise teachers which topics should be covered and when. Metcalfe and Witten (2019:346) caution that resources which focus on pace (by requiring teachers to tick a box when activities are completed) rather than on the quality of learning are based on a "one-dimensional" and limited view of curriculum coverage.

Curriculum reform is a complex process, and Muller and Hoadley (2019) comment that there is no successful case of curriculum reform in the developing world. One factor that influences teachers' uptake of an intervention is whether they view it as something that will increase their workload or reduce the demands placed on them. Carless (1997) argues that teachers' preparedness to take on a new task is strongly influenced by their assumptions about how practical it will be to implement the intervention. Hence an intervention that would require a teacher to embark on major reorganisation, introduce new practices or duplicate existing processes is less likely to be adopted than one that is planned according to teachers' needs and does not represent a major disruption to existing practices. The degree of ownership by teachers and school leaders also influences the uptake of a curriculum innovation (Carless, 1997:352).

Adler (2000:37) reminds us that teacher learning is "a process of increasing participation in the practice of teaching, and through this participation, a process of becoming knowledgeable in and about teaching." Teacher learning is a dynamic process that results from the collaborative actions of teachers and learners as they work together, and a constructive process that is internalised by the participants (Kelly, 2006). These perspectives highlight the importance of ongoing dialogue, shared reflection and professional conversations between teachers in mediating teacher learning within professional learning communities. When teachers are involved in curriculum reform, it is even more important to create space for such professional conversations, so that teacher learning can be enabled within the context of that reform.

Theoretical underpinnings: Theory of change

The underlying beliefs and assumptions about how change could happen within a programme of action are referred to as a theory of change (Vogel, 2012). In the context of a teacher development initiative, a theory of change can take a reflexive approach (Stein & Valters, 2012) in order to develop a nuanced understanding of how teacher change might be taking place. To explore the extent to which the intervention achieved some of its outcomes, we drew on six theory of change constructs interpreted from Christie and Monyokolo (2018), namely analysis, long-term goals, assumptions, activities, short-term outcomes and external factors, as suggested by Stein and Valters (2012) and Vogel (2012).

The starting point of any theory of change is an analysis of the current situation. In the JiM intervention the problem identified was the low rate of curriculum coverage at most South African schools, which many stakeholders view as the main reason for low learning outcomes in mathematics. It was expected that if teachers and HoDs worked together to improve curriculum coverage, more learning opportunities would be made available to learners. Long-term goals refer to the main purpose of the intervention, which in this context was an improvement in actual curriculum coverage by schools, expected in turn to lead to an improvement in mathematics learning outcomes at the participating schools.

Articulating the assumptions underpinning an intervention ensures a common understanding of the main ideas related to the problem under consideration. In the JiM programme, the assumption seems to be that teachers are not aware that they are not covering enough of the curriculum, and that if a curriculum tracking tool is provided, teachers can use it to keep up with the curriculum. With regard to the HoDs, the assumption is that they need a tool to help them carry out their monitoring and support responsibilities.

Activities and inputs refer to the direct contributions made by the programme to achieve the short-term outcomes. At teacher level, JiM developed a tool called the CT, which supplemented the teachers' general planning and allowed them to track the extent of curriculum coverage while reflecting (in writing) on their teaching strengths and weaknesses. Supervision tools were developed for HoDs to monitor and support the teachers' efforts while regularly initiating professional conversations with teachers. The programme also included support workshops for HoDs to help them promote effective use of the CT.

Short-term outcomes are used as markers to indicate progress towards achievement of the main goals. In this context, the short-term outcomes for teachers were that they would be routinely using the CT for planning, tracking and reporting on their curriculum coverage, while also reflecting on their teaching (Metcalfe, 2018:52). With respect to HoDs, the outcome was regular checking of "teachers' curriculum tracking and learners' work" while supporting teachers to "improve curriculum coverage" (Metcalfe, 2018:52).

External factors refer to factors originating within or outside the context that present a challenge to the success of the programme. In this study, the external factors were identified through analysis of the data.

In this article, we explore the extent to which the intended short-term outcomes were achieved by answering the following research questions: 1) To what extent did the HoDs and the teachers use the curriculum tools for their intended purposes? and 2) What are teachers' perceptions of the external factors that negatively affect their use of the CT?

Methodology

This study formed part of a larger study (Mkhwanazi, Ndlovu, Ngema & Bansilal, 2018) which focused on identifying conditions influencing the use of the curriculum tools and explored the relationship between CT use and curriculum coverage. That study found that the few schools which used the tracker routinely were on track with curriculum coverage. However, for the majority of the schools there was either no evidence of authentic tracker use or evidence of only minimal use, and these schools also had very low rates of curriculum coverage. In this study we used a theory of change lens to better understand the teachers' views of the external factors which negatively influenced their use of the CT. We used a qualitative approach because of the need to find multiple ways of understanding the complexities of the teachers' and HoDs' experiences in working with the curriculum support tool (Creswell, 2009).

Data Generation

Sixteen schools from the Pinetown and King Cetshwayo districts were originally selected for the study on the criterion that they had participated in at least three of the four previous surveys conducted by the programme developers. However, two schools declined to participate in the interviews, leaving 14 participating schools. Data were generated from both secondary and primary sources. We drew on secondary data collected by the programme developers in four surveys: the School Review 1 Survey 2015 (Survey 1), the Self-Evaluation Survey 2016 (Survey 2), the Curriculum Coverage Survey 2016 (Survey 3) and the School Review 2 Survey 2016 (Survey 4) (for more details see Mkhwanazi et al., 2018).

In addition to these secondary data sources, we generated primary data during site visits to the 14 schools, consisting of 21 semi-structured interviews with teachers and HoDs as well as textual data including learners' exercise books and teachers' records, as indicated in Table 1. The project team, consisting of the four authors and postgraduate student researchers, conducted the interviews, which were intended to provide more insight into the teachers' and HoDs' uptake of the programme tools. The interviews were audio-recorded and then transcribed. In addition, we analysed Grade 9 learners' exercises in terms of the topics and amount of work covered. Table 1 provides details of the participants from each school, as well as the data sources we referred to in the interviews. We used pseudonyms for the schools and codes for the teachers to protect participants' confidentiality.

Data Analysis and Results

Data analysis

The inductive data analysis involved four phases (McMillan & Schumacher, 2001): identifying patterns; categorising and ordering data; refining patterns by determining the trustworthiness of the data; and synthesising themes. In each phase the authors first worked separately, analysing the data, generating codes and creating their own categories. To refine the themes, we then met to present our own coding before working as a group to elaborate on, collapse or distinguish between similar codes. To improve the trustworthiness of the analysis, data from different sources were triangulated whenever possible. For example, claims made in the secondary data about the extent to which the tools were used were sometimes supported and at other times contradicted by classroom and teacher records. These details are discussed in the results. The final phase involved synthesising the themes, which were arranged to align with the research questions.

Results

The results are reported according to the two research questions.

Extent to which Mathematics Teachers and HoDs Used the Tools for the Intended Purposes

The secondary data sources, the interview transcripts and written records presented during the interviews were analysed to provide insight into the extent to which the teachers and HoDs used the tools.

Teachers' usage of the tools

The secondary data obtained from three surveys suggested uneven use of the CT across participating schools. In the first survey (Survey 1), only one of the five schools that took part reported routine use of the CT, while in Survey 2, nine of the 14 participating schools stated that they used the CT routinely. In the last survey (Survey 4), seven of the 14 schools that took part reported routine use of the CT, while the other schools reported that they did not use it. During the primary data collection phase, we sought further insight into the extent to which the CT was being used. However, only five of the participating schools provided tangible evidence to support their claims of routine use. For the remaining nine schools, when we requested the completed CTs we were told that these were not available, which meant that self-reports of routine CT use could be verified for only five schools.

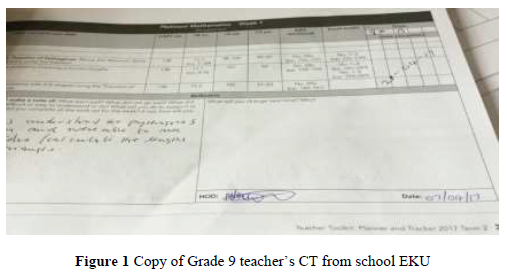

One of these nine schools (ISIZ) reported routine use in two surveys as well as in the interviews; however, an examination of the teachers' actual records found that the CT had been completed for only 2 weeks in term one and just 1 week in term two. In another school (EKU), the learners' books provided disconfirming evidence for the teacher's claims of routine use. His copy of the CT showed the relevant sections marked as complete on the specified dates (see Figure 1).

However, the learners' exercise books provided a different view. For example, the suggested topic in the Grade 9 CT for week 1 of term two was Pythagoras' theorem - but the learners' books focused on circles. When interviewed, the teacher acknowledged that he did not complete the topics on the dates advised by the CT, saying: "Sometimes I do not follow the tracker, when I realise that learners are blank I teach something else!"

Furthermore, Figure 1 points to duplicity on the part of the teacher and the HoD. The teacher's signature confirming that the week's work was completed was dated 21/04/2017. However, the HoD's signature for term two was dated 07/04/2017, yet the term only started on 20 April 2017. When interviewed, the HoD was unable to explain why he had signed before the start of the second term. In addition, although the teacher maintained that he gave the CT to the HoD every week, the HoD's signature appeared only once in the CT, dated 7 April 2017.

Overall, checking the teachers' records during the primary data collection phase revealed that while many schools reported routine use of the CT, in reality most of them did not use it for its intended purpose. Hence, although up to nine schools reported in the surveys that they used the CT routinely, only five of the 14 participating schools could provide evidence of this routine use from actual records.

HoDs' use of the tools to track and monitor curriculum coverage

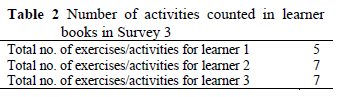

The evidence indicates that curriculum coverage was not on track. Tables 2 and 3 show the number of activities completed by learners in one school (for term two), suggesting that curriculum coverage had not been monitored effectively.

The learners' books show that such surprisingly low numbers of completed activities were common across schools. A year later (2017), evidence from the interviews indicated little improvement, as shown in Table 3.

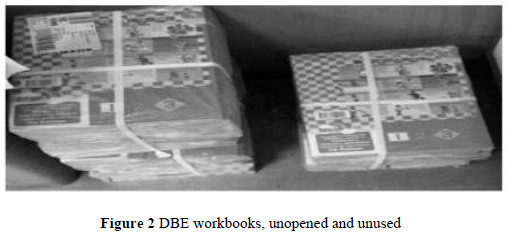

In one school that had reported routine curriculum coverage in the initial survey (Survey 1), the DBE workbooks were found during the interviews in the principal's office, still packed in their original sealed bundles (see Figure 2), suggesting that they were not being used.

During the interviews, teachers asserted that the HoDs were not using the tools to support teachers and monitor curriculum coverage in their monthly meetings; instead, these meetings were used to discuss general matters. The evidence at EKU (see Figure 1), where an HoD signed off the CT before the start of the second term, shows that some HoDs were in effect "ticking the appropriate boxes" to appear compliant, without checking whether the CT was being used for its intended purpose. In one school (UMZI), for example, the HoD could not even produce these tools.

Teachers' Perceptions of External Factors Negatively Impacting Teachers' Usage of the CT

Data used to respond to the second question were generated from secondary data sources and the interviews with teachers. After synthesising the data, four main themes emerged as the factors cited most often in the interviews.

Teachers felt that they did not receive sufficient training and support to use the CT effectively

During the interviews, teachers from seven schools claimed that they were not using the CT because they lacked the knowledge and skills to do so. It had not been properly introduced to them, and they had not been trained to use it. One teacher said that on his arrival he had received a textbook and, later on, the DBE Workbook. He did not ask about the CT because he did not know about it at the time.

One early career teacher felt that the HoD did not provide adequate support, explaining: "La ikwamazibonele", meaning "Here you fend for yourself with no support at all." The rapid turnover of staff owing to transfers or resignations also affected teachers' readiness to use the CT. New teachers among the seven said that nobody at the school was able to help them, because the teachers who had received training, or the HoDs, were no longer at the school. The lack of training and planning within the schools resulted in misconceptions about the purpose of the CT: teachers perceived it as a means of recording what had been done, instead of as a planning tool.

The Tracker programme was perceived as being too rigid

Teachers from all the schools raised the issue that they found the tracker rigid, in that it did not allow them to teach at a pace suitable for their learners. Some teachers felt that the daily breakdown of concepts was too rigid because it did not give them the flexibility to teach in the sequence and to the depth they thought necessary. For example, one teacher at EKU said:

Sometimes when I introduce a topic, I found that learners are blank, but I am forced to follow it through because, if I don't, I would be seen as someone not covering the curriculum. Anyway, I do change it, for example, when teaching Pythagoras' theorem, I might decide it is better to teach types of triangles first before introducing Pythagoras' theorem.

A teacher from TISA raised a similar point:

The tracker is rigid and does not allow for different approaches ... I prefer to start with solving for x, then factorisation before the introduction of a quadratic formula ... I teach the topic and then take a learner exercise book to check the end dates for recording in the tracker.

Teachers from nine of the 14 schools opted to use other tools, for example, the ATP and "1+9" lesson plans. Moreover, the teachers pointed out that the CT did not cater for mastery of the concepts. In the words of a teacher at EMO:

What I am supposed to cover in one day according to the CT takes me 3 days with my learners, so every time I am behind. Then I chose to ignore it since it makes me feel guilty all the time, as if I am not doing the work.

These teachers felt that the CT restricted their ability to respond to their learners' needs, which made their task of teaching harder. These comments illustrate that teachers have their own reasons and ideas about what to teach and when to teach it, and these should be taken into consideration in any curriculum innovation.

The CT reflection component was perceived as impractical and out of touch with teachers' realities

The interview responses suggest that across the schools, teachers were not using the reflection component of the CT as expected, namely by consistently writing about what worked and what did not work in each lesson. When checking the CTs, we found that the reflection sections were either not completed or did not focus on classroom issues.

Teachers from five schools specifically mentioned that the reflection components were too time-consuming. One teacher from EMO said: "Usually I do not write reflections kuba into engiyicabanga mina [it's what I think about]. I know it's important to write down my reflections but there is no time to do it."

Another teacher said that writing reflections on lesson plans was sufficient, and that producing the same written reflections in the CT was merely duplication. However, when probed further about the reflections forming part of the lesson plan, the teacher was not able to show that any such reflections had been made. One teacher at GLEV stated strongly that writing these reflections was a waste of time. He felt that, in expecting teachers to do so much paperwork, the DoE did not show an understanding of how difficult teaching and learning conditions have become: "I think the department has lost track of what is happening at grassroots level." This teacher felt that the reflection section in the CT was not designed well enough to allow teachers to reflect honestly on their real problems: "What you did and what you enjoy and what you will do better next time is nonsense. We never enjoy anything; we are struggling to get concepts across to learners." These statements indicate that teachers perceived the reflection sections as misaligned with their own reality.

Teachers were caught between the conflicting plans of the provincial DoE structures and those of the JiM team

One factor that impeded the use of the CT was the disjuncture between the plans made by the provincial DoE and those of the programme developers. Five teachers explicitly described such conflicts, for example their involvement in the departmental "1+9" programme. One teacher explained that as a facilitator of the "1+9" programme, he spent one day a week away from school planning the workshops and another day each week facilitating them, which meant that he was at school for only three days a week.

Another conflicting point raised by teachers and HoDs was the use of the ATP instead of the CT, which they said was encouraged by subject advisors. As noted by a teacher (ZWELI): "... even our subject advisers are biased towards the ATP, and at no stage was the tracker promoted in the workshops that I attended." Another teacher (GLEV) said: "I do not follow the CT 100% because our subject advisor said you don't have to follow it."

Some teachers identified a misalignment between the CT and the ATP in terms of the sequencing and content that should be covered. Hence, some teachers followed the ATP because it fitted in with the examination guidelines. One teacher (KWABA) noted: "Last year I had a bad experience when I was using the tracker. It said that in term two I had to cover trigonometry, but the ATP said that trigonometry should be covered in term three."

Discussion

One of the short-term outcomes of the intervention was that teachers would use the CT routinely. The findings show that the programme did not achieve this outcome in all the participating schools: only five of the 14 schools provided evidence of routine use of the CT, and teachers and HoDs were generally not using the tools for their intended purposes. In the other schools, teachers marked the CT as complete merely for compliance purposes, not to address curriculum coverage as intended. As noted by Jones and Eick (2007), teachers' adoption of an innovation depends on whether they think it adds to their workload or could make their teaching lives easier. Compliance with the CT was thus reduced to a box-ticking exercise, without the necessary attention to the quality of the learning taking place during the prescribed lessons. This compliance-directed activity may be a response to pressure from managers, who prioritise the completion of monitoring templates to demonstrate compliance to higher authorities (Metcalfe & Witten, 2019). It is important for teachers and managers to move beyond a compliance mindset (Metcalfe & Witten, 2019) toward one in which they take ownership of the curriculum (Carless, 1997) and see themselves as its co-constructors.

An important part of improving any intervention involves identifying the external factors which impede its success. These are the factors originating within or outside the context which presented a challenge to the effective use of the CT. We found that the teachers' (and HoDs') understanding of the purpose of the CT was limited to monitoring curriculum coverage, rather than seeing it as a tool to improve learning experiences in the classroom. Teachers also considered its timelines too rigid. Metcalfe and Witten (2019) comment that one of the reasons for curriculum coverage problems could be related to pedagogy. By jointly interrogating their practices, assumptions and challenges related to pedagogy, teachers and HoDs could address their problems collaboratively and use the CT more effectively. However, this can only work if teachers, HoDs and the professional development team share a common understanding of how the CT could be used more effectively.

A further challenge to the uptake of the programme was the teachers' perceptions of the reflection component, which they did not consider an authentic means of enabling reflective practice. This finding supports Carless's (1997) argument that if teachers do not perceive an innovation as practical and useful, it is less likely to be adopted and promoted. However, the finding that teachers paid little or no attention to written reflections is a concern. Many studies highlight the crucial role of reflection in the teacher learning process (Bansilal & Rosenberg, 2011; Brookfield, 1995). The written individual and joint reflections required by the CT form an essential component of the JiM programme, because they are designed as the point around which HoDs can start professional conversations with teachers. This key focus area needs to be strengthened, and other ways of encouraging professional conversations could perhaps be investigated.

A further external factor identified by teachers as hindering the uptake of the CT was the misalignment between the planning undertaken by the provincial DoE structures and that of the JiM team. Working together to improve education must be prioritised by all stakeholders, not only by the programme developers.

Concluding Remarks

As part of the reflective stance taken by any theory of change approach, one needs to question why the intervention did not achieve its long-term or even its short-term outcomes. In the JiM programme, the assumption was that teachers needed help in determining how they could cover the curriculum. It was expected that if teachers were provided with a tool to help them track their curriculum coverage, the coverage rate would improve, which would in turn improve learning outcomes in mathematics. However, this study demonstrates that these assumptions were somewhat simplistic. The teachers in this study found innovative ways of appearing to comply with the CT, without considering whether learning opportunities were improved. There was also evidence of HoDs being complicit in endorsing false reports of curriculum coverage.

An important finding was that teachers had their own reasons and ideas about what to teach and when to teach it. Professional development agencies need to recognise this, and take teachers' own ideas and plans into account when implementing new procedures. The low rate of curriculum coverage is a multifaceted systemic problem that can only be addressed as part of a transformation of the schooling system.

Interventions such as JiM need to be investigated systematically, so that deep-seated problems can be identified and addressed before further cycles are implemented. It is therefore of great concern that the KZN provincial DoE has scaled up the JiM intervention and is implementing it in four more districts, without evidence that the intervention has resulted in improved authentic curriculum coverage.

Acknowledgement

Funding for the project was provided by the National Research Foundation (NRF). Grant No. HSD180430325077.

Authors' Contributions

All authors contributed to the collection and analysis of the data. TM wrote the methodology section and provided data for the tables; AZN wrote the data analysis and results section and provided data for tables and figures; SN wrote the introduction section, provided data for tables and edited Table 1; SB wrote the literature review and concluding remarks and also provided data for tables and figures. All authors contributed to the discussion section and reviewed the final manuscript.

Notes

i. Published under a Creative Commons Attribution Licence.

ii. DATES: Received: 14 June 2019; Revised: 8 February 2021; Accepted: 5 April 2021; Published: 31 December 2021.

References

Adler J 2000. Social practice theory and mathematics teacher education: A conversation between theory and practice. Nordic Mathematics Education Journal, 8(3):31-53.

Bansilal S & Rosenberg T 2011. South African rural teachers' reflections on their problems of practice: Taking modest steps in professional development. Mathematics Education Research Journal, 23(2):107-127. https://doi.org/10.1007/s13394-011-0007-2

Brookfield SD 1995. Becoming a critically reflective teacher. San Francisco, CA: Jossey-Bass.

Carless DR 1997. Managing systemic curriculum change: A critical analysis of Hong Kong's Target-Oriented Curriculum initiative. International Review of Education, 43(4):349-366.

Christie P & Monyokolo M 2018. Reflections on curriculum and education system change. In P Christie & M Monyokolo (eds). Learning about sustainable change in education in South Africa: The Jika iMfundo campaign 2015-2017. Johannesburg, South Africa: Saide. Available at https://www.saide.org.za/documents/lsc_interior_20180511_screen-pdf.pdf. Accessed 31 December 2021.

Creswell JW 2009. Editorial: Mapping the field of mixed methods research. Journal of Mixed Methods Research, 3(2):95-108. https://doi.org/10.1177/1558689808330883

Department of Basic Education, Republic of South Africa 2014. Report on the Annual National Assessment of 2014: Grades 1 to 6 & 9. Pretoria: Author. Available at https://www.saqa.org.za/docs/rep_annual/2014/REPORT%20ON%20THE%20ANA%20OF%202014.pdf. Accessed 31 December 2021.

Department of Basic Education, Republic of South Africa 2016. National Senior Certificate 2016 Diagnostic Report. Pretoria: Author. Available at https://www.ecexams.co.za/2016_Nov_Exam_Results/NSC%20DIAGNOSTIC%20REPORT%202016%20WEB.pdf. Accessed 31 December 2021.

Department of Basic Education, Republic of South Africa 2020. National Senior Certificate 2019 Diagnostic Report: Part 1. Pretoria: Author. Available at https://www.education.gov.za/Portals/0/Documents/Reports/DBE%20Diagnostic%20Report%202019%20-%20Book%201.pdf?ver=2020-02-06-140204-000. Accessed 31 December 2021.

Jones MT & Eick CJ 2007. Providing bottom-up support to middle school science teachers' reform efforts in using inquiry-based kits. Journal of Science Teacher Education, 18(6):913-934. https://doi.org/10.1007/s10972-007-9069-0

Kelly P 2006. What is teacher learning? A socio-cultural perspective. Oxford Review of Education, 32(4):505-519. https://doi.org/10.1080/03054980600884227

Makhubele Y & Luneta K 2014. Factors attributed to poor performance in Grade 9 mathematics learners: Secondary analysis of Annual National Assessments (ANA). In ISTE International Conference on Mathematics, Science and Technology Education. Available at https://uir.unisa.ac.za/bitstream/handle/10500/22925/Yeyisani%20Makhubele%2c%20Kakoma%20Luneta.pdf?sequence=1&isAllowed=y. Accessed 31 December 2021.

McMillan JH & Schumacher S 2001. Research in education: A conceptual introduction (5th ed). New York, NY: Addison Wesley Longman.

Metcalfe M 2018. Jika iMfundo 2015-2017: Why, what and key learnings. In P Christie & M Monyokolo (eds). Learning about sustainable change in education in South Africa: The Jika iMfundo campaign 2015-2017. Johannesburg, South Africa: Saide. Available at https://www.saide.org.za/documents/lsc_interior_20180511_screen-pdf.pdf. Accessed 31 December 2021.

Metcalfe M & Witten A 2019. Taking change to scale: Lessons from the Jika iMfundo campaign for overcoming inequalities in schools. In N Spaull & J Jansen (eds). South African schooling: The enigma of inequality. Cham, Switzerland: Springer. https://doi.org/10.1007/978-3-030-18811-5_18

Mkhwanazi T, Ndlovu Z, Ngema S & Bansilal S 2018. Exploring mathematics teachers' usage of the curriculum planner and tracker in secondary schools in King Cetshwayo and Pinetown districts. In P Christie & M Monyokolo (eds). Learning about sustainable change in education in South Africa: The Jika iMfundo campaign 2015-2017. Johannesburg, South Africa: Saide. Available at https://www.saide.org.za/documents/lsc_interior_20180511_screen-pdf.pdf. Accessed 31 December 2021.

Muller J & Hoadley U 2019. Curriculum reform and learner performance: An obstinate paradox in the quest for equality. In N Spaull & J Jansen (eds). South African schooling: The enigma of inequality. Cham, Switzerland: Springer. https://doi.org/10.1007/978-3-030-18811-5_6

Reddy V, Visser M, Winnaar L, Arends F, Juan A, Prinsloo C & Isdale K 2016. TIMSS 2015: Highlights of mathematics and science achievement of Grade 9 South African learners. Pretoria, South Africa: Human Sciences Research Council. Available at https://repository.hsrc.ac.za/bitstream/handle/20.500.11910/10673/9591.pdf?sequence=1&isAllowed=y. Accessed 31 December 2021.

Reeves C & Muller J 2005. Picking up the pace: Variation in the structure and organization of teaching school mathematics. Journal of Education, 37:103-130. Available at https://journals.co.za/doi/pdf/10.10520/AJA0259479X_157. Accessed 31 December 2021.

Stein D & Valters C 2012. Understanding theory of change in international development. London, England: Justice and Security Research Programme. Available at https://www.researchgate.net/publication/259999367_Understanding_Theory_of_Change_in_International_Development. Accessed 31 December 2021.

Stols G 2013. An investigation into the opportunity to learn that is available to Grade 12 mathematics learners. South African Journal of Education, 33(1):Art. #563, 18 pages. https://doi.org/10.15700/saje.v33n1a563

Taylor N 2011. The national school effectiveness study. Summary for the synthesis report. Johannesburg, South Africa: JET Education Services. Available at https://www.jet.org.za/resources/summary-for-synthesis-report-ed.pdf. Accessed 31 December 2021.

Vogel I 2012. Review of the use of 'theory of change' in international development. London, England: Department for International Development (DFID). Available at https://www.theoryofchange.org/pdf/DFID_ToC_Review_VogelV7.pdf. Accessed 31 December 2021.