South African Journal of Education

On-line version ISSN 2076-3433

Print version ISSN 0256-0100

S. Afr. j. educ. vol.39 n.3 Pretoria Aug. 2019

http://dx.doi.org/10.15700/saje.v39n3a1656

ARTICLES

Learning with mobile devices: A comparison of four mobile learning pilots in Africa

Shafika Isaacs; Nicky Roberts; Garth Spencer-Smith

Centre for Education Practice Research, Faculty of Education, University of Johannesburg, Soweto, South Africa. shafika@shafika.co.za

ABSTRACT

This paper compares the mixed-methods evaluation findings of the ukuFUNda Virtual School (UVS) with evaluations of three other mobile learning (m-learning) programmes in Africa: the information and communication technologies for rural education (ICT4RED) initiative; the Kenya Primary Math and Reading (PRIMR) study; and the Nokia Mobile Mathematics (MoMath) evaluation. The comparison applies a conceptual model based on m-learning affordances and configurations (Strigel & Pouezevara, 2012), as well as on uptake, use, and responses by programme beneficiaries, and on stakeholder learning. The findings show varied successes across all four programmes and highlight important lessons for stakeholders, with particular reference to the scaling up of m-learning interventions in an African context.

Keywords: information and communication technologies (ICT); learning configurations; m-learning; m-learning affordances; mobile phones; mobile platforms; stakeholder learning; tablets

Introduction

Even though growth seems to be slowing down, sub-Saharan Africa remains one of the fastest growing regions in mobile subscription access in the world, with a mobile penetration rate [i] of 75% in 2018 (GSMA, 2018). In 2017, third generation (3G) connectivity via mobile phone was almost universal in South Africa (GSMA, 2017), while in Kenya, mobile penetration based on SIM connections stood at 91% (Jumia, 2019), demonstrating that most people have access to a mobile service in these two countries.

With decreasing mobile costs and increasing mobile access, many have explored whether mobile technologies, albeit designed originally as lifestyle technologies (Traxler, 2010), could support learning in resource-challenged schooling contexts in Africa. These include a mobile literacy game designed to support Grade 1 children in Zambia (Jere-Folotiya, Chansa-Kabali, Munachaka, Sampa, Yalukanda, Westerholm, Richardson, Serpell & Lyytinen, 2014), e-readers to support children to read in Ghana (Worldreader, 2012), or to help the youth learn mathematics via a mobile math learning platform (Roberts, Spencer-Smith, Vänskä & Eskelinen, 2015). These findings resonate with various global landscape studies that highlight key lessons for stakeholders on designing effective m-learning programmes in resource-challenged contexts (Isaacs, 2012a, 2012b; Raftree, 2013; Spencer-Smith & Roberts, 2014; Wagner, 2014; West, 2012).

Drawing on these experiences, the South African Department of Basic Education (DBE), the United Nations Children's Fund (UNICEF) South Africa (SA), and the Reach Trust designed an m-learning platform called the UVS in 2012. This platform was accessible on 8,000 different types of handsets, from low-end feature phones to high-end smartphones and tablets. The UVS used an existing social networking platform (Mxit) to offer a range of educational and psychosocial support services to secondary school learners, teachers, and parents across SA. The platform was hosted on a server using a Structured Query Language (SQL) database. It aggregated third-party applications (or apps) accessible from a common menu. In addition, several bespoke applications were developed such as a school self-evaluation tool, a school nutrition app, and a communication tool for teachers, learners, and parents. It also included a dashboard to provide uptake, usage, and user demographic data.

While a number of m-learning programmes had emerged in Africa by 2013, few included monitoring and evaluation (M&E) to build evidence on their experiences. The value of monitoring and evaluation in enabling stakeholder learning and continuous improvement in ICT for development practice and policy was highlighted by Hollow (2010, 2015). This view is supported by the South African government framework for evaluating its policies and programmes in order to provide evidence of their effectiveness, efficiency, and value for money (Department of Performance Monitoring and Evaluation, The Presidency, Republic of South Africa, 2011).

Yet, because M&E research mainly takes place outside of universities, it is classified as grey research as opposed to real research (Henning, 2017). Henning (2017:para. 2) calls for a shift in approach to "real" research by recognising the value of "evaluation research" in areas such as design logic. This article is an attempt to make grey research accessible to academia by analysing policy-relevant evaluation research findings to inform a scientific knowledge conversation. It highlights stakeholder learning by government and its partners, by comparing the UVS evaluation findings (Roberts, Spencer-Smith & Butcher, 2016) with three other m-learning programme evaluations in Africa: the evaluation of an ICT-integration model involving education communities in 26 schools in Cofimvaba, a rural town in the Eastern Cape, South Africa (Botha & Herselman, 2015; Williams, 2014), the PRIMR study (Piper, Zuilkowski, Kwayumba & Strigel, 2016), which investigated whether mobile devices can be effective in supporting reading in Kenyan schools, and the Nokia MoMath evaluation (Roberts et al., 2015) of how learners in South Africa could improve their Mathematics performance via an m-learning platform.

Literature Review

Mobile learning (m-learning) may be defined as "learning across multiple contexts, through social and content interactions, using personal electronic devices" (Crompton, 2013:4). Implicit in this definition is the dominant, yet contentious, view that the technological capabilities of mobile phones can be harnessed to support existing and new forms of learning in, and across contexts, devoid of time and space (Wright & Parchoma, 2011). For more than a decade, this predominantly positive view has been widely advocated in research (Crompton, Burke & Gregory, 2017; Hsu & Ching, 2015; Traxler, 2016, 2018; Zelezny-Green, 2014, 2018a, 2018b).

In their systematic review of mobile learning articles in PK-12 education that were published in 10 top educational technology journals, Crompton et al. (2017) highlight that 62 per cent of the 113 papers reviewed reported positive learning outcomes associated with mobile learning. They also found that the research for only 1 per cent of the papers reviewed was conducted in Africa. Beyond their review, however, the literature reveals rich m-learning experiences in Africa, documented by local researchers who have contributed to a growing global m-learning knowledge base. Jere-Folotiya et al. (2014) and Worldreader (2012) show how children and the youth can learn to read and write through mobile phone initiatives such as Kontax, Yoza Cell Phone Stories and FunDza Literacy Trust (Vosloo, Walton & Deumert, 2009; West & Chew, 2014). Other studies show how the youth can become motivated to learn Mathematics through the mobile scaffolding environment of the Dr Math tutoring programme (Botha & Butgereit, 2012); how a mobile learning curriculum framework can be designed to support learning (Botha, Batchelor, Traxler, De Waard & Herselman, 2012); and how the M4Girls initiative promoted mathematics education among secondary school girls in South Africa (Wan, 2010). Together, these studies highlight important lessons for future m-learning designed at scale, even though they were not all based on independent evaluations of these programmes. These lessons include, among others, the importance of involving "users" (Wan, 2010) as well as curriculum decision-makers (Botha et al., 2012) in the m-learning design, and that scale-up be part of the imagination of designers when designing pilot programmes (Botha & Butgereit, 2012).

In an attempt to encourage evidence-based stakeholder learning through evaluation research, we found three comparable evaluation studies on the African m-learning experience, incorporating mobile phones, tablets, and e-readers.

The first was the ICT4RED study, a developmental evaluation of a holistic ICT-integration model involving education communities in 26 schools in Cofimvaba, a rural town in the Eastern Cape (Botha & Herselman, 2015; Williams, 2014). The ICT4RED initiative was a comprehensive tablet integration programme designed to test a range of infrastructural, pedagogical, and operational models (Ford, Botha & Herselman, 2014).

Secondly, the PRIMR study (Piper et al., 2016) was a randomised control trial, which investigated whether e-readers for learners and tablets for teachers and instructional supervisors improved reading in schools in Kisumu County, Kenya. The PRIMR programme was a package of interventions that evolved over time - from an initial base reading and maths project, to the rollout of tablets to teachers and instructional supervisors, and e-readers to Grade 2 learners in Kisumu (Piper et al., 2016).

The third was the Nokia MoMath evaluation (Roberts et al., 2015), a quasi-experimental design which explored whether and how learners in South Africa could improve their Mathematics performance using an m-learning platform to support Mathematics learning. Like the UVS, the Nokia MoMath (Roberts et al., 2015) provided a platform, accessible via mobile phones, which offered a free maths service to secondary school learners across South Africa.

The findings from the three evaluations are compared with those of the UVS within a mobile learning affordances and learning configurations conceptual framework with a focus on stakeholder learning.

Conceptual Framework

The UVS evaluation applied a conceptual framework that expanded Strigel and Pouezevara's (2012) model of m-learning affordances and learning configurations, drawing on the work of Roberts and Spencer-Smith (2019).

M-learning affordances refer to six potential ways in which mobile technologies could enhance learning: accessibility (access to learning opportunities, reference materials, experts/mentors, other learners); immediacy (on-demand learning, real-time communication and data sharing, situated learning); individualisation (bite-size learning on familiar devices; promotion of active learning and a more personalised experience); intelligence (advanced features making learning richer through context-aware features, data capture, multimedia); big data (large and complex data sets collected from user information), and context management (delivering content appropriate to the learner's goals, situation, and resources) (Roberts & Spencer-Smith, 2019).

Strigel and Pouezevara (2012) identified variations in m-learning configurations: a learning spectrum which ranges from formal (in class, in school) to informal (out-of-school but formal learning, and/or informal learning for pleasure or entertainment); a kinetic spectrum which ranges from the learners being stationary to being mobile; and a collaborative spectrum from individual to collaborative. Roberts et al. (2015) include three additional spectra to the m-learning configuration framework: an "access" spectrum (pertaining to the availability of devices), "affordability" (pertaining to user costs, including subscription and data costs) and a "pedagogy" spectrum (the articulated approach to learning). Each spectrum for the m-learning configurations is presented in Figure 1.

To analyse uptake and use of the UVS, an uptake and use model was developed from a waterbirds metaphor proposed by Dr Konstantin Mitgutsch [ii]. The terms "skimmers," "duckers" and "divers" describe users in order of increasing frequency of use, as shown in Table 1.
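As an illustration only, the waterbirds categories can be read as a banding of users by message count. The sketch below assumes two cut-off values (`ducker_min` and `diver_min`); these are hypothetical placeholders, not the thresholds defined in Table 1, which are not reproduced here.

```python
from collections import Counter

def classify_user(message_count: int, ducker_min: int = 10, diver_min: int = 100) -> str:
    """Band a user by message count.

    ducker_min and diver_min are hypothetical cut-offs chosen for
    illustration; they are not the thresholds used in the evaluation.
    """
    if message_count >= diver_min:
        return "diver"
    if message_count >= ducker_min:
        return "ducker"
    return "skimmer"

# Hypothetical message counts for six users
counts = [2, 3, 11, 45, 150, 10_500]
bands = Counter(classify_user(c) for c in counts)
print(dict(bands))
```

Keeping the cut-offs as parameters means the same function could be rerun with whatever thresholds an evaluation team actually adopts.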

Another key concept used in this paper is that of "stakeholder learning." In a programme evaluation context, stakeholders, a term borrowed from management consulting, refers to people who have a vested interest in the evaluation findings, who are affected by a programme, and who make decisions about the programme (Patton, 1997). In his model of utilization-focused evaluations, Patton (1997, 2015) highlights the tendency of evaluations to mainly demonstrate positive results and makes the case for multi-stakeholder engagement that fosters stakeholder learning for future programme improvement. We draw a distinction between stakeholders as programme decision-makers and beneficiaries who are recipients meant to benefit from a programme intervention. Stakeholders in all four programmes included representatives of government which we refer to as intermediaries, and development and donor agencies, which are called partners. We recognise the importance, however, of beneficiaries as active agents (Patton, 2015), which is not discussed in this paper. The purpose of all four programme evaluations was to foster stakeholder learning and improve policy and practice as part of attempts at scaling up m-learning.

Method

The UVS evaluation used a mixed-methods approach by applying both quantitative and qualitative research techniques. It drew on Creswell and Plano Clark's (2011) approach to mixed methods and their value in enabling different data sources to be triangulated. The quantitative data included a beneficiary survey and analysis of uptake and usage data, while qualitative data was sourced from interviews and focus groups with stakeholders and beneficiaries.

Survey data was primarily obtained from online questionnaires administered via SurveyMonkey targeting stakeholders, and Mxit‑located questionnaires targeting project beneficiaries. As electronic surveys, they have a number of advantages over paper surveys (Boyer, Olson, Calantone & Jackson, 2002) and do not result in a reduction of data quality (Nicholls, Baker & Martin, 1997).

To ensure that the internal reliability of questionnaire responses was high, alternate forms of the same question were provided in the same questionnaire. Any responses that contained a contradiction between the two versions of the question were excluded from analysis, as the contradiction indicated that it was highly likely that the respondent was either making up responses randomly and/or was not paying close attention to what was being asked.
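A minimal sketch of such a consistency filter is given below. It assumes responses on a 1 to 5 scale, hypothetical question identifiers (`q3` and `q9` as two wordings of the same question), and a tolerance of one scale point; the evaluation does not state how a contradiction was operationalised, so all of these are assumptions.

```python
from typing import Dict, List, Tuple

def is_consistent(response: Dict[str, int],
                  pairs: List[Tuple[str, str]],
                  tolerance: int = 1) -> bool:
    """True when every alternate-form pair agrees within `tolerance` points."""
    return all(abs(response[a] - response[b]) <= tolerance for a, b in pairs)

def filter_consistent(responses: List[Dict[str, int]],
                      pairs: List[Tuple[str, str]],
                      tolerance: int = 1) -> List[Dict[str, int]]:
    """Keep only responses with no contradiction between alternate forms."""
    return [r for r in responses if is_consistent(r, pairs, tolerance)]

# Hypothetical responses: q3 and q9 are alternate forms of one question
responses = [
    {"id": 1, "q3": 4, "q9": 5},  # agrees within tolerance: kept
    {"id": 2, "q3": 1, "q9": 5},  # contradictory: excluded
    {"id": 3, "q3": 2, "q9": 2},  # identical: kept
]
kept = filter_consistent(responses, pairs=[("q3", "q9")])
kept_ids = [r["id"] for r in kept]
print(kept_ids)
```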

Feedback received from these surveys represented the views of those who were highly motivated to respond and who visited the UVS platform frequently.

To ensure validity, the quantitative data was supplemented by qualitative data collected through visits to one case study teachers' centre and one case study high school. These cases were selected through purposive sampling, in which the choice of the sample is based on evaluator judgement and the purpose of the study (Palys, 2008). At the case study sites, focus groups were held with officials, teachers, learners who used the service, and learners who did not use the service.

Finally, dashboard and back-end data provided by the Reach Trust were described and analysed to quantify the uptake and use of the UVS applications.

The data collection process for each of the stakeholder and beneficiary groups is outlined in Figure 2.

The above methodology compares with the ICT4RED, which applied a more complex, multi-faceted design science research methodology based on extensive multi-stakeholder engagement, utilising a range of quantitative and qualitative data collection methods. These included textual analyses of Twitter feeds and WhatsApp group discussions, ethnographic classroom observations, teacher questionnaires, and focus group discussions with teachers and parents.

The PRIMR evaluation study was quasi-experimental, using a randomised control trial design involving three treatment groups and one control group. The PRIMR evaluation included a focus on learner literacy outcomes for primary school children, assessing 1,580 learners at baseline and 1,560 at endline in 60 treatment and 20 control schools. They used an Early Grade Reading Assessment (EGRA) to ascertain reading outcomes, and early learning assessment software to enable data collection efficiency.

The MoMath evaluation analysed data on the voluntary uptake and use of the service by nearly 4,000 learners in 30 township and rural schools. Learner outcomes were considered in relation to changes in Mathematics attainment over one academic year. A quasi-experimental design allowed comparison between the changes in attainment for learners who used the MoMath service and those for learners (in the same schools) who did not use it.
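The logic of comparing changes in attainment between users and non-users can be sketched as a simple difference in mean gains. The marks below are invented for illustration, and this naive comparison is not the analysis reported by Roberts et al. (2015); in particular, it does nothing to address self-selection into voluntary use.

```python
from statistics import mean
from typing import List, Tuple

def mean_change(scores: List[Tuple[float, float]]) -> float:
    """Average gain across (start_of_year, end_of_year) mark pairs."""
    return mean(after - before for before, after in scores)

# Hypothetical Mathematics marks (percent) for learners in the same schools
users = [(40, 48), (55, 60), (35, 45)]
non_users = [(42, 44), (50, 51), (38, 41)]

# Difference between the two groups' average gains over the year
gain_difference = mean_change(users) - mean_change(non_users)
print(f"Users gained {gain_difference:.1f} points more on average")
```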

Each of these evaluations had specific evaluation purposes aligned to specific research questions; the answers to which do not all align neatly with the conceptual framework chosen for this article. However, the m-learning affordances and configurations were gleaned from the findings and analysis in each of the respective evaluation reports.

Results

Global and national policy commitments framed the rationale for all four programmes. Here, the prioritization of education and the enabling role of digital technologies in achieving the Millennium Development Goals (MDGs), the Education for All (EFA) goals and, later, the Sustainable Development Goals (SDGs) (United Nations, 2016), were paramount.

South African national policy commitments to education access, delivery, and innovation were articulated via a complex web of national plans, frameworks, policies, laws, and regulations (Isaacs, 2015) including the National Development Plan (NDP): Vision 2030 (National Planning Commission, The Presidency, Republic of South Africa, 2011), the e-Education White Paper (Department of Education, 2004), the DBE's Action Plan to 2019 (DBE, Republic of South Africa, 2015), and Operation Phakisa (Department of Planning, Monitoring & Evaluation, Republic of South Africa, n.d.). Similarly, Kenya's policy on Free Primary Education - aligned to the MDGs and later, the SDGs - enabled access to universal primary education even though challenges with education quality still existed (Mulinya & Orodho, 2015).

Similarities and Differences in Service Design

The four m-learning programmes named above were each situated within a resource-challenged K‑12 formal school setting, targeting disadvantaged education communities. All were pilots intended to ultimately inform a larger-scale m‑learning intervention. While the UVS and ICT4RED were more generic in their support for secondary school communities, ICT4RED also strongly supported teacher development, while MoMath focused on mathematics learning for secondary school children, and PRIMR focused on literacy learning for primary school children, scaffolding literacy instruction support for teachers and instructional supervisors. The PRIMR built on an initial base intervention involving teacher and head teacher training in literacy and numeracy for a year before their ICT trial began. Similarly, ICT4RED initially piloted the programme in a few schools before it was extended to 26 schools.

The service design of the pilots varied. Supplying content-rich, learning-platform-enabled tablets to teachers, and tablets or e-readers to learners, was adopted by ICT4RED and PRIMR. For example, the PRIMR teacher tablet included multi-media lesson plans, embedded audio files, supplementary pedagogical aids such as letter flashcards, applications to support letter-sound practice in English and Kiswahili, and a classroom feedback (Piper et al., 2016). ICT4RED integrated a design science research methodology in their programme with a strong focus on developing an appropriate, gamified, teacher professional development course supported by content-rich tablets provided to teachers, and exploring an iterative pedagogical model (Botha & Herselman, 2013, 2015). This compared with a learning-platform-enabled bring-your-own-device (BYOD) approach adopted by the UVS and MoMath (although the MoMath also provided a bank of devices to certain pilot schools to accommodate learners who did not have access to mobile phones). Each of the four programmes evolved over a three-year period or more and adopted different research methodologies appropriate to their respective evaluation designs.

M-Learning Affordances

For all four programmes the accessibility affordance ranked uppermost in view of their provision of digital resources to education communities. The UVS and MoMath offered access to a free digital platform with resources and tools that learners could access on their phones at any time. The UVS stakeholders were unanimous about the value of making learning resources available to disadvantaged learners, teachers, and parents. The accessibility affordance of the PRIMR lay in their provision of content-rich devices in English and Kiswahili for teachers, instructional supervisors, and children. In the case of the ICT4RED, the accessibility affordance included access to a comprehensive suite of resources including school infrastructure, relevant content for learners and teachers, an iterative professional development model for teachers, principals, district officials, skills development programmes for learners, and community-building opportunities.

The m-learning affordances of big data and immediacy were considered a lesser priority by most UVS partners. For both UVS and MoMath, however, the immediacy and big data affordance allowed for access to back-end data that enabled detailed usage analysis and the development of a usage framework based on the Mitgutsch waterbirds metaphor.

According to the back-end measure of messages to and from the UVS during the 16-month evaluation period (September 2014 to December 2015), 1,048,576 users interacted with the service in some way, sending and/or receiving at least one message on the service [iii]. Of these, 179,074 individuals (17.1% of the total) registered for the service during the evaluation period. Those who registered comprised 150,321 learners, 7,290 teachers, and 21,463 parents (from 8,809 different schools). In relation to uptake, therefore, while about a million people were reached, uptake was limited: only 17% of those reached went on to register.
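The uptake arithmetic can be checked directly from the figures reported above. The short sketch below uses only those figures; the variable names are ours.

```python
# Figures as reported for the 16-month evaluation period
reached = 1_048_576
registered_by_group = {"learners": 150_321, "teachers": 7_290, "parents": 21_463}

registered = sum(registered_by_group.values())
uptake_rate = registered / reached

print(registered)            # 179074
print(f"{uptake_rate:.1%}")  # 17.1%

# Share of each group among those who registered
for group, n in registered_by_group.items():
    print(f"{group}: {n / registered:.1%}")
```

The group totals sum to the reported 179,074 registrations, and learners make up the large majority of registered users.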

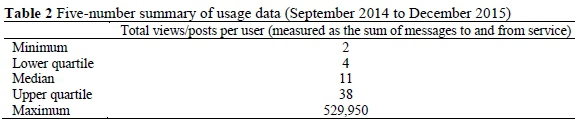

Since simply landing on the UVS home page and doing nothing more would result in a message count of one, the 241,085 users with such a message count were excluded from the UVS uptake and usage analysis. The usage of the remaining 807,491 users produced the five-number summary shown in Table 2.

Considering the median usage of only 11 total messages over sixteen months, it was clear that the UVS was not used as much as expected and hoped. However, a small number of users (1,301 users, representing 0.16% of the 807,491 users analysed) showed exceptionally high usage and sent/received more than 10,000 messages over the evaluation period.
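A minimal sketch of this kind of usage analysis follows: users with a message count of one are dropped, and a five-number summary is computed for the rest. The message counts are hypothetical and the quartile method (`inclusive`) is an assumption; the evaluation does not state which method produced Table 2.

```python
import statistics
from typing import Dict, List

def five_number_summary(counts: List[int]) -> Dict[str, float]:
    """Minimum, lower quartile, median, upper quartile and maximum."""
    ordered = sorted(counts)
    q1, median, q3 = statistics.quantiles(ordered, n=4, method="inclusive")
    return {"min": ordered[0], "q1": q1, "median": median,
            "q3": q3, "max": ordered[-1]}

# Hypothetical per-user message counts from a back-end log
message_counts = [1, 1, 2, 5, 11, 11, 40, 300, 12_000]

# Exclude users who only landed on the home page (message count of one)
active = [c for c in message_counts if c > 1]
summary = five_number_summary(active)

# Share of analysed users above the 10,000-message mark
heavy_share = sum(c > 10_000 for c in active) / len(active)
print(summary)
print(f"{heavy_share:.2%}")
```

A median far below the maximum, as in this toy data, is the pattern the evaluation describes: most users interact only lightly while a very small group accounts for exceptionally heavy use.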

For MoMath, 62.4% of registered learners at the 30 pilot schools completed at least 10 questions over one school year. More than 150 questions were completed by 20.7% of the learners in that same school year, while nine learners, from four different schools, completed more than 10,000 questions each (Roberts et al., 2015).

The evaluation design of ICT4RED and PRIMR used, to some extent, the big data and immediacy affordances offered by the devices and associated software that allowed for analysis of patterns of usage and outcomes. ICT4RED analysed Twitter feeds and WhatsApp group discussions as well as photographs and video-recordings taken by teachers as part of a host of data collection methods and sources, whereas PRIMR used early learning assessment software called Tangerine, to support timeous, efficient data collection.

The m-learning affordances of individualisation, intelligence, and context management were ranked as the lowest priority by UVS project partners. It was generally acknowledged that the UVS did not offer a personalised experience, but that bite-sized chunks of information were available. Context management was, according to the stakeholders, "not a priority" and "not a major consideration in the design."

For PRIMR, student e-readers did not produce more learning gains than the cheaper ICT options provided. The researchers concluded that a more structured programme with specific activities and support, one that harnessed the individualisation affordance, would have yielded better results. Since ICT4RED was designed as a three-year iterative design-based intervention, its teacher development model was continuously improved over time. This allowed the programme to integrate and make optimal use of the individualisation and intelligence affordances of the platforms available on the teacher tablets, together with the mentorship and coaching programme that formed part of the teacher development model.

MoMath utilised the individualisation affordance by allowing learners to improve their mathematics understanding by using a device that was familiar to all teenagers, and to choose topics or sub-topics to study (through the reading of background theory and worked examples, or the completion of actual examples).

M-Learning Configurations

In terms of the learning spectrum, the UVS and MoMath were informal (used out-of-school) but supported formal learning, including academic support and, in the case of the UVS, psychosocial support. By comparison, PRIMR focused more on formal learning in classrooms, whereas ICT4RED encouraged teacher professional learning both formally, in workshops, and informally, with teachers learning on their own beyond the classroom and workshops.

The UVS and MoMath services favoured the mobile end of the kinetic spectrum as learners/parents/teachers could be moving and/or travelling while using the service. Although PRIMR could be mobile, most of their devices were used in formal classroom settings, whereas the ICT4RED teacher development model was premised on 24/7 access to the tablet for teachers to learn at and beyond school.

In terms of the collaborative spectrum, the UVS and MoMath were nearer to the individual end of the spectrum as individual learners/teachers/parents typically worked independently on the service. However, the UVS included some messaging and calendar functionality which could allow for collaboration, although this was not fully utilised at the time of the evaluation. MoMath allowed for a degree of collaboration since users could form groups, compete against, and message each other.

The ICT4RED and PRIMR services were more on the collaborative end of the spectrum. Teachers, instructional tutors, and district officials (in the case of ICT4RED) were involved in face-to-face training workshops and online collaborative spaces created with their mentorship and support programmes. However, ICT4RED also enabled individualised private and professional learning by teachers.

Considering the access spectrum of the mobile learning configurations framework, the UVS and MoMath services adopted a BYOD approach, although MoMath also made available a bank of mobile devices at school to cater for learners who did not have access to devices. This meant that the UVS relied on the individual user's personal access to a mobile device and data. Initiated in 2013, the UVS harnessed Mxit, a social networking platform that was accessible via 8,000 different handsets and via second-generation (2G) and 3G connectivity. At the time it was among the few platforms that accommodated low-end feature phones accessible to the most disadvantaged communities in SA, and it was a very popular platform with 10 million users (Walton, Haßreiter, Marsden & Allen, 2012). The majority of its users were located in South Africa, India, Nigeria, and Indonesia. This highlights the education policy goal related to promoting equity that informed the UVS design.

PRIMR and ICT4RED relied on the institutionalised supply of digital devices and resources to education communities at schools. Unlike the other three programmes, however, ICT4RED invested more in school infrastructure that could support the optimal educational use of tablets in classroom practice.

Considering the affordability spectrum, no licensing or subscription costs were required for using the UVS service. Users were expected to pay for their own data, but the design was deliberately restricted to texts and some images to keep the UVS data-light. The data relating to the UVS was zero-rated by one mobile operator in SA, Cell C.

The situation with MoMath was similar to that of UVS. Not only did it involve no service subscription costs for users, but the service was zero-rated by all the South African mobile service providers (meaning no data charges).

ICT4RED utilised low-cost affordable access strategies such as creating an internet-like experience through access to content on servers in view of prohibitive internet costs. For ICT4RED, as with PRIMR, there were no direct costs to the users because both programmes were pilot projects of which the costs were covered by donor funds. While PRIMR provided an analysis of cost effectiveness and provided per unit cost for tablets and e-readers compared to base costs, providing an affordability analysis was not within the scope of their evaluation.

Considering the pedagogy spectrum, the UVS did not follow a common approach to pedagogy or theory of learning, as each application on the UVS adopted its own approach. Some stakeholders regarded the UVS as disrupting the current approach to pedagogy within the DBE, because it was attempting different pedagogical approaches, although difficulties in changing DBE practice were also acknowledged. A salient attribute of the UVS pedagogic design was its additional provision of psychosocial support resources and related services such as career guidance and access to counselling support services in addition to providing learning and administrative support resources. Both ICT4RED and PRIMR had detailed, coherent, and structured pedagogy that involved both formalised classroom-based instruction and training, as well as informal learning.

In their 2015 analysis, Roberts et al. (2015) suggest that there was no mention of any specific pedagogy adopted by the creators of MoMath at the time. This analysis was provided in subsequent iterations of the MoMath project (Roberts & Spencer-Smith, 2019). Figure 3 provides the m-learning configurations of each of the four m-learning projects.

Stakeholder Learning

Each of the four programmes engaged in stakeholder learning processes during and following their respective programme evaluations. Following the publication of the evaluation report, the DBE and UNICEF hosted a workshop with eight service providers to learn more from existing interventions (DBE & UNICEF, 2017b), and subsequently hosted an international expert consultation to share the lessons from the UVS (DBE & UNICEF, 2017a) and work towards an appropriate scalable mobile learning model for South Africa. The ICT4RED engaged a wide range of stakeholders extensively on the findings from its evaluation where they shared their 12-component model. Similarly, presentations were delivered to the senior officials at the DBE on MoMath, while PRIMR engaged with the Kenyan Ministry of Education and donor partners during and following the evaluation.

Discussion

While each of the four m-learning programmes and their respective evaluations had unique attributes, frameworks and methodologies, their m-learning affordances and configurations and stakeholder lessons for scale-up, were the main focusses of this paper. Each programme offered specific models of accessibility which all highlighted the centrality of equity and redress policy goals that informed their design. Each targeted rural and/or peri-urban education communities and attempted affordable access strategies ranging from low-cost platforms accessible on feature phones (UVS) to open-source software (PRIMR and ICT4RED) and open education resources (ICT4RED).

The UVS and MoMath experiences showed that while a BYOD approach would reduce costs for an intervention, not all targeted beneficiaries were able to access the service as a result. Furthermore, access to a suitable device was not the same as affordability, and the need to pay for data (in the UVS model) prohibited extensive use by many from poorer communities. This highlights a continuing challenge to develop universally affordable and accessible m-learning models. As discussed at the knowledge-sharing workshop and international expert panel hosted by the DBE and UNICEF SA, some of the mechanisms that could provide impetus towards universal access are preferential pricing and payment mechanisms to encourage personal ownership of mobile devices targeted for educational use, zero-rating of digital education content that has been vetted by Ministries of Education, and open licensing and access to open education resources (OER).

The UVS experience also showed that simply providing access to digital materials and services does not necessarily lead to their educational use. Driving widespread adoption and active use of mobile learning platforms requires ongoing and concerted activities that are focused on raising awareness of the education resources available and encouraging active use of these resources.

All four programmes provided a fertile environment for stakeholders to learn how to engage with learning technologies that people already have, or how to supply content-rich learning technologies in ways that are cost-effective. Each raised awareness about the efficacy of m-learning as an avenue to address urgent educational challenges. Here a key lesson that featured across all four programmes was that, despite the increasing ubiquity in access to mobile technologies, their optimal use and integration to support learning, teaching, and professional development at scale and sustainably, rely on a host of complex dependencies.

While using the lens of affordances and learning configurations encourages a positive framing of mobile learning, these evaluations have also highlighted the need to consider challenges, obstacles and threats to learning. This conversation is particularly relevant at present, in view of the French government's recent legislation (implemented from September 2018), which bans children aged between 3 and 15 years from using smart mobile devices in schools. This decision was reportedly informed by Beland and Murphy (2015), who cited higher test scores in schools where mobile phones were banned, as well as by reports on screen addiction among teenagers. This points to the need for further research and engagement on mobile learning design in the context of a growing awareness of the darker side of internet access.

Conclusion and Further Research

In comparing four m-learning programme evaluations, this article has sought to help fill an important knowledge gap on the role of independent evaluations of mobile learning and ICT for development interventions. It reflects particularly on the potential that independent evaluations have to enable continuous improvement in practice through stakeholder learning, and on the value that lies in comparing evaluations of similar m-learning interventions across similar contexts.

By comparing four different m-learning evaluations in context, the article further tries to show the value in recognising that M&E work should be regarded as real research that can place the world of practice in perspective for academia, and that it has potential to bridge the worlds between scientific knowledge production, practice-based knowledge production, and improved programme design, policy, and practice. In this way the article shows the need for a research agenda that traverses beyond university borders. It is recommended that more comparative research on available evidence-based mobile learning and ICT for development interventions be conducted as part of the generalised clamour for more evidence-based practice, policy, and research. It is also recommended that research that addresses stakeholder learning and bridge-building between stakeholders based in communities, industry, universities, and government form part of such an evidence-based knowledge production process.

Acknowledgements

The funding for the evaluation research undertaken to inform this paper was provided by UNICEF SA under the leadership of Dr Saadhna Panday-Soobrayan, Education Specialist, and Dr Wycliffe Otieno, Chief of Education and Adolescent Development. UNICEF partnered with the DBE where the UVS was led and managed by Ms Shafika Isaacs, first as an official of the DBE and later as UNICEF Technical Advisor to the DBE. Ms Kulula Manona, Director of Learning and Teaching Support Materials (LTSM) Policy and Innovation at the DBE, led the process from 2014. The service provider that designed and implemented the UVS since 2012 was the Reach Trust represented by its Chief Education Officer, Mr Andrew Rudge.

Authors' Contribution

Shafika Isaacs wrote the initial drafts of the article and wrote the abstract. Nicky Roberts added to initial drafts and wrote the conclusion. Garth Spencer-Smith added to the initial drafts and prepared the figures and tables. All authors reviewed the final manuscript.

Notes

i. In this case, the mobile penetration rate refers to the number of Subscriber Identity Module (SIM) connections. When the number of unique mobile subscribers is taken into account, the penetration rate is 44% (GSMA, 2018).

ii. See http://www.playfulsolutions.net/. The terms "skimmers", "duckers" and "divers" are in order of increasing frequency of use.

iii. These messages should not be understood as an overt form of communication between the user and the service. For example, if a user landed on the ukuFUNda home page without doing anything else, that visit was recorded as one message to the service.

iv. Published under a Creative Commons Attribution Licence.

v. DATES: Received: 21 February 2018; Revised: 19 November 2018; Accepted: 17 April 2019; Published: 31 August 2019.

References

Beland LP & Murphy R 2015. Ill communication: Technology, distraction and student performance (CEP Discussion Paper No 1350). London, England: Centre for Economic Performance. Available at https://cep.lse.ac.uk/pubs/download/dp1350.pdf. Accessed 1 July 2019. [ Links ]

Botha A, Batchelor J, Traxler J, De Waard I & Herselman M 2012. Towards a mobile learning curriculum framework. In P Cunningham & M Cunningham (eds). IST-Africa 2012 Conference Proceedings. Dar es Salaam, Tanzania: International Information Management Corporation (IIMC). Available at https://researchspace.csir.co.za/dspace/bitstream/handle/10204/6057/Botha3_2012.pdf?sequence=1&isAllowed=y. Accessed 19 November 2017. [ Links ]

Botha A & Butgereit L 2012. Dr Math: A mobile scaffolding environment. International Journal of Mobile and Blended Learning, 4(2):15-29. Available at https://www.researchgate.net/profile/Adele_Botha/publication/279421084_Dr_Math/links/559e778208aea946c06a09cb/Dr-Math.pdf. Accessed 1 July 2019. [ Links ]

Botha A & Herselman M 2013. Supporting rural teachers 21st century skills development through mobile technology use: A case in Cofimvaba, Eastern Cape, South Africa. In 2013 International Conference on Adaptive Science and Technology. IEEE. https://doi.org/10.1109/ICASTech.2013.6707490 [ Links ]

Botha A & Herselman M 2015. A Teacher Tablet Toolkit to meet the challenges posed by 21st century rural teaching and learning environments. South African Journal of Education, 35(4):Art. # 1218, 19 pages. https://doi.org/10.15700/saje.v35n4a1218 [ Links ]

Boyer KK, Olson JR, Calantone RJ & Jackson EC 2002. Print versus electronic surveys: A comparison of two data collection methodologies. Journal of Operations Management, 20(4):357-373. https://doi.org/10.1016/S0272-6963(02)00004-9 [ Links ]

Creswell JW & Plano Clark VL 2011. Designing and conducting mixed methods research (2nd ed). Thousand Oaks, CA: Sage. [ Links ]

Crompton H 2013. A historical overview of m-learning: Toward learner-centered education. In ZL Berge & LY Muilenburg (eds). Handbook of mobile learning. New York, NY: Routledge. [ Links ]

Crompton H, Burke D & Gregory KH 2017. The use of mobile learning in PK-12 education: A systematic review. Computers & Education, 110:51-63. https://doi.org/10.1016/j.compedu.2017.03.013 [ Links ]

Department of Basic Education, Republic of South Africa 2015. Action plan to 2019: Towards the realisation of schooling 2030. Taking forward South Africa's National Development Plan 2030. Pretoria: Author. Available at https://www.education.gov.za/Portals/0/Documents/Publications/Action%20Plan%202019.pdf?ver=2015-11-11-162424-417. Accessed 4 July 2019. [ Links ]

Department of Basic Education (DBE) & UNICEF 2017a. Expert panel on designing an effective large-scale mobile learning initiative for public schools in South Africa. Pretoria, South Africa: Author. [ Links ]

Department of Basic Education (DBE) & UNICEF 2017b. Report on the Knowledge-sharing Workshop on Mobile Learning for South African Schools. Pretoria, South Africa: Author. [ Links ]

Department of Education 2004. White Paper on e-Education: Transforming learning and teaching through information and communication technologies (ICTs). Government Gazette, 26762:3-46, September 2. Available at https://www.education.gov.za/Portals/0/Documents/Legislation/White%20paper/DoE%20White%20Paper%207.pdf?ver=2008-03-05-111708-000. Accessed 14 October 2017. [ Links ]

Department of Performance Monitoring and Evaluation, The Presidency, Republic of South Africa 2011. National Evaluation Policy Framework. Pretoria: Author. Available at https://www.dpme.gov.za/publications/Policy%20Framework/National%20Evaluation%20Policy%20Framework.pdf. Accessed 4 July 2019. [ Links ]

Department of Planning, Monitoring & Evaluation, Republic of South Africa n.d. Operation Phakisa: ICT in education. Available at http://www.operationphakisa.gov.za/operations/EducationLab/pages/default.aspx. Accessed 17 October 2017. [ Links ]

Ford M, Botha A & Herselman M 2014. ICT4RED 12 - Component implementation framework: A conceptual framework for integrating mobile technology into resource-constrained rural schools. In P Cunningham & M Cunningham (eds). IST-Africa 2014 Conference Proceedings. Dublin, Ireland: International Information Management Corporation (IIMC). Available at https://researchspace.csir.co.za/dspace/bitstream/handle/10204/7761/Ford_2014.pdf?sequence=1&isAllowed=y. Accessed 4 July 2019. [ Links ]

GSMA 2017. The mobile economy Sub-Saharan Africa 2017. London, England: Author. Available at https://www.gsmaintelligence.com/research/?file=7bf3592e6d750144e58d9dcfac6adfab&download. Accessed 10 November 2017. [ Links ]

GSMA 2018. The mobile economy 2018. London, England: Author. Available at https://www.gsma.com/mobileeconomy/wp-content/uploads/2018/05/The-Mobile-Economy-2018.pdf. Accessed 10 November 2018. [ Links ]

Henning E 2017. South African educational research journals. South African Journal of Childhood Education, 7(1):a605. https://doi.org/10.4102/sajce.v7i1.605 [ Links ]

Hollow D 2010. Evaluating ICT for education in Africa. PhD thesis. London, England: University of London. Available at https://s3.amazonaws.com/academia.edu.documents/35988971/DavidHollowThesisFinalCopy.pdf?response-content-disposition=inline%3B%20filename%3DEvaluating_ICT_for_education_in_Africa.pdf&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWOWYYGZ2Y53UL3A%2F20190627%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20190627T062652Z&X-Amz-Expires=3600&X-Amz-SignedHeaders=host&X-Amz-Signature=8ba1a18026e0f851e114541a273e79b5e8f4a4ef213a0c06426cad54a1ce77cb. Accessed 10 November 2017. [ Links ]

Hollow D 2015. Monitoring and evaluation of ICT4D. In PH Ang & R Mansell (eds). The International Encyclopedia of Digital Communication and Society. https://doi.org/10.1002/9781118767771.wbiedcs128 [ Links ]

Hsu YC & Ching YH 2015. Un examen des modèles et cadres de travail pour la conception d'expériences et d'environnements d'apprentissage mobile [A review of models and frameworks for designing mobile learning experiences and environments]. Canadian Journal of Learning and Technology, 41(3):1-22. https://doi.org/10.21432/t2v616 [ Links ]

Isaacs S 2012a. Mobile learning for teachers in Africa and the Middle East: Exploring the potential of mobile technologies to support teachers and improve practice. Paris, France: United Nations Educational, Scientific and Cultural Organization (UNESCO). Available at http://www.schoolnet.org.za/sharing/mobile_learning_AME.pdf. Accessed 27 July 2019. [ Links ]

Isaacs S 2012b. Turning on mobile learning in Africa and the Middle East: Illustrative initiatives and policy implications. Paris, France: United Nations Educational, Scientific and Cultural Organization (UNESCO). Available at https://tostan.org/wp-content/uploads/unesco_turning_on_mobile_learning_in_africa_and_the_middle_east.pdf. Accessed 27 July 2019. [ Links ]

Isaacs S 2015. A baseline study on technology-enabled learning in the African and Mediterranean countries of the Commonwealth (Report). Burnaby, Canada: Commonwealth of Learning. Available at http://oasis.col.org/bitstream/handle/11599/1674/2015_Isaacs_Baselin-Africa.pdf?sequence=1. Accessed 27 July 2019. [ Links ]

Jere-Folotiya J, Chansa-Kabali T, Munachaka JC, Sampa F, Yalukanda C, Westerholm J, Richardson U, Serpell R & Lyytinen H 2014. The effect of using a mobile literacy game to improve literacy levels of grade one students in Zambian schools. Educational Technology Research and Development, 62(4):417-436. https://doi.org/10.1007/s11423-014-9342-9 [ Links ]

Jumia 2019. Kenya mobile report 2019. Available at https://www.jumia.co.ke/mobile-report/. Accessed 25 July 2019. [ Links ]

Mulinya LC & Orodho JA 2015. Free primary education policy: Coping strategies in public primary schools in Kakamega South District, Kakamega County, Kenya. Journal of Education and Practice, 6(12):162-172. Available at https://files.eric.ed.gov/fulltext/EJ1080614.pdf. Accessed 10 August 2018. [ Links ]

National Planning Commission, The Presidency, Republic of South Africa 2011. Diagnostic overview. Pretoria: Author. [ Links ]

Nicholls WL II, Baker RP & Martin J 1997. The effect of new data collection technologies on survey data quality. In L Lyberg, P Biemer, M Collins, E de Leeuw, C Dippo, N Schwarz & D Trewin (eds). Survey measurement and process quality. Hoboken, NJ: John Wiley & Sons. [ Links ]

Palys T 2008. Purposive sampling. In LM Given (ed). The SAGE Encyclopedia of Qualitative Research Methods (Vol. 2). Thousand Oaks, CA: Sage. Available at http://www.yanchukvladimir.com/docs/Library/Sage%20Encyclopedia%20of%20Qualitative%20Research%20Methods-%202008.pdf. Accessed 11 July 2019. [ Links ]

Patton MQ 1997. Utilization-focused evaluation: The new century text. Thousand Oaks, CA: Sage. [ Links ]

Patton MQ 2015. Qualitative research and evaluation methods: Integrating theory and practice (4th ed). Thousand Oaks, CA: Sage. [ Links ]

Piper B, Zuilkowski SS, Kwayumba D & Strigel C 2016. Does technology improve reading outcomes? Comparing the effectiveness and cost-effectiveness of ICT interventions for early grade reading in Kenya. International Journal of Educational Development, 49:204-214. https://doi.org/10.1016/j.ijedudev.2016.03.006 [ Links ]

Raftree L 2013. Landscape review: Mobiles for youth workforce development. Rockville, MD: JBS International. Available at https://youtheconomicopportunities.org/sites/default/files/uploads/resource/mywd_landscape_review_final2013_0.pdf. Accessed 13 July 2019. [ Links ]

Roberts N & Spencer-Smith G 2019. A modified analytical framework for describing m-learning (as applied to early grade Mathematics). South African Journal of Childhood Education, 9(1):a532. https://doi.org/10.4102/sajce.v9i1.532 [ Links ]

Roberts N, Spencer-Smith G & Butcher N 2016. An implementation evaluation of the ukuFUNda virtual school. Johannesburg, South Africa: Kelello. https://doi.org/10.13140/RG.2.2.30180.27528 [ Links ]

Roberts N, Spencer-Smith G, Vänskä R & Eskelinen S 2015. From challenging assumptions to measuring effect: Researching the Nokia Mobile Mathematics Service in South Africa. South African Journal of Education, 35(2):Art. # 1045, 13 pages. https://doi.org/10.15700/saje.v35n2a1045 [ Links ]

Spencer-Smith G & Roberts N 2014. Landscape review mobile education for numeracy: Evidence from interventions in low-income countries. Bonn, Germany: M-Education Alliance GIZ. [ Links ]

Strigel C & Pouezevara S 2012. Mobile learning and numeracy: Filling gaps and expanding opportunities for early grade learning. Durham, NC: RTI International. Available at https://www.rti.org/sites/default/files/resources/mobilelearningnumeracy_rti_final_17dec12_edit.pdf. Accessed 13 July 2019. [ Links ]

Traxler J 2010. Students and mobile devices. ALT-J, Research in Learning Technology, 18(2):149-160. https://doi.org/10.1080/09687769.2010.492847 [ Links ]

Traxler J 2016. Mobile learning research: The focus for policy-makers. Journal of Learning for Development, 3(2):7-25. Available at http://files.eric.ed.gov/fulltext/EJ1108183.pdf. Accessed 20 August 2018. [ Links ]

Traxler J 2018. Learning with mobiles: The Global South. Research in Comparative and International Education, 13(1):152-175. https://doi.org/10.1177/1745499918761509 [ Links ]

United Nations 2016. The Sustainable Development Goals Report 2016. New York, NY: Author. https://doi.org/10.18356/3405d09f-en [ Links ]

Vosloo S, Walton M & Deumert A 2009. m4Lit: A teen m-novel project in South Africa. Paper presented at the 8th World Conference on Mobile and Contextual Learning, Orlando, FL, 26-30 October. Available at https://marionwalton.files.wordpress.com/2009/09/mlearn2009_07_sv_mw_ad.pdf. Accessed 10 October 2017. [ Links ]

Wagner DA 2014. Mobiles for reading: A landscape research review (Working Paper 6-2014). Available at https://repository.upenn.edu/cgi/viewcontent.cgi?article=1006&context=literacyorg_workingpapers. Accessed 2 February 2018. [ Links ]

Walton M, Haßreiter S, Marsden G & Allen S 2012. Degrees of sharing: Proximate media sharing and messaging by young people in Khayelitsha. In E Churchill & S Subramanian (eds). MobileHCI '12 Proceedings of the 14th international conference on human-computer interaction with mobile devices and services. San Francisco, CA: Association for Computing Machinery (ACM). Available at https://s3.amazonaws.com/academia.edu.documents/32385720/p403.pdf?response-content-disposition=inline%3B%20filename%3DDegrees_of_Sharing_Proximate_Media_Shari.pdf&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWOWYYGZ2Y53UL3A%2F20190716%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20190716T061134Z&X-Amz-Expires=3600&X-Amz-SignedHeaders=host&X-Amz-Signature=8153da59b447acdf7ba98366665d4dec454db2aa6b7cc105d474292a0dc2e5a9. Accessed 16 July 2019. [ Links ]

Wan N 2010. Mobile technologies and handheld devices for ubiquitous learning: Research and pedagogy. New York, NY: Information Science Reference. [ Links ]

West M 2012. Turning on mobile learning: Global themes. Paris, France: United Nations Educational, Scientific and Cultural Organization (UNESCO). Available at https://unesdoc.unesco.org/ark:/48223/pf0000216451. Accessed 18 July 2019. [ Links ]

West M & Chew HE 2014. Reading in the mobile era: A study of mobile reading in developing countries. Paris, France: United Nations Educational, Scientific and Cultural Organization (UNESCO). [ Links ]

Williams B 2014. Preliminary evaluation of the PHASE 2 rollout of the ICT4RED project implemented in Cofimvaba in the Eastern Cape (Unpublished report). Pretoria, South Africa: Centre for Scientific and Industrial Research, Department of Science and Technology. [ Links ]

Worldreader 2012. IREAD Ghana study: Final evaluation report. Available at https://comms.worldreader.org/wp-content/uploads/2015/03/10.-Worldreader-ILC-USAID-iREAD-Final-Report-Jan-2012_low.pdf. Accessed 10 August 2018. [ Links ]

Wright S & Parchoma G 2011. Technologies for learning? An actor-network theory critique of 'affordances' in research on mobile learning. Research in Learning Technology, 19(3):247-258. https://doi.org/10.1080/21567069.2011.624168 [ Links ]

Zelezny-Green R 2014. She called, she Googled, she knew: Girls' secondary education, interrupted school attendance, and educational use of mobile phones in Nairobi. Gender & Development, 22(1):63-74. https://doi.org/10.1080/13552074.2014.889338 [ Links ]

Zelezny-Green R 2018a. Mother, may I? Conceptualizing the role of personal characteristics and the influence of intermediaries on girls' after-school mobile appropriation in Nairobi. Information Technologies & International Development, 14:48-65. [ Links ]

Zelezny-Green R 2018b. 'Now I want to use it to learn more': Using mobile phones to further the educational rights of the girl child in Kenya. Gender & Development, 26(2):299-311. https://doi.org/10.1080/13552074.2018.1473226 [ Links ]