South African Journal of Science

On-line version ISSN 1996-7489

Print version ISSN 0038-2353

S. Afr. j. sci. vol.115 n.7-8 Pretoria Jul./Aug. 2019

http://dx.doi.org/10.17159/sajs.2019/5867

RESEARCH ARTICLES

Transition to open: A metrics analysis of discoverability and accessibility of LIS scholarship

Jaya Raju (I); Andiswa Mfengu (I); Michelle Kahn (I); Reggie Raju (II)

(I) Department of Knowledge and Information Stewardship, University of Cape Town, Cape Town, South Africa

(II) UCT Libraries, University of Cape Town, Cape Town, South Africa

ABSTRACT

Metrics analysis of journal content has become an important point for debate and discussion in research and in higher education. The South African Journal of Libraries and Information Science (SAJLIS), a premier journal in the library and information science (LIS) field in South Africa, in its 85-year history, has had multiple editors and many contributing authors and has published over 80 volumes and 160 issues on a diversity of topics reflective of LIS theory, policy and practice. However, how discoverable and accessible has the LIS scholarship carried by the Journal been to its intended readership? SAJLIS transitioned to open access in 2012 and this new format in scholarly communication impacted the Journal significantly. The purpose of this paper is to report on a multiple metrics analysis of discoverability and accessibility of LIS scholarship via SAJLIS from 2012 to 2017. The inquiry takes a quantitative approach within a post-positivist paradigm involving computer-generated numerical data as well as manual data mining for extraction of qualitative elements. In using such a multiple metrics analysis to ascertain the discoverability and accessibility of LIS scholarship via SAJLIS in the period 2012 to 2017, the study employs performance metrics theory to guide the analysis. We highlight performance strengths of SAJLIS in terms of discoverability and accessibility of the scholarship it conveys; identify possible growth areas for strategic planning for the next 5 years; and make recommendations for further study for a more complete picture of performance strengths and areas for improvement.

SIGNIFICANCE:

• The importance of discoverability and accessibility of scholarship carried by a scholarly journal is conveyed.

• The need to use multiple metrics for objective evaluation of the discoverability and accessibility of the scholarly content of a journal is emphasised.

• The impact of open access on the discoverability and accessibility of the content of a scholarly journal is assessed.

Keywords: open access; journal metrics; scholarship discoverability; scholarship accessibility

Introduction and background to the study

Evaluation of scientific and scholarly content is a critical element of the scholarly communication process. Harnad1 differentiates between subjective evaluation of such content (for example peer review) and objective evaluation (for example metrics analysis such as bibliometrics, or more broadly scientometrics). Neither evaluation, Harnad1 claims, has 'face-validity' (that is, a personal assessment that the evaluation instrument appears, on the face of it, to measure the construct it is intended to measure) and thus 'multiple tests rather than a single test [are required] for evaluation'. It is in this context that we report here on the use of multiple metrics as an objective means of determining the discoverability and accessibility of library and information science (LIS) scholarship via the South African Journal of Libraries and Information Science (SAJLIS) when it transitioned to an online open access platform; after all, the benefit of open access (that is, free online availability of scholarly content for all to access without cost or licensing barriers) cannot be realised if such content is not discoverable. For purposes of providing a research context, an account of the history of the Journal is necessary.

History of the Journal

The South African Journal of Libraries and Information Science 'has, since 2002, Vol. 68(1), been published as the official research journal of the Library and Information Association of South Africa (LIASA)'2. LIASA was established in 1997 as the result of a unification of the library and information services (LIS) associations SAILIS (South African Institute for Librarianship and Information Science) and ALASA (African Library Association of South Africa). The establishment of LIASA was part of a nationwide reconstruction and development effort in the aftermath of the establishment of a new democratic order in South Africa in 1994 following decades of apartheid governance.

The first issue of the Journal was published in July 1933 as South African Libraries, the quarterly journal of the South African Library Association (SALA), founded in 1930. The title of the Journal has 'changed slightly at various stages in its existence' but the Journal itself has run continuously since its first issue.2 Volumes 1 to 48 of South African Libraries (1933-1980) were published by SALA and were edited by prominent South African academic and public librarians appointed by SALA.2 In 1979, SALA reconstituted itself as a professional graduate association known as the South African Institute for Librarianship and Information Science (SAILIS). Following this reconstitution, from 1982 the title of South African Libraries changed to the South African Journal for Librarianship and Information Science.

Walker2 explains that, in 1984, SAILIS transferred the management and publication of SAJLIS to the Bureau for Scientific Publications of the Foundation for Education, Science and Technology for financial and operational reasons. Within this structure, the Council for Scientific Publications managed the publication of a number of South African scientific journals. SAJLIS joined this stable of publications from 1984 and was then renamed the South African Journal of Library and Information Science. SAJLIS remained a quarterly publication but the Bureau for Scientific Publications imposed some design and content changes.2 SAILIS appointed a Scientific Editor, a Reviews Editor and an Editorial Secretary. The Journal's Editorial Committee was made up of senior South African LIS professionals. Instructions to Authors and an Editorial Policy focusing on contributions that reflect scientific investigation were published with each issue. SAJLIS remained with the Bureau for Scientific Publications from 1984 until the disestablishment of SAILIS in 1998 when it was transferred to the SALI (South African Library and Information) Trust, which was formed to manage the assets of SAILIS until their transfer to LIASA. During this transitional period spanning 1998-2001, Walker2 records 'a hiatus in the frequency of publication' of SAJLIS. Responsibility for the Journal was transferred by the SALI Trust to LIASA in 2002 and, once again, continuity in publication of issues was re-instated but with a slight change in title and a new ISSN. The South African Journal of Libraries and Information Science became the official journal of LIASA, with two issues published per year.

Since 2002, SAJLIS has had three Editors-in-Chief - all senior LIS academics - an Editorial with each issue and a globally representative Editorial Advisory Board of eminent library and information scholars and professionals. The Editor-in-Chief serves for fixed maximum terms of office as per LIASA's election of office bearers' policy. In 2011, LIASA signed the Berlin Declaration on Open Access to Knowledge in the Sciences and the Humanities and, as a demonstration of its commitment to the open access movement, took the decision to publish SAJLIS as an online-only open access publication from 2012 onwards using OJS (Open Journal Systems) as an online publishing platform. All back issues of SAJLIS from 1997 to 2011 have been digitised, thus making all issues of SAJLIS in the LIASA era openly available for all to access freely at http://sajlis.journals.ac.za/pub.

The online era of the Journal also saw the establishment of a journal management team comprising the Editor-in-Chief, a Journal Manager to look after the OJS management of the Journal, a Language and Layout Editor, and Communication and Advocacy Support. The Journal has an online ISSN, digital object identifiers (DOIs) are assigned via Crossref to each article published, and the content is licensed under a Creative Commons Attribution-Share-Alike 4.0 International Licence. The Journal continued with its practice of charging page fees to authors for manuscripts accepted for publication, in the form of article processing charges (APCs) to cover basic online publication costs. Author guidelines were updated for an online format and online submission guidance is provided on the Journal site. This phase in the development of SAJLIS saw the inclusion of ORCIDs for each author to promote the unique identification of authors and their contribution to scholarly literature; and emerging scholars began to be included on the Editorial Advisory Board. In 2015-2017, SAJLIS underwent the Academy of Science of South Africa (ASSAf)'s rigorous peer-review evaluation. The outcome, announced in 2018, included continued listing of SAJLIS on the Department of Higher Education and Training (DHET) list of accredited journals and an invitation for SAJLIS to join the SciELO South Africa platform - South Africa's premier open access full-text journal database which includes a selected collection of peer-reviewed South African scholarly journals.

Since its inception to the present, South African Libraries and then SAJLIS have been indexed by a range of local and international bibliographical and indexing services. These include Library Literature, Library Science Abstracts (later called Library and Information Science Abstracts), Index to South African Periodicals (ISAP), INSPEC (later INSPEC-Computer and Control Abstracts), Academic Abstracts, Academic Search, Current Awareness Bulletin IBZ+IBR, Internet Access BUBL, Masterfile, South African Studies, Information Science and Technical Abstracts (ISTA).2 Currently, SAJLIS is indexed by, inter alia, EBSCOhost, Proquest and Online Computer Library Center.3 The inclusion of a journal in indexes allows its content to be discoverable and, importantly, the selection of a journal for inclusion in indexing services is also a reflection of its quality. SAJLIS is yet to access Web of Science and Scopus index listings.

Research problem

In its 85-year history, SAJLIS has seen over 80 volumes, more than 160 issues, multiple editors, many authors and a diversity of topics within LIS theory, policy and practice reflective of the times through which the Journal has been published. The objective of the Journal is to 'serve and reflect the interests of the South Africa LIS community across the spectrum of its wide-ranging activities and research'4. In addition to formal scholarly articles, articles on issues of practice are solicited 'to actively encourage young writers, researchers and practitioners to share their experiences and findings so that all aspects of research, teaching, thinking and practice are brought together'4. The Journal's primary target audience is LIS and related research communities, including academics and scholars (nationally and internationally), practising information professionals as well as policymakers.

Despite this illustrious history of a premier journal in the LIS field in South Africa, the question is: how discoverable and accessible has the LIS scholarship carried by the Journal been to its intended readership? As mentioned earlier, motivated by the Open Movement and its promotion of discoverability and accessibility,5,6 SAJLIS transitioned to open access in 2012, one of the first titles, both within South Africa and internationally, to do so. This new format in scholarly communication impacted the Journal significantly. The purpose of this paper is to report on a metrics analysis of discoverability and accessibility of LIS scholarship via SAJLIS from 2012 to 2017.

Theoretical framing

A metric 'is a verifiable measure, stated in either quantitative or qualitative terms and defined with respect to a reference point'7. Metrics exist as tools demonstrating performance and as such provide the following functions: control (metrics enable people to evaluate and control the performance of a resource for which they are responsible, in this case SAJLIS); communication (metrics communicate performance to stakeholders such as the discoverability and accessibility of LIS scholarship carried by SAJLIS); and improvement (metrics identify gaps 'between performance and expectation' that 'ideally point the way for intervention and improvement').7 Using metrics analysis to ascertain discoverability and accessibility of LIS scholarship via SAJLIS in the period 2012 to 2017 addresses these three areas of control, communication and improvement for purposes of demonstrating strengths and gaps and to identify growth areas and future challenges.

Literature

We present a review of the literature related to discoverability and accessibility of open access journals, including metrics that may be used for evaluating journals, communicating performance and identifying gaps in performance (the three functions of metrics - control, communication and improvement - as identified by Melnyk et al.7). These metrics include bibliometrics, such as citation analysis; webometrics like downloads, views and reads, and altmetrics like social media links and mentions; and indications of journal rigour like the review process or editorial board composition.

Citations as indicators of discoverability and accessibility

Historically, citations have been viewed as a measure of use and 'the best available approximations of academic impact'8, whether of the individual author, an institution or country, or a journal. Schimmer et al.9 report that evidence of growth in open access publishing can be seen in the growth in the number of papers published in open access journals. Whether this growth is reflected in citation counts has been a subject of several studies.

Sotudeh and Horri10 - examining a sample of gold open access journals - found that, although not all open access articles are cited, 'citations to [open access] articles increase at a faster rate relative to the increase in publication of [open access] articles'10, thus indicating that citation counts can be affected by the number of articles published in a journal. Mukherjee11 counted total articles published in a 4-year period (2000-2004) for 17 open access LIS journals but did not find an increase in citations for all journals in the sample, thus concluding that 'just being open access is not a guarantee of success'11. Mukherjee11 counted only content considered 'citable' (that is, excluding editorials, book reviews, news items and such) and listed on Google Scholar, as not all journals - and particularly LIS journals - are indexed in the large scientific databases of Web of Science and Scopus.

The ease with which open access journal articles can be discovered and accessed can create a 'citation advantage' for open access journals12 in which the availability of these articles results in more reads and citations than those of articles from closed journals. Citation advantage is not a given, however: results from a study by Atchison and Bull13, who looked at citations of self-archived (green) open access articles, were 'mixed' across disciplines, although a citation advantage was shown in the social sciences when it came to open access publishing.

Citation counts can likewise be affected by geography: Fukuzawa14 found that papers published in open access journals were cited in a greater number of countries. The wide discoverability and free online accessibility of these journals also resulted in total citations for open access journals being higher than those for non-open access journals.14 Tang et al.12 tested whether geography had an effect on citations, hypothesising that open access articles, because they are free, would be more highly cited in developing countries. While they were not able to confirm their hypothesis, they did find that open access articles in their sample 'showed significant citation advantages' overall over a 4-year period.12

Mukherjee11 concluded that 'open access journals in LIS are rapidly establishing themselves as a viable medium for scholarly communication' because of the quantity of open access articles being published. LIS open access journals had a low journal self-citation rate (that is, contributors do not cite the journal to which they are contributing), which could mean 'higher visibility and a higher impact in the field' for these journals as citations are coming from outside the contributor community.11

Alternative measures of performance

While citations are one way of demonstrating the visibility and accessibility of a journal, alternative counts, such as downloads, views, reads and social media mentions can also be used as indicators, particularly in a non-traditional publishing context. Kurtz and Henneken15 defined 'download' as 'any accessing of data on an article, whether full text, abstract, citations, references, associated data, or one of several other lesser-used options'. Downloads are 'a good surrogate for usage' with an added advantage of being a simple measure.16 A download, even if it does not result in a citation, can indicate 'respect' for research15, an interest in it and intention to read it. Altmetrics, which count social media activity at the article level (for example, recommendations and 'captures' such as bookmarks and saves of the online article)17 can, along with views and downloads, be used to complement traditional metrics. Through tools such as Altmetric Explorer, ImpactStory and Plum Analytics, more immediate evidence of engagement with scholarly content can be collected than what traditional metrics can supply. Altmetrics can be used to study 'the attention received by journals through social media and other online access platforms'18. In an investigation of the activity of six PLoS journals, Huang et al.17 compared traditional metrics (citations) and altmetrics to see whether there was a correlation between altmetrics, measured using the Altmetric Attention Score (AAS), and citations in Web of Science. They found that there was 'a possibility that AAS may be an indicator of citation numbers' if the nature of the journal is considered,17 supporting the call for altmetrics to be used alongside traditional metrics.

With access to relevant software, views and downloads are easy to count and can thus be used to track the growth of a journal. Mintz and Mograbi19 did so for the journal Political Psychology over a 6-year period (2009-2014) and discovered that the number of downloads grew by over 680% in the period under review. Views and downloads are also a more immediate measure of use than citations which require years to accumulate.20 Moed and Halevi20 also report that downloads do not necessarily result in citations.

Similarly, Bazrafan et al.21 investigated submissions, downloads, readership and citation data for the Journal of Medical Hypotheses and Ideas for the year 2012 and found that, while all articles had been viewed and downloaded, the average citation per article of those published in 2012 was only 0.7.

Downloads can be affected by the interest and location of the reader. While download numbers were high overall for the Journal of Medical Hypotheses and Ideas, it was discovered that articles about innovative ideas or on topical research were downloaded more than others.21 There were more downloads from America and Africa because of 'the role of these regions in the share of the world's medical knowledge'21 but the journal was accessed from all over the world.

As with downloads, altmetrics such as tweets, likes and shares can easily be counted but do not necessarily result in citations. They are, however, awareness- and visibility-generating altmetrics.22 Onyancha18 found that the 273 DHET-accredited South African journals (not all open access) that he investigated had a social media presence, particularly on Twitter. Papers from these journals that received the most mentions on social media were multidisciplinary.18

Journal rigour and content

While studies focusing on objective (quantitative) measures to analyse discoverability and accessibility dominate the literature, Fischer23 advocated for 'top-quality service to authors and other stakeholders', along with a rigorous review process and a highly reputable editorial board, to improve journal performance, particularly when it comes to attracting submissions. Open access journals have had to deal with the fallout from predatory journals which have 'tainted the reputation' of genuine open access publishing.24 Thus, open access journals which retain the rigour and standards of traditionally published academic journals are bound to receive more submissions - and consequently more downloads and citations - than other open access journals. Te et al.25 examined LIS journals that were indexed in the Directory of Open Access Journals (DOAJ) and the Open Access Journal Search Engine (OAJSE) and found that the 65 LIS journals in these lists were of a high standard and maintained levels of rigour of traditional journals.

Subjects covered in a journal also affect its use and therefore Te et al.25 also examined the content matter of the LIS journals in their sample, finding that the most popular topics were research, information systems and technology, information science, information literacy, academic librarianship and libraries, and local librarianship.25 Mukherjee11 found that information technology articles predominated in the LIS journals sampled. Logically, articles related to on-trend topics would positively influence the attention a journal receives, both from contributors and readers. Despite increased submissions, academic rigour of a journal would preclude a high acceptance rate.23

Björk24 claims that open access publishing also increases the societal impact of the research that is available open access - something that publishers and researchers should not overlook. South Africa is playing a role in the open access movement as the second-biggest producer of open access journals on the continent, after Egypt, as highlighted by Nwagwu and Makhubela26 in a study assessing the progress of open access in Africa. However, with Egypt publishing 75.9% of the continent's open access journals, South Africa 11.1% and Nigeria 5.9%, the uptake of open access journals in the rest of Africa is low.26 Nwagwu and Makhubela26 report that, in a global context, the number of African open access journals is small (only 6.3% of journals listed in the DOAJ database are African). The small uptake means less competition and therefore an increased visibility for the open access journals already being published, particularly if they are addressing important research areas on the continent.

In summary, while it is still relevant to measure the performance of a journal using traditional means (for example, via peer review), as research is no longer disseminated and accessed only via traditional channels such as journal publication, measurement must take place via other channels too.18 For a holistic picture, multiple indicators are necessary, as pointed out by Harnad1. Metrics can include objective evaluation involving bibliometrics and altmetrics as well as subjective evaluation of the quality of a journal (such as its peer review process and the content it publishes), all of which influence visibility and accessibility. The literature reviewed here shows that becoming open access does not automatically mean an improvement in journal performance but that better discoverability and accessibility, publishing articles on topical issues in the discipline, and a good reputation (founded on journal rigour) will lead to more exposure for a journal, thus giving it the potential to improve its performance. In this inquiry, the focus is on objective indicators of SAJLIS performance.

Methodology

The inquiry takes a quantitative approach within a post-positivist paradigm, that is, objective or empirical science that allows for the consideration of the behaviour of humans. In the case of this research, this approach translates into the use of largely computer-generated numerical data but also some manual data mining for extraction of a few qualitative elements which are then reduced to quantitative measures.27 We use performance metrics theory to guide the analysis, which covers three broad performance areas during the Journal's open era:

1. The extent of growth and rigour of the Journal covering areas such as number of submissions, acceptances and rejections; geographical distribution of authors; academic and practitioner input;

2. Discoverability and accessibility using Matomo (Piwik) to ascertain the geographical distribution of the views and downloads; and altmetrics tools such as Plum Analytics to determine usage, capture and social media mentions at article level; and,

3. Citation analysis of the Journal using Google Scholar. Google Scholar was used to count citations as SAJLIS is not currently indexed by the large indexing services, Scopus and Web of Science. For article-level analysis, purposive sampling was employed to select 25% of the journal articles published over the identified 6-year period based on topical issues in LIS as reflected in the Association of College and Research Libraries (ACRL)28 top trends and in the International Federation of Library Associations and Institutions (IFLA)29 trend report.

Such a metrics analysis using objective albeit multiple indicators of performance as advocated by Harnad1 to achieve 'face-validity' in the evaluation of the Journal's performance would serve to highlight performance strengths of SAJLIS as well as identify growth areas for the next 5 years in terms of discoverability and accessibility of the scholarship it conveys.

For this multiple testing, data were retrieved from the journal hosting platform, OJS, and Matomo (previously known as Piwik), an open source web analytics application, to track the visits and downloads. Although OJS and Matomo provide important data, the researchers needed to combine computer-generated data with manual data mining to extract such data as geographical distribution of authors, and practitioner and academic contribution. Article-level metrics (altmetrics) were accessed from PlumX metrics to provide insights into the ways people interact with individual pieces of research output in the online environment,30 and citation data were retrieved from Google Scholar. These data extractions provided means for objective evaluation of the Journal using multiple metrics or indicators.

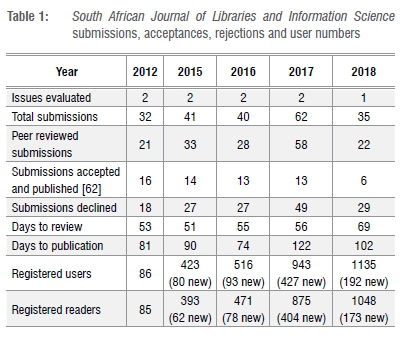

Each visit by an individual to the website, as long as subsequent visits are more than 30 minutes later, is counted by Matomo31 as a new site visit. Actions refer to the number of actions performed by visitors to a site. Actions can be page views, internal site searches, downloads or outlinks (that is, external URLs that are clicked by visitors from SAJLIS website pages).31 Unique visitors refer to the number of unduplicated visitors calling at the website, and every user is only counted once even if they visit the website multiple times a day.31

SAJLIS published 62 articles between 2012 and 2017 (excluding editorials and book reviews), as shown in Table 1. The study sampled 25% (16) of the published articles in this period, and the sample was purposively selected according to the ACRL top trends28 and IFLA trend report29. The ACRL reviews developments and issues affecting academic libraries and higher education while IFLA in its trend report reflects on the forces shaping library and information services broadly. These reports identified the following top trends, among others: research data management, open scholarship and open access, open education, e-books, information literacy, social media, patron-driven collection development, ICTs (infrastructure and connectivity).
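The visit and unique-visitor definitions given above can be made concrete with a small sketch. It is a simplified reading of the 30-minute rule as described in this section, not Matomo's actual implementation; the function name `count_visits`, the event format and the sample log are hypothetical and serve only as illustration.

```python
from datetime import datetime, timedelta

# Threshold taken from the description above; everything else is assumed.
VISIT_GAP = timedelta(minutes=30)


def count_visits(events):
    """Return (visits, unique_visitors) for (visitor_id, timestamp) pairs.

    A new visit is counted when a visitor's action occurs more than
    VISIT_GAP after that same visitor's previous action. Each visitor
    is counted once as a unique visitor, however often they return.
    """
    last_seen = {}
    visits = 0
    for visitor_id, timestamp in sorted(events, key=lambda e: e[1]):
        previous = last_seen.get(visitor_id)
        if previous is None or timestamp - previous > VISIT_GAP:
            visits += 1
        last_seen[visitor_id] = timestamp
    return visits, len(last_seen)


# Hypothetical log: visitor "a" acts again after 10 minutes (same visit)
# and after 2 hours (new visit); visitor "b" acts once.
start = datetime(2017, 1, 1, 9, 0)
log = [
    ("a", start),
    ("a", start + timedelta(minutes=10)),
    ("a", start + timedelta(hours=2)),
    ("b", start + timedelta(minutes=5)),
]
visits, unique_visitors = count_visits(log)
print(visits, unique_visitors)
```

Under this reading, the number of visits can exceed the number of unique visitors, which is why the two figures are reported separately.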

It should be noted that in the data presentation (see Table 1), the number of submitted, accepted for publication and rejected manuscripts for the years 2013 and 2014 were not included because the SAJLIS digitisation of back issues (referred to earlier) in 2013 and 2014 inadvertently distorted the OJS statistics for these years. Notwithstanding this exclusion, sufficient chronological data are available to reflect important trends. Similarly, PlumX metrics for SAJLIS articles published in 2012 were not available, for reasons beyond our control. PlumX metrics are divided into five categories: usage, capture, mentions, social media and citations. Usage is a way to signal if anyone is reading the articles or otherwise using the research; usage counts such things as clicks, downloads and views, and it is the most used metric after citations.30 Captures indicate that someone wants to return to the work as it would be bookmarked, added to favourites, saved to readers, and so on; captures can be an indicator of future citations.30 Mentions are a measure of activity in news articles or blog posts while social media measures track the attention around the research, and these collectively ascertain if people are truly engaging with the research and how well the research is being promoted.30 Three of the PlumX metrics (that is, usage, captures and social media) were used for this inquiry; mentions were excluded (because there were limited mention metrics), as were citations (because Google Scholar was available for complete citation analysis).

Findings and discussion

Findings and related discussions are presented in terms of the core metric functions of control, communication and improvement.7

Control

Metrics may be used to monitor and evaluate (control) the performance of SAJLIS. The extent of growth and rigour of the Journal is reported using the number of submissions, acceptances and rejections, the geographic distribution of authors, and practitioner and academic contribution. Table 1 reflects the growth pattern of SAJLIS (despite the omission of years 2013 and 2014). Also note that, for 2018, statistics are presented for one issue only - the second issue for the year was yet to be published at the time of undertaking this research. Table 1 reflects a general trend of increase in the number of submissions. In 2012, 2016 and 2018, an average of over 30% of manuscripts was declined before the peer review process, perhaps because of non-compliance with submission requirements, demonstrating the rigour of the Journal in terms of acceptance of manuscripts for peer review. In 2017, 62 manuscripts were received and 58 were peer reviewed (the decrease in desk rejection possibly indicating that authors were more compliant). However, of the 58 papers that were peer reviewed, only 22.41% were published - demonstrating the rigour of the SAJLIS peer review process. The open access years have seen continuous growth in the number of users (authors and reviewers) and readers who have registered with the Journal. There has been a 166.7% increase in registered readers from 2015 to 2018 despite the fact that, at the time of data generation, 2018 still had 4 months to completion.
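The percentages quoted in this paragraph can be reproduced with elementary arithmetic, sketched below. The 2017 counts of 62 received and 58 peer-reviewed manuscripts come from the text; the count of 13 published articles is inferred here from the stated 22.41% and is not read from Table 1. The reader counts passed to `percent_change` are hypothetical values chosen only to illustrate how an increase of 166.7% arises.

```python
def percentage(part, whole):
    """Share of `part` in `whole`, as a percentage rounded to 2 decimals."""
    return round(100 * part / whole, 2)


def percent_change(old, new):
    """Relative change from `old` to `new`, as a percentage (1 decimal)."""
    return round(100 * (new - old) / old, 1)


received_2017 = 62        # from the text
peer_reviewed_2017 = 58   # from the text
published_2017 = 13       # assumption: inferred from the stated 22.41%

desk_rejection_rate = percentage(received_2017 - peer_reviewed_2017, received_2017)
publication_rate = percentage(published_2017, peer_reviewed_2017)

# Hypothetical reader counts; the actual values are in Table 1.
reader_growth = percent_change(300, 800)

print(desk_rejection_rate, publication_rate, reader_growth)
```

Note that the publication rate here is taken relative to peer-reviewed manuscripts, as in the text, rather than relative to all submissions.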

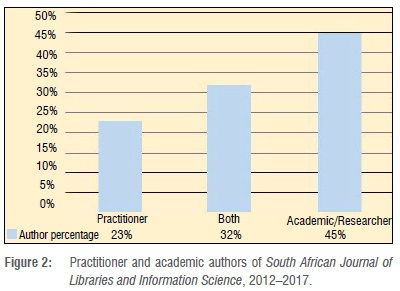

The growth pattern in Figure 1 shows that the discoverability of the Journal (its openness) promotes accessibility. This finding is in alignment with the assertion made by Gargouri32 that there are many independent studies which show that discoverability improves accessibility. In the fledgling year of its open access mode, SAJLIS did not attract many international authors. Hence it can be inferred that, as SAJLIS has become more discoverable and accessible, it has been able to attract authors from several countries besides South Africa. As pointed out by Czerniewicz and Goodier33, genuine global scholarship should be 'shaped by academic rigour and quality' and not by geographical borders or technical and other inequalities. The academic rigour of the Journal, as assessed by the number of submissions declined, has not been compromised by this trend of submissions from different regions of the world (see Table 1). A consistently high rejection rate is an indication of journal rigour23, and in the case of SAJLIS, when viewed in the context of its use of APCs, supports the case for its academic rigour as manuscripts are not being accepted for the sake of making a profit through APCs. Manual data mining of OJS reveals that SAJLIS has published articles from India, Kenya, Lesotho, Malawi, New Zealand, Nigeria, Senegal, Swaziland, Tanzania, Zambia and Zimbabwe. In volume 80(1), there were more international authors (either as first or co-authors) than there were South African authors; in volume 83(2), there was a near 50-50 split between South African and non-South African authors, possibly indicating that the open access mode has contributed to the growth of SAJLIS by expanding the geographical spread of contributing authors. This wider reach affords the Journal more from which to select (evident in the increasing submission figures in Table 1), thus allowing the Journal the opportunity to enhance the quality of the content that it publishes.

SAJLIS has gained an increasing number of registered readers (see Table 1) during its open access years, because it is accessible to any reader in the world who has access to the Internet. Likewise, Figure 2 indicates that the number of LIS practitioners publishing in SAJLIS has grown by 55% in the period under review (when articles written by practitioners as well as in conjunction with academics/researchers are considered), which suggests the Journal is more accessible to practitioners. This finding speaks to the high views and downloads but low citations (see Figures 5 and 8), as the readership includes both scholars and practitioners. Views and downloads indicate an interest in the material15 but would not necessarily result in formal citation, despite the content being regarded as valuable to the reader.

Communication

Metrics communicate performance, such as the discoverability and accessibility of LIS scholarship, to stakeholders.7 Views and downloads for the journal and its articles may be used to ascertain the discoverability and accessibility of SAJLIS content. As SAJLIS is openly accessible, a subscription fee or pay-per-view charge, which some cannot afford, is not required for access. Figure 3 shows that the Journal had an aggregated 23 543 unique visitors over the review period. Figure 3 also indicates that SAJLIS is not only discoverable, but that users can access the research - in aggregate, SAJLIS articles were downloaded 75 461 times over the review period, worldwide. For the same period, there were 46 615 downloads from Africa (Figure 4), which represents 62% of the total downloads worldwide. The number of downloads, both worldwide and in Africa, is high, which suggests the Journal is more accessible to practitioners, as it is likely that practitioners rarely cite but make use of the research in their professional practice.
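
The African share of downloads quoted above can be checked with simple arithmetic. The sketch below is illustrative only: the function name `share_percent` and the variable names are hypothetical and not part of the study's tooling, and the two counts are the aggregate figures reported in this section.

```python
def share_percent(part: int, total: int) -> float:
    """Return `part` expressed as a percentage of `total`."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * part / total


# Aggregate download counts reported for the review period (2012 to 2017).
worldwide_downloads = 75_461
africa_downloads = 46_615

africa_share = share_percent(africa_downloads, worldwide_downloads)

# Rounded to the nearest whole number, this matches the 62% stated in the text.
print(f"African share of worldwide downloads: {round(africa_share)}%")
```

The same helper could, under the same assumptions, be applied to any other pair of counts in the article, for example views from a region against total views.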

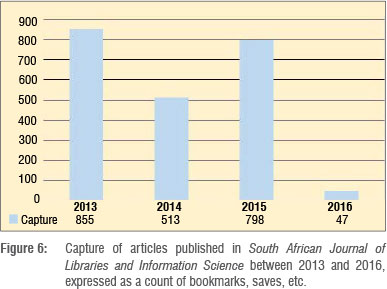

Figures 5 and 6 show the usage of articles in the purposive sample between 2013 and 2016 (2012 and 2017 are not reflected because the selection of 25% of articles for article-level analysis, explained earlier, fell outside of these two years). Data show spikes for 2013 and 2015, years in which several significant articles - five and seven, respectively - were published on e-books, research data services and social media and seem to have attracted more usage and capture. The year 2014, while still showing respectably high usage and capture, had only two such significant articles; the observed decline reflects the fact that fewer articles relating to the top LIS trends were published in that year. Even so, one significant cross-disciplinary article published in 2014 contributed most of the observed usage and capture counts for that year, while other articles, by comparison, underperformed. Globally, multidisciplinary research does tend to have higher views and downloads.18

The social media metrics demonstrate how research published in the Journal is promoted. Figure 7 reflects that most of the SAJLIS articles were promoted on Facebook (a total of 340 visits and actions) as compared to 189 visits and actions on Twitter. This suggests SAJLIS researchers/authors and users are more active on Facebook (in contrast to Onyancha's18 study, which found Twitter to be more popular among South African journals). While the current study used Plum Analytics to ascertain social media metrics, Onyancha18 used Altmetric.com. We did not use Altmetric.com as it did not include complete data for all the sample articles. Notwithstanding that different altmetrics tools present different social media data depending on the harvesting coverage of the tool, the SAJLIS trend, established with the use of Plum Analytics, is consistent with the general tendency for Facebook to be more popular amongst middle-aged adults (such as researchers, authors, scholarly journal users) than any other social network.34

Even though altmetrics are open to 'gaming', they give a good overview of how accessible a journal's content is to the broader community. Citations, on the other hand, indicate how well used the research is and accumulate over time. Figure 8 shows a steady accumulation of citations for SAJLIS articles from 2012 to 2017. Logically, citations are the highest for 2012 and lowest for 2017 as there is a 2-3-year 'gestation period' for the generation of citations for published research.20 Citations of SAJLIS articles follow a similar pattern to that of usage and capture counts (see Figures 5 and 6), with 2013 and 2015 showing spikes relative to 2014 and 2016. This pattern supports views and downloads as an indicator of future citations.30 Accordingly, Figure 8 shows that, in 2014, there was a small decline in citations due to fewer articles covering top trends compared to 2013 and 2015 (as pointed out earlier). Harnad and Brody6 state that access is not a sufficient condition for citation, but it is a necessary one. Google Scholar analytics show that, within 3 months of publication, an article published in SAJLIS in the second half of 2017 had already generated citations, thus demonstrating that openness and online availability improve accessibility which increases the chances of citation. It is understood that discoverability must precede accessibility; this suggests that openness improves discoverability. It is acknowledged that Google Scholar citations include both scholarly and grey literature; however, citation or use of articles would not be possible if the articles were not discoverable and accessible. Harnad and Brody6, among others12,13, demonstrate that open access articles can have an advantage compared to non-open access articles when it comes to citation counts.

The patterns from the views, downloads, PlumX metrics (article level usage, capture, social media measures) and article citations are indicative that SAJLIS content is discoverable and accessible globally, thus promoting its reach and impact.

Improvement

Notwithstanding these positives relating to discoverability and accessibility in SAJLIS's 'open era', as pointed out by Melnyk et al.7, metrics also serve to identify gaps and 'point the way for intervention and improvement'7, and these are identified here for SAJLIS for the next 5 years. While findings indicate that open access and an online presence have extended the reach of SAJLIS (see Table 1 and Figure 1) beyond South Africa into the African continent and even to other parts of the world, this growth trajectory has potential for further global expansion. This potential applies both geographically and across the theory and practice divide for further reach and impact of LIS scholarly endeavours as well as LIS policy and professional practice. In pursuing this growth trajectory, SAJLIS would need to continue to give attention to the academic rigour of its peer review process and promote quality of scholarship published in order to further increase its registration of authors, reviewers, readers and other users (see Table 1).

While Figures 3 to 7 demonstrate healthy indicators of discoverability and accessibility (views, downloads, usage, capture and social media measures) of SAJLIS and Figure 8 presents citation numbers that reflect use of the LIS scholarship published, there is room for improvement to increase publishing activity. Figures 5 and 6, as well as Figure 8, show that article usage (views and downloads), captures (bookmarking and saves) as well as article citations (use of research) are influenced by the topic of published research.28,29 Bazrafshan et al.21 too discovered in their study that articles on topical areas were downloaded more often than others. Based on this observation, a possible intervention for SAJLIS would be to supplement its two issues per year with special issues themed around top LIS trends, for greater discoverability and accessibility through the open access format and online tools and environments discussed in this paper. Such expansion would lead to greater use of the scholarship SAJLIS carries through citations and subsequent impact on LIS and related research, policy and professional practice.

In this quest for further growth, SAJLIS also needs to explore advancing software delivery platforms that promote greater discoverability and accessibility through views, downloads, captures, social media measures, and other newer forms of user engagement, as the latter are often precursors to future citations30 and research use.

As observed from the literature reviewed, open access alone does not lead to better journal performance. Hence, further promoting discoverability and accessibility of SAJLIS content through, for example, greater social media presence; expanding the reach of the Journal to further increase submissions and subsequent publishing of more articles, especially on topical issues in the LIS discipline; and promoting the reputation of the Journal based on its academic rigour, are ways of affording SAJLIS the opportunity to improve its performance further. Such improved performance in publishing activity (quantity) and quality will stand the Journal in good stead in possible future applications for Scopus and Web of Science index listings.

Conclusion and recommendation

We have reported on the use of multiple metrics (as advised by Harnad1) as an objective means of determining the discoverability and accessibility of LIS scholarship via the South African Journal of Libraries and Information Science (SAJLIS) in its open years (2012-2017). The inquiry was guided by the core metric functions of control, communication and improvement as identified by Melnyk et al.7 in relation to performance metrics theory. The findings highlight performance strengths of SAJLIS in terms of discoverability and accessibility of the scholarship it conveys. Despite SAJLIS being a 'small' journal title communicating scholarship for a small discipline (LIS), its performance strengths highlighted in this study are commensurate with its 2018 ASSAf peer review evaluation resulting in its continued accreditation by the DHET for author subsidy earning purposes and its selection for inclusion in the SciELO South Africa list of accredited journals. This study also highlights growth areas for SAJLIS for its strategic planning for the next 5 years. This inquiry focused on objective evaluation of the discoverability and accessibility performance of SAJLIS using multiple metrics (data mining on OJS, webometrics, altmetrics and citation analysis). It is therefore recommended that, for a more complete picture of performance strengths and areas for improvement, future enquiries could also target subjective evaluation of, for example, the SAJLIS peer review process, quality and diversity of the editorial board, and editor profile. However, as cautioned by Harnad1, this subjective evaluation too requires 'multiple tests' to achieve 'face-validity' necessary in research.

Authors' contributions

J.R.: Conceptualisation, formulation of overarching research aims, compiling the paper, theory integration and write-up, methodology write-up. A.M.: Data collection, data analysis and write-up of analysis. M.K.: Crafting the literature review and formatting the paper for submission. R.R.: Data collection, data analysis and write-up of analysis.

References

1. Harnad S. Validating research performance metrics against peer rankings. Ethics Sci Environ Polit. 2008;8:103-107. https://doi.org/10.3354/esep00088

2. Walker C. Brief history of the journal [homepage on the Internet]. c2014 [cited 2018 Sep 20]. Available from: http://sajlis.journals.ac.za/pub/about/editorialPolicies#custom-1

3. Ulrichsweb. Title details [homepage on the Internet]. c2018 [cited 2018 Oct 04]. Available from: http://ulrichsweb.serialssolutions.com/title/1538648430754/467586

4. South African Journal of Libraries and Information Science. Focus and scope [homepage on the Internet]. c2018 [cited 2018 Sep 20]. Available from: http://sajlis.journals.ac.za/pub/about/editorialPolicies#focusAndScope

5. Lynch CA. Institutional repositories: Essential infrastructure for scholarship in the digital age. Portal-Libr Acad. 2003;3(2):327-336. http://dx.doi.org/10.1353/pla.2003.0039

6. Harnad S, Brody T. Comparing the impact of open access (OA) vs. non-OA articles in the same journals. D-Lib. 2004;10(6). https://doi.org/10.1045/june2004-harnad

7. Melnyk SA, Stewart DM, Swink M. Metrics and performance measurement in operations management: Dealing with the metrics maze. J Oper Manag. 2004;22:209-217. https://doi.org/10.1016/j.jom.2004.01.004

8. Roemer RC, Borchardt R. Meaningful metrics: A 21st century librarian's guide to bibliometrics, altmetrics, and research impact. Chicago, IL: Association of College and Research Libraries; 2015.

9. Schimmer R, Geschuhn KK, Vogler A. Disrupting the subscription journals' business model for the necessary large-scale transformation to open access. A Max Planck Digital Library Open Access Policy White Paper; 2015. https://doi.org/10.17617/1.3

10. Sotudeh H, Horri A. The citation performance of open access journals: A disciplinary investigation of citation distribution models. J Am Soc Inf Sci Technol. 2007;58(13):2145-2156. https://doi.org/10.1002/asi.20676

11. Mukherjee B. Do open-access journals in Library and Information Science have any scholarly impact? A bibliometric study of selected open-access journals using Google Scholar. J Am Soc Inf Sci Technol. 2009;60(3):581-594. https://doi.org/10.1002/asi.21003

12. Tang M, Bever JD, Yu F-H. Open access increases citations of papers in Ecology. Ecosphere. 2017;8(7), e01887, 9 pages. https://doi.org/10.1002/ecs2.1887

13. Atchison A, Bull J. Will open access get me cited? An analysis of the efficacy of open access publishing in Political Science. PS Polit Sci Polit. 2015;48(1):129-137. https://doi.org/10.1017/S1049096514001668

14. Fukuzawa N. Characteristics of papers published in journals: An analysis of open access journals, country of publication, and languages used. Scientometrics. 2017;112:1007-1023. https://doi.org/10.1007/s11192-017-2414-y

15. Kurtz MJ, Henneken EA. Measuring metrics: A 40-year longitudinal cross-validation of citations, downloads, and peer review in astrophysics. J Am Soc Inf Sci Technol. 2017;68(3):695-708. https://doi.org/10.1002/asi.23689

16. Coughlin DM, Jansen BJ. Modeling journal bibliometrics to predict downloads and inform purchase decisions at university research libraries. J Am Soc Inf Sci Technol. 2016;64(9):2263-2273. https://doi.org/10.1002/asi.23549

17. Huang W, Wang P, Wu Q. A correlation comparison between Altmetric Attention Scores and citations for six PLOS journals. PLoS ONE. 2018;13(4), e0194962, 15 pages. https://doi.org/10.1371/journal.pone.0194962

18. Onyancha OB. Altmetrics of South African journals: Implications for scholarly impact of South African research. Pub Res Q. 2017;33:71-91. https://doi.org/10.1007/s12109-016-9485-0

19. Mintz A, Mograbi E. Political Psychology: Submissions, acceptances, downloads, and citations. Polit Psychol. 2015;36(3):267-275. https://doi.org/10.1111/pops.12277

20. Moed HF, Halevi G. On full text download and citation distributions in scientific-scholarly journals. J Am Soc Inf Sci Technol. 2016;67(2):412-431. https://doi.org/10.1002/asi.23405

21. Bazrafshan A, Haghdoost AA, Zare M. A comparison of downloads, readership and citations data for the Journal of Medical Hypotheses and Ideas. J Med Hypotheses Ideas. 2015;9:1-4. https://doi.org/10.1016/j.jmhi.2014.06.001

22. Holmberg K. Classifying altmetrics by level of impact. In: Salah AA, Tonta Y, Salah AAA, Sugimoto CR, Al U, editors. Proceedings of ISSI 2015: 15th International Conference on Scientometrics & Informetrics; 2015 June 29 - July 03; Istanbul, Turkey. Istanbul: Bogaziçi University Printhouse; 2015.

23. Fischer CC. Editor as good steward of manuscript submissions: 'Culture,' tone, and procedures. J Scholarly Publ. 2004;36(1):34-42.

24. Björk B. Scholarly journal publishing in transition: From restricted to open access. Electron Markets. 2017;27:101-109. https://doi.org/10.1007/s12525-017-0249-2

25. Te EE, Owens F, Lohnash M, Christen-Whitney J, Radsliff Rebmann K. Charting the landscape of open access journals in Library and Information Science. Webology. 2017;14(1):8-20.

26. Nwagwu W, Makhubela S. Status and performance of open access journals in Africa. Mousaion. 2017;35(1):1-27. https://doi.org/10.25159/0027-2639/1262

27. Creswell JW. Research design: Qualitative, quantitative and mixed methods approaches. 4th ed. Los Angeles, CA: Sage; 2014.

28. Association of College and Research Libraries (ACRL) Research Planning and Review Committee. 2018 Top trends in academic libraries: A review of the trends and issues affecting academic libraries in higher education. C&RL News. 2018;79(6):286. https://doi.org/10.5860/crln.79.6.286

29. International Federation of Library Associations and Institutions (IFLA). IFLA trend report [homepage on the Internet]. c2018 [cited 2018 Sep 25]. Available from: https://trends.ifla.org/

30. Plum Analytics. PlumX metrics [homepage on the Internet]. c2018 [cited 2018 Sep 25]. Available from: https://plumanalytics.com/learn/about-metrics/

31. Matomo. Frequently asked questions [homepage on the Internet]. c2018 [cited 2018 Oct 01]. Available from: https://matomo.org/faq/

32. Gargouri Y, Hajjem C, Larivière V, Gingras Y, Carr L, Brody T, et al. Self-selected or mandated, open access increases citation impact for higher quality research. PLoS ONE. 2010;5(10), e13636, 12 pages. https://doi.org/10.1371/journal.pone.0013636

33. Czerniewicz L, Goodier S. Open access in South Africa: A case study and reflections. S Afr J Sci. 2014;110(9-10), Art. #20140111, 9 pages. https://doi.org/10.1590/sajs.2014/20140111

34. Walton J. Twitter vs. Facebook vs. Instagram: Who is the target audience? (TWTR, FB) [homepage on the Internet]. c2015 [cited 2018 Sep 25]. Available from: https://www.investopedia.com/articles/markets/100215/twitter-vs-facebook-vs-instagram-who-target-audience.asp

Correspondence:

Jaya Raju

Email: Jaya.Raju@uct.ac.za

Received: 11 Dec. 2018

Revised: 05 Mar. 2019

Accepted: 05 Mar. 2019

Published: 30 July 2019

EDITORS: John Butler-Adam, Maitumeleng Nthontho

FUNDING: None