South African Journal of Science

On-line version ISSN 1996-7489

Print version ISSN 0038-2353

S. Afr. j. sci. vol.111 n.3-4 Pretoria Mar./Apr. 2015

http://dx.doi.org/10.17159/sajs.2015/20130358

RESEARCH ARTICLE

An assessment of South Africa's research journals: impact factors, Eigenfactors and structure of editorial boards

Androniki E.M. Pouris (I); Anastassios Pouris (II)

(I) Faculty of Science, Tshwane University of Technology, Pretoria, South Africa

(II) Institute for Technological Innovation, University of Pretoria, Pretoria, South Africa

ABSTRACT

Scientific journals play an important role in academic information exchange and their assessment is of interest to science authorities, editors and researchers. The assessment of journals is of particular interest to South African authorities as the country's universities are partially funded according to the number of publications they produce in accredited journals, such as the Thomson Reuters indexed journals. Scientific publishing in South Africa has experienced a revolution during the last 10 years. Our objective here is to report the performance of the country's journals during 2009 and 2010 according to a number of metrics (i.e. impact factors, Eigenfactors® and the international character of editorial boards); to identify and compare the impact of the South African journals that have been recently added to the Thomson Reuters' Journal Citation Reports®; and to elaborate on issues related to science policy.

Keywords: Journal Citation Reports; journal performance; South Africa; citation analysis

Introduction

Journals are the main vehicle for scholarly communication within the academic community. As such, assessments of journals are of interest to a number of stakeholders from scientists and librarians to research administrators, editors, policy analysts and policymakers and for a variety of reasons.

Researchers would like to know where to publish in order to maximise the exposure of their research, and what to read in order to keep abreast of developments in their fields within the time constraints they face. Librarians would like to keep available the most reputed journals within their budget constraints. Research administrators use journal assessments in their evaluations of academics for recruitment, promotion and funding reasons. Editors are interested to know the relative performance of their journal in comparison with competitor journals. Finally, policymakers have to monitor the quality of journals as they use published articles as indicators of success of the research system, for identification of priorities, for funding and other similar reasons.

In South Africa, the assessment of scholarly and scientific journals is of particular importance and the subject of policy debate. The importance of the issue arises from the fact that higher education institutions receive financial support from the government for their research activities. Financial support is received by these institutions according to the number of publications produced and published in predetermined journals by their staff members.

Two approaches are used for the comparison and assessment of journals - expert opinion [1] and citation analysis [2]. In expert opinion assessments, experts such as well-known researchers and deans of faculties are asked to assess particular journals. Subsequently, the collected opinions are aggregated and a relative statement can be made. The approach suffers from the normal deficiencies of peer review. Would the opinions remain the same if the experts were different? How can an astronomer assess a plant science journal? Are there unbiased researchers in scientifically small countries [3]? And so on.

The second approach uses citation analysis to rank journals. Citations are the formal acknowledgement of intellectual debt to previously published research. The impact factor of a journal is a measure of the frequency with which the average article in that journal has been cited in a particular year. Despite the continuous debate related to the validity of Garfield's journal impact factor for the identification of the journals' standing [4,5], citation analysis has prevailed historically.

It should be mentioned that the availability of journal impact factors from Thomson Reuters means that the journals have been assessed by Thomson Reuters' own experts and meet its criteria for inclusion in the citation indices. Such criteria include the availability of an adequate number of articles to the journal, publication on time (timeliness) and peer review of the articles submitted to the journal. A high impact factor means not only that the journal has qualified for inclusion in the indices, but also that researchers often cite its articles.

Scientific publishing in South Africa has experienced a revolution during the last 10 years. In 2000, the South African government terminated its direct financial support to research journals [6], and only the South African Journal of Science and Water SA continued to receive financial support. An investigation in 2005 [6] showed that the termination of government involvement in the affairs of the journals had on average a beneficial effect on the impact factors of the journals.

During 2006, the Academy of Science of South Africa (ASSAf), at the request of the Department of Science and Technology (DST), produced a new strategic framework for South Africa's research journals. This strategic framework recommends, among others, the periodic peer review of the country's journals and a change in the publishing approach, i.e. a move into an open-access system. Finally, during 2008, Thomson Reuters increased substantially the coverage of South African journals. The number of journals indexed in the Science Citation Index increased from 17 in 2002 to 29 in 2009 - an increase of 70%. The coverage of social sciences journals in the Social Sciences Citation Index showed an even more substantial increase: from 4 in 2002 to 16 in 2009 - a fourfold rise (a 300% increase).

In this article, we aim to answer two questions related to South African research journals. Firstly, we compare the performance of the journals indexed in Thomson Reuters' Journal Citation Reports® (JCR) during 2002 with their performance during 2009 and 2010. The year 2002 was chosen as it is the time after the government terminated financial support to what used to be called 'national journals'. The years 2009 and 2010 were decided upon by the availability of relevant data. Secondly, we identify whether the newly added South African journals in the JCR are of similar quality to the pre-existing journals. Both findings are of policy interest. The relevance of the investigation is emphasised by the fact that South African researchers rarely publish research on the assessment of scientific journals. A search in the citation indices (excluding the authors of this article) identified only three relevant articles, each focusing on specific disciplinary journals [7-9].

Ten years of change in scientific publishing in South Africa

During 2000, the South African government, based on a relevant investigation [10], terminated the financial support of the country's scientific journals. Comparing the journals' performance during 1996 (before the termination of funding) and during 2002, it was stated that [6]:

The South African journals are identified as performing better without government interference imposed by the constraints attached to financial support offered to them. The impact factors of six out of the eight journals that received financial support were higher in 2002 than in 1996. Evidently the editors and the editorial boards have been able to support their journals better without the interference of the bureaucracy.

During 2006, ASSAf published the results of its investigation related to research publishing in South Africa [11]. This publication reported on the country's research publishing profile, the availability and practices of local research journals, and the global e-research trends and their implications for South Africa, and advanced a number of recommendations. The recommendations included the adoption of best practice by editors and publishers in the country, the undertaking of an external peer review and quality audit of all research journals in 5-year cycles, and the adoption of an open-access publishing model enhancing the visibility and accessibility of the country's research.

In the above context, ASSAf, with the support of the DST, established a forum of editors of national scholarly journals - the National Scholarly Editors' Forum (NSEF). The NSEF, through annual meetings, has reached the following decisions: (1) a mandate was given to sustain the NSEF as a consultative and advisory body managed by ASSAf, and terms of reference for the NSEF were drafted and adopted; (2) a National Code of Best Practice in Editorial Discretion and Peer Review [12] was drafted and adopted; and (3) a mandate was given for the envisaged quality assurance regime of ASSAf, based on peer review of discipline-grouped journal titles. The NSEF also held plenary consultations on topics such as copyright issues, open access conversion, open-source software, and economies of scale in publishing logistics.

ASSAf, following on its recommendations, initiated an external peer review and associated quality audit of all South African research journals in 5-year cycles. The panels carrying out the reviews comprise six to eight experts, at least half of whom are not directly drawn from the disciplinary areas concerned. The reviews focus on: the quality of editorial and review processes; fitness of purpose; positioning in the global cycle of new and old journals listed and indexed in databases; financial sustainability and scope and size issues.

The approach comprises a detailed questionnaire which is sent to the editors, independent peer review of the journals in terms of content and a panel meeting to review these materials and all other available evidence in order to make appropriate findings and recommendations. The reports with recommendations are considered by the ASSAf Committee on Scholarly Publishing and are released to the publishers and editors of the journals concerned as well as other relevant stakeholders and the public.

In addition, an open-source software-based system called Scientific Electronic Library Online (SciELO) has been adopted in South Africa. A pilot site for the SciELO SA, initially on the SciELO Brazil site, has been established and has been live since 1 June 2009. A number of training visits took place between South Africa and Brazil. During 2010 the SciELO platform was successfully installed on the ASSAf infrastructure.

Currently, 51 journals are available on this open-access platform. It is expected that almost 200 South African journals will eventually be available on the platform.

In 2008, Thomson Reuters added 700 regional journals to the Web of Science. According to a press announcement of Thomson Reuters [13], 'The newly identified collection contains journals that typically target a regional rather than international audience by approaching subjects from a local perspective or focusing on particular topics of regional interest'. The press release emphasised:

Although selection criteria for a regional journal are fundamentally the same as for an international journal, the importance of the regional journal is measured in terms of the specificity of its content rather than in its citation impact.

The criteria used for selection include publication on time, English language bibliographic information (title, abstract, keywords) and cited references in the Roman alphabet.

The number of South African journals increased by 19 in this intake. Other countries that experienced a large increase in the number of journals indexed were Brazil, with 132 additional journals, Australia with 52, Germany with 50, Chile with 45, Spain with 44, and Poland and Mexico each with 43 additional journals. From the African continent, Nigeria's and Kenya's collections each increased by one journal.

Methodology

Assessment and comparison of journals according to their impact factors is a well-established approach in scientometrics [14,15], despite its limitations and shortcomings [16]. The impact factor of a journal is defined as the number of citations received in the year of observation by articles that the journal published in the previous 2 years, divided by the number of articles the journal published in those 2 years. Today, the journal impact factor, as estimated by Thomson Reuters in the JCR, is one of the most important indicators in evaluative bibliometrics [17]. It is used internationally by the scientific community for journal assessments, research grants, academic subsidy purposes, for hiring and promotion decisions, and others [18,19].
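The definition above can be written compactly as follows. The symbols are our own shorthand rather than notation used in the JCR: $C_{y}(k)$ denotes the citations received in census year $y$ by articles the journal published in year $k$, and $N_{k}$ denotes the number of articles the journal published in year $k$.

```latex
\mathrm{IF}_{y} = \frac{C_{y}(y-1) + C_{y}(y-2)}{N_{y-1} + N_{y-2}}
```

For example, under these assumptions a journal that published 40 articles over the two preceding years and received 30 citations to them in the census year would have an impact factor of 30/40 = 0.75.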

More recently, the Eigenfactor® approach has been developed and used for journal assessment [20,21]. The Eigenfactor ranking system is also based on citations but it also accounts for differences in prestige among citing journals. Hence, citations from Nature or Cell are valued highly relative to citations from third-tier journals with narrower readership. Another difference is that whilst the impact factor of a journal has a 1-year census period and uses the previous 2 years for the target window, the Eigenfactor metrics have a 1-year census period and use the previous 5 years for the target window.
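To illustrate the idea that a citation counts for more when it comes from a highly ranked journal, the following minimal sketch runs a PageRank-style power iteration on a small journal-to-journal citation matrix. It is not the published Eigenfactor algorithm: the function name `prestige_scores`, the damping value of 0.85, the uniform redistribution step and the three-journal matrix are all assumptions chosen for illustration.

```python
def prestige_scores(citations, damping=0.85, iterations=100):
    """Simplified prestige-weighted ranking (illustrative only).

    citations[i][j] is the number of citations from journal j to journal i.
    Returns one score per journal; the scores sum to approximately 1.
    """
    n = len(citations)
    # Ignore journal self-citations.
    matrix = [
        [0.0 if i == j else float(citations[i][j]) for j in range(n)]
        for i in range(n)
    ]
    # Total outgoing citations of each citing journal (column sums).
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]

    scores = [1.0 / n] * n
    for _ in range(iterations):
        updated = []
        for i in range(n):
            flow = 0.0
            for j in range(n):
                if col_sums[j] > 0:
                    # Journal j passes on its score in proportion to whom it cites.
                    flow += matrix[i][j] / col_sums[j] * scores[j]
                else:
                    # A journal that cites nobody spreads its score evenly.
                    flow += scores[j] / n
            updated.append(damping * flow + (1.0 - damping) / n)
        scores = updated
    return scores


# Hypothetical citation counts for three journals (not data from this study).
example_citations = [
    [7, 10, 10],  # journal 0 is cited by journals 1 and 2 (and by itself)
    [5, 0, 0],    # journal 1 is cited only by journal 0
    [5, 0, 0],    # journal 2 is cited only by journal 0
]
example_scores = prestige_scores(example_citations)
```

In this toy matrix, journal 0 receives the highest score, and journals 1 and 2 tie because they are cited identically. The real Eigenfactor calculation uses a 5-year target window and further adjustments that this sketch omits.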

An additional indicator that we use to assess journals is the internationalisation of the editorial boards of the country's journals as it is manifested in the number of foreign academics in the journals' editorial boards. The composition of the editorial boards is an indicator of the internationalisation of the journal. It should be clarified that the editorial boards are both indicators of quality and inputs in the process of publishing a journal. For example, researchers are selective on how they spend their time and they would prefer to be associated with 'top' journals. On the other hand, international researchers introduce standards and approaches in the peer review of the articles that may improve the relevant journals.
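As a minimal sketch of how this indicator could be tabulated, the function below counts board members whose affiliation lies outside the journal's home country. The function name `foreign_share`, the use of a country string per member and the example board are assumptions for illustration, not the procedure or data used in this study.

```python
def foreign_share(member_countries, home_country="South Africa"):
    """Return (number of foreign members, their share of the board)."""
    if not member_countries:
        # An empty board has no foreign members and an undefined share; report 0.
        return 0, 0.0
    foreign = sum(1 for country in member_countries if country != home_country)
    return foreign, foreign / len(member_countries)


# Hypothetical editorial board (not taken from Table 7).
example_board = ["South Africa", "South Africa", "Kenya", "United Kingdom"]
example_count, example_ratio = foreign_share(example_board)
```

Reporting both the count and the share is a design choice: the count matches the way the indicator is described above, whereas the share allows boards of different sizes to be compared.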

The performance of South African journals

Table 1 shows the impact factors, the quartiles and the scientific categories of the South African journals covered by the JCR during 2002 for that year and for 2009 and 2010. The ranking into quartiles has been undertaken in order to take into consideration the variation in citations among the various scientific disciplines. Of the 17 South African journals in JCR, 4 journals declined in terms of quartiles from 2002 to 2010. Only one journal - the African Journal of Marine Science - improved its performance and moved from the third quartile to the second quartile. The South African Journal of Geology moved definitively into the second quartile in the list of the relevant disciplinary journals during 2009, while it was exactly in the middle of the second and third quartiles during 2002. During 2010 this journal moved into the fourth quartile. The journal's impact factor dropped from 1.013 during 2009 to 0.638 during 2010.
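The quartile placement discussed above can be sketched as follows, assuming that journals in a category are ranked by descending impact factor and that the ranked list is split into four equal parts. The function name `quartile` and the tie-free ranking are assumptions; the exact convention used in the JCR may differ.

```python
def quartile(rank, total):
    """Return the quartile (1 = top, 4 = bottom) of a journal.

    rank  -- position of the journal in its category, 1 being the highest
             impact factor
    total -- number of journals in the category
    """
    if not 1 <= rank <= total:
        raise ValueError("rank must lie between 1 and total")
    # Integer form of ceil(4 * rank / total).
    return (4 * rank + total - 1) // total
```

For instance, in a hypothetical category of 100 journals, ranks 1 to 25 fall in the first quartile and rank 26 falls in the second. Working with quartiles rather than raw impact factors is what allows journals from disciplines with different citation habits to be compared.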

Examination of the impact factors indicates that 12 journals increased their impact factors. However, these increases were insufficient to move them into higher quartiles. It seems that, as in the domain of institutions [22], in the domain of journals it has become more and more difficult to compete internationally. It is interesting also to note that the South African Journal of Science - the country's flagship journal - exhibited a substantial drop in its impact factor and its position among the multidisciplinary journals internationally during 2009. However, it recovered during 2010 and it was part of the second quartile journals. It can be argued that this variability is the result of changes in the management structure of the journal. Prior to 2009, the journal had a full-time editor and from 2009 moved into a model with a part-time editor assisted by an editorial board.

Table 2 shows the impact factors, the quartiles and the scientific categories of the South African journals newly added to JCR. With the exception of African Invertebrates, which is positioned in the second quartile of the relevant journals with an impact factor of 1.216, all other journals fall within the fourth quartile of their categories.

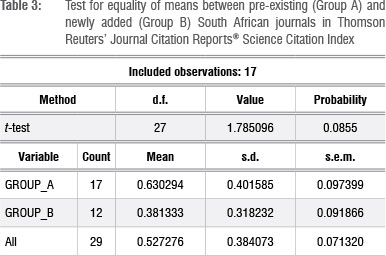

In order to compare the quality of the pre-existing journals with that of the journals new to Web of Science, as it is manifested in their impact factors, we undertook a two-sample t-test. In this test, two sample means are compared to discover whether they come from the same population (meaning there is no difference between the two population means). The null hypothesis is that the means of the two samples are the same (i.e. they come from the same population). The alternative hypothesis is that the two means are statistically different (i.e. they come from different populations).

Table 3 shows the results of the test of equality of means of the two series: t=1.785 and p=0.0855. As the p-value is greater than the 0.05 level of significance, we cannot reject the null hypothesis that the two sets of journals come from the same population.
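For readers who wish to reproduce this kind of comparison, the sketch below computes a two-sample t statistic from two lists of impact factors. The article does not state which variant of the test was used, so the equal-variance (pooled) form is an assumption, as are the function name `pooled_t_statistic` and the example values, which are not the data behind Table 3.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for an equal-variance two-sample t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each sample needs at least two values")
    df = n_a + n_b - 2
    # Pooled estimate of the common variance (statistics.variance uses n - 1).
    pooled_var = (
        (n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)
    ) / df
    # Standard error of the difference between the two sample means.
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_err, df


# Hypothetical impact factors for two groups of journals (illustration only).
group_a = [1.0, 2.0, 3.0, 4.0, 5.0]
group_b = [2.0, 3.0, 4.0, 5.0, 6.0]
t_value, dof = pooled_t_statistic(group_a, group_b)
```

The two-sided p-value is then read from the t distribution with the returned degrees of freedom, which a statistics package would supply. The null hypothesis of equal means is rejected only when that p-value falls below the chosen level of significance.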

This finding indicates that even though Thomson Reuters stated that 'the importance of the regional journal is measured in terms of the specificity of its content rather than in its citation impact', the newly added South African journals, at least, come from the same population as the journals which were already in JCR. The newly added journals did not have a significantly different impact factor from the original set in the index. The index continues to cover good-quality journals.

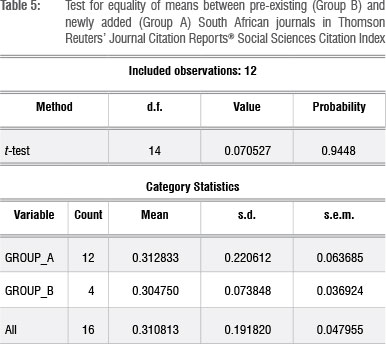

Table 4 shows the South African journals in the JCR Social Sciences Citation Index. The asterisk indicates the journals which were indexed during 2002. South Africa is represented by 16 journals during 2009, whereas only 4 journals were indexed in 2002. Only four journals fall in the third quartile of the lists of the relevant journals in the index; all others fall in the fourth quartile.

We undertook a two-sample t-test to compare the two sets of journals in order to identify whether they were from the same population (Table 5). The results again indicate that all journals were from the same population in terms of their impact factors.

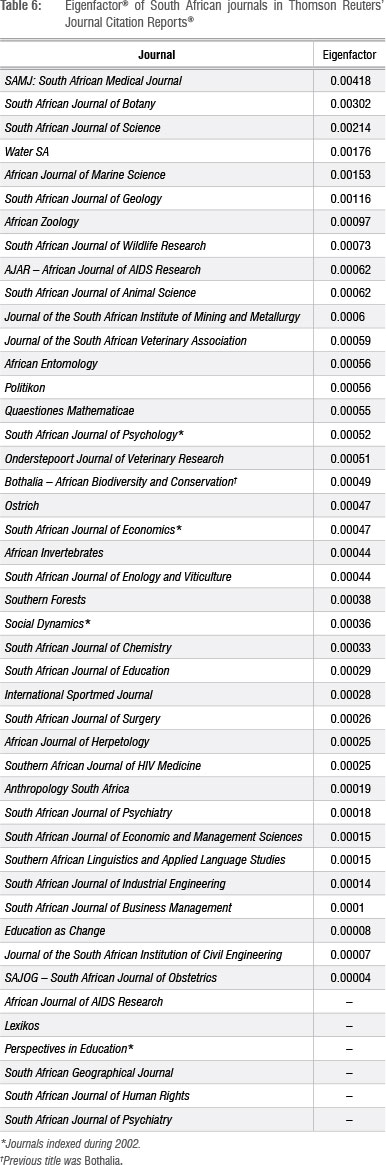

Table 6 shows the Eigenfactors of the South African journals. The SAMJ: South African Medical Journal has the highest Eigenfactor score followed by the South African Journal of Botany and the South African Journal of Science. These journals are cited by highly ranked journals.

Table 7 shows the structure of the editorial boards (as they appear in the relevant journals) of the South African journals. The International Sportmed Journal and South African Journal on Human Rights have more foreign than local academics on their boards. A large number of journals have no foreign academics on their editorial boards. As the structure of the editorial board reveals, at least partially, the international character and the quality of a journal, this situation is a policy concern.

It is interesting to compare the number of South African journals covered by Thomson Reuters with those of some other countries. Table 8 shows the number of journals indexed in the JCR from South Africa and other selected countries. South Africa, with 29 journals in the scientific domain, has more indexed journals than New Zealand, Ireland, Mexico and Israel. In the social sciences, South Africa has even more than countries like Japan and China, which probably is related to language issues. On the African continent, Nigeria follows South Africa with eight and two journals in the sciences and social sciences, respectively.

Analysis of the disciplinary fields covered by the indexed South African journals shows that 13 journals (out of 29) belong in the plant and animal sciences category. Social sciences follows with 7 journals, and education, economics and business, psychology/psychiatry and engineering with 3 journals each. The dominance of plant and animal journals is the result of the country's wealth in flora and fauna resources.

Discussion

We report the results of an investigation aimed at identifying the performance of South African journals indexed by Thomson Reuters' JCR. Inclusion in the citation indices is of importance internationally as an indicator of journal visibility.

In South Africa, inclusion in the citation indices is of particular interest as publications in the indexed journals automatically qualify the country's universities for government subsidy. Universities in South Africa receive government subsidy according to a funding formula in which one of the components is the number of research publications. Universities currently receive more than ZAR120 000 (approximately USD12 000) for each publication that their members of staff and students publish in qualifying journals.

The increase in the number of indexed South African journals during the recent years undoubtedly increases the country's scientific visibility.

A comparison of the journals' performance during 2002, 2009 and 2010 identified a relative deterioration in terms of the impact factor. During the most recent period, the majority of the South African indexed journals belong to the third and fourth quartiles in terms of impact factor. Only three of the scientific journals were in the second quartile. Similarly, all the social sciences journals were in the third and fourth quartiles. Journals in the tail of the Thomson Reuters ranking are at risk of being dropped from the citation indices. Furthermore, as researchers prefer to submit their articles to high-impact journals, the journals in the tail run the danger of not receiving an adequate number of quality articles and hence will either reduce their quality standards or cease to exist.

The identification of the Eigenfactors of the South African journals will provide a valuable benchmark of performance for future investigations as there currently are no historical data of this indicator.

The identification of the structures of the editorial boards emphasises the above findings. The majority of South African journals are dominated by local researchers. As many as 20 journals do not have any foreign researchers or academics on their editorial boards. As international gatekeepers can transmit international standards and practices in the local journals, and because they may increase the prestige of the journal with their presence, the issue should receive attention by the relevant authorities. It should be emphasised that international researchers on editorial boards alleviate the shortcomings of peer review in scientifically small countries like South Africa. It has been argued that in scientifically small countries, a small number of researchers work in the same field, they know each other personally and they are socially tied to each other and to the social community surrounding them. 'When they have to render a verdict on a research proposal or research article these ties impair them from being objective' [3]. While other approaches (such as monitoring comments of peers and increasing the number of members on editorial boards) may be able to alleviate the challenges of biases, the incorporation of international researchers on editorial boards is probably among the most effective approaches.

It is important for the prestige of the country, and of ASSAf, to take appropriate actions to improve the country's journals. Their approach of coupling scientometric assessments with peer reviews can further provide evidence of the validity of the above findings.

The addition of new journals in the indices has implications for the future of science policy and warrants intensive relevant monitoring in all countries that, like South Africa, monitor their science performance using the Thomson Reuters indices. The addition of journals in the indices increased the coverage of the various countries' scientific articles but created discontinuities in the time series data. Similarly, the differentiated coverage of the various disciplines (e.g. social sciences versus engineering) can create changes in the publication profile of the countries. Science authorities should take action to create compatible time series and relevant country scientific profiles.

Finally, it should be mentioned that the addition of South African journals in the citation indices has not adversely affected the country's scientific profile. Even though Thomson Reuters [13] stated that 'the importance of the [inclusion of] regional journal is measured in terms of the specificity of its content rather than in its citation impact', our investigation shows that the newly added journals were of the same quality in terms of impact factor as the pre-existing ones.

Acknowledgements

We thank two anonymous referees for comments on a previous version of the article which was presented at the ISSI2013 conference in Vienna, Austria. Similarly, we thank two anonymous referees of the South African Journal of Science for their comments. All normal caveats apply.

Authors' contributions

Both authors contributed equally to this article.

References

1. Zhou D, Ma J, Turban E, Bolloju N. A fuzzy set approach to the evaluation of journal grades. Fuzzy Set Syst. 2002;131:63-74. http://dx.doi.org/10.1016/S0165-0114(01)00255-X

2. Ren S, Rousseau R. International visibility of Chinese scientific journals. Scientometrics. 2002;53(3):389-405. http://dx.doi.org/10.1023/A:1014877130166

3. Pouris A. Peer review in scientifically small countries. R&D Manage. 1988;18(4):333-340. http://dx.doi.org/10.1111/j.1467-9310.1988.tb00608.x

4. Vanclay KJ. Impact factor: Outdated artefact or stepping-stone to journal certification? Scientometrics. 2012;92(2):211-238. http://dx.doi.org/10.1007/s11192-011-0561-0

5. Bensman JS. The impact factor: Its place in Garfield's thought, in science evaluation, and in library collection management. Scientometrics. 2012;92(2):263-275. http://dx.doi.org/10.1007/s11192-011-0601-9

6. Pouris A. An assessment of the impact and visibility of South African journals. Scientometrics. 2005;62(2):213-222. http://dx.doi.org/10.1007/s11192-005-0015-7

7. Onyacha OB. A citation analysis of sub-Saharan African library and information science journals using Google Scholar. Afr J Libr Arch Info. 2009;19(2):101-116.

8. Nkomo SM. The seductive power of academic journal rankings: Challenges of searching for the otherwise. Acad Manag Learn Educ. 2009;8(1):106-112. http://dx.doi.org/10.5465/AMLE.2009.37012184

9. Brendenkamp CL, Smith GF. Perspectives on botanical research publications in South Africa: an assessment of five local journals from 1988 to 2002, a period of transition and transformation. S Afr J Sci. 2008;104(11-12):473-478.

10. Pouris A, Richter L. Investigation into state funded research journals in South Africa. S Afr J Sci. 2000;96(3):98-104.

11. Academy of Science of South Africa (ASSAf). Report on a strategic approach to research publishing in South Africa. Pretoria: ASSAf; 2006.

12. Academy of Science of South Africa (ASSAf). National code of best practice in editorial discretion and peer review for South African scholarly journals. Pretoria: ASSAf; 2008. Available from: http://www.assaf.co.za/wp-content/uploads/2009/11/National%20Code%20of%20Best%20Practice%20BodyX20Content.pdf

13. Thomson Reuters. The scientific business of Thomson Reuters launches expanded journal coverage in Web of Science by adding 700 regional journals [press release]. c2008 [cited 2008 May 27]. Available from: http://science.thomsonreuters.com/press/2008/

14. Weingart P. Impact of bibliometrics upon the science system: Inadvertent consequences? Scientometrics. 2005;62(1):117-131. http://dx.doi.org/10.1007/s11192-005-0007-7

15. Jennings C. Citation data: The wrong impact? Nat Neurosci. 1998;1(8):641-642. http://dx.doi.org/10.1038/3639

16. Van Raan AFJ. Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics. 2005;62:133-143. http://dx.doi.org/10.1007/s11192-005-0008-6

17. Bornmann L, Neuhaus C, Daniel H-D. The effects of a two stage publication process on the journal impact factor: A case study on the interactive open access journal Atmospheric Chemistry and Physics. Scientometrics. 2011;86(1):93-97. http://dx.doi.org/10.1007/s11192-010-0250-4

18. Andries M, Jokic M. An impact of Croatian journals measured by citation analysis from SCI expanded database in time span 1975-2001. Scientometrics. 2008;75(2):263-288. http://dx.doi.org/10.1007/s11192-007-1858-x

19. Monastersky R. The number that's devouring science. Chron High Educ. 2005;October: A12-A17.

20. Bergstrom C, West JD. Assessing citations with Eigenfactor™ metrics. Neurology. 2008;71:1850-1851. http://dx.doi.org/10.1212/01.wnl.0000338904.37585.66

21. Fersht A. The most influential journals: Impact factor and Eigenfactor. Proc Natl Acad Sci USA. 2009;106(17):6883-6884. http://dx.doi.org/10.1073/pnas.0903307106

22. Pouris A, Pouris A. Competing in a globalising world: International ranking of South African universities. Procedia Soc Behav Sci. 2010;2:515-520. http://dx.doi.org/10.1016/j.sbspro.2010.03.055

Correspondence:

Anastassios Pouris

Institute for Technological Innovation

Engineering Building I

University of Pretoria

Pretoria 0002

South Africa

Email: apouris@icon.co.za

Received: 18 Nov. 2013

Revised: 06 Jan. 2014

Accepted: 08 July 2014