
South African Journal of Childhood Education

On-line version ISSN 2223-7682; Print version ISSN 2223-7674

SAJCE vol.5 n.1 Johannesburg 2015

ARTICLES

Exploring the complexities of describing foundation phase teachers' professional knowledge base

Carol Bertram*; Iben Christiansen; Tabitha Mukeredzi

University of KwaZulu-Natal

ABSTRACT

The purpose of this paper is to engage with the complexities of describing teachers' professional knowledge, and eventually also their learning, through written tests. The broader research aim is to describe what knowledge foundation phase teachers acquired during their two years of study towards the Advanced Certificate of Teaching (ACT). We designed a written test to investigate the professional knowledge that teachers bring with them when they enrol for the ACT, with the aim of comparing their responses to the same test two years later, when they had completed the programme. The questionnaire included questions on teachers' content knowledge; their pedagogical content knowledge (in particular, teachers' knowledge about learner misconceptions, stages of learning, and ways of engaging these in making teaching decisions); and their personal knowledge (such as their beliefs about how children learn and about barriers to learning). It spanned the fields of literacy in English and isiZulu, numeracy, and general pedagogy. Eighty-six foundation phase teachers enrolled for the ACT at the University of KwaZulu-Natal completed the questionnaire, and their responses pointed us to further methodological issues. We discuss the assumptions behind the design of the test/questionnaire, the difficulties in formulating relevant questions, and the problems of 'accessing' specific elements of teacher knowledge through this type of instrument. Our process shows the difficulties both in constructing questions and in coding the responses, in particular concerning the pedagogical content knowledge component for teachers from Grade R to Grade 3.

Keywords: foundation phase teachers, metric, teacher knowledge, professional knowledge, pedagogical content knowledge, elementary teachers

Introduction

This paper engages with the complexities of what we as researchers can claim to know about teachers' knowledge from a pen and paper test. Our purpose is thus to interrogate the methodology of designing a test that can illuminate teachers' professional knowledge base. We draw our data from tests written by a cohort of foundation phase teachers who enrolled for the Advanced Certificate of Teaching (ACT) at the University of KwaZulu-Natal (UKZN) in February 2013. The broader research project aims to describe in some way what knowledge teachers have learned during their two years of study on the ACT for foundation phase teacher education. We designed a questionnaire as one method of data collection to investigate the professional knowledge that teachers bring with them when they enrol for the ACT, with the aim of comparing their responses to the same test two years later, when they had completed the programme.

We start the paper by engaging with the question of what we mean by the concept 'professional knowledge', and to what extent it is possible to measure this through pen and paper tests. We review the literature on testing teacher knowledge and show that there is a strong tradition of this in the United States, primarily within mathematics education, and to some extent also in reading and writing education. In South Africa, there has recently been a spate of tests to ascertain teachers' content knowledge in mathematics and language, but not specifically of foundation phase (elementary school) teacher knowledge. We then move on to the conceptual framework that we used to design a test for foundation phase teachers when they enrolled in the ACT programme. The purpose of the test was to describe their propositional and personal knowledge within the learning areas of mathematics, language and life skills, with a view to analysing how their knowledge shifted over the two years in which they were studying on the ACT programme.

Since the data from the second test are not yet available, we do not detail here the findings about teachers' knowledge base and how it has changed after completion of the two-year ACT programme; this will be the subject of another paper. Rather, we focus on the methodological issues that emerged from the design of the test and describe how we grappled with alternative ways of understanding what teachers know and believe.

Teacher professional knowledge

One of the key defining aspects of a profession is that it has a specialised knowledge base that enables the professionals to perform their work (Abbott 1988). Some writers argue that, if teaching is to be understood as a profession, those who are part of the profession need to define a knowledge base (Taylor & Taylor 2013). This knowledge base has to be specialised, in the sense that it is particular to what teachers (as opposed to social workers, psychologists, lawyers or doctors) need to know. From an ethical perspective, the profession owes it to society to ensure that its members have the requisite knowledge and skill (Reutzel, Dole, Fawson et al 2011). Of course, that teaching is indeed a profession cannot be taken for granted, and Hugo (2012) reminds us that teacher educators are still struggling to establish teaching as a recognisable profession.

The research that describes a knowledge base for teaching stretches back to Shulman's (1986; 1987) first engagements with the domains of knowledge that teachers need. Shulman and his colleagues made a contribution that emphasised the role of content knowledge and pedagogical content knowledge (PCK), at a time when most research focused on the general aspects of teaching (Ball, Thames & Phelps 2008). The domains of knowledge proposed by Shulman are still taken as the basis for much work in the field today. He, however, tended to focus more on the propositional nature of teachers' knowledge ('knowing that') than on procedural knowledge ('knowing how to'). Even PCK is generally understood as propositional knowledge that needs to be made practical, although science education works with the notion of procedural PCK (Olszewski, Neumann & Fischer 2010), and other studies have started to explore the manifestations of PCK in teaching (Maniraho forthcoming).

The debate about the nature of knowing that and knowing how is complex. Suffice it to say that some claim that knowing how is learned in practice, through experience (Cochran-Smith & Lytle 1999), while others believe in articulating theoretical perspectives to obtain a better purchase on practice (see Shalem & Slonimsky 2013 for a discussion). We would place ourselves within the camp that understands knowing how and knowing that to be in some ways inseparable (Muller 2014; Winch 2014). On the one hand, we argue that in a professional practice like teaching, practical knowledge or knowing how benefits when inferences are made from a systematic body of knowledge, not only from everyday experiences. In other words, knowing how is 'stronger' when it is underpinned by specific knowing that (Muller 2012), and both 'diagnosing' situations with useful discriminations and choosing an appropriate response benefit from drawing on knowing that (Shalem & Slonimsky 2013). This knowing that of teaching contains academic as well as diagnostic classifications. On the other hand, knowing that implies knowledge of the particular inferential relationships that are accepted within the given domain of knowledge (Winch 2012; 2014). This is often learned as tacit knowledge or co-learning (Andersen 2000) through participation in practice, but can be articulated if need be. It is a different kind of knowing how, because it is not so much about what to do in practice as about knowing what counts as legitimate practice. In that sense, it becomes linked to legitimation codes (Carvalho, Dong & Maton 2009), the regulative discourse, or what in mathematics education has been called 'socio-mathematical norms' (Yackel & Cobb 1996). It is reflected not just in what can be said, but in how it can be said: "[...] the crux of professional knowledge lies in specialised 'practice language' [...] which constitutes criteria for seeing distinctions and relations in the particulars of practice" (Shalem & Slonimsky 2013:70).

Professional knowledge cannot be propositional knowledge alone; such knowledge must be used to make professional judgements, or to take what Muller (2012) describes as 'intelligent action', where the professional draws on the generalised 'conceptual pile' to unpack or engage a particular instance. Thus, when a particular child is struggling to understand how time is measured in the Western world, the teacher can draw on her own historical understanding and use appropriate analogies and resources to deepen the child's understanding; or the mathematics teacher can use her knowledge of different stages of geometrical understanding¹ to distinguish between learners who operate with a relational notion of 'triangle' and learners who operate with a visual recognition notion only.

So, starting with the assumption that professional practice is ideally informed by specialised knowledge, what constitutes that specialised knowledge for teachers? There are a number of authors who narrow down a knowledge base for teachers to a few key components. For example, Taylor and Taylor (2013) describe professional knowledge as comprising three elements: disciplinary knowledge, subject knowledge for teaching (Shulman's pedagogical content knowledge (PCK) and curriculum knowledge), and classroom competence. Building on Shulman's work and their own extensive empirical work in mathematics education, Ball et al (2008) argue that teachers need a particular type of disciplinary knowledge, over and above pedagogical content knowledge, and have proposed sub-categories of subject knowledge for teaching, such as common content knowledge, specialised content knowledge and horizon content knowledge.

Others have proposed a stronger focus on a reflective practice dimension of teaching, which may rely on substantive or personal knowledge (see discussion in Hostetler 2014). With roots going back to Bertrand Russell's distinction between knowledge by acquaintance and knowledge by description (Russell 1910), one can include a personal knowledge dimension, which would include what is experienced as knowledge by the cognising individual, but which others may label 'beliefs' or 'dispositions'. For teachers, in particular, this would include personal narratives and beliefs about what learning is, what teaching is, what, for example, numeracy and literacy are, and a sense of their own competency, but also about acceptable forms of conduct (the regulative discourse) and what a good society would be (Boltanski & Thévenot 2006). In mathematics education, research on teachers' beliefs and links to their classroom practice has received much attention, but has been challenged recently for its individualistic assumptions. For instance, Skott (2013) has argued for seeing teachers' practices as a result of constant 'negotiation' amongst past and current communities of practice.

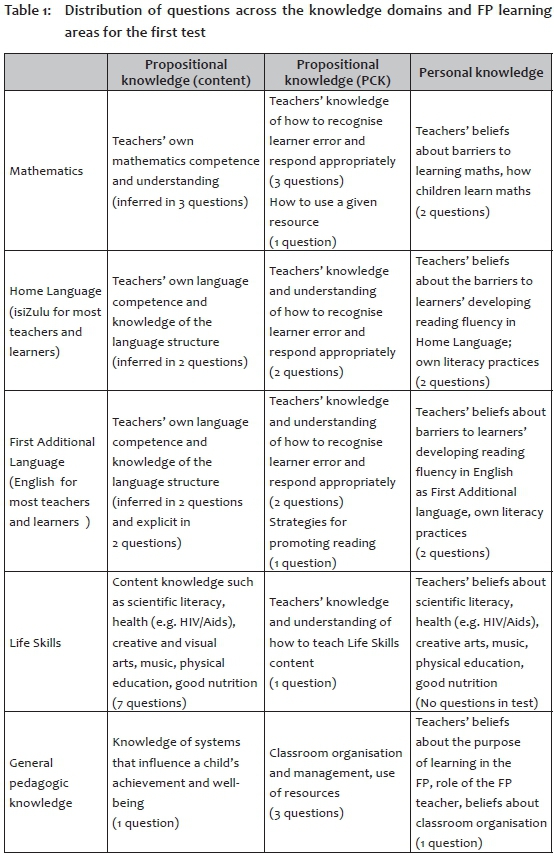

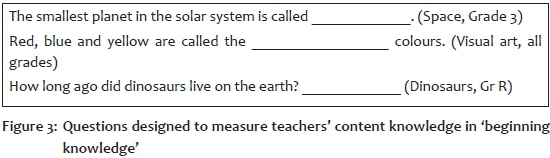

While there are certainly overlaps in the descriptions of domains of teacher knowledge, there is still not one framework that is accepted by all in the profession, and "the differing frameworks constitute clear evidence of the elusiveness and complexity of specifying adequately the nature of the knowledge teachers need to teach effectively" (Reutzel et al 2011). Nevertheless, we need to move forward in the field, and our choice for this study was to use the three domains of teacher knowledge that we have described elsewhere: the propositional, the practical and the personal (Christiansen & Bertram 2012). These three overarching domains constituted one dimension of our instrument (see Table 1), while learning areas taught in foundation phase constituted the other.

Teacher knowledge in South Africa

A number of recent studies, drawing on the Southern and Eastern Africa Consortium for Monitoring Educational Quality (SACMEQ) III data, report on the state of teachers' content knowledge in mathematics and their competence in reading and comprehending English texts (Taylor & Taylor 2013). A study by Carnoy and Chisholm (2008) aimed to measure both the content knowledge and the PCK of Grade 6 mathematics teachers; this study was replicated in the province of KwaZulu-Natal (KZN) and later in Rwanda, but with more detailed and specific categorisations under PCK (Maniraho forthcoming; Ramdhany 2010). The findings showed that teachers generally had low content and pedagogical content knowledge. Several studies have reported on teachers' scores on content knowledge tests, such as a standardised reading or numeracy test, across the SACMEQ countries (Makuwa 2011; RSA DBE 2010), but rarely for the foundation phase, and rarely spanning professional knowledge for teaching, which goes beyond content knowledge.

There does not appear to be much research on measuring South African foundation phase teachers' knowledge through written tests. However, there is a growing body of studies that focus on the practices of foundation phase teachers (Aploon-Zokufa 2013; Ensor, Hoadley, Jacklin et al 2009; Venkat & Askew 2012). With the Numeracy Chairs at Rhodes and Wits Universities, work on foundation phase mathematics teaching is developing (for example, Abdulhamid & Venkat 2014; Graven, Venkat, Westaway & Tshesane 2013), but these are generally small-scale or case studies.

The teacher education policy that replaced the Norms and Standards, the Minimum Requirements for Teacher Education Qualifications (RSA DHET 2011), foregrounds the knowledge needed for teaching and notes that teaching is "premised upon the acquisition, integration and application of different types of knowledge practices or learning" (ibid:10). It gives more emphasis to what is to be learned and how it is to be learned. Thus it appears that teacher knowledge is emerging as important, but at present the main fields that are being researched in South Africa are teachers' content knowledge in relation to mathematics and language. The knowledge base for foundation phase teachers is more elusive, as it comprises three learning areas: numeracy, language (Home Language and First Additional Language) and life skills.

Measuring teacher knowledge

The measuring of teacher knowledge through tests appears to be a robust field in the United States. Since the 1960s there have been various attempts to measure teachers' knowledge of reading and writing instruction (Moats & Foorman 2003; Reutzel et al 2011). These studies have generally focused on the content knowledge that language teachers need to have; for example, reading teachers should have knowledge of the five components of reading, namely phonemic awareness, word recognition, vocabulary, fluency and comprehension.

In mathematics, probably due to the nature of the subject matter, there is a huge body of literature on how to measure teachers' knowledge; for example, the Diagnostic Teacher Assessment of Mathematics and Science (DTAMS), which aims to assess both depth of conceptual knowledge and pedagogical content knowledge (Holmes 2012). Best known is the work by Hill, Ball and their colleagues in the Learning Mathematics for Teaching (LMT) project. They have developed instruments for measuring teachers' mathematical knowledge for teaching (Hill, Schilling & Ball 2004). They claim that their results show that teachers' knowledge for teaching elementary mathematics is multidimensional, both in terms of sub-areas of mathematics and types of teacher knowledge. However, their measures have been challenged for not making it possible to distinguish between content knowledge and knowledge of content and students, and for being unclear about the accumulation of scores (Adler & Patahuddin 2012). An alternative approach has been developed in the German COACTIV project, with a stronger focus on the construction and use of tasks (Krauss, Baumert & Blum 2008). The problem for someone wanting to use these instruments is that they are not entirely compatible: a recent comparison of the LMT, COACTIV and a third framework for measuring teacher knowledge showed that the same item would be classified differently in the different frameworks (Karstein 2014).

A different challenge comes from the work of Beswick and colleagues, who also developed an instrument for large-scale studies of teachers’ mathematical knowledge for teaching (Beswick, Callingham & Watson 2011). Their instrument was an extension of the LMT one, as they included teachers’ confidence and beliefs. Using a Rasch model, they claim to have found that the test items were measuring a single underlying construct, which would counter the multidimensionality proposed by Hill et al (2004). Yet, they characterise four levels of the construct that in some ways reflect the analytical distinction between confidence, content knowledge, general pedagogical knowledge, and PCK. They do not make a distinction between confidence that is strongly knowledge-based and confidence that is less strongly supported by knowledge, but the surprising result that confidence tends to precede content knowledge in their study indicates that they have taken it as personally experienced and not necessarily knowledge-based confidence. In our view, they also have not separated beliefs from knowledge in their study, as reflected in numerous questions asking teachers whether they agree with statements about numeracy in everyday life or about the teaching of mathematics.

Approaches to identifying teacher learning do not always engage these ways of characterising teacher knowledge. For instance, a longitudinal study in Canada summarises teacher learning as “growth in the extent to which teachers:

- Understand the key goals of schooling

- Pursue them in an effective manner

- Assess pupils appropriately

- Make learning relevant

- Master subject content and pedagogy

- Organize their classroom effectively

- Foster a safe, social, and inclusive classroom

- Have a strong sense of professional identity" (Beck & Kosnik 2014).

This list combines declarative, procedural and personal knowledge, and at the same time highlights the normative dimension of this kind of work, as it calls for clarification of what is understood by 'appropriately', 'effective', etcetera.

These studies and their critiques all point to the daunting task of trying to construct a measure of teacher knowledge, in particular one that can be applied at scale. Nonetheless, we set out to do so, in an attempt to construct data that could illuminate teachers' learning in the ACT foundation phase programme. We needed an instrument that could be used with a sample of approximately 100 students.

Constructing the teacher test

In constructing the test, we made a number of assumptions. First, as discussed above, we assumed that teachers' professional knowledge is a complex amalgam of propositional, procedural and personal knowledge. Second, we assumed that a written test is able to reveal at least some aspects of a teacher's propositional and personal knowledge, but not of practical knowledge. The latter assumption does not, however, distinguish between knowing how in practice and knowing how within the relevant discourses (such as recognising what constitutes an argument in the discourse).

In the foundation phase, South African teachers have to have knowledge of mathematics, language and life skills. In each of these learning areas, they need to have in-depth subject knowledge (of both the substantive and the procedural knowledge of the discipline), as well as specialised pedagogical knowledge of how to teach number sense, reading and writing. We thus organised the questions that would provide data on the teachers' propositional and personal knowledge in these different fields. Table 1 maps out the various domains of teacher knowledge that we wanted to measure, with the assumption that propositional knowledge encompasses both content knowledge and PCK, and that practical knowledge cannot be measured through a pen and paper test.

In terms of propositional and personal knowledge related to content knowledge, questions aimed to elicit substantive knowledge of the subject, as well as teachers' beliefs about the importance and nature of the subject and about how learners learn it. Under general pedagogical knowledge, we were looking for teachers' understanding of teaching and assessment strategies, of research-based theories of learning, teaching and assessment, and of strategies developed through experience, as well as their beliefs about learning and teaching. Lastly, in relation to pedagogical content knowledge, we aimed to capture teachers' knowledge about learner errors, good analogies and explanations, and examples and activities, as well as their own experiences of and beliefs about what works well to teach particular concepts.

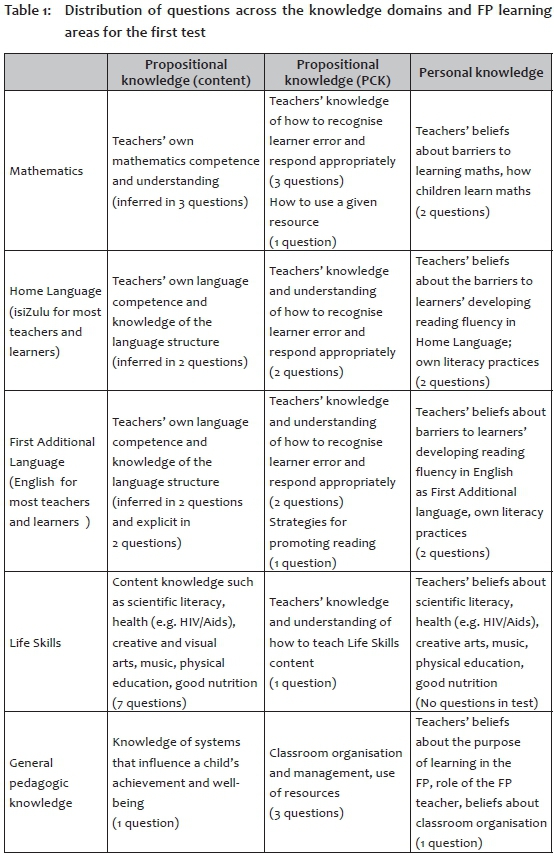

The resulting test had seven biographical background questions (such as the teacher's years of teaching, how many children were in her class, the language of instruction, etcetera). With regard to knowledge in mathematics, we asked two open-ended questions relating to teachers' beliefs about learning mathematics ('What do you think prevents some of your learners from achieving well in mathematics?' and 'Do you think that children need a special talent in order to achieve well in mathematics?'). In order to measure teachers' own mathematical competence and PCK, we asked questions that required teachers to recognise an error made by a learner, to explain the nature of the child's misconception, and to say how they would intervene (see Figure 1).

There were three such questions in the test: one focused on the operation of addition and one on subtraction, while in Question 14 the construct was measuring the length of a pencil. These questions on how teachers might respond to learners' work were intended to engage both subject content knowledge and pedagogical content knowledge. Combining the two in this way was meant both to avoid teachers feeling threatened by content knowledge questions and to limit the number of questions in the test.

The test had one question that engaged teachers' knowledge of how they would use a resource (a picture of women selling vegetables at the market) in a mathematics lesson. The purpose of this question was to elicit PCK.

With regard to language, the test contained two questions that focused on teachers' beliefs about barriers to learning: 'What do you think prevents some of your learners from learning to read fluently and with understanding in their home language? And in their mother tongue?'. Two open-ended questions focused on learners' reading in English and isiZulu, and two questions focused on learners' writing in English and isiZulu. Figure 2 shows the example of the English writing question.

The purpose of this question was to test teachers' understanding of English grammar. It also aimed to elicit their PCK in terms of how they would give feedback and work with learner error.

Two questions tested teachers' English language knowledge. One of these questions asked teachers to circle the word containing both a prefix and a suffix from the following selection: 'underground/ungrateful/disregard'.

In order to focus on general pedagogic knowledge, we constructed six questions on how teachers understood the main purpose of learning in the foundation phase, how a particular classroom arrangement would support learning, and how teachers developed their learners' fine motor skills.

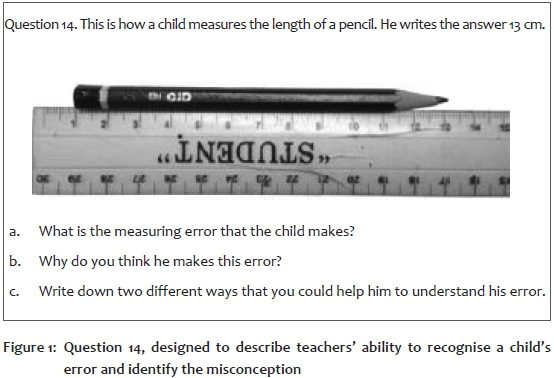

Lastly, there were eight questions focusing on 'beginning knowledge', as it is referred to in the life skills curriculum. Figure 3 provides a sample of these questions.

As we have explained, the questions were designed to generate data from three domains of knowledge (propositional knowledge of content and PCK, and personal knowledge) across the different learning areas of the foundation phase curriculum. There were twenty-nine questions, some with two or three sub-questions. The types of questions are plotted in Table 1 to show how they were distributed. The main threat to validity is the limited number of questions within each cell in our grid of knowledge categories. Most of the questions were developed from similar types of questions that have already been well documented in the literature.² Taking cognisance of the fact that the knowledge categories are not clearly distinguishable (as previously discussed), we chose typical PCK test items that would be included in the most widely recognised definitions (see Depaepe, Verschaffel & Kelchtermans 2013; Karstein 2014; and Ramdhany 2010 for discussions of this for mathematics education).

Analysing the responses

Because some of the questions were open-ended, it was not possible to assign Likert-scale values to the responses; thus we could not use any of the standard measures of reliability, such as Pearson's correlation coefficient or Cronbach's alpha. As the test spanned so many categories, it was also not possible to assess internal consistency by splitting the test into two halves and comparing them (split-half reliability).
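The conventional textbook definition of Cronbach's alpha shows why it presupposes numerical item scores. The formula below is the standard one, not a calculation from this study; the notation is ours: $k$ is the number of items, $\sigma^2_{Y_i}$ the variance of scores on item $i$, and $\sigma^2_X$ the variance of respondents' total scores $X$.

```latex
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} \sigma^{2}_{Y_i}}{\sigma^{2}_{X}}\right),
\qquad X = \sum_{i=1}^{k} Y_i
```

Every term is a variance of numerical scores, so responses that are only assigned qualitative codes (such as 'Lack of resources') supply no item variances to enter into the formula.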

Only nine of the questions (content questions on general or 'beginning knowledge') had responses that could be scored right or wrong. The other questions generated open-ended, qualitative data. All responses were coded inductively by the team of three researchers. Each of us read 10-15 responses to one question, and we generated a range of codes from the responses. Figure 4 provides examples of two responses to Question 2.

Figure 4 depicts the responses of two teachers to the question: 'What do you think prevents some of your learners from achieving well in mathematics?' Both teachers noted that a shortage of learning material is one of the main barriers, while the first teacher also noted that her learners struggle with concepts. We thus generated two codes, 'Lack of resources' and 'Not understanding concepts'. The second teacher's response also indicated another code, one which we saw often, namely that concrete counters/counting are important to learning in mathematics. In this manner, we generated categories for the range of responses to each question. The coded data were entered into SPSS and frequencies calculated for the various responses.
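The step from inductive codes to frequency tables can be sketched in a few lines. This is an illustration only, not the procedure used in the study (which used SPSS). It assumes a hypothetical mapping from teacher IDs to the codes assigned to each answer; the first two entries mirror the Figure 4 responses, the label 'Counters important' is a hypothetical name for the counters code, and the third, blank respondent is invented to show how non-response is counted. Percentages are taken over all respondents, including non-responses, which is only one possible choice of denominator.

```python
from collections import Counter

# Hypothetical coded data for one open-ended question (illustrative only).
# Each respondent may receive several codes; an empty list is a non-response.
coded_responses = {
    "T01": ["Lack of resources", "Not understanding concepts"],
    "T02": ["Lack of resources", "Counters important"],  # hypothetical code label
    "T03": [],  # hypothetical non-response
}


def code_frequencies(responses):
    """Return {code: (count, percent of all respondents)}, most frequent first."""
    n = len(responses)
    # set() ensures a code is counted at most once per respondent
    counts = Counter(code for codes in responses.values() for code in set(codes))
    return {code: (k, round(100 * k / n)) for code, k in counts.most_common()}


def non_response_rate(responses):
    """Percentage of respondents with no codes assigned (blank answers)."""
    n = len(responses)
    blanks = sum(1 for codes in responses.values() if not codes)
    return round(100 * blanks / n)


if __name__ == "__main__":
    for code, (k, pct) in code_frequencies(coded_responses).items():
        print(f"{code}: {k} ({pct}%)")
    print(f"Non-response: {non_response_rate(coded_responses)}%")
```

Counting each code at most once per respondent keeps each percentage interpretable as the share of teachers who mentioned a given barrier, which appears to be how figures such as those in the themes below are reported.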

At the time of writing, we do not yet have the data of the second test that the teachers wrote at the end of the ACT programme. Thus we will not fully engage here with the detailed findings from both tests. Instead, we will point to some key themes that emerged from the first test, with particular focus on the methodological issues that were thrown up.

Themes identified from the test data

The following themes were identified from the frequency tabulations of the data.

Difficulties with understanding the questions

The rate of non-response for many of the questions was very high, which seems to indicate that many teachers did not understand the questions that were asked. The home language of nearly all the teachers in the sample was isiZulu, which would also be the language of instruction in their classrooms. For the 'beginning knowledge' questions and the questions that required teachers to diagnose a learner's error and explain the misconception, the non-response rate ranged from 10% to 30% of respondents. This may have been because 48% of the sample were Grade R teachers, whose English competence may be weaker than that of teachers who teach the higher grades and English as a First Additional Language.

Content knowledge and PCK

The responses to the content questions linked to 'beginning knowledge' generally revealed poor content knowledge, particularly of more specialised knowledge. For example, only 8% of respondents were able to correctly identify that metal was a good conductor of heat, and only 29% could identify the word 'ungrateful' as having both a prefix and a suffix. However, 67% knew that red, blue and yellow are the primary colours, and 71% could correctly provide an example of an amphibian. The majority of teachers (78%) could identify the measuring error that the child made with the pencil and ruler (that is, starting to measure from one rather than zero), but only 11% could meaningfully describe why the child made this error. Very few were able to articulate different ways that they would be able to help learners see their error. However, it is not possible to claim that the teachers do not know how to respond to learners, as it may be that they are simply unable to articulate this knowledge.

In terms of PCK and how teachers would respond to learner error, responses showed a tendency towards the more atomistic or technical aspects of education, such as spelling or punctuation being valued over meaning. For example, about a quarter of the teachers noted that the child who wrote about 'wotching a moovey' had managed to convey meaning, while the other teachers noted the correct or incorrect spelling, tense, punctuation, etcetera.

In the numeracy questions, we also saw a tendency amongst some of the teachers to consider counting and the use of counters as essential. For example, 37% of respondents attributed learners' poor achievement in mathematics to a lack of resources, particularly counters. The endorsed narrative in mathematics teacher education is to move from concrete (manipulatives) to iconic (place value cards, Monopoly money, learners' drawings) to symbolic representations, but some teachers appeared to have focused on the outward appearance of this progression rather than on the pedagogical process it entails. This focus on counting and counters is also seen in Ensor et al's (2009) study of nine foundation phase teachers.

Locus of control

In the questions on barriers to learning, the teachers generally noted that the learners, the parents, lack of resources, or the 'system' were the major barriers to learning in mathematics and language. Few responses reflected a sense of their own agency or a personal belief that their pedagogic practice could change learners' learning (an internal locus of control). It will be interesting to see whether this belief has shifted after the two-year ACT programme.

Reflecting on the data generated from the first administration of the test

The teachers tended to give short answers to the questions, which made it difficult to deduce the extent to which these responses truly reflected the teachers' knowledge, as opposed to simply 'what first came to mind'. In addition, teachers' responses to test items may reflect not only their knowledge but also their perceptions of what is valued by their lecturers or the researchers. Furthermore, responses may not fully reflect what the teacher knows about the area.

For instance, we had used open-ended questions almost exclusively. This meant that teachers, on their own and away from their practice, had to produce what we could recognise as relevant responses, which required fairly coherent, declarative written knowledge. Narrower questions, and even multiple-choice questions concerning very specific teacher actions, might be easier for teachers to answer and could indicate whether teachers were able to recognise appropriate teaching strategies.

The tasks we had used to prompt pedagogical content knowledge were derived from such tasks in the existing literature: tried and tested tasks on interrogating learners' errors and responding to them. However, the recent work of Adler and Rhonda (2014) indicated to us that this is a higher-level teaching task. More accessible to most teachers are the skills of explaining and exemplifying. Thus it appeared that drawing on international practices had led us to ask questions of high complexity, whereas it would also have been useful to include questions on more fundamental teacher competencies such as explaining or exemplifying. Because we had asked questions requiring higher-level teaching competencies, such as interrogating learner errors, the responses tended to show what the teachers could not do or might not know, rather than what they might know, such as how to explain and exemplify.

In our interviews with the teachers after their first semester on the programme, we noticed that the teachers often mentioned increasing confidence. This is in line with Graven's (2004) local work on teachers' learning in in-service courses, in which she found confidence to be a key factor. Moreover, the theme of increased confidence in teaching mathematics aligns with the findings of research conducted by Beswick and colleagues in Australia (Beswick et al 2011). They explored not only teachers' content knowledge and PCK, but also the teachers' confidence in their own numeracy knowledge and in their teaching, as well as their beliefs about the use of materials. These researchers suggested that confidence may even precede content knowledge. Although we had asked very specific questions about beliefs concerning the use of materials and learners' barriers to learning, we had asked none about the teachers' confidence and self-concept as mathematics teachers.

Finally, the strong knowledge frame that we used to design the test meant that we had posed many questions about how teachers could recognise learner errors and respond to them. In the post-test, we will be able to document whether teachers have acquired more knowledge in this regard from the ACT programme. The post-test will not, however, indicate whether their beliefs have changed or how their narratives about teaching may have shifted. This thinking led us to Anna Sfard's work (Sfard 2007). With regard to mathematics learning, Sfard distinguishes between two types of learning: object-level learning and meta-level learning. Object-level learning simply extends the discourse; "it increases the set of 'known facts' (endorsed narratives) about the investigated objects" (Ben-Zvi & Sfard 2007:120). Meta-level learning, on the other hand, is characterised by a change in the discourse itself. Thus, if we also understood teaching competence as a discourse, in the sense of a particular type of communication that includes some and excludes others (Sfard 2007), we could view teacher learning as changes in the teachers' discourses, and not only as the acquisition of new knowledge.

This perspective offers a different methodological approach, allowing the researcher to interrogate changes within a discourse as well as moves from one discourse to another. In education, a number of competing discourses exist, reflecting very different beliefs about what is important in teaching, with possible implications for learning. For example, is pedagogic discourse oriented towards educating democratic, 'independently thinking' individuals; towards caring for and supporting the expression of the creative self; or towards teaching skills that are considered useful in current society? Bauml (2011) would argue that when teachers encounter new material in a programme, they may adapt, imitate, modify or completely avoid it. A discourse perspective would help establish the extent to which teachers have imitated or adapted the different discourses they encountered on the ACT programme.

Sfard (2007:573)³ characterises discourses by four aspects: word use (vocabulary), visual mediators, endorsed narratives and routines.

Discursive development of individuals or of entire classes can then be studied by identifying transformations in each of the four discursive characteristics: the use of words characteristic of the discourse, the use of mediators, endorsed narratives, and routines.

Only by considering changes in vocabulary, mediators, narratives and routines together is it possible to determine whether the teachers have adopted a new discourse, have modified the course content to fit into their existing discourse, or have responded in some other way.

Adopting this perspective, we re-examined some of the teacher responses to the first version of the test. We found that even the many open-ended questions provided a potential, albeit limited, basis for inferring endorsed narratives, whereas routines could not easily be accessed. This is not only because routines comprise practical knowledge, but also because the questions generally did not ask about teachers' classroom practices. Finally, we realised that the first version of the test did not give the teachers enough opportunities to demonstrate whether they had indeed acquired key concepts from the ACT course.

Based on these reflections, we reworked the test and have since administered it to the cohort of teachers who enrolled for the ACT programme in 2014.

The revised test

The section on 'general knowledge' was omitted completely, as it did not speak directly to the main categories of knowledge that we were researching. The very general questions about how teachers might use certain materials in their teaching were judged more suitable for the interview component of the study, since prompting by an interviewer may elicit more elaborate responses that could then be interpreted discursively.

Next, the test contained six questions about the teachers' own use of literacy and numeracy, in which the teachers were asked to rate statements such as 'I often read for enjoyment' on a continuous scale from 'strongly agree' to 'strongly disagree'. These questions served to indicate how comfortable the teachers were with numeracy and literacy, and were selected and adapted from Beswick's instrument (Beswick et al 2011).

The next twenty-six questions asked the teachers to rate their confidence in teaching various foundation phase topics (such as reading, punctuation, fractions and the solar system) on a scale from 'low confidence' to 'high confidence', with the additional option 'would not be teaching this'. A few of the topics from the omitted general knowledge section were included here, but only to gauge confidence. By including these questions, we hoped to document any changes in the teachers' confidence after they had completed the ACT course. We note that a decrease in confidence would not necessarily imply that no learning had taken place, as experts tend to have a clearer sense of their own strengths and weaknesses.

To elicit teachers' discourse practices and beliefs about teaching numeracy and literacy more directly, we included twenty-one statements about 'good' teaching and learning, asking teachers to indicate their degree of agreement. These statements were chosen to reflect a range of common narratives within education. For instance, one statement read, 'In numeracy, understanding only comes with sufficient practice of calculations.' Another read, 'Grade 1-3 children are too young to think for themselves.' By including a substantial number of closed-ended questions on beliefs, we hoped to be able to identify changes in the narratives endorsed by the teachers. To also allow space for the teachers' own voices, the section on beliefs concluded with two open-ended questions, taken from the original version of the test, asking teachers about factors that prevented learning.

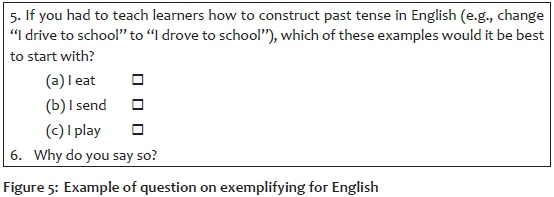

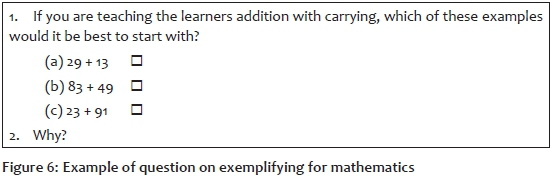

This was followed by three sections on the content areas of isiZulu, English and mathematics, respectively. In each language section, the first question concerned the teachers' current practices in helping learners to develop vocabulary. Each section also contained a multiple-choice question in which the teachers were asked to choose an example for teaching a particular concept and to justify their choice. Two examples are included in figures 5 and 6. In the first, we were interested in the extent to which the teachers referred to grammatical reasons (regular versus irregular verbs) or to learners' familiarity with the word. In the second, we wanted to see whether teachers would refer to the complexity of regrouping ones to tens, tens to hundreds, or both, or simply to the magnitude of the numbers.

The two language sections also included a question on teachers' beliefs about the importance of teaching phonemes, asking them to justify their answer and to state the purpose of teaching phonemes. These questions would not only help us identify the teachers' endorsed narratives about teaching literacy, but would also allow us to identify any take-up of this topic from the ACT course. To get the teachers to 'narrate' their current practices, we added open questions such as 'How do you help your learners develop vocabulary in isiZulu?'

All three sections had one or two questions in which teachers were asked to identify the level of writing development or counting suggested by a child's work (see figure 7). These questions were also included to help us identify specific take-up of this topic, as it formed part of the content of the ACT course.

Finally, each of these sections retained one question on identifying and responding to learners' misconceptions, with minor changes from the first version of the test. We administered this test once, to the new cohort of teachers in 2014, but have not yet analysed the data, so we cannot yet reflect on what it generated.

Discussion and way forward

It is clear to us that, despite our best intentions and the design of the test to target various components of teacher knowledge, the instrument did not allow us to fully capture the teachers' current views on teaching, their current practices, or their sense of confidence as teachers. Content knowledge is relatively easy to measure, but it is personal knowledge and beliefs that offer more insight into the discourses and narratives that are likely to inform teachers' practice. A good instrument should therefore probably span both these dimensions. To some extent, it is in the nature of a written questionnaire or test that it cannot generate an in-depth understanding of teachers' knowledge, thinking, beliefs and practices, but we still felt that we could do better in capturing such issues.

We therefore redesigned the test. The new version of the instrument contains questions inspired by Beswick et al's (2011) instrument (which is not in the public domain, but was kindly shared by Beswick) on confidence, personal numeracy in context and beliefs about teaching, all requiring the teachers to respond on a continuous scale. We retained some of the questions from the original test that aimed to interrogate teachers' PCK, and added others that reflect the consideration of explaining and exemplifying. Finally, because we wanted to see to what extent the teachers had acquired the vocabulary and routines endorsed by the ACT programme, we asked them to identify the stages of writing or counting shown in learners' work.

We hope that the new instrument will capture a wider range of teachers' responses and will allow us to look for learning in the sense of both object-level learning and meta-level learning, the latter visible as shifts in the teachers' narratives. We look forward to reporting on the findings from the revised instrument and comparing them with the responses generated by the first test.

Acknowledgements

This research was supported by funding from the University of KwaZulu-Natal's Teaching and Learning Office (TLCRG10 'Exploring knowledge and learning of the ACT Foundation Phase students') and the National Research Foundation (grant no 85560).

References

Abbott, A. 1988. The system of professions: An essay on the division of expert labour. Chicago, IL: University of Chicago.

Abdulhamid, L. & Venkat, H. 2014. Research-led development of primary school teachers' mathematical knowledge for teaching. A case study. Education as Change, 18(S1):S137-150.

Adler, J. & Patahuddin, S.M. 2012. Quantitative tools with qualitative methods in investigating mathematical knowledge for teaching. Journal of Education, (56):17-43.

Adler, J. & Ronda, E. 2014. An analytic framework for describing teachers' mathematics discourse in instruction. Paper presented at Psychology of Maths Education (PME) 38, Vancouver, Canada, 15-20 July 2014.

Andersen, C.K. 2000. The Influence of Technology on Potential Co-learnings in University Mathematics. PhD thesis. Aalborg: Aalborg University.

Aploon-Zokufa, K. 2013. Locating the difference: A comparison of pedagogic strategies in high and low performing schools. South African Journal of Childhood Education, 3(2):112-130.

Ball, D.L., Thames, M.H. & Phelps, G. 2008. Content knowledge for teaching: what makes it special? Journal of Teacher Education, 59:389-407.

Bauml, M. 2011. "We learned all about that in college": The role of teacher preparation in novice kindergarten/primary teachers' practice. Journal of Early Childhood Teacher Education, 32:225-239.

Beck, C. & Kosnik, C. 2014. Growing as teacher: Goals and pathways of ongoing teacher learning. Rotterdam: Sense Publishers.

Ben-Zvi, D. & Sfard, A. 2007. Ariadne's Thread, Daedalus' wings, and the learner's autonomy. Education et didactique, 1(3):117-134.

Beswick, K., Callingham, R. & Watson, J. 2011. The nature and development of middle school mathematics teachers' knowledge. Journal of Mathematics Teacher Education. Retrieved from http://www.springerlink.com/content/th22781265818125/fulltext.pdf (accessed 1 September 2012).

Boltanski, L. & Thévenot, L. 2006. On Justification: Economies of Worth. Princeton, NJ: Princeton University Press.

Carnoy, M. & Chisholm, L. 2008. Towards Understanding Student Academic Performance in South Africa: A Pilot Study of Grade 6 Mathematics Lessons in South Africa. Pretoria: HSRC Press.

Carvalho, L., Dong, A. & Maton, K. 2009. Legitimating design: A sociology of knowledge account of the field. Design Studies, 30(5):483-502.

Christiansen, I.M. & Bertram, C. 2012. Editorial: Special issue on teacher knowledge and teacher learning. Journal of Education, (56):1-16.

Cochran-Smith, M. & Lytle, S.L. 1999. Relationships of knowledge and practice: teacher learning in communities. Review of Research in Education, 24:249-305.

Depaepe, F., Verschaffel, L. & Kelchtermans, G. 2013. Pedagogical content knowledge: A systematic review of the way in which the concept has pervaded mathematics educational research. Teaching and Teacher Education, 34:12-25.

Ensor, P., Hoadley, U., Jacklin, H., Kuhne, C., Schmitt, E., Lombard, A. & Van den Heuvel-Panhuizen, M. 2009. Specialising pedagogic text and time in foundation phase numeracy classrooms. Journal of Education, 47:5-29.

Graven, M. 2004. Investigating mathematics teacher learning within an in-service community of practice: The centrality of confidence. Educational Studies in Mathematics, 57(2):177-211.

Graven, M., Venkat, H., Westaway, L. & Tshesane, H. 2013. Place value without number sense: Exploring the need for mental mathematical skills assessment within the Annual National Assessments. South African Journal of Childhood Education, 3(2):131-143.

Hill, H.C., Schilling, S.G. & Ball, D.L. 2004. Developing measures of teachers' mathematics knowledge for teaching. The Elementary School Journal, 105(1):11-30.

Holmes, V. 2012. Depth of teachers' knowledge: Frameworks for teachers' knowledge of mathematics. Journal of STEM Education, 13(1):55-69.

Hostetler, K.D. 2014. Beyond reflection: Perception, virtue, and teacher knowledge. Educational Philosophy and Theory. DOI: 10.1080/00131857.2014.989950.

Hugo, W. 2012. Specialised knowledge and professional judgement in teacher education: an address to teacher educators in South Africa. Plenary presentation given at the Teacher Education Conference at the University of Pretoria, 19 September 2012.

Karstein, H. 2014. A comparison of three frameworks for measuring knowledge for teaching mathematics. Nordic Studies in Mathematics Education, 19(1):23-52.

Krauss, S., Baumert, J. & Blum, W. 2008. Secondary mathematics teachers' pedagogical content knowledge and content knowledge: validation of the COACTIV constructs. ZDM Mathematics Education, 40:873-892.

Makuwa, D. 2011. Characteristics of Grade 6 Teachers. Paris: SACMEQ/UNESCO.

Maniraho, J.F. (forthcoming). The pedagogical content knowledge of Rwandan Grade 6 teachers and its relations to learning. PhD thesis. Durban: University of KwaZulu-Natal.

Moats, L.C. & Foorman, B.R. 2003. Measuring teachers' content knowledge of language and reading. Annals of Dyslexia, 53(1):23-45.

Muller, J. 2012. The body of knowledge/le corps du savoir. Paper presented at the Seventh Basil Bernstein Symposium, Aix-en-Provence, France, 27-30 June 2012.

Muller, J. 2014. Every picture tells a story: Epistemological access and knowledge. Education as Change, 18(2):255-269.

Olszewski, J., Neumann, K. & Fischer, H.E. 2010. Measuring physics teachers' declarative and procedural PCK. In: M.F. Tasar & G. Cakmakci (Eds). Contemporary science education research: Teaching. Ankara: Pegem Akademi. 87-94.

Ramdhany, V. 2010. Tracing the use of Pedagogical Content Knowledge in grade 6 mathematics classrooms in KwaZulu-Natal. MEd thesis. Durban: University of KwaZulu-Natal.

Reutzel, D.R., Dole, J.A., Fawson, S.R.P., Herman, K., Jones, C.D., Sudweeks, R. & Fargo, J. 2011. Conceptually and methodologically vexing issues in teacher knowledge assessment. Reading & Writing Quarterly, 27:183-211.

RSA DBE (Republic of South Africa. Department of Basic Education). 2010. The SACMEQ III project in South Africa: A study of the conditions of schooling and the quality of education. Pretoria: SACMEQ/DBE.

RSA DHET (Republic of South Africa. Department of Higher Education and Training). 2011. National Qualifications Framework, Act 67 of 2008: Policy on the Minimum Requirements for Teacher Education Qualifications. Pretoria: Government Printers.

Russell, B. 1910. Knowledge by acquaintance and knowledge by description. Proceedings of the Aristotelian Society, 11:108-128.

Sfard, A. 2001. Learning mathematics as developing a discourse. In: R. Speiser, C. Maher & C. Walter (Eds). Proceedings of the 21st Conference of PME-NA 2001, Columbus, Ohio. Clearing House for Science, Mathematics and Environmental Education. 23-44.

Sfard, A. 2007. When the rules of discourse change, but nobody tells you: Making sense of mathematics learning from a commognitive standpoint. The Journal of the Learning Sciences, 16(4):565-613.

Shalem, Y. & Slonimsky, L. 2013. Practical knowledge of teaching practice: What counts? Journal of Education, 58:67-85.

Shulman, L.S. 1986. Those who understand: knowledge growth in teaching. Educational Researcher, 15(2):4-14.

Shulman, L.S. 1987. Knowledge and teaching: foundations of the new reform. Harvard Educational Review, 57(1):1-22.

Skott, J. 2013. Understanding the role of the teacher in emerging classroom practices: Searching for patterns of participation. ZDM Mathematics Education, 45:547-559.

Taylor, N. & Taylor, S. 2013. Teacher knowledge and professional habitus. In: N. Taylor, S. van den Berg & T. Mabogoane (Eds). Creating effective schools. Cape Town: Pearson. 202-230.

Thévenot, L. 2002. Which road to follow? The moral complexity of an 'equipped' humanity. In: J. Law & A. Mol (Eds). Complexities: Social studies of knowledge practices. Durham, NC: Duke University Press. 53-87.

Van Hiele-Geldof, D. 1957. De didaktiek van de meetkunde in de eerste klas van het VHMO. PhD thesis. Utrecht: University of Utrecht.

Venkat, H. & Askew, M. 2012. Mediating early number learning: specialising across teacher talk and tools? Journal of Education, 56:67-90.

Winch, C. 2012. Curriculum design and epistemic ascent. Journal of Philosophy of Education, 47(1):128-146.

Winch, C. 2014. Know-how and knowledge in the professional curriculum. In: M. Young & J. Muller (Eds). Knowledge, expertise and the professions. London and New York: Routledge. 47-60.

Yackel, E. & Cobb, P. 1996. Sociomathematical norms, argumentation, and autonomy in mathematics. Journal for Research in Mathematics Education, 27(4):458-477.

* Email address: BertramC@ukzn.ac.za.

1 The by now well-known Van Hiele levels were first described in 1957, in a doctoral thesis by van Hiele-Geldof.

2 As the test spanned a range of different knowledge categories, we drew on different sources for the different aspects. For mathematical knowledge for teaching/PCK, for instance, we used the idea that teachers should be able to identify and describe learners' errors, which underpinned the test items of the 'Learning Mathematics for Teaching Project' at the University of Michigan (http://sitemaker.umich.edu/lmt/people). However, we moved away from a multiple-choice format and also asked what the learners had done correctly, in order to assess whether the teachers were aware of both the correct and incorrect aspects of learners' work. For knowledge of the English language, we drew on Moats and Foorman (2003).

3 The 'words characteristic of the discourse' are referred to as vocabulary. "Visual mediators are the means with which participants of discourses identify the object of their talk and coordinate their communication" (Sfard 2007:573). In mathematics, these can be formulae, graphs, diagrams and so on. "Narrative is any text, spoken or written, that is framed as a description of objects, or of relations between objects or activities with or by objects, and that is subject to endorsement or rejection, that is, to being labeled as true or false. Terms and criteria of endorsement may vary considerably from discourse to discourse" (ibid:574). "Routines are well-defined repetitive patterns in interlocutors' actions, characteristic of a given discourse" (ibid). Routines are regulated by principles that can be explicit or implicit.