The Independent Journal of Teaching and Learning

On-line version ISSN 2519-5670

IJTL vol.13 n.1 Sandton 2018

ARTICLES

To use electronic assessment or paper-based assessment? That is the question (apologies to Shakespeare)

Rehana Minty

University of Johannesburg, South Africa

ABSTRACT

This investigation, conducted in a Higher Education Institution (HEI), aimed to compare the results of an electronic assessment with those of a paper-based assessment on MS Excel in the module End User Computing (EUC), which equips students with the necessary computer literacy skills for the workplace. Three hundred and thirty-seven (337) students registered for the module EUC participated in this investigation. Students wrote two assessments, namely an electronic assessment followed by a paper-based assessment in a controlled environment. The same concepts were assessed in both assessments. The results of each assessment were captured and compared to establish how students performed in each of the assessments. What was evident in this investigation at one HEI is that more students passed the paper-based assessment than the electronic assessment. Both the merits and demerits of the electronic and paper-based assessments were highlighted, leading to the conclusion that neither method is superior to the other. Consequently, a 'blended' method of assessment is recommended since one method of assessment complements the other. Additionally, the blended method of assessment accommodates students with diverse abilities, thereby eliminating the possibility of disadvantaging some students. This investigation, despite its limitations, could pave the way for more in-depth studies to be conducted.

Keywords: assessment, electronic assessment, paper-based assessment, End-User Computing, computer literacy skills, blended approach

INTRODUCTION AND BACKGROUND TO THE INQUIRY

Technology dominates every facet of life in the 21st century, whether it be education, work, home or play, and is currently a significant aspect of education. There is a variety of computer software that may be used as an alternative to teaching and learning in a traditional classroom, such as software that allows the user to interact with the subject content in order to gain the requisite computer literacy skills (Varank, 2006). Similarly, lecturers choose to use computer-based assessment tools to assess basic computer literacy skills. Initially, computer literacy was defined as the knowledge and skills that an individual should have about computers to operate proficiently in society (Halaris & Sloan, 1985 in Masouras & Avgousti, 2010). However, a more recent definition is provided by the Ministerial Council on Education, Employment, Training and Youth Affairs (MCEETYA, 2005: 2), which defines computer literacy as 'the ability of individuals to use ICT appropriately to access, manage, integrate and evaluate information, develop new understandings, and communicate with others in order to participate effectively in society'. The use of ICTs in Higher Education Institutions (HEIs) is integral to teaching and learning and for students to function competently in society and the workplace. Consequently, it is incumbent upon HEIs to ensure that students entering the workplace have the requisite computer literacy skills.

MS Excel is a component of the End User Computing (EUC) module at a selected HEI and is a compulsory module for a number of qualifications. This module focuses on basic computer literacy skills such as Word Processing, Spreadsheets, Presentations and Databases. Assessment is compulsory to ascertain whether students have attained the above-mentioned computer literacy skills. According to Mohamad, Dahlan, Talmizie, Rizman and Rabi'ah (2013: 191) 'competence expects a person to be able to exhibit a hands-on expertise with a software application'. Competence is determined through assessments. Assessments may be conducted using either the paper-based assessment method or an electronic assessment, thereby affording students the opportunity to 'demonstrate their competencies in the given tasks' (Mohamad et al., 2013: 191). For this module, all assessments are conducted with the use of an electronic testing tool. Consequently, the intention of this investigation was to compare the results obtained by students who wrote both an electronic assessment and a paper-based assessment that assessed the same MS Excel concepts, to determine whether assessments for this module should be solely electronic, paper-based or blended. For the purpose of this paper it is necessary to distinguish between a paper-based assessment and an electronic assessment.

A paper-based assessment is a question paper (hard copy) that is distributed to students during an assessment, requiring them to answer the questions using a software program, in this case MS Excel. The student's document is saved on a removable storage device, which is later marked by a lecturer. However, with electronic assessment, an electronic assessment tool is used, in the form of a software program that assesses students' computer skills in a simulated environment which presents 'only limited functionality to the user' (Masouras & Avgousti, 2010: 4). Limited functionality with regard to this online assessment tool is that the student has to know on which tab the icon is found and if s/he clicks for example, on another tab, this will be recorded as one submission attempt. At this particular HEI, students are allowed only three attempts per question in an electronic assessment for the EUC module. If the electronic testing tool limits this functionality of allowing the student to browse the different tabs to find the solution, this could be disadvantageous to the student because in a 'live' environment in MS Excel, the student is allowed unlimited attempts to search for the appropriate icon to answer the question.

Since lecturers are able to gauge through assessments whether learning has taken place or not, assessment forms an integral part of teaching and learning in HEIs. The use of technology in assessment could assist lecturers to re-examine their teaching and learning methods, as well as to introduce new or innovative methods of assessing students. However, a variety of assessment strategies should be used to ascertain whether learning has taken place or not. Since technology may be used to enhance students' learning experiences, it is imperative that assessors take cognisance of this when including the use of technology in assessment. Phillips (1998 in Coetzee 2009: 27) contends that 'a sound pedagogical basis must be in place for the application of technology to succeed. We must not let the media limit our approaches; technology without pedagogy is nothing'. The teaching and learning centre at Macquarie University (2011) supports this claim and points out that when technology is used in assessment, it is necessary to plan carefully and manage the assessment because the assessment should focus on improving learning while being cognisant of not placing students with disabilities at a disadvantage. Although electronic assessment is recommended, there are both advantages and disadvantages experienced by both students and lecturers.

An advantage of an electronic assessment is that lecturers find compiling an electronic assessment easier as questions are selected from a database. Since questions are selected from a database, the time spent on compiling an electronic assessment is reduced when compared with compiling a paper-based assessment, which requires a lecturer to formulate questions as well as structure and compile the assessment on paper. In addition, on completion of an electronic assessment, results are available immediately to both students and lecturers as the electronic testing tool is responsible for marking the assessment. However, assessing students using the paper-based method of assessment is labour intensive and time-consuming as each assessment has to be marked manually by the lecturer. According to this particular HEI's assessment policy, results should be published within seven to 10 working days from the date of the assessment. Rising student numbers per module would increase the pressure and workload on lecturers to mark the paper-based assessments during this specified time. Electronic assessments on the other hand give rise to an easier assessment process especially when dealing with large student numbers and could reduce the workload of lecturers since the electronic testing tool marks assessments. However, Bull and Danson (2004: 4) caution that electronic testing tools are 'not a panacea for rising student numbers and marking overload though if used appropriately, it can support and enhance student learning in ways which are not possible with paper-based assessments'. In addition, when lecturers mark a paper-based assessment, there is a possibility that subjectivity may influence the marking whereas if an electronic assessment tool is used, subjectivity is eliminated.

Capturing of results when using an electronic assessment tool is also advantageous to the lecturer (Chalmers & McAusland, 2002). The results of an entire class may be exported from the electronic assessment tool into a spreadsheet in a matter of seconds, whereas the results of individual students from a paper-based assessment have to be captured 'manually' on a spreadsheet. This exercise is time-consuming, and errors may occur while the results are being captured (Chalmers & McAusland, 2002). Additionally, with electronic assessment, feedback in the form of an automated report is available to each student on completion of the assessment, which also allows lecturers to identify 'at risk' students as early as the first assessment task (Conole & Warburton, 2005). Consequently, lecturers prefer the use of electronic assessment tools to conduct assessments since electronic assessment tools do not require much effort on the part of the lecturer (Coetzee, 2009).

Despite electronic assessments having advantages, lecturers at this HEI need to take cognisance of the fact that the intention of assessment in EUC is to establish students' competence levels and whether the necessary module outcomes have been achieved. It is therefore imperative that lecturers take into consideration the aims of assessment and that 'academic aims determine the framework of assessment that is adopted and not vice versa' (Chalmers & McAusland, 2002: 5). Another important aspect to consider when deciding to use electronic assessments is 'although in many cases these systems appear to be impressive and resemble to a very high degree to the actual application software, a closer look immediately reveals their limitations' (Masouras & Avgousti, 2010: 4). The initial limitation identified in this particular electronic testing tool is that there is only one question per concept in the database. Consequently, a lecturer has no choice but to repeat the question in consecutive assessments that the student may be required to write because of admission to a sick assessment, supplementary assessment or a special assessment. This begs the following question: 'Did the student pass the module because of the repetition of the questions or did s/he understand the concepts being assessed?'

In a paper-based assessment, the student is required initially to determine the correct function in MS Excel to use in order to answer the question before typing or inserting the function. In the electronic assessment, by contrast, the actual function name is visible to students using the 'show task list' on the screen (see Figure 1 below, questions 6 and 7). This task list indicates to students exactly which function to use in MS Excel to answer a question. This implies that the use of the electronic testing tool does not require any cognitive effort, merely the application of the listed function. The fact that the questions in the electronic assessment inform students which functions to employ is not a true reflection of what is required in the workplace. Consequently, the electronic assessment method may not adequately prepare students for the workplace.

As mentioned previously, yet another limitation is that students are not allowed the opportunity to browse the different tabs in order to find the correct icon or solution to a question in an electronic assessment. The paper-based assessment, which closely replicates the work environment, is more beneficial in preparing students for the workplace because they will have to decide for themselves the appropriate functions to use. Additionally, Harding and Raikes (2002) add that assessments and learning should be effected within an identical educational environment for summative assessment to be authentic. In this regard, the paper-based assessment replicates the teaching environment. It is therefore advisable that before an assessment method is chosen, the advantages and disadvantages of each assessment method, which are the paper-based assessment and the electronic assessment, be considered.

The rationale for conducting this study emanated from numerous concerns voiced by both students and lecturers regarding the 'difficulty and unfairness' of electronic assessments. Students were of the opinion that electronic assessments were unfair and difficult because they were not allowed to browse the different tabs to answer the questions as they were taught during lectures and were only permitted three attempts to answer each question. Additionally, if the duration of the test was for example, 60 minutes and the student completed all attempts per question in less than the allotted time, the test would 'shut down' and the result of the assessment would appear on the screen, giving students no time to peruse or change their answers. In contrast, a paper-based assessment would allow students to browse and utilise the full time allocated to the assessment. Lecturers who teach this module at this HEI concur with the students regarding the unfairness and difficulty of electronic assessments. Furthermore, since the majority of the students registered for the EUC module are second language speakers of English in this HEI, and English spoken in South Africa is Standard South African English, students may encounter difficulty comprehending the requirements of the electronic assessment tool, which uses American English. Additionally, in an electronic assessment, certain concepts may be assessed using the 'fill in the blanks' method of questioning where students are required to type an answer. In the event of the student misspelling the concept, the electronic assessment tool will mark the answer as incorrect because of the spelling error. Regarding spelling errors in students' answers, a lecturer in the paper-based assessment may be more flexible and disregard incorrect spelling. Lecturers also claimed that students who had failed the electronic assessment could have improved their results had they been given a paper-based assessment instead. 
Bearing the above concerns in mind, this investigation was conducted at an HEI and attempted to examine the veracity of these concerns. The sample was chosen from the population of students registered for the EUC course at the selected HEI.

Sample of participants

The sample included 337 participants and comprised part-time students from the workplace as well as full-time students, who are currently registered for the EUC module. No specific criteria were used in the selection of these participants regarding gender, age or race. The only criterion was that they had to be registered for the EUC module.

METHOD OF DATA COLLECTION

This investigation compared two types of assessment methods which focused on assessing the acquisition of skills on a particular component in the EUC module. Students' results from an electronic assessment and a paper-based assessment were compared for similarities and differences, thereby highlighting the advantages and disadvantages associated with each assessment strategy. The paper-based and the electronic assessment comprised 50 questions each and the duration of each assessment was 75 minutes. Both assessments focused on identical concepts, thereby enabling the comparison of the results obtained in each assessment. The electronic assessment was conducted first, as the electronic assessment tool does not allow the student to search for answers to a question. To reiterate, students were allowed only three attempts to answer each question in the electronic assessment. On completion of the electronic assessment, the same students proceeded to complete the paper-based assessment. Both assessments were conducted in a controlled environment with two invigilators per venue. A controlled environment, with no time lapse between the electronic and paper-based assessments, was necessary to ensure that students could not consult any sources or receive assistance. Once the results were available, the data were analysed.

ANALYSIS OF RAW DATA

Initially, the results for both assessments were analysed by assigning a 'pass' or 'fail' to each student's result. At this HEI, a student is required to attain 50% or more to pass an assessment. The analysis revealed that 194 students passed the electronic assessment whereas 202 students passed the paper-based assessment, indicating that the pass rate for the paper-based assessment was higher than that for the electronic assessment. On further investigation, the results revealed that 57 students who had failed the electronic assessment had passed the paper-based assessment, whereas 44 students who had failed the paper-based assessment had passed the electronic assessment. Possible explanations as to why more students failed the electronic assessment than the paper-based assessment are discussed below. Additionally, the competence levels of those students who failed the paper-based assessment but passed the electronic assessment are of concern, and the discussion that follows could perhaps shed light on these issues.
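
The pass rates implied by these counts can be worked out with a short calculation. The following is a minimal Python sketch, assuming that all 337 participants wrote both assessments (the text reports the same students completing both); the function and variable names are illustrative only.

```python
# Counts reported in the analysis above.
TOTAL_STUDENTS = 337
PASSED_ELECTRONIC = 194
PASSED_PAPER = 202


def pass_rate(passed: int, total: int) -> float:
    """Return the pass rate as a percentage, rounded to one decimal place."""
    return round(100 * passed / total, 1)


# Assumption: every participant wrote both assessments, so the same
# denominator applies to each pass rate.
electronic_rate = pass_rate(PASSED_ELECTRONIC, TOTAL_STUDENTS)
paper_rate = pass_rate(PASSED_PAPER, TOTAL_STUDENTS)

print(f"Electronic pass rate:  {electronic_rate}%")
print(f"Paper-based pass rate: {paper_rate}%")
print(f"Additional passes on paper: {PASSED_PAPER - PASSED_ELECTRONIC}")
```

Under that assumption the gap between the two pass rates is a little over two percentage points, which is worth keeping in mind when weighing the explanations that follow.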

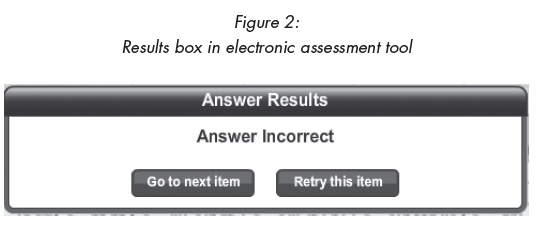

To reiterate, in order for the student to attain the correct answer in an electronic assessment, s/he should know exactly on which tab in MS Excel as well as on which icon on the ribbon to click. Additionally, the electronic assessment currently used at this HEI allows students three attempts at answering a question. If students do not answer the question correctly on either of the first two attempts, the message as displayed in Figure 2 below, appears on the screen.

The above message 'Answer Incorrect' indicates to the student that the choice of method used to answer the question was incorrect. Consider the following example of a question on MS Excel from an electronic assessment: 'Change the font color of the selected text to standard blue'. In the electronic assessment, the student may click on the 'Fill Color' icon on the home tab. Since this is not the correct icon to answer the question, the above box as indicated in Figure 2 will appear on the screen. Consequently, the student has the option to use another method or icon in the second and third attempts. The student is aware that another icon that refers to colour may be used and will therefore use the 'font color' icon. In this case, the student is directed to the correct answer, as there are only two icons on the ribbon that refer to colour, even though the first attempt indicates that the student did not understand the difference between the concepts pertaining to colour. However, in a paper-based assessment, if the student were to follow the identical steps to answer the question, there would be no prompt by the computer to use another method if the 'fill color' icon was chosen initially.

Additionally, an instruction like 'Bold the selected text', which is a sample question from the electronic assessment tool, only assesses whether the student can click on the bold icon, whereas in a paper-based Excel test the student must first know how to select the text before clicking on the bold icon. This instruction as assessed in the electronic testing tool once again requires no cognitive effort on the part of the student as it is merely a 'mechanical' exercise. In addition, in paper-based assessments, students are able to return to their answers to revise or change them if they realise that they have answered the question incorrectly (Masouras & Avgousti, 2010). What is important to note is that there is no prompt, as indicated in Figure 2, in a paper-based assessment to indicate to students that they have clicked on the wrong icon. Consequently, a paper-based assessment is more authentic since it replicates the teaching environment and highlights the skills that are required in the workplace.

Another drawback of the electronic testing tool is evident in the explanation that is based on Figure 3 below.

Above is a spreadsheet that comprises instructions that may be found in both a paper-based assessment and an electronic assessment. In the comment column in Figure 3 above, students are required to 'type' the appropriate function to insert the comment 'excellent profit' if the value in the profit (box) column is greater than R10. If the value is R10 or lower in the profit (box) column, students are required to leave the cells in the comment column blank. The appropriate solution to such an instruction in the electronic assessment tool, which specifically requires the student to type the function, is as follows: =IF(H5>10,"excellent profit",""). If the student does not type the equals sign (=), the entry is not regarded as a calculation in MS Excel. If s/he does not use a comma (,) to separate the three different sections as indicated in the above solution, or leaves out a comma (,), the attempt is marked as incorrect. Additionally, to leave a cell blank the student needs to know that the correct syntax to be used is an open double quotation mark immediately followed by a closed double quotation mark ("") with no space left in-between the quotation marks. The complex syntax used when typing such a solution may lead to students failing to carry out the instruction correctly. However, if this instruction were given in a paper-based MS Excel assessment, the lecturer would be able to apply his/her expertise and make a judgement on awarding marks for a partly correct solution (JISC, 2010).
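
The logic of this formula, and the all-or-nothing marking described above, can be sketched in Python. This is a hedged illustration only: `comment_for`, `EXPECTED` and `exact_match_mark` are hypothetical names, and the exact-string comparison is an assumption about how a strict automated marker might behave, not a description of the electronic testing tool used at this HEI.

```python
# The solution quoted in the text, as a student would type it into the cell.
EXPECTED = '=IF(H5>10,"excellent profit","")'


def comment_for(profit: float) -> str:
    """Mirror the IF formula: comment only when the profit exceeds 10."""
    return "excellent profit" if profit > 10 else ""


def exact_match_mark(typed: str) -> bool:
    """Hypothetical strict marker: any deviation from EXPECTED scores zero."""
    return typed == EXPECTED


# What the formula produces for values above, at and below the threshold.
for profit in (12, 10, 8):
    print(profit, repr(comment_for(profit)))

# Typed answers with one small slip each, as described in the text.
attempts = [
    EXPECTED,                            # fully correct
    'IF(H5>10,"excellent profit","")',   # equals sign missing
    '=IF(H5>10"excellent profit","")',   # one comma missing
]
for typed in attempts:
    print(exact_match_mark(typed), typed)
```

A lecturer marking the same typed answers on paper could award partial credit for the second and third attempts, which is the flexibility the paragraph above attributes to paper-based marking.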

In the above-mentioned example using the electronic testing tool, students were required to 'type' the appropriate function. However, another way in which the same function could be tested, also using an electronic testing tool, is to 'insert' the appropriate function. In this case, the student would click on the FORMULAS tab and then click on the icon as indicated in Figure 4 below.

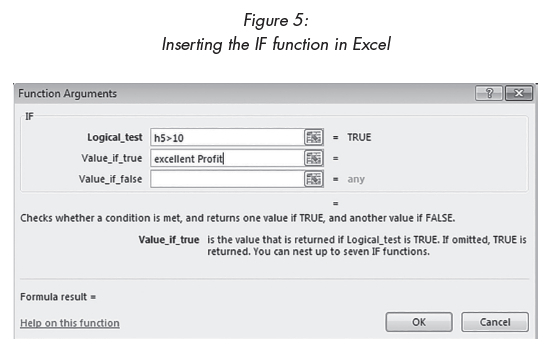

The dialog box, as depicted in Figure 5 below will then appear on the screen where the student is required to type.

In Figure 5 above, it is evident that the student is not required to type the syntax (, or "") which makes it an easier task that is 'user friendly'. This method affords students a greater probability of obtaining the correct solution. If a paper-based assessment is employed as opposed to an electronic assessment, students have the option of using either method, depending upon the individual's preferred method. This could be another reason why more students passed the paper-based assessment as opposed to students who passed the electronic assessment. Another reason why more students could have passed the paper-based assessment as opposed to the electronic assessment, is having unlimited attempts to find the correct answer, which once again replicates the teaching and learning environment and the reality of the workplace. When formulating assessments, lecturers should be aware that students will naturally choose the easier method to answer a question.

Prescribing the method, 'type the appropriate function' in an electronic assessment, could disadvantage some students because they are required to know the syntax when typing the answer whereas if they inserted the function it would not be necessary for them to have knowledge of the syntax, as indicated in Figure 5 above. This could be yet another reason as to why fewer students passed the electronic assessment when compared to the paper-based assessment. It is important to point out that although students are taught both methods of inserting and typing functions in MS Excel, they should not be limited by the prescription of the method to be used in an assessment as in the real world, which is the workplace, students have a choice as to the method they feel comfortable to use. The paper-based assessment therefore offers the student the opportunity to either insert or type the appropriate function.

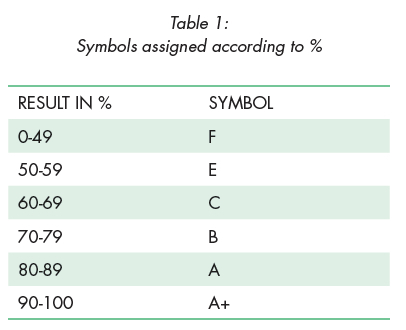

The data were analysed to determine the difference between the results obtained for the paper-based assessment and electronic assessment for each student respectively. In order to accomplish this, symbols were assigned to students' results obtained for each assessment as indicated in Table 1 below, to investigate whether there were any significant differences in the symbols obtained between the two assessment strategies used.

The analysis revealed that 129 students improved their symbols when they wrote the paper-based test, whereas 86 students obtained a higher symbol in the electronic test. Some students improved their mark in the paper-based assessment by up to 25%, which is an indication that perhaps the prescription of the method to be used to answer a question could be an inhibitor to students obtaining a higher percentage in the electronic assessment. This may be applicable where a student obtained 64% for the electronic assessment but 72% in the paper-based assessment. This particular student's symbol moved from a C to a B, while a student who attained 72% in the electronic assessment, attained 94% for the paper-based assessment. In this case, the student's symbol moved from a B to an A+, which is a significant difference. The implication of this student's attainment in the paper-based assessment may be attributed to the fact that in the paper-based assessment s/he had the advantage of both unlimited attempts to respond to the instructions as well as the advantage of being able to use the entire duration allotted to the assessment. The final assumption emanating from this investigation is discussed below.
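
The symbol comparison described above can be illustrated with a short Python sketch. Table 1 is not reproduced in this text, so the bands below are purely hypothetical: they were chosen only to agree with the three examples given (64% is a C, 72% is a B, 94% is an A+) and with the 50% pass mark, and they should not be read as the institution's actual scale. The names `BANDS`, `symbol_for` and `symbol_change` are likewise illustrative.

```python
# HYPOTHETICAL bands (lower bound, symbol), highest first.
# These are NOT taken from Table 1, which is not available here.
BANDS = [(90, "A+"), (80, "A"), (70, "B"), (60, "C"), (50, "D"), (0, "F")]


def symbol_for(percentage: float) -> str:
    """Return the symbol of the first band whose lower bound is met."""
    for lower_bound, symbol in BANDS:
        if percentage >= lower_bound:
            return symbol
    raise ValueError("percentage must be non-negative")


def symbol_change(electronic: float, paper: float) -> str:
    """Describe how a student's symbol moved between the two assessments."""
    before, after = symbol_for(electronic), symbol_for(paper)
    if before == after:
        return f"unchanged at {before}"
    return f"{before} -> {after}"


# The two students described in the text (electronic %, paper-based %).
print(symbol_change(64, 72))
print(symbol_change(72, 94))
```

Applying a function of this kind to every student's pair of marks would yield the counts of students whose symbol rose or fell between the two assessments.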

DISCUSSION AND CONCLUSIONS

In the HEI where the investigation was conducted, a lecturer teaches the skills required to use MS Excel in a computer laboratory. The instructions given by the lecturer during lectures require a 'hands on' approach and are similar to the type of questions that would appear in a paper-based assessment, were students to write one. However, at this HEI, students are exposed only to electronic assessments for both formative and summative assessments for this module.

The results from this investigation at one HEI indicated that some students fared well in the paper-based assessment while others achieved higher results in the electronic assessment. This is evidence that neither the paper-based nor the electronic assessment is superior to the other. Since the focus of the module is to assess whether students have acquired the necessary knowledge and skills on MS Excel, from the above discussion it is apparent that certain concepts are better suited to be assessed using an electronic assessment. It is also evident that the paper-based assessment method could offer additional advantages. Consequently, it is important that before lecturers set assessments the strengths and weaknesses of each assessment method are considered and the assessment method chosen should ensure that students are not disadvantaged in any way. Therefore, a 'blended' method of assessment is recommended, as the disadvantages of an electronic assessment may be resolved by the use of a paper-based assessment and vice versa.

To reiterate, the results emanating from this investigation are not conclusive regarding the superiority of either the electronic assessment method or the paper-based method. The onus is upon the lecturer to use a 'blended' approach to assessment and decide which method of assessment is more appropriate to assess the content that was taught. What emerged from this minor investigation is that more students passed the paper-based assessment than the electronic assessment; this could perhaps be related to the mode of delivery, which is similar to a paper-based assessment. Although the trend in a global society is towards technology, it is necessary to take cognisance of the fact that both the paper-based and electronic methods of assessment are necessary, specifically where readiness and competence for the workplace are prerequisites.

REFERENCES

Bull, J. & Danson, M. (2004) Computer Assisted Assessment (CAA). http://www.heacademy.ac.uk/assets/documents/assessment/LTSNassess14_computer_assisted_assessment.pdf (Accessed 13 March 2018).

Chalmers, D. & McAusland, W. (2002) Computer-assisted Assessment: The Handbook for Economic lecturers. Glasgow, Scotland. https://economicsnetwork.ac.uk/handbook/printable/caa_v5.pdf (Accessed 13 March 2018).

Coetzee, R. (2009) Beyond Buzzwords: Towards an evaluation framework for Computer Assisted Language Learning in the South African FET sector: mini-thesis. Stellenbosch University, South Africa. https://scholar.sun.ac.za/bitstream/handle/10019.1/2729/Coetzee%2c%20RW.pdf?sequence=1 (Accessed 13 March 2018).

Conole, G. & Warburton, B. (2005) 'A review of computer-assisted assessment' Research in Learning Technology 13(1) pp.17-31.

Dalziel, J. (2001) 'Enhancing web-based learning with computer assisted assessment: Pedagogical and technical considerations' Proceedings of the 5th CAA Conference. Loughborough: Loughborough University, UK. https://dspace.lboro.ac.uk/dspace-jspui/handle/2134/1795 (Accessed 13 March 2018).

Harding, R. & Raikes, N. (2002) ICT in Assessment and Learning: The Evolving Role of an External Examinations Board. Cambridge, UK: Scottish Centre for Research into on-line learning and assessment (SCROLLA). http://www.cambridgeassessment.org.uk/Images/109689-ict-in-assessment-and-learning-the-evolving-role-of-an-external-examinations-board.pdf (Accessed 13 March 2018).

JISC. (2010) Making the most of a computer-assisted assessment system. University of Manchester, UK. http://www.jisc.ac.uk/media/documents/programmes/elearning/digiassess_makingthemost.pdf (Accessed 13 March 2018).

Macquarie University: Learning and Teaching Centre. (2011) Using technologies to support assessment. http://staff.mq.edu.au/public/download/?id=40247 (Accessed 13 March 2018).

Masouras, P. & Avgousti, S. (2010) 'The design and implementation of an E-Assessment system for Computer Literacy Skills' 13th CAA International Computer Assisted Assessment Conference, University of Southampton, UK. http://caaconference.co.uk/pastConferences/2010/Masouras-CAA2010.pdf (Accessed 13 March 2018).

Ministerial Council on Education, Employment, Training and Youth Affairs. (2005) National Assessment Program - ICT Literacy Years 6 & 10 Report. http://www.nap.edu.au/verve/_resources/2005_ICTL_Public_Report.pdf (Accessed 13 March 2018).

Mohamad, N., Dahlan, A., Talmizie, M., Rizman, Z.I. & Rabi'ah, N.H. (2013) 'Automated ICT Literacy Skill Assessment Using RateSkill System' International Journal of Science and Research (IJSR) 2(8) pp.190-195.

Varank, I. (2006) 'A comparison of a computer-based and a lecture-based Computer Literacy course: A Turkish Case' Eurasia Journal of Mathematics, Science and Technology Education 2(3) pp.112-123.

Date of submission 28 March 2017

Date of review outcome 30 August 2017

Date of final acceptance 11 November 2017